What is ELK?

An open-source platform made up of Elasticsearch, Logstash, and Kibana, used to collect, analyze, and visualize data.

Elasticsearch

- A distributed search and analytics engine

Logstash

- An engine that collects data and can transform it before forwarding it

Kibana

- A tool for visualizing the collected and analyzed data

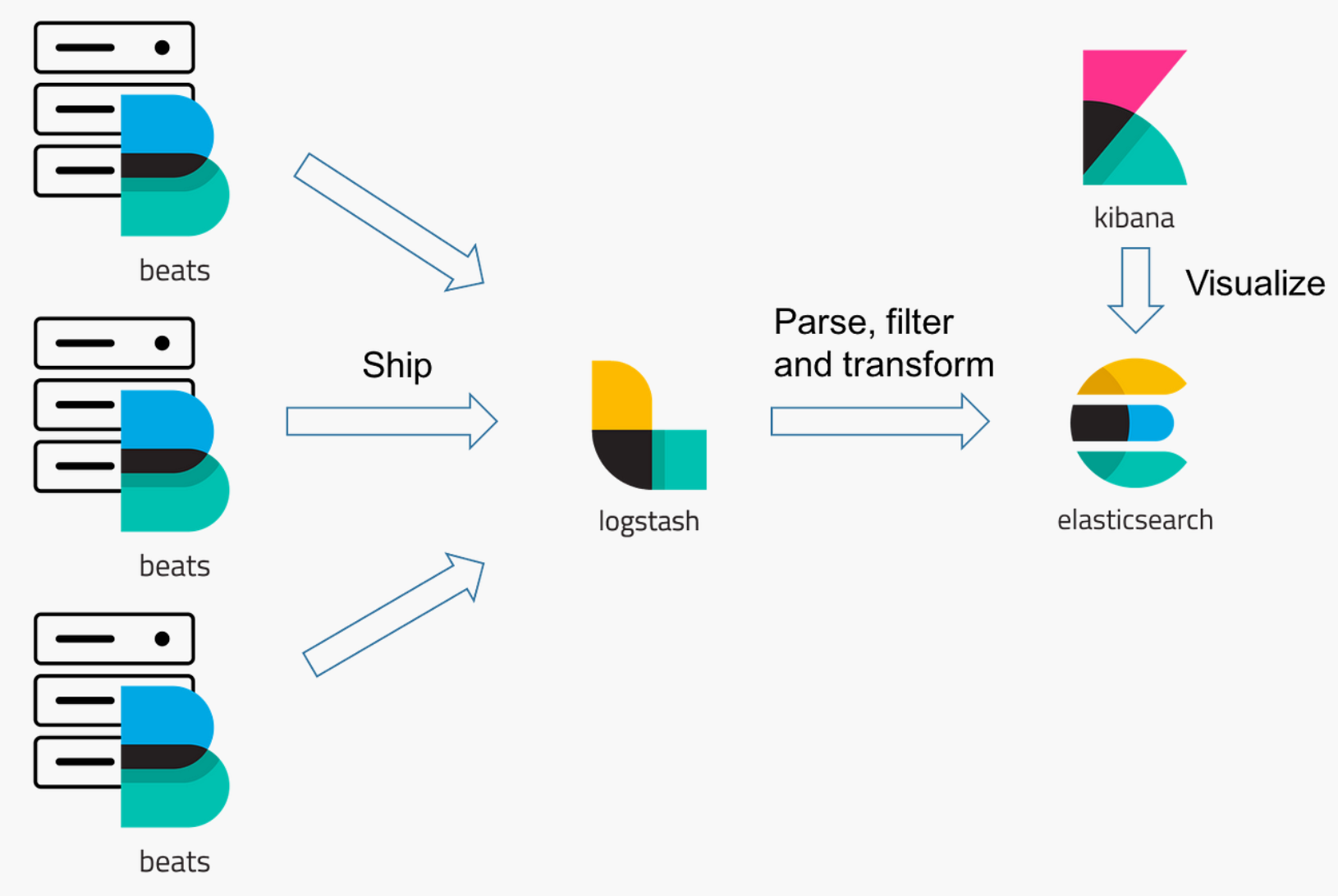

Beats

- Lightweight log shippers, e.g. Filebeat

- Filebeat collects the log files,

- sends the collected logs to Logstash,

- Logstash stores the received logs in Elasticsearch,

- and Kibana visualizes the stored logs.
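The flow above can be sketched as a toy model in Python. Every function here is a hypothetical stand-in for one component. Nothing talks to a real Filebeat, Logstash, or Elasticsearch, and the "enrichment" step simply treats the first token of each line as the client IP.

```python
from collections import defaultdict


# Toy model of the ELK flow; each function is a hypothetical stand-in.
def filebeat_ship(lines):
    """'Filebeat': turn each non-empty raw line into an event dict."""
    for line in lines:
        if line.strip():
            yield {"message": line.rstrip("\n")}


def logstash_process(events):
    """'Logstash': enrich each event (here, take the first token as the client IP)."""
    for event in events:
        event["clientip"] = event["message"].split(" ", 1)[0]
        yield event


def elasticsearch_index(events, store, index):
    """'Elasticsearch': append each event to an in-memory list named by index."""
    for event in events:
        store[index].append(event)


def kibana_count_by(store, index, field):
    """'Kibana': a trivial visualization that counts the values of one field."""
    counts = defaultdict(int)
    for doc in store[index]:
        counts[doc[field]] += 1
    return dict(counts)


if __name__ == "__main__":
    store = defaultdict(list)
    raw = ["10.0.0.1 GET /", "10.0.0.2 GET /a", "10.0.0.1 GET /b", ""]
    elasticsearch_index(logstash_process(filebeat_ship(raw)), store, "weblogs")
    print(kibana_count_by(store, "weblogs", "clientip"))  # {'10.0.0.1': 2, '10.0.0.2': 1}
```

Chaining generators keeps each stage unaware of the others. The real stack has the same property, which is why Filebeat could also ship directly to Elasticsearch.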

Setting up ELK

- Uses the Django framework

- Configured with Docker Compose

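- First, clone docker-elk into the project folder, run its setup service, and then start the stack:

```shell
git clone https://github.com/deviantony/docker-elk.git
cd docker-elk
docker-compose up setup
docker-compose up
```

- In docker-elk/elasticsearch/config/elasticsearch.yml, set:

```yaml
xpack.license.self_generated.type: basic
xpack.security.enabled: false
```

- Add filebeat/config/filebeat.yml: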

```yaml
filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /var/log/nginx/*.log # location of the log files to collect

output.logstash:
  enabled: true
  hosts: ["logstash:5044"]

setup.kibana:
  host: "kibana:5601"
  username: "elastic"
  password: "changeme"
```

With this configuration, Filebeat collects the logs that nginx writes to /var/log/nginx.

The collected logs could be sent straight to Elasticsearch. Here, they are sent to Logstash instead, which parses and enriches them before indexing.
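The paths entry in filebeat.yml is a glob pattern. As a result, /var/log/nginx/*.log picks up access.log and error.log but not rotated files such as access.log.1. The sketch below illustrates this with Python's pathlib glob. It assumes that pathlib's matching behaves like Filebeat's for a simple pattern like this one. The helper matched_logs is hypothetical.

```python
import tempfile
from pathlib import Path


def matched_logs(directory, pattern="*.log"):
    """Return the names of files in `directory` that match a glob such as '*.log'.

    Approximates which files Filebeat's `paths: /var/log/nginx/*.log` would pick up.
    """
    return sorted(p.name for p in Path(directory).glob(pattern) if p.is_file())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as log_dir:
        for name in ["access.log", "error.log", "access.log.1", "access.log.2.gz"]:
            Path(log_dir, name).touch()
        print(matched_logs(log_dir))  # ['access.log', 'error.log']
```

Passing a wider pattern such as access.log* would also pick up access.log.1.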

- logstash/logstash.conf

```
input {
  beats {
    port => 5044
  }
}

filter {
  grok {
    # Use the legacy field names (clientip, response, bytes, agent, ...) that the
    # filters below expect; Logstash 8 otherwise defaults to ECS-style names.
    ecs_compatibility => disabled
    match => [ "message", "%{COMBINEDAPACHELOG}+%{GREEDYDATA:extra_fields}" ]
    overwrite => [ "message" ]
  }
  mutate {
    convert => {
      "response" => "integer"
      "bytes" => "integer"
      "responsetime" => "float"
    }
  }
  geoip {
    ecs_compatibility => disabled
    source => "clientip"
    target => "geoip"
    add_tag => [ "nginx-geoip" ]
  }
  date {
    match => [ "timestamp", "dd/MMM/YYYY:HH:mm:ss Z" ]
    remove_field => [ "timestamp" ]
  }
  useragent {
    ecs_compatibility => disabled
    source => "agent"
  }
}

output {
  stdout {
    codec => "dots"
  }
  elasticsearch {
    hosts => ["elasticsearch:9200"]
    index => "weblogs-%{+YYYY.MM.dd}"
    user => "logstash_internal"
    password => "changeme"
    ecs_compatibility => disabled
  }
}
```

Filters used:

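- grok - extracts fields from the log message using regular-expression patterns

- mutate - changes the data type of fields

- geoip - adds geographic information based on the IP address

- date - parses the timestamp field with the given date format and, once parsing succeeds, removes the timestamp field (by default, the parsed time is stored in @timestamp)

- useragent - extracts user-agent information (browser, OS, device) from the agent field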
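The following Python approximation shows roughly what the grok, mutate, and date filters do to a single nginx line. The regular expression is a hand-written, simplified stand-in for the legacy (non-ECS) COMBINEDAPACHELOG pattern plus %{GREEDYDATA:extra_fields}. It is not the grok library itself. The function parse_line and the sample line are hypothetical. The geoip and useragent filters are left out because they need a GeoIP database and a user-agent parser.

```python
import re
from datetime import datetime, timezone

# Simplified, hand-written stand-in for the legacy (non-ECS) grok pattern
# %{COMBINEDAPACHELOG}%{GREEDYDATA:extra_fields}; not the grok library itself.
COMBINED = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?:(?P<verb>\S+) (?P<request>\S+)(?: HTTP/(?P<httpversion>[\d.]+))?'
    r'|(?P<rawrequest>[^"]*))" '
    r'(?P<response>\d{3}) (?:(?P<bytes>\d+)|-) '
    # grok's QS pattern keeps the surrounding quotes; this sketch drops them.
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
    r'(?P<extra_fields>.*)$'
)


def parse_line(line):
    """Approximate grok -> mutate -> date for one nginx 'combined' log line."""
    match = COMBINED.match(line)
    if match is None:
        # grok adds this tag when the pattern does not match
        return {"message": line, "tags": ["_grokparsefailure"]}
    event = {"message": line}
    event.update({k: v for k, v in match.groupdict().items() if v is not None})

    # mutate/convert: strings -> integers; fields absent from the event are skipped
    for field in ("response", "bytes"):
        if field in event:
            event[field] = int(event[field])

    # date: e.g. "10/Dec/2022:13:55:36 +0900"; remove "timestamp" only on success
    try:
        parsed = datetime.strptime(event["timestamp"], "%d/%b/%Y:%H:%M:%S %z")
    except ValueError:
        event.setdefault("tags", []).append("_dateparsefailure")
    else:
        event["@timestamp"] = parsed.astimezone(timezone.utc)
        del event["timestamp"]
    return event


if __name__ == "__main__":
    sample = ('203.0.113.7 - - [10/Dec/2022:13:55:36 +0900] '
              '"GET /index.html HTTP/1.1" 200 612 "-" "curl/7.81.0"')
    for key, value in parse_line(sample).items():
        print(f"{key}: {value!r}")
```

Nothing in COMBINEDAPACHELOG extracts a response time. As a result, convert "responsetime" => "float" only takes effect if the grok pattern is extended to capture a responsetime field.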

- docker-compose.logging.yml

```yaml
version: '3.7'

services:
  elasticsearch:
    build:
      context: docker-elk/elasticsearch/
      args:
        ELASTIC_VERSION: 8.5.2
    volumes:
      - ./docker-elk/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml:ro,Z
      - elasticsearch:/usr/share/elasticsearch/data:Z
    ports:
      - 9200:9200
      - 9300:9300
    environment:
      node.name: elasticsearch
      ES_JAVA_OPTS: -Xms512m -Xmx512m
      # Bootstrap password.
      # Used to initialize the keystore during the initial startup of
      # Elasticsearch. Ignored on subsequent runs.
      ELASTIC_PASSWORD: ${ELASTIC_PASSWORD:-}
      # Use single node discovery in order to disable production mode and avoid bootstrap checks.
      # see: https://www.elastic.co/guide/en/elasticsearch/reference/current/bootstrap-checks.html
      discovery.type: single-node
    networks:
      - elk
    restart: unless-stopped

  logstash:
    build:
      context: docker-elk/logstash/
      args:
        ELASTIC_VERSION: 8.5.2
    volumes:
      - ./docker-elk/logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml:ro,Z
      - ./docker-elk/logstash/pipeline:/usr/share/logstash/pipeline:ro,Z
    ports:
      - 5044:5044
      - 50000:50000/tcp
      - 50000:50000/udp
      - 9600:9600
    environment:
      LS_JAVA_OPTS: -Xms256m -Xmx256m
      LOGSTASH_INTERNAL_PASSWORD: ${LOGSTASH_INTERNAL_PASSWORD:-}
    networks:
      - elk
    depends_on:
      - elasticsearch
    restart: unless-stopped

  kibana:
    build:
      context: docker-elk/kibana/
      args:
        ELASTIC_VERSION: 8.5.2
    volumes:
      - ./docker-elk/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml:ro,Z
    ports:
      - 5601:5601
    environment:
      KIBANA_SYSTEM_PASSWORD: ${KIBANA_SYSTEM_PASSWORD:-}
    networks:
      - elk
    depends_on:
      - elasticsearch
    restart: unless-stopped

  filebeat:
    build:
      context: ./docker-elk/filebeat
      args:
        ELASTIC_VERSION: 8.5.2
    entrypoint: "filebeat -e -strict.perms=false"
    volumes:
      - ./docker-elk/filebeat/config/filebeat.yml:/usr/share/filebeat/filebeat.yml
      - ./nginx/log:/var/log/nginx # nginx log path (the nginx container needs the same mount)
    depends_on:
      - logstash
      - elasticsearch
      - kibana
    networks:
      - elk

networks:
  elk:
    driver: bridge

volumes:
  elasticsearch:
```
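If no nginx container is running, one way to exercise the pipeline is to append lines in nginx's predefined combined format to ./nginx/log/access.log. That is the host directory that the compose file bind-mounts into the filebeat container. This is a minimal sketch: combined_log_line and append_sample are hypothetical helpers, and the sample values are made up.

```python
from datetime import datetime, timedelta, timezone
from pathlib import Path


def combined_log_line(remote_addr, request, status, body_bytes, when,
                      referer="-", user_agent="-", remote_user="-"):
    """Format one line in nginx's predefined 'combined' log format:

    $remote_addr - $remote_user [$time_local] "$request" $status
    $body_bytes_sent "$http_referer" "$http_user_agent"
    """
    time_local = when.strftime("%d/%b/%Y:%H:%M:%S %z")  # e.g. 10/Dec/2022:13:55:36 +0900
    return (f'{remote_addr} - {remote_user} [{time_local}] "{request}" '
            f'{status} {body_bytes} "{referer}" "{user_agent}"')


def append_sample(log_dir, line):
    """Append one line to access.log in `log_dir`, creating the directory if needed."""
    path = Path(log_dir) / "access.log"
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("a", encoding="utf-8") as f:
        f.write(line + "\n")
    return path


if __name__ == "__main__":
    kst = timezone(timedelta(hours=9))
    print(combined_log_line("203.0.113.7", "GET / HTTP/1.1", 200, 612,
                            datetime.now(kst), user_agent="curl/7.81.0"))
```

Running append_sample("nginx/log", line) from the project folder would write to the mounted file, where Filebeat should pick it up.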

After starting the containers, open localhost:5601. The collected logs appear in the Discover tab.

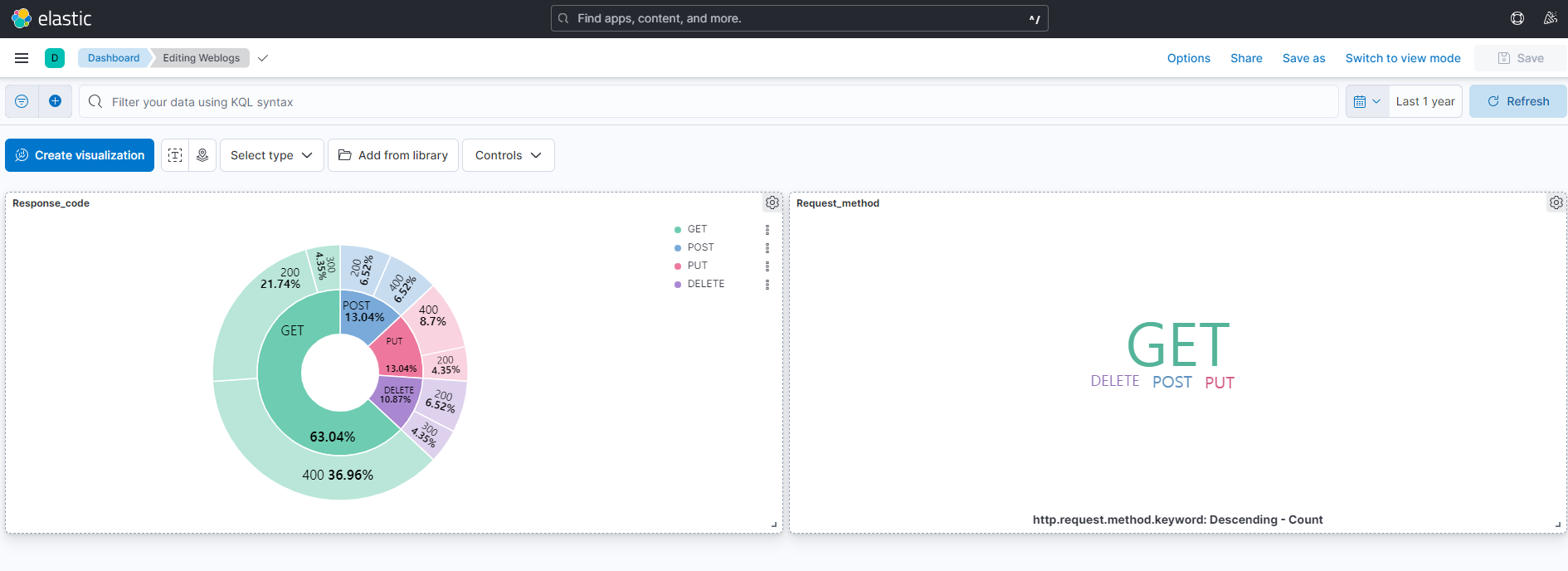

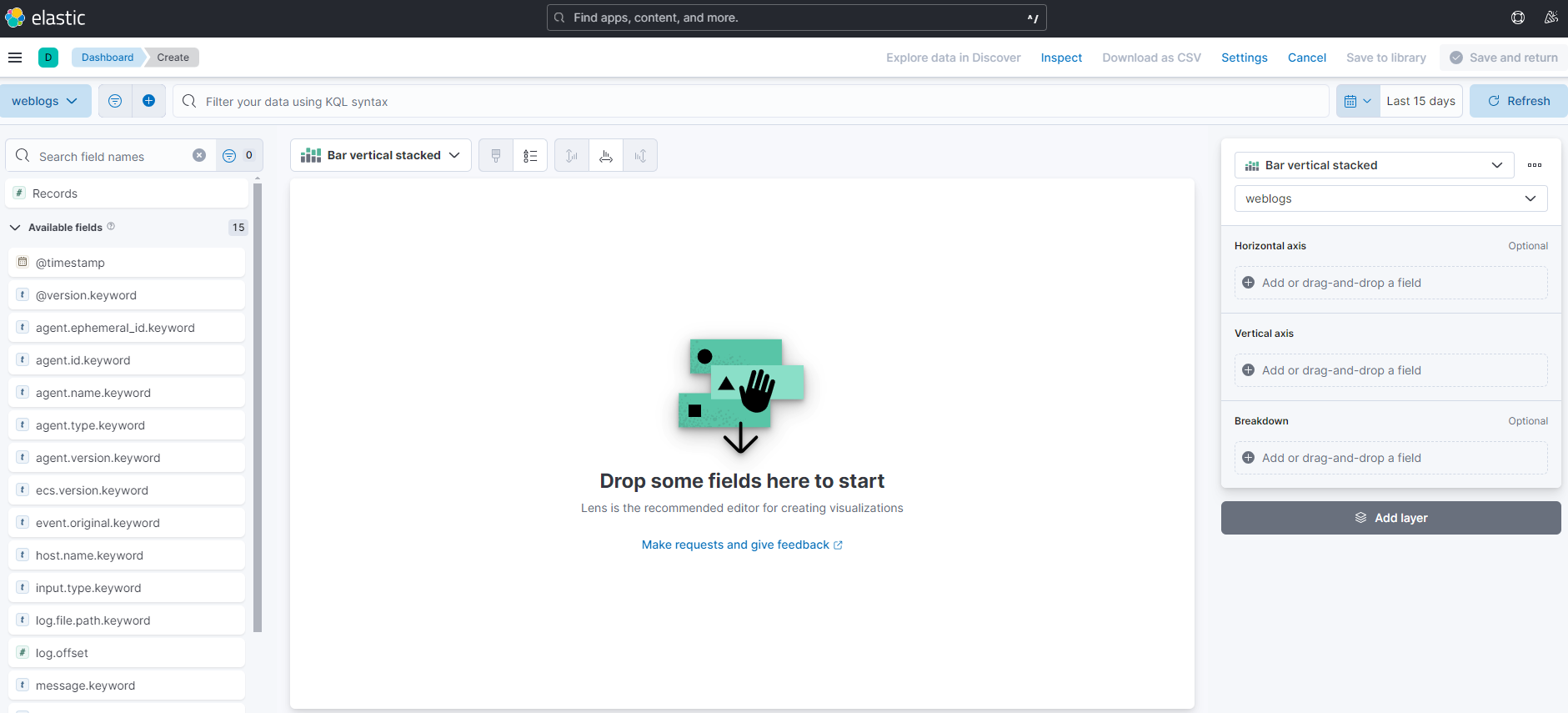

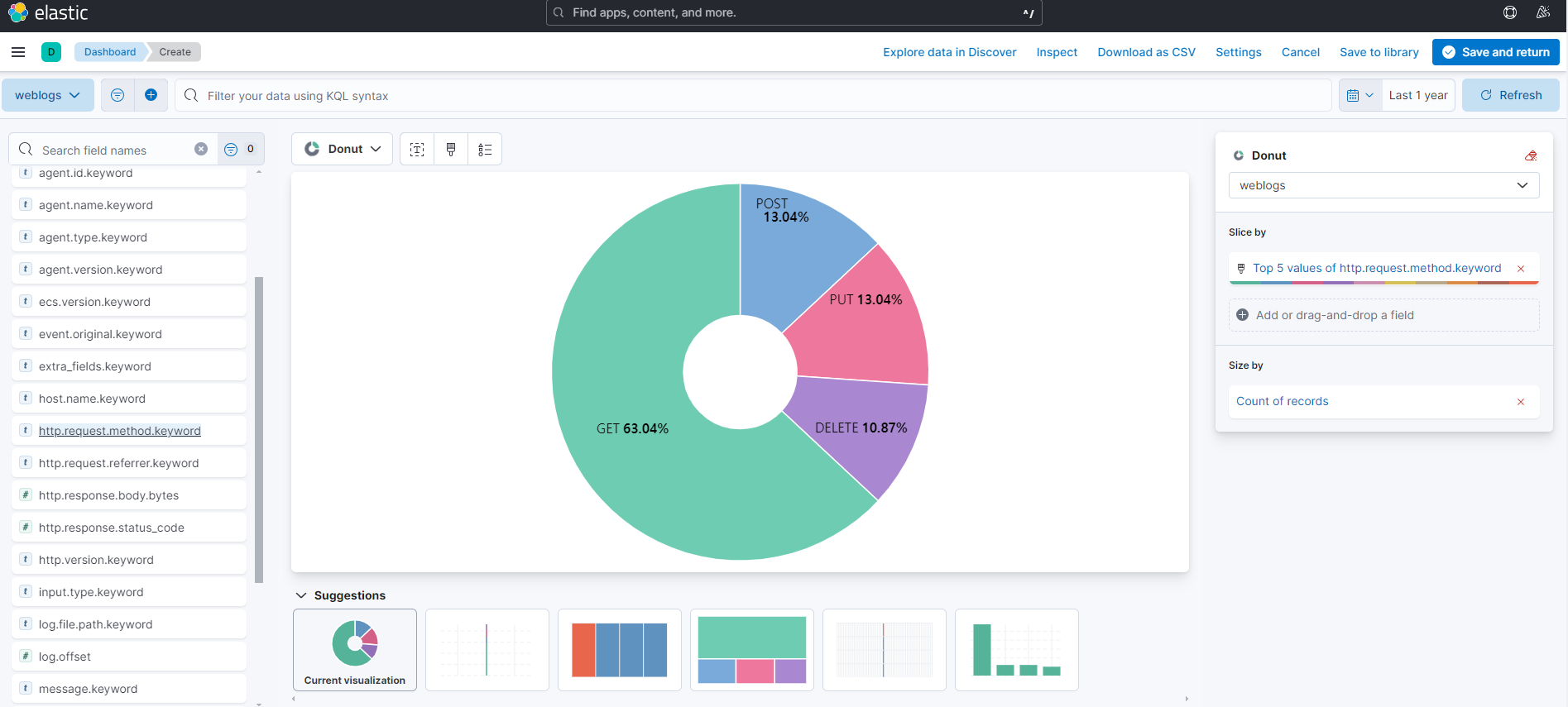

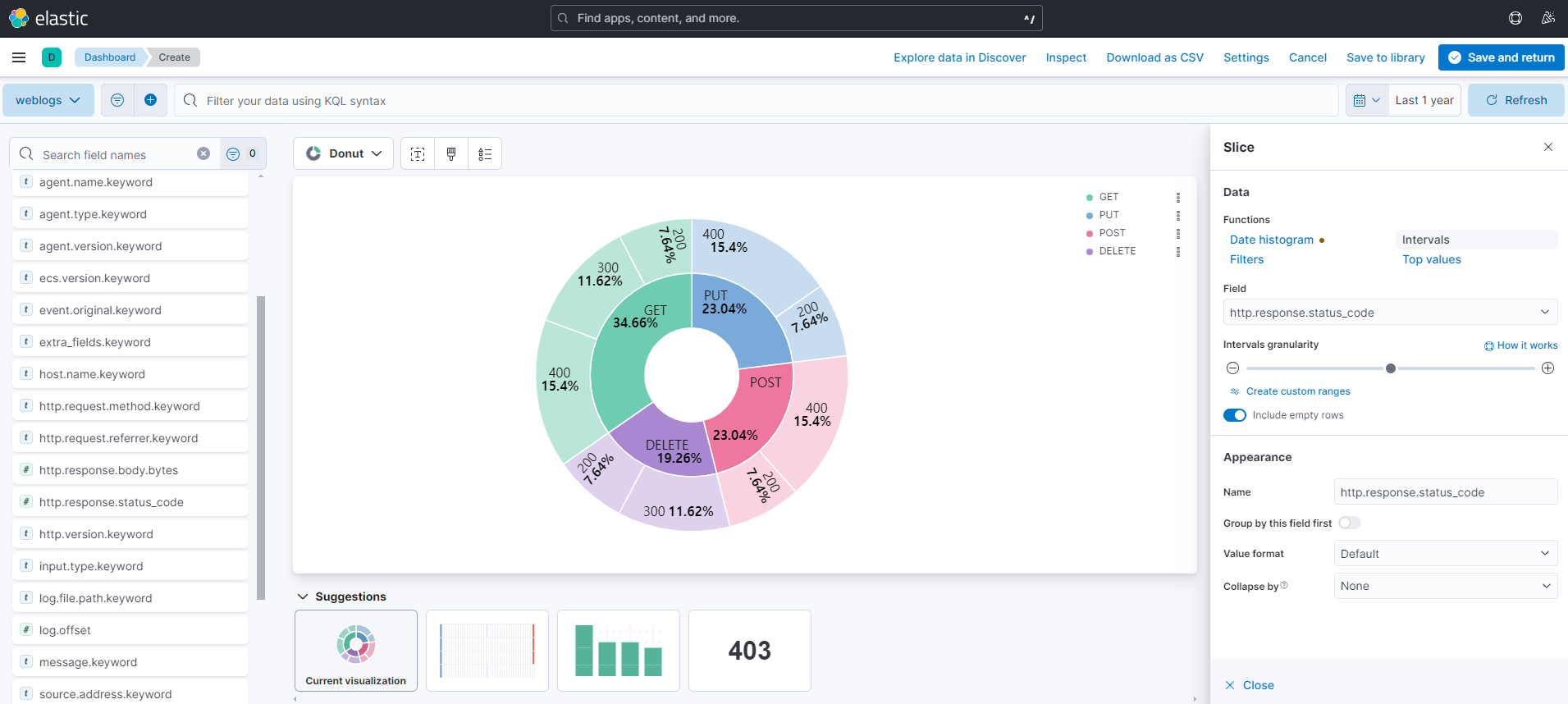
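Because xpack.security.enabled is set to false, Elasticsearch answers plain HTTP requests without credentials. The indexed data can therefore also be checked outside Kibana through the _cat/indices API. This is a minimal sketch using only the standard library. It assumes Elasticsearch is published on localhost:9200, as in the compose file. The function names are hypothetical.

```python
import json
import urllib.request


def parse_cat_indices(rows):
    """Turn `_cat/indices?format=json` rows into (index, doc_count) pairs."""
    return [(row["index"], int(row["docs.count"])) for row in rows]


def list_weblog_indices(base_url="http://localhost:9200", timeout=3.0):
    """Return (index, doc_count) pairs for weblogs-* indices, or None on failure.

    No credentials are sent, which only works while security is disabled.
    """
    url = f"{base_url}/_cat/indices/weblogs-*?format=json&h=index,docs.count&s=index"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return parse_cat_indices(json.load(resp))
    except (OSError, ValueError):  # connection/HTTP errors, timeouts, non-JSON replies
        return None


if __name__ == "__main__":
    print(list_weblog_indices())
```

A result of None usually means the stack is not up yet. An empty list means Elasticsearch is reachable but no weblogs-* index has been created.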

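Kibana visualization

Under Analytics -> Dashboard, click Create visualization, then choose the fields you want and visualize them in a variety of chart types.

A tag cloud can also be set up as follows to highlight frequently occurring keywords.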
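A tag cloud is built on a terms aggregation. Elasticsearch groups documents by a field's values and returns the most frequent ones, and Kibana then sizes each term by its count. The sketch below shows such a request body, along with a local Counter equivalent of the buckets it returns. The field name request.keyword is an assumption, since dynamic mapping usually adds a .keyword sub-field to strings. Both functions are hypothetical helpers, not Kibana's own code.

```python
import json
from collections import Counter


def terms_agg_body(field, size=20):
    """Search body with a terms aggregation named 'keywords' over `field`."""
    return {
        "size": 0,  # only the aggregation buckets are needed, not the hits
        "aggs": {"keywords": {"terms": {"field": field, "size": size}}},
    }


def top_terms(values, size=20):
    """Local stand-in for the buckets: (term, count) pairs, most frequent first."""
    return Counter(values).most_common(size)


if __name__ == "__main__":
    print(json.dumps(terms_agg_body("request.keyword", size=10), indent=2))
    print(top_terms(["/", "/login", "/", "/api", "/", "/login"], size=2))  # [('/', 3), ('/login', 2)]
```

If the body were sent to weblogs-*/_search, the buckets would come back under aggregations.keywords.buckets, each with a key and a doc_count.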