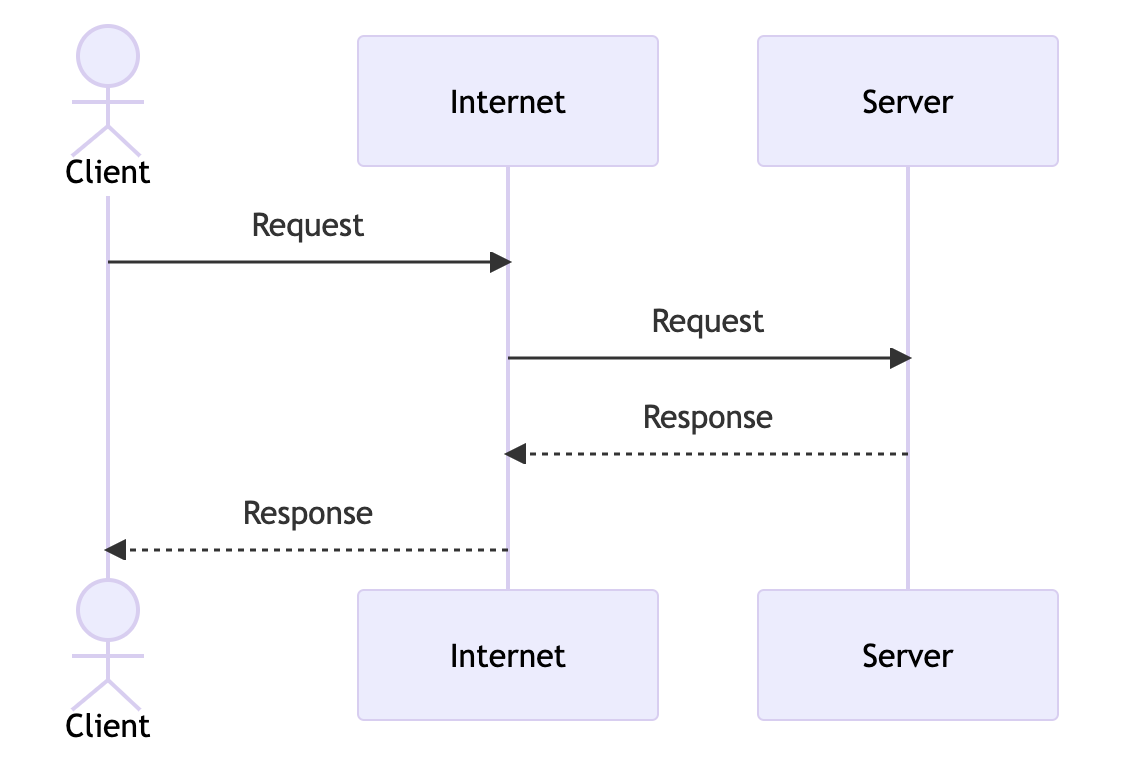

Suppose a connection has been established between a client and a server, as shown in the picture. Assume the server is about to send a file.

What happens in the server?

As a simple example, let's say we have a web server. Running a server also means that it opens a socket, binds it to an address, and listens on it for client connections.

Receive & Send

Anyone who's familiar with Linux would know that a socket is basically a file and a server is nothing but a process. What a process can do to a file is commonly referred to as rwx (read, write, execute). For a socket, however, there is no such thing as execute, so it's either r or w. In socket programming parlance, "read" and "write" are referred to as receive and send.

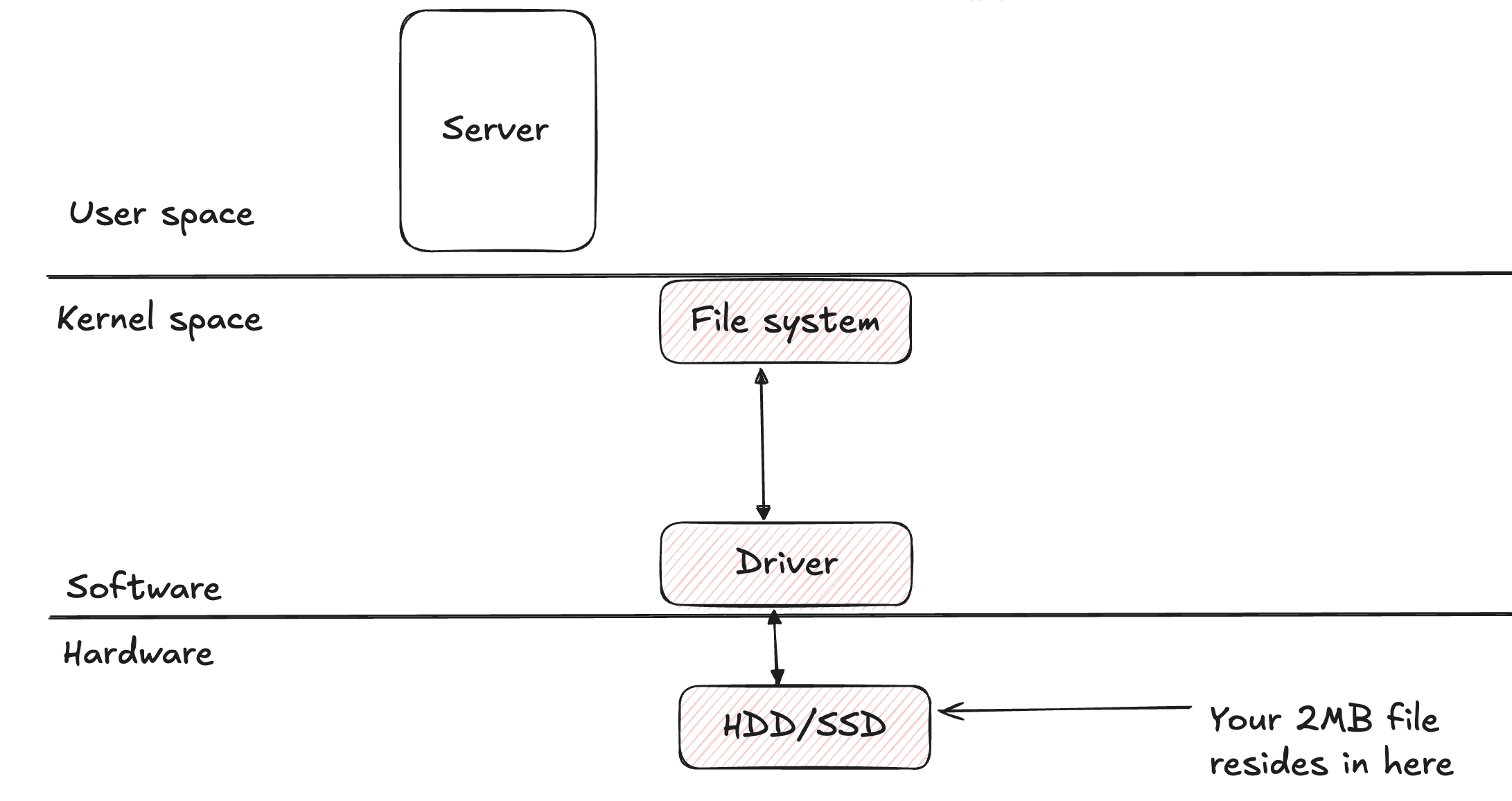

How does server read "the file"?

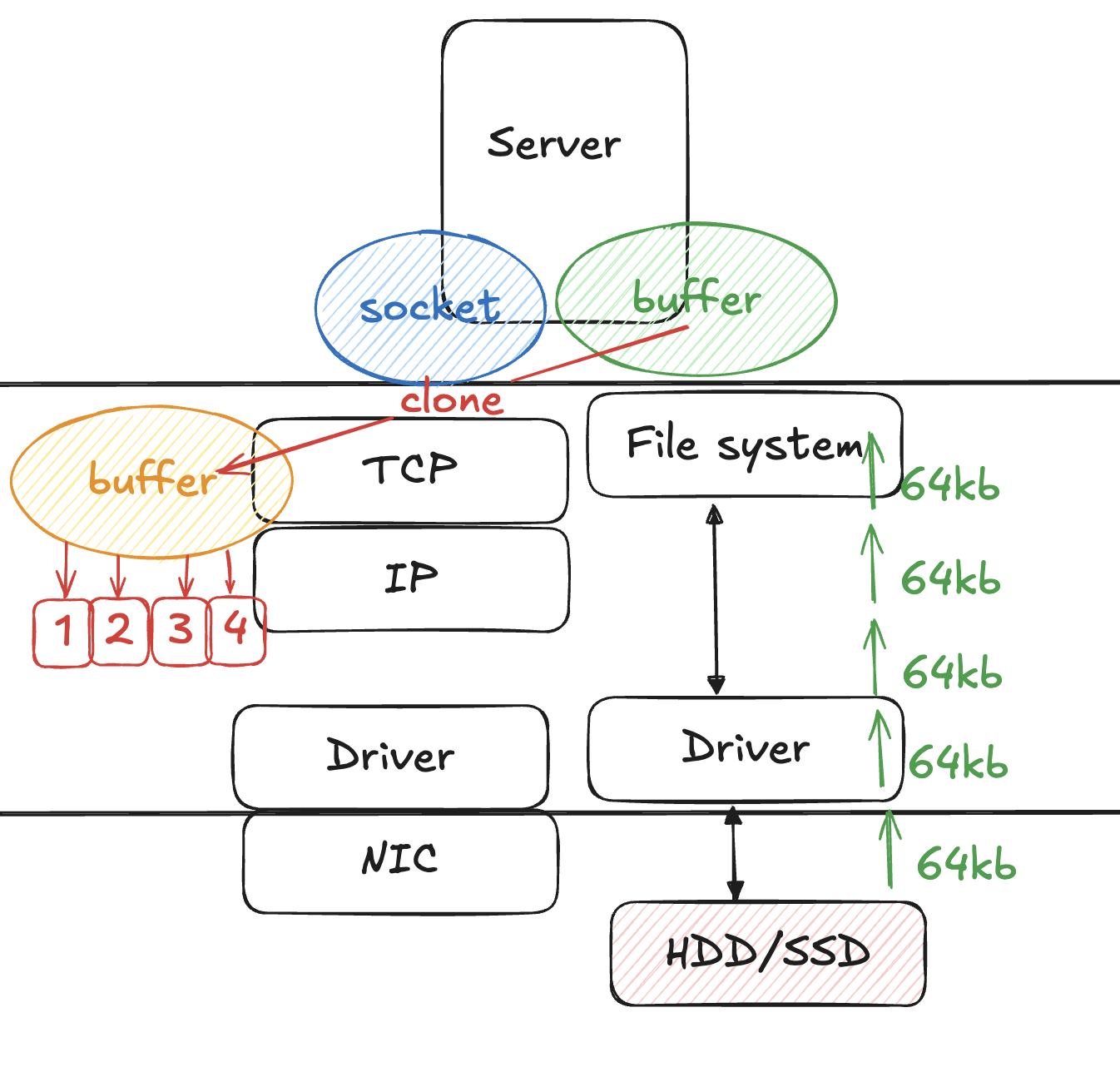

Now, you should know that every file is managed through the file system. Suppose the file we want to send over the network is about 2MB. So, it will look like this:

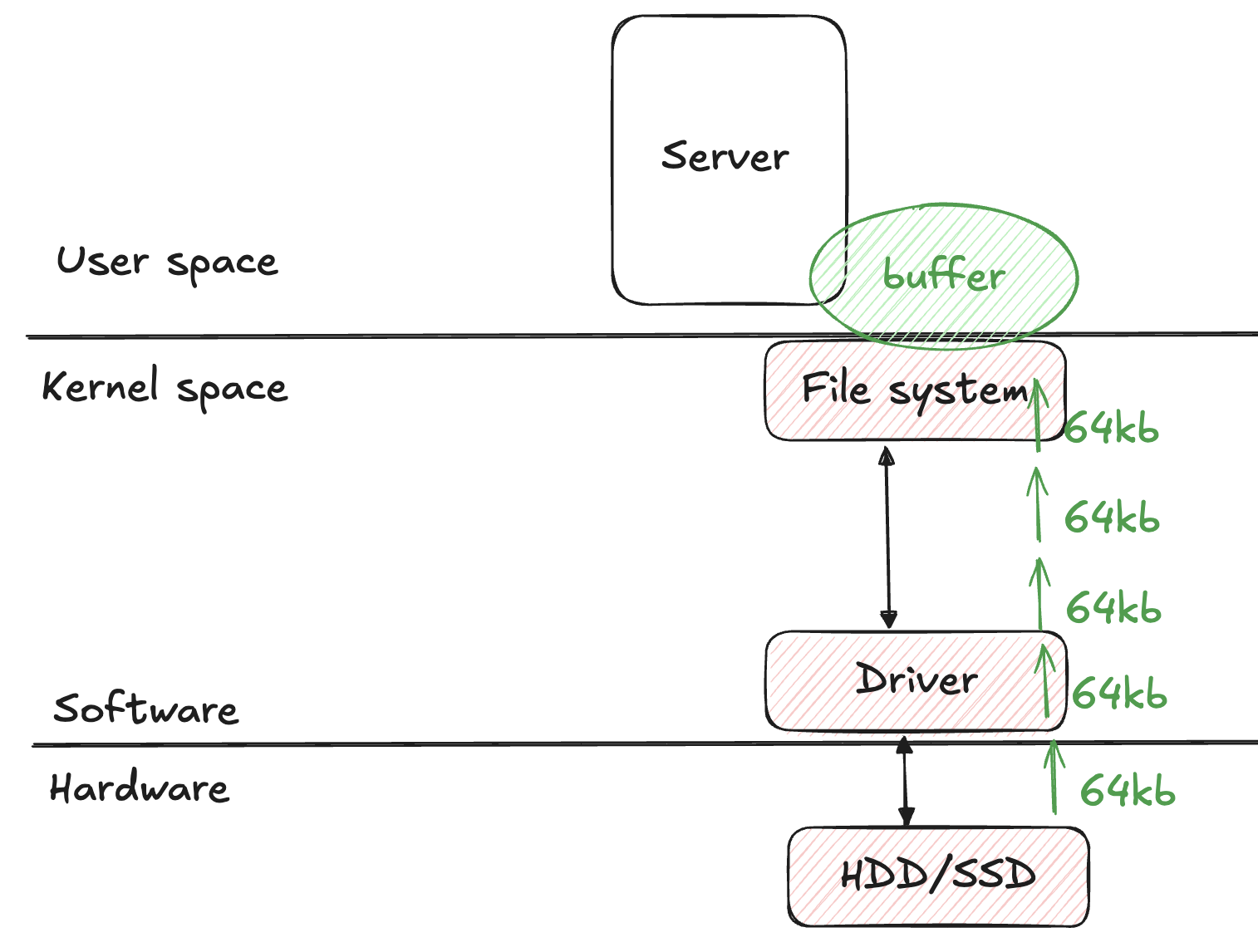

In this picture, what happens when we read the file? Does the whole file go straight into memory? No. The server reads the file chunk by chunk, and it uses a buffer to hold each chunk. So your 2MB file will come into the server as follows:

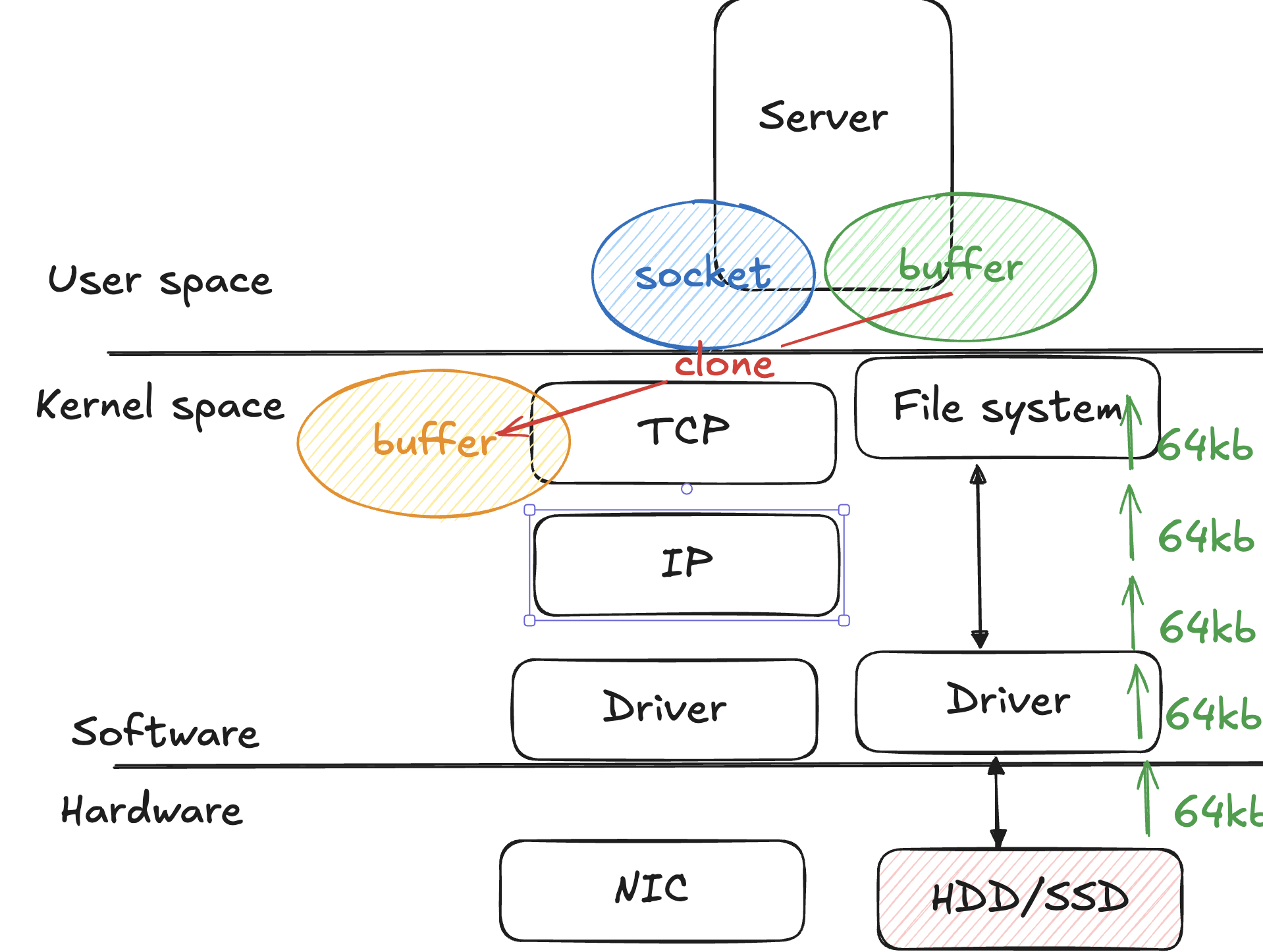
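To make the chunked read concrete, here is a minimal sketch. The function name `read_in_chunks` and the 4096-byte buffer used in the note below are assumptions for illustration, not something the original server is known to use.

```rust
use std::io::{self, Read};

/// Reads everything from `source` through one fixed-size application buffer
/// and returns the size of every chunk that was read.
/// (`read_in_chunks` is a hypothetical helper, not a library function.)
fn read_in_chunks<R: Read>(mut source: R, buf_size: usize) -> io::Result<Vec<usize>> {
    let mut buf = vec![0u8; buf_size]; // the application buffer
    let mut chunk_sizes = Vec::new();
    loop {
        let n = source.read(&mut buf)?; // fills at most `buf_size` bytes
        if n == 0 {
            break; // 0 bytes read means end of input
        }
        // A real server would process or forward `&buf[..n]` here.
        chunk_sizes.push(n);
    }
    Ok(chunk_sizes)
}
```

With a 4096-byte buffer, a 2MB input read from memory takes 512 reads. For a real `std::fs::File` a single `read` call may return fewer bytes than the buffer holds, so the count can be higher.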

So, if we shift to the "file" for the network, namely the socket, what is going to happen?

You have buffers, not just one

Let's consider the buffer we saw above as the application buffer. The critical point here is that the TCP side, which resides in kernel space, also has its own buffer. Let's call it the TCP buffer.

So, when you send data over the network from your server to the client, the data is first copied from the application buffer into the TCP buffer.

This kind of I/O processing is called buffered I/O, and it is the default.
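The two-buffer path can be sketched as below. `send_through_buffer` is a hypothetical helper, written generically over `Read` and `Write` so it can be tried without a network. The kernel-side buffer is not visible in user code; it only appears here as the destination of `write_all` when `socket` is a real `TcpStream`.

```rust
use std::io::{self, Read, Write};

/// Pumps bytes from `file` to `socket` through one application buffer and
/// returns the number of bytes moved.
fn send_through_buffer<R: Read, W: Write>(
    mut file: R,
    mut socket: W,
    app_buf_size: usize,
) -> io::Result<u64> {
    let mut app_buf = vec![0u8; app_buf_size]; // buffer #1: user space
    let mut total = 0u64;
    loop {
        let n = file.read(&mut app_buf)?; // file -> application buffer
        if n == 0 {
            return Ok(total); // end of file
        }
        // With a blocking TcpStream, this call copies the bytes into the
        // kernel's send buffer (buffer #2) and blocks while that buffer is full.
        socket.write_all(&app_buf[..n])?;
        total += n as u64;
    }
}
```

In a server, `file` would typically be a `std::fs::File` and `socket` a `std::net::TcpStream`; both implement the traits used here.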

Segmentation

When data in the TCP buffer is passed down to the IP level, it goes through what's called segmentation: the byte stream is cut into pieces, each no larger than the maximum segment size (MSS).

Note that this time, however, each piece also gets metadata such as a sequence number, so the receiving end can reassemble the data even if the segments arrive out of order.
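A small sketch of the idea, under simplifying assumptions: the `Segment` type and both functions are hypothetical, sequence numbers here are plain byte offsets starting at 0 (real TCP starts from a randomly chosen initial sequence number), and `mss` must be greater than 0.

```rust
/// One piece of the byte stream plus the metadata needed to put it back.
#[derive(Debug, Clone, PartialEq)]
struct Segment {
    seq: u32,         // byte offset of the first payload byte
    payload: Vec<u8>, // at most `mss` bytes
}

/// Cuts `data` into segments of at most `mss` bytes each.
fn segment(data: &[u8], mss: usize) -> Vec<Segment> {
    data.chunks(mss)
        .enumerate()
        .map(|(i, chunk)| Segment {
            seq: (i * mss) as u32,
            payload: chunk.to_vec(),
        })
        .collect()
}

/// Restores the original byte stream, whatever order the segments arrived in.
fn reassemble(mut segments: Vec<Segment>) -> Vec<u8> {
    segments.sort_by_key(|s| s.seq);
    segments.into_iter().flat_map(|s| s.payload).collect()
}
```

Because every segment carries its own offset, the receiver can sort by `seq` and get the original bytes back even if the last segment shows up first.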

Frame

By now you should understand that data is chunked, buffered, and segmented. Before it goes over the network, there is one more thing to do: putting it into a frame. You can think of a TCP packet as a box, and a frame as the delivery vehicle.

If you have ever made a purchase on the internet, you'd know that your item doesn't come directly to your place. It makes quite a few stops along the way.

The same thing happens to your data. At every stop the old frame is discarded (decapsulation) and a new frame is created (encapsulation), while the packet inside stays the same.
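The box-and-vehicle picture can be sketched like this. All types and functions are hypothetical and heavily simplified: a real frame carries more fields (type, checksum), and a real router also touches the packet header, for example by decrementing the TTL.

```rust
/// The "box": addressed end to end, unchanged along the way in this sketch.
#[derive(Debug, Clone, PartialEq)]
struct Packet {
    dst_ip: [u8; 4],
    data: Vec<u8>,
}

/// The "delivery vehicle": only valid for a single hop.
#[derive(Debug, Clone, PartialEq)]
struct Frame {
    src_mac: [u8; 6],
    dst_mac: [u8; 6],
    packet: Packet,
}

/// Wraps a packet in a frame addressed to the next hop.
fn encapsulate(packet: Packet, src_mac: [u8; 6], dst_mac: [u8; 6]) -> Frame {
    Frame { src_mac, dst_mac, packet }
}

/// Throws the frame away and keeps the packet.
fn decapsulate(frame: Frame) -> Packet {
    frame.packet
}

/// What an intermediate hop does: unwrap, then re-wrap for the next hop.
fn forward(frame: Frame, my_mac: [u8; 6], next_hop_mac: [u8; 6]) -> Frame {
    let packet = decapsulate(frame);
    encapsulate(packet, my_mac, next_hop_mac)
}
```

After `forward`, the frame differs from the one that arrived, but decapsulating it yields the same packet.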

Client side

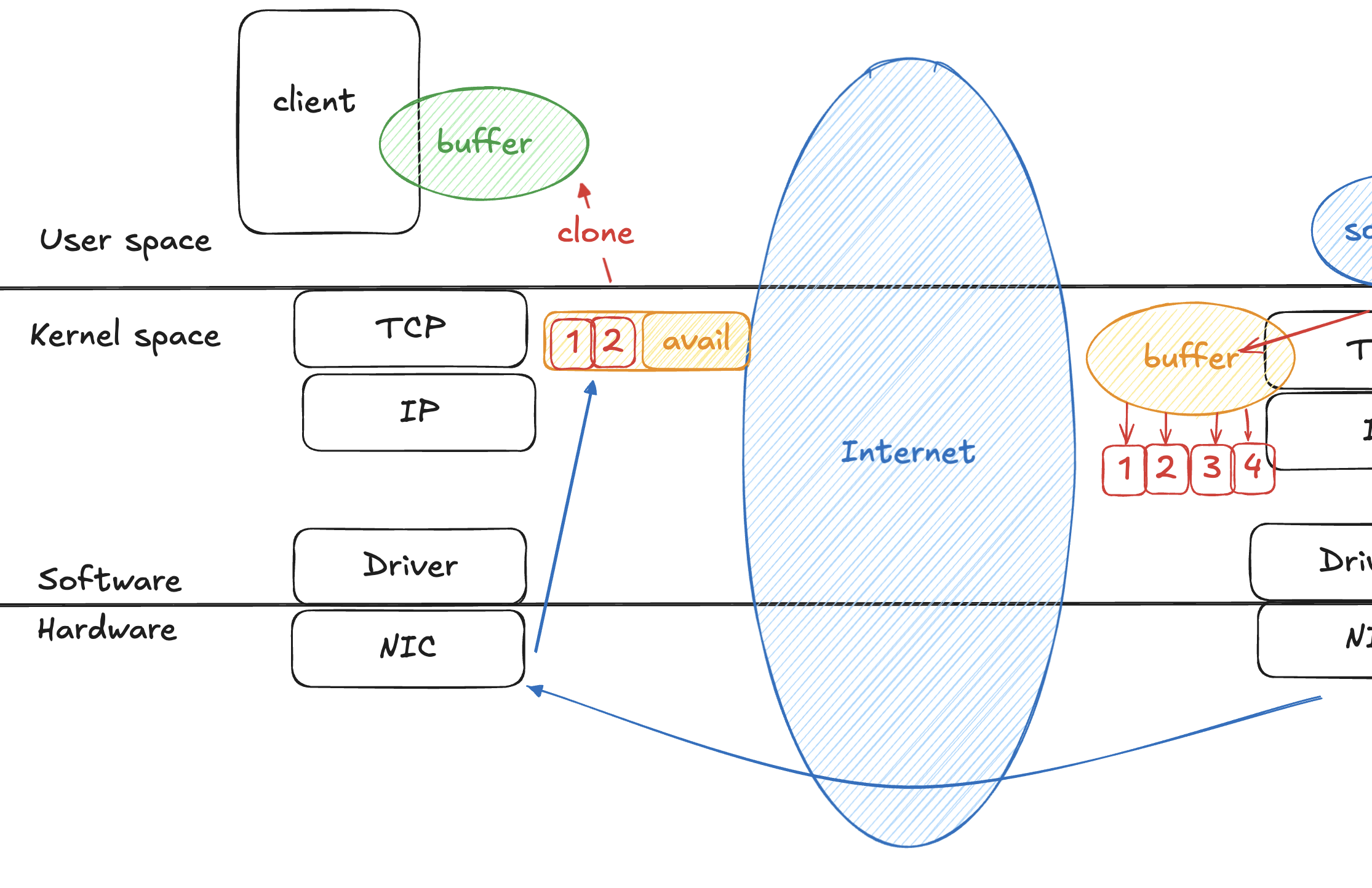

Skipping over the physical layer, optical fiber, and so on, let's say the frame has arrived on the client side. What happens there? Quite the opposite:

You can see in the picture that the client gets the TCP packets with sequence numbers 1 and 2. Now, the client sends a message back to the server saying "I received up to #2. Give me #3." This message is what we call an ACK.

Wait, didn't we send 1, 2, 3, 4? - No, not this time. After sending a certain number of segments, the server waits for their ACK before it sends more.

The implication is that if the server has to wait a long time for an ACK to arrive, it introduces delay. This is one of the main reasons why programmers often say "TCP is slower than UDP."

You can also see from the picture that the client has a fixed-size buffer with some space still available. The amount of free space is what we call the window size.
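The "I received up to #2, give me #3" rule can be written down in a few lines. This is a sketch that numbers whole segments from 1, as the picture does; real TCP acknowledges byte offsets. `next_ack` is a hypothetical name.

```rust
/// Returns the cumulative ACK: the lowest segment number (counting from 1)
/// that has not been received yet.
fn next_ack(received: &[u32]) -> u32 {
    let mut expected = 1;
    // Walk forward until we hit the first gap.
    while received.contains(&expected) {
        expected += 1;
    }
    expected
}
```

Note that a gap holds the ACK back: if segments 1, 2, and 4 have arrived, the client still asks for #3.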

Going back to server

Okay, now we've learned that the client side has a window size and sends ACKs to the server. Interestingly, the ACK includes the client's window size. - THIS is important because the server decides how much more data it can send to the client based on the window size included in the ACK.

Imagine the window size in an ACK comes back as 0. Then the server will NOT send the data in its TCP buffer to the client. The basic calculation is as follows:

    if (Window size >= MSS) {
        send
    } else {
        wait
    }

What does "delay" mean?

From what we've learned so far, we should be able to make an educated guess about what "delay" means in client-server data transfer.

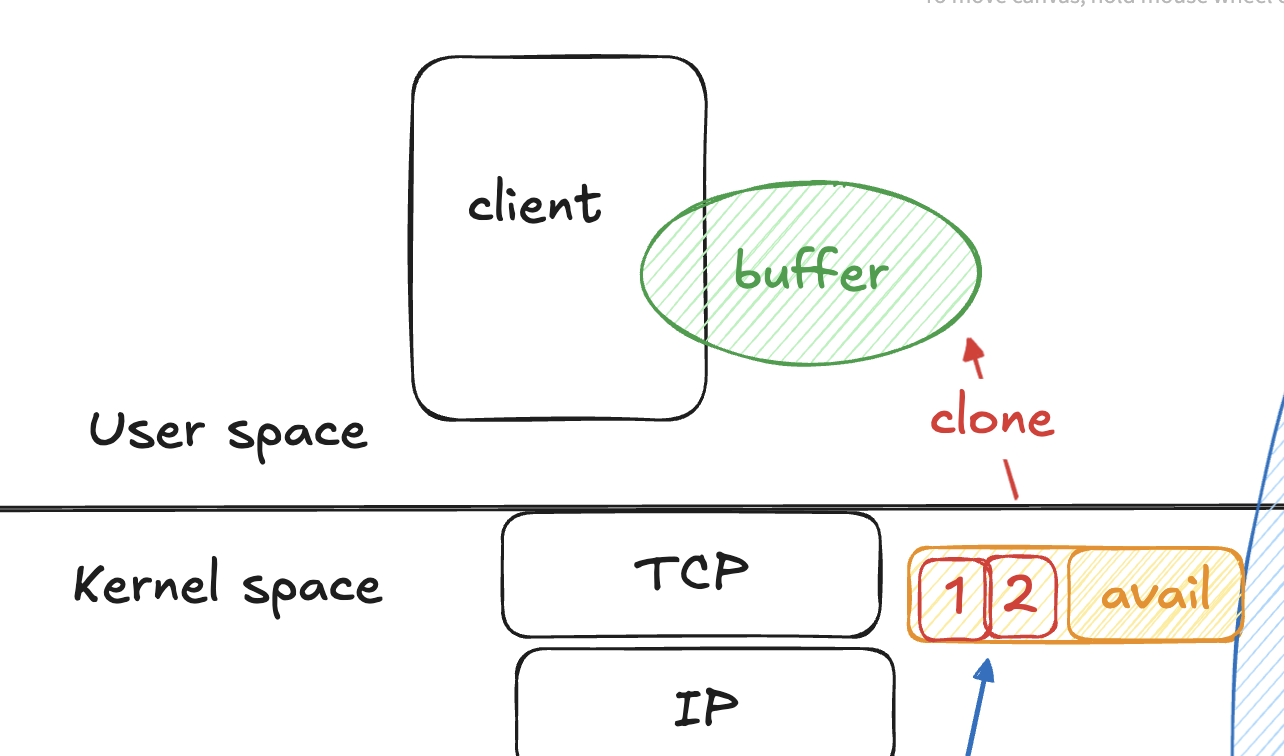

Let's take a closer look at the following picture:

The critical question to ask is: what is the condition for flushing the client's TCP buffer? - It's when the client application successfully copies the data out into its application buffer. If that gets slow, the window size gets smaller, and that in turn blocks the server from sending its data to the client.

Simply put, the following should be met to improve client side experience:

read speed > network transfer speed

Contrary to the common belief that "the server is too slow," delays on the receiving side caused by lag in draining the TCP buffer are a frequent issue. This happens when:

- Heavy Processing on the Client: If the client application performs expensive computations or is overwhelmed by other tasks, it may not read from the TCP buffer quickly enough.

- Inefficient Code: A poorly written application may introduce artificial delays in reading data.
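The effect of a slow reader can be seen in a toy simulation. Everything here is an assumption made for illustration: time advances in abstract ticks, the server may send one segment per tick if the client's free buffer space can hold it, and the client application reads a fixed number of bytes per tick. `simulate` is a hypothetical function, not a model of any real TCP stack.

```rust
/// Simulates a transfer and returns `(ticks, stalled_ticks)`:
/// total ticks until the client app has read everything, and the number of
/// ticks in which the server had data but the window was too small to send.
fn simulate(
    total_bytes: usize,
    mss: usize,
    recv_buf: usize,
    app_read_per_tick: usize,
) -> (usize, usize) {
    assert!(mss > 0 && recv_buf >= mss && app_read_per_tick > 0);
    let mut sent = 0; // bytes the server has put on the wire
    let mut buffered = 0; // bytes sitting in the client's TCP buffer
    let (mut ticks, mut stalled) = (0, 0);
    while sent < total_bytes || buffered > 0 {
        ticks += 1;
        if sent < total_bytes {
            let window = recv_buf - buffered; // free space advertised in the ACK
            let seg = mss.min(total_bytes - sent);
            if window >= seg {
                buffered += seg;
                sent += seg;
            } else {
                stalled += 1; // server must wait for the client to drain
            }
        }
        // The client application copies data out of the TCP buffer.
        buffered -= app_read_per_tick.min(buffered);
    }
    (ticks, stalled)
}
```

With 10,000 bytes, an MSS of 1,000, and a 4,000-byte receive buffer, a client that reads 1,000 bytes per tick never stalls the server. Halving the client's read rate fills the buffer, the window closes, and the server starts skipping ticks even though the network itself has not changed.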

This of course happens between servers

"Client" is just a general word for anyone that requests something from a server. So this can happen in server-to-server (or peer-to-peer) interaction as well.

The following code will most likely cause a lot of failures:

    // NOTE: `fetch_data` is not shown here; it is assumed to be an async
    // function defined elsewhere that requests data for one client.
    #[tokio::main]
    async fn main() {
        // Simulate a large number of clients
        let client_ids = 1..=10000;

        // Spawn a task for each client without throttling
        let mut handles = vec![];
        for client_id in client_ids {
            let handle = tokio::spawn(async move {
                fetch_data(client_id).await;
            });
            handles.push(handle);
        }

        // Wait for all tasks to complete
        for handle in handles {
            handle.await.unwrap();
        }
    }
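One common way to avoid flooding the other side is to cap how many requests are in flight at once. The sketch below shows the idea with only the standard library, so it stays self-contained: a fixed number of worker threads pull client ids from a shared queue. The names `fetch_all_bounded` and the blocking `fetch_data` stand-in are hypothetical; in a tokio program the same cap is usually expressed with `tokio::sync::Semaphore` rather than threads.

```rust
use std::sync::{mpsc, Arc, Mutex};
use std::thread;
use std::time::Duration;

/// Hypothetical stand-in for the real request; it just sleeps briefly.
fn fetch_data(_client_id: u32) {
    thread::sleep(Duration::from_millis(1));
}

/// Processes every id, but never runs more than `max_in_flight` fetches at
/// once. Returns the ids that completed, sorted.
fn fetch_all_bounded(client_ids: Vec<u32>, max_in_flight: usize) -> Vec<u32> {
    let (job_tx, job_rx) = mpsc::channel::<u32>();
    let jobs = Arc::new(Mutex::new(job_rx)); // queue shared by all workers
    let (done_tx, done_rx) = mpsc::channel::<u32>();

    let workers: Vec<_> = (0..max_in_flight)
        .map(|_| {
            let jobs = Arc::clone(&jobs);
            let done = done_tx.clone();
            thread::spawn(move || loop {
                // The lock guard is dropped at the end of this statement, so
                // other workers can take jobs while this one is fetching.
                let job = jobs.lock().unwrap().recv();
                match job {
                    Ok(id) => {
                        fetch_data(id);
                        done.send(id).unwrap();
                    }
                    Err(_) => break, // queue closed: no more work
                }
            })
        })
        .collect();
    drop(done_tx); // only the workers hold result senders now

    for id in client_ids {
        job_tx.send(id).unwrap();
    }
    drop(job_tx); // closing the queue lets the workers exit

    for worker in workers {
        worker.join().unwrap();
    }
    let mut finished: Vec<u32> = done_rx.iter().collect();
    finished.sort();
    finished
}
```

Calling `fetch_all_bounded((1..=10000).collect(), 64)` would still serve all 10,000 clients, but the peer only ever sees 64 concurrent requests. The value 64 is arbitrary and would need tuning against what the other side can actually absorb.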