0721[Kubernetes]

📌 Review

📙 Manual placement

apiVersion: v1
kind: Pod
metadata:
  name: pod-nodename-metadata
  labels:
    app: pod-nodename-labels
spec:
  containers:
  - name: pod-nodename-containers
    image: nginx
    ports:
    - containerPort: 80
  nodeName: worker1
---
apiVersion: v1
kind: Service
metadata:
  name: pod-nodename-service
spec:
  type: NodePort
  selector:
    app: pod-nodename-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@master1 test2]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ad-hoc1 1/1 Running 1 16h 10.244.1.27 worker1 <none> <none>

pod-nodename-metadata 1/1 Running 0 20s 10.244.1.29 worker1 <none> <none>

pod-nodeselector-metadata 0/1 NodeAffinity 0 15h <none> worker1 <none> <none>

pod-schedule-metadata 1/1 Running 1 16h 10.244.2.27 worker2 <none> <none>

📙 Node selector (manual placement 2)

✔️ Labeling

[root@master1 test2]# kubectl label node worker2 app=dev

[root@master1 test2]# kubectl get node --show-labels

NAME STATUS ROLES AGE VERSION LABELS

worker2 Ready <none> 45h v1.19.16 app=dev,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=worker2,kubernetes.io/os=linux

✔️ Create a pod with a node selector

# vi pod-nodeselector.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-nodeselector-metadata-app
  labels:
    app: pod-nodeselector-labels
spec:
  containers:
  - name: pod-nodeselector-containers
    image: nginx
    ports:
    - containerPort: 80
  nodeSelector:
    app: dev
---
apiVersion: v1
kind: Service
metadata:
  name: pod-nodeselector-service2
spec:
  type: NodePort
  selector:
    app: pod-nodeselector-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@master1 test2]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ad-hoc1 1/1 Running 1 16h 10.244.1.27 worker1 <none> <none>

pod-nodename-metadata 1/1 Running 0 7m30s 10.244.1.29 worker1 <none> <none>

pod-nodeselector-metadata 0/1 NodeAffinity 0 16h <none> worker1 <none> <none>

pod-nodeselector-metadata-app 1/1 Running 0 10s 10.244.2.28 worker2 <none> <none>

pod-schedule-metadata 1/1 Running 1 16h 10.244.2.27 worker2 <none> <none>
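The same placement constraint can also be expressed as a node affinity rule instead of `nodeSelector`. The manifest below is a minimal sketch, assuming the `app=dev` label is still present on worker2; the pod and container names are hypothetical and do not appear in the original notes.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-nodeaffinity-example        # hypothetical name
  labels:
    app: pod-nodeselector-labels
spec:
  containers:
  - name: pod-nodeaffinity-containers   # hypothetical name
    image: nginx
    ports:
    - containerPort: 80
  affinity:
    nodeAffinity:
      # Hard requirement at scheduling time, like nodeSelector
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: app
            operator: In
            values:
            - dev
```

As with `nodeSelector`, the pod stays Pending if no node carries the label.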

✔️ Remove the assigned label

# kubectl label nodes worker2 app-

[root@master1 test2]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

master1 Ready master 46h v1.19.16 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master1,kubernetes.io/os=linux,node-role.kubernetes.io/master=

worker1 Ready <none> 45h v1.19.16 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=worker1,kubernetes.io/os=linux

worker2 Ready <none> 45h v1.19.16 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=worker2,kubernetes.io/os=linux

📌 taint, toleration

📙 Taint test

# kubectl taint node worker1 tiger=cat:NoSchedule ## the key (tiger) and value (cat) are arbitrary names

# kubectl taint node worker2 tiger=cat:NoSchedule

[root@master1 test2]# kubectl describe nodes worker2 | grep Taints

Taints: tiger=cat:NoSchedule
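For reference, a taint set with `kubectl taint` is stored in the node object under `spec.taints`. The fragment below is a sketch of roughly what `kubectl get node worker2 -o yaml` would show for this taint, with every other field omitted.

```yaml
# Fragment of the Node object for worker2; all other fields omitted
apiVersion: v1
kind: Node
metadata:
  name: worker2
spec:
  taints:
  - key: tiger
    value: cat
    effect: NoSchedule   # new pods without a matching toleration are not scheduled here
```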

[root@master1 test2]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test-pod 0/1 Pending 0 7s <none> <none> <none> <none>

📙 toleration

# vi pod-taint.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-taint-metadata
  labels:
    app: pod-taint-labels
spec:
  containers:
  - name: pod-taint-containers
    image: nginx
    ports:
    - containerPort: 80
  tolerations:
  - key: "tiger"
    operator: "Equal"
    value: "cat"
    effect: "NoSchedule"
---
apiVersion: v1
kind: Service
metadata:
  name: pod-taint-service
spec:
  type: NodePort
  selector:
    app: pod-taint-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
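With `operator: "Equal"`, the toleration matches only when key, value, and effect all match the taint. As a hedged variation that is not part of the original notes, `operator: "Exists"` matches any value for the key and takes no `value` field. The fragment below would stand in for the `tolerations` block of the pod spec.

```yaml
# Pod spec fragment: tolerate any NoSchedule taint whose key is "tiger",
# regardless of the taint's value
tolerations:
- key: "tiger"
  operator: "Exists"
  effect: "NoSchedule"
```

A toleration only allows scheduling onto the tainted node; it does not force the pod onto that node.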

📌 AWS - EKS(Elastic Kubernetes Service)

📙 Create an instance

Name: docker

Type: t2.micro

Create a key pair and select it

Network: my-vpc, subnet: public subnet 2c

Security group: MY-SG-WEB

User data:

#!/bin/bash

cd /tmp

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

unzip awscliv2.zip

./aws/install

sudo amazon-linux-extras install docker -y

sudo systemctl start docker && systemctl enable docker

curl https://raw.githubusercontent.com/docker/docker-ce/master/components/cli/contrib/completion/bash/docker -o /etc/bash_completion.d/docker.sh

sudo usermod -a -G docker ec2-user

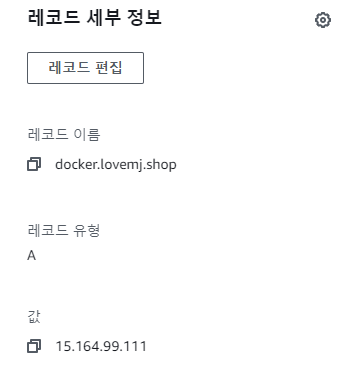

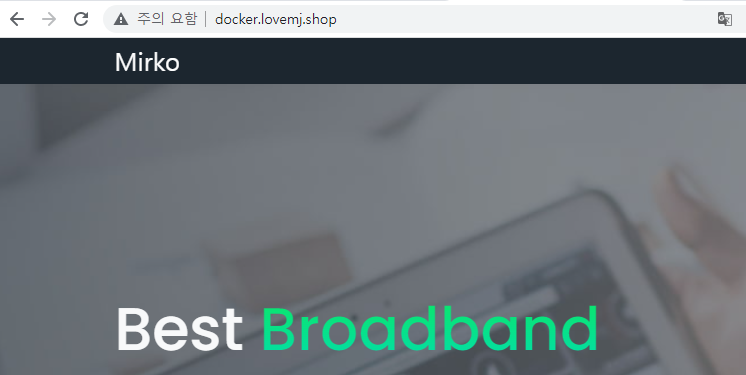

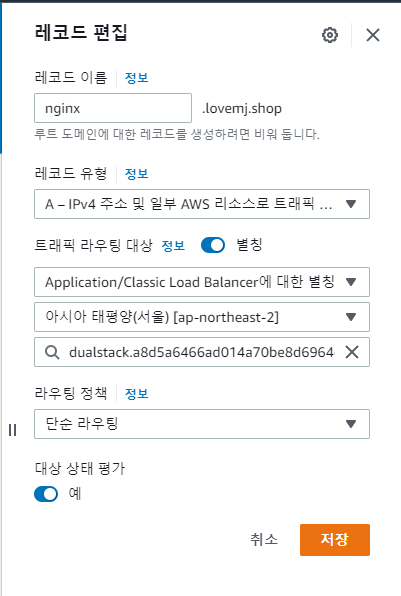

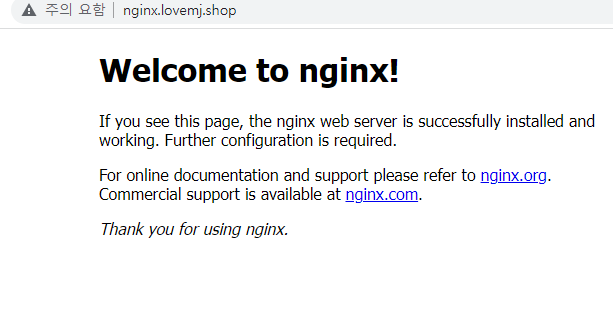

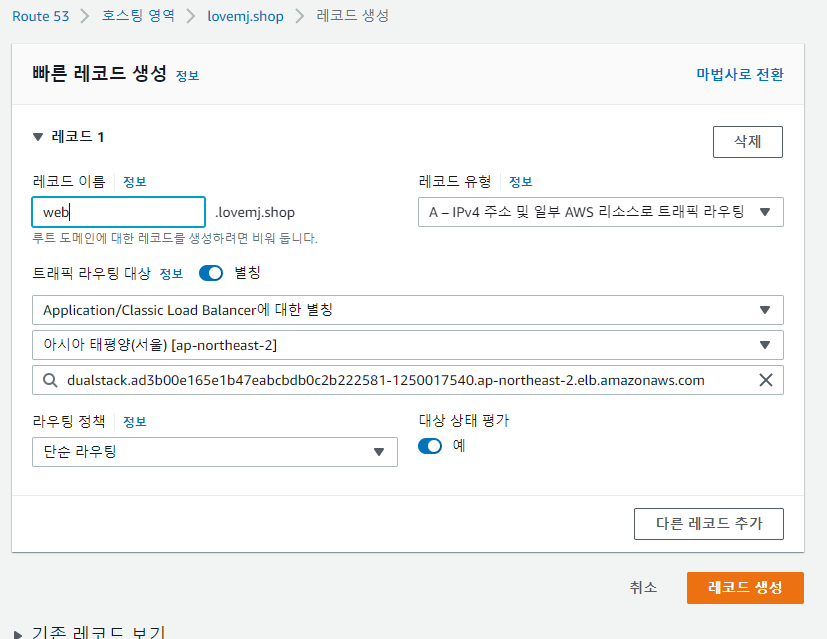

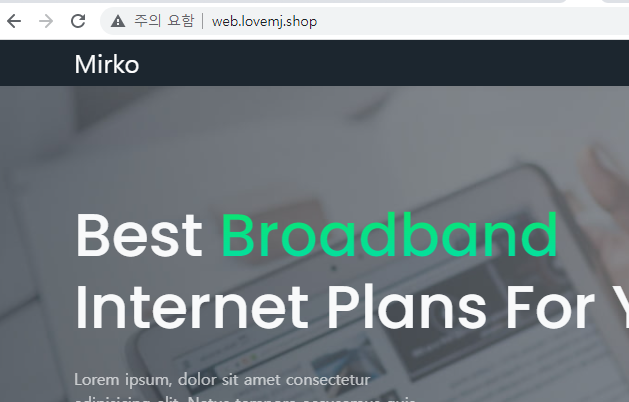

📙 Assign a domain to the public IP

📙 Pull an image from Docker Hub

Connect with MobaXterm and run:

$ docker run -d -p 80:80 --name=test-site mj030kk/web-site:v2.0

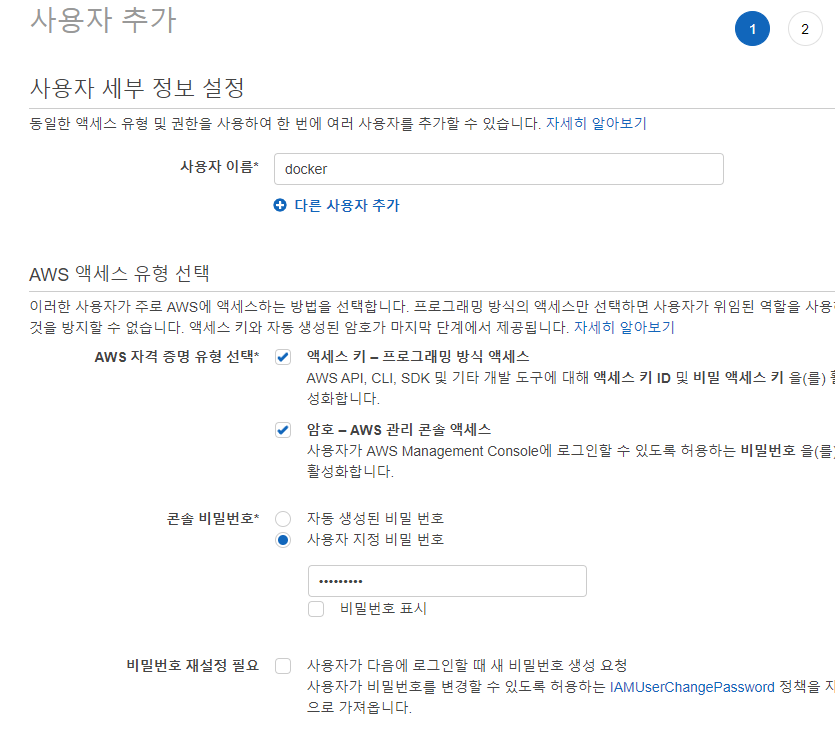

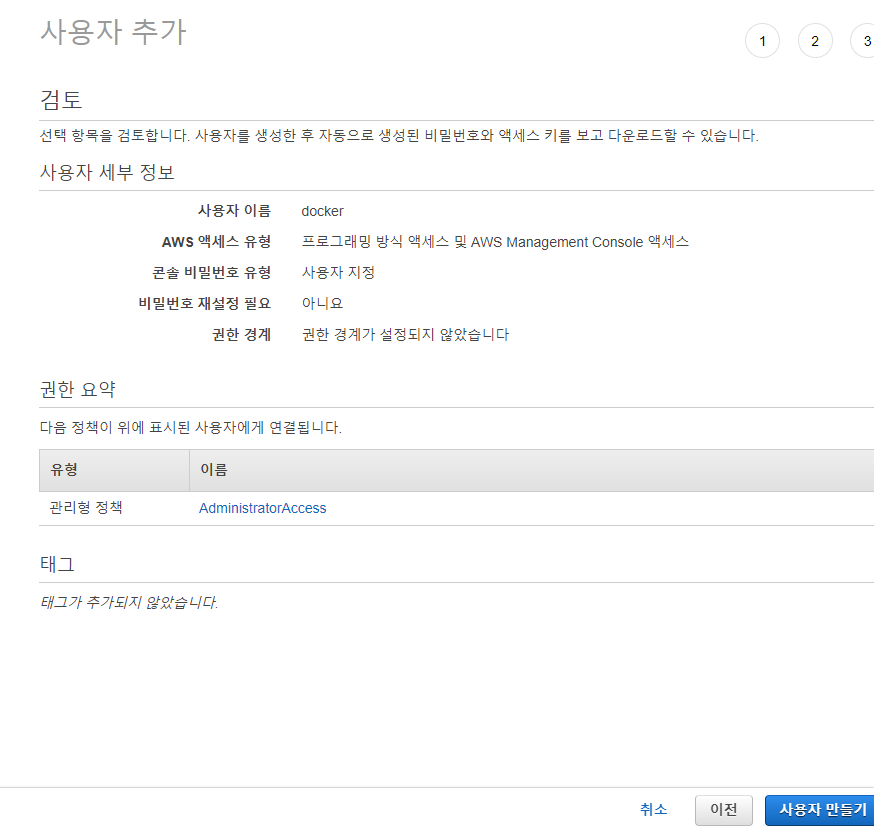

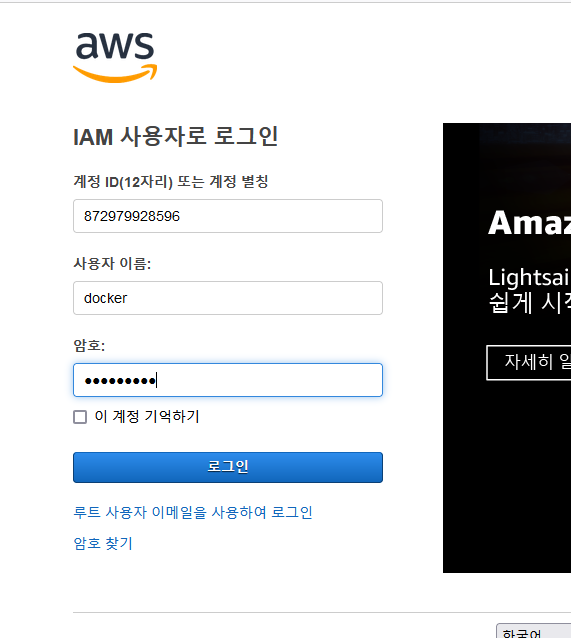

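📙 Create an IAM user

✔️ IAM - Add user - User name: docker - Credential type: access key and password - Password: custom, with "require password reset" unchecked (the account is for my own use) - Next

✔️ Attach existing policies directly - select AdministratorAccess - Next - Next - Create user

✔️ Download the .csv file

📙 CLI login

[ec2-user@ip-10-14-33-225 ~]$ aws configure ## take the key values from the .csv file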

AWS Access Key ID [None]:

AWS Secret Access Key [None]:

Default region name [None]: ap-northeast-2

Default output format [None]: json

✔️ Deleting the ~/.aws folder removes the stored credentials, so the CLI is logged out. -> To use AWS without logging in, use a role. See ⭐️ IAM role under 📌 Misc.

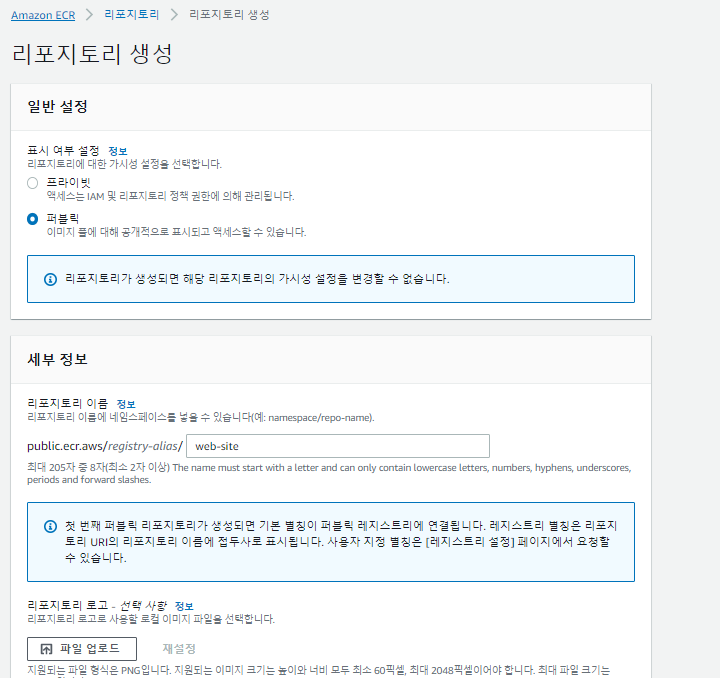

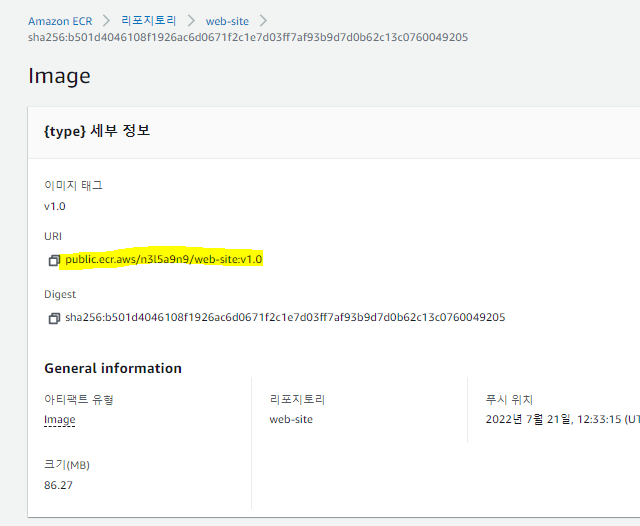

📙 ECR - Amazon Elastic Container Registry

✔️ ECS - ECR - Create repository

✔️ Visibility setting: Public

Details: name: web-site - click Create repository at the bottom

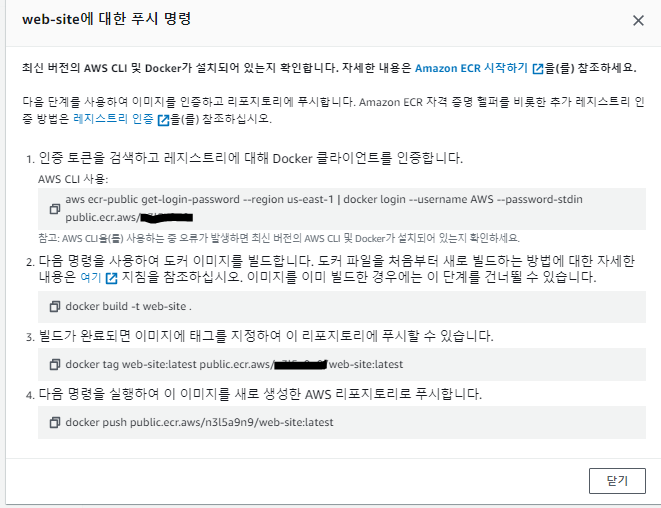

✔️ In MobaXterm, run the authentication token command shown above

[ec2-user@ip-10-14-33-225 ~]$ aws ecr-public get-login-password --region us-east-1 | docker login --username AWS --password-stdin public.ecr.aws/

WARNING! Your password will be stored unencrypted in /home/ec2-user/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

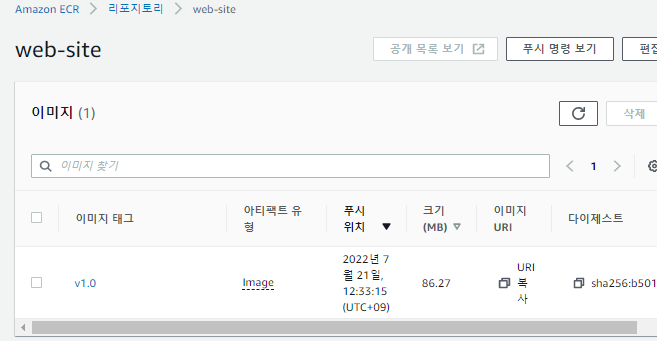

✔️ Check the repository URI, then tag the image and push it to the repository

[ec2-user@ip-10-14-33-225 ~]$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

mj030kk/web-site v2.0 cde791130344 8 days ago 172MB

$ docker tag mj030kk/web-site:v2.0 public.ecr.aws/n3l5a9n9/web-site:v1.0

[ec2-user@ip-10-14-33-225 ~]$ docker push public.ecr.aws/n3l5a9n9/web-site:v1.0

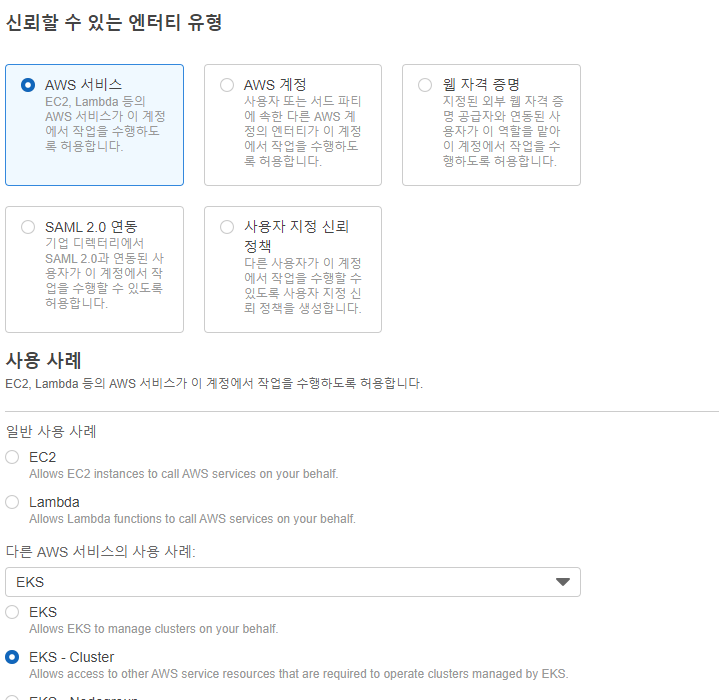

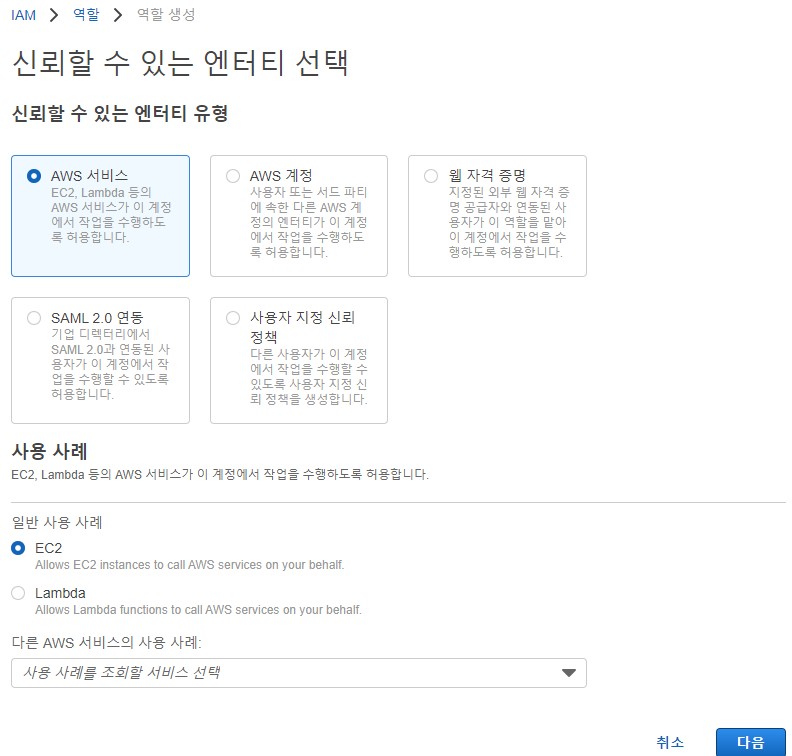

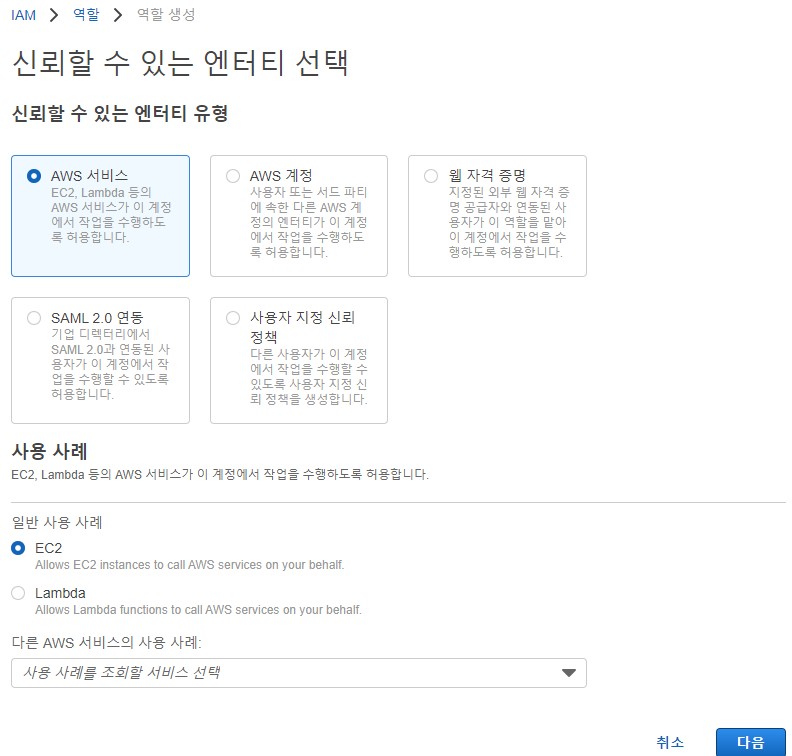

📙 Create an IAM role (EKS-Cluster)

✔️ IAM - Roles - Create role - AWS service - Use cases for other AWS services: EKS - EKS-Cluster - Next

✔️ Add permissions (already attached) - Next

✔️ Role name: eksClusterRole - Create role

📙 Log in as the IAM user - set up EKS (Elastic Kubernetes Service)

Chrome: root account, Firefox: IAM user

Do the following as the IAM user

✔️ EKS - Add cluster - Create

Name: EKS-CLUSTER

Kubernetes version: 1.19

Cluster service role: eksClusterRole - Next

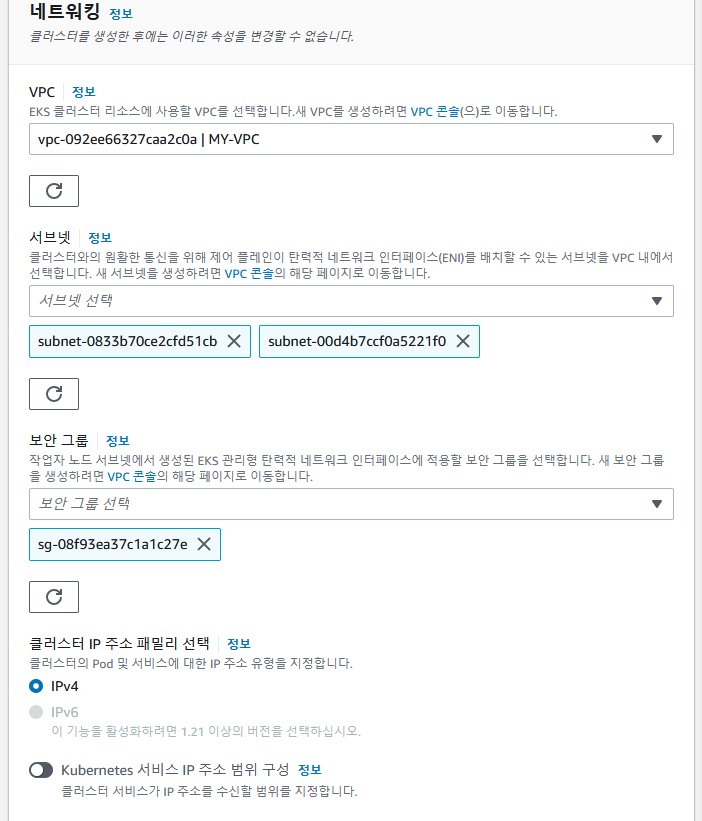

✔️ VPC: MY-VPC

Subnets: public 2a, 2c

Security group: MY-SG-WEB

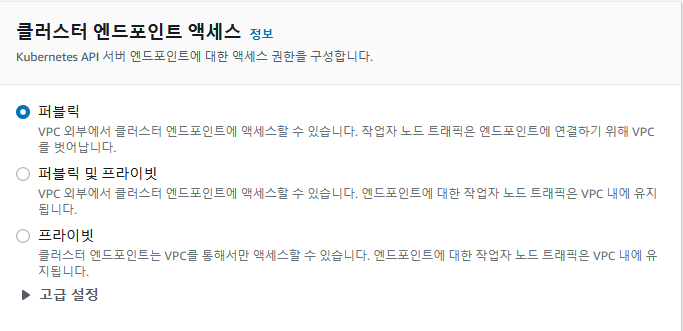

✔️ Cluster endpoint access: Public

✔️ Networking add-ons: default

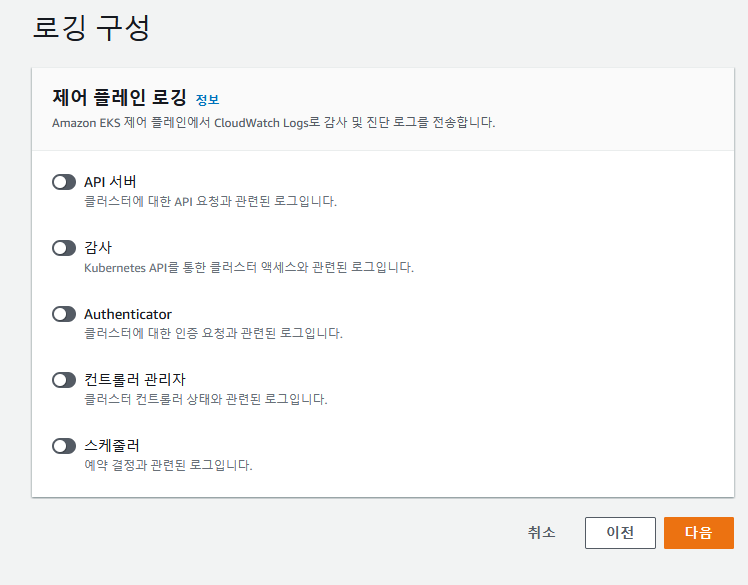

✔️ Logging configuration: default - Next - Create

📙 Cluster credentials (CLI)

https://docs.aws.amazon.com/ko_kr/eks/latest/userguide/install-kubectl.html

# curl -o kubectl https://s3.us-west-2.amazonaws.com/amazon-eks/1.19.6/2021-01-05/bin/linux/amd64/kubectl

# chmod +x ./kubectl

# sudo mv ./kubectl /usr/local/bin

$ source <(kubectl completion bash)

$ echo "source <(kubectl completion bash)" >> ~/.bashrc

$ kubectl version --short --client

$ aws eks --region ap-northeast-2 update-kubeconfig --name EKS-CLUSTER ## write a kubeconfig entry so kubectl can reach the master node (control plane)
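The `update-kubeconfig` command writes an entry into `~/.kube/config`. The sketch below shows the general shape of that file, assuming the cluster name and region used above. Values in angle brackets are placeholders, and the exact `apiVersion` of the exec plugin depends on the AWS CLI version, so treat this as an illustration, not the literal file.

```yaml
apiVersion: v1
kind: Config
clusters:
- name: arn:aws:eks:ap-northeast-2:<account-id>:cluster/EKS-CLUSTER
  cluster:
    server: https://<cluster-endpoint>            # placeholder
    certificate-authority-data: <base64-ca-cert>  # placeholder
contexts:
- name: arn:aws:eks:ap-northeast-2:<account-id>:cluster/EKS-CLUSTER
  context:
    cluster: arn:aws:eks:ap-northeast-2:<account-id>:cluster/EKS-CLUSTER
    user: arn:aws:eks:ap-northeast-2:<account-id>:cluster/EKS-CLUSTER
current-context: arn:aws:eks:ap-northeast-2:<account-id>:cluster/EKS-CLUSTER
users:
- name: arn:aws:eks:ap-northeast-2:<account-id>:cluster/EKS-CLUSTER
  user:
    exec:
      # kubectl calls the AWS CLI to obtain a short-lived token,
      # using the credentials stored by `aws configure`
      apiVersion: client.authentication.k8s.io/v1beta1   # may differ by CLI version
      command: aws
      args:
      - --region
      - ap-northeast-2
      - eks
      - get-token
      - --cluster-name
      - EKS-CLUSTER
```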

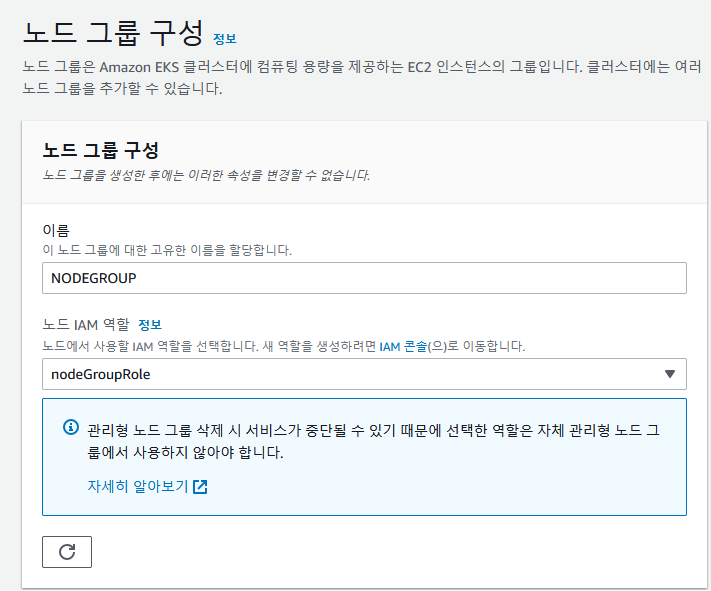

📙 Create an IAM role (for adding a node group to the cluster)

✔️ IAM - Roles - Create role - AWS service, EC2

✔️ Search for and check the policies below

AmazonEKSWorkerNodePolicy

AmazonEC2ContainerRegistryReadOnly

AmazonEKS_CNI_Policy

✔️ Role name: nodeGroupRole
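The notes create this role in the console. To make the trust relationship and attached policies explicit, here is a sketch of an equivalent role as a CloudFormation template. CloudFormation is swapped in purely for illustration, and the logical ID `NodeGroupRole` is hypothetical.

```yaml
Resources:
  NodeGroupRole:                 # hypothetical logical ID
    Type: AWS::IAM::Role
    Properties:
      RoleName: nodeGroupRole
      # "AWS service, EC2" in the console: EC2 instances may assume this role
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
        - Effect: Allow
          Principal:
            Service: ec2.amazonaws.com
          Action: sts:AssumeRole
      # The three managed policies checked in the console
      ManagedPolicyArns:
      - arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy
      - arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly
      - arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy
```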

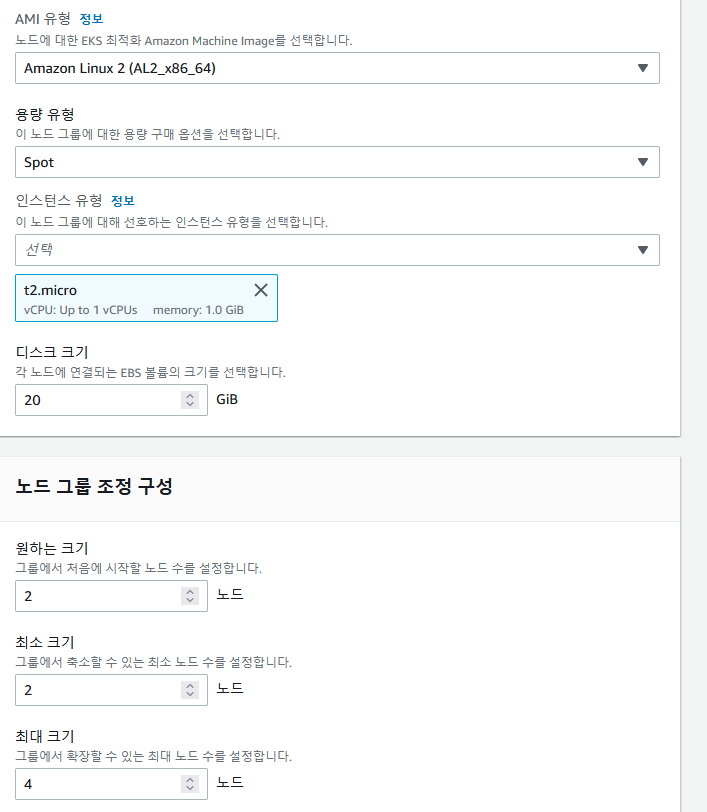

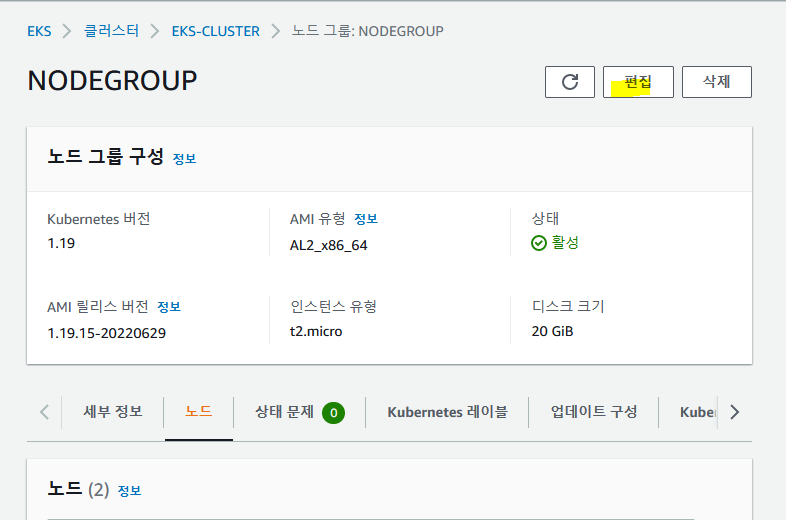

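📙 Add a node group as the docker user

✔️ Node group name: NODEGROUP

Role: nodeGroupRole - Next

✔️ AMI type: Amazon Linux 2

Capacity type: Spot

Instance type: t2.micro

Maximum size: 4 nodes

✔️ Keep the subnets as they are, enable SSH access configuration

Select the key pair, allow access from all sources - Next - Create node group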

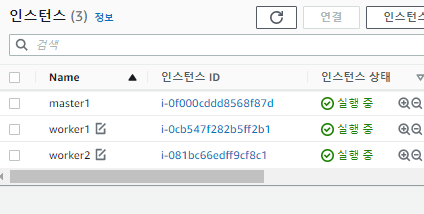

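✔️ From the root account, check the instance list, add name tags (worker1, worker2) to the newly created nodes, and rename the original docker EC2 instance to master1.

📢 That instance is not the master node. AWS creates the master node; this instance is only the client through which the master node is reached.

✔️ Confirm that MobaXterm can connect via the public IP.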

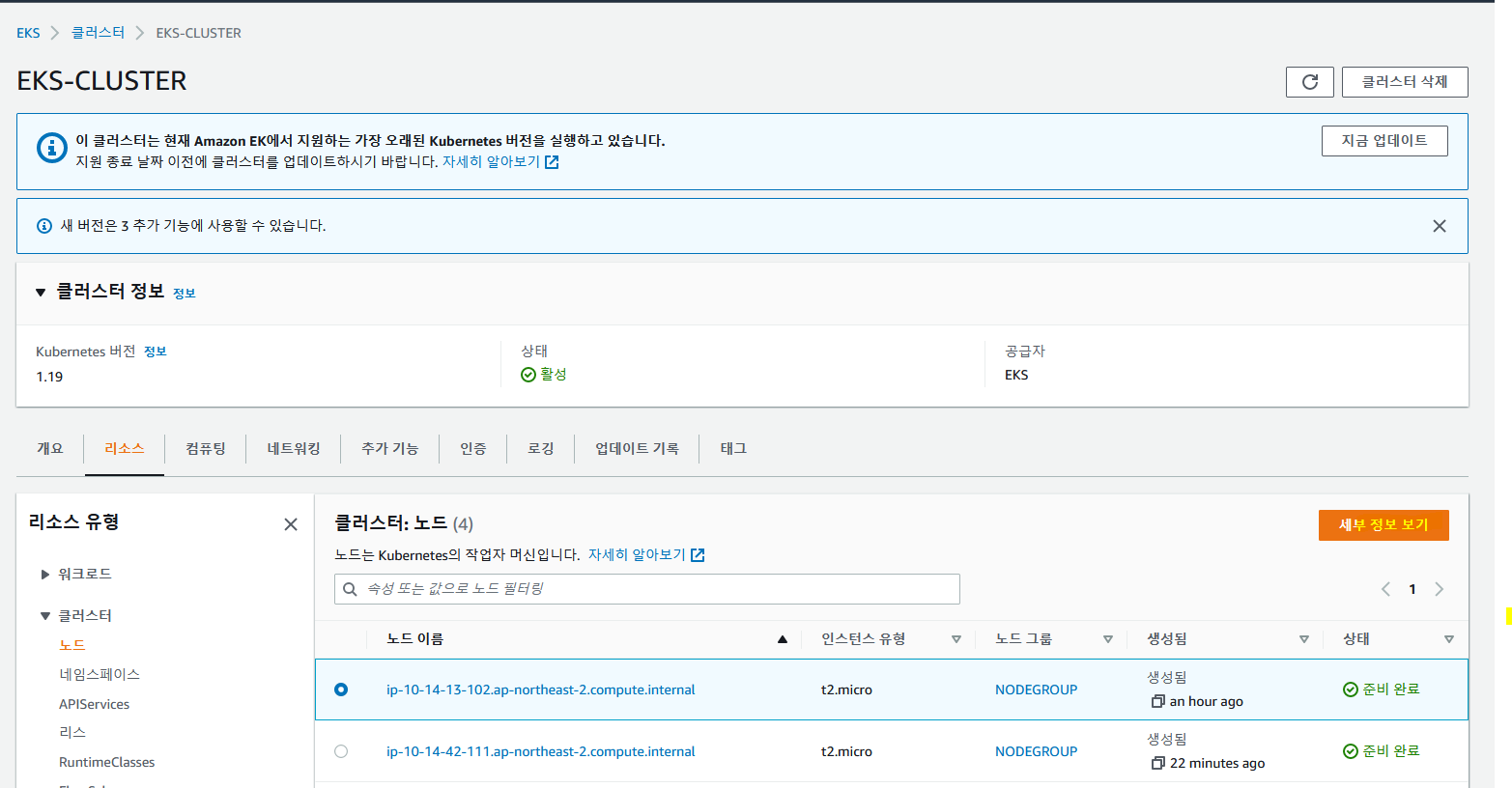

✔️ On docker.lovemj.shop, check that the nodes have joined the cluster.

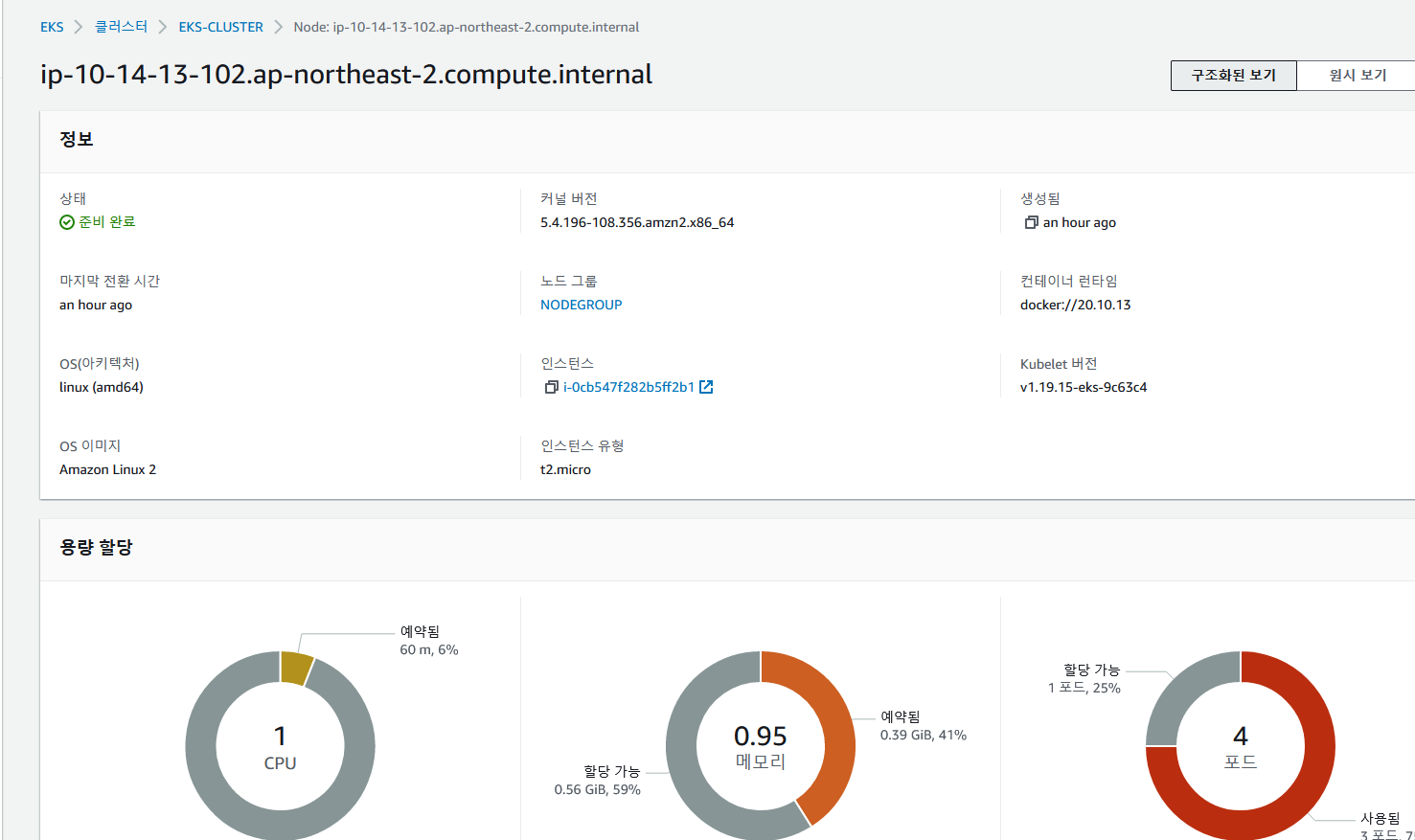

[ec2-user@ip-10-14-33-225 ~]$ kubectl get node

NAME STATUS ROLES AGE VERSION

ip-10-14-13-102.ap-northeast-2.compute.internal Ready <none> 8m32s v1.19.15-eks-9c63c4

ip-10-14-44-2.ap-northeast-2.compute.internal Ready <none> 8m23s v1.19.15-eks-9c63c4

📙 Using pods and services

✔️ Create pods

[ec2-user@ip-10-14-33-225 ~]$ mkdir workspace && cd $_

[ec2-user@ip-10-14-33-225 workspace]$ kubectl run nginx-pod --image=nginx

pod/nginx-pod created

[ec2-user@ip-10-14-33-225 workspace]$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-pod 1/1 Running 0 32s 10.14.40.245 ip-10-14-44-2.ap-northeast-2.compute.internal <none> <none>

[ec2-user@ip-10-14-33-225 workspace]$ kubectl run nginx-pod2 --image=nginx

pod/nginx-pod2 created

[ec2-user@ip-10-14-33-225 workspace]$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-pod 1/1 Running 0 69s 10.14.40.245 ip-10-14-44-2.ap-northeast-2.compute.internal <none> <none>

nginx-pod2 0/1 ContainerCreating 0 3s <none> ip-10-14-13-102.ap-northeast-2.compute.internal <none> <none>
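For comparison with the manifests used earlier, `kubectl run nginx-pod --image=nginx` creates roughly the following pod. This is a sketch in which only the essential fields are shown; the defaults that Kubernetes fills in are omitted.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    run: nginx-pod      # label added automatically by `kubectl run`
spec:
  containers:
  - name: nginx-pod     # container name defaults to the pod name
    image: nginx
```

The `run: nginx-pod` label matters later, because `kubectl expose pod` uses the pod's labels as the service selector.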

✔️ Edit the node count

[ec2-user@ip-10-14-33-225 workspace]$ kubectl get node

NAME STATUS ROLES AGE VERSION

ip-10-14-13-102.ap-northeast-2.compute.internal Ready <none> 27m v1.19.15-eks-9c63c4

ip-10-14-42-111.ap-northeast-2.compute.internal Ready <none> 75s v1.19.15-eks-9c63c4

ip-10-14-44-2.ap-northeast-2.compute.internal Ready <none> 27m v1.19.15-eks-9c63c4

ip-10-14-8-13.ap-northeast-2.compute.internal Ready <none> 99s v1.19.15-eks-9c63c4

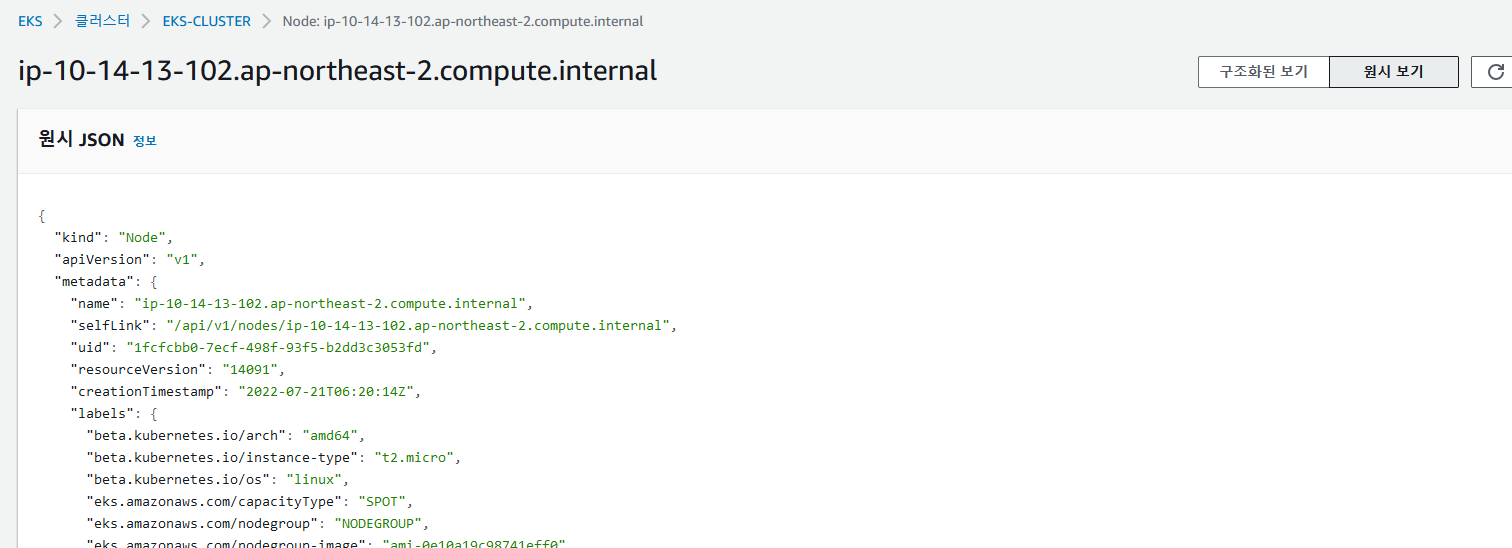

✔️ Check node information

✔️ pod, service

[ec2-user@ip-10-14-33-225 workspace]$ kubectl run nginx-pod --image=nginx

pod/nginx-pod created

[ec2-user@ip-10-14-33-225 workspace]$ kubectl expose pod nginx-pod --name clusterip --type ClusterIP --port 80

service/clusterip exposed

[ec2-user@ip-10-14-33-225 workspace]$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/nginx-pod 1/1 Running 0 52s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/clusterip ClusterIP 172.20.244.180 <none> 80/TCP 10s

service/kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 94m

[ec2-user@ip-10-14-13-102 ~]$ curl 172.20.244.180 ## run curl from worker1 or worker2

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>
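The `kubectl expose` command used for the ClusterIP service corresponds roughly to the manifest below. This is a sketch that assumes the pod was created with `kubectl run`, so it carries the label `run: nginx-pod`.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: clusterip
spec:
  type: ClusterIP       # reachable only from inside the cluster
  selector:
    run: nginx-pod      # copied from the pod's labels by `kubectl expose`
  ports:
  - protocol: TCP
    port: 80            # service port
    targetPort: 80      # container port; defaults to the value of `port`
```

Because the cluster IP is internal, the curl check is run from a worker node, not from outside the cluster.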

[ec2-user@ip-10-14-33-225 workspace]$ kubectl expose pod nginx-pod --name nodeport --type NodePort --port 80

service/nodeport exposed

[ec2-user@ip-10-14-33-225 workspace]$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/nginx-pod 1/1 Running 0 5m2s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/clusterip ClusterIP 172.20.244.180 <none> 80/TCP 4m20s

service/kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 98m

service/nodeport NodePort 172.20.32.121 <none> 80:30277/TCP 7s

[ec2-user@ip-10-14-13-102 ~]$ curl 10.14.13.102:30277 ## from a worker instance: <worker private IP>:<NodePort>

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>
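In the output above the node port (30277) was assigned automatically. As a hedged variation, a manifest can pin the port with the `nodePort` field; the sketch below reuses the service name and port from the notes.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nodeport
spec:
  type: NodePort
  selector:
    run: nginx-pod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30277     # optional; must lie in the NodePort range (30000-32767 by default)
```

If `nodePort` is omitted, Kubernetes picks a free port from the range, which is what happened with `kubectl expose`.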

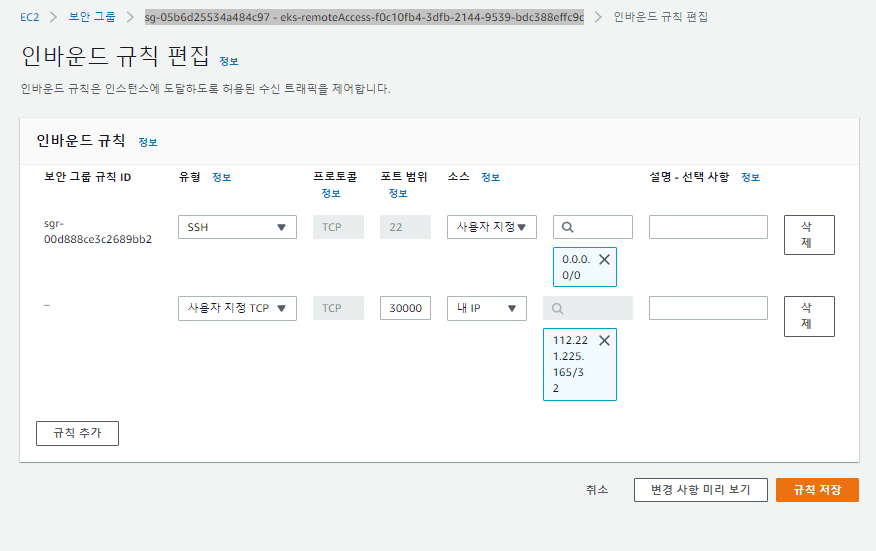

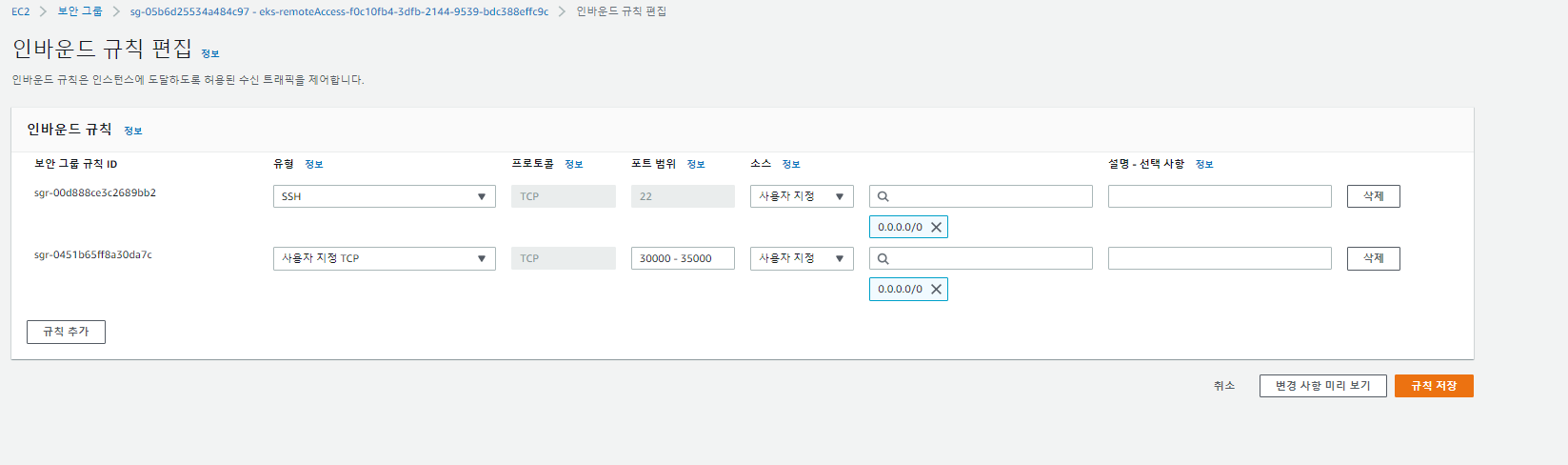

✔️ Edit inbound rules - sg-05b6d25534a484c97 - eks-remoteAccess-f0c10fb4-3dfb-2144-9539-bdc388effc9c - port range 3000-35000, source: My IP - Add rule

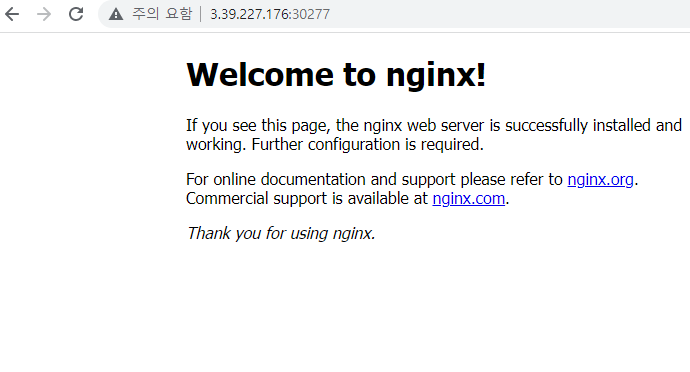

Connect to <worker public IP>:<NodePort>.

-> If the source is set to 0.0.0.0/0 instead of My IP, the page can also be checked with curl from MobaXterm, on all of master1, worker1, and worker2. => Leaving it at this for now.
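The inbound rule above was added in the console. As an illustration only, a similar rule could be written as a CloudFormation resource. The sketch assumes a narrower range than the notes use (just the default NodePort range), and the logical ID and the CIDR are hypothetical placeholders.

```yaml
Resources:
  NodePortIngress:                     # hypothetical logical ID
    Type: AWS::EC2::SecurityGroupIngress
    Properties:
      GroupId: sg-05b6d25534a484c97    # the eks-remoteAccess security group from the notes
      IpProtocol: tcp
      FromPort: 30000                  # assumption: default NodePort range only
      ToPort: 32767
      CidrIp: 203.0.113.10/32          # placeholder standing in for "My IP"
```

Restricting the source to a single address keeps the node ports closed to the rest of the internet, at the cost of the curl checks from other hosts no longer working.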

✔️ LoadBalancer (from the master1 client)

$ kubectl expose pod nginx-pod --name loadbalancer --type LoadBalancer --external-ip 3.39.227.176 --port 80

service/loadbalancer exposed

## 3.39.227.176 is the external IP of worker1

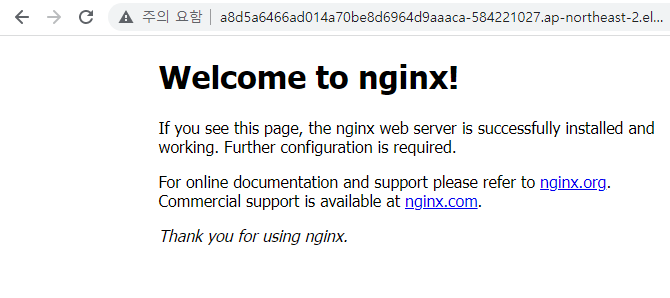

[ec2-user@ip-10-14-33-225 workspace]$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

clusterip ClusterIP 172.20.244.180 <none> 80/TCP 53m

kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 147m

loadbalancer LoadBalancer 172.20.75.202 a8d5a6466ad014a70be8d6964d9aaaca-584221027.ap-northeast-2.elb.amazonaws.com,3.39.227.176 80:30733/TCP 2m4s

nodeport NodePort 172.20.32.121 <none>

This one is expected not to work.

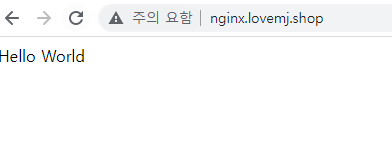

[ec2-user@ip-10-14-33-225 workspace]$ kubectl exec nginx-pod -- sh -c "echo 'Hello World' > /usr/share/nginx/html/index.html"

✔️pod,service manifest

$ vi pod-loadbalancer.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod-web
  labels:
    app: nginx-pod
spec:
  containers:
  - name: nginx-pod-container
    image: public.ecr.aws/n3l5a9n9/web-site:v1.0
---
apiVersion: v1
kind: Service
metadata:
  name: loadbalancer-service-pod
spec:
  type: LoadBalancer
  # externalIPs:
  # - 192.168.56.119
  selector:
    app: nginx-pod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

$ kubectl apply -f pod-loadbalancer.yaml

[ec2-user@ip-10-14-33-225 workspace]$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/nginx-pod 1/1 Running 0 73m

pod/nginx-pod-web 0/1 ContainerCreating 0 5s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/clusterip ClusterIP 172.20.244.180 <none> 80/TCP 72m

service/kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 166m

service/loadbalancer LoadBalancer 172.20.75.202 a8d5a6466ad014a70be8d6964d9aaaca-584221027.ap-northeast-2.elb.amazonaws.com,3.39.227.176 80:30733/TCP 21m

service/loadbalancer-service-pod LoadBalancer 172.20.202.201 ad3b00e165e1b47eabcbdb0c2b222581-1250017540.ap-northeast-2.elb.amazonaws.com 80:30044/TCP 5s

service/nodeport NodePort 172.20.32.121 <none> 80:30277/TCP 68m

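📙 Cleanup

- Delete the node group

- Delete (terminate) master1

- Delete the Route 53 record

- Delete the load balancers

- Delete the security groups (everything except default was deleted)

- After confirming the node group is deleted, delete the cluster

- Delete the bucket

- Delete the ECR repository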

📌 Misc

⭐️ LimitRange

Resource values are set automatically even when a container does not specify them.
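A minimal sketch of such a LimitRange is shown below. The object name and all resource values are hypothetical, chosen only to illustrate the fields; they are not taken from the original notes.

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: default-limits      # hypothetical name
  namespace: default
spec:
  limits:
  - type: Container
    default:                # limits applied when a container sets none
      cpu: 500m
      memory: 256Mi
    defaultRequest:         # requests applied when a container sets none
      cpu: 250m
      memory: 128Mi
```

The defaults are applied when a pod is created in the namespace, so pods that already exist are not changed.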

⭐️ Troubleshooting

[root@master1 test2]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod-nodeselector-metadata 0/1 NodeAffinity 0 17h <none> worker1 <none> <none>

pod-nodeselector-metadata-app 1/1 Running 0 80m 10.244.2.28 worker2 <none> <none>

pod-schedule-metadata 1/1 Running 1 17h 10.244.2.27 worker2 <none> <none>

pod-taint-metadata 0/1 Pending 0 14m <none> <none> <none> <none>

[root@master1 test2]# kubectl describe pod pod-taint-metadata

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 17m default-scheduler 0/3 nodes are available: 1 Insufficient memory, 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 1 node(s) had taint {tier: dev}, that the pod didn't tolerate.

Warning FailedScheduling 17m default-scheduler 0/3 nodes are available: 1 Insufficient memory, 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 1 node(s) had taint {tier: dev}, that the pod didn't tolerate.

Remove the taint

# kubectl taint node worker1 tier-

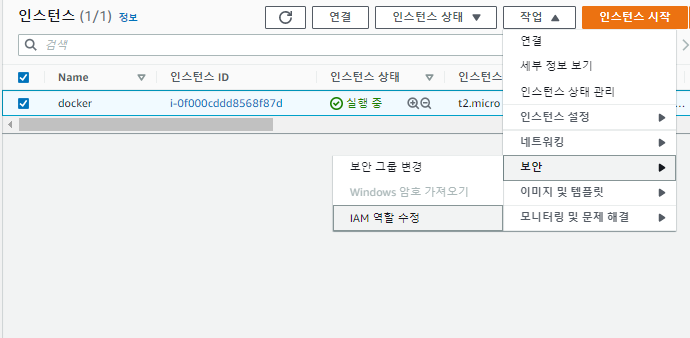

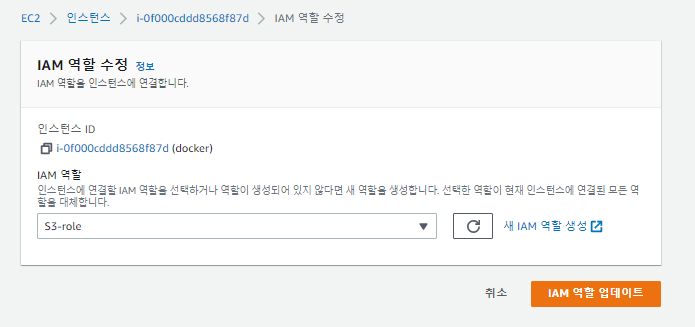

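⭐️ IAM role

Grants a resource permission to use a service.

Lets one AWS service access and operate on another service.

✔️ IAM - Roles - Create role - AWS service, EC2

✔️ Search for s3 - check AmazonS3FullAccess - Next

✔️ Name: S3-role - Create role

✔️ Open S3 - Create bucket

Bucket name: s3.lovemj.shop

ACLs disabled, versioning enabled, server-side encryption enabled with S3-managed keys - Create bucket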

[ec2-user@ip-10-14-33-225 ~]$ aws s3 ls

2022-07-21 02:38:55 s3.lovemj.shop

-> S3 can be accessed through the role even without logging in.