0801 [k8s version upgrade, EKS ingress]

📌 Kubernetes version upgrade

📙 Create worker1 and worker2 by cloning (linked clone) the centos7 VM built earlier

✔️ Select centos 7 - Clone - Name: worker1 - Linked clone -> repeat once more with the name worker2

✔️ Rename the centos7 VM to master1

✔️ worker1, worker2: change the specs to 1 CPU and 1 GB RAM (Settings - System)

✔️ Put the three servers into one group and name it Kubernetes Cluster

📙 Commands common to all nodes

# hostnamectl set-hostname master ## use worker1 / worker2 on the respective worker nodes

# exit

# cat <<EOF >> /etc/hosts
192.168.56.106 master1
192.168.56.107 worker1
192.168.56.108 worker2
EOF
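Because `>>` appends on every run, repeating the heredoc above would duplicate the entries. The following is a minimal sketch of an idempotent variant. The function name `add_host_entry` and the `HOSTS_FILE` variable are hypothetical and exist only for this illustration.

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not mapped yet.
# HOSTS_FILE defaults to /etc/hosts; override it to try the function on a scratch file.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

add_host_entry() {
    ip="$1"
    name="$2"
    # Look for NAME as a whole whitespace-delimited field after the address column.
    if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$HOSTS_FILE"; then
        printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
    fi
}

# Usage (as root), with the addresses from these notes:
#   add_host_entry 192.168.56.106 master1
#   add_host_entry 192.168.56.107 worker1
#   add_host_entry 192.168.56.108 worker2
```

Running the usage lines twice leaves the file unchanged the second time, which is the only difference from the plain heredoc.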

📙 Per-node commands

--master--

# kubeadm init --apiserver-advertise-address=192.168.56.106 --pod-network-cidr=10.244.0.0/16

# mkdir -p $HOME/.kube

# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# chown $(id -u):$(id -g) $HOME/.kube/config

# kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

-- On worker1 and worker2, run the kubeadm join command (with the token) printed at the very bottom of the kubeadm init output --

# kubeadm join 192.168.56.106:6443 --token 32eyw8.5l08iqkzvrg3q5i0 --discovery-token-ca-cert-hash sha256:5a1f6308637da409dc02418fc208c34caf343d52d857894ab13c7920543deb93

-- Back on master, check the nodes and set up shell completion --

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master Ready master 6m v1.19.16

worker1 Ready <none> 71s v1.19.16

worker2 Ready <none> 44s v1.19.16
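The check above is done by eye. As a sketch, the same check can be scripted by parsing the STATUS column. The function name `all_nodes_ready` is hypothetical; `--no-headers` is a standard `kubectl get` flag.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get node --no-headers` output on stdin and
# succeed only if at least one node is listed and every STATUS is exactly "Ready".
# A drained node shows "Ready,SchedulingDisabled" and therefore counts as not ready here.
all_nodes_ready() {
    awk 'NF { total++; if ($2 != "Ready") bad++ } END { exit (total == 0 || bad > 0) }'
}

# Usage on the master node:
#   kubectl get node --no-headers | all_nodes_ready && echo "all nodes Ready"
```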

# kubectl get pods --all-namespaces

# source <(kubectl completion bash) ## enable kubectl shell completion

# echo "source <(kubectl completion bash)" >> ~/.bashrc

# exit

Power off all three nodes, then take a snapshot of each named 1.19

📙 Ingress / path-based routing (AWS - EKS, ELB (ALB))

# yum install git -y

# git clone https://github.com/hali-linux/_Book_k8sInfra.git

# kubectl apply -f /root/_Book_k8sInfra/ch3/3.3.2/ingress-nginx.yaml ## apply this to install the ingress controller

# kubectl get pods -n ingress-nginx

# mkdir ingress && cd $_

# vi ingress-deploy.yaml

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: foods-deploy

spec:

replicas: 1

selector:

matchLabels:

app: foods-deploy

template:

metadata:

labels:

app: foods-deploy

spec:

containers:

- name: foods-deploy

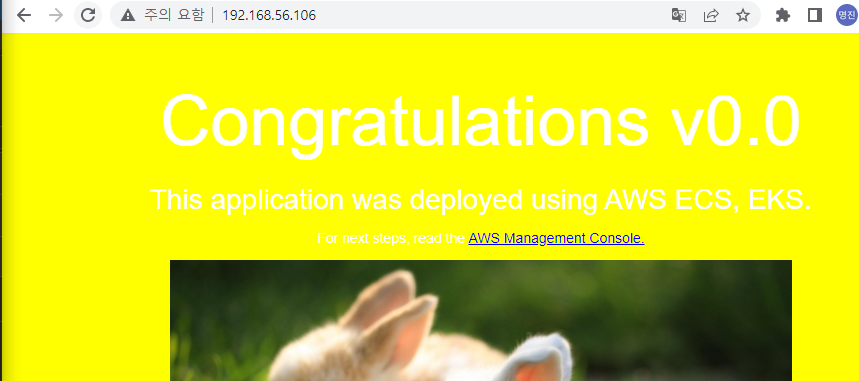

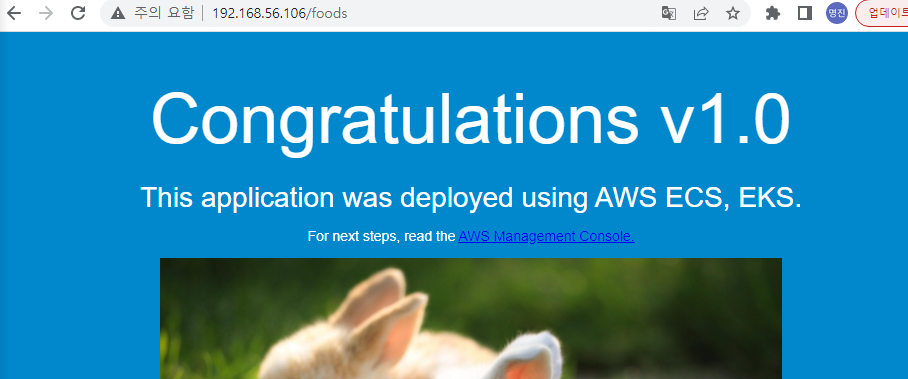

image: halilinux/test-home:v1.0

---

apiVersion: v1

kind: Service

metadata:

name: foods-svc

spec:

type: ClusterIP

selector:

app: foods-deploy

ports:

- protocol: TCP

port: 80

targetPort: 80

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: sales-deploy

spec:

replicas: 1

selector:

matchLabels:

app: sales-deploy

template:

metadata:

labels:

app: sales-deploy

spec:

containers:

- name: sales-deploy

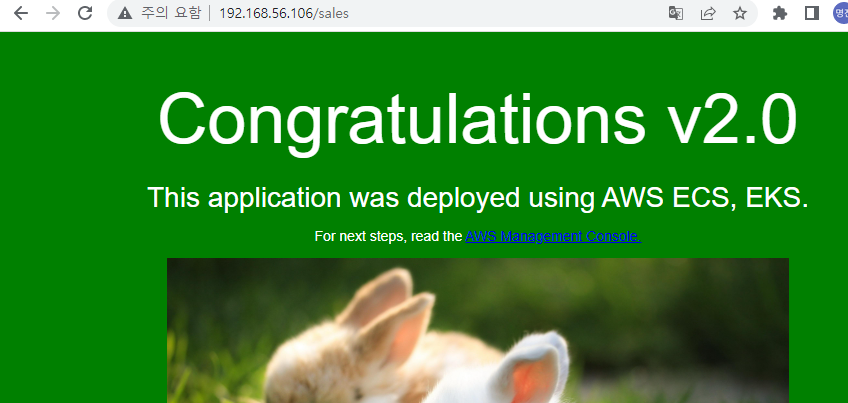

image: halilinux/test-home:v2.0

---

apiVersion: v1

kind: Service

metadata:

name: sales-svc

spec:

type: ClusterIP

selector:

app: sales-deploy

ports:

- protocol: TCP

port: 80

targetPort: 80

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: home-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: home-deploy
  template:
    metadata:
      labels:
        app: home-deploy
    spec:
      containers:
      - name: home-deploy
        image: halilinux/test-home:v0.0
---
apiVersion: v1
kind: Service
metadata:
  name: home-svc
spec:
  type: ClusterIP
  selector:
    app: home-deploy
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

# kubectl apply -f ingress-deploy.yaml

# kubectl get all

# kubectl get pod -o wide

# kubectl get svc

# curl 10.98.225.168 ## the ClusterIP is reachable from the other nodes as well

# kubectl get node -o wide

# vi ingress-config.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-nginx
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /foods
        backend:
          serviceName: foods-svc
          servicePort: 80
      - path: /sales
        backend:
          serviceName: sales-svc
          servicePort: 80
      - path:
        backend:
          serviceName: home-svc
          servicePort: 80

# kubectl apply -f ingress-config.yaml

# kubectl get ingress

# kubectl describe ingress ingress-nginx

# vi ingress-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
spec:
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
  - name: https
    protocol: TCP
    port: 443
    targetPort: 443
  selector:
    app.kubernetes.io/name: ingress-nginx
  type: LoadBalancer
  externalIPs:
  - 192.168.56.106 ## master IP

# kubectl apply -f ingress-service.yaml
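Once the controller Service is applied, each path in ingress-config.yaml can be checked from any machine that reaches the master IP. The following is a rough sketch, assuming the controller answers plain HTTP on port 80 of the external IP. The function name `check_ingress_routes` is hypothetical; `-s`, `-o`, and `-w '%{http_code}'` are standard curl options.

```shell
#!/bin/sh
# Hypothetical helper: request each path routed by ingress-config.yaml
# and print "PATH STATUS" for every one of them.
check_ingress_routes() {
    base_url="$1"
    for path in /foods /sales /; do
        # -s hides progress output, -o /dev/null discards the body,
        # -w '%{http_code}' prints only the HTTP status code.
        code="$(curl -s -o /dev/null -w '%{http_code}' "${base_url}${path}")"
        printf '%s %s\n' "$path" "$code"
    done
}

# Usage, with the master IP from these notes:
#   check_ingress_routes http://192.168.56.106
```

A status other than 200 on one path points at that path's Service or Deployment rather than at the controller itself.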

📙 Taints and tolerations

✔️taint

# kubectl taint node worker1 tier=dev:NoSchedule

# kubectl describe nodes worker1

Taints: tier=dev:NoSchedule

# vi ingress-deploy.yaml

Set replicas to 2 in all three Deployments, then re-apply the file

[root@master ingress]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

foods-deploy-7ffcb8f58-d8b6w 1/1 Running 0 13s 10.244.2.6 worker2 <none> <none>

foods-deploy-7ffcb8f58-lrpvd 1/1 Running 0 16m 10.244.2.5 worker2 <none> <none>

home-deploy-688558dc79-48xcs 1/1 Running 0 13s 10.244.2.8 worker2 <none> <none>

home-deploy-688558dc79-7qhhk 1/1 Running 0 68m 10.244.1.3 worker1 <none> <none>

sales-deploy-7cdbd9848c-4rqdv 1/1 Running 0 68m 10.244.2.3 worker2 <none> <none>

sales-deploy-7cdbd9848c-tpdch 1/1 Running 0 13s 10.244.2.7 worker2 <none> <none>

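-> The new replicas (AGE 13s) were all created on worker2; the home-deploy pod already running on worker1 stays there because NoSchedule does not evict existing pods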

✔️toleration

# vi pod-taint.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-taint-metadata
  labels:
    app: pod-taint-labels
spec:
  containers:
  - name: pod-taint-containers
    image: nginx
  tolerations:
  - key: "tier"
    operator: "Equal"
    value: "dev"
    effect: "NoSchedule"
---
apiVersion: v1
kind: Service
metadata:
  name: pod-taint-service
spec:
  type: NodePort
  selector:
    app: pod-taint-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@master ingress]# kubectl apply -f pod-taint.yaml

pod/pod-taint-metadata created

service/pod-taint-service created

[root@master ingress]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

foods-deploy-7ffcb8f58-d8b6w 1/1 Running 0 9m45s 10.244.2.6 worker2 <none> <none>

foods-deploy-7ffcb8f58-lrpvd 1/1 Running 0 26m 10.244.2.5 worker2 <none> <none>

home-deploy-688558dc79-48xcs 1/1 Running 0 9m45s 10.244.2.8 worker2 <none> <none>

home-deploy-688558dc79-7qhhk 1/1 Running 0 77m 10.244.1.3 worker1 <none> <none>

pod-taint-metadata 1/1 Running 0 22s 10.244.1.5 worker1 <none> <none>

sales-deploy-7cdbd9848c-4rqdv 1/1 Running 0 77m 10.244.2.3 worker2 <none> <none>

sales-deploy-7cdbd9848c-tpdch 1/1 Running 0 9m45s 10.244.2.7 worker2 <none> <none>

-> The pod was created on worker1, which its toleration permits

# kubectl delete -f pod-taint.yaml

[root@master ingress]# kubectl taint node worker2 tier=dev:NoSchedule

[root@master ingress]# kubectl apply -f pod-taint.yaml

[root@master ingress]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

foods-deploy-7ffcb8f58-d8b6w 1/1 Running 0 96m 10.244.2.6 worker2 <none> <none>

foods-deploy-7ffcb8f58-lrpvd 1/1 Running 0 112m 10.244.2.5 worker2 <none> <none>

home-deploy-688558dc79-48xcs 1/1 Running 0 96m 10.244.2.8 worker2 <none> <none>

home-deploy-688558dc79-7qhhk 1/1 Running 0 164m 10.244.1.3 worker1 <none> <none>

pod-taint-metadata 1/1 Running 0 97s 10.244.1.6 worker1 <none> <none>

sales-deploy-7cdbd9848c-4rqdv 1/1 Running 0 164m 10.244.2.3 worker2 <none> <none>

sales-deploy-7cdbd9848c-tpdch 1/1 Running 0 96m 10.244.2.7 worker2 <none> <none>

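worker2 is now tainted as well, so the pod can be created on either worker1 or worker2.

It was created on worker1 again (probably because worker2 already has more pods assigned).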
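A toleration only permits scheduling onto a tainted node; it does not force it, which is why the scheduler was still free to pick either worker. The matching rule itself can be sketched in shell. This is a simplified illustration, not scheduler code, and the function name `tolerates` is hypothetical.

```shell
#!/bin/sh
# Simplified model of how one toleration matches one taint (illustration only).
# Arguments: taint_key taint_value taint_effect tol_key tol_operator tol_value tol_effect
tolerates() {
    # An empty toleration effect matches every taint effect.
    if [ -n "$7" ] && [ "$7" != "$3" ]; then return 1; fi
    # An empty toleration key is only meaningful with operator Exists (matches every key).
    if [ -z "$4" ] && [ "$5" != "Exists" ]; then return 1; fi
    # A non-empty toleration key must equal the taint key.
    if [ -n "$4" ] && [ "$4" != "$1" ]; then return 1; fi
    case "$5" in
        Exists) return 0 ;;              # the value is ignored
        Equal|"") [ "$6" = "$2" ] ;;     # Equal is the default operator
        *) return 1 ;;
    esac
}

# Usage, with the taint and toleration from these notes:
#   tolerates tier dev NoSchedule tier Equal dev NoSchedule && echo "pod may be scheduled here"
```

To remove a taint afterwards, kubectl accepts the same taint specification with a trailing minus sign, for example `kubectl taint node worker1 tier=dev:NoSchedule-`.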

📙 Cluster upgrade 1

- master node

# cd ~

# yum list --showduplicates kubeadm --disableexcludes=kubernetes

# yum install -y kubeadm-1.20.15-0 --disableexcludes=kubernetes

# kubeadm version

# kubeadm upgrade plan

# kubeadm upgrade apply v1.20.15

# yum install -y kubelet-1.20.15-0 kubectl-1.20.15-0 --disableexcludes=kubernetes

# systemctl daemon-reload

# systemctl restart kubelet

- worker nodes

# yum install -y kubeadm-1.20.15-0 --disableexcludes=kubernetes

# kubeadm upgrade node

- master node

# kubectl drain worker1 --ignore-daemonsets --force

# kubectl drain worker2 --ignore-daemonsets --force

- worker nodes

# yum install -y kubelet-1.20.15-0 kubectl-1.20.15-0 --disableexcludes=kubernetes

# systemctl daemon-reload

# systemctl restart kubelet

- master node

# kubectl uncordon worker1

# kubectl uncordon worker2

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 4h10m v1.20.15

worker1 Ready <none> 4h5m v1.20.15

worker2 Ready <none> 4h5m v1.20.15

-> The version change is visible in the output

📙 Cluster upgrade 2

- master node

# cd ~

# yum list --showduplicates kubeadm --disableexcludes=kubernetes

# yum install -y kubeadm-1.21.14-0 --disableexcludes=kubernetes

# kubeadm version

# kubeadm upgrade plan

# kubeadm upgrade apply v1.21.14

# yum install -y kubelet-1.21.14-0 kubectl-1.21.14-0 --disableexcludes=kubernetes

# systemctl daemon-reload

# systemctl restart kubelet

- worker nodes

# yum install -y kubeadm-1.21.14-0 --disableexcludes=kubernetes

# kubeadm upgrade node

- master node

# kubectl drain worker1 --ignore-daemonsets --force

# kubectl drain worker2 --ignore-daemonsets --force

- worker nodes

# yum install -y kubelet-1.21.14-0 kubectl-1.21.14-0 --disableexcludes=kubernetes

# systemctl daemon-reload

# systemctl restart kubelet

- master node

# kubectl uncordon worker1

# kubectl uncordon worker2

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 4h23m v1.21.14

worker1 Ready <none> 4h18m v1.21.14

worker2 Ready <none> 4h18m v1.21.14
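The two rounds above go 1.19 -> 1.20 -> 1.21 because kubeadm upgrades one minor version at a time; skipping a minor version is not supported. As a small sketch, that rule can be checked before installing a new kubeadm package. The function name `can_upgrade` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if TARGET has the same major version as CURRENT
# and the same minor version or exactly one minor version above it.
# Versions are MAJOR.MINOR.PATCH with an optional leading "v" (for example v1.19.16).
can_upgrade() {
    cur="${1#v}"
    tgt="${2#v}"
    cur_major="${cur%%.*}"; cur_rest="${cur#*.}"; cur_minor="${cur_rest%%.*}"
    tgt_major="${tgt%%.*}"; tgt_rest="${tgt#*.}"; tgt_minor="${tgt_rest%%.*}"
    [ "$cur_major" = "$tgt_major" ] || return 1
    step=$((tgt_minor - cur_minor))
    [ "$step" -eq 0 ] || [ "$step" -eq 1 ]
}

# Usage:
#   can_upgrade v1.19.16 v1.20.15 && echo "ok to upgrade"
#   can_upgrade v1.19.16 v1.21.14 || echo "upgrade to 1.20 first"
```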


📌 Miscellaneous

⭐️ Project

https://saramin.github.io/2020-05-01-k8s-cicd/

⭐️ Command to reset a node when an installation went wrong or did not complete

kubeadm reset

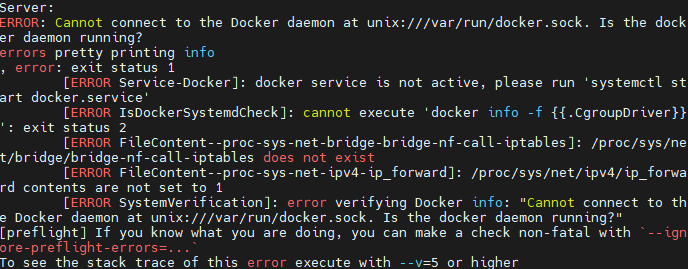

⭐️ kubeadm init error

Run systemctl enable --now docker again.

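⭐️ YAML files can be stored in S3 and applied from there.

# kubectl apply -f <S3 URL>

⭐️ When the image is pulled from Docker Hub, the web page may not be displayed because of a path problem

-> Naming the image itself foods should avoid the problem.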