Today's study list

`fig = tfds.show_examples(ds_train, ds_info)`

- Visualize images (and labels) from an image classification dataset.
- Signature: `tfds.visualization.show_examples(ds: tf.data.Dataset, ds_info: tfds.core.DatasetInfo, **options_kwargs)`


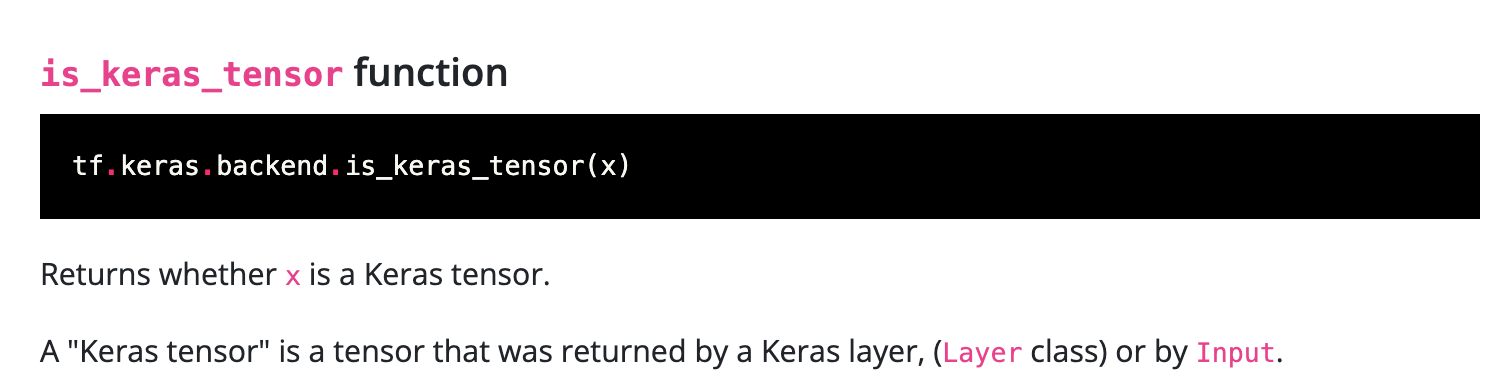

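`KerasTensor` (the symbolic object returned by `tf.keras.Input` and by layer calls in the Functional API) and `tf.Tensor` (a tensor holding concrete values) are different.

`tf.keras.Model`: `Model` groups layers into an object with training and inference features.

- Inherits from: `Layer`, `Module`
- With the "Functional API", where you start from `Input`, you chain layer calls to specify the model's forward pass, and finally you create your model from inputs and outputs.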
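A minimal sketch of the Functional API, assuming TensorFlow 2.x with its bundled Keras. The layer sizes and the 10-class `Dense` head are arbitrary choices for illustration. While the model is being defined, the objects passed between layers are symbolic `KerasTensor`s with an unknown batch size; calling the finished model on real data returns a concrete `tf.Tensor`.

```python
import tensorflow as tf

# Functional API: start from a symbolic Input (a KerasTensor, not a tf.Tensor)
inputs = tf.keras.Input(shape=(32, 32, 3))
x = tf.keras.layers.Conv2D(8, 3, padding="same", activation="relu")(inputs)
x = tf.keras.layers.GlobalAveragePooling2D()(x)
outputs = tf.keras.layers.Dense(10)(x)  # 10 classes: arbitrary for this sketch

# Create the model from its inputs and outputs
model = tf.keras.Model(inputs=inputs, outputs=outputs)

# Symbolic tensors carry only shape/dtype; the batch dimension is still None
print(type(inputs).__name__, inputs.shape)

# Calling the model on real data runs the forward pass and returns a concrete tf.Tensor
y = model(tf.random.normal((4, 32, 32, 3)))
print(type(y).__name__, y.shape)
```

Because `Model` inherits from `Layer`, the built `model` can itself be called like a layer inside another Functional model.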

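If you get confused about what happens when `padding` and `strides` are combined, experiment with different settings using this code:

```python
import tensorflow as tf

input_shape = (4, 32, 32, 3)  # (batch, height, width, channels)
x = tf.random.normal(input_shape)
# 3 filters, 3x3 kernel; try changing padding ('same'/'valid') and strides
y = tf.keras.layers.Conv2D(
    3, 3, activation='relu', padding='same', strides=2,
    input_shape=input_shape[1:])(x)
print(y.shape)  # (4, 16, 16, 3)
```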
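The shape printed by the experiment above follows TensorFlow's documented output-size rule for convolutions without dilation: with `padding='same'` the output length is $\lceil n / s \rceil$, and with `padding='valid'` it is $\lceil (n - k + 1) / s \rceil$, where $n$ is the input length, $k$ the kernel size, and $s$ the stride. The helper below is a hypothetical name (`conv_output_size`) for checking combinations without building a layer.

```python
import math


def conv_output_size(n: int, kernel: int, stride: int, padding: str) -> int:
    """Output length along one spatial axis (TF/Keras convention, no dilation)."""
    if padding == "same":
        # 'same' pads the input so the output length depends only on the stride
        return math.ceil(n / stride)
    if padding == "valid":
        # 'valid' adds no padding: the kernel must fit entirely inside the input
        return math.ceil((n - kernel + 1) / stride)
    raise ValueError(f"unknown padding: {padding!r}")


for padding in ("same", "valid"):
    for stride in (1, 2):
        print(padding, stride, conv_output_size(32, 3, stride, padding))
```

For example, the ResNet stem (7x7 conv, stride 2) with `'same'` padding maps a 224-pixel side to 112.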

ResNet implementation

Implementing `conv_block`

Placement and type of BN & activation

- See p. 4: "We adopt batch normalization (BN) [16] right after each convolution and before activation, following [16]"

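A framework-free sketch of the order quoted above (convolution, then BN, then activation), written in NumPy so the normalization step is visible. Assumptions: a 1x1 convolution stands in for the 3x3 convolutions of the real blocks to keep it short, BN uses training-mode batch statistics, and the learned scale/shift (`gamma`, `beta`) start at 1 and 0. In Keras the same order corresponds to `Conv2D` followed by `BatchNormalization` and then `Activation('relu')`.

```python
import numpy as np


def conv1x1(x, w):
    # x: (N, H, W, C_in), w: (C_in, C_out); a 1x1 conv is a per-pixel matrix product
    return np.einsum("nhwc,cd->nhwd", x, w)


def batch_norm(x, gamma, beta, eps=1e-5):
    # Training-mode BN: normalize each channel over the batch and spatial axes
    mean = x.mean(axis=(0, 1, 2), keepdims=True)
    var = x.var(axis=(0, 1, 2), keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta


def relu(x):
    return np.maximum(x, 0.0)


def conv_bn_relu(x, w, gamma, beta):
    # Order from the paper: convolution -> BN -> activation
    return relu(batch_norm(conv1x1(x, w), gamma, beta))


rng = np.random.default_rng(0)
x = rng.normal(size=(8, 4, 4, 3))
w = rng.normal(size=(3, 16))
gamma, beta = np.ones(16), np.zeros(16)

z = batch_norm(conv1x1(x, w), gamma, beta)  # pre-activation: zero mean per channel
y = conv_bn_relu(x, w, gamma, beta)          # after ReLU: no negative values
print(z.mean(axis=(0, 1, 2)).round(6), y.shape, y.min())
```

Normalizing before the ReLU means the activation sees roughly zero-centred inputs in every channel, so about half of each channel passes through.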

Implementing the shortcut connection

- p. 3: "The operation $\mathcal{F} + \mathbf{x}$ is performed by a shortcut connection and element-wise addition. We adopt the second nonlinearity after the addition (i.e., $\sigma(\mathbf{y})$, see Fig. 2)."

- p. 3: "The dimensions of $\mathbf{x}$ and $\mathcal{F}$ must be equal in Eqn.(1). If this is not the case (e.g., when changing the input/output channels), we can perform a linear projection $W_s$ by the shortcut connections to match the dimensions: $\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + W_s\mathbf{x}$ (2)."

- p. 4: "When the dimensions increase (dotted line shortcuts in Fig. 3), we consider two options: (A) The shortcut still performs identity mapping, with extra zero entries padded for increasing dimensions. This option introduces no extra parameter; (B) The projection shortcut in Eqn.(2) is used to match dimensions (done by 1×1 convolutions)."

- p. 5: "In the first comparison (Table 2 and Fig. 4 right), we use identity mapping for all shortcuts and zero-padding for increasing dimensions (option A)" → ResNet-34

- p. 6: "In Table 3 we compare three options: (A) zero-padding shortcuts are used for increasing dimensions, and all shortcuts are parameter-free (the same as Table 2 and Fig. 4 right); (B) projection shortcuts are used for increasing dimensions, and other shortcuts are identity; and (C) all shortcuts are projections. ... So we do not use option C in the rest of this paper, ..."
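A NumPy sketch of the quoted shortcut rules: the block output is $\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x}$ when dimensions match (Eqn.(1)), the nonlinearity is applied after the addition, and when dimensions increase the shortcut uses either option A (zero-padded identity) or option B (projection $W_s$ as a 1x1 convolution, Eqn.(2)). Assumptions: spatial downsampling is done with stride 2 by taking every second pixel, option A appends the zero channels after the existing ones (an arbitrary choice), and `f_x` is random data standing in for the residual branch output. All function names are hypothetical.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def shortcut_option_a(x, out_channels, stride):
    # Option A: identity mapping (strided when the feature map shrinks),
    # plus zero entries for the extra channels; adds no parameters
    x = x[:, ::stride, ::stride, :]
    pad = out_channels - x.shape[-1]
    return np.pad(x, ((0, 0), (0, 0), (0, 0), (0, pad)))


def shortcut_option_b(x, w_s, stride):
    # Option B: projection W_s implemented as a strided 1x1 convolution
    x = x[:, ::stride, ::stride, :]
    return np.einsum("nhwc,cd->nhwd", x, w_s)


def residual_output(f_x, shortcut):
    # Element-wise addition first, then the second nonlinearity sigma(y)
    return relu(f_x + shortcut)


rng = np.random.default_rng(0)
x = rng.normal(size=(2, 8, 8, 16))    # block input: 8x8 map, 16 channels
f_x = rng.normal(size=(2, 4, 4, 32))  # stand-in for F(x): 4x4 map, 32 channels
w_s = rng.normal(size=(16, 32))       # projection weights for option B

y_a = residual_output(f_x, shortcut_option_a(x, out_channels=32, stride=2))
y_b = residual_output(f_x, shortcut_option_b(x, w_s, stride=2))
print(y_a.shape, y_b.shape)
```

When input and output dimensions already match, `shortcut_option_a(x, x.shape[-1], 1)` returns `x` unchanged, which is the plain identity shortcut of Eqn.(1).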


Make ResNet-34 and ResNet-50 configurable within a single function

- `end_block`

- Global Average Pooling

- To learn what shape the output takes and when it is used, see the link: Global Average Pooling
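One way to make a single function cover both depths is to drive it from a per-depth configuration. The sketch below uses the stage layout from Table 1 of the ResNet paper (blocks per stage `[3, 4, 6, 3]` for both; two 3x3 convolutions per basic block in ResNet-34, three convolutions with 4x channel expansion per bottleneck block in ResNet-50). It assumes `end_block` means global average pooling followed by a fully connected classifier; the notes do not define it, so that reading, the 10-class head, and all names here are hypothetical.

```python
import numpy as np

# Depth -> (block type, blocks per stage for conv2_x .. conv5_x), from Table 1
RESNET_CONFIGS = {
    34: ("basic", [3, 4, 6, 3]),       # 3x3 -> 3x3 per block
    50: ("bottleneck", [3, 4, 6, 3]),  # 1x1 -> 3x3 -> 1x1 per block
}
CONVS_PER_BLOCK = {"basic": 2, "bottleneck": 3}
EXPANSION = {"basic": 1, "bottleneck": 4}  # block output channels = width * expansion
STAGE_WIDTHS = [64, 128, 256, 512]


def resnet_plan(depth):
    """Return one (stage, block index, stride, out_channels) tuple per residual block."""
    block_type, blocks_per_stage = RESNET_CONFIGS[depth]
    plan = []
    for stage, (n_blocks, width) in enumerate(zip(blocks_per_stage, STAGE_WIDTHS)):
        for i in range(n_blocks):
            # Downsample in the first block of conv3_x, conv4_x and conv5_x
            stride = 2 if (i == 0 and stage > 0) else 1
            plan.append((stage + 2, i, stride, width * EXPANSION[block_type]))
    return plan


def count_weighted_layers(depth):
    block_type, _ = RESNET_CONFIGS[depth]
    # 7x7 stem conv + convolutions inside the residual blocks + final FC layer
    return 1 + len(resnet_plan(depth)) * CONVS_PER_BLOCK[block_type] + 1


def end_block(features, w, b):
    # Global average pooling: (N, H, W, C) -> (N, C), then a fully connected layer
    pooled = features.mean(axis=(1, 2))
    return pooled @ w + b


for depth in (34, 50):
    print(depth, count_weighted_layers(depth), resnet_plan(depth)[-1])

rng = np.random.default_rng(0)
final_channels = resnet_plan(50)[-1][3]  # 2048 for the bottleneck variant
logits = end_block(rng.normal(size=(2, 7, 7, final_channels)),
                   rng.normal(size=(final_channels, 10)), np.zeros(10))
print(logits.shape)
```

Global average pooling collapses each channel's spatial map to a single number, so the classifier input size depends only on the channel count (512 for ResNet-34, 2048 for ResNet-50), not on the input resolution.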