Today's study list

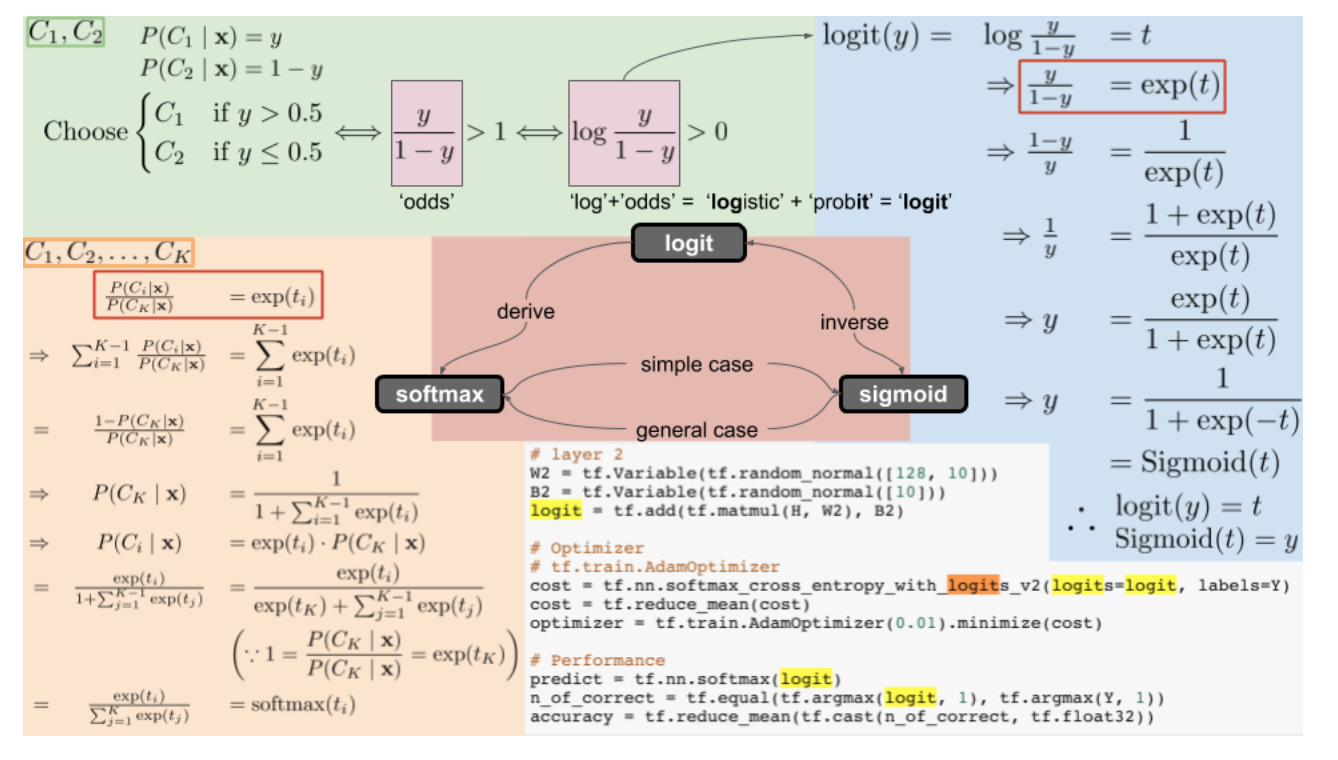

- Relationship between Logit, Sigmoid, and Softmax

- Odds: the ratio of a probability to its complementary probability, p / (1 - p)

- Logit: a portmanteau of Logistic + Probit

- The log of the odds, log(p / (1 - p))

(Source)

(Source)
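The relationship between odds, logit, sigmoid, and softmax can be sketched numerically. This is a minimal illustration in plain Python, not taken from the sources above. The function names and the example probability 0.8 are assumptions chosen for the demo. It shows that sigmoid inverts logit, and that a two-class softmax with one logit fixed at 0 gives the same value as sigmoid.

```python
import math

def odds(p):
    """Ratio of a probability to its complement: p / (1 - p)."""
    return p / (1.0 - p)

def logit(p):
    """Log of the odds; maps (0, 1) onto the whole real line."""
    return math.log(odds(p))

def sigmoid(z):
    """Inverse of logit; maps a real number back into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(zs):
    """Normalized exponentials; subtracting the max avoids overflow."""
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

p = 0.8                           # example probability (assumed for the demo)
z = logit(p)                      # log(0.8 / 0.2) = log(4)
p_back = sigmoid(z)               # recovers 0.8 up to rounding
two_class = softmax([z, 0.0])[0]  # two-class softmax, second logit fixed at 0
```

In other words, the logit is the raw score that sigmoid (binary case) or softmax (multi-class case) turns back into a probability.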

Going Deeper

- NIN (Network in Network) proposes using Global Average Pooling as the last layer (right before softmax)

- Reasons

- p 4. "One advantage of global average pooling over the fully connected layers is that it is more native to the convolution structure by enforcing correspondences between feature maps and categories. Thus the feature maps can be easily interpreted as categories confidence maps. Another advantage is that there is no parameter to optimize in the global average pooling thus overfitting is avoided at this layer. Furthermore, global average pooling sums out the spatial information, thus it is more robust to spatial translations of the input."

- A separate point, but the paper also notes that fully connected layers are more prone to overfitting

- p 4. "However, the fully connected layers are prone to overfitting, thus hampering the generalization ability of the overall network. Dropout is proposed by Hinton et al. [5] as a regularizer which randomly sets half of the activations to the fully connected layers to zero during training."
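The two advantages quoted above (no parameters, robustness to translation) can be checked on a toy example. The sketch below is plain Python with hypothetical 3x3 feature maps. The helper names and the circular shift standing in for a translation are assumptions, and none of it is code from the NIN paper. A real translation loses content at the borders, so in practice GAP is robust to shifts but not exactly invariant.

```python
def global_average_pool(feature_maps):
    """feature_maps: list of C maps, each an H x W list of lists.
    Returns one average per map, i.e. one score per category."""
    pooled = []
    for fmap in feature_maps:
        values = [v for row in fmap for v in row]
        pooled.append(sum(values) / len(values))
    return pooled

def shift_right(fmap):
    """Circularly shift every row one step to the right (a toy translation)."""
    return [row[-1:] + row[:-1] for row in fmap]

# Two hypothetical 3x3 feature maps, one per category.
maps = [
    [[0.0, 1.0, 0.0], [0.0, 4.0, 0.0], [0.0, 1.0, 0.0]],
    [[0.5, 0.5, 0.5], [0.5, 0.5, 0.5], [0.5, 0.5, 0.5]],
]
scores = global_average_pool(maps)
shifted_scores = global_average_pool([shift_right(m) for m in maps])

# Parameter count: GAP has nothing to learn, while a fully connected layer
# over the flattened maps needs one weight per (input, class) pair plus biases.
C, H, W, num_classes = 2, 3, 3, 2
fc_params = C * H * W * num_classes + num_classes
gap_params = 0
```

Here the shifted maps produce the same pooled scores as the originals, and the fully connected alternative would already need 38 parameters for this tiny input.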

- Grad-CAM

- Does ReLU play a role in extracting class-specific features..?

- p 5. "We apply a ReLU to the linear combination of maps because we are only interested in the features that have a positive influence on the class of interest, i.e. pixels whose intensity should be increased in order to increase $y^c$. Negative pixels are likely to belong to other categories in the image."
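The role of the ReLU in the quote can be illustrated with a small sketch. It assumes the feature-map activations and the gradients of the class score with respect to them are already available as nested lists. The function name `grad_cam`, the variable names, and all numbers are hypothetical. The channel weights are taken as the global average of the gradients, as in Grad-CAM, but this is a toy illustration rather than the authors' implementation.

```python
def grad_cam(activations, gradients):
    """activations, gradients: lists of K maps (H x W lists of lists).
    gradients[k][i][j] stands for d y^c / d A^k_ij, assumed precomputed."""
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weights: global average of the gradients of each feature map.
    alphas = [
        sum(v for row in grad for v in row) / (height * width)
        for grad in gradients
    ]
    # Weighted linear combination of the feature maps.
    cam = [[0.0] * width for _ in range(height)]
    for alpha, fmap in zip(alphas, activations):
        for i in range(height):
            for j in range(width):
                cam[i][j] += alpha * fmap[i][j]
    # ReLU keeps only locations with a positive influence on the class score.
    return [[max(0.0, v) for v in row] for row in cam]

# Hypothetical 2x2 activations and gradients for K = 2 channels.
A = [
    [[1.0, 2.0], [0.0, 1.0]],
    [[3.0, 0.0], [1.0, 0.0]],
]
dY_dA = [
    [[1.0, 1.0], [1.0, 1.0]],      # average  1.0: channel supports the class
    [[-1.0, -1.0], [-1.0, -1.0]],  # average -1.0: channel counts against it
]
heatmap = grad_cam(A, dY_dA)
```

Before the ReLU the combined map is [[-2, 2], [-1, 1]]. The negative entries, where the second channel dominates, are clipped to zero, so only the locations that raise the class score remain in the heatmap.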

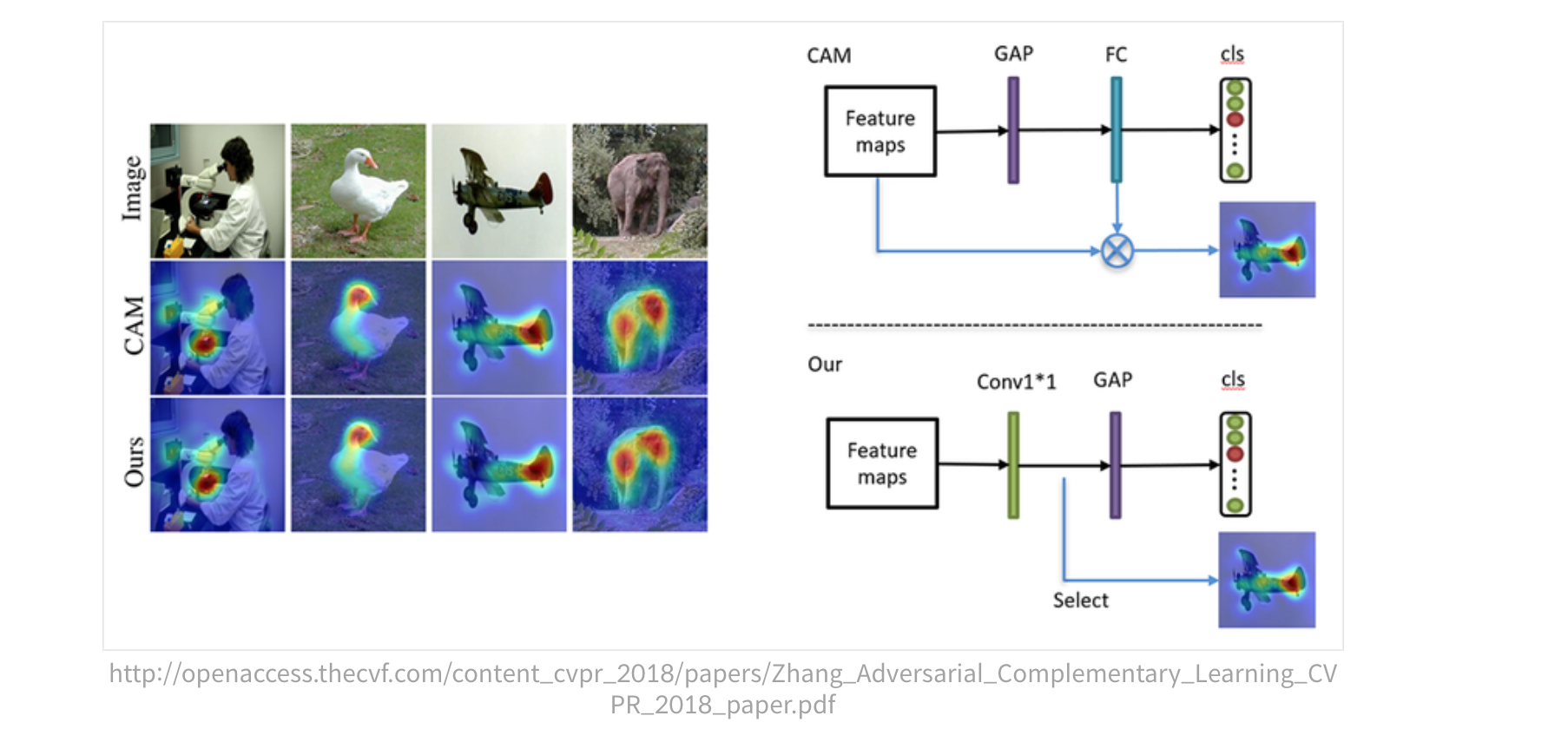

- Adversarial Complementary Learning

Overall, the line of work seems to have started out focused on obtaining good image feature maps, then gradually shifted toward using those feature maps for weakly supervised learning.