🧃Optimization

About Optimization

If you are new to optimization, start by thinking of it as the task of finding the maximum or minimum value of a multi-variable scalar-valued function, together with the point where that value is attained.

- Optimization has no single closed-form solution; there are many different methods for it.

- Least Squares was a method for finding an approximate solution when A is tall.

Optimization, as covered here, deals with a wide A.

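A wide, i.e. underdetermined, A has fewer equations than unknowns, so there are too many candidate solutions; optimization means finding the best one among them.

- In the end, an optimization problem also comes back to solving a linear system.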

- To solve an optimization problem, you need two things:

1. an objective function

2. constraints

Form of Optimization Problem

Find $x$ that minimizes a (cost) function $f(x)$ subject to $h(x) = 0$ or $g(x) \le 0$

Cost Function

Of course, this depends on how the problem is designed, but let's assume the optimization problem is already well designed and we are the ones solving it.

We want to minimize some quantity $f(x)$. This $f(x)$ is called the objective function, or cost function.

In the fields I'm interested in, the cost function usually represents an error, and naturally we want to minimize it.

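You might object that in fields like economics we often want some value to be as large as possible, so why only talk about minimizing? Because minimizing $-f(x)$ and maximizing $f(x)$ are the same task: $\max_x f(x) = -\min_x \bigl(-f(x)\bigr)$, and both are attained at the same $x$. We can minimize $-f(x)$ and then negate the result back, so from here on we only consider minimization.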
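A quick numerical check of the max/min equivalence, as a sketch: the function $f(x) = -(x-2)^2 + 3$ and the grid search are illustrative assumptions, not part of the original notes.

```python
import numpy as np

# Illustrative function (assumed example) with its maximum 3 at x = 2
def f(x):
    return -(x - 2.0) ** 2 + 3.0

# Coarse grid search, used here only to compare max f with -min(-f)
xs = np.linspace(-5.0, 5.0, 1001)
vals = f(xs)

max_f = vals.max()
min_neg_f = (-vals).min()

# The maximizer of f and the minimizer of -f are the same grid point
x_max = xs[np.argmax(vals)]
x_min_of_neg = xs[np.argmin(-vals)]

print(max_f, -min_neg_f, x_max, x_min_of_neg)
```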

Constraint

Above, $h(x) = 0$ is the equality constraint and $g(x) \le 0$ is the inequality constraint.

A constraint narrows down the region where the solution can exist. So you can think of that region as my set of candidates, since the solution has to come from inside it.

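In practice, you can think of equality constraints as not really existing, because of machine precision. When solving with a machine, whether a computer or a calculator, satisfying an equality exactly is almost impossible, so we work around it with an inequality over a narrow range, e.g. $|h(x)| \le \varepsilon$ for a small tolerance $\varepsilon$.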
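A tiny illustration of why an equality is checked with a tolerance in floating point. The constraint $h(x_1, x_2) = x_1 + x_2 - 0.3$ and the tolerance `1e-9` are hypothetical choices for this sketch.

```python
# Hypothetical equality constraint h(x1, x2) = x1 + x2 - 0.3 = 0
def h(x1, x2):
    return x1 + x2 - 0.3

x1, x2 = 0.1, 0.2

# Exact check fails: 0.1 + 0.2 is not exactly 0.3 in binary floating point
exact = h(x1, x2) == 0.0

# Tolerance check |h(x)| <= eps, i.e. a narrow inequality in place of the equality
tol = 1e-9  # assumed tolerance
within_tol = abs(h(x1, x2)) <= tol

print(exact, within_tol, h(x1, x2))
```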

Problem of Optimization

Optimization means minimizing a cost function subject to some constraints.

What we want is the global minimum value and the $x$ at which it is attained.

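Having studied calculus for the science-track college entrance exam, we naturally think of first and second derivatives here: find the points where $f'(x) = 0$ ($\nabla f(x) = 0$ in several variables), then check the second derivative.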

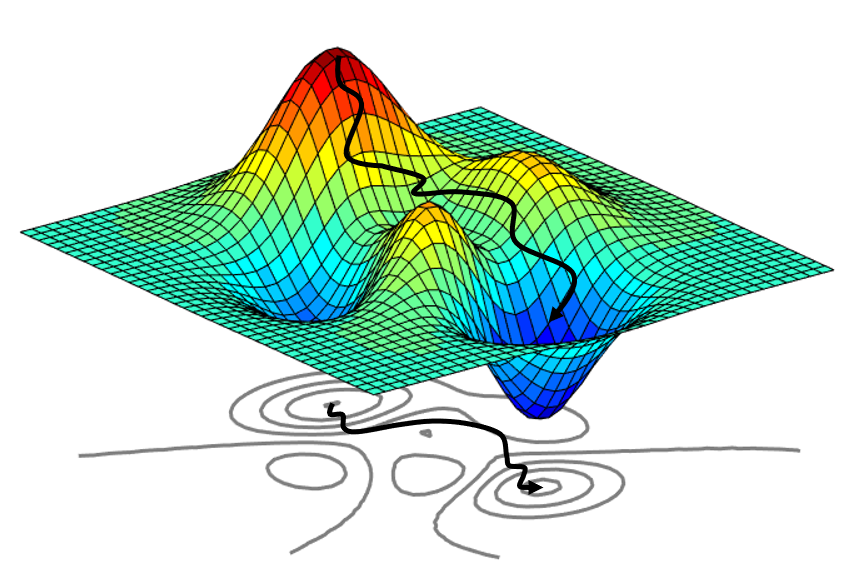

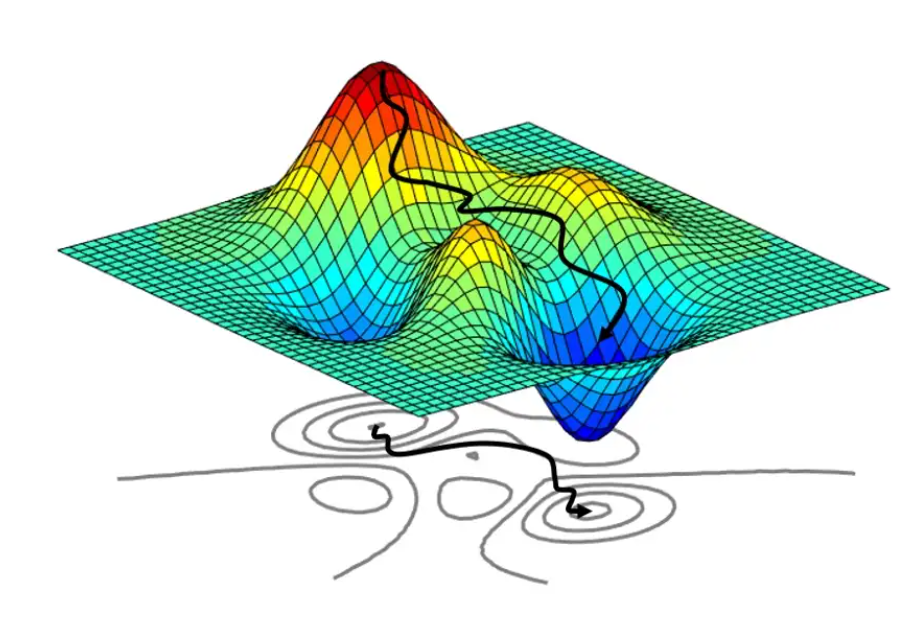

The problem is that we do not know whether a point found this way is a local min or the global min.
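To make the local-vs-global issue concrete, here is a sketch with an illustrative function $f(x) = x^3 - 3x$ (an assumption, not from the notes): $f'(x) = 3x^2 - 3 = 0$ gives $x = \pm 1$, the second derivative $f''(x) = 6x$ classifies them, and yet the local min at $x = 1$ is not a global min, because $f$ keeps decreasing as $x \to -\infty$.

```python
import numpy as np

# Illustrative function and its second derivative (assumed example)
def f(x):
    return x**3 - 3*x

def d2f(x):
    return 6*x

# f'(x) = 3x^2 - 3; np.roots takes coefficients from the highest degree down
critical_points = np.sort(np.roots([3.0, 0.0, -3.0]).real)

def second_derivative_test(x):
    """Classic 1-D test: f'' > 0 -> local min, f'' < 0 -> local max."""
    if d2f(x) > 0:
        return "local min"
    if d2f(x) < 0:
        return "local max"
    return "inconclusive"

kinds = [second_derivative_test(x) for x in critical_points]
for x, kind in zip(critical_points, kinds):
    print(f"x = {x:+.1f}: {kind}, f(x) = {f(x):.1f}")

# The local min at x = 1 has f(1) = -2, but f(-3) = -18 is smaller
print(f(-3.0) < f(critical_points[1]))
```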

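When the function is convex (and the feasible set is convex), every local min is also a global min, exactly the point we want. This makes the problem much simpler, so we like to formulate optimization problems as convex ones, and sometimes we convexify a problem to make it easier to solve.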

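For a general (non-convex) function, no iterative method is guaranteed to find the global optimal point. An iterative method also always needs a starting point from which to begin iterating. One practical approach is to run it from several starting points and pick the best result.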
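A minimal sketch of the multi-start idea, assuming plain fixed-step gradient descent as the iterative method and an illustrative non-convex function $f(x) = x^4 - 3x^2 + x$, which has a local min near $x \approx 1.13$ and its global min near $x \approx -1.30$. The step size, iteration count and starting points are arbitrary choices for this example.

```python
# Illustrative non-convex function (assumed example, not from the notes)
def f(x):
    return x**4 - 3*x**2 + x

def grad_f(x):
    return 4*x**3 - 6*x + 1

def gradient_descent(x0, lr=0.01, iters=1000):
    """Plain fixed-step gradient descent from a single starting point."""
    x = x0
    for _ in range(iters):
        x = x - lr * grad_f(x)
    return x

# Multi-start: run the same iterative method from several starting points...
starts = [-2.0, -0.5, 0.5, 2.0]
results = [gradient_descent(x0) for x0 in starts]

# ...and keep the candidate with the smallest cost
best = min(results, key=f)

for x0, x in zip(starts, results):
    print(f"start {x0:+.1f} -> x = {x:+.4f}, f(x) = {f(x):+.4f}")
print("best:", best)
```

Starting from $x_0 = 2$ alone would stop at the local min near $1.13$; comparing several runs is what recovers the better point. This still does not guarantee the global optimum in general.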

Two Methods for Optimization

- Iterative Method

- Direct Method

using calculus, by solving $\nabla f(x) = 0$
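As a sketch of the direct method, and of the earlier remark that optimization often ends in a linear system: for a quadratic cost $f(x) = \tfrac12 x^\top A x - b^\top x$ with a symmetric positive definite $A$ (an assumed example), $\nabla f(x) = Ax - b$, so setting the gradient to zero means solving $Ax = b$.

```python
import numpy as np

# Assumed example: quadratic cost with a symmetric positive definite A
A = np.array([[4.0, 1.0],
              [1.0, 3.0]])
b = np.array([1.0, 2.0])

def f(x):
    return 0.5 * x @ A @ x - b @ x

def grad_f(x):
    return A @ x - b

# Direct method: solve grad f(x) = 0, i.e. the linear system A x = b
x_star = np.linalg.solve(A, b)

# A is positive definite, so f is convex and this critical point is the global min;
# random nearby points should all have a larger cost
rng = np.random.default_rng(0)
perturbed = [f(x_star + 0.1 * rng.standard_normal(2)) for _ in range(100)]

print(x_star, f(x_star), min(perturbed))
```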

🏁How to Minimize

Now let's focus on how to minimize $f(x)$!

Unconstrained Minimization

Here we cover the unconstrained case, where there are no constraints ($x$ may be any point in $\mathbb{R}^n$).

Gradient Descent

A point where the first derivative (gradient) of a function is 0 is called a critical point or stationary point. For a differentiable function, local extrema and saddle points are critical points.

The Hessian, the matrix of second derivatives that describes the function's curvature, can be used to determine what kind of critical point we have.

In the problem of optimizing a multi-variable scalar-valued function, the goal is to define the function and then find its minimum.

Usually we find the points where the first derivative (gradient) of the function is 0, and then we need to know whether each one is a min, a max, or a saddle point; that is where the Hessian comes in.
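The notes don't spell out the gradient descent update itself, so here is a minimal sketch assuming the standard fixed-step rule $x_{k+1} = x_k - \alpha \nabla f(x_k)$. It stops once the gradient is (nearly) zero, i.e. at an approximate critical point. The function $f(x, y) = (x-1)^2 + 10(y+2)^2$, the step size $\alpha = 0.04$ and the tolerance are illustrative assumptions.

```python
import numpy as np

# Assumed example: a convex quadratic with its minimum at (1, -2)
def f(v):
    x, y = v
    return (x - 1.0) ** 2 + 10.0 * (y + 2.0) ** 2

def grad_f(v):
    x, y = v
    return np.array([2.0 * (x - 1.0), 20.0 * (y + 2.0)])

def gradient_descent(grad, x0, lr=0.04, tol=1e-8, max_iter=10_000):
    """Fixed-step gradient descent; stops when ||grad|| < tol."""
    x = np.asarray(x0, dtype=float)
    for k in range(max_iter):
        g = grad(x)
        if np.linalg.norm(g) < tol:  # approximately a critical point
            return x, k
        x = x - lr * g               # step against the gradient
    return x, max_iter

x_min, n_iter = gradient_descent(grad_f, [5.0, 5.0])
print(x_min, n_iter, f(x_min))
```

Gradient descent only drives the gradient to zero; whether the point it reaches is a min, max or saddle still has to be checked, e.g. with the Hessian.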

Finding Optimal Point

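- Find the critical points ($\nabla f(x) = 0$)

- Evaluate the eigenvalues of the Hessian at each critical point

all positive eigenvalues : local min

all negative eigenvalues : local max

both positive and negative eigenvalues : saddle point

some zero eigenvalues and no mixed signs : inconclusive (the second-order test cannot decide)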
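The eigenvalue test for classifying a critical point can be written as a short function. This is a sketch: the name `classify_critical_point` and the tolerance `tol` used to treat tiny eigenvalues as zero are assumptions. `numpy.linalg.eigvalsh` is used because the Hessian is symmetric, and the function returns "inconclusive" when an eigenvalue is (numerically) zero without mixed signs.

```python
import numpy as np

def classify_critical_point(H, tol=1e-12):
    """Classify a critical point from its (symmetric) Hessian H."""
    eigvals = np.linalg.eigvalsh(H)  # real eigenvalues of a symmetric matrix
    has_pos = np.any(eigvals > tol)
    has_neg = np.any(eigvals < -tol)
    if np.all(eigvals > tol):
        return "local min"
    if np.all(eigvals < -tol):
        return "local max"
    if has_pos and has_neg:
        return "saddle point"
    return "inconclusive"  # some eigenvalue ~ 0: second-order test cannot decide

# f(x, y) = x^2 - y^2 has a critical point at the origin with Hessian diag(2, -2)
print(classify_critical_point(np.array([[2.0, 0.0], [0.0, -2.0]])))
# f(x, y) = x^2 + y^2 has Hessian diag(2, 2) at the origin
print(classify_critical_point(np.array([[2.0, 0.0], [0.0, 2.0]])))
```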

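The eigenvectors of the Hessian are the principal directions of curvature: the eigenvector with the largest eigenvalue points in the direction of greatest curvature, the one with the smallest eigenvalue in the direction of least curvature, and each eigenvalue is the curvature along its eigenvector.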

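Since the Hessian is symmetric (when the second partial derivatives are continuous), it always has an eigendecomposition with real eigenvalues and orthogonal eigenvectors.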
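A small numerical check of these two statements, using an arbitrary symmetric matrix `H` as a stand-in Hessian (an assumption): `numpy.linalg.eigh` returns real eigenvalues in ascending order with orthonormal eigenvectors as columns, and the curvature $d^\top H d$ along a unit direction $d$ is largest along the eigenvector of the largest eigenvalue, where it equals that eigenvalue.

```python
import numpy as np

# Stand-in symmetric Hessian (assumed example)
H = np.array([[3.0, 1.0],
              [1.0, 2.0]])

# Real eigenvalues (ascending) and orthonormal eigenvectors (columns)
eigvals, eigvecs = np.linalg.eigh(H)

def curvature(d):
    """Second directional derivative d^T H d along the unit vector d."""
    d = d / np.linalg.norm(d)
    return d @ H @ d

v_max = eigvecs[:, -1]  # eigenvector of the largest eigenvalue

# Sample unit directions over half a circle (d and -d give the same curvature)
angles = np.linspace(0.0, np.pi, 181)
sampled = [curvature(np.array([np.cos(t), np.sin(t)])) for t in angles]

print(eigvals, curvature(v_max), max(sampled), min(sampled))
```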

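The Hessian is the multi-variable version of the second derivative we studied in high school. Because it only describes the curvature in a neighborhood of a single point, whenever we say max or min here we always mean local max or local min. The minimum we actually want is the global min, and the trouble is that it is hard to tell whether a point we found along the way is a local min or the global min.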

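As mentioned above, when the function is convex, a local min is also the global min (exactly the point we want). Because this makes the problem much simpler, we like to make optimization problems convex, and sometimes convexify them to make them easier to solve. We won't go into it deeply here, but it is worth knowing that this exists.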

❗️Read the notation "~ : ~" as "the thing before the colon, satisfying the condition after it"❕

References:

Numerical Analysis lecture by Prof. Kwang-ki Kim (김광기), Inha University

https://darkpgmr.tistory.com/132

https://angeloyeo.github.io/2020/06/17/Hessian.html