0. Longhorn

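We had been using Longhorn as the distributed storage system on our existing cluster.

As we added more services, we increasingly needed RWX (ReadWriteMany) PVCs. Longhorn's RWX support seemed to have performance problems, so we switched to Rook-Ceph.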

1. Rook-Ceph

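- Rook is a storage operator that automates deploying and managing distributed storage on Kubernetes.

- Ceph is a highly scalable distributed storage system that provides object, block, and file storage.

- Rook-Ceph is the combination in which the Rook operator manages Ceph so that it is easy to use on Kubernetes.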

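The main Ceph daemons:

- Ceph MON (Monitor): maintains the cluster map and monitors cluster state

- Ceph OSD (Object Storage Daemon): stores the actual data

- Ceph MGR (Manager): collects cluster state and metrics and serves the dashboard

- Ceph MDS (Metadata Server, optional): needed when providing the CephFS file system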

Storage types provided by Ceph

- Block (RBD)
  - Suitable for VM disks, databases, etc. (ReadWriteOnce; ReadWriteMany only for raw block volumes, i.e. volumeMode: Block)

- File (CephFS)
  - Multiple Pods can access it at the same time. (ReadWriteMany; can replace NFS)

- Object
  - Provides an S3-compatible API for static files, container images, etc. (Rook-Ceph provides an S3 gateway)
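As a rough summary of the list above, here is a minimal bash sketch that picks a StorageClass from a PVC's access mode. The function name storage_class_for is hypothetical; rook-ceph-block and rook-cephfs are the StorageClass names that appear later in this post, and the Block/Filesystem split reflects ceph-csi's rule that RBD only offers ReadWriteMany for raw block volumes.

```shell
# Hypothetical helper: map a PVC access mode (and optional volumeMode)
# to one of the StorageClasses created later in this post.
storage_class_for() {
  local access_mode="$1" volume_mode="${2:-Filesystem}"
  case "$access_mode" in
    ReadWriteOnce)
      echo "rook-ceph-block" ;;
    ReadWriteMany)
      # RBD supports RWX only for raw block volumes; file RWX goes to CephFS
      if [ "$volume_mode" = "Block" ]; then
        echo "rook-ceph-block"
      else
        echo "rook-cephfs"
      fi ;;
    *)
      echo "unsupported access mode: $access_mode" >&2
      return 1 ;;
  esac
}
```

For example, storage_class_for ReadWriteMany points a shared-file workload at rook-cephfs, which is the case this migration was about.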

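2. Installation

- Rook-Ceph needs a new, uninitialized disk for each OSD: a raw disk with no partitions and no filesystem.

- Since we run on OpenStack, we created a Cinder volume for each worker node, attached it, and continued from there. In lsblk, the new volume shows up as vdb with no partitions.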

ubuntu@k8s:~$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

vda 253:0 0 80G 0 disk

├─vda1 253:1 0 79G 0 part /

├─vda14 253:14 0 4M 0 part

├─vda15 253:15 0 106M 0 part /boot/efi

└─vda16 259:0 0 913M 0 part /boot

vdb 253:16 0 200G 0 disk

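To check which disks meet the raw-disk requirement before writing cluster.yaml, a rough pre-check can parse lsblk's key="value" output. This is a sketch, not Rook's own detection logic: it only looks at partitions and filesystem signatures, and the function name find_raw_disks is made up for this example.

```shell
# Rough pre-check (not Rook's logic): print whole disks that have no
# partitions and no filesystem signature, i.e. OSD candidates.
# Input on stdin: output of `lsblk -nP -o NAME,TYPE,PKNAME,FSTYPE`.
find_raw_disks() {
  awk -F'"' '
    # fields split on double quotes: $2=NAME $4=TYPE $6=PKNAME $8=FSTYPE
    $4 == "disk" && $8 == "" { order[++n] = $2 }
    $4 == "part"             { has_part[$6] = 1 }
    END {
      for (i = 1; i <= n; i++)
        if (!(order[i] in has_part)) print order[i]
    }
  '
}
```

On a worker node, lsblk -nP -o NAME,TYPE,PKNAME,FSTYPE | find_raw_disks should print vdb for the layout above, since vda carries the OS partitions.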

git clone https://github.com/rook/rook.git

cd rook/deploy/examples

vi cluster.yaml

apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: quay.io/ceph/ceph:v18
  dataDirHostPath: /var/lib/rook
  mon:
    count: 3
    allowMultiplePerNode: true
  dashboard:
    enabled: true
  storage:
    useAllNodes: false
    useAllDevices: false
    nodes:
      - name: k8s.node01
        devices:
          - name: "vdb"
      - name: k8s.node02
        devices:
          - name: "vdb"
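Rook expects each name under storage.nodes to match the node's kubernetes.io/hostname label; with a mismatched name, no OSD is created on that node. A small hypothetical pre-check (missing_nodes is not a Rook or kubectl command) compares the names used in cluster.yaml with the labels actually present:

```shell
# Hypothetical pre-check: print each configured node name that does not
# appear among the actual hostnames read from stdin (one per line).
# Returns 1 if anything is missing.
missing_nodes() {
  local actual name rc=0
  actual=$(cat)
  for name in "$@"; do
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    if ! printf '%s\n' "$actual" | grep -qxF -- "$name"; then
      echo "$name"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.labels.kubernetes\.io/hostname}{"\n"}{end}' | missing_nodes k8s.node01 k8s.node02 prints nothing when both names are valid.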

k create ns rook-ceph

k apply -f common.yaml

k apply -f crds.yaml

k apply -f operator.yaml

k get po -n rook-ceph

k apply -f cluster.yaml

k -n rook-ceph get pods -w

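The deployment proceeds as follows.

- The operator builds the cluster dynamically, bringing up the CSI drivers, MONs, MGR, CrashCollectors, and OSDs in turn.

- Before placing the MONs, it runs canary Pods as a placement pre-check; this is why so many Pods show up as Terminating in the log.

- The OSD is Ceph's core storage daemon. Rook detects the disks listed in cluster.yaml on each node and sets them up:
  - rook-ceph-osd-prepare-<node name>: prepares the disk for the OSD (provisioning it with ceph-volume, setting up keys, etc.) and switches to Completed when done.
  - rook-ceph-osd-<index>: created once preparation is complete; this Pod actually stores the data.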
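The pod names in the log below follow a fixed prefix per component. To get a quick per-component summary instead of reading the raw watch output, a hypothetical bash helper can classify names by those prefixes (the patterns are taken from this log, not from Rook's source):

```shell
# Hypothetical helper: map a rook-ceph pod name to its component.
# Order matters: more specific patterns (canary, osd-prepare) come first.
rook_component() {
  case "$1" in
    rook-ceph-operator-*)       echo operator ;;
    rook-ceph-detect-version-*) echo detect-version ;;
    rook-ceph-mon-*-canary-*)   echo mon-canary ;;
    rook-ceph-mon-*)            echo mon ;;
    rook-ceph-mgr-*)            echo mgr ;;
    rook-ceph-osd-prepare-*)    echo osd-prepare ;;
    rook-ceph-osd-*)            echo osd ;;
    rook-ceph-crashcollector-*) echo crashcollector ;;
    rook-ceph-exporter-*)       echo exporter ;;
    rook-ceph-mds-*)            echo mds ;;
    rook-ceph-tools-*)          echo toolbox ;;
    csi-*)                      echo csi ;;
    *)                          echo other ;;
  esac
}
```

Usage: kubectl -n rook-ceph get pods --no-headers -o custom-columns=NAME:.metadata.name | while read -r p; do rook_component "$p"; done | sort | uniq -c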

ubuntu@k8s:~/rook/deploy/examples$ kubectl -n rook-ceph get pods -w

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-hrt82 0/2 ContainerCreating 0 9s

csi-cephfsplugin-provisioner-d4d7df87-g96mx 0/5 ContainerCreating 0 8s

csi-cephfsplugin-provisioner-d4d7df87-nbjmv 0/5 ContainerCreating 0 8s

csi-cephfsplugin-qhkr9 0/2 ContainerCreating 0 8s

csi-rbdplugin-ld8kw 0/2 ContainerCreating 0 9s

csi-rbdplugin-provisioner-6d4bbf78d7-2wphs 0/5 ContainerCreating 0 9s

csi-rbdplugin-provisioner-6d4bbf78d7-gplvr 0/5 ContainerCreating 0 9s

csi-rbdplugin-x5l8b 0/2 ContainerCreating 0 9s

rook-ceph-detect-version-94lx9 0/1 Init:0/1 0 10s

rook-ceph-operator-59dcf6d55b-cwmrc 1/1 Running 0 75s

csi-cephfsplugin-provisioner-d4d7df87-g96mx 0/5 ContainerCreating 0 10s

rook-ceph-detect-version-94lx9 0/1 Init:0/1 0 19s

rook-ceph-detect-version-94lx9 0/1 PodInitializing 0 22s

rook-ceph-detect-version-94lx9 1/1 Running 0 93s

rook-ceph-detect-version-94lx9 1/1 Running 0 94s

rook-ceph-detect-version-94lx9 1/1 Terminating 0 95s

rook-ceph-detect-version-94lx9 0/1 Terminating 0 97s

rook-ceph-detect-version-94lx9 0/1 Terminating 0 97s

rook-ceph-detect-version-94lx9 0/1 Completed 0 100s

rook-ceph-detect-version-94lx9 0/1 Completed 0 100s

rook-ceph-detect-version-94lx9 0/1 Completed 0 100s

rook-ceph-mon-a-canary-bf497869c-whx7z 0/1 Pending 0 0s

rook-ceph-mon-b-canary-69dccbb779-vdhpr 0/1 Pending 0 1s

rook-ceph-mon-a-canary-bf497869c-whx7z 0/1 Pending 0 1s

rook-ceph-mon-c-canary-57f68b6849-q4nql 0/1 Pending 0 0s

rook-ceph-mon-b-canary-69dccbb779-vdhpr 0/1 Pending 0 1s

rook-ceph-mon-c-canary-57f68b6849-q4nql 0/1 Pending 0 0s

rook-ceph-mon-a-canary-bf497869c-whx7z 0/1 ContainerCreating 0 2s

rook-ceph-mon-b-canary-69dccbb779-vdhpr 0/1 ContainerCreating 0 2s

rook-ceph-mon-c-canary-57f68b6849-q4nql 0/1 ContainerCreating 0 1s

rook-ceph-mon-c-canary-57f68b6849-q4nql 0/1 ContainerCreating 0 3s

rook-ceph-mon-a-canary-bf497869c-whx7z 0/1 ContainerCreating 0 5s

rook-ceph-mon-b-canary-69dccbb779-vdhpr 0/1 Terminating 0 10s

rook-ceph-mon-a-canary-bf497869c-whx7z 0/1 Terminating 0 11s

rook-ceph-mon-c-canary-57f68b6849-q4nql 0/1 Terminating 0 11s

rook-ceph-mon-c-canary-57f68b6849-q4nql 1/1 Terminating 0 11s

rook-ceph-mon-b-canary-69dccbb779-vdhpr 0/1 Terminating 0 13s

rook-ceph-mon-a-canary-bf497869c-whx7z 1/1 Terminating 0 15s

rook-ceph-mon-a-774886ccb4-cv58q 0/1 Pending 0 0s

rook-ceph-mon-a-774886ccb4-cv58q 0/1 Pending 0 0s

rook-ceph-mon-a-774886ccb4-cv58q 0/1 Init:0/2 0 1s

rook-ceph-mon-a-774886ccb4-cv58q 0/1 Init:0/2 0 10s

rook-ceph-mon-a-774886ccb4-cv58q 0/1 Init:0/2 0 28s

csi-rbdplugin-ld8kw 1/2 Error 0 3m15s

csi-rbdplugin-ld8kw 2/2 Running 1 (2m26s ago) 3m22s

csi-cephfsplugin-qhkr9 1/2 Error 0 3m35s

rook-ceph-mon-a-774886ccb4-cv58q 0/1 Init:1/2 0 102s

rook-ceph-mon-a-774886ccb4-cv58q 0/1 Init:1/2 0 2m9s

csi-cephfsplugin-qhkr9 2/2 Running 1 (3m12s ago) 4m11s

rook-ceph-mon-a-canary-bf497869c-whx7z 1/1 Terminating 0 2m46s

rook-ceph-mon-a-774886ccb4-cv58q 0/1 PodInitializing 0 2m33s

csi-rbdplugin-x5l8b 1/2 Error 0 4m29s

rook-ceph-mon-a-canary-bf497869c-whx7z 0/1 Terminating 0 2m49s

rook-ceph-mon-a-canary-bf497869c-whx7z 0/1 Error 0 2m50s

rook-ceph-mon-a-canary-bf497869c-whx7z 0/1 Error 0 2m52s

rook-ceph-mon-a-canary-bf497869c-whx7z 0/1 Error 0 2m53s

rook-ceph-mon-a-774886ccb4-cv58q 0/1 Running 0 2m40s

csi-rbdplugin-x5l8b 2/2 Running 1 (3m24s ago) 4m35s

rook-ceph-mon-c-canary-57f68b6849-q4nql 1/1 Terminating 0 2m56s

rook-ceph-mon-c-canary-57f68b6849-q4nql 0/1 Terminating 0 2m57s

csi-cephfsplugin-hrt82 1/2 Error 0 4m39s

rook-ceph-mon-c-canary-57f68b6849-q4nql 0/1 Error 0 2m58s

rook-ceph-mon-c-canary-57f68b6849-q4nql 0/1 Error 0 2m59s

rook-ceph-mon-c-canary-57f68b6849-q4nql 0/1 Error 0 2m59s

csi-cephfsplugin-hrt82 2/2 Running 1 (3m27s ago) 4m41s

rook-ceph-mon-a-774886ccb4-cv58q 0/1 Running 0 2m51s

csi-cephfsplugin-provisioner-d4d7df87-nbjmv 4/5 Error 0 4m46s

rook-ceph-mon-a-774886ccb4-cv58q 1/1 Running 0 2m52s

csi-cephfsplugin-provisioner-d4d7df87-nbjmv 5/5 Running 1 (3m19s ago) 4m48s

rook-ceph-mon-b-74bccb4dbf-2hcss 0/1 Pending 0 0s

rook-ceph-mon-b-74bccb4dbf-2hcss 0/1 Pending 0 0s

rook-ceph-mon-b-74bccb4dbf-2hcss 0/1 Init:0/2 0 1s

rook-ceph-mon-b-74bccb4dbf-2hcss 0/1 Init:0/2 0 2s

csi-cephfsplugin-provisioner-d4d7df87-g96mx 4/5 Error 0 5m35s

csi-rbdplugin-provisioner-6d4bbf78d7-2wphs 4/5 Error 0 5m37s

csi-rbdplugin-provisioner-6d4bbf78d7-2wphs 5/5 Running 1 (4m8s ago) 5m42s

csi-cephfsplugin-provisioner-d4d7df87-g96mx 5/5 Running 1 (3m47s ago) 5m42s

rook-ceph-mon-b-canary-69dccbb779-vdhpr 1/1 Terminating 0 4m56s

csi-rbdplugin-provisioner-6d4bbf78d7-gplvr 2/5 Error 0 6m39s

csi-rbdplugin-provisioner-6d4bbf78d7-gplvr 5/5 Running 3 (104s ago) 6m44s

rook-ceph-mon-b-74bccb4dbf-2hcss 0/1 Init:0/2 0 115s

rook-ceph-mon-b-74bccb4dbf-2hcss 0/1 Init:1/2 0 116s

rook-ceph-mon-b-74bccb4dbf-2hcss 0/1 Init:1/2 0 117s

rook-ceph-mon-b-74bccb4dbf-2hcss 0/1 PodInitializing 0 119s

rook-ceph-mon-b-74bccb4dbf-2hcss 0/1 Running 0 2m1s

rook-ceph-mon-c-58b9d496-jnggd 0/1 Pending 0 0s

rook-ceph-mon-c-58b9d496-jnggd 0/1 Pending 0 1s

rook-ceph-mon-c-58b9d496-jnggd 0/1 Init:0/2 0 1s

rook-ceph-mon-b-74bccb4dbf-2hcss 0/1 Running 0 2m11s

rook-ceph-mon-b-74bccb4dbf-2hcss 1/1 Running 0 2m11s

rook-ceph-mon-c-58b9d496-jnggd 0/1 Init:0/2 0 2s

rook-ceph-mon-b-canary-69dccbb779-vdhpr 1/1 Terminating 0 5m27s

rook-ceph-mon-c-58b9d496-jnggd 0/1 Init:1/2 0 5s

rook-ceph-mon-b-canary-69dccbb779-vdhpr 0/1 Error 0 5m28s

rook-ceph-mon-b-canary-69dccbb779-vdhpr 0/1 Error 0 5m28s

rook-ceph-mon-b-canary-69dccbb779-vdhpr 0/1 Error 0 5m28s

rook-ceph-mon-c-58b9d496-jnggd 0/1 Init:1/2 0 7s

rook-ceph-mon-c-58b9d496-jnggd 0/1 PodInitializing 0 9s

rook-ceph-mon-c-58b9d496-jnggd 0/1 Running 0 10s

rook-ceph-mon-c-58b9d496-jnggd 0/1 Running 0 22s

rook-ceph-mon-c-58b9d496-jnggd 1/1 Running 0 22s

rook-ceph-crashcollector-k8s.node01-68b99667b-kjtgk 0/1 Pending 0 0s

rook-ceph-crashcollector-k8s.node01-68b99667b-kjtgk 0/1 Pending 0 0s

rook-ceph-exporter-k8s.node01-7dfd984d6b-s9dfp 0/1 Pending 0 1s

rook-ceph-exporter-k8s.node01-7dfd984d6b-s9dfp 0/1 Pending 0 1s

rook-ceph-crashcollector-k8s.node01-68b99667b-kjtgk 0/1 Init:0/2 0 2s

rook-ceph-exporter-k8s.node01-7dfd984d6b-s9dfp 0/1 Init:0/1 0 2s

rook-ceph-crashcollector-k8s.node01-68b99667b-kjtgk 0/1 Init:0/2 0 3s

rook-ceph-mgr-a-6c44dddbb5-882x2 0/1 Pending 0 1s

rook-ceph-mgr-a-6c44dddbb5-882x2 0/1 Pending 0 1s

rook-ceph-mgr-a-6c44dddbb5-882x2 0/1 Init:0/1 0 1s

rook-ceph-crashcollector-k8s.node02-697576cfff-m7qj8 0/1 Pending 0 1s

rook-ceph-exporter-k8s.node02-7698d6dc9c-v7nhs 0/1 Pending 0 0s

rook-ceph-crashcollector-k8s.node02-697576cfff-m7qj8 0/1 Pending 0 1s

rook-ceph-exporter-k8s.node02-7698d6dc9c-v7nhs 0/1 Pending 0 0s

rook-ceph-crashcollector-k8s.node02-697576cfff-m7qj8 0/1 Init:0/2 0 1s

rook-ceph-exporter-k8s.node01-7dfd984d6b-s9dfp 0/1 Init:0/1 0 5s

rook-ceph-exporter-k8s.node02-7698d6dc9c-v7nhs 0/1 Init:0/1 0 2s

rook-ceph-crashcollector-k8s.node01-68b99667b-kjtgk 0/1 Init:0/2 0 8s

rook-ceph-crashcollector-k8s.node01-68b99667b-kjtgk 0/1 Init:1/2 0 9s

rook-ceph-mgr-a-6c44dddbb5-882x2 0/1 Init:0/1 0 6s

rook-ceph-exporter-k8s.node02-7698d6dc9c-v7nhs 0/1 Init:0/1 0 5s

rook-ceph-crashcollector-k8s.node02-697576cfff-m7qj8 0/1 Init:0/2 0 6s

rook-ceph-exporter-k8s.node01-7dfd984d6b-s9dfp 0/1 PodInitializing 0 12s

rook-ceph-crashcollector-k8s.node01-68b99667b-kjtgk 0/1 Init:1/2 0 13s

rook-ceph-crashcollector-k8s.node01-68b99667b-kjtgk 0/1 PodInitializing 0 13s

rook-ceph-mgr-a-6c44dddbb5-882x2 0/1 Init:0/1 0 12s

rook-ceph-exporter-k8s.node01-7dfd984d6b-s9dfp 1/1 Running 0 16s

rook-ceph-mgr-a-6c44dddbb5-882x2 0/1 PodInitializing 0 13s

rook-ceph-crashcollector-k8s.node01-68b99667b-kjtgk 1/1 Running 0 17s

rook-ceph-exporter-k8s.node02-7698d6dc9c-v7nhs 0/1 PodInitializing 0 13s

rook-ceph-crashcollector-k8s.node02-697576cfff-m7qj8 0/1 Init:1/2 0 15s

rook-ceph-mgr-a-6c44dddbb5-882x2 0/1 Running 0 16s

rook-ceph-exporter-k8s.node02-7698d6dc9c-v7nhs 1/1 Running 0 16s

rook-ceph-crashcollector-k8s.node02-697576cfff-m7qj8 0/1 Init:1/2 0 17s

rook-ceph-crashcollector-k8s.node02-697576cfff-m7qj8 0/1 PodInitializing 0 17s

rook-ceph-crashcollector-k8s.node02-697576cfff-m7qj8 1/1 Running 0 18s

rook-ceph-mgr-a-6c44dddbb5-882x2 0/1 Running 0 32s

rook-ceph-mgr-a-6c44dddbb5-882x2 1/1 Running 0 32s

rook-ceph-osd-prepare-k8s.node01-fn7lv 0/1 Pending 0 0s

rook-ceph-osd-prepare-k8s.node01-fn7lv 0/1 Pending 0 0s

rook-ceph-osd-prepare-k8s.node02-p4jgr 0/1 Pending 0 0s

rook-ceph-osd-prepare-k8s.node01-fn7lv 0/1 Init:0/1 0 1s

rook-ceph-osd-prepare-k8s.node02-p4jgr 0/1 Pending 0 0s

rook-ceph-osd-prepare-k8s.node02-p4jgr 0/1 Init:0/1 0 1s

rook-ceph-osd-prepare-k8s.node01-fn7lv 0/1 Init:0/1 0 2s

rook-ceph-osd-prepare-k8s.node02-p4jgr 0/1 Init:0/1 0 2s

rook-ceph-osd-prepare-k8s.node01-fn7lv 0/1 Init:0/1 0 4s

rook-ceph-osd-prepare-k8s.node02-p4jgr 0/1 Init:0/1 0 6s

rook-ceph-osd-prepare-k8s.node02-p4jgr 0/1 PodInitializing 0 7s

rook-ceph-osd-prepare-k8s.node01-fn7lv 0/1 PodInitializing 0 8s

rook-ceph-osd-prepare-k8s.node02-p4jgr 1/1 Running 0 9s

rook-ceph-osd-prepare-k8s.node01-fn7lv 1/1 Running 0 10s

rook-ceph-osd-0-87c656b8-sjpnl 0/1 Pending 0 0s

rook-ceph-osd-0-87c656b8-sjpnl 0/1 Pending 0 1s

rook-ceph-osd-prepare-k8s.node02-p4jgr 0/1 Completed 0 20s

rook-ceph-osd-prepare-k8s.node01-fn7lv 0/1 Completed 0 22s

rook-ceph-osd-0-87c656b8-sjpnl 0/1 Init:0/3 0 2s

rook-ceph-crashcollector-k8s.node02-5dd44f5bcc-c8574 0/1 Pending 0 0s

rook-ceph-osd-1-84cb96b764-z58nk 0/1 Pending 0 0s

rook-ceph-crashcollector-k8s.node02-5dd44f5bcc-c8574 0/1 Pending 0 1s

rook-ceph-osd-1-84cb96b764-z58nk 0/1 Pending 0 1s

rook-ceph-exporter-k8s.node02-7698d6dc9c-v7nhs 1/1 Terminating 0 56s

rook-ceph-osd-prepare-k8s.node02-p4jgr 0/1 Completed 0 22s

rook-ceph-crashcollector-k8s.node02-5dd44f5bcc-c8574 0/1 Init:0/2 0 2s

rook-ceph-osd-prepare-k8s.node01-fn7lv 0/1 Completed 0 24s

rook-ceph-osd-1-84cb96b764-z58nk 0/1 Init:0/3 0 2s

rook-ceph-osd-prepare-k8s.node02-p4jgr 0/1 Completed 0 26s

rook-ceph-exporter-k8s.node02-7698d6dc9c-v7nhs 1/1 Terminating 0 60s

rook-ceph-osd-prepare-k8s.node01-fn7lv 0/1 Completed 0 27s

rook-ceph-osd-0-87c656b8-sjpnl 0/1 Init:0/3 0 8s

rook-ceph-osd-1-84cb96b764-z58nk 0/1 Init:0/3 0 6s

rook-ceph-osd-prepare-k8s.node02-p4jgr 0/1 Completed 0 28s

rook-ceph-osd-prepare-k8s.node01-fn7lv 0/1 Completed 0 29s

rook-ceph-crashcollector-k8s.node02-5dd44f5bcc-c8574 0/1 Init:0/2 0 8s

rook-ceph-exporter-k8s.node02-7698d6dc9c-v7nhs 0/1 Error 0 63s

rook-ceph-exporter-k8s.node02-7698d6dc9c-v7nhs 0/1 Error 0 65s

rook-ceph-exporter-k8s.node02-7698d6dc9c-v7nhs 0/1 Error 0 65s

rook-ceph-exporter-k8s.node02-6796f859f5-t7w9p 0/1 Pending 0 0s

rook-ceph-exporter-k8s.node02-6796f859f5-t7w9p 0/1 Pending 0 1s

rook-ceph-osd-1-84cb96b764-z58nk 0/1 Init:0/3 0 11s

rook-ceph-osd-0-87c656b8-sjpnl 0/1 Init:0/3 0 14s

rook-ceph-exporter-k8s.node02-6796f859f5-t7w9p 0/1 Init:0/1 0 2s

rook-ceph-osd-1-84cb96b764-z58nk 0/1 Init:1/3 0 13s

rook-ceph-crashcollector-k8s.node02-5dd44f5bcc-c8574 0/1 Init:0/2 0 13s

rook-ceph-osd-0-87c656b8-sjpnl 0/1 Init:1/3 0 16s

rook-ceph-crashcollector-k8s.node02-5dd44f5bcc-c8574 0/1 Init:1/2 0 15s

rook-ceph-exporter-k8s.node02-6796f859f5-t7w9p 0/1 Init:0/1 0 5s

rook-ceph-osd-1-84cb96b764-z58nk 0/1 Init:1/3 0 17s

rook-ceph-osd-1-84cb96b764-z58nk 0/1 Init:2/3 0 18s

rook-ceph-osd-0-87c656b8-sjpnl 0/1 Init:1/3 0 20s

rook-ceph-osd-0-87c656b8-sjpnl 0/1 Init:2/3 0 21s

rook-ceph-crashcollector-k8s.node02-5dd44f5bcc-c8574 0/1 PodInitializing 0 19s

rook-ceph-osd-1-84cb96b764-z58nk 0/1 Init:2/3 0 22s

rook-ceph-exporter-k8s.node02-6796f859f5-t7w9p 0/1 PodInitializing 0 12s

rook-ceph-osd-1-84cb96b764-z58nk 0/1 PodInitializing 0 23s

rook-ceph-crashcollector-k8s.node02-5dd44f5bcc-c8574 1/1 Running 0 24s

rook-ceph-osd-0-87c656b8-sjpnl 0/1 Init:2/3 0 26s

rook-ceph-crashcollector-k8s.node02-697576cfff-m7qj8 1/1 Terminating 0 81s

rook-ceph-osd-0-87c656b8-sjpnl 0/1 PodInitializing 0 28s

rook-ceph-osd-1-84cb96b764-z58nk 0/1 Running 0 27s

rook-ceph-exporter-k8s.node02-6796f859f5-t7w9p 1/1 Running 0 17s

rook-ceph-crashcollector-k8s.node02-697576cfff-m7qj8 1/1 Terminating 0 83s

rook-ceph-osd-0-87c656b8-sjpnl 0/1 Running 0 31s

rook-ceph-crashcollector-k8s.node02-697576cfff-m7qj8 0/1 Completed 0 85s

rook-ceph-crashcollector-k8s.node02-697576cfff-m7qj8 0/1 Completed 0 85s

rook-ceph-crashcollector-k8s.node02-697576cfff-m7qj8 0/1 Completed 0 85s

rook-ceph-osd-0-87c656b8-sjpnl 0/1 Running 0 42s

rook-ceph-osd-0-87c656b8-sjpnl 1/1 Running 0 42s

rook-ceph-osd-1-84cb96b764-z58nk 0/1 Running 0 42s

rook-ceph-osd-1-84cb96b764-z58nk 1/1 Running 0 42s

Once the deployment is complete, the Pods look like this:

ubuntu@k8s:~/rook/deploy/examples$ k get po -n rook-ceph

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-hrt82 2/2 Running 1 (9m44s ago) 10m

csi-cephfsplugin-provisioner-d4d7df87-g96mx 5/5 Running 1 (9m2s ago) 10m

csi-cephfsplugin-provisioner-d4d7df87-nbjmv 5/5 Running 1 (9m28s ago) 10m

csi-cephfsplugin-qhkr9 2/2 Running 1 (9m58s ago) 10m

csi-rbdplugin-ld8kw 2/2 Running 1 (10m ago) 10m

csi-rbdplugin-provisioner-6d4bbf78d7-2wphs 5/5 Running 1 (9m24s ago) 10m

csi-rbdplugin-provisioner-6d4bbf78d7-gplvr 5/5 Running 3 (5m58s ago) 10m

csi-rbdplugin-x5l8b 2/2 Running 1 (9m47s ago) 10m

rook-ceph-crashcollector-k8s.node01-68b99667b-kjtgk 1/1 Running 0 3m32s

rook-ceph-crashcollector-k8s.node02-5dd44f5bcc-c8574 1/1 Running 0 2m32s

rook-ceph-exporter-k8s.node01-7dfd984d6b-s9dfp 1/1 Running 0 3m32s

rook-ceph-exporter-k8s.node02-6796f859f5-t7w9p 1/1 Running 0 2m22s

rook-ceph-mgr-a-6c44dddbb5-882x2 1/1 Running 0 3m29s

rook-ceph-mon-a-774886ccb4-cv58q 1/1 Running 0 9m3s

rook-ceph-mon-b-74bccb4dbf-2hcss 1/1 Running 0 6m6s

rook-ceph-mon-c-58b9d496-jnggd 1/1 Running 0 3m56s

rook-ceph-operator-59dcf6d55b-cwmrc 1/1 Running 0 12m

rook-ceph-osd-0-87c656b8-sjpnl 1/1 Running 0 2m34s

rook-ceph-osd-1-84cb96b764-z58nk 1/1 Running 0 2m32s

rook-ceph-osd-prepare-k8s.node01-fn7lv 0/1 Completed 0 2m54s

rook-ceph-osd-prepare-k8s.node02-p4jgr 0/1 Completed 0 2m53s
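Instead of eyeballing the list, a small awk filter can report anything that is not yet healthy. A sketch, assuming the default kubectl get pods column layout (NAME, READY, STATUS, ...); not_ready_pods is a hypothetical name:

```shell
# Hypothetical check: read `kubectl get pods` table output on stdin and
# print pods that are neither Completed nor Running with all containers ready.
not_ready_pods() {
  awk 'NR > 1 {
    split($2, r, "/")             # READY column, e.g. "2/2"
    if ($3 == "Completed") next   # finished jobs such as osd-prepare are fine
    if ($3 != "Running" || r[1] != r[2]) print $1, $2, $3
  }'
}
```

kubectl -n rook-ceph get pods | not_ready_pods prints nothing once every Pod is either fully ready and Running or Completed like the osd-prepare jobs.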

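3. Deploying the Toolbox

The Rook-Ceph Toolbox is a Pod that bundles Ceph management tools: the ceph CLI, rados, rbd, and CephFS utilities.

Why is it needed?

Because Ceph runs inside Pod containers, the Ceph CLI is not available on the nodes themselves, so commands such as ceph status or ceph osd tree cannot be run there directly.

→ The toolbox provides a place inside the cluster to check the Ceph cluster's state and debug it.

cd ~/rook/deploy/examples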

k apply -f toolbox.yaml

ubuntu@k8s:~/rook/deploy/examples$ k exec -it -n rook-ceph rook-ceph-tools-7b75b967db-dtclg -- /bin/bash

bash-5.1$ ceph status

cluster:

id: 187837c0-9a58-4bee-9430-2115e098e163

health: HEALTH_WARN

OSD count 2 < osd_pool_default_size 3

services:

mon: 3 daemons, quorum a,b,c (age 5m)

mgr: a(active, since 4m)

osd: 2 osds: 2 up (since 3m), 2 in (since 4m)

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 453 MiB used, 400 GiB / 400 GiB avail

pgs:

bash-5.1$ ceph df

--- RAW STORAGE ---

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 400 GiB 400 GiB 453 MiB 453 MiB 0.11

TOTAL 400 GiB 400 GiB 453 MiB 453 MiB 0.11

--- POOLS ---

POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL
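For one-off commands, you can exec into the toolbox Deployment rather than a specific Pod name, which changes whenever the Pod is recreated. A minimal wrapper, assuming the Deployment is named rook-ceph-tools as in Rook's example toolbox.yaml (ceph_tool itself is a hypothetical helper):

```shell
# Minimal wrapper: run a single ceph command in the toolbox without
# opening an interactive shell. kubectl picks a Pod of the Deployment.
ceph_tool() {
  kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph "$@"
}
```

Usage: ceph_tool status, ceph_tool df, ceph_tool osd tree.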

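4. Deploying the CephBlockPool & StorageClass

A CephBlockPool is a Kubernetes CRD that defines the pool inside the Ceph cluster where RBD (block) volumes are stored.

→ In other words, it creates the logical pool that holds the block storage requested by PVCs.

Our cluster has only two OSD nodes, so the replica count has to be lowered to 2 before deploying.

→ Most HA setups will have three nodes; in that case the default size (3) can be left unchanged.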

vi csi/rbd/storageclass.yaml

apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool
  namespace: rook-ceph # namespace:cluster
spec:
  failureDomain: host
  replicated:
    size: 2
    requireSafeReplicaSize: true

k apply -f csi/rbd/storageclass.yaml
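The reasoning behind size: 2, and the HEALTH_WARN seen earlier (OSD count 2 < osd_pool_default_size 3), can be written down as a back-of-the-envelope check. With failureDomain: host, every replica must land on a different host, so the pool size cannot exceed the number of OSD hosts, and usable capacity is roughly raw capacity divided by size. This hypothetical helper ignores Ceph's full ratios and metadata overhead:

```shell
# Back-of-the-envelope check (hypothetical): replica size vs. OSD hosts,
# and approximate usable capacity for a replicated pool.
replica_plan() {
  local raw_gib="$1" osd_hosts="$2" size="$3"
  if [ "$size" -gt "$osd_hosts" ]; then
    # failureDomain: host needs one host per replica
    echo "size $size > $osd_hosts OSD hosts: PGs will stay undersized" >&2
    return 1
  fi
  echo "usable ~$(( raw_gib / size )) GiB"
}
```

replica_plan 400 2 2 prints usable ~200 GiB for the two 200 GiB disks here, while replica_plan 400 2 3 fails because the third replica has no host to go to.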

5. Verifying that it works

Check the behavior with the bundled examples.

cd ~/rook/deploy/examples

k apply -f mysql.yaml

k apply -f wordpress.yaml

k get pvc

k edit svc wordpress

# change the type to NodePort, then check that the site is reachable
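If you prefer not to edit the Service interactively, the same change can be made with kubectl patch. A small sketch; expose_nodeport is a hypothetical wrapper and assumes the example was deployed into the default namespace:

```shell
# Hypothetical wrapper: switch a Service to NodePort with a
# strategic-merge patch instead of `kubectl edit`.
expose_nodeport() {
  local svc="$1" ns="${2:-default}"
  kubectl -n "$ns" patch svc "$svc" -p '{"spec":{"type":"NodePort"}}'
}
```

Usage: expose_nodeport wordpress, then k get svc wordpress shows the assigned node port.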

6. Installing the Ceph Dashboard

Exposing the dashboard

cd ~/rook/deploy/examples

k apply -f dashboard-external-http.yaml

k get svc -n rook-ceph

rook-ceph-mgr-dashboard-external-http NodePort 10.233.28.40 <none> 7000:31493/TCP 73s
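The PORT(S) column reads <service port>:<node port>/<protocol>, so the dashboard is reachable on any node's IP at the second number (31493 here). A hypothetical helper that turns that column into a URL (192.0.2.10 below is a placeholder documentation address, not a node from this cluster):

```shell
# Hypothetical helper: build the dashboard URL from a node IP and the
# PORT(S) column of `kubectl get svc`, e.g. "7000:31493/TCP".
dashboard_url() {
  local node_ip="$1" ports="$2" nodeport
  nodeport=${ports#*:}       # drop "7000:"  -> "31493/TCP"
  nodeport=${nodeport%%/*}   # drop "/TCP"   -> "31493"
  echo "http://${node_ip}:${nodeport}"
}
```

dashboard_url 192.0.2.10 7000:31493/TCP prints http://192.0.2.10:31493. Alternatively, kubectl -n rook-ceph get svc rook-ceph-mgr-dashboard-external-http -o jsonpath='{.spec.ports[0].nodePort}' returns the node port directly.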

Enabling the orchestrator

k exec -it -n rook-ceph deploy/rook-ceph-tools -- bash

ceph mgr module enable rook

ceph orch set backend rook

ceph orch status

Backend: rook

Available: Yes

Setting the dashboard login credentials

k exec -it -n rook-ceph deploy/rook-ceph-tools -- bash

echo '<PASSWORD>' > /tmp/ceph-admin-password.txt

ceph dashboard set-login-credentials admin -i /tmp/ceph-admin-password.txt

******************************************************************

*** WARNING: this command is deprecated. ***

*** Please use the ac-user-* related commands to manage users. ***

******************************************************************

Username and password updated
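The warning above says set-login-credentials is deprecated in favor of the ac-user-* commands. A sketch of the same step with ceph dashboard ac-user-set-password, which also keeps the password out of shell history and leaves the temporary file readable only by the current user (write_secret_file is a hypothetical helper):

```shell
# Hypothetical helper: write stdin to a file that only the current user
# can read. umask applies only inside the subshell.
write_secret_file() {
  ( umask 077 && cat > "$1" )
}
```

Inside the toolbox: read -rs PW; printf '%s' "$PW" | write_secret_file /tmp/ceph-admin-password.txt; then ceph dashboard ac-user-set-password admin -i /tmp/ceph-admin-password.txt, and remove the file afterwards with rm -f.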

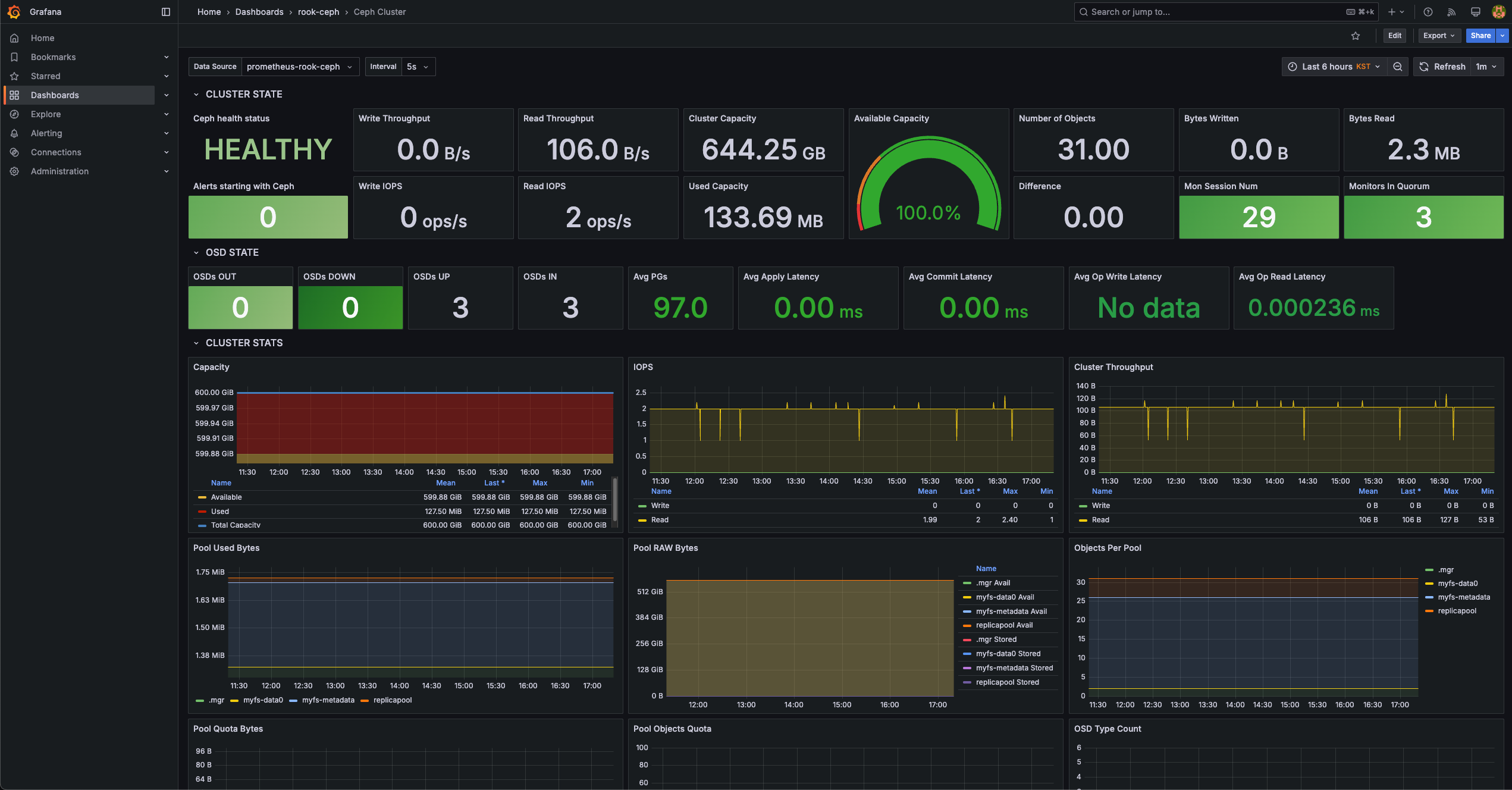

7. Setting up Grafana and Prometheus

Rook-Ceph can also be monitored with Prometheus and Grafana.

Deploying Prometheus

kubectl apply -f https://raw.githubusercontent.com/coreos/prometheus-operator/v0.40.0/bundle.yaml

k get po

prometheus-operator-d858565cd-rl2jm 1/1 Running 0 37s

cd ~/rook/deploy/examples/monitoring

kubectl apply -f service-monitor.yaml

kubectl apply -f prometheus.yaml

kubectl apply -f prometheus-service.yaml

kubectl -n rook-ceph get pod prometheus-rook-prometheus-0

Enable spec.monitoring in cluster.yaml (back in the examples directory).

cd ~/rook/deploy/examples

vi cluster.yaml

apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: quay.io/ceph/ceph:v18
  dataDirHostPath: /var/lib/rook
  mon:
    count: 3
    allowMultiplePerNode: true
  dashboard:
    enabled: true
  storage:
    useAllNodes: false
    useAllDevices: false
    nodes:
      - name: k8s.node01
        devices:
          - name: "vdb"
      - name: k8s.node02
        devices:
          - name: "vdb"
  monitoring:
    enabled: true

k apply -f cluster.yaml

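Adding the Grafana data source

Add Prometheus to Grafana as a data source, then import the following dashboards by their Grafana.com IDs:

- Ceph-Cluster: 2842

- Ceph-OSD (Single): 5336

- Ceph-Pools: 5342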

8. Using ReadWriteMany

This requires creating a CephFS filesystem through Rook.

# CephFilesystem definition
apiVersion: ceph.rook.io/v1
kind: CephFilesystem
metadata:
  name: myfs
  namespace: rook-ceph
spec:
  metadataPool:
    replicated:
      size: 3
  dataPools:
    - replicated:
        size: 3
  preserveFilesystemOnDelete: true
  metadataServer:
    activeCount: 1
    activeStandby: true
---
# StorageClass for CephFS
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-cephfs
provisioner: rook-ceph.cephfs.csi.ceph.com
parameters:
  clusterID: rook-ceph
  fsName: myfs
  pool: myfs-data0
  csi.storage.k8s.io/provisioner-secret-name: rook-csi-cephfs-provisioner
  csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
  # needed for allowVolumeExpansion to work
  csi.storage.k8s.io/controller-expand-secret-name: rook-csi-cephfs-provisioner
  csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph
  csi.storage.k8s.io/node-stage-secret-name: rook-csi-cephfs-node
  csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph
allowVolumeExpansion: true
reclaimPolicy: Delete
volumeBindingMode: Immediate

kubectl get cephfilesystem -n rook-ceph

NAME ACTIVEMDS AGE PHASE

myfs 1 97s Ready

k get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

rook-ceph-block rook-ceph.rbd.csi.ceph.com Delete Immediate true 136m

rook-cephfs rook-ceph.cephfs.csi.ceph.com Delete Immediate true 100s

Verify that it works.

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cephfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: rook-cephfs
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: cephfs-test-pod
spec:
  containers:
    - name: test-container
      image: busybox
      command: [ "sleep", "3600" ]
      volumeMounts:
        - name: cephfs-vol
          mountPath: /mnt/mycephfs
  volumes:
    - name: cephfs-vol
      persistentVolumeClaim:
        claimName: cephfs-pvc

k get po

NAME READY STATUS RESTARTS AGE

cephfs-test-pod 1/1 Running 0 57s

prometheus-operator-d858565cd-rl2jm 1/1 Running 0 119m

k get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE

cephfs-pvc Bound pvc-73c9ab95-233c-4486-9ce7-513502c801cd 1Gi RWX rook-cephfs <unset> 59s

k get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE

pvc-73c9ab95-233c-4486-9ce7-513502c801cd 1Gi RWX Delete Bound default/cephfs-pvc rook-cephfs <unset> 60s
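The test above mounts the volume from a single Pod, which shows that the PVC binds with RWX but not that several Pods can write to it at once. A hypothetical follow-up renders a Deployment whose replicas all append their Pod name to one file on the shared volume (cephfs-rwx-writer and writers.log are names made up for this example; no anti-affinity is set, so the Pods may still land on the same node):

```shell
# Hypothetical RWX check: print a Deployment manifest whose replicas all
# mount the same PVC. \$(hostname) is escaped so the container's shell,
# not this function, expands it.
rwx_test_manifest() {
  local claim="${1:-cephfs-pvc}" replicas="${2:-2}"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cephfs-rwx-writer
spec:
  replicas: ${replicas}
  selector:
    matchLabels:
      app: cephfs-rwx-writer
  template:
    metadata:
      labels:
        app: cephfs-rwx-writer
    spec:
      containers:
        - name: writer
          image: busybox
          command: ["sh", "-c", "while true; do echo \$(hostname) >> /mnt/shared/writers.log; sleep 5; done"]
          volumeMounts:
            - name: shared
              mountPath: /mnt/shared
      volumes:
        - name: shared
          persistentVolumeClaim:
            claimName: ${claim}
EOF
}
```

Usage: rwx_test_manifest cephfs-pvc 2 | kubectl apply -f -, wait for both Pods, then kubectl exec deploy/cephfs-rwx-writer -- sort -u /mnt/shared/writers.log should list both Pod names.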

9. Adding a new node

Add the node to cluster.yaml.

vi cluster.yaml

apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: quay.io/ceph/ceph:v18
  dataDirHostPath: /var/lib/rook
  mon:
    count: 3
    allowMultiplePerNode: true
  dashboard:
    enabled: true
  storage:
    useAllNodes: false
    useAllDevices: false
    nodes:
      - name: k8s.node01
        devices:
          - name: "vdb"
      - name: k8s.node02
        devices:
          - name: "vdb"
      - name: k8s.node03
        devices:
          - name: "vdb"
  monitoring:
    enabled: true

k apply -f cluster.yaml
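After applying, the new node should get its own rook-ceph-osd-prepare-<node> Pod that ends in Completed, followed by a new rook-ceph-osd-<index> Pod. A hypothetical awk helper for checking one node; if it prints missing, the operator never created the prepare job, which is the situation described in the next section:

```shell
# Hypothetical check: from `kubectl -n rook-ceph get pods` output on stdin,
# print the STATUS of the osd-prepare pod(s) for node $1, or "missing".
osd_prepare_status() {
  awk -v prefix="rook-ceph-osd-prepare-$1-" '
    index($1, prefix) == 1 { print $3; found = 1 }
    END { if (!found) print "missing" }
  '
}
```

Usage: kubectl -n rook-ceph get pods | osd_prepare_status k8s.node03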

10. If adding a node fails

In our case, this was usually caused by missing ServiceMonitor permissions: reconciling the rook-ceph CephCluster fails, and as a result the OSD prepare Job is never created either.

kubectl -n rook-ceph describe cephcluster rook-ceph

Warning ReconcileFailed 49s (x16 over 9m57s) rook-ceph-cluster-controller failed to reconcile CephCluster "rook-ceph/rook-ceph". failed to reconcile cluster "rook-ceph": failed to configure local ceph cluster: failed to create cluster: failed to start ceph mgr: failed to enable mgr services: failed to enable service monitor: service monitor could not be enabled: failed to retrieve servicemonitor. servicemonitors.monitoring.coreos.com "rook-ceph-mgr" is forbidden: User "system:serviceaccount:rook-ceph:rook-ceph-system" cannot get resource "servicemonitors" in API group "monitoring.coreos.com" in the namespace "rook-ceph"

ubuntu@k8s:~/rook/deploy/examples$ kubectl get pods -n rook-ceph | grep osd-prepare

Granting the operator's service account (rook-ceph-system) access to ServiceMonitors and then forcing a reconcile resolved it:

kubectl apply -f - <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: rook-prometheus-servicemonitor-access
rules:
  - apiGroups: ["monitoring.coreos.com"]
    resources: ["servicemonitors"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: rook-prometheus-servicemonitor-access
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: rook-prometheus-servicemonitor-access
subjects:
  - kind: ServiceAccount
    name: rook-ceph-system
    namespace: rook-ceph
EOF

kubectl annotate cephcluster rook-ceph -n rook-ceph reconcile=now --overwrite
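The ClusterRole above grants access in every namespace. Since the error only concerns the rook-ceph namespace, a narrower alternative is a namespaced Role and RoleBinding. A sketch that renders them (servicemonitor_rbac and rook-servicemonitor-access are hypothetical names; the verbs are copied from the ClusterRole above):

```shell
# Sketch: print a namespaced Role + RoleBinding giving the operator's
# service account the same ServiceMonitor verbs, only in namespace $1.
servicemonitor_rbac() {
  local ns="${1:-rook-ceph}"
  cat <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: rook-servicemonitor-access
  namespace: ${ns}
rules:
  - apiGroups: ["monitoring.coreos.com"]
    resources: ["servicemonitors"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: rook-servicemonitor-access
  namespace: ${ns}
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rook-servicemonitor-access
subjects:
  - kind: ServiceAccount
    name: rook-ceph-system
    namespace: ${ns}
EOF
}
```

Usage: servicemonitor_rbac | kubectl apply -f -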