kubernetes + prometheus + grafana -> metric

└ elasticsearch + kibana -> log

└ IaC -> *.yaml

CI/CD -> jenkins, ArgoCD

public cloud -> AWS, GCP

Certifications

CKA, AWS (SAA), Engineer Information Processing (정보처리기사)

Multi Container service for MSA(microservice architecture)

docker-compose

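: an orchestration tool provided by Docker (its features let you run the set of services you want)

: docker swarm / kubernetes

It needs a docker-compose.yaml file to run. (The extension can be .yaml or .yml.)

Indentation is strict, so an online indentation checker helps. (indentation check site 1, indentation check site 2)

: used to run MSA services.

The commands are similar to the docker CLI.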
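Since indentation is where a compose file most often goes wrong, a rough local check can stand in for the online checkers. The following is a minimal sketch using only the Python standard library. The function name `check_indentation` and the two-space step are assumptions for illustration: YAML forbids tabs for indentation, but the two-space step is only a common convention, not a rule of the format.

```python
def check_indentation(text, step=2):
    """Return (line_number, message) pairs for suspicious indentation."""
    problems = []
    for number, line in enumerate(text.splitlines(), start=1):
        if not line.strip() or line.lstrip().startswith("#"):
            continue  # blank lines and comments carry no structure
        indent = line[: len(line) - len(line.lstrip())]
        if "\t" in indent:
            # YAML does not allow tab characters for indentation
            problems.append((number, "tab used for indentation"))
        elif len(indent) % step != 0:
            # assumption: the file is written with a fixed indent step
            problems.append((number, f"indent of {len(indent)} is not a multiple of {step}"))
    return problems


# Hypothetical broken snippet: line 3 has three spaces, line 4 starts with a tab.
sample = "services:\n  mydb:\n   image: mariadb:10.4.6\n\tports:\n"
for number, message in check_indentation(sample):
    print(f"line {number}: {message}")
```

This only catches mechanical mistakes. It does not parse YAML, so a file that passes can still be structurally wrong.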

Installation

kevin@hostos1:~$ DOCKER_CONFIG=${DOCKER_CONFIG:-$HOME/.docker}

kevin@hostos1:~$ mkdir -p $DOCKER_CONFIG/cli-plugins

kevin@hostos1:~$ curl -SL https://github.com/docker/compose/releases/download/v2.11.1/docker-compose-linux-x86_64 -o $DOCKER_CONFIG/cli-plugins/docker-compose

kevin@hostos1:~$ chmod +x $DOCKER_CONFIG/cli-plugins/docker-compose

kevin@hostos1:~$ docker compose version

Docker Compose version v2.11.1

docker restart : no (default)

kubernetes restart : always (default)

Lab 1

kevin@hostos1:~$ cd LABs/

kevin@hostos1:~/LABs$ mkdir mydb && cd $_

## Write the YAML code

kevin@hostos1:~/LABs/mydb$ vi docker-compose.yaml

version: '3.3'
services:
  mydb:
    image: mariadb:10.4.6
    container_name: mariadb
    restart: always
    volumes:
      - /home/kevin/my_db:/var/lib/mysql
    ports:
      - '3306:3306'
    environment:
      MYSQL_ROOT_PASSWORD: pass123#
      MYSQL_DATABASE: myprod

## Run the container service

kevin@hostos1:~/LABs/mydb$ docker compose up

Lab 2

Convert an environment that couples an application with a database into containers

kevin@hostos1:~/LABs$ mkdir my_wp && cd $_

## Write the YAML code

kevin@hostos1:~/LABs/my_wp$ vi docker-compose.yml

version: "3.9"
services:
  mydb:
    image: mysql:8.0
    container_name: mysql_app
    volumes:
      - mydb_data:/var/lib/mysql
    restart: always
    networks:
      - backend-net
    ports:
      - "3306:3306"
    environment:
      MYSQL_ROOT_PASSWORD: wordpress
      MYSQL_DATABASE: wordpress
      MYSQL_USER: wordpress
      MYSQL_PASSWORD: wordpress
  myweb:
    depends_on:
      - mydb
    image: wordpress:latest
    container_name: wordpress_app
    networks:
      - frontend-net
      - backend-net
    ports:
      - "8888:80"
    volumes:
      - myweb_data:/var/www/html
      - ${PWD}/myweb-log:/var/log
    restart: always
    environment:
      WORDPRESS_DB_HOST: mydb:3306
      WORDPRESS_DB_USER: wordpress
      WORDPRESS_DB_PASSWORD: wordpress
      WORDPRESS_DB_NAME: wordpress
networks:
  frontend-net: {}
  backend-net: {}
volumes:
  mydb_data: {}
  myweb_data: {}

## Run the container service

kevin@hostos1:~/LABs/my_wp$ docker-compose up

Lab 3

Build a 3-tier container MSA: convert an npm-based Node.js application

kevin@hostos1:~/LABs$ mkdir 3tier

kevin@hostos1:~/LABs$ cd 3tier/

## Write the YAML code

kevin@hostos1:~/LABs/3tier$ vi docker-compose.yml

version: "3.6"
services:
  mongodb:
    image: mongo:4
    restart: always
    networks:
      - devapp-net
    ports:
      - "17017:27017"
  backend:
    image: leecloudo/guestbook:backend_1.0
    restart: always
    networks:
      - devapp-net
    environment:
      PORT: 8000
      GUESTBOOK_DB_ADDR: mongodb:27017
  frontend:
    image: leecloudo/guestbook:frontend_1.0
    networks:
      - devapp-net
    ports:
      - "3000:8000"
    restart: always
    environment:
      PORT: 8000
      GUESTBOOK_API_ADDR: backend:8000
networks:
  devapp-net: {}

## Run the container service

kevin@hostos1:~/LABs/3tier$ docker-compose up -d

## List the running container services

kevin@hostos1:~/LABs/3tier$ docker-compose ps

Lab 4

kevin@hostos1:~/LABs$ git clone https://github.com/brayanlee/cloud-webapp.git

kevin@hostos1:~/LABs$ cd cloud-webapp/

kevin@hostos1:~/LABs/cloud-webapp$ docker-compose build --no-cache

Lab (MSA: Nginx Load Balancing)

kevin@hostos1:~/LABs$ mkdir nginx_alb

kevin@hostos1:~/LABs$ cd nginx_alb/

## Write the YAML code

kevin@hostos1:~/LABs/nginx_alb$ vi docker-compose.yml

version: '3'
services:
  pyfla_app1:
    build:
      context: ./pyfla_app1
      dockerfile: Dockerfile-pyapp1
    ports:
      - "5001:5000"
  pyfla_app2:
    build:
      context: ./pyfla_app2
      dockerfile: Dockerfile-pyapp2
    ports:
      - "5002:5000"
  pyfla_app3:
    build:
      context: ./pyfla_app3
      dockerfile: Dockerfile-pyapp3
    ports:
      - "5003:5000"
  nginx:
    build:
      context: ./nginx_alb
      dockerfile: Dockerfile-nginx
    ports:
      - "8080:80"
    depends_on:
      - pyfla_app1
      - pyfla_app2
      - pyfla_app3

## Create the directory

kevin@hostos1:~/LABs/nginx_alb$ mkdir nginx_alb

kevin@hostos1:~/LABs/nginx_alb$ cd nginx_alb/

## Write the Dockerfile

kevin@hostos1:~/LABs/nginx_alb/nginx_alb$ vi Dockerfile-nginx

FROM nginx:1.21

RUN rm /etc/nginx/conf.d/default.conf

COPY nginx.conf /etc/nginx/conf.d/default.conf

## Write the conf file

kevin@hostos1:~/LABs/nginx_alb/nginx_alb$ vi nginx.conf

upstream web-alb {
    server 172.17.0.1:5001;
    server 172.17.0.1:5002;
    server 172.17.0.1:5003;
}

server {
    location / {
        proxy_pass http://web-alb;
    }
}
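The upstream block names no balancing method, so nginx uses its default, round-robin: requests are handed to the listed servers in turn. As a rough illustration of that rotation only (not of nginx internals such as weights or failure handling), here is a minimal Python sketch. The helper name `round_robin` is hypothetical; the addresses are the ones from the conf file.

```python
from itertools import cycle

# Backend addresses copied from the upstream block in nginx.conf.
BACKENDS = ["172.17.0.1:5001", "172.17.0.1:5002", "172.17.0.1:5003"]


def round_robin(backends):
    """Return an endless iterator that visits the backends in order."""
    return cycle(backends)


picker = round_robin(BACKENDS)
for request_id in range(1, 7):
    # each simulated request goes to the next backend in the rotation
    print(f"request {request_id} -> {next(picker)}")
```

With three backends, request 4 lands on the first backend again. Repeated requests to port 8080 in the lab should likewise show the server number in the response cycling through the three apps.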

## Create the directory

kevin@hostos1:~/LABs/nginx_alb$ mkdir pyfla_app1

kevin@hostos1:~/LABs/nginx_alb$ cd pyfla_app1/

## Write the Dockerfile

kevin@hostos1:~/LABs/nginx_alb/pyfla_app1$ vi Dockerfile-pyapp1

FROM python:3

COPY ./requirements.txt /requirements.txt

WORKDIR /

RUN pip install -r requirements.txt

COPY . /

CMD ["python3", "pyfla_app1.py"]

## Write the py file

kevin@hostos1:~/LABs/nginx_alb/pyfla_app1$ vi pyfla_app1.py

from flask import request, Flask
import json

app1 = Flask(__name__)

@app1.route('/')
def hello_world():
    return 'Nginx Web Application Load Balancing Server [1]' + '\n'

if __name__ == '__main__':
    app1.run(debug=True, host='0.0.0.0')

## Write the requirements file

kevin@hostos1:~/LABs/nginx_alb/pyfla_app1$ vi requirements.txt

Flask==2.1.0

## Create the same files for pyfla_app2 and pyfla_app3

kevin@hostos1:~/LABs/nginx_alb$ mkdir pyfla_app2

kevin@hostos1:~/LABs/nginx_alb$ mkdir pyfla_app3

## Check the directory structure

kevin@hostos1:~/LABs/nginx_alb$ tree

.
├── docker-compose.yml
├── nginx_alb
│   ├── Dockerfile-nginx
│   └── nginx.conf
├── pyfla_app1
│   ├── Dockerfile-pyapp1
│   ├── pyfla_app1.py
│   └── requirements.txt
├── pyfla_app2
│   ├── Dockerfile-pyapp2
│   ├── pyfla_app2.py
│   └── requirements.txt
└── pyfla_app3
    ├── Dockerfile-pyapp3
    ├── pyfla_app3.py
    └── requirements.txt

## Run the container service

kevin@hostos1:~/LABs/nginx_alb$ docker-compose up

Docker swarm cluster setup

Raft -> manager (leader) election

- manager node -> manages the cluster; can also run services

- worker node -> runs services

- container service visualization

- swarmpit monitoring tool

=> Built here for comparison with kubernetes.
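Raft only makes progress while a majority (quorum) of manager nodes can talk to each other, which is why manager counts are usually odd. The arithmetic is small enough to sketch. The function names below are illustrative, not part of any Docker API.

```python
def quorum(managers):
    """Smallest number of managers that forms a majority."""
    return managers // 2 + 1


def tolerated_failures(managers):
    """How many managers can be lost while a majority still remains."""
    return (managers - 1) // 2


for n in (1, 3, 5, 7):
    print(f"{n} managers: quorum={quorum(n)}, tolerated failures={tolerated_failures(n)}")
```

The lab below runs a single manager, so by this arithmetic it tolerates no manager failure. Going from 3 to 4 managers raises the quorum without raising the number of tolerated failures.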

Lab

Initialization

docker swarm init -> join key -> connect the workers

kubeadm init -> kubeadm join key -> connect the workers

## Check whether swarm is active

kevin@swarm-manager:~$ docker info | grep -i swarm

Swarm: inactive

Name: swarm-manager

## Initialize the swarm

kevin@swarm-manager:~$ docker swarm init --advertise-addr 192.168.56.101

Swarm initialized: current node (fod6u2ah9np0yo85k2r1zmnr2) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-50v665nbjgt7ut4l8gqqfyp8az14axcl7fom8uzlqn6vfbrocj-dr0mrzwekwe04mkfg55zau8xv 192.168.56.101:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

## Disable the firewall

kevin@swarm-manager:~$ sudo ufw disable

## Run on each worker

docker swarm join --token SWMTKN-1-50v665nbjgt7ut4l8gqqfyp8az14axcl7fom8uzlqn6vfbrocj-dr0mrzwekwe04mkfg55zau8xv 192.168.56.101:2377

kevin@swarm-manager:~$ docker info | grep -i swarm

Swarm: active

Name: swarm-manager

kevin@swarm-manager:~$ docker node ls

ID                            HOSTNAME        STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
fod6u2ah9np0yo85k2r1zmnr2 *   swarm-manager   Ready     Active         Leader           20.10.18
ltfcbr3oorule2ri7g793w6du     swarm-worker1   Ready     Active                          20.10.18
9yq9grtj2q8vmya6fcfziv2c2     swarm-worker2   Ready     Active                          20.10.18

## Check the open ports

kevin@swarm-manager:~$ sudo netstat -nlp | grep dockerd

tcp6 0 0 :::7946 :::* LISTEN 922/dockerd

tcp6 0 0 :::2377 :::* LISTEN 922/dockerd

udp6 0 0 :::7946 :::* 922/dockerd

...

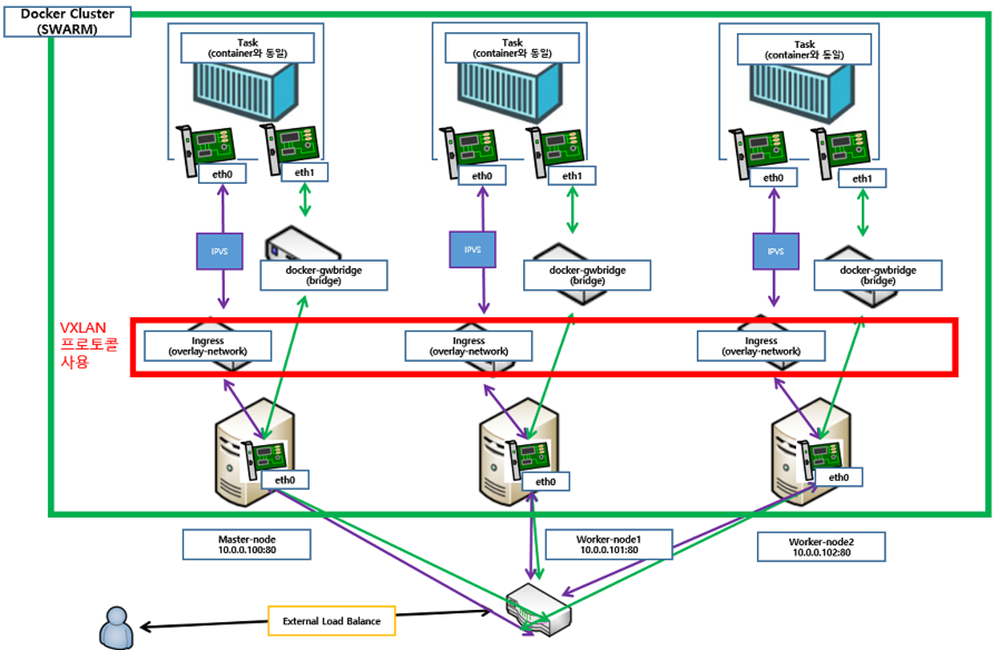

kevin@swarm-manager:~$ docker network ls

NETWORK ID NAME DRIVER SCOPE

f6dcc52e720e docker_gwbridge bridge local

s54mwpx0izi7 ingress overlay swarm

...

ingress (overlay) is used between different hosts.

docker_gwbridge (bridge) is used inside a single host.

kevin@swarm-manager:~$ docker service create \

> --name=viz_swarm \

> --publish=8082:8080 \

> --constraint=node.role==manager \

> --mount=type=bind,src=/var/run/docker.sock,dst=/var/run/docker.sock \

> dockersamples/visualizer

## Open http://192.168.56.101:8082/ to check

kevin@swarm-manager:~$ docker run -it --restart=always --name=swarmpit-installer -v /var/run/docker.sock:/var/run/docker.sock swarmpit/install:1.9

## Application setup

Enter stack name [swarmpit]: swarmpit

Enter application port [888]: 888

Enter database volume driver [local]: local

Enter admin username [admin]: admin

Enter admin password (min 8 characters long): pass123#

kevin@swarm-manager:~$ docker stack ls

NAME SERVICES ORCHESTRATOR

swarmpit 4 Swarm

kevin@swarm-manager:~$ docker stack rm swarmpit

kevin@swarm-manager:~$ docker service create \

> ubuntu:14.04 \

> /bin/bash -c "while true; do echo 'hello docker-swarm'; sleep 2; done"

kevin@swarm-manager:~$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

l6dch7v39hva viz_swarm replicated 1/1 dockersamples/visualizer:latest *:8082->8080/tcp

nl1oyzx778we xenodochial_jang replicated 1/1 ubuntu:14.04

kevin@swarm-manager:~$ docker service logs -f xenodochial_jang

xenodochial_jang.1.8r9uhuax67zu@swarm-worker1 | hello docker-swarm

xenodochial_jang.1.8r9uhuax67zu@swarm-worker1 | hello docker-swarm

kevin@swarm-manager:~$ docker service rm xenodochial_jang

kevin@swarm-manager:~$ docker service ls

nginx service

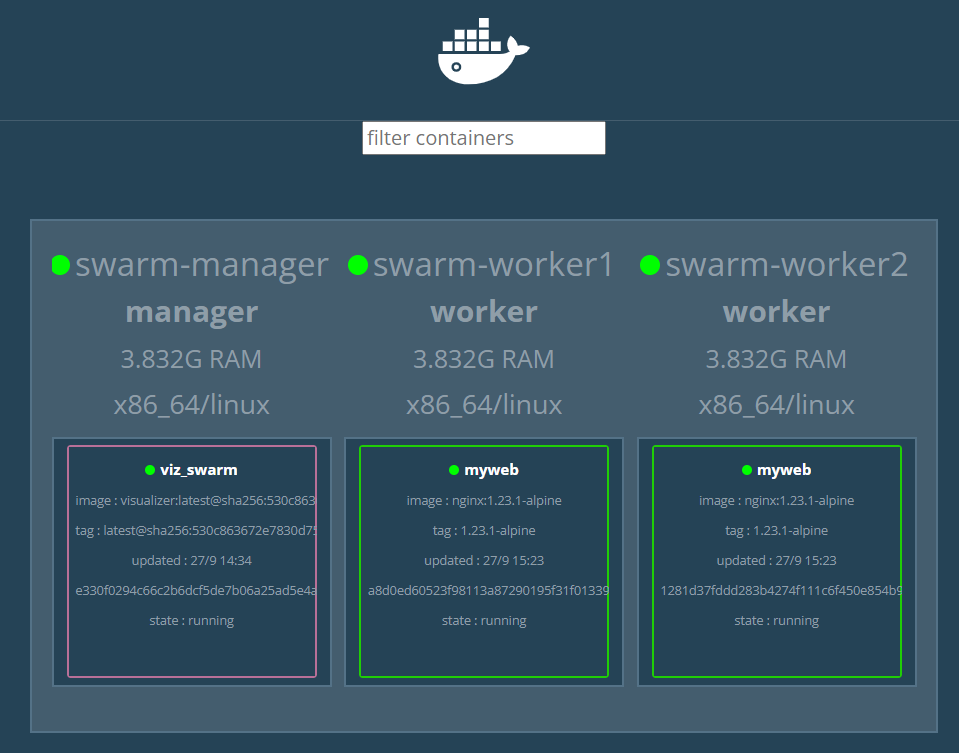

kevin@swarm-manager:~$ docker service create --name myweb \

> --replicas 2 -p 10001:80 nginx:1.23.1-alpine

# You can see that two replicas are up.

kevin@swarm-manager:~$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

x3wha435qn6k myweb replicated 2/2 nginx:1.23.1-alpine *:10001->80/tcp

kevin@swarm-manager:~$ docker service ps myweb

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

3qw6pidrijyc myweb.1 nginx:1.23.1-alpine swarm-worker1 Running Running about a minute ago

npgdg00qpq08 myweb.2 nginx:1.23.1-alpine swarm-worker2 Running Running about a minute ago

## The service can be reached through any node's address.

## http://192.168.56.101:10001/

## http://192.168.56.102:10001/

## http://192.168.56.103:10001/

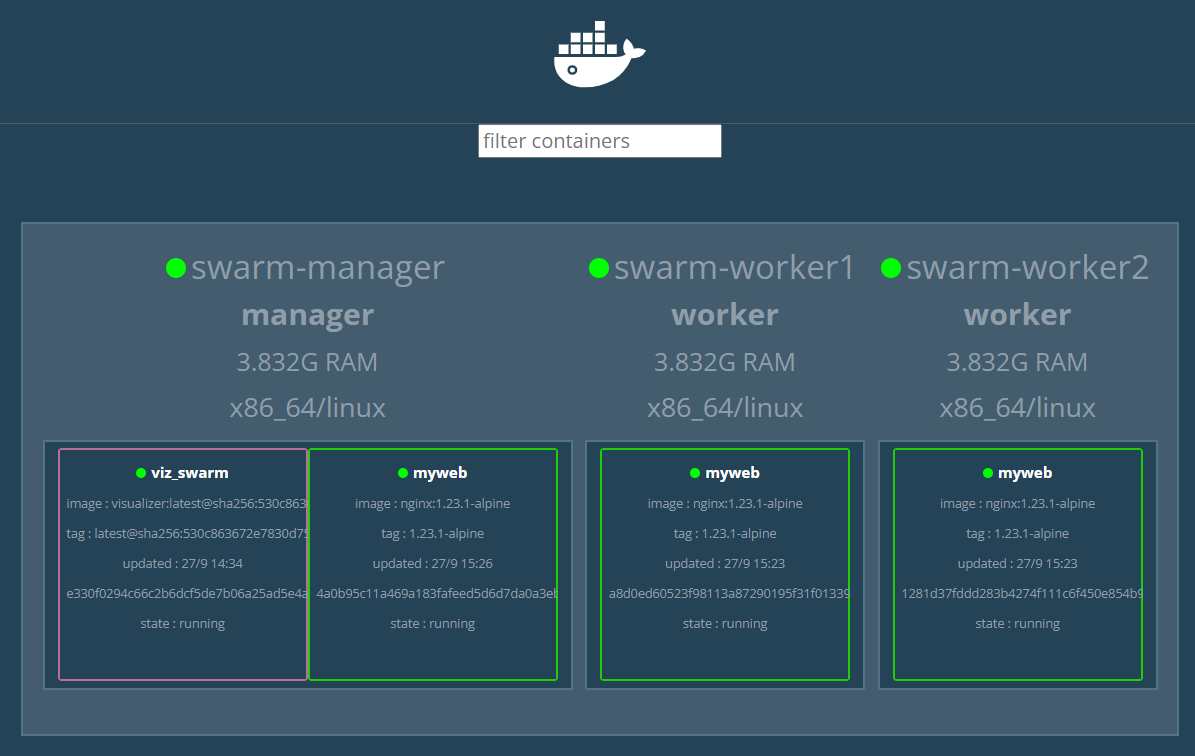

## Scale out to 3 replicas.

kevin@swarm-manager:~$ docker service scale myweb=3

kevin@swarm-manager:~$ docker service scale myweb=6

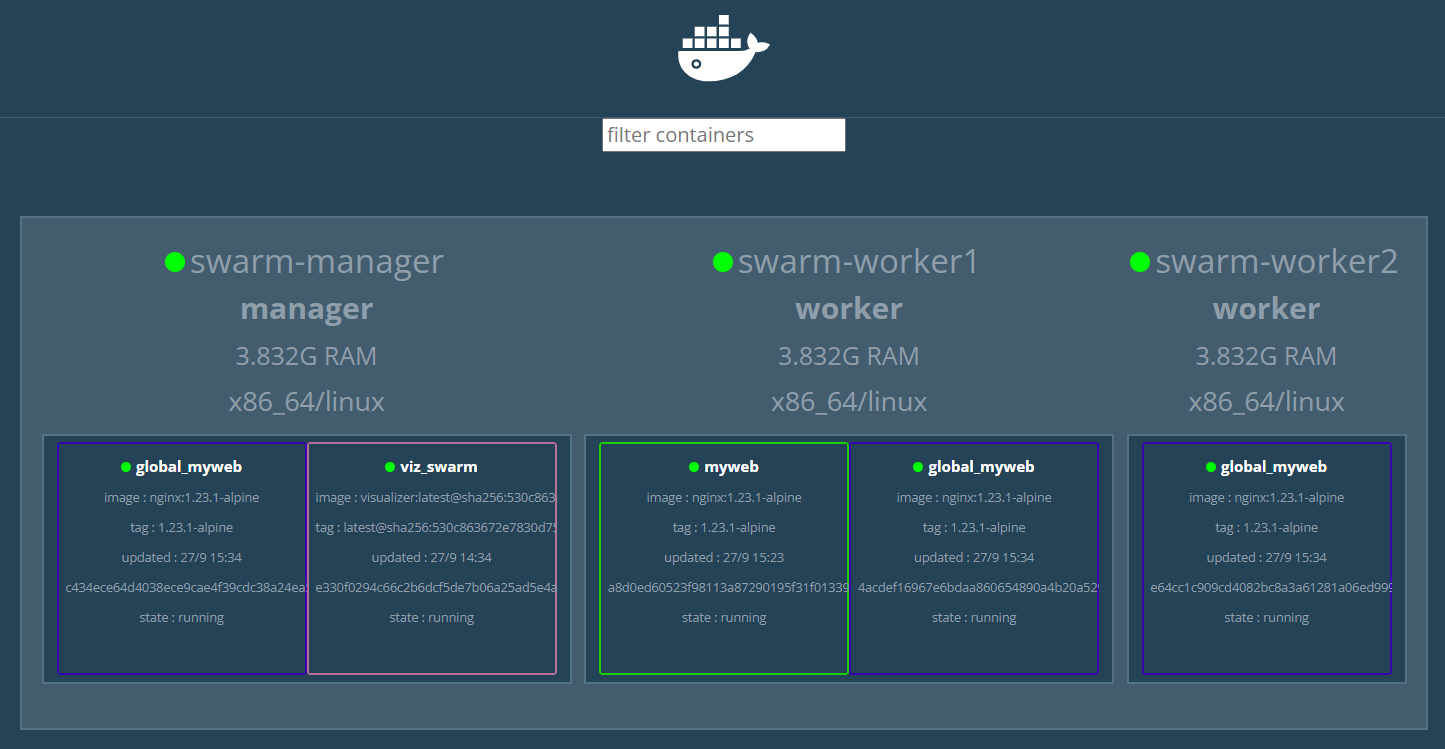

daemon set

: used when something must run on every node, for example to monitor all nodes. In swarm this is a service in global mode.

kevin@swarm-manager:~$ docker service create --name global_myweb --mode global nginx:1.23.1-alpine

kevin@swarm-manager:~$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

yzqfgs9szbu2 global_myweb global 3/3 nginx:1.23.1-alpine

...

kevin@swarm-manager:~$ docker service ps global_myweb

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

eo0bam5omns1 global_myweb.9yq9grtj2q8vmya6fcfziv2c2 nginx:1.23.1-alpine swarm-worker2 Running Running about a minute ago

5msiaqwj3mgm global_myweb.fod6u2ah9np0yo85k2r1zmnr2 nginx:1.23.1-alpine swarm-manager Running Running about a minute ago

sq62ilwk71s2 global_myweb.ltfcbr3oorule2ri7g793w6du nginx:1.23.1-alpine swarm-worker1 Running Running about a minute ago
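The difference between the two service modes comes down to how tasks are assigned to nodes. The sketch below is a deliberately simplified model: the node list matches this lab, but the function names are hypothetical, and the plain rotation in `place_replicated` is an assumption standing in for the real scheduler, which also weighs existing load, constraints and resources.

```python
# Node names from this lab's cluster; the ordering is arbitrary.
NODES = ["swarm-worker1", "swarm-worker2", "swarm-manager"]


def place_global(nodes):
    """Global mode: exactly one task on every node."""
    return {node: 1 for node in nodes}


def place_replicated(nodes, replicas):
    """Replicated mode: spread a fixed number of tasks over the nodes.

    Assumption: a simple rotation, not the actual Swarm scheduler.
    """
    placement = {node: 0 for node in nodes}
    for i in range(replicas):
        placement[nodes[i % len(nodes)]] += 1
    return placement


print("global:      ", place_global(NODES))
print("replicated=2:", place_replicated(NODES, 2))
print("replicated=6:", place_replicated(NODES, 6))
```

In global mode the task count follows the node count, so there is no replica number to scale. In replicated mode the count is whatever was requested, regardless of how many nodes exist.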

kevin@swarm-manager:~$ docker service scale myweb=3

kevin@swarm-manager:~$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

yzqfgs9szbu2 global_myweb global 3/3 nginx:1.23.1-alpine

x3wha435qn6k myweb replicated 3/3 nginx:1.23.1-alpine *:10001->80/tcp

l6dch7v39hva viz_swarm replicated 1/1 dockersamples/visualizer:latest *:8082->8080/tcp

kevin@swarm-manager:~$ docker service ps myweb

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

3qw6pidrijyc myweb.1 nginx:1.23.1-alpine swarm-worker1 Running Running 14 minutes ago

8egfj8adgmz8 myweb.2 nginx:1.23.1-alpine swarm-worker2 Running Running 22 seconds ago

jkqndu8ue491 myweb.3 nginx:1.23.1-alpine swarm-manager Running Running 22 seconds ago

## Even if a task's container is force-removed, a replacement is created right away.

kevin@swarm-manager:~$ docker rm -f myweb.3.jkqndu8ue491wlfy96bc3r89x

kevin@swarm-manager:~$ docker service ps myweb

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

3qw6pidrijyc myweb.1 nginx:1.23.1-alpine swarm-worker1 Running Running 16 minutes ago

8egfj8adgmz8 myweb.2 nginx:1.23.1-alpine swarm-worker2 Running Running 2 minutes ago

ez5139e0rnwi myweb.3 nginx:1.23.1-alpine swarm-manager Running Running 12 seconds ago

jkqndu8ue491 \_ myweb.3 nginx:1.23.1-alpine swarm-manager Shutdown Failed 18 seconds ago "task: non-zero exit (137)"
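The replacement task appears because the orchestrator keeps comparing the desired replica count with what is actually running and starts new tasks to close the gap. A minimal sketch of that idea follows. The `reconcile` function and the task naming are hypothetical and only model the counting, not Swarm's real task lifecycle.

```python
from itertools import count


def reconcile(desired, running, next_id):
    """Start replacement tasks until the running count matches the desired count."""
    tasks = list(running)
    while len(tasks) < desired:
        tasks.append(f"myweb-task-{next(next_id)}")
    return tasks


ids = count(4)  # hypothetical task numbering, used only in this sketch
running = ["myweb-task-1", "myweb-task-2", "myweb-task-3"]

running.remove("myweb-task-3")        # simulate force-removing one task's container
running = reconcile(3, running, ids)  # the orchestrator restores the desired count
print(running)
```

The removed task is not revived. A new one takes its slot, which matches the `docker service ps` output above, where the old task is listed as failed under its replacement.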

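## To spread tasks evenly across the nodes again, scale down to 1 and back up to 3, or force a redistribution with docker service update --force myweb.

Runs on every node (daemon set)

Version update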

kevin@swarm-manager:~$ docker service create --name myweb2 --replicas 3 nginx:1.10

kevin@swarm-manager:~$ docker service ls

kevin@swarm-manager:~$ docker service ps myweb2

## The dev team has requested an image version update.

## Rolling update with no downtime

kevin@swarm-manager:~$ docker service update --image nginx:1.23.1-alpine myweb2

## Tasks are replaced one at a time: one goes down and its replacement comes up.
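The one-at-a-time replacement can be modelled as updating the task list in batches. The sketch below assumes a batch size (`parallelism`) of 1, which is the default for `docker service update`. The function `rolling_update` is hypothetical and ignores delays, health checks and rollback.

```python
def rolling_update(tasks, new_image, parallelism=1):
    """Replace task images in batches, yielding the state after each batch."""
    tasks = list(tasks)
    for start in range(0, len(tasks), parallelism):
        for i in range(start, min(start + parallelism, len(tasks))):
            tasks[i] = new_image  # old task stops, new task starts with the new image
        yield list(tasks)


for state in rolling_update(["nginx:1.10"] * 3, "nginx:1.23.1-alpine"):
    print(state)
```

At every intermediate step at least two of the three tasks are still running, which is why the service stays available during the update.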

kevin@swarm-manager:~$ docker service ps myweb2

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

jync4sy4u167 myweb2.1 nginx:1.23.1-alpine swarm-worker2 Running Running 25 seconds ago

7qjls4vc6884 \_ myweb2.1 nginx:1.10 swarm-worker2 Shutdown Shutdown 27 seconds ago

vuuhm50rv0ms myweb2.2 nginx:1.23.1-alpine swarm-manager Running Running 22 seconds ago

z25d8bmx0un9 \_ myweb2.2 nginx:1.10 swarm-worker1 Shutdown Shutdown 24 seconds ago

tyiaqqxs8ety \_ myweb2.2 nginx:1.10 swarm-manager Shutdown Rejected 2 minutes ago "No such image: nginx:1.10"

l1r18gz3ueks \_ myweb2.2 nginx:1.10 swarm-manager Shutdown Rejected 2 minutes ago "No such image: nginx:1.10"

quc0h0gcyh34 \_ myweb2.2 nginx:1.10 swarm-manager Shutdown Rejected 2 minutes ago "No such image: nginx:1.10"

0htld4v7rj6y myweb2.3 nginx:1.23.1-alpine swarm-worker1 Running Running 19 seconds ago

lk3un6k8pzpv \_ myweb2.3 nginx:1.10 swarm-worker1 Shutdown Shutdown 21 seconds ago

Web service and load balancing with Docker swarm + HAproxy + Nginx

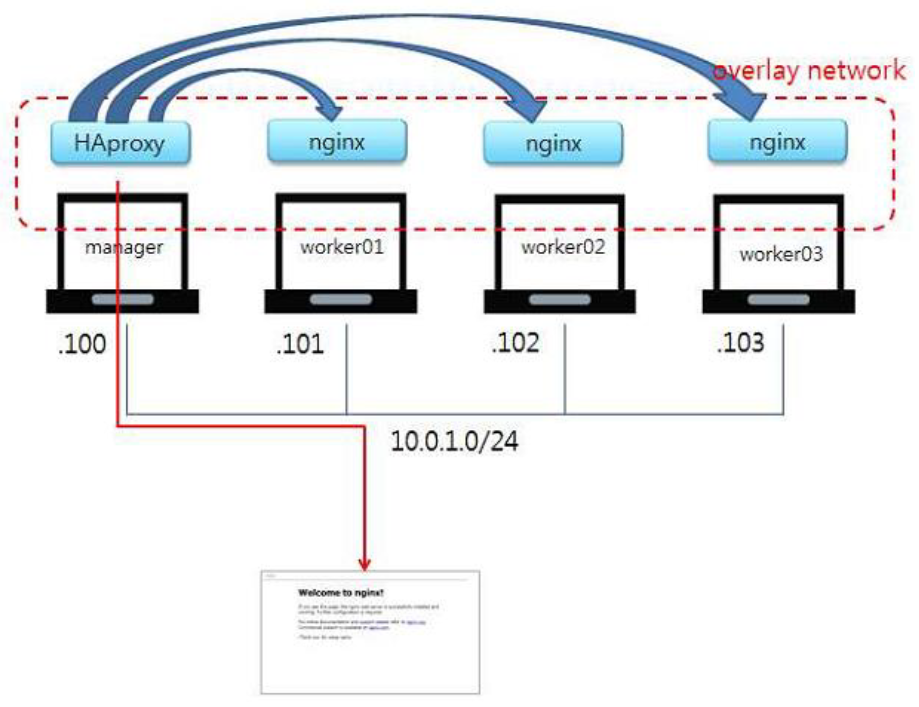

: haproxy is installed on the swarm-manager; when web requests arrive at that IP, it distributes the traffic across the nginx containers on the same overlay network.

kevin@swarm-manager:~/LABs$ mkdir haproxy-nginx

kevin@swarm-manager:~/LABs$ cd haproxy-nginx/

kevin@swarm-manager:~/LABs/haproxy-nginx$ docker network create --driver=overlay --attachable haproxy-web

kevin@swarm-manager:~/LABs/haproxy-nginx$ docker network ls

NETWORK ID NAME DRIVER SCOPE

1u2d04eoj087 haproxy-web overlay swarm

kevin@swarm-manager:~/LABs/haproxy-nginx$ vi haproxy-web.yaml

version: '3'
services:
  nginx:
    image: nginx:1.23.1-alpine
    deploy:
      replicas: 4
      placement:
        constraints: [node.role != manager]
      restart_policy:
        condition: on-failure
        max_attempts: 3
    environment:
      SERVICE_PORTS: 80
    networks:
      - haproxy-web
  proxy:
    image: dbgurum/haproxy:1.0
    depends_on:
      - nginx
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    ports:
      - 80:80
    networks:
      - haproxy-web
    deploy:
      mode: global
      placement:
        constraints: [node.role == manager]
networks:
  haproxy-web:
    external: true

## Deploy the docker stack

kevin@swarm-manager:~/LABs/haproxy-nginx$ docker stack deploy --compose-file=haproxy-web.yaml haproxy-web

kevin@swarm-manager:~/LABs/haproxy-nginx$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

yzqfgs9szbu2 global_myweb global 3/3 nginx:1.23.1-alpine

stef2qxns7ut haproxy-web_nginx replicated 4/4 nginx:1.23.1-alpine

y5ajo1aw3ufo haproxy-web_proxy global 0/1 dbgurum/haproxy:1.0 *:80->80/tcp

x3wha435qn6k myweb replicated 3/3 nginx:1.23.1-alpine *:10001->80/tcp

krs84azszmn6 myweb2 replicated 3/3 nginx:1.23.1-alpine

l6dch7v39hva viz_swarm replicated 1/1 dockersamples/visualizer:latest *:8082->8080/tcp

kevin@swarm-manager:~/LABs/haproxy-nginx$ docker stack services haproxy-web

ID NAME MODE REPLICAS IMAGE PORTS

stef2qxns7ut haproxy-web_nginx replicated 4/4 nginx:1.23.1-alpine

y5ajo1aw3ufo haproxy-web_proxy global 1/1 dbgurum/haproxy:1.0 *:80->80/tcp

## Open http://192.168.56.101/ to check

## Check the logs of the created service

kevin@swarm-manager:~/LABs/haproxy-nginx$ docker service logs -f haproxy-web_nginx

haproxy-web_nginx.4.z9xxg2767031@swarm-worker1 | 10.0.2.10 - - [27/Sep/2022:07:25:07 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36" "10.0.0.2"

haproxy-web_nginx.1.fyo88vbn6e4i@swarm-worker2 | 10.0.2.10 - - [27/Sep/2022:07:25:09 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36" "10.0.0.2"