Synchronizing Data Between MySQL and Elasticsearch

There are two ways to do this.

- Write to Elasticsearch directly every time a CRUD operation happens in MySQL

- Use Logstash to detect changes in MySQL and update Elasticsearch

For several reasons, the latter seems more reasonable.

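Implementation

How it works

- Flow

  - Install the JDBC input plugin in Logstash → the plugin polls MySQL periodically → changes made in MySQL since the last poll are detected and written to Elasticsearch

- Requirements

1. When a MySQL record is written to Elasticsearch as a document, the ES _id field must be set to the MySQL id.

2. Every MySQL record must have a field holding its insert or update time, refreshed whenever the record is inserted or updated. Polling relies on this field.

3. To reflect MySQL deletions in ES, records need an is_deleted field (a soft delete), because a row that is physically removed can no longer be picked up by polling.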
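To make the flow and the three requirements concrete, here is a minimal Python sketch that simulates the polling cycle in memory. It is not Logstash code: the `Row` class, the `poll` and `sync` functions, and the sample movies are all hypothetical, timestamps are plain integers standing in for unix seconds, and a dict stands in for the ES index.

```python
from dataclasses import dataclass


@dataclass
class Row:
    """A hypothetical MySQL row carrying the fields the requirements call for."""
    id: int
    title: str
    updated_at: int           # unix seconds, refreshed on every insert or update
    is_deleted: bool = False  # soft-delete flag instead of a physical DELETE


def poll(table, last_value, now):
    """Return rows changed after last_value but strictly before now, oldest first."""
    changed = [row for row in table if last_value < row.updated_at < now]
    return sorted(changed, key=lambda row: row.updated_at)


def sync(table, index, last_value, now):
    """Run one polling cycle against the index (a dict keyed by _id).

    Returns the new tracking value to use for the next cycle.
    """
    for row in poll(table, last_value, now):
        # Requirement 1: _id is the MySQL id, so re-sending a row overwrites it.
        index[row.id] = {"title": row.title, "is_deleted": row.is_deleted}
        # Requirement 2: remember the newest timestamp that has been shipped.
        last_value = row.updated_at
    return last_value


table = [Row(1, "Alien", 100), Row(2, "Heat", 105)]
index = {}
last = sync(table, index, last_value=0, now=110)

# After the first poll, one row is updated and one is soft-deleted in MySQL.
table[0] = Row(1, "Alien (1979)", 120)
table[1] = Row(2, "Heat", 125, is_deleted=True)
last = sync(table, index, last, now=130)

print(last, index)
```

Because the document key is the MySQL id, the second cycle overwrites the two existing documents instead of creating duplicates, and the soft-deleted row stays in the index with `is_deleted` set so that searches can filter it out.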

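Actual implementation

- My environment and things worth knowing in advance

This assumes ES and Kibana are already connected.

I use Docker and Docker Compose.

The JDBC connector (the MySQL driver jar) has to be downloaded manually, but the JDBC input plugin comes bundled with the Logstash image.

Connecting Logstash to ES requires the certificate that was used when connecting ES and Kibana.

I write configuration files such as yml and conf locally and have them copied in when the Docker container is built (you could also enter the container and create the files directly).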

### 1) Pulling the Logstash image, configuring the container, and starting it

    logstash:
      image: docker.elastic.co/logstash/logstash:8.17.1
      container_name: logstash
      build:
        dockerfile: Dockerfile-logstash
      ports:
        - 5044:5044

### 2) Writing the *.conf file

    input {
      jdbc {
        # MySQL Connector/J jar that Dockerfile-logstash copies into the image
        jdbc_driver_library => "/usr/share/logstash/logstash-core/lib/jars/mysql-connector-java-8.0.30.jar"
        jdbc_driver_class => "com.mysql.cj.jdbc.Driver"
        jdbc_connection_string => "jdbc:mysql://mysql:3306/picky?useSSL=false&useUnicode=true&characterEncoding=UTF-8&autoReconnect=true"
        jdbc_user => "username"
        jdbc_password => "password"
        # Remember the last unix_ts_in_secs value and expose it as :sql_last_value
        tracking_column => "unix_ts_in_secs"
        use_column_value => true
        tracking_column_type => "numeric"
        # Poll every 10 seconds (the first of the six fields is seconds)
        schedule => "*/10 * * * * *"
        statement => "SELECT *, UNIX_TIMESTAMP(updated_at) AS unix_ts_in_secs FROM movie WHERE (UNIX_TIMESTAMP(updated_at) > :sql_last_value AND updated_at < NOW()) ORDER BY updated_at ASC"
        last_run_metadata_path => "/usr/share/logstash/.logstash_jdbc_last_run"
      }
    }

    filter {
      mutate {
        # Keep the MySQL id in @metadata so it can become the document _id
        copy => { "id" => "[@metadata][movieId]" }
      }
      date {
        match => ["updated_at", "yyyy-MM-dd HH:mm:ss.SSSSSS"]
        target => "updated_at"
        timezone => "Asia/Seoul"
      }
      mutate {
        # Drop helper fields that should not be indexed
        remove_field => ["id", "@version", "unix_ts_in_secs"]
      }
    }

    output {
      stdout { codec => rubydebug }
      elasticsearch {
        hosts => ["https://es01:9200"]
        ssl_certificate_authorities => ["/usr/share/logstash/config/certs/es01/es01.crt"]
        user => "username"
        password => "password"
        index => "connector-movie"
        document_id => "%{[@metadata][movieId]}"
      }
    }

- This file defines how MySQL and ES are connected; in other words, it is the pipeline configuration.

- Create one configuration file per index.

- The file name does not matter, as long as the extension is .conf.

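- Explanation

  - input

    - Settings for the MySQL side: which JDBC driver to use, the database URL, the user name and password, the schedule that controls how often the query runs, and the query itself.

  - filter

    - Cleans up each record fetched from MySQL before it goes into ES. This part gave me some trouble: the timestamp field that decides whether a record gets updated seemed to have a different format in MySQL than in ES, so I added the match setting to reconcile the two.

    - I set timezone to Seoul. The server, MySQL, and ES should all share the same time zone to avoid errors, so I unified everything to Korea time.

  - output

    - Defines where in ES the cleaned-up data is stored.

    - Sets the location of the certificate needed to talk to ES. If you have read this far you have probably run into it already: Elasticsearch 8 enables security, including TLS, by default, so a default installation cannot be reached without SSL. Many guides say to disable SSL through an xpack option, but those were written for version 7, and in my version 8 setup the option had no effect, so I kept TLS on and configured the certificate instead.

    - index is the name of the index to write the data into.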
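The filter step described above can be pictured as a small function over one row. The following Python sketch only imitates what the mutate and date filters do; it is not how Logstash is implemented. The `transform` function and the sample row are hypothetical, and Asia/Seoul is modeled as a fixed UTC+9 offset (Korea currently observes no daylight saving time) to keep the example free of time zone database dependencies.

```python
from datetime import datetime, timedelta, timezone

# Assumption: Asia/Seoul is treated as a fixed UTC+9 offset.
KST = timezone(timedelta(hours=9))


def transform(row):
    """Imitate the filter block: return (document_id, document) for one row."""
    doc = dict(row)
    # mutate/copy: keep the MySQL id aside so it can be used as the _id.
    doc_id = str(doc["id"])
    # date: read the "yyyy-MM-dd HH:mm:ss.SSSSSS" string as Seoul time
    # and store it as a UTC timestamp.
    local = datetime.strptime(doc["updated_at"], "%Y-%m-%d %H:%M:%S.%f")
    local = local.replace(tzinfo=KST)
    doc["updated_at"] = local.astimezone(timezone.utc).isoformat()
    # mutate/remove_field: drop helper fields that should not be indexed.
    for field in ("id", "@version", "unix_ts_in_secs"):
        doc.pop(field, None)
    return doc_id, doc


row = {
    "id": 7,
    "title": "Parasite",
    "updated_at": "2025-01-15 09:30:00.000000",
    "unix_ts_in_secs": 1736901000,
}
doc_id, doc = transform(row)
print(doc_id, doc)
```

The point of the sketch is the time zone handling: a wall-clock value of 09:30 in Seoul becomes 00:30 UTC in the stored document, which is why the source time zone has to be stated explicitly when parsing.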

### 3) Writing logstash.yml

    api.http.host: "0.0.0.0"
    xpack.monitoring.enabled: true
    xpack.monitoring.elasticsearch.hosts: [ "https://es01:9200" ]
    xpack.monitoring.elasticsearch.ssl.certificate_authority: "/usr/share/logstash/config/certs/es01/es01.crt"
    xpack.monitoring.elasticsearch.username: "username"
    xpack.monitoring.elasticsearch.password: "password"
    path.config: "pipeline"

- These are the settings that go into the file.

- The host, certificate, user name, and password settings are self-explanatory.

- path.config specifies the directory where the *.conf files written above are stored.

### 4) Writing Dockerfile-logstash

    FROM docker.elastic.co/logstash/logstash:8.17.1

    USER root

    # Download MySQL Connector/J into /tmp and copy the jar to the Logstash jars directory
    RUN mkdir -p /usr/share/logstash/logstash-core/lib/jars/ && \
        curl -L -o /tmp/mysql-connector-java-8.0.30.tar.gz https://downloads.mysql.com/archives/get/p/3/file/mysql-connector-java-8.0.30.tar.gz && \
        tar -xzf /tmp/mysql-connector-java-8.0.30.tar.gz -C /tmp && \
        ls -lah /tmp && \
        cp /tmp/mysql-connector-java-8.0.30/mysql-connector-java-8.0.30.jar /usr/share/logstash/logstash-core/lib/jars/ && \
        chown logstash:logstash /usr/share/logstash/logstash-core/lib/jars/mysql-connector-java-8.0.30.jar && \
        chmod 755 /usr/share/logstash/logstash-core/lib/jars/mysql-connector-java-8.0.30.jar && \
        rm -rf /tmp/mysql-connector-java-8.0.30.tar.gz /tmp/mysql-connector-java-8.0.30

    # Prepare the directory that will hold the certificates
    RUN mkdir -p /usr/share/logstash/config/certs/ && \
        chown -R logstash:logstash /usr/share/logstash/config/certs/ && \
        chmod -R 755 /usr/share/logstash/config/certs/

    # Copy settings, pipeline definitions, and certificates from the local machine
    COPY ./logstash/config /usr/share/logstash/config
    COPY ./logstash/pipeline /usr/share/logstash/pipeline
    COPY ./certs /usr/share/logstash/config/certs

    # Fix ownership and permissions of the copied certificates
    RUN chown -R logstash:logstash /usr/share/logstash/config/certs/ && \
        chmod -R 755 /usr/share/logstash/config/certs/

    USER logstash

- A Dockerfile is the build recipe for a container image. For simply running MySQL you do not need one, but you write one when you have to build the project's jar for CI/CD or, as here, when the Logstash container needs some setup before it starts.

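- In brief:

  - The first RUN downloads the JDBC connector, extracts it, and puts the jar in the appropriate place inside the Logstash container (/usr/share/logstash/logstash-core/lib/jars).

  - The second RUN creates a directory inside the container for the certificates and adjusts its permissions.

  - The three COPY lines copy files from the local machine: in order, the Logstash settings file (logstash.yml), the pipeline configuration files (*.conf), and the required certificates (the ones copied to the local machine when ES and Kibana were created).

  - The last RUN fixes the permissions of the copied certificates.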

- Required files and where they live

  - Certificate (.crt): it can live at any path. I created /usr/share/logstash/config/certs to manage it together with the configuration files, but wherever you store it, just make sure the certificate path in the yml and conf files is correct.

  - logstash.yml: the Logstash settings file. I put it in /usr/share/logstash/config.

  - *.conf: these can go anywhere, as long as logstash.yml says which directory to read. The /usr/share/logstash/pipeline directory exists by default, so I stored them there.

Done!

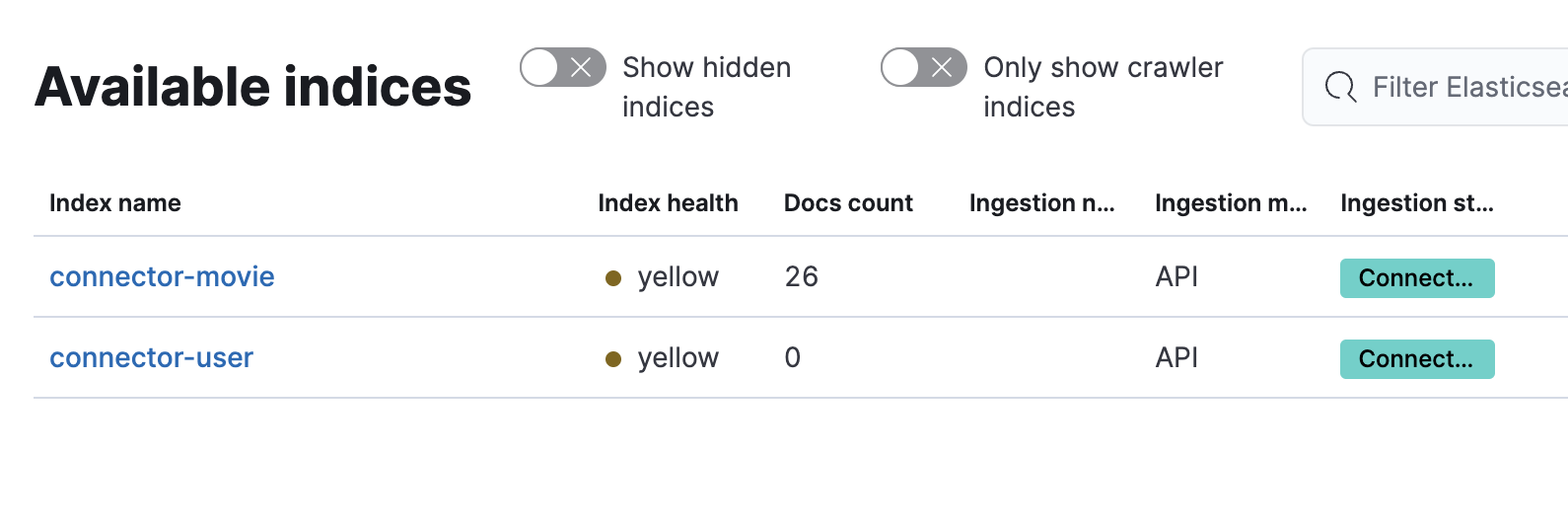

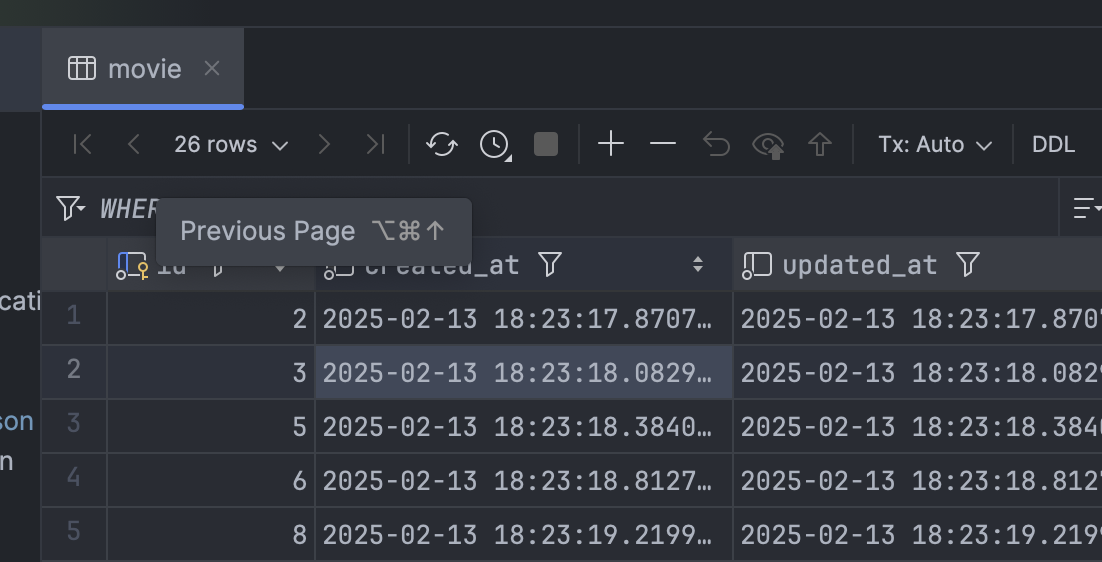

ES and MySQL hold the same number of records (movie).
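One way to repeat this check without opening Kibana is to ask ES for the document count and compare it with the result of `SELECT COUNT(*) FROM movie`. The sketch below uses only the Python standard library and the Elasticsearch `_count` endpoint. The function names are hypothetical, and the host, index, credentials, and certificate path are placeholders to be filled in from your own setup.

```python
import base64
import json
import ssl
import urllib.request


def count_url(host, index):
    """Build the URL of the _count endpoint for one index."""
    return f"{host.rstrip('/')}/{index}/_count"


def es_count(host, index, user, password, ca_file):
    """Return the number of documents in the index, verifying TLS with ca_file."""
    request = urllib.request.Request(count_url(host, index))
    # HTTP basic authentication, the same credentials the Logstash output uses.
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request.add_header("Authorization", f"Basic {token}")
    # Trust the certificate that was copied out when ES was set up.
    context = ssl.create_default_context(cafile=ca_file)
    with urllib.request.urlopen(request, context=context) as response:
        return json.load(response)["count"]
```

Calling `es_count("https://es01:9200", "connector-movie", user, password, ca_file)` from a machine that can reach ES should return the same number as the MySQL query once a polling cycle has completed.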

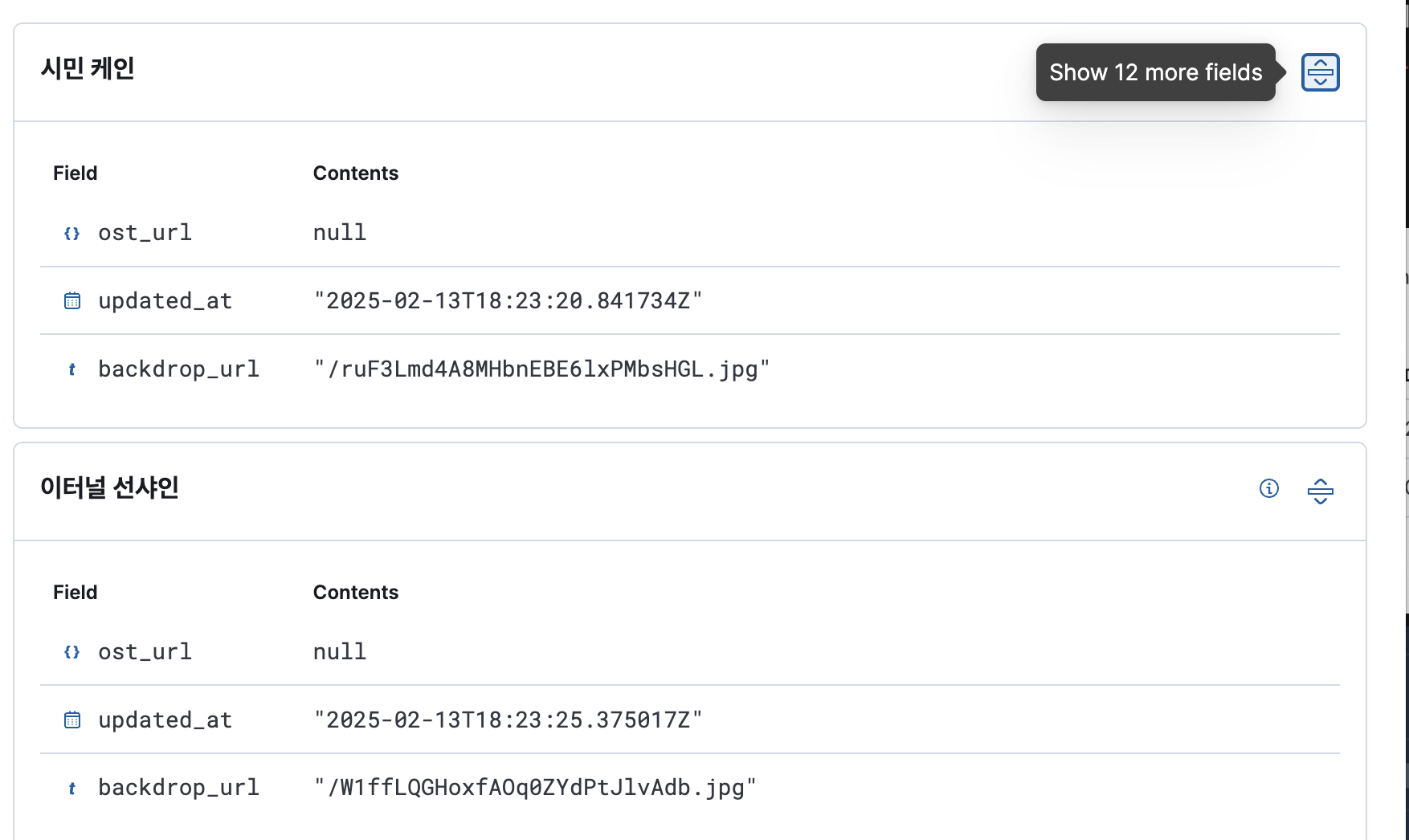

The data I needed came through correctly. Only 3 fields are visible, but expanding a document shows 12 more.

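Retrospective

- I gave up and came back to this several times. It took roughly 12 hours in total. Setting everything up without Docker would have been much faster, but I wanted my teammates to finish all setup by running a single docker-compose up -d, so I pushed through.

  Now, entering just two commands, docker-compose up -d and the one that converts the certificate to jks, sets up everything: MySQL, Redis, Elasticsearch (including indices and plugins), Kibana, and Logstash.

- I ran into a great many small errors. Oddly, most of the material out there is old, and most of it does not use Docker, so troubleshooting took real effort. Even the official Elastic article on connecting through JDBC was written in 2019, so I implemented this while seriously doubting whether I could trust it.

- As I learned when I used it on an earlier project, if you pay for Elasticsearch as a hosted API, the certificates and all sorts of other setup become unnecessary (…)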

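Improvements

- This is less an improvement than something worth trying later. Logstash is not only for synchronizing data between an RDBMS and ES; that is just one of its features. Because it supports many platforms and data formats, it also seems to be widely used for collecting and analyzing logs and other data. If I rebuild the service monitoring environment someday, it would be worth incorporating.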