✍ Deploying the EKS Observability Lab Environment

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# mount -t efs -o tls $EfsFsId:/ /mnt/myefs

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 1.9G 0 1.9G 0% /dev

tmpfs 1.9G 0 1.9G 0% /dev/shm

tmpfs 1.9G 484K 1.9G 1% /run

tmpfs 1.9G 0 1.9G 0% /sys/fs/cgroup

/dev/nvme0n1p1 30G 2.7G 28G 9% /

tmpfs 388M 0 388M 0% /run/user/1000

127.0.0.1:/ 8.0E 0 8.0E 0% /mnt/myefs

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# df -hT --type nfs4

Filesystem Type Size Used Avail Use% Mounted on

127.0.0.1:/ nfs4 8.0E 0 8.0E 0% /mnt/myefs

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

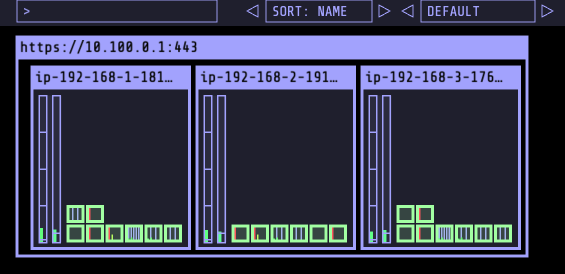

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# kubectl get node --label-columns=node.kubernetes.io/instance-type,eks.amazonaws.com/capacityType,topology.kubernetes.io/zone

NAME STATUS ROLES AGE VERSION INSTANCE-TYPE CAPACITYTYPE ZONE

ip-192-168-1-181.ap-northeast-2.compute.internal Ready <none> 4m41s v1.24.13-eks-0a21954 t3.xlarge ON_DEMAND ap-northeast-2a

ip-192-168-2-191.ap-northeast-2.compute.internal Ready <none> 4m41s v1.24.13-eks-0a21954 t3.xlarge ON_DEMAND ap-northeast-2b

ip-192-168-3-176.ap-northeast-2.compute.internal Ready <none> 4m42s v1.24.13-eks-0a21954 t3.xlarge ON_DEMAND ap-northeast-2c

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# eksctl get iamidentitymapping --cluster myeks

ARN USERNAME GROUPS ACCOUNT

arn:aws:iam::070271542073:role/eksctl-myeks-nodegroup-ng1-NodeInstanceRole-9B3PQRJWO2PB system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# echo $N1, $N2, $N3

192.168.1.181, 192.168.2.191, 192.168.3.176

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

# Check the container images in use

kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" | tr -s '[[:space:]]' '\n' | sort | uniq -c

# Check add-ons installed/updated with eksctl

eksctl get addon --cluster $CLUSTER_NAME

# Check IRSA (IAM Roles for Service Accounts)

eksctl get iamserviceaccount --cluster $CLUSTER_NAME

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# eksctl get iamserviceaccount --cluster $CLUSTER_NAME

NAMESPACE NAME ROLE ARN

kube-system aws-load-balancer-controller arn:aws:iam::070271542073:role/eksctl-myeks-addon-iamserviceaccount-kube-sy-Role1-7BD8E9A18Z8H

kube-system efs-csi-controller-sa arn:aws:iam::070271542073:role/eksctl-myeks-addon-iamserviceaccount-kube-sy-Role1-6SMXACU3BEQQ

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

✍ EKS Console

Resources and cluster information can be viewed through the Kubernetes API.

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# kubectl get ClusterRole | grep eks

eks:addon-manager 2023-05-20T13:30:22Z

eks:az-poller 2023-05-20T13:30:18Z

eks:certificate-controller-approver 2023-05-20T13:30:18Z

eks:certificate-controller-signer 2023-05-20T13:30:18Z

eks:cloud-controller-manager 2023-05-20T13:30:18Z

Reference: https://www.eksworkshop.com/docs/observability/resource-view/workloads-view/

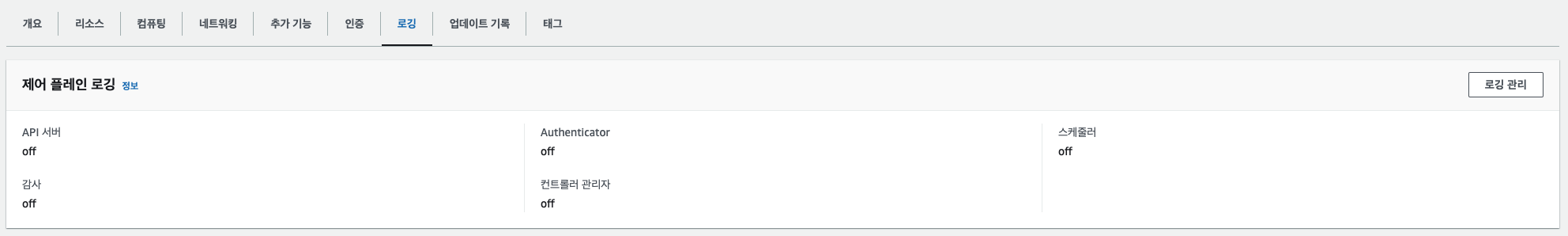

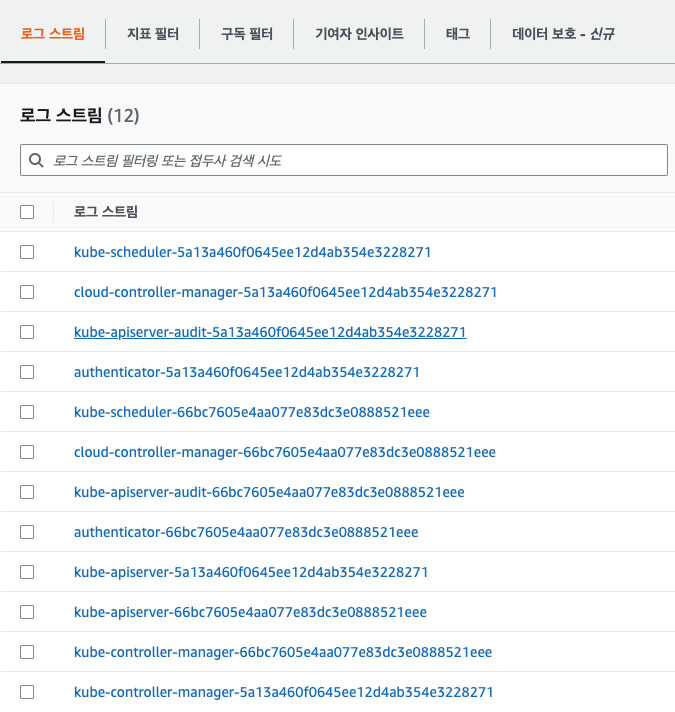

✍ Logging in EKS

# Enable all control plane log types

aws eks update-cluster-config --region $AWS_DEFAULT_REGION --name $CLUSTER_NAME \

--logging '{"clusterLogging":[{"types":["api","audit","authenticator","controllerManager","scheduler"],"enabled":true}]}'

# Check the log groups

aws logs describe-log-groups | jq

# Tail the logs (see: aws logs tail help)

aws logs tail /aws/eks/$CLUSTER_NAME/cluster | more

# Stream new log entries as they arrive

aws logs tail /aws/eks/$CLUSTER_NAME/cluster --follow

# Filter pattern

aws logs tail /aws/eks/$CLUSTER_NAME/cluster --filter-pattern <filter pattern>

# Log stream name prefix

aws logs tail /aws/eks/$CLUSTER_NAME/cluster --log-stream-name-prefix <log stream prefix> --follow

aws logs tail /aws/eks/$CLUSTER_NAME/cluster --log-stream-name-prefix kube-controller-manager --follow

kubectl scale deployment -n kube-system coredns --replicas=1

kubectl scale deployment -n kube-system coredns --replicas=2

# Time range units: seconds (s), minutes (m), hours (h), days (d), weeks (w)

aws logs tail /aws/eks/$CLUSTER_NAME/cluster --since 1h30m

# Short output format

aws logs tail /aws/eks/$CLUSTER_NAME/cluster --since 1h30m --format short

2023-05-20T14:20:06 {"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Metadata","auditID":"72daccae-ff1c-4acc-b7e8-a524a96fd034","stage":"ResponseComplete","requestURI":"/apis/coordination.k8s.io/v1/namespaces/kube-system/leases/cp-vpc-resource-controller","verb":"get","user":{"username":"eks:vpc-resource-controller","groups":["system:authenticated"]},"sourceIPs":["10.0.33.124"],"userAgent":"controller/v0.0.0 (linux/amd64) kubernetes/$Format/leader-election","objectRef":{"resource":"leases","namespace":"kube-system","name":"cp-vpc-resource-controller","apiGroup":"coordination.k8s.io","apiVersion":"v1"},"responseStatus":{"metadata":{},"code":200},"requestReceivedTimestamp":"2023-05-20T13:59:46.578355Z","stageTimestamp":"2023-05-20T13:59:46.582180Z","annotations":{"authorization.k8s.io/decision":"allow","authorization.k8s.io/reason":"RBAC: allowed by RoleBinding \"eks-vpc-resource-controller-rolebinding/kube-system\" of Role \"eks-vpc-resource-controller-role\" to User \"eks:vpc-resource-controller\""}}

2023-05-20T14:20:06 {"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Request","auditID":"dfda0468-daae-4bd6-9917-1d0a7f13f51a","stage":"ResponseComplete","requestURI":"/api/v1/nodes?limit=100","verb":"list","user":{"username":"eks:k8s-metrics","groups":["system:authenticated"]},"sourceIPs":["10.0.33.124"],"userAgent":"eks-k8s-metrics/v0.0.0 (linux/amd64) kubernetes/$Format","objectRef":{"resource":"nodes","apiVersion":"v1"},"responseStatus":{"metadata":{},"code":200},"requestReceivedTimestamp":"2023-05-20T13:59:46.924973Z","stageTimestamp":"2023-05-20T13:59:47.005282Z","annotations":{"authorization.k8s.io/decision":"allow","authorization.k8s.io/reason":"RBAC: allowed by ClusterRoleBinding \"eks:k8s-metrics\" of ClusterRole \"eks:k8s-metrics\" to User \"eks:k8s-metrics\""}}

2023-05-20T14:20:06 {"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Request","auditID":"466643f0-d6ef-4d8d-8346-1d4983f76190","stage":"ResponseComplete","requestURI":"/apis/apps/v1/deployments?labelSelector=app.kubernetes.io%2Fname+in+%28alb-ingress-controller%2Cappmesh-controller%2Caws-load-balancer-controller%29","verb":"list","user":{"username":"eks:k8s-metrics","groups":["system:authenticated"]},"sourceIPs":["10.0.33.124"],"userAgent":"eks-k8s-metrics/v0.0.0 (linux/amd64) kubernetes/$Format","objectRef":{"resource":"deployments","apiGroup":"apps","apiVersion":"v1"},"responseStatus":{"metadata":{},"code":200},"requestReceivedTimestamp":"2023-05-20T13:59:47.013359Z","stageTimestamp":"2023-05-20T13:59:47.021439Z","annotations":{"authorization.k8s.io/decision":"allow","authorization.k8s.io/reason":"RBAC: allowed by ClusterRoleBinding \"eks:k8s-metrics\" of ClusterRole \"eks:k8s-metrics\" to User \"eks:k8s-metrics\""}}

2023-05-20T14:20:06 {"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Metadata","auditID":"25af9233-90da-4ca1-8375-4910316b16bc","stage":"ResponseComplete","requestURI":"/livez?exclude=kms-provider-0","verb":"get","user":{"username":"system:anonymous","groups":["system:unauthenticated"]},"sourceIPs":["127.0.0.1"],"userAgent":"Go-http-client/1.1","responseStatus":{"metadata":{},"code":200},"requestReceivedTimestamp":"2023-05-20T13:59:47.012931Z","stageTimestamp":"2023-05-20T13:59:47.021987Z","annotations":{"authorization.k8s.io/decision":"allow","authorization.k8s.io/reason":"RBAC: allowed by ClusterRoleBinding \"system:public-info-viewer\" of ClusterRole \"system:public-info-viewer\" to Group \"system:unauthenticated\""}}

2023-05-20T14:20:06 {"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Metadata","auditID":"25bd40e4-5a7d-4134-965c-8ba5560d1558","stage":"ResponseComplete","requestURI":"/apis/coordination.k8s.io/v1/namespaces/kube-system/leases/kube-controller-manager?timeout=5s","verb":"get","user":{"username":"system:kube-controller-manager","groups":["system:authenticated"]},"sourceIPs":["10.0.127.115"],"userAgent":"kube-controller-manager/v1.24.13 (linux/amd64) kubernetes/6305d65/leader-election","objectRef":{"resource":"leases","namespace":"kube-system","name":"kube-controller-manager","apiGroup":"coordination.k8s.io","apiVersion":"v1"},"responseStatus":{"metadata":{},"code":200},"requestReceivedTimestamp":"2023-05-20T13:42:26.867112Z","stageTimestamp":"2023-05-20T13:42:26.874794Z","annotations":{"authorization.k8s.io/decision":"allow","authorization.k8s.io/reason":"RBAC: allowed by ClusterRoleBinding \"system:kube-controller-manager\" of ClusterRole \"system:kube-controller-manager\" to User \"system:kube-controller-manager\""}}

2023-05-20T14:20:06 {"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Metadata","auditID":"55841d11-a344-41af-81fa-c4faff5ea103","stage":"ResponseComplete","requestURI":"/apis/coordination.k8s.io/v1/namespaces/kube-system/leases/kube-controller-manager?timeout=5s","verb":"update","user":{"username":"system:kube-controller-manager","groups":["system:authenticated"]},"sourceIPs":["10.0.127.115"],"userAgent":"kube-controller-manager/v1.24.13 (linux/amd64) kubernetes/6305d65/leader-election","objectRef":{"resource":"leases","namespace":"kube-system","name":"kube-controller-manager","uid":"8c069a95-9524-4073-a13a-f8ee478399ac","apiGroup":"coordination.k8s.io","apiVersion":"v1","resourceVersion":"2456"},"responseStatus":{"metadata":{},"code":200},"requestReceivedTimestamp":"2023-05-20T13:42:26.875459Z","stageTimestamp":"2023-05-20T13:42:26.883943Z","annotations":{"authorization.k8s.io/decision":"allow","authorization.k8s.io/reason":"RBAC: allowed by ClusterRoleBinding \"system:kube-controller-manager\" of ClusterRole \"system:kube-controller-manager\" to User \"system:kube-controller-manager\""}}

2023-05-20T14:20:06 {"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Metadata","auditID":"8ec3f25c-6f63-4653-9792-5dff2abbcce6","stage":"ResponseComplete","requestURI":"/api","verb":"get","user":{"username":"system:serviceaccount:kube-system:generic-garbage-collector","uid":"7c8b2638-29fe-470f-a1b9-49aeff0adedd","groups":["system:serviceaccounts","system:serviceaccounts:kube-system","system:authenticated"]},"sourceIPs":["10.0.127.115"],"userAgent":"kube-controller-manager/v1.24.13 (linux/amd64) kubernetes/6305d65/system:serviceaccount:kube-system:generic-garbage-collector","responseStatus":{"metadata":{},"code":200},"requestReceivedTimestamp":"2023-05-20T13:42:27.033900Z","stageTimestamp":"2023-05-20T13:42:27.035917Z","annotations":{"authorization.k8s.io/decision":"allow","authorization.k8s.io/reason":"RBAC: allowed by ClusterRoleBinding \"system:discovery\" of ClusterRole \"system:discovery\" to Group \"system:authenticated\""}}

2023-05-20T14:20:06 {"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Metadata","auditID":"365a087d-9933-4721-a29a-9cfb677eaf01","stage":"ResponseComplete","requestURI":"/apis","verb":"get","user":{"username":"system:serviceaccount:kube-system:generic-garbage-collector","uid":"7c8b2638-29fe-470f-a1b9-49aeff0adedd","groups":["system:serviceaccounts","system:serviceaccounts:kube-system","system:authenticated"]},"sourceIPs":["10.0.127.115"],"userAgent":"kube-controller-manager/v1.24.13 (linux/amd64) kubernetes/6305d65/system:serviceaccount:kube-system:generic-garbage-collector","responseStatus":{"metadata":{},"code":200},"requestReceivedTimestamp":"2023-05-20T13:42:27.036445Z","stageTimestamp":"2023-05-20T13:42:27.036739Z","annotations":{"authorization.k8s.io/decision":"allow","authorization.k8s.io/reason":"RBAC: allowed by ClusterRoleBinding \"system:discovery\" of ClusterRole \"system:discovery\" to Group \"system:authenticated\""}}

^Z

[2]+ Stopped aws logs tail /aws/eks/$CLUSTER_NAME/cluster --since 1h30m --format short

CloudWatch Logs Insights

https://www.eksworkshop.com/docs/observability/logging/cluster-logging/log-insights/

# Search for log entries where an EC2 instance is in the NodeNotReady state

fields @timestamp, @message

| filter @message like /NodeNotReady/

| sort @timestamp desc

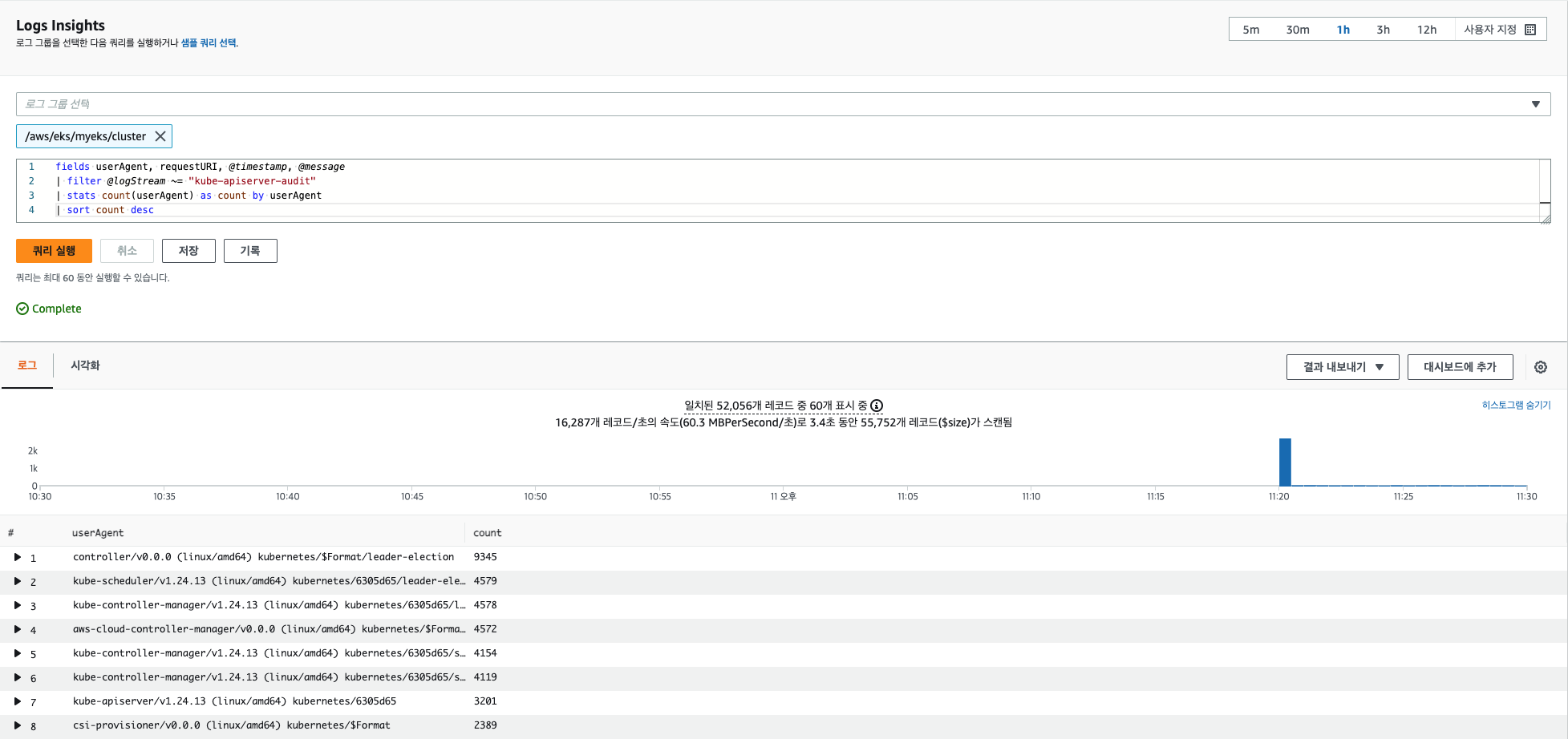

# In the kube-apiserver-audit logs, count requests per userAgent and sort by count (starting from the four fields below)

fields userAgent, requestURI, @timestamp, @message

| filter @logStream ~= "kube-apiserver-audit"

| stats count(userAgent) as count by userAgent

| sort count desc
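The per-userAgent count above can also be approximated locally on audit events saved from `aws logs tail`. This is a minimal sketch, assuming one JSON event per line and no escaped quotes inside the userAgent value. The function name `count_user_agents` is hypothetical, and the sample events are trimmed-down versions of the audit lines shown earlier.

```shell
#!/usr/bin/env bash
# count_user_agents (hypothetical helper): read audit events on stdin,
# one JSON object per line, and print "<count> <userAgent>" sorted by
# count, highest first. Uses only grep/sed/sort/uniq/awk.
count_user_agents() {
  grep -o '"userAgent":"[^"]*"' \
    | sed -e 's/^"userAgent":"//' -e 's/"$//' \
    | sort | uniq -c | sort -rn \
    | awk '{ n = $1; $1 = ""; sub(/^ /, ""); print n, $0 }'
}

# Sample input: shortened audit events (only two fields kept).
count_user_agents <<'EOF'
{"verb":"list","userAgent":"eks-k8s-metrics/v0.0.0 (linux/amd64) kubernetes/$Format"}
{"verb":"get","userAgent":"Go-http-client/1.1"}
{"verb":"list","userAgent":"eks-k8s-metrics/v0.0.0 (linux/amd64) kubernetes/$Format"}
EOF
```

For anything beyond a quick check, the Logs Insights query remains the better tool, since it parses the JSON fields properly.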

# kube-scheduler logs

fields @timestamp, @message

| filter @logStream ~= "kube-scheduler"

| sort @timestamp desc

# authenticator logs

fields @timestamp, @message

| filter @logStream ~= "authenticator"

| sort @timestamp desc

# kube-controller-manager logs

fields @timestamp, @message

| filter @logStream ~= "kube-controller-manager"

| sort @timestamp desc

CloudWatch Logs Insights Query with AWS CLI

# CloudWatch Logs Insights query

aws logs get-query-results --query-id $(aws logs start-query \

--log-group-name '/aws/eks/myeks/cluster' \

--start-time `date -d "-1 hours" +%s` \

--end-time `date +%s` \

--query-string 'fields @timestamp, @message | filter @logStream ~= "kube-scheduler" | sort @timestamp desc' \

| jq --raw-output '.queryId')
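The `--start-time` and `--end-time` values above are epoch seconds. Below is a small sketch of that arithmetic for other window sizes, assuming a single `<amount><unit>` value with the units s, m, h, d, w. The helper names `to_seconds` and `query_window` are hypothetical and not part of the AWS CLI.

```shell
#!/usr/bin/env bash
# to_seconds (hypothetical helper): convert one "<amount><unit>" value
# (units: s m h d w) into seconds. Combined values such as 1h30m are
# deliberately rejected to keep the sketch simple.
to_seconds() {
  local amount=${1%[smhdw]} unit=${1: -1}
  case $amount in
    ''|*[!0-9]*) echo "invalid duration: $1" >&2; return 1 ;;
  esac
  case $unit in
    s) echo $(( 10#$amount )) ;;
    m) echo $(( 10#$amount * 60 )) ;;
    h) echo $(( 10#$amount * 3600 )) ;;
    d) echo $(( 10#$amount * 86400 )) ;;
    w) echo $(( 10#$amount * 604800 )) ;;
    *) echo "invalid duration: $1" >&2; return 1 ;;
  esac
}

# query_window (hypothetical helper): print "<start> <end>" in epoch
# seconds for a window of the given size ending at END (default: now).
query_window() {
  local span end
  span=$(to_seconds "$1") || return 1
  end=${2:-$(date +%s)}
  echo "$(( end - span )) $end"
}

# Example: the last hour, usable as --start-time / --end-time values.
query_window 1h
```

Passing an explicit end time as the second argument keeps the window reproducible when re-running a query.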

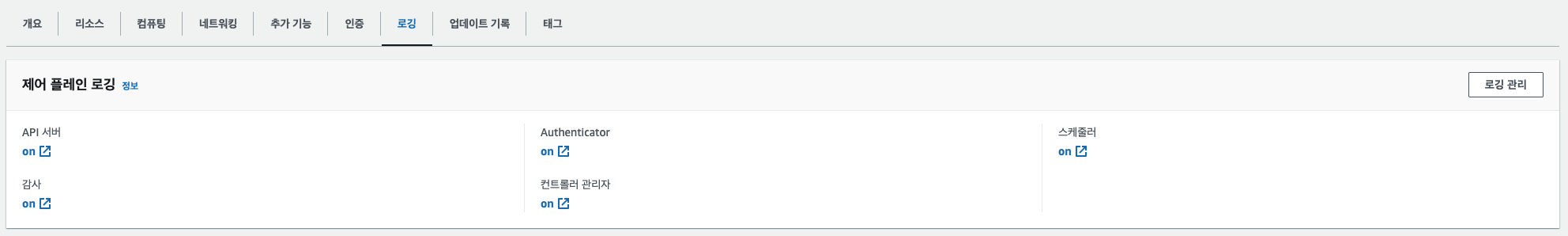

- Disable logging

# Disable EKS control plane logging (CloudWatch Logs)

eksctl utils update-cluster-logging --cluster $CLUSTER_NAME --region $AWS_DEFAULT_REGION --disable-types all --approve

# Delete the log group

aws logs delete-log-group --log-group-name /aws/eks/$CLUSTER_NAME/cluster

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# eksctl utils update-cluster-logging --cluster $CLUSTER_NAME --region $AWS_DEFAULT_REGION --disable-types all --approve

2023-05-20 23:33:48 [ℹ] will update CloudWatch logging for cluster "myeks" in "ap-northeast-2" (no types to enable & disable types: api, audit, authenticator, controllerManager, scheduler)

2023-05-20 23:34:20 [✔] configured CloudWatch logging for cluster "myeks" in "ap-northeast-2" (no types enabled & disabled types: api, audit, authenticator, controllerManager, scheduler)

Control Plane metrics with Prometheus & CW Logs Insights queries

# Metric line format: metric_name{"tag"="value"[,...]} value

kubectl get --raw /metrics | more

Managing etcd database size on Amazon EKS clusters

# How to monitor etcd database size? >> Where are the 10.0.X.Y IPs below located? >> Can these addresses be used as Prometheus metrics scrape endpoints?

kubectl get --raw /metrics | grep "etcd_db_total_size_in_bytes"

etcd_db_total_size_in_bytes{endpoint="http://10.0.160.16:2379"} 4.665344e+06

etcd_db_total_size_in_bytes{endpoint="http://10.0.32.16:2379"} 4.636672e+06

etcd_db_total_size_in_bytes{endpoint="http://10.0.96.16:2379"} 4.640768e+06
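The values above are raw byte counts in exponent notation. This is a minimal sketch, assuming only awk is available, that converts them to MiB for easier reading. The function name `etcd_size_mib` is hypothetical, and the sample lines are copied from the output above.

```shell
#!/usr/bin/env bash
# etcd_size_mib (hypothetical helper): read Prometheus text-format lines
# on stdin and print each etcd_db_total_size_in_bytes sample in MiB.
# "# HELP" / "# TYPE" lines are skipped because they start with "#".
etcd_size_mib() {
  awk '/^etcd_db_total_size_in_bytes/ {
         # $NF is the sample value in bytes (exponent notation is fine).
         printf "%s %.2f MiB\n", $1, $NF / 1048576
       }'
}

# Sample input copied from the output above. On a live cluster, pipe
# `kubectl get --raw /metrics` into the function instead.
etcd_size_mib <<'EOF'
etcd_db_total_size_in_bytes{endpoint="http://10.0.160.16:2379"} 4.665344e+06
etcd_db_total_size_in_bytes{endpoint="http://10.0.32.16:2379"} 4.636672e+06
etcd_db_total_size_in_bytes{endpoint="http://10.0.96.16:2379"} 4.640768e+06
EOF
```

With the sample lines, the three endpoints come out at roughly 4.4 MiB each.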

kubectl get --raw=/metrics | grep apiserver_storage_objects |awk '$2>100' |sort -g -k 2

# CW Logs Insights query

fields @timestamp, @message, @logStream

| filter @logStream like /kube-apiserver-audit/

| filter @message like /mvcc: database space exceeded/

| limit 10

# How do I identify what is consuming etcd database space?

kubectl get --raw=/metrics | grep apiserver_storage_objects |awk '$2>100' |sort -g -k 2

kubectl get --raw=/metrics | grep apiserver_storage_objects |awk '$2>50' |sort -g -k 2

apiserver_storage_objects{resource="clusterrolebindings.rbac.authorization.k8s.io"} 78

apiserver_storage_objects{resource="clusterroles.rbac.authorization.k8s.io"} 92

# CW Logs Insights query: Request volume - Requests by User Agent

fields userAgent, requestURI, @timestamp, @message

| filter @logStream like /kube-apiserver-audit/

| stats count(*) as count by userAgent

| sort count desc

# CW Logs Insights query: Request volume - Requests by Uniform Resource Identifier (URI)/Verb

filter @logStream like /kube-apiserver-audit/

| stats count(*) as count by requestURI, verb, user.username

| sort count desc

# Object revision updates

fields requestURI

| filter @logStream like /kube-apiserver-audit/

| filter requestURI like /pods/

| filter verb like /patch/

| filter count > 8

| stats count(*) as count by requestURI, responseStatus.code

| filter responseStatus.code not like /500/

| sort count desc

# Drill down into a single fast-updating pod

fields @timestamp, userAgent, responseStatus.code, requestURI

| filter @logStream like /kube-apiserver-audit/

| filter requestURI like /pods/

| filter verb like /patch/

| filter requestURI like /name_of_the_pod_that_is_updating_fast/

| sort @timestamp

https://docs.aws.amazon.com/ko_kr/eks/latest/userguide/prometheus.html

https://aws.amazon.com/ko/blogs/containers/managing-etcd-database-size-on-amazon-eks-clusters/

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# kubectl get --raw /metrics | grep "etcd_db_total_size_in_bytes"

# HELP etcd_db_total_size_in_bytes [ALPHA] Total size of the etcd database file physically allocated in bytes.

# TYPE etcd_db_total_size_in_bytes gauge

etcd_db_total_size_in_bytes{endpoint="http://10.0.160.16:2379"} 4.440064e+06

etcd_db_total_size_in_bytes{endpoint="http://10.0.32.16:2379"} 4.435968e+06

etcd_db_total_size_in_bytes{endpoint="http://10.0.96.16:2379"} 4.427776e+06

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# kubectl get --raw=/metrics | grep apiserver_storage_objects |awk '$2>100' |sort -g -k 2

# HELP apiserver_storage_objects [STABLE] Number of stored objects at the time of last check split by kind.

# TYPE apiserver_storage_objects gauge

apiserver_storage_objects{resource="events"} 337

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

Container (Pod) Logging

https://artifacthub.io/packages/helm/bitnami/nginx

# Deploy the NGINX web server

helm repo add bitnami https://charts.bitnami.com/bitnami

# Look up the ACM certificate ARN in the current region

CERT_ARN=$(aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text)

echo $CERT_ARN

# Check the domain

echo $MyDomain

# Create the Helm values file

cat <<EOT > nginx-values.yaml
service:
  type: NodePort

ingress:
  enabled: true
  ingressClassName: alb
  hostname: nginx.$MyDomain
  path: /*
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
    alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
    alb.ingress.kubernetes.io/success-codes: 200-399
    alb.ingress.kubernetes.io/load-balancer-name: $CLUSTER_NAME-ingress-alb
    alb.ingress.kubernetes.io/group.name: study
    alb.ingress.kubernetes.io/ssl-redirect: '443'
EOT

cat nginx-values.yaml | yh

# Deploy

helm install nginx bitnami/nginx --version 14.1.0 -f nginx-values.yaml

# Verify

kubectl get ingress,deploy,svc,ep nginx

kubectl get targetgroupbindings # Check the ALB target groups

# Check the URL and connect

echo -e "Nginx WebServer URL = https://nginx.$MyDomain"

curl -s https://nginx.$MyDomain

kubectl logs deploy/nginx -f

# Connect repeatedly

while true; do curl -s https://nginx.$MyDomain -I | head -n 1; date; sleep 1; done

# (Reference) To uninstall

helm uninstall nginx

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# cat <<EOT > nginx-values.yaml
> service:
>   type: NodePort
>
> ingress:
>   enabled: true
>   ingressClassName: alb
>   hostname: nginx.$MyDomain
>   path: /*
>   annotations:
>     alb.ingress.kubernetes.io/scheme: internet-facing
>     alb.ingress.kubernetes.io/target-type: ip
>     alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
>     alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
>     alb.ingress.kubernetes.io/success-codes: 200-399
>     alb.ingress.kubernetes.io/load-balancer-name: $CLUSTER_NAME-ingress-alb
>     alb.ingress.kubernetes.io/group.name: study
>     alb.ingress.kubernetes.io/ssl-redirect: '443'
> EOT

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# cat nginx-values.yaml | yh
service:
  type: NodePort
ingress:
  enabled: true
  ingressClassName: alb
  hostname: nginx.primedo.shop
  path: /*
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
    alb.ingress.kubernetes.io/certificate-arn:
    alb.ingress.kubernetes.io/success-codes: 200-399
    alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
    alb.ingress.kubernetes.io/group.name: study
    alb.ingress.kubernetes.io/ssl-redirect: '443'

# Monitor the logs

kubectl logs deploy/nginx -f

# Access the nginx web page to generate log entries

# Check where the container log files are located

kubectl exec -it deploy/nginx -- ls -l /opt/bitnami/nginx/logs/

total 0

lrwxrwxrwx 1 root root 11 Feb 18 13:35 access.log -> /dev/stdout

lrwxrwxrwx 1 root root 11 Feb 18 13:35 error.log -> /dev/stderr
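The listing shows that access.log and error.log are symlinks to /dev/stdout and /dev/stderr, so anything nginx writes to these "files" goes to the container's standard streams, where `kubectl logs` can read it. This is a minimal local sketch of that mechanism, using a temporary directory rather than the real image path. The function name `demo_stdout_symlink` and the log line are hypothetical.

```shell
#!/usr/bin/env bash
# demo_stdout_symlink (hypothetical demo): mimic the log layout from the
# listing above in a temporary directory and append one line to it.
demo_stdout_symlink() {
  local dir
  dir=$(mktemp -d)
  # Same idea as the image: access.log is a symlink to /dev/stdout.
  ln -s /dev/stdout "$dir/access.log"
  # Appending to the "file" writes to this process's standard output.
  echo 'GET / HTTP/1.1 200' >> "$dir/access.log"
  # Removes only the symlink and the temp directory, not /dev/stdout.
  rm -rf "$dir"
}

demo_stdout_symlink
```

The appended line shows up on the terminal instead of in a regular file, which is why no log data accumulates under /opt/bitnami/nginx/logs/.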

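- Logs of a terminated pod cannot be retrieved with kubectl logs.

- By default, kubelet caps each container log file at 10Mi (containerLogMaxSize), so once a log exceeds 10Mi the full log can no longer be retrieved.

Reference) Example of changing this setting on EKS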

https://malwareanalysis.tistory.com/601

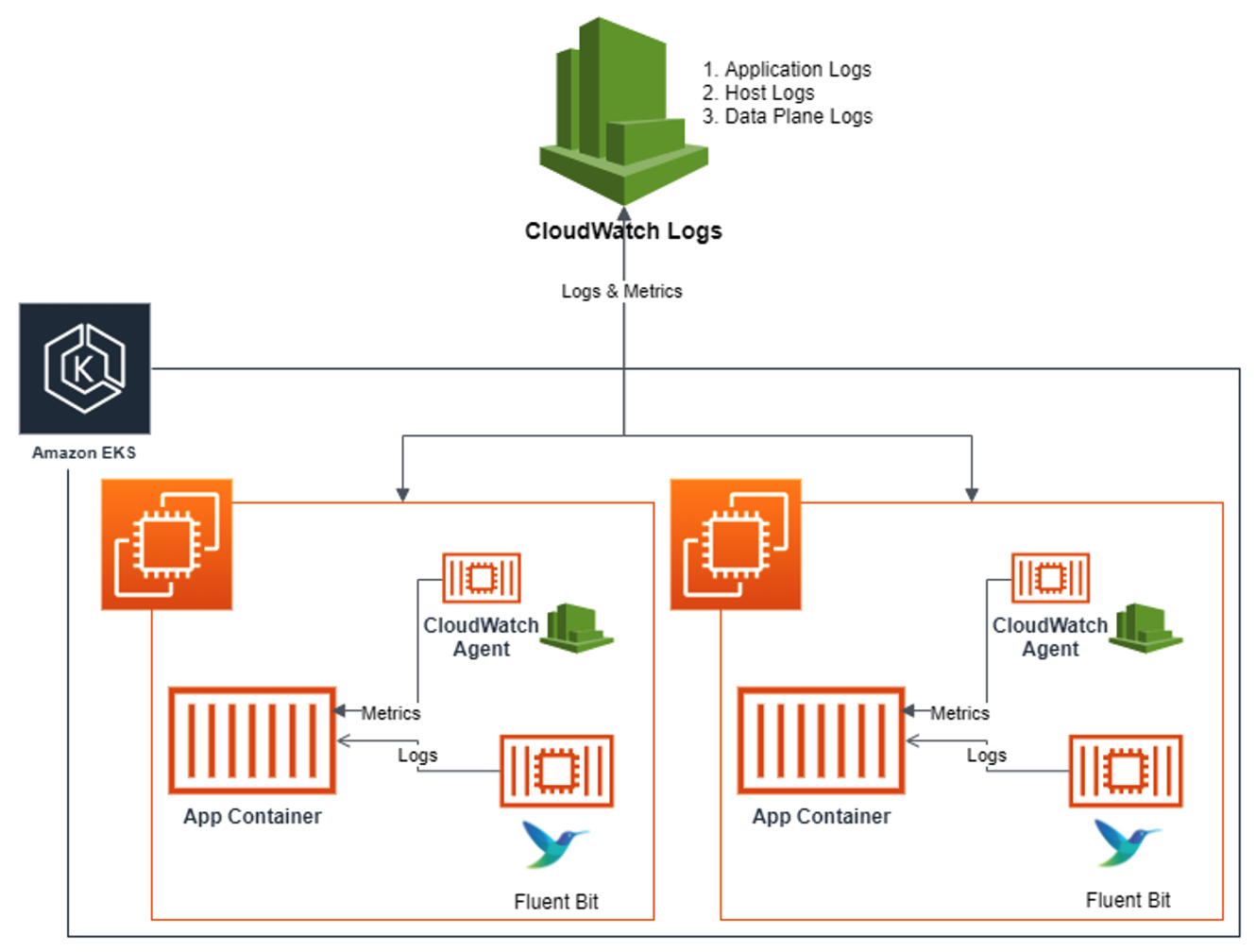

✍ Container Insights metrics in Amazon CloudWatch & Fluent Bit (Logs)

- application log source (all log files in /var/log/containers → symbolic links to /var/log/pods/<container>): per-container/pod logs

# Check the log locations

#ssh ec2-user@$N1 sudo tree /var/log/containers

#ssh ec2-user@$N1 sudo ls -al /var/log/containers

for node in $N1 $N2 $N3; do echo ">>>>> $node <<<<<"; ssh ec2-user@$node sudo tree /var/log/containers; echo; done

for node in $N1 $N2 $N3; do echo ">>>>> $node <<<<<"; ssh ec2-user@$node sudo ls -al /var/log/containers; echo; done

# Check an individual pod's log (the directory path below differs per environment)

ssh ec2-user@$N1 sudo tail -f /var/log/pods/default_nginx-685c67bc9-pkvzd_69b28caf-7fe2-422b-aad8-f1f70a206d9e/nginx/0.log

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# ssh ec2-user@$N1 sudo tree /var/log/containers

/var/log/containers

├── aws-load-balancer-controller-5f99d5f58f-zs2b7_kube-system_aws-load-balancer-controller-8e16fc41810b577a77d4eeab580fed4ae7c090928c1dee7e0bdc9171247c541b.log -> /var/log/pods/kube-system_aws-load-balancer-controller-5f99d5f58f-zs2b7_62f12329-a70a-4c67-a147-2200363b1320/aws-load-balancer-controller/0.log

├── aws-node-vcb5g_kube-system_aws-node-6040dac6a1d065d9a711f027ed652d0ae1be9f877daf131bad021334771f3131.log -> /var/log/pods/kube-system_aws-node-vcb5g_278654ac-b663-4aa0-abba-372d927ea2ba/aws-node/0.log

├── aws-node-vcb5g_kube-system_aws-vpc-cni-init-b5b03b88b4024b5ee78711bb331672620fe2b4f1234fa31089c374366b9f0837.log -> /var/log/pods/kube-system_aws-node-vcb5g_278654ac-b663-4aa0-abba-372d927ea2ba/aws-vpc-cni-init/0.log

├── coredns-6777fcd775-j4r7s_kube-system_coredns-5b1de0fa2584a2d1c2b0a7f1bd9c72cfb27185477537da9e1a012bd5f1402400.log -> /var/log/pods/kube-system_coredns-6777fcd775-j4r7s_edcf143b-e6db-41fa-85f5-e8896bb4f5b1/coredns/0.log

├── ebs-csi-controller-67658f895c-8rkzd_kube-system_csi-attacher-710bf72cd301874dd177a3f6b8b2deb536414964c1058cb548349be8bdf9283c.log -> /var/log/pods/kube-system_ebs-csi-controller-67658f895c-8rkzd_f32f6957-ead7-4c67-928f-888a4d858ce3/csi-attacher/0.log

├── ebs-csi-controller-67658f895c-8rkzd_kube-system_csi-provisioner-25432817d0143e0ee4c7982e26074918d0c524c353552dbca686d6a54d95205e.log -> /var/log/pods/kube-system_ebs-csi-controller-67658f895c-8rkzd_f32f6957-ead7-4c67-928f-888a4d858ce3/csi-provisioner/0.log

├── ebs-csi-controller-67658f895c-8rkzd_kube-system_csi-resizer-44def4563918548a51de30d7a58bb266dc60ea7bbee21fa4a4866a075f195ea4.log -> /var/log/pods/kube-system_ebs-csi-controller-67658f895c-8rkzd_f32f6957-ead7-4c67-928f-888a4d858ce3/csi-resizer/0.log

├── ebs-csi-controller-67658f895c-8rkzd_kube-system_csi-snapshotter-6e5790722c69c84907e2a39af9b343d26d3450425fd6e1e3d6ef9e9d0ba6bf40.log -> /var/log/pods/kube-system_ebs-csi-controller-67658f895c-8rkzd_f32f6957-ead7-4c67-928f-888a4d858ce3/csi-snapshotter/0.log

├── ebs-csi-controller-67658f895c-8rkzd_kube-system_ebs-plugin-9a904c4de8e84586881987545662525f79f9506fc6e5c0cdea034db7d304d812.log -> /var/log/pods/kube-system_ebs-csi-controller-67658f895c-8rkzd_f32f6957-ead7-4c67-928f-888a4d858ce3/ebs-plugin/0.log

├── ebs-csi-controller-67658f895c-8rkzd_kube-system_liveness-probe-9d4d44432e772077998f0e4759998ba7358fa0961b727c8b6ffbe6f84079e0ce.log -> /var/log/pods/kube-system_ebs-csi-controller-67658f895c-8rkzd_f32f6957-ead7-4c67-928f-888a4d858ce3/liveness-probe/0.log

├── ebs-csi-node-jdgxb_kube-system_ebs-plugin-88329cb592e5a7536127e6e2c740938fe837ffff949c491e8efaa159b1f27070.log -> /var/log/pods/kube-system_ebs-csi-node-jdgxb_23a49f87-10e5-49e8-8f34-989119d11d14/ebs-plugin/0.log

├── ebs-csi-node-jdgxb_kube-system_liveness-probe-7bcf2be23bcb547c0b6a4387762fc8dee8c148f55951c1cc7eb4939c62f63c57.log -> /var/log/pods/kube-system_ebs-csi-node-jdgxb_23a49f87-10e5-49e8-8f34-989119d11d14/liveness-probe/0.log

├── ebs-csi-node-jdgxb_kube-system_node-driver-registrar-f614540c45f57b9107d37a030369564729cadb88489b3a4fa3f6df75b8102f5f.log -> /var/log/pods/kube-system_ebs-csi-node-jdgxb_23a49f87-10e5-49e8-8f34-989119d11d14/node-driver-registrar/0.log

├── efs-csi-controller-6f64dcc5dc-txm97_kube-system_csi-provisioner-f8dc271da8002f2db19b889e5da586c7c3fda40441a3cb5e171e2b33c1045dd4.log -> /var/log/pods/kube-system_efs-csi-controller-6f64dcc5dc-txm97_7f371fe5-125d-4e97-814d-09debfd19980/csi-provisioner/0.log

├── efs-csi-controller-6f64dcc5dc-txm97_kube-system_efs-plugin-be9c6ef7232cbf2336c1fccc215eaabd4e665958171066e613f69ebf2102347e.log -> /var/log/pods/kube-system_efs-csi-controller-6f64dcc5dc-txm97_7f371fe5-125d-4e97-814d-09debfd19980/efs-plugin/0.log

├── efs-csi-controller-6f64dcc5dc-txm97_kube-system_liveness-probe-64ec80ad9769724c98becfefe855055b31c474d40a3b343aac1e52ce34a4cb75.log -> /var/log/pods/kube-system_efs-csi-controller-6f64dcc5dc-txm97_7f371fe5-125d-4e97-814d-09debfd19980/liveness-probe/0.log

├── efs-csi-node-df45x_kube-system_csi-driver-registrar-0dcf3d7f661a2c4a0dbddfa5a980b041015d138836524a39b7dcf335547611c3.log -> /var/log/pods/kube-system_efs-csi-node-df45x_c7dc5ae2-b389-421e-b487-889b105a926b/csi-driver-registrar/0.log

├── efs-csi-node-df45x_kube-system_efs-plugin-ca7dc331204d4f25a126152fef18db2df13a8fa8180e7ec226c5f932e4535d24.log -> /var/log/pods/kube-system_efs-csi-node-df45x_c7dc5ae2-b389-421e-b487-889b105a926b/efs-plugin/0.log

├── efs-csi-node-df45x_kube-system_liveness-probe-2a3b1afbcffa241eb41bfb27f81fd0b6d3ec01c51d6fcc6d1e404a7e9163431e.log -> /var/log/pods/kube-system_efs-csi-node-df45x_c7dc5ae2-b389-421e-b487-889b105a926b/liveness-probe/0.log

└── kube-proxy-7hctc_kube-system_kube-proxy-3c97a4b53de08d3c0bcf6d1f02c5df92d7acab01d118e14a4f69fe4de25eefb6.log -> /var/log/pods/kube-system_kube-proxy-7hctc_1a03176f-e496-4513-a8a9-65f682badee9/kube-proxy/0.log

0 directories, 20 files
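The file names in the listing follow the pattern `<pod>_<namespace>_<container>-<container ID>.log`, and each one links to a path under /var/log/pods. This is a minimal sketch that splits such a name into its parts with bash parameter expansion. The function name `parse_container_log_name` is hypothetical, and the sample name is taken from the listing above.

```shell
#!/usr/bin/env bash
# parse_container_log_name (hypothetical helper): split a file name from
# /var/log/containers into pod, namespace, container and container ID.
# Relies on pod, namespace and container names never containing "_",
# and on the container ID (hex) never containing "-".
parse_container_log_name() {
  local name=${1##*/}          # drop any directory prefix
  name=${name%.log}            # drop the .log suffix
  local pod=${name%%_*}        # text before the first underscore
  local rest=${name#*_}
  local ns=${rest%%_*}         # text between first and second underscore
  local tail=${rest#*_}
  local container=${tail%-*}   # everything before the last hyphen
  local id=${tail##*-}         # text after the last hyphen
  # Print only the first 12 characters of the ID to keep the line short.
  printf 'pod=%s namespace=%s container=%s id=%s\n' \
    "$pod" "$ns" "$container" "${id:0:12}"
}

parse_container_log_name \
  'coredns-6777fcd775-j4r7s_kube-system_coredns-5b1de0fa2584a2d1c2b0a7f1bd9c72cfb27185477537da9e1a012bd5f1402400.log'
```

This is handy when filtering a node's log directory for one namespace or container without going through kubectl.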

- host log source (logs from /var/log/dmesg, /var/log/secure, and /var/log/messages): node (host) logs

# Check the log locations

#ssh ec2-user@$N1 sudo tree /var/log/ -L 1

#ssh ec2-user@$N1 sudo ls -la /var/log/

for node in $N1 $N2 $N3; do echo ">>>>> $node <<<<<"; ssh ec2-user@$node sudo tree /var/log/ -L 1; echo; done

for node in $N1 $N2 $N3; do echo ">>>>> $node <<<<<"; ssh ec2-user@$node sudo ls -la /var/log/; echo; done

# Check the host logs

#ssh ec2-user@$N1 sudo tail /var/log/dmesg

#ssh ec2-user@$N1 sudo tail /var/log/secure

#ssh ec2-user@$N1 sudo tail /var/log/messages

for log in dmesg secure messages; do echo ">>>>> Node1: /var/log/$log <<<<<"; ssh ec2-user@$N1 sudo tail /var/log/$log; echo; done

for log in dmesg secure messages; do echo ">>>>> Node2: /var/log/$log <<<<<"; ssh ec2-user@$N2 sudo tail /var/log/$log; echo; done

for log in dmesg secure messages; do echo ">>>>> Node3: /var/log/$log <<<<<"; ssh ec2-user@$N3 sudo tail /var/log/$log; echo; done

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# ssh ec2-user@$N1 sudo tree /var/log/ -L 1

/var/log/

├── amazon

├── audit

├── aws-routed-eni

├── boot.log

├── btmp

├── chrony

├── cloud-init.log

├── cloud-init-output.log

├── containers

├── cron

├── dmesg

├── dmesg.old

├── grubby

├── grubby_prune_debug

├── journal

├── lastlog

├── maillog

├── messages

├── pods

├── sa

├── secure

├── spooler

├── tallylog

├── wtmp

└── yum.log

8 directories, 17 files

- dataplane log source (/var/log/journal for kubelet.service, kubeproxy.service, and docker.service): Kubernetes data plane logs

# Check the log locations

#ssh ec2-user@$N1 sudo tree /var/log/journal -L 1

#ssh ec2-user@$N1 sudo ls -la /var/log/journal

for node in $N1 $N2 $N3; do echo ">>>>> $node <<<<<"; ssh ec2-user@$node sudo tree /var/log/journal -L 1; echo; done

# Check the journal logs

ssh ec2-user@$N3 sudo journalctl -x -n 200

ssh ec2-user@$N3 sudo journalctl -f

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# ssh ec2-user@$N1 sudo tail /var/log/dmesg

[ 6.137127] ena 0000:00:05.0: Elastic Network Adapter (ENA) found at mem febf4000, mac addr 02:73:6b:6b:fc:dc

[ 6.149401] mousedev: PS/2 mouse device common for all mice

[ 6.189008] AVX2 version of gcm_enc/dec engaged.

[ 6.193505] AES CTR mode by8 optimization enabled

[ 7.043051] RPC: Registered named UNIX socket transport module.

[ 7.048364] RPC: Registered udp transport module.

[ 7.052673] RPC: Registered tcp transport module.

[ 7.063625] RPC: Registered tcp NFSv4.1 backchannel transport module.

[ 7.264814] xfs filesystem being remounted at /tmp supports timestamps until 2038 (0x7fffffff)

[ 7.273394] xfs filesystem being remounted at /var/tmp supports timestamps until 2038 (0x7fffffff)

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# ssh ec2-user@$N1 sudo tail /var/log/secure

May 20 14:56:01 ip-192-168-1-181 sudo: ec2-user : TTY=unknown ; PWD=/home/ec2-user ; USER=root ; COMMAND=/bin/tail /var/log/dmesg

May 20 14:56:01 ip-192-168-1-181 sudo: pam_unix(sudo:session): session opened for user root by (uid=0)

May 20 14:56:01 ip-192-168-1-181 sudo: pam_unix(sudo:session): session closed for user root

May 20 14:56:01 ip-192-168-1-181 sshd[12828]: Received disconnect from 192.168.1.100 port 43644:11: disconnected by user

May 20 14:56:01 ip-192-168-1-181 sshd[12828]: Disconnected from 192.168.1.100 port 43644

May 20 14:56:01 ip-192-168-1-181 sshd[12796]: pam_unix(sshd:session): session closed for user ec2-user

May 20 14:56:08 ip-192-168-1-181 sshd[12884]: Accepted publickey for ec2-user from 192.168.1.100 port 43648 ssh2: RSA SHA256:ZAuwfHNZMtXtIPO9Xsx7M95yd38EJ22WaT9ME6AwViA

May 20 14:56:08 ip-192-168-1-181 sshd[12884]: pam_unix(sshd:session): session opened for user ec2-user by (uid=0)

May 20 14:56:08 ip-192-168-1-181 sudo: ec2-user : TTY=unknown ; PWD=/home/ec2-user ; USER=root ; COMMAND=/bin/tail /var/log/secure

May 20 14:56:08 ip-192-168-1-181 sudo: pam_unix(sudo:session): session opened for user root by (uid=0)

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# ssh ec2-user@$N1 sudo tail /var/log/messages

May 20 14:56:01 ip-192-168-1-181 systemd-logind: Removed session 18.

May 20 14:56:01 ip-192-168-1-181 systemd: Removed slice User Slice of ec2-user.

May 20 14:56:08 ip-192-168-1-181 systemd: Created slice User Slice of ec2-user.

May 20 14:56:08 ip-192-168-1-181 systemd-logind: New session 19 of user ec2-user.

May 20 14:56:08 ip-192-168-1-181 systemd: Started Session 19 of user ec2-user.

May 20 14:56:08 ip-192-168-1-181 systemd-logind: Removed session 19.

May 20 14:56:08 ip-192-168-1-181 systemd: Removed slice User Slice of ec2-user.

May 20 14:56:14 ip-192-168-1-181 systemd: Created slice User Slice of ec2-user.

May 20 14:56:14 ip-192-168-1-181 systemd-logind: New session 20 of user ec2-user.

May 20 14:56:14 ip-192-168-1-181 systemd: Started Session 20 of user ec2-user.

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# for log in dmesg secure messages; do echo ">>>>> Node1: /var/log/$log <<<<<"; ssh ec2-user@$N1 sudo tail /var/log/$log; echo; done

>>>>> Node1: /var/log/dmesg <<<<<

[ 6.137127] ena 0000:00:05.0: Elastic Network Adapter (ENA) found at mem febf4000, mac addr 02:73:6b:6b:fc:dc

[ 6.149401] mousedev: PS/2 mouse device common for all mice

[ 6.189008] AVX2 version of gcm_enc/dec engaged.

[ 6.193505] AES CTR mode by8 optimization enabled

[ 7.043051] RPC: Registered named UNIX socket transport module.

[ 7.048364] RPC: Registered udp transport module.

[ 7.052673] RPC: Registered tcp transport module.

[ 7.063625] RPC: Registered tcp NFSv4.1 backchannel transport module.

[ 7.264814] xfs filesystem being remounted at /tmp supports timestamps until 2038 (0x7fffffff)

[ 7.273394] xfs filesystem being remounted at /var/tmp supports timestamps until 2038 (0x7fffffff)

>>>>> Node1: /var/log/secure <<<<<

May 20 14:56:50 ip-192-168-1-181 sudo: ec2-user : TTY=unknown ; PWD=/home/ec2-user ; USER=root ; COMMAND=/bin/tail /var/log/dmesg

May 20 14:56:50 ip-192-168-1-181 sudo: pam_unix(sudo:session): session opened for user root by (uid=0)

May 20 14:56:50 ip-192-168-1-181 sudo: pam_unix(sudo:session): session closed for user root

May 20 14:56:50 ip-192-168-1-181 sshd[13317]: Received disconnect from 192.168.1.100 port 38304:11: disconnected by user

May 20 14:56:50 ip-192-168-1-181 sshd[13317]: Disconnected from 192.168.1.100 port 38304

May 20 14:56:50 ip-192-168-1-181 sshd[13285]: pam_unix(sshd:session): session closed for user ec2-user

May 20 14:56:50 ip-192-168-1-181 sshd[13327]: Accepted publickey for ec2-user from 192.168.1.100 port 38306 ssh2: RSA SHA256:ZAuwfHNZMtXtIPO9Xsx7M95yd38EJ22WaT9ME6AwViA

May 20 14:56:50 ip-192-168-1-181 sshd[13327]: pam_unix(sshd:session): session opened for user ec2-user by (uid=0)

May 20 14:56:50 ip-192-168-1-181 sudo: ec2-user : TTY=unknown ; PWD=/home/ec2-user ; USER=root ; COMMAND=/bin/tail /var/log/secure

May 20 14:56:51 ip-192-168-1-181 sudo: pam_unix(sudo:session): session opened for user root by (uid=0)

>>>>> Node1: /var/log/messages <<<<<

May 20 14:56:50 ip-192-168-1-181 systemd-logind: Removed session 21.

May 20 14:56:50 ip-192-168-1-181 systemd: Removed slice User Slice of ec2-user.

May 20 14:56:50 ip-192-168-1-181 systemd: Created slice User Slice of ec2-user.

May 20 14:56:50 ip-192-168-1-181 systemd-logind: New session 22 of user ec2-user.

May 20 14:56:50 ip-192-168-1-181 systemd: Started Session 22 of user ec2-user.

May 20 14:56:51 ip-192-168-1-181 systemd-logind: Removed session 22.

May 20 14:56:51 ip-192-168-1-181 systemd: Removed slice User Slice of ec2-user.

May 20 14:56:51 ip-192-168-1-181 systemd: Created slice User Slice of ec2-user.

May 20 14:56:51 ip-192-168-1-181 systemd-logind: New session 23 of user ec2-user.

May 20 14:56:51 ip-192-168-1-181 systemd: Started Session 23 of user ec2-user.

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# for log in dmesg secure messages; do echo ">>>>> Node2: /var/log/$log <<<<<"; ssh ec2-user@$N2 sudo tail /var/log/$log; echo; done

>>>>> Node2: /var/log/dmesg <<<<<

[ 5.705135] ACPI: Sleep Button [SLPF]

[ 5.709697] ena 0000:00:05.0: LLQ is not supported Fallback to host mode policy.

[ 5.733426] ena 0000:00:05.0: Elastic Network Adapter (ENA) found at mem febf4000, mac addr 06:25:6a:82:bd:a6

[ 5.749115] mousedev: PS/2 mouse device common for all mice

[ 6.753284] RPC: Registered named UNIX socket transport module.

[ 6.757428] RPC: Registered udp transport module.

[ 6.761196] RPC: Registered tcp transport module.

[ 6.764967] RPC: Registered tcp NFSv4.1 backchannel transport module.

[ 6.945848] xfs filesystem being remounted at /tmp supports timestamps until 2038 (0x7fffffff)

[ 6.953026] xfs filesystem being remounted at /var/tmp supports timestamps until 2038 (0x7fffffff)

>>>>> Node2: /var/log/secure <<<<<

May 20 14:56:58 ip-192-168-2-191 sudo: ec2-user : TTY=unknown ; PWD=/home/ec2-user ; USER=root ; COMMAND=/bin/tail /var/log/dmesg

May 20 14:56:58 ip-192-168-2-191 sudo: pam_unix(sudo:session): session opened for user root by (uid=0)

May 20 14:56:58 ip-192-168-2-191 sudo: pam_unix(sudo:session): session closed for user root

May 20 14:56:58 ip-192-168-2-191 sshd[12036]: Received disconnect from 192.168.1.100 port 59542:11: disconnected by user

May 20 14:56:58 ip-192-168-2-191 sshd[12036]: Disconnected from 192.168.1.100 port 59542

May 20 14:56:58 ip-192-168-2-191 sshd[12004]: pam_unix(sshd:session): session closed for user ec2-user

May 20 14:56:58 ip-192-168-2-191 sshd[12048]: Accepted publickey for ec2-user from 192.168.1.100 port 59550 ssh2: RSA SHA256:ZAuwfHNZMtXtIPO9Xsx7M95yd38EJ22WaT9ME6AwViA

May 20 14:56:58 ip-192-168-2-191 sshd[12048]: pam_unix(sshd:session): session opened for user ec2-user by (uid=0)

May 20 14:56:58 ip-192-168-2-191 sudo: ec2-user : TTY=unknown ; PWD=/home/ec2-user ; USER=root ; COMMAND=/bin/tail /var/log/secure

May 20 14:56:58 ip-192-168-2-191 sudo: pam_unix(sudo:session): session opened for user root by (uid=0)

>>>>> Node2: /var/log/messages <<<<<

May 20 14:56:58 ip-192-168-2-191 systemd-logind: Removed session 14.

May 20 14:56:58 ip-192-168-2-191 systemd: Removed slice User Slice of ec2-user.

May 20 14:56:58 ip-192-168-2-191 systemd: Created slice User Slice of ec2-user.

May 20 14:56:58 ip-192-168-2-191 systemd-logind: New session 15 of user ec2-user.

May 20 14:56:58 ip-192-168-2-191 systemd: Started Session 15 of user ec2-user.

May 20 14:56:58 ip-192-168-2-191 systemd-logind: Removed session 15.

May 20 14:56:58 ip-192-168-2-191 systemd: Removed slice User Slice of ec2-user.

May 20 14:56:58 ip-192-168-2-191 systemd: Created slice User Slice of ec2-user.

May 20 14:56:58 ip-192-168-2-191 systemd-logind: New session 16 of user ec2-user.

May 20 14:56:58 ip-192-168-2-191 systemd: Started Session 16 of user ec2-user.

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# for log in dmesg secure messages; do echo ">>>>> Node3: /var/log/$log <<<<<"; ssh ec2-user@$N3 sudo tail /var/log/$log; echo; done

>>>>> Node3: /var/log/dmesg <<<<<

[ 5.705767] ACPI: Sleep Button [SLPF]

[ 5.719598] mousedev: PS/2 mouse device common for all mice

[ 5.725936] AVX2 version of gcm_enc/dec engaged.

[ 5.730413] AES CTR mode by8 optimization enabled

[ 6.421373] RPC: Registered named UNIX socket transport module.

[ 6.425308] RPC: Registered udp transport module.

[ 6.428777] RPC: Registered tcp transport module.

[ 6.432233] RPC: Registered tcp NFSv4.1 backchannel transport module.

[ 6.516944] xfs filesystem being remounted at /tmp supports timestamps until 2038 (0x7fffffff)

[ 6.525508] xfs filesystem being remounted at /var/tmp supports timestamps until 2038 (0x7fffffff)

>>>>> Node3: /var/log/secure <<<<<

May 20 14:57:10 ip-192-168-3-176 sudo: ec2-user : TTY=unknown ; PWD=/home/ec2-user ; USER=root ; COMMAND=/bin/tail /var/log/dmesg

May 20 14:57:10 ip-192-168-3-176 sudo: pam_unix(sudo:session): session opened for user root by (uid=0)

May 20 14:57:10 ip-192-168-3-176 sudo: pam_unix(sudo:session): session closed for user root

May 20 14:57:10 ip-192-168-3-176 sshd[13461]: Received disconnect from 192.168.1.100 port 46918:11: disconnected by user

May 20 14:57:10 ip-192-168-3-176 sshd[13461]: Disconnected from 192.168.1.100 port 46918

May 20 14:57:10 ip-192-168-3-176 sshd[13429]: pam_unix(sshd:session): session closed for user ec2-user

May 20 14:57:10 ip-192-168-3-176 sshd[13472]: Accepted publickey for ec2-user from 192.168.1.100 port 46924 ssh2: RSA SHA256:ZAuwfHNZMtXtIPO9Xsx7M95yd38EJ22WaT9ME6AwViA

May 20 14:57:10 ip-192-168-3-176 sshd[13472]: pam_unix(sshd:session): session opened for user ec2-user by (uid=0)

May 20 14:57:10 ip-192-168-3-176 sudo: ec2-user : TTY=unknown ; PWD=/home/ec2-user ; USER=root ; COMMAND=/bin/tail /var/log/secure

May 20 14:57:10 ip-192-168-3-176 sudo: pam_unix(sudo:session): session opened for user root by (uid=0)

>>>>> Node3: /var/log/messages <<<<<

May 20 14:57:10 ip-192-168-3-176 systemd-logind: Removed session 14.

May 20 14:57:10 ip-192-168-3-176 systemd: Removed slice User Slice of ec2-user.

May 20 14:57:10 ip-192-168-3-176 systemd: Created slice User Slice of ec2-user.

May 20 14:57:10 ip-192-168-3-176 systemd-logind: New session 15 of user ec2-user.

May 20 14:57:10 ip-192-168-3-176 systemd: Started Session 15 of user ec2-user.

May 20 14:57:10 ip-192-168-3-176 systemd-logind: Removed session 15.

May 20 14:57:10 ip-192-168-3-176 systemd: Removed slice User Slice of ec2-user.

May 20 14:57:10 ip-192-168-3-176 systemd: Created slice User Slice of ec2-user.

May 20 14:57:10 ip-192-168-3-176 systemd: Started Session 16 of user ec2-user.

May 20 14:57:10 ip-192-168-3-176 systemd-logind: New session 16 of user ec2-user.

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#
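The three per-node loops above differ only in the node variable, so they could be folded into one nested loop. This is a minimal sketch, assuming `$N1`, `$N2`, and `$N3` hold the node IPs as in the commands above; `tail_node_logs` is a hypothetical helper name, not part of any tool.

```shell
# Hypothetical helper: tail several /var/log files on several nodes.
# usage: tail_node_logs "NODE_IPS" "LOG_NAMES" (both space-separated)
tail_node_logs() {
  for node in $1; do
    for log in $2; do
      echo ">>>>> $node: /var/log/$log <<<<<"
      ssh "ec2-user@$node" sudo tail "/var/log/$log"
      echo
    done
  done
}

# Example call (not executed here):
#   tail_node_logs "$N1 $N2 $N3" "dmesg secure messages"
```

The header prints the node IP instead of a fixed "Node1" label, so the output stays readable if the node list changes.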

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# for node in $N1 $N2 $N3; do echo ">>>>> $node <<<<<"; ssh ec2-user@$node sudo tree /var/log/journal -L 1; echo; done

>>>>> 192.168.1.181 <<<<<

/var/log/journal

├── ec2d184089fd93a22cc04a98df97cc6d

└── ec2e88644360977c092dff22910023b4

2 directories, 0 files

>>>>> 192.168.2.191 <<<<<

/var/log/journal

├── ec2d184089fd93a22cc04a98df97cc6d

└── ec2ed0f08d29ef8ead6f5fc38b66d477

2 directories, 0 files

>>>>> 192.168.3.176 <<<<<

/var/log/journal

├── ec2d184089fd93a22cc04a98df97cc6d

└── ec2ff43272b509594874aebbab7c7594

2 directories, 0 files

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

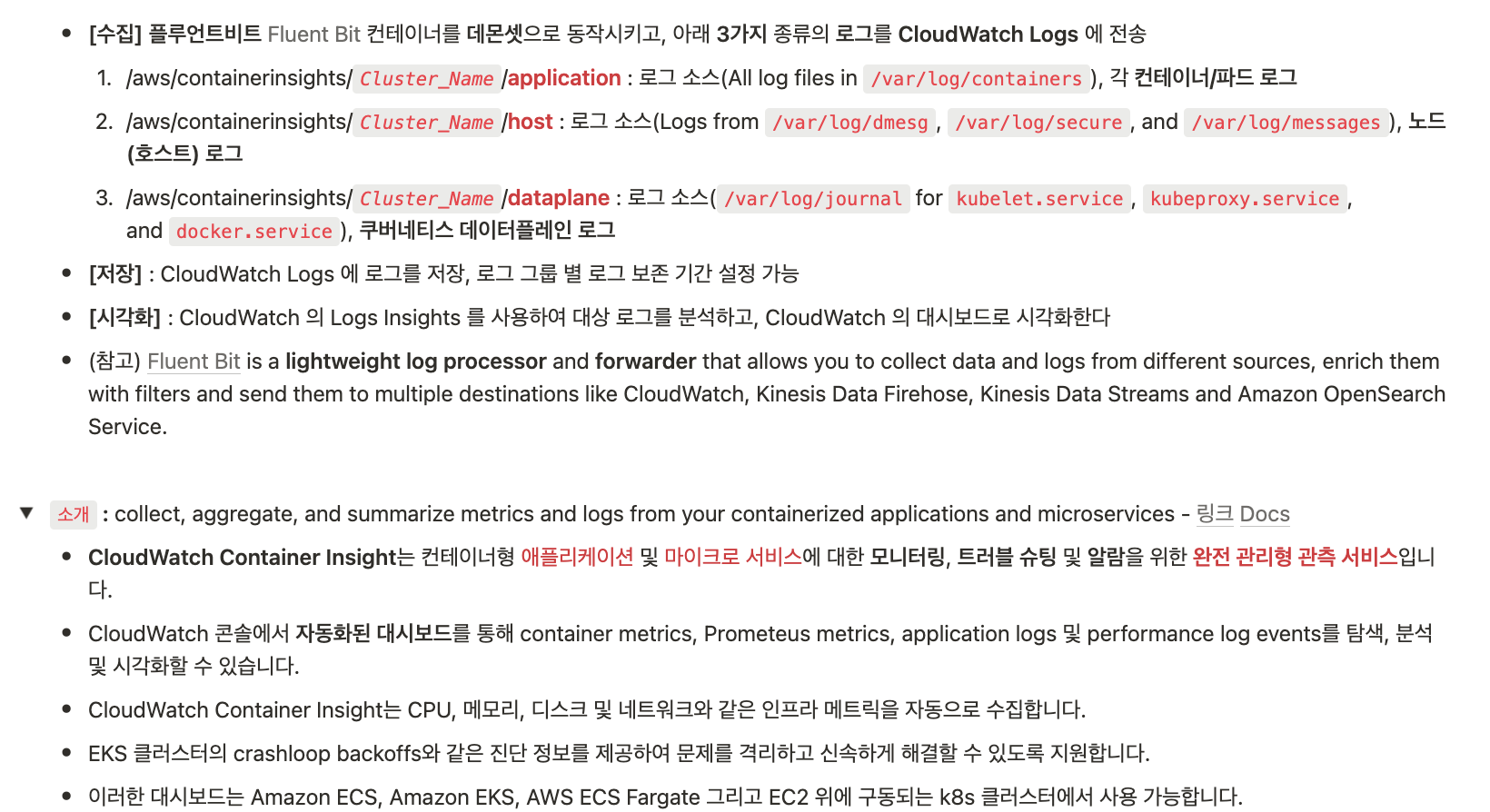

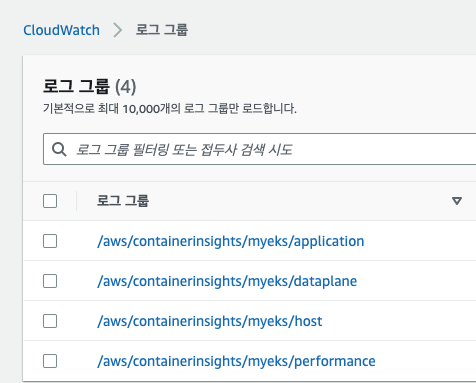

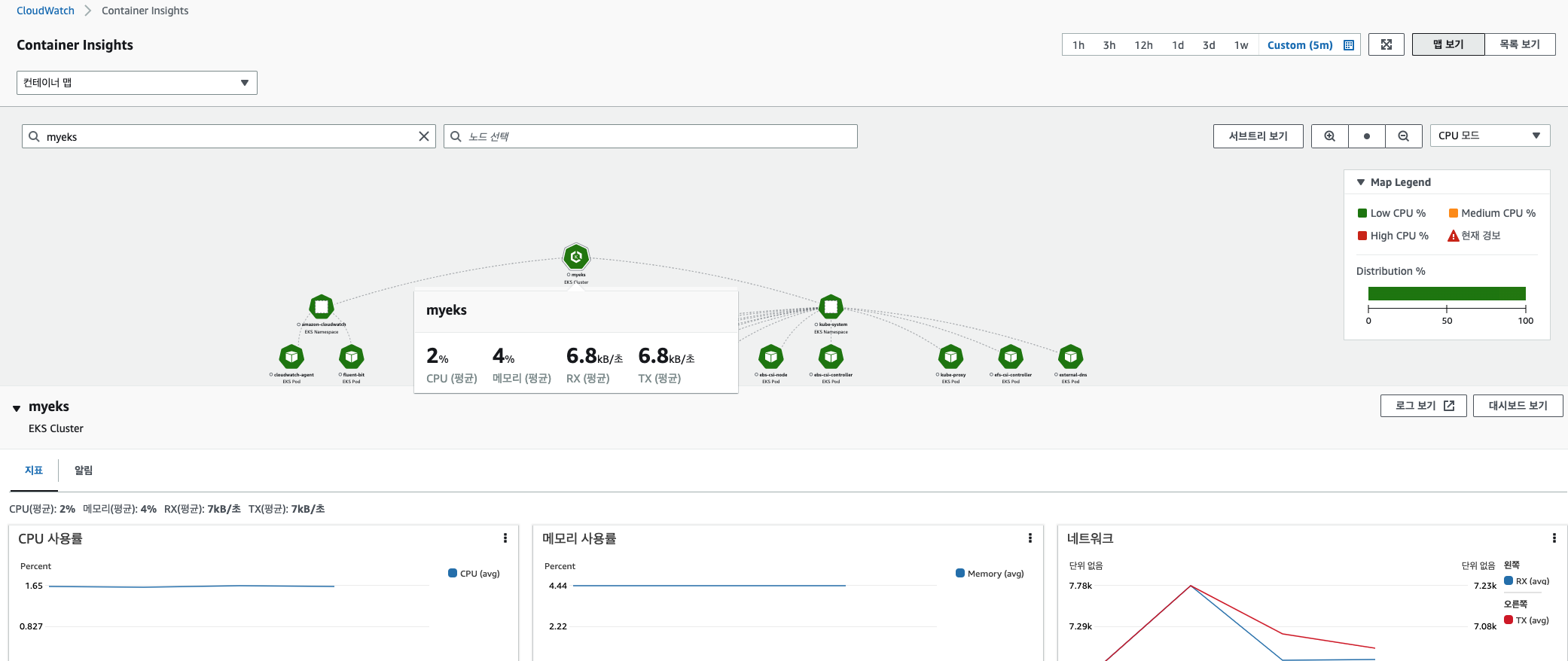

Installing CloudWatch Container Insights: cloudwatch-agent & fluent-bit - Link & Setting up Fluent Bit - Docs

# Install

FluentBitHttpServer='On'

FluentBitHttpPort='2020'

FluentBitReadFromHead='Off'

FluentBitReadFromTail='On'

curl -s https://raw.githubusercontent.com/aws-samples/amazon-cloudwatch-container-insights/latest/k8s-deployment-manifest-templates/deployment-mode/daemonset/container-insights-monitoring/quickstart/cwagent-fluent-bit-quickstart.yaml | sed 's/{{cluster_name}}/'${CLUSTER_NAME}'/;s/{{region_name}}/'${AWS_DEFAULT_REGION}'/;s/{{http_server_toggle}}/"'${FluentBitHttpServer}'"/;s/{{http_server_port}}/"'${FluentBitHttpPort}'"/;s/{{read_from_head}}/"'${FluentBitReadFromHead}'"/;s/{{read_from_tail}}/"'${FluentBitReadFromTail}'"/' | kubectl apply -f -
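The install command above pipes the rendered template straight into `kubectl apply`, so an empty shell variable would silently produce an empty value in the manifest. A minimal sketch of a guarded render step follows, assuming the same variable names as the command above; `render_ci_manifest` is a hypothetical helper, and writing to a local file for review is a design choice of this sketch, not something the quickstart requires.

```shell
# Hypothetical helper: renders the quickstart template read from stdin.
# Refuses to render if any of the substitution variables is empty.
render_ci_manifest() {
  for value in "$CLUSTER_NAME" "$AWS_DEFAULT_REGION" \
               "$FluentBitHttpServer" "$FluentBitHttpPort" \
               "$FluentBitReadFromHead" "$FluentBitReadFromTail"; do
    if [ -z "$value" ]; then
      echo "render_ci_manifest: a required variable is empty" >&2
      return 1
    fi
  done
  # Same substitutions as the one-liner above, one expression per placeholder.
  sed -e "s/{{cluster_name}}/${CLUSTER_NAME}/" \
      -e "s/{{region_name}}/${AWS_DEFAULT_REGION}/" \
      -e "s/{{http_server_toggle}}/\"${FluentBitHttpServer}\"/" \
      -e "s/{{http_server_port}}/\"${FluentBitHttpPort}\"/" \
      -e "s/{{read_from_head}}/\"${FluentBitReadFromHead}\"/" \
      -e "s/{{read_from_tail}}/\"${FluentBitReadFromTail}\"/"
}

# Example flow (not executed here; MANIFEST_URL stands for the quickstart URL above):
#   curl -s "$MANIFEST_URL" | render_ci_manifest > /tmp/ci-quickstart.yaml
#   kubectl diff -f /tmp/ci-quickstart.yaml
#   kubectl apply -f /tmp/ci-quickstart.yaml
```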

# Verify the installation

kubectl get-all -n amazon-cloudwatch

kubectl get ds,pod,cm,sa -n amazon-cloudwatch

kubectl describe clusterrole cloudwatch-agent-role fluent-bit-role # check the ClusterRoles

kubectl describe clusterrolebindings cloudwatch-agent-role-binding fluent-bit-role-binding # check the ClusterRoleBindings

kubectl -n amazon-cloudwatch logs -l name=cloudwatch-agent -f # check the pod logs

kubectl -n amazon-cloudwatch logs -l k8s-app=fluent-bit -f # check the pod logs

for node in $N1 $N2 $N3; do echo ">>>>> $node <<<<<"; ssh ec2-user@$node sudo ss -tnlp | grep fluent-bit; echo; done

# Check the cloudwatch-agent configuration

kubectl describe cm cwagentconfig -n amazon-cloudwatch

{

"agent": {

"region": "ap-northeast-2"

},

"logs": {

"metrics_collected": {

"kubernetes": {

"cluster_name": "myeks",

"metrics_collection_interval": 60

}

},

"force_flush_interval": 5

}

}

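# How the CloudWatch agent pod collects data: look at the HostPath entries under Volumes. >> The host's / is shared through a hostPath. Is that safe from a security standpoint? Could the scope be narrowed?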

kubectl describe -n amazon-cloudwatch ds cloudwatch-agent

...

ssh ec2-user@$N1 sudo tree /dev/disk

...
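To look at the hostPath question above more concretely, it can help to list every volume of the DaemonSet with its host path before judging the scope. This is a minimal sketch; `list_ds_volumes` and `flag_broad_hostpaths` are hypothetical helper names, and treating only `/` as "broad" is an assumption made for illustration.

```shell
# Print "name<TAB>hostPath" for every volume of a DaemonSet.
# Volumes that are not hostPath produce an empty second column.
# usage: list_ds_volumes DAEMONSET NAMESPACE
list_ds_volumes() {
  kubectl get ds "$1" -n "$2" \
    -o jsonpath='{range .spec.template.spec.volumes[*]}{.name}{"\t"}{.hostPath.path}{"\n"}{end}'
}

# Hypothetical filter: mark volumes that expose the node's root filesystem.
flag_broad_hostpaths() {
  awk -F'\t' '
    $2 == ""  { next }                              # skip non-hostPath volumes
    $2 == "/" { print "ROOT\t" $1 "\t" $2; next }   # whole root filesystem
              { print "ok\t" $1 "\t" $2 }
  '
}

# Example call (not executed here):
#   list_ds_volumes cloudwatch-agent amazon-cloudwatch | flag_broad_hostpaths
```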

# Check the Fluent Bit cluster info

kubectl get cm -n amazon-cloudwatch fluent-bit-cluster-info -o yaml | yh

apiVersion: v1

data:

cluster.name: myeks

http.port: "2020"

http.server: "On"

logs.region: ap-northeast-2

read.head: "Off"

read.tail: "On"

kind: ConfigMap

...

# Check the Fluent Bit log INPUT/FILTER/OUTPUT configuration - Link

## Configuration sections: application-log.conf, dataplane-log.conf, fluent-bit.conf, host-log.conf, parsers.conf

kubectl describe cm fluent-bit-config -n amazon-cloudwatch

...

application-log.conf:

----

[INPUT]

Name tail

Tag application.*

Exclude_Path /var/log/containers/cloudwatch-agent*, /var/log/containers/fluent-bit*, /var/log/containers/aws-node*, /var/log/containers/kube-proxy*

Path /var/log/containers/*.log

multiline.parser docker, cri

DB /var/fluent-bit/state/flb_container.db

Mem_Buf_Limit 50MB

Skip_Long_Lines On

Refresh_Interval 10

Rotate_Wait 30

storage.type filesystem

Read_from_Head ${READ_FROM_HEAD}

[FILTER]

Name kubernetes

Match application.*

Kube_URL https://kubernetes.default.svc:443

Kube_Tag_Prefix application.var.log.containers.

Merge_Log On

Merge_Log_Key log_processed

K8S-Logging.Parser On

K8S-Logging.Exclude Off

Labels Off

Annotations Off

Use_Kubelet On

Kubelet_Port 10250

Buffer_Size 0

[OUTPUT]

Name cloudwatch_logs

Match application.*

region ${AWS_REGION}

log_group_name /aws/containerinsights/${CLUSTER_NAME}/application

log_stream_prefix ${HOST_NAME}-

auto_create_group true

extra_user_agent container-insights

...

# How the Fluent Bit pod collects data: look at the HostPath entries under Volumes.

kubectl describe -n amazon-cloudwatch ds fluent-bit

...

ssh ec2-user@$N1 sudo tree /var/log

...

# (Reference) Uninstall

curl -s https://raw.githubusercontent.com/aws-samples/amazon-cloudwatch-container-insights/latest/k8s-deployment-manifest-templates/deployment-mode/daemonset/container-insights-monitoring/quickstart/cwagent-fluent-bit-quickstart.yaml | sed 's/{{cluster_name}}/'${CLUSTER_NAME}'/;s/{{region_name}}/'${AWS_DEFAULT_REGION}'/;s/{{http_server_toggle}}/"'${FluentBitHttpServer}'"/;s/{{http_server_port}}/"'${FluentBitHttpPort}'"/;s/{{read_from_head}}/"'${FluentBitReadFromHead}'"/;s/{{read_from_tail}}/"'${FluentBitReadFromTail}'"/' | kubectl delete -f -

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# kubectl -n amazon-cloudwatch logs -l name=cloudwatch-agent -f

2023-05-20T15:05:40Z I! [processors.ec2tagger] ec2tagger: Initial retrieval of tags succeeded

2023-05-20T15:05:40Z I! [processors.ec2tagger] ec2tagger: EC2 tagger has started, finished initial retrieval of tags and Volumes

2023-05-20T15:05:40Z I! [processors.ec2tagger] ec2tagger: Initial retrieval of tags succeeded

2023-05-20T15:05:40Z I! [processors.ec2tagger] ec2tagger: EC2 tagger has started, finished initial retrieval of tags and Volumes

2023-05-20T15:05:47Z W! [outputs.cloudwatchlogs] Retried 0 time, going to sleep 177.027943ms before retrying.

2023-05-20T15:06:40Z I! number of namespace to running pod num map[amazon-cloudwatch:6 kube-system:22]

2023-05-20T15:07:40Z I! number of namespace to running pod num map[amazon-cloudwatch:6 kube-system:22]

2023-05-20T15:08:40Z I! number of namespace to running pod num map[amazon-cloudwatch:6 kube-system:22]

2023-05-20T15:08:52Z I! [processors.ec2tagger] ec2tagger: Refresh is no longer needed, stop refreshTicker.

2023-05-20T15:08:52Z I! [processors.ec2tagger] ec2tagger: Refresh is no longer needed, stop refreshTicker.

2023-05-20T15:05:40Z I! [processors.ec2tagger] ec2tagger: Check EC2 Metadata.

2023-05-20T15:05:40Z I! [processors.ec2tagger] ec2tagger: EC2 tagger has started initialization.

I0520 15:05:40.716153 1 leaderelection.go:248] attempting to acquire leader lease amazon-cloudwatch/cwagent-clusterleader...

2023-05-20T15:05:40Z I! k8sapiserver Switch New Leader: ip-192-168-1-181.ap-northeast-2.compute.internal

W0520 15:05:40.890093 1 manager.go:291] Could not configure a source for OOM detection, disabling OOM events: open /dev/kmsg: no such file or directory

2023-05-20T15:05:41Z I! [processors.ec2tagger] ec2tagger: Initial retrieval of tags succeeded

2023-05-20T15:05:41Z I! [processors.ec2tagger] ec2tagger: EC2 tagger has started, finished initial retrieval of tags and Volumes

2023-05-20T15:05:41Z I! [processors.ec2tagger] ec2tagger: Initial retrieval of tags succeeded

2023-05-20T15:05:41Z I! [processors.ec2tagger] ec2tagger: EC2 tagger has started, finished initial retrieval of tags and Volumes

2023-05-20T15:05:47Z W! [outputs.cloudwatchlogs] Retried 0 time, going to sleep 127.79391ms before retrying.

2023-05-20T15:05:40Z I! Cannot get the leader config map: configmaps "cwagent-clusterleader" not found, try to create the config map...

2023-05-20T15:05:40Z I! configMap: &ConfigMap{ObjectMeta:{ 0 0001-01-01 00:00:00 +0000 UTC <nil> <nil> map[] map[] [] [] []},Data:map[string]string{},BinaryData:map[string][]byte{},Immutable:nil,}, err: configmaps "cwagent-clusterleader" already exists

I0520 15:05:40.376969 1 leaderelection.go:248] attempting to acquire leader lease amazon-cloudwatch/cwagent-clusterleader...

2023-05-20T15:05:40Z I! k8sapiserver Switch New Leader: ip-192-168-1-181.ap-northeast-2.compute.internal

W0520 15:05:40.470397 1 manager.go:291] Could not configure a source for OOM detection, disabling OOM events: open /dev/kmsg: no such file or directory

2023-05-20T15:05:40Z I! [processors.ec2tagger] ec2tagger: Initial retrieval of tags succeeded

2023-05-20T15:05:40Z I! [processors.ec2tagger] ec2tagger: EC2 tagger has started, finished initial retrieval of tags and Volumes

2023-05-20T15:05:40Z I! [processors.ec2tagger] ec2tagger: Initial retrieval of tags succeeded

2023-05-20T15:05:40Z I! [processors.ec2tagger] ec2tagger: EC2 tagger has started, finished initial retrieval of tags and Volumes

2023-05-20T15:05:47Z W! [outputs.cloudwatchlogs] Retried 0 time, going to sleep 113.374123ms before retrying.

^Z

[1]+ Stopped kubectl -n amazon-cloudwatch logs -l name=cloudwatch-agent -f

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# for node in $N1 $N2 $N3; do echo ">>>>> $node <<<<<"; ssh ec2-user@$node sudo ss -tnlp | grep fluent-bit; echo; done

>>>>> 192.168.1.181 <<<<<

LISTEN 0 128 0.0.0.0:2020 0.0.0.0:* users:(("fluent-bit",pid=17593,fd=200))

>>>>> 192.168.2.191 <<<<<

LISTEN 0 128 0.0.0.0:2020 0.0.0.0:* users:(("fluent-bit",pid=16064,fd=191))

>>>>> 192.168.3.176 <<<<<

LISTEN 0 128 0.0.0.0:2020 0.0.0.0:* users:(("fluent-bit",pid=17644,fd=200))

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#
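The `ss` output above shows fluent-bit listening on port 2020 on every node, which matches `FluentBitHttpPort`. A minimal sketch for extracting that port so it can be compared in a script follows; `listen_ports_of` is a hypothetical helper that only parses `ss -tnlp` text in the layout shown above.

```shell
# Hypothetical helper: reads `ss -tnlp` output on stdin and prints the
# listening port(s) owned by the named process.
# usage: ... | listen_ports_of PROCESS_NAME
listen_ports_of() {
  awk -v proc="$1" '
    $1 == "LISTEN" && index($0, "\"" proc "\"") {
      n = split($4, parts, ":")   # field 4 is the local address; the port is its last piece
      print parts[n]
    }
  '
}

# Example call (not executed here):
#   for node in $N1 $N2 $N3; do
#     ssh ec2-user@$node sudo ss -tnlp | listen_ports_of fluent-bit
#   done
```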

====

cwagentconfig.json:

----

{

"agent": {

"region": "ap-northeast-2"

},

"logs": {

"metrics_collected": {

"kubernetes": {

"cluster_name": "myeks",

"metrics_collection_interval": 60

}

},

"force_flush_interval": 5

}

}

BinaryData

====

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# kubectl get cm -n amazon-cloudwatch fluent-bit-cluster-info -o yaml | yh

apiVersion: v1

data:

cluster.name: myeks

http.port: "2020"

http.server: "On"

logs.region: ap-northeast-2

read.head: "Off"

read.tail: "On"

kind: ConfigMap

metadata:

annotations:

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"v1","data":{"cluster.name":"myeks","http.port":"2020","http.server":"On","logs.region":"ap-northeast-2","read.head":"Off","read.tail":"On"},"kind":"ConfigMap","metadata":{"annotations":{},"name":"fluent-bit-cluster-info","namespace":"amazon-cloudwatch"}}

creationTimestamp: "2023-05-20T15:05:31Z"

name: fluent-bit-cluster-info

namespace: amazon-cloudwatch

resourceVersion: "23257"

uid: 299f3f68-2546-4ebe-ac4a-9a241620a595

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# kubectl describe cm fluent-bit-config -n amazon-cloudwatch

Name: fluent-bit-config

Namespace: amazon-cloudwatch

Labels: k8s-app=fluent-bit

Annotations: <none>

Data

====

application-log.conf:

----

[INPUT]

Name tail

Tag application.*

Exclude_Path /var/log/containers/cloudwatch-agent*, /var/log/containers/fluent-bit*, /var/log/containers/aws-node*, /var/log/containers/kube-proxy*

Path /var/log/containers/*.log

multiline.parser docker, cri

DB /var/fluent-bit/state/flb_container.db

Mem_Buf_Limit 50MB

Skip_Long_Lines On

Refresh_Interval 10

Rotate_Wait 30

storage.type filesystem

Read_from_Head ${READ_FROM_HEAD}

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# kubectl describe -n amazon-cloudwatch ds fluent-bit

Name: fluent-bit

Selector: k8s-app=fluent-bit

Node-Selector: <none>

Labels: k8s-app=fluent-bit

kubernetes.io/cluster-service=true

version=v1

Annotations: deprecated.daemonset.template.generation: 1

Desired Number of Nodes Scheduled: 3

Current Number of Nodes Scheduled: 3

Number of Nodes Scheduled with Up-to-date Pods: 3

Number of Nodes Scheduled with Available Pods: 3

Number of Nodes Misscheduled: 0

Pods Status: 3 Running / 0 Waiting / 0 Succeeded / 0 Failed

Pod Template:

Labels: k8s-app=fluent-bit

kubernetes.io/cluster-service=true

version=v1

Service Account: fluent-bit

Containers:

fluent-bit:

Image: public.ecr.aws/aws-observability/aws-for-fluent-bit:stable

Port: <none>

Host Port: <none>

Limits:

memory: 200Mi

Requests:

cpu: 500m

memory: 100Mi

Environment:

AWS_REGION: <set to the key 'logs.region' of config map 'fluent-bit-cluster-info'> Optional: false

CLUSTER_NAME: <set to the key 'cluster.name' of config map 'fluent-bit-cluster-info'> Optional: false

HTTP_SERVER: <set to the key 'http.server' of config map 'fluent-bit-cluster-info'> Optional: false

HTTP_PORT: <set to the key 'http.port' of config map 'fluent-bit-cluster-info'> Optional: false

READ_FROM_HEAD: <set to the key 'read.head' of config map 'fluent-bit-cluster-info'> Optional: false

READ_FROM_TAIL: <set to the key 'read.tail' of config map 'fluent-bit-cluster-info'> Optional: false

HOST_NAME: (v1:spec.nodeName)

HOSTNAME: (v1:metadata.name)

CI_VERSION: k8s/1.3.14

Mounts:

/fluent-bit/etc/ from fluent-bit-config (rw)

/run/log/journal from runlogjournal (ro)

/var/fluent-bit/state from fluentbitstate (rw)

/var/lib/docker/containers from varlibdockercontainers (ro)

/var/log from varlog (ro)

/var/log/dmesg from dmesg (ro)

Volumes:

fluentbitstate:

Type: HostPath (bare host directory volume)

Path: /var/fluent-bit/state

HostPathType:

varlog:

Type: HostPath (bare host directory volume)

Path: /var/log

HostPathType:

varlibdockercontainers:

Type: HostPath (bare host directory volume)

Path: /var/lib/docker/containers

HostPathType:

fluent-bit-config:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: fluent-bit-config

Optional: false

runlogjournal:

Type: HostPath (bare host directory volume)

Path: /run/log/journal

HostPathType:

dmesg:

Type: HostPath (bare host directory volume)

Path: /var/log/dmesg

HostPathType:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 7m daemonset-controller Created pod: fluent-bit-65sfg

Normal SuccessfulCreate 7m daemonset-controller Created pod: fluent-bit-8rjl2

Normal SuccessfulCreate 7m daemonset-controller Created pod: fluent-bit-6p6kd

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

Logs Insights

# Application log errors by container name

# Select the log group: /aws/containerinsights/<CLUSTER_NAME>/application

stats count() as error_count by kubernetes.container_name

| filter stream="stderr"

| sort error_count desc
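The query above can also be run from the bastion host instead of the console. This is a minimal sketch using `aws logs start-query` and `aws logs get-query-results`; `ci_log_group` and `run_insights_query` are hypothetical helper names, and the fixed 5-second wait is a simplification (a larger query may still be in the Running state when results are fetched).

```shell
# Build a Container Insights log group name from cluster name and kind
# (application, dataplane, performance, ...).
ci_log_group() {
  printf '/aws/containerinsights/%s/%s\n' "$1" "$2"
}

# usage: run_insights_query LOG_GROUP QUERY_STRING [LOOKBACK_SECONDS]
run_insights_query() {
  riq_now=$(date +%s)
  riq_start=$((riq_now - ${3:-3600}))   # default lookback: one hour
  riq_id=$(aws logs start-query \
    --log-group-name "$1" \
    --start-time "$riq_start" --end-time "$riq_now" \
    --query-string "$2" \
    --query 'queryId' --output text) || return 1
  sleep 5   # crude wait; poll get-query-results again if the status is not Complete
  aws logs get-query-results --query-id "$riq_id"
}

# Example call (not executed here):
#   run_insights_query "$(ci_log_group "$CLUSTER_NAME" application)" \
#     'stats count() as error_count by kubernetes.container_name | filter stream="stderr" | sort error_count desc'
```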

# All Kubelet error/warning logs for a given EKS worker node

# Select the log group: /aws/containerinsights/<CLUSTER_NAME>/dataplane

fields @timestamp, @message, ec2_instance_id

| filter message =~ /.*(E|W)[0-9]{4}.*/ and ec2_instance_id="<YOUR INSTANCE ID>"

| sort @timestamp desc

# Kubelet errors/warning count per EKS worker node in the cluster

# Select the log group: /aws/containerinsights/<CLUSTER_NAME>/dataplane

fields @timestamp, @message, ec2_instance_id

| filter message =~ /.*(E|W)[0-9]{4}.*/

| stats count(*) as error_count by ec2_instance_id

# performance log group

# Select the log group: /aws/containerinsights/<CLUSTER_NAME>/performance

# Average CPU utilization per node

STATS avg(node_cpu_utilization) as avg_node_cpu_utilization by NodeName

| SORT avg_node_cpu_utilization DESC

# Restart count per pod

STATS avg(number_of_container_restarts) as avg_number_of_container_restarts by PodName

| SORT avg_number_of_container_restarts DESC

# Requested pods compared with running pods

fields @timestamp, @message

| sort @timestamp desc

| filter Type="Pod"

| stats min(pod_number_of_containers) as requested, min(pod_number_of_running_containers) as running, ceil(avg(pod_number_of_containers-pod_number_of_running_containers)) as pods_missing by kubernetes.pod_name

| sort pods_missing desc

# Cluster node failure count

stats avg(cluster_failed_node_count) as CountOfNodeFailures

| filter Type="Cluster"

| sort @timestamp desc

# CPU usage per pod (grouped by container name)

stats pct(container_cpu_usage_total, 50) as CPUPercMedian by kubernetes.container_name

| filter Type="Container"

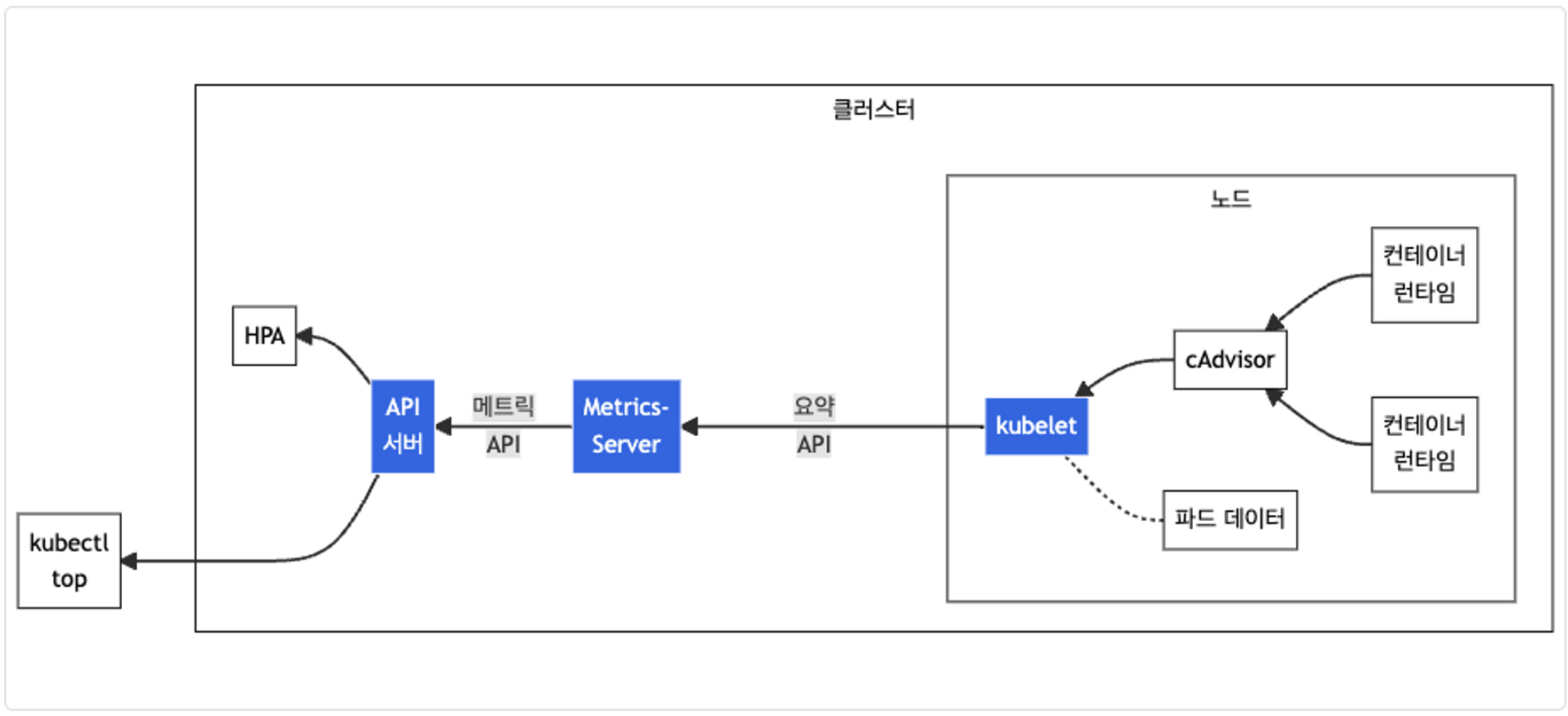

| sort CPUPercMedian desc✍ Metrics-server & kwatch & botkube

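Checking Metrics-server: a cluster add-on component that collects and aggregates the resource metrics gathered from the kubelet - EKS Github Docs CMD

- cAdvisor: a daemon built into the kubelet that collects, aggregates, and exposes container metrics

# Deploy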

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

# Check the metrics server: metrics are fetched through cAdvisor at 15-second intervals

kubectl get pod -n kube-system -l k8s-app=metrics-server

kubectl api-resources | grep metrics

kubectl get apiservices |egrep '(AVAILABLE|metrics)'

# Check node metrics

kubectl top node

# Check pod metrics

kubectl top pod -A

kubectl top pod -n kube-system --sort-by='cpu'

kubectl top pod -n kube-system --sort-by='memory'

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# kubectl get pod -n kube-system -l k8s-app=metrics-server

NAME READY STATUS RESTARTS AGE

metrics-server-6bf466fbf5-7262h 0/1 Running 0 10s

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# kubectl api-resources | grep metrics

error: unable to retrieve the complete list of server APIs: metrics.k8s.io/v1beta1: the server is currently unable to handle the request

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# kubectl get apiservices |egrep '(AVAILABLE|metrics)'

NAME SERVICE AVAILABLE AGE

v1beta1.metrics.k8s.io kube-system/metrics-server False (MissingEndpoints) 13s
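The output above shows the metrics API still unavailable (`MissingEndpoints`) about ten seconds after the deploy, so `kubectl top` run immediately would fail. A minimal sketch of a generic polling helper follows; `retry` is a hypothetical function defined here, and the attempt count and delay in the example are arbitrary.

```shell
# Hypothetical helper: run a command until it succeeds or attempts run out.
# usage: retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
retry() {
  retry_attempts=$1
  retry_delay=$2
  shift 2
  retry_i=1
  while :; do
    if "$@"; then
      return 0                      # command succeeded
    fi
    if [ "$retry_i" -ge "$retry_attempts" ]; then
      return 1                      # give up after the last attempt
    fi
    retry_i=$((retry_i + 1))
    sleep "$retry_delay"
  done
}

# Example call (not executed here): wait up to about a minute for metrics.
#   retry 12 5 kubectl top node
```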

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# kubectl top pod -A

NAMESPACE NAME CPU(cores) MEMORY(bytes)

kube-system aws-load-balancer-controller-5f99d5f58f-hjjcv 3m 24Mi

kube-system aws-load-balancer-controller-5f99d5f58f-zs2b7 1m 19Mi

kube-system aws-node-gzngx 3m 38Mi

kube-system aws-node-qjvmk 3m 38Mi

kube-system aws-node-vcb5g 6m 38Mi

kube-system coredns-6777fcd775-j4r7s 2m 12Mi

kube-system coredns-6777fcd775-l7xsw 2m 13Mi

kube-system ebs-csi-controller-67658f895c-8rkzd 5m 54Mi

kube-system ebs-csi-controller-67658f895c-bnzjh 2m 51Mi

kube-system ebs-csi-node-2lt2l 3m 23Mi

kube-system ebs-csi-node-d8rwq 2m 20Mi

kube-system ebs-csi-node-jdgxb 2m 20Mi

kube-system efs-csi-controller-6f64dcc5dc-txm97 3m 44Mi

kube-system efs-csi-controller-6f64dcc5dc-vw8zh 2m 41Mi

kube-system efs-csi-node-5hth6 3m 37Mi

kube-system efs-csi-node-df45x 3m 38Mi

kube-system efs-csi-node-xxjm4 3m 38Mi

kube-system external-dns-d5d957f98-tspnv 1m 16Mi

kube-system kube-ops-view-558d87b798-np7z8 12m 35Mi

kube-system kube-proxy-7hctc 3m 10Mi

kube-system kube-proxy-kgh6p 3m 10Mi

kube-system kube-proxy-qllq2 4m 10Mi

kube-system metrics-server-6bf466fbf5-7262h 9m 17Mi

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# kubectl top pod -n kube-system --sort-by='cpu'

NAME CPU(cores) MEMORY(bytes)

kube-ops-view-558d87b798-np7z8 11m 35Mi

metrics-server-6bf466fbf5-7262h 4m 17Mi

aws-node-gzngx 4m 38Mi

aws-node-qjvmk 4m 38Mi

ebs-csi-controller-67658f895c-8rkzd 4m 54Mi

efs-csi-node-df45x 4m 38Mi

efs-csi-controller-6f64dcc5dc-txm97 3m 44Mi

aws-node-vcb5g 3m 38Mi

aws-load-balancer-controller-5f99d5f58f-hjjcv 3m 24Mi

efs-csi-node-xxjm4 3m 37Mi

efs-csi-node-5hth6 3m 37Mi

coredns-6777fcd775-j4r7s 2m 12Mi

ebs-csi-node-jdgxb 2m 20Mi

efs-csi-controller-6f64dcc5dc-vw8zh 2m 41Mi

ebs-csi-node-d8rwq 2m 20Mi

ebs-csi-node-2lt2l 2m 21Mi

ebs-csi-controller-67658f895c-bnzjh 2m 51Mi

coredns-6777fcd775-l7xsw 2m 12Mi

external-dns-d5d957f98-tspnv 1m 16Mi

kube-proxy-7hctc 1m 10Mi

kube-proxy-kgh6p 1m 10Mi

kube-proxy-qllq2 1m 10Mi

aws-load-balancer-controller-5f99d5f58f-zs2b7 1m 19Mi

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]# kubectl top pod -n kube-system --sort-by='memory'

NAME CPU(cores) MEMORY(bytes)

ebs-csi-controller-67658f895c-8rkzd 4m 54Mi

ebs-csi-controller-67658f895c-bnzjh 2m 51Mi

efs-csi-controller-6f64dcc5dc-txm97 3m 44Mi

efs-csi-controller-6f64dcc5dc-vw8zh 2m 41Mi

efs-csi-node-df45x 4m 38Mi

aws-node-gzngx 4m 38Mi

aws-node-vcb5g 3m 38Mi

aws-node-qjvmk 4m 38Mi

efs-csi-node-xxjm4 3m 37Mi

efs-csi-node-5hth6 3m 37Mi

kube-ops-view-558d87b798-np7z8 11m 35Mi

aws-load-balancer-controller-5f99d5f58f-hjjcv 3m 24Mi

ebs-csi-node-2lt2l 2m 21Mi

ebs-csi-node-d8rwq 2m 20Mi

ebs-csi-node-jdgxb 2m 20Mi

aws-load-balancer-controller-5f99d5f58f-zs2b7 1m 19Mi

metrics-server-6bf466fbf5-7262h 4m 17Mi

external-dns-d5d957f98-tspnv 1m 16Mi

coredns-6777fcd775-l7xsw 2m 12Mi

coredns-6777fcd775-j4r7s 2m 12Mi

kube-proxy-7hctc 1m 10Mi

kube-proxy-kgh6p 1m 10Mi

kube-proxy-qllq2 1m 10Mi
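The sorted listings above show per-pod usage; a namespace total is sometimes handier. This is a minimal sketch that sums the MEMORY column of `kubectl top pod --no-headers` output. `sum_top_memory` is a hypothetical helper, and it assumes every value is reported in Mi, as in the output above.

```shell
# Hypothetical helper: sum the last column (memory, in Mi) of
# `kubectl top pod --no-headers` output read from stdin.
sum_top_memory() {
  awk '
    { mem = $NF; sub(/Mi$/, "", mem); total += mem }   # strip the unit, accumulate
    END { printf "%dMi\n", total }
  '
}

# Example call (not executed here):
#   kubectl top pod -n kube-system --no-headers | sum_top_memory
```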

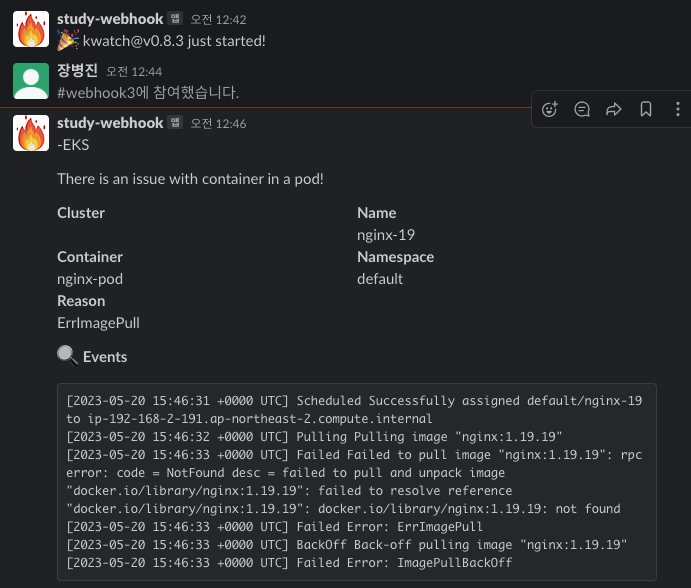

(primedo_eks@myeks:default) [root@myeks-bastion-EC2 ~]#

# Create the ConfigMap

cat <<EOT > ~/kwatch-config.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: kwatch
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: kwatch
  namespace: kwatch
data:
  config.yaml: |
    alert:
      slack:
        webhook: 'https://hooks.slack.com/ser~'
        title: $NICK-EKS
        #text:
    pvcMonitor:
      enabled: true
      interval: 5
      threshold: 70
EOT

kubectl apply -f ~/kwatch-config.yaml

# Deploy

kubectl apply -f https://raw.githubusercontent.com/abahmed/kwatch/v0.8.3/deploy/deploy.yaml
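One detail of the kwatch ConfigMap step is easy to miss: the heredoc delimiter `EOT` is unquoted, so the shell expands `$NICK` at the moment the file is written, and an unset `NICK` would leave the title as `-EKS`. A minimal sketch of the difference between an unquoted and a quoted delimiter follows; the fallback value `demo` is hypothetical and exists only so the example runs on its own.

```shell
# Hypothetical fallback so the example is self-contained.
NICK=${NICK:-demo}

# Unquoted delimiter: $NICK is expanded by the shell.
expanded=$(cat <<EOT
title: $NICK-EKS
EOT
)

# Quoted delimiter: the text is kept literally, no expansion.
literal=$(cat <<'EOT'
title: $NICK-EKS
EOT
)

echo "$expanded"
echo "$literal"
```

Running `grep title ~/kwatch-config.yaml` after writing the file is a quick way to confirm that the expansion produced the intended value.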

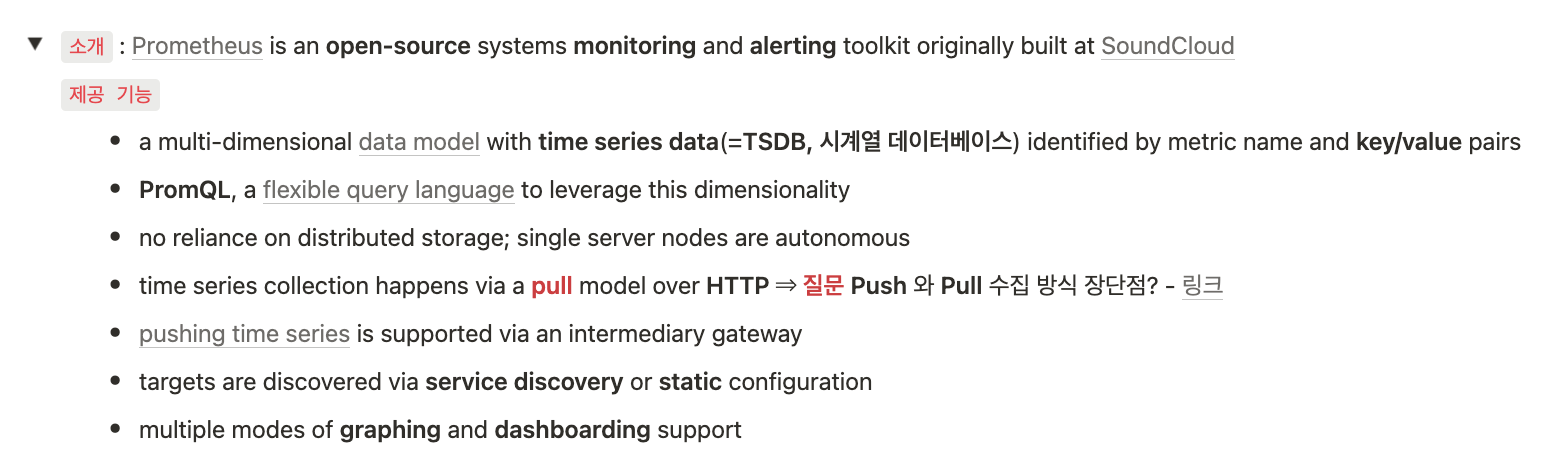

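✍ Prometheus stack

The Prometheus/Kubernetes "twin conspiracy theory": abbreviated as P8S vs K8S, both 10 letters long, and logos in complementary colors (orange vs blue)

Installing the Prometheus stack: provides the components needed for monitoring as a single chart (stack) ← visualization (Grafana), alerting policies (alert thresholds, alert severity), and so on - Helm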

kube-prometheus-stack collects Kubernetes manifests, Grafana dashboards, and Prometheus rules combined with documentation and scripts to provide easy to operate end-to-end Kubernetes cluster monitoring with Prometheus using the Prometheus Operator.

# Monitoring

kubectl create ns monitoring

watch kubectl get pod,pvc,svc,ingress -n monitoring

# Look up the certificate ARN for the region in use

CERT_ARN=`aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text`

echo $CERT_ARN

# Add the repo

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

# Create the values file

cat <<EOT > monitor-values.yaml
prometheus:
  prometheusSpec:
    podMonitorSelectorNilUsesHelmValues: false
    serviceMonitorSelectorNilUsesHelmValues: false
    retention: 5d
    retentionSize: "10GiB"

  ingress:
    enabled: true
    ingressClassName: alb
    hosts:
      - prometheus.$MyDomain
    paths:
      - /*
    annotations:
      alb.ingress.kubernetes.io/scheme: internet-facing
      alb.ingress.kubernetes.io/target-type: ip
      alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
      alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
      alb.ingress.kubernetes.io/success-codes: 200-399
      alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
      alb.ingress.kubernetes.io/group.name: study
      alb.ingress.kubernetes.io/ssl-redirect: '443'

grafana:
  defaultDashboardsTimezone: Asia/Seoul
  adminPassword: prom-operator

  ingress:
    enabled: true
    ingressClassName: alb
    hosts:
      - grafana.$MyDomain
    paths:
      - /*
    annotations:
      alb.ingress.kubernetes.io/scheme: internet-facing
      alb.ingress.kubernetes.io/target-type: ip
      alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
      alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
      alb.ingress.kubernetes.io/success-codes: 200-399
      alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
      alb.ingress.kubernetes.io/group.name: study
      alb.ingress.kubernetes.io/ssl-redirect: '443'

defaultRules:
  create: false
kubeControllerManager:
  enabled: false
kubeEtcd:
  enabled: false
kubeScheduler:
  enabled: false
alertmanager:
  enabled: false

# alertmanager:
#   ingress:
#     enabled: true
#     ingressClassName: alb
#     hosts:
#       - alertmanager.$MyDomain
#     paths:
#       - /*
#     annotations:
#       alb.ingress.kubernetes.io/scheme: internet-facing
#       alb.ingress.kubernetes.io/target-type: ip
#       alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
#       alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
#       alb.ingress.kubernetes.io/success-codes: 200-399
#       alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
#       alb.ingress.kubernetes.io/group.name: study
#       alb.ingress.kubernetes.io/ssl-redirect: '443'
EOT

cat monitor-values.yaml | yh
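The values file is written through an unquoted heredoc, so the shell expands $MyDomain and $CERT_ARN at the moment the file is created. If either variable is empty, the host or the certificate annotation silently ends up empty as well; also note that the list-certificates query returns every certificate ARN in the region, so CERT_ARN may hold more than one value. A minimal guard to run before generating the file could look like the sketch below. The helper name require_vars is hypothetical and not part of the original lab.

```shell
# Hypothetical helper: succeed only if every named variable is set and non-empty.
require_vars() {
  for name in "$@"; do
    # Read the value of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing or empty: $name" >&2
      return 1
    fi
  done
}

# Usage, before running the 'cat <<EOT > monitor-values.yaml' step:
# require_vars MyDomain CERT_ARN && echo "variables look fine"
```

If the guard fails, set the variable again and regenerate monitor-values.yaml before running helm install.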

# Deploy

helm install kube-prometheus-stack prometheus-community/kube-prometheus-stack --version 45.27.2 \
--set prometheus.prometheusSpec.scrapeInterval='15s' --set prometheus.prometheusSpec.evaluationInterval='15s' \
-f monitor-values.yaml --namespace monitoring

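# Verify

## alertmanager-0 : generates alerts from predefined rules (e.g. node down, pod Pending) and sends them to a notification channel such as Slack (disabled in this lab by alertmanager.enabled: false)

## grafana : Prometheus stores the metrics; Grafana visualizes them

## prometheus-0 : monitored workloads expose metrics through a separate, sidecar-style 'exporter'; Prometheus pulls them and stores them in its built-in time-series database

## node-exporter : converts each node's resource usage (network, storage, and so on) into metrics and exposes them

## operator : provides CRDs that make it easier to manage alerting rules (PrometheusRule) and to add application monitoring targets

## kube-state-metrics : a pod that converts the state of the Kubernetes cluster (kube-state) into metrics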

helm list -n monitoring

kubectl get pod,svc,ingress -n monitoring

kubectl get-all -n monitoring

kubectl get prometheus,servicemonitors -n monitoring

kubectl get crd | grep monitoring

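# Uninstall the Helm release (cleanup, once you are done with the lab)

helm uninstall -n monitoring kube-prometheus-stack

# Delete the CRDs (helm uninstall leaves them in place)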

kubectl delete crd alertmanagerconfigs.monitoring.coreos.com

kubectl delete crd alertmanagers.monitoring.coreos.com

kubectl delete crd podmonitors.monitoring.coreos.com

kubectl delete crd probes.monitoring.coreos.com

kubectl delete crd prometheuses.monitoring.coreos.com

kubectl delete crd prometheusrules.monitoring.coreos.com

kubectl delete crd servicemonitors.monitoring.coreos.com

kubectl delete crd thanosrulers.monitoring.coreos.com

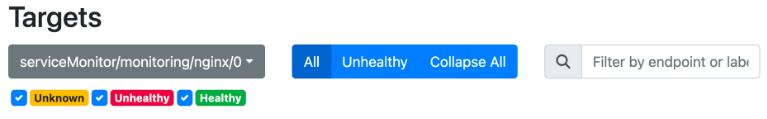

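- Checking the Prometheus configuration: Status → Service Discovery. Every endpoint that Prometheus can reach is **discovered automatically**; the discovery rules are defined in the configuration file.

- Example: for serviceMonitor/monitoring/kube-prometheus-stack-apiserver/0, the target `__address__="192.168.1.53:443"` **is discovered automatically once it is reachable**

- Querying metrics as a graph: on the Graph tab, enter the PromQL query below (total CPU usage across all cluster nodes), run it, and check the Graph view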

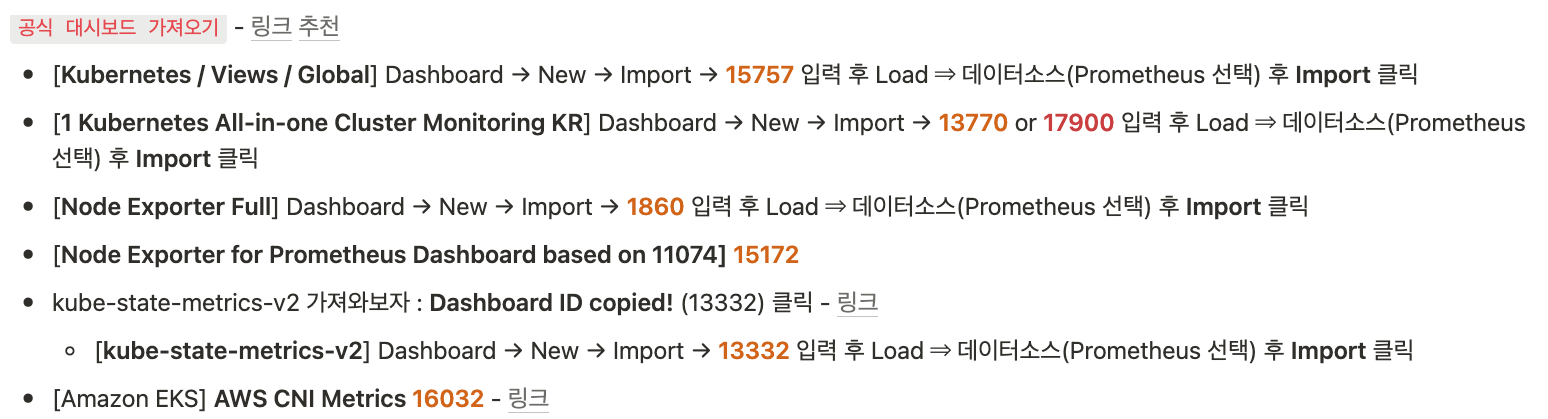
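The text does not spell out the query, so the following is only a sketch. It assumes the commonly used node-exporter expression for cluster-wide CPU utilization (1 minus the average idle rate) and sends it to the instant-query endpoint of the Prometheus HTTP API, /api/v1/query. The names prom_query and PROM_URL are hypothetical; the same expression can simply be pasted into the Graph tab.

```shell
# Assumed PromQL: fraction of CPU time not spent idle, averaged over all
# CPUs of all nodes, based on the last 1 minute of node-exporter samples.
QUERY='1 - avg(rate(node_cpu_seconds_total{mode="idle"}[1m]))'

# Hypothetical helper: run an instant query against the Prometheus HTTP API.
# PROM_URL is an assumption, e.g. the ingress host https://prometheus.$MyDomain
prom_query() {
  curl -sG "${PROM_URL}/api/v1/query" --data-urlencode "query=$1"
}

# Usage (needs network access to the Prometheus ingress):
# PROM_URL="https://prometheus.$MyDomain" prom_query "$QUERY"
```

The response is JSON; the value under data.result is a fraction between 0 and 1, so multiply by 100 for a percentage.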

- Alternatively, click the globe icon (Metrics Explorer) to list every metric, then click a metric to inspect it

✍ Grafana

- Grafana open source software enables you to query, visualize, alert on, and explore your metrics, logs, and traces wherever they are stored.

- Grafana OSS provides you with tools to turn your time-series database (TSDB) data into insightful graphs and visualizations.

- Grafana is a visualization tool and does not store data itself → in this lab, Prometheus is the data source

- Check the access information and log in: default account - admin / prom-operator

# Check the Grafana version

kubectl exec -it -n monitoring deploy/kube-prometheus-stack-grafana -- grafana-cli --version

grafana cli version 9.5.1

# Check the ingress

kubectl get ingress -n monitoring kube-prometheus-stack-grafana

kubectl describe ingress -n monitoring kube-prometheus-stack-grafana

# Open the ingress domain in a browser: default account - admin / prom-operator

echo -e "Grafana Web URL = https://grafana.$MyDomain"

# Check the Prometheus service address

kubectl get svc,ep -n monitoring kube-prometheus-stack-prometheus

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-prometheus-stack-prometheus ClusterIP 10.100.143.5 <none> 9090/TCP 21m

NAME ENDPOINTS AGE

endpoints/kube-prometheus-stack-prometheus 192.168.2.93:9090 21m

# Deploy a test pod

cat <<EOF | kubectl create -f -
apiVersion: v1
kind: Pod
metadata:
  name: netshoot-pod
spec:
  containers:
  - name: netshoot-pod
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOF

kubectl get pod netshoot-pod

# Check connectivity

kubectl exec -it netshoot-pod -- nslookup kube-prometheus-stack-prometheus.monitoring

kubectl exec -it netshoot-pod -- curl -s kube-prometheus-stack-prometheus.monitoring:9090/graph -v ; echo

# Delete the test pod

kubectl delete pod netshoot-pod

# Deploy the PodMonitor

cat <<EOF | kubectl create -f -
apiVersion: monitoring.coreos.com/v1
kind: PodMonitor
metadata:
  name: aws-cni-metrics
  namespace: kube-system
spec:
  jobLabel: k8s-app
  namespaceSelector:
    matchNames:
      - kube-system
  podMetricsEndpoints:
  - interval: 30s
    path: /metrics
    port: metrics
  selector:
    matchLabels:
      k8s-app: aws-node
EOF

# Verify the PodMonitor

kubectl get podmonitor -n kube-system