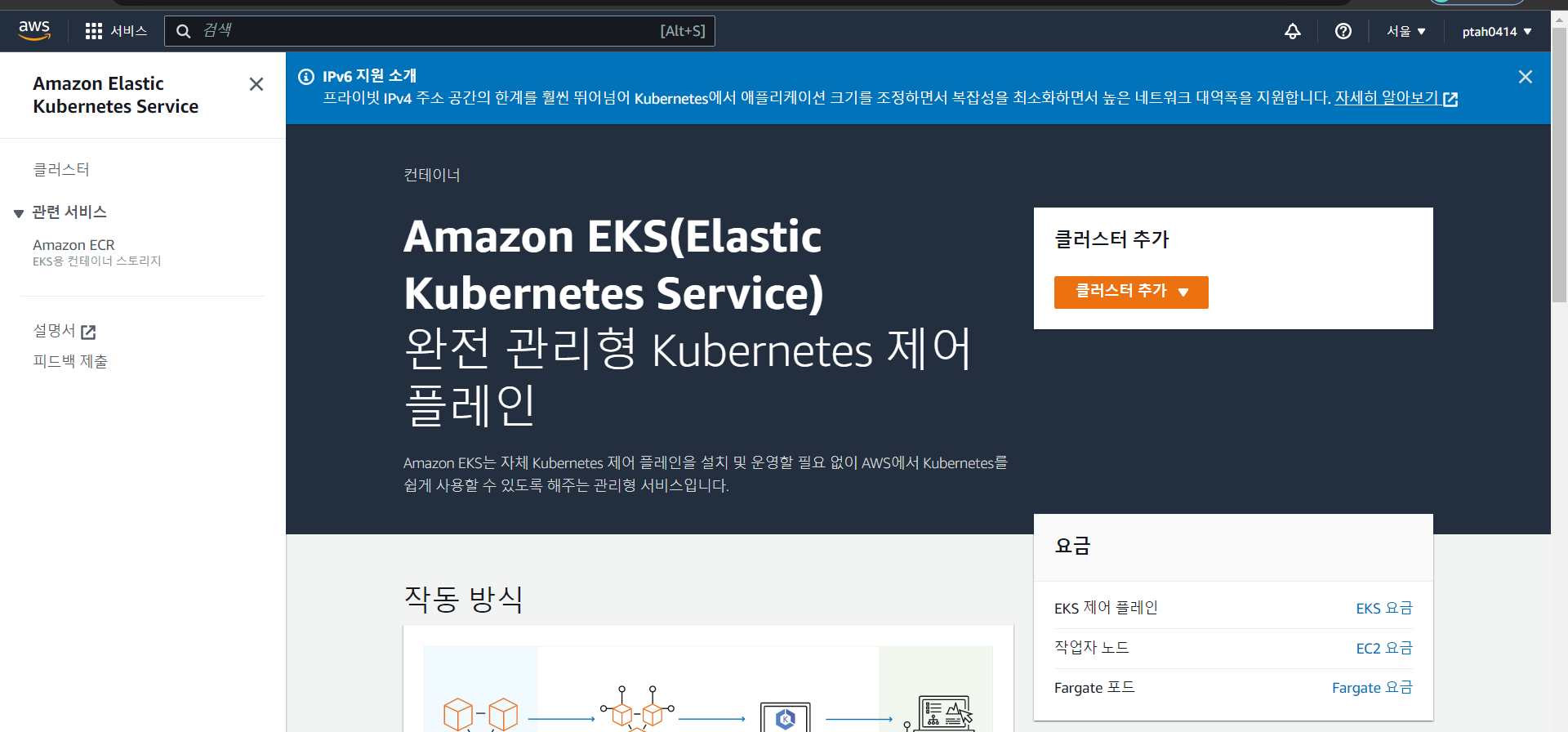

EKS -> region: Seoul (ap-northeast-2)

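Redundancy

- Network redundancy -> FHRP (First Hop Redundancy Protocol, gateway redundancy): VRRP, GLBP, HSRP

- Server redundancy -> teaming, EtherChannel, bonding. For easier management in cloud environments, separate network virtualization solutions such as VxLAN also exist. -> VMware NSX

- DB redundancy -> must guard against data loss. Replication, or distributed processing (build a cluster and split data processing across physically separate locations).

Redundancy raises availability without service downtime. Any downtime must stay within the time specified by the SLA.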
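To make the SLA point concrete, here is a minimal sketch of how an availability target translates into a downtime budget. The percentages and the 30-day period are illustrative assumptions, not values from any particular SLA.

```python
# Downtime budget for an availability target.
# The targets and the 30-day period below are illustrative assumptions.
MINUTES_PER_DAY = 24 * 60


def allowed_downtime_minutes(availability_pct: float, period_days: int = 30) -> float:
    """Return the downtime budget, in minutes, for one period."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability_pct must be between 0 and 100")
    unavailable_fraction = 1 - availability_pct / 100
    return period_days * MINUTES_PER_DAY * unavailable_fraction


for pct in (99.0, 99.9, 99.99):
    print(f"{pct}% -> {allowed_downtime_minutes(pct):.2f} min per 30 days")
```

Each extra "nine" cuts the budget by a factor of ten, which is why redundancy at every layer (network, server, DB) matters as the target rises.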

With CloudWatch (Container Insights) you can monitor containers stuck in the Pending state and apply autoscaling to increase the number of EC2 instances (workers).
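The scaling decision described above can be sketched as a small function. This is a simplified model under stated assumptions, not how CloudWatch or any AWS autoscaler actually computes capacity: `pods_per_node` is a hypothetical packing estimate, and the min/max bounds mirror the node group limits used later in these notes.

```python
import math


def desired_worker_count(current_nodes: int, pending_pods: int,
                         pods_per_node: int, min_nodes: int, max_nodes: int) -> int:
    """Estimate how many workers are needed to place the pending pods.

    pods_per_node is a hypothetical estimate of how many pods fit on one worker.
    The result is clamped to the [min_nodes, max_nodes] range.
    """
    if pods_per_node <= 0:
        raise ValueError("pods_per_node must be positive")
    extra_nodes = math.ceil(pending_pods / pods_per_node)
    return max(min_nodes, min(max_nodes, current_nodes + extra_nodes))


# Two workers, five pending pods, roughly four pods per worker, limits 2..4.
print(desired_worker_count(current_nodes=2, pending_pods=5,
                           pods_per_node=4, min_nodes=2, max_nodes=4))
```

The clamp is the important part: however many pods are pending, the result never exceeds the configured maximum.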

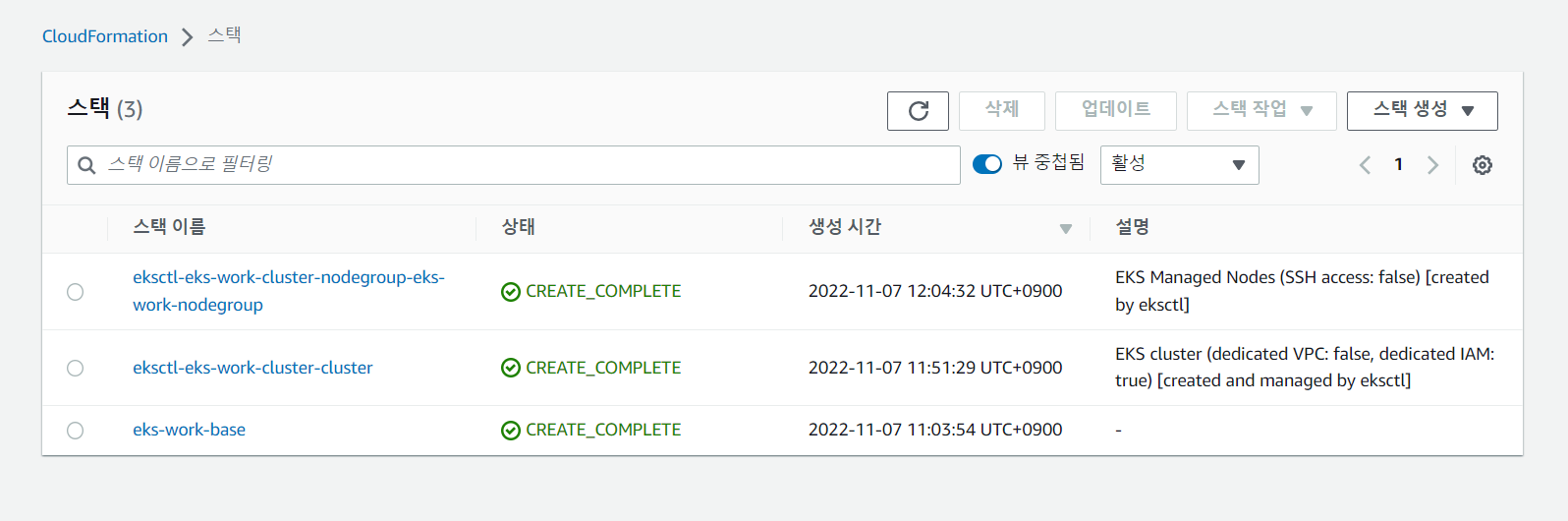

CloudFormation

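Infrastructure can be provisioned with code. (Similar tools: heat, vagrant, terraform)

IaC tool

Everything can be provisioned at once and deleted in bulk, and you can change only part of a template and redeploy it, so reuse is convenient.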

Stack

Docker swarm stack (a bundle of services, docker-compose + docker swarm) -> a service is a bundle of containers

ex) WordPress stack = WordPress service + database service

CloudFormation

- base_resource.yaml

AWSTemplateFormatVersion: '2010-09-09'

Parameters:

ClusterBaseName:

Type: String

Default: eks-work

TargetRegion:

Type: String

Default: ap-northeast-2

AvailabilityZone1:

Type: String

Default: ap-northeast-2a

AvailabilityZone2:

Type: String

Default: ap-northeast-2b

AvailabilityZone3:

Type: String

Default: ap-northeast-2c

VpcBlock:

Type: String

Default: 192.168.0.0/16

WorkerSubnet1Block:

Type: String

Default: 192.168.0.0/24

WorkerSubnet2Block:

Type: String

Default: 192.168.1.0/24

WorkerSubnet3Block:

Type: String

Default: 192.168.2.0/24

Resources:

EksWorkVPC:

Type: AWS::EC2::VPC

Properties:

CidrBlock: !Ref VpcBlock

EnableDnsSupport: true

EnableDnsHostnames: true

Tags:

- Key: Name

Value: !Sub ${ClusterBaseName}-VPC

WorkerSubnet1:

Type: AWS::EC2::Subnet

Properties:

AvailabilityZone: !Ref AvailabilityZone1

CidrBlock: !Ref WorkerSubnet1Block

VpcId: !Ref EksWorkVPC

MapPublicIpOnLaunch: true

Tags:

- Key: Name

Value: !Sub ${ClusterBaseName}-WorkerSubnet1

WorkerSubnet2:

Type: AWS::EC2::Subnet

Properties:

AvailabilityZone: !Ref AvailabilityZone2

CidrBlock: !Ref WorkerSubnet2Block

VpcId: !Ref EksWorkVPC

MapPublicIpOnLaunch: true

Tags:

- Key: Name

Value: !Sub ${ClusterBaseName}-WorkerSubnet2

WorkerSubnet3:

Type: AWS::EC2::Subnet

Properties:

AvailabilityZone: !Ref AvailabilityZone3

CidrBlock: !Ref WorkerSubnet3Block

VpcId: !Ref EksWorkVPC

MapPublicIpOnLaunch: true

Tags:

- Key: Name

Value: !Sub ${ClusterBaseName}-WorkerSubnet3

InternetGateway:

Type: AWS::EC2::InternetGateway

VPCGatewayAttachment:

Type: AWS::EC2::VPCGatewayAttachment

Properties:

InternetGatewayId: !Ref InternetGateway

VpcId: !Ref EksWorkVPC

WorkerSubnetRouteTable:

Type: AWS::EC2::RouteTable

Properties:

VpcId: !Ref EksWorkVPC

Tags:

- Key: Name

Value: !Sub ${ClusterBaseName}-WorkerSubnetRouteTable

WorkerSubnetRoute:

Type: AWS::EC2::Route

Properties:

RouteTableId: !Ref WorkerSubnetRouteTable

DestinationCidrBlock: 0.0.0.0/0

GatewayId: !Ref InternetGateway

WorkerSubnet1RouteTableAssociation:

Type: AWS::EC2::SubnetRouteTableAssociation

Properties:

SubnetId: !Ref WorkerSubnet1

RouteTableId: !Ref WorkerSubnetRouteTable

WorkerSubnet2RouteTableAssociation:

Type: AWS::EC2::SubnetRouteTableAssociation

Properties:

SubnetId: !Ref WorkerSubnet2

RouteTableId: !Ref WorkerSubnetRouteTable

WorkerSubnet3RouteTableAssociation:

Type: AWS::EC2::SubnetRouteTableAssociation

Properties:

SubnetId: !Ref WorkerSubnet3

RouteTableId: !Ref WorkerSubnetRouteTable

Outputs:

VPC:

Value: !Ref EksWorkVPC

WorkerSubnets:

Value: !Join

- ","

- [!Ref WorkerSubnet1, !Ref WorkerSubnet2, !Ref WorkerSubnet3]

RouteTable:

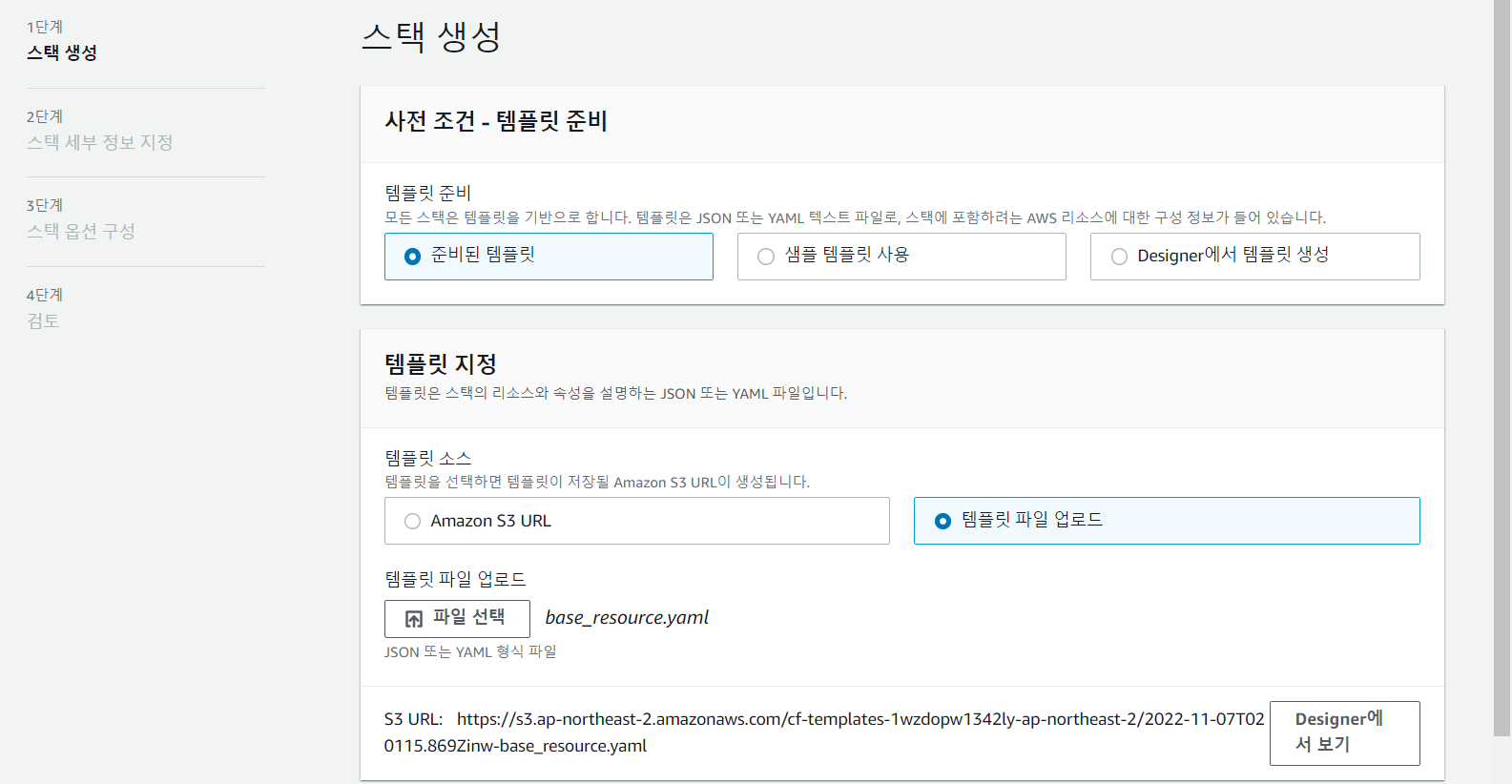

Value: !Ref WorkerSubnetRouteTable- 1단계. 스택 생성

Upload base_resource.yaml

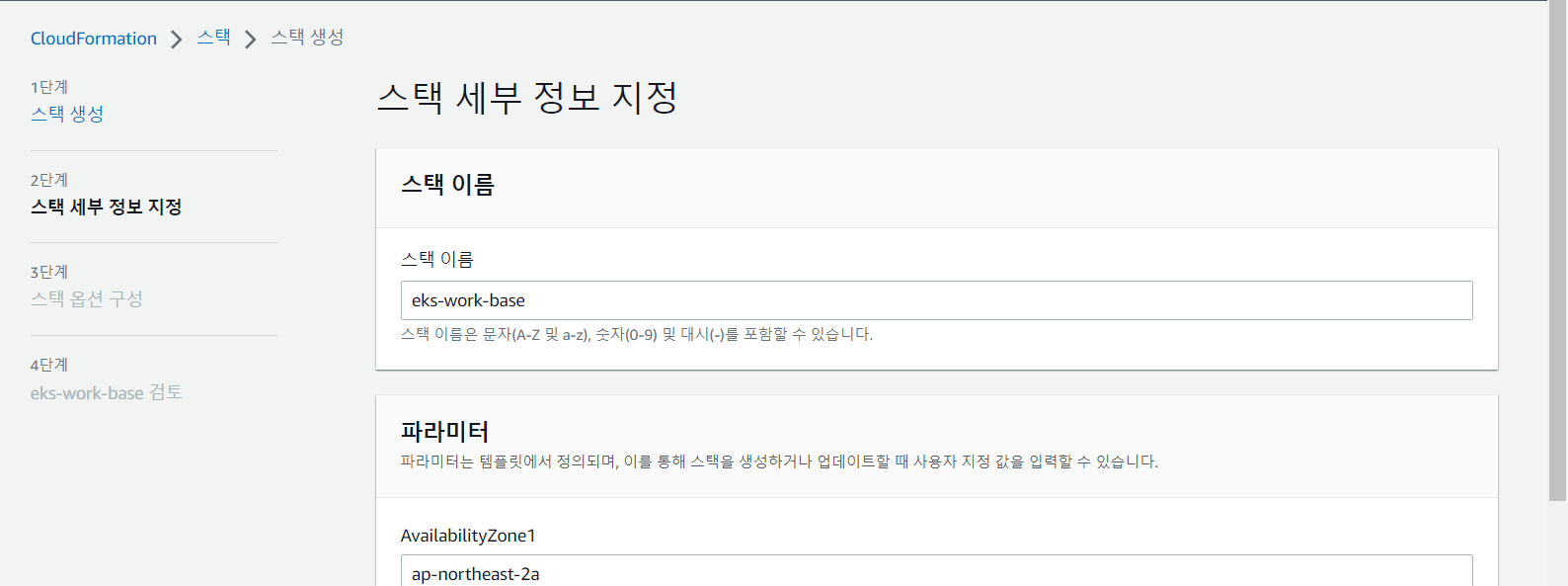

- Step 2. Specify stack details

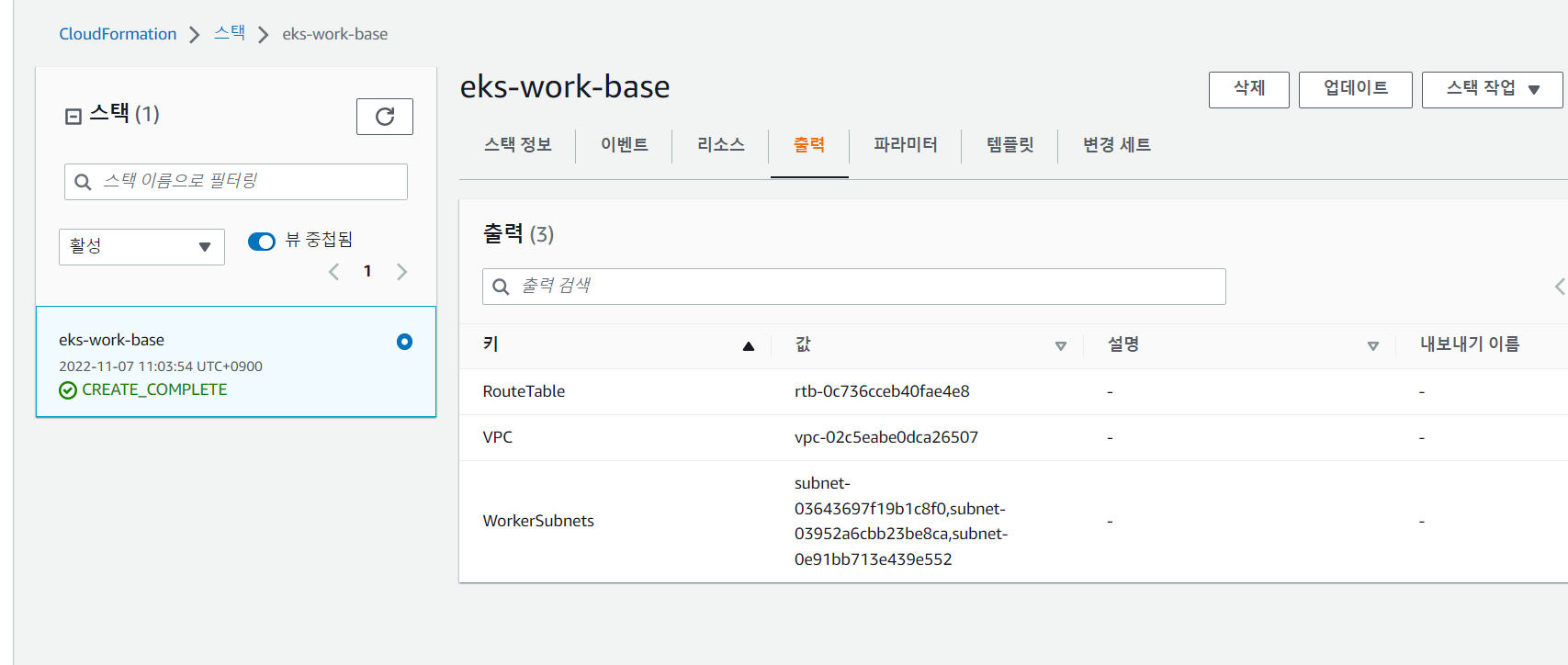

- Verify creation

Stack outputs:

RouteTable: rtb-0c736cceb40fae4e8

VPC: vpc-02c5eabe0dca26507

WorkerSubnets: subnet-03643697f19b1c8f0, subnet-03952a6cbb23be8ca, subnet-0e91bb713e439e552
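The CIDR defaults in base_resource.yaml can be sanity-checked offline. The sketch below uses Python's standard `ipaddress` module to confirm that each worker subnet block sits inside the VPC block and that no two subnets overlap. The helper name `check_subnets` is made up for illustration; the CIDR values are the template defaults.

```python
import ipaddress
from itertools import combinations

# Default values copied from the Parameters section of base_resource.yaml.
VPC_BLOCK = ipaddress.ip_network("192.168.0.0/16")
WORKER_SUBNETS = {
    "WorkerSubnet1Block": ipaddress.ip_network("192.168.0.0/24"),
    "WorkerSubnet2Block": ipaddress.ip_network("192.168.1.0/24"),
    "WorkerSubnet3Block": ipaddress.ip_network("192.168.2.0/24"),
}


def check_subnets(vpc, subnets):
    """Return a list of human-readable problems (empty if the layout is fine)."""
    problems = []
    # Every subnet must be contained in the VPC block.
    for name, net in subnets.items():
        if not net.subnet_of(vpc):
            problems.append(f"{name} {net} is outside VPC block {vpc}")
    # No two subnets may share addresses.
    for (name_a, net_a), (name_b, net_b) in combinations(subnets.items(), 2):
        if net_a.overlaps(net_b):
            problems.append(f"{name_a} {net_a} overlaps {name_b} {net_b}")
    return problems


print(check_subnets(VPC_BLOCK, WORKER_SUBNETS) or "CIDR layout looks consistent")
```

If a parameter is overridden in Step 2, rerunning the check with the new value catches a bad block before CloudFormation rejects the stack.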

EKS (Elastic Kubernetes Service)

- Starts, runs, and scales (Pods, Nodes) k8s clusters

- Control Plane: Master, Data Plane: Worker

- As a managed service, it lets you manage a k8s installation that was set up through provisioning.

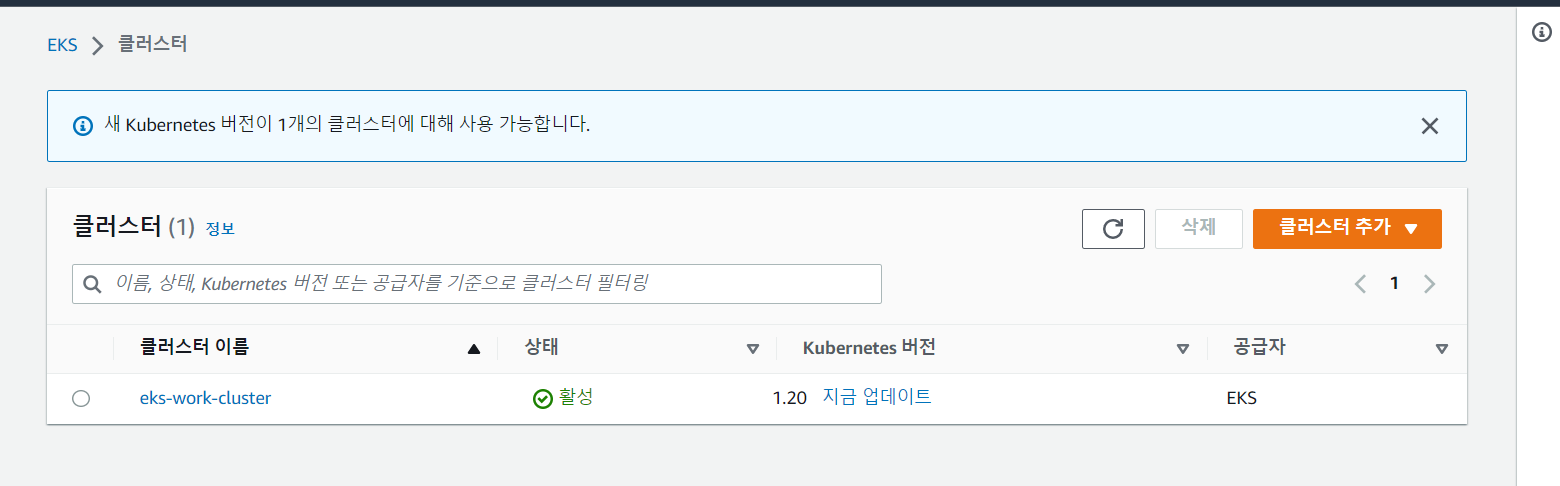

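EKS cluster setup

1) Build it directly in the web console

2) Provision it from the command line (eksctl can be used -> the request is converted to CloudFormation and deployed)

3) Its close integration with CloudFormation makes it flexible to set up the environment.

4) With Fargate, it can be used in a serverless form that frees you from managing nodes.

Because AWS holds control over the resources and the OS, you have to follow AWS's constraints.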

- aws configure

root@master:~# aws configure

Command 'aws' not found, but can be installed with:

snap install aws-cli # version 1.15.58, or

apt install awscli # version 1.18.69-1ubuntu0.20.04.1

See 'snap info aws-cli' for additional versions.

root@master:~# PATH=/root/.local/bin/:$PATH

root@master:~# aws configure

AWS Access Key ID [****************5YHK]: ****************5YHK

AWS Secret Access Key [****************RIwp]: ****************RIwp

Default region name [ap-northeast-1]: ap-northeast-2

Default output format [None]:

root@master:~#

- Install eksctl

root@master:~# curl --silent --location "https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C /tmp

root@master:~# sudo mv /tmp/eksctl /usr/local/bin/

root@master:~# eksctl version

0.117.0

- Install kubectl

root@master:~# snap install kubectl --classic

kubectl 1.25.3 from Canonical✓ installed

- Create the cluster

root@master:~#

root@master:~# eksctl create cluster \

> --vpc-public-subnets subnet-03643697f19b1c8f0,subnet-03952a6cbb23be8ca,subnet-0e91bb713e439e552 \

> --name eks-work-cluster \

> --region ap-northeast-2 \

> --nodegroup-name eks-work-nodegroup \

> --version 1.20 \

> --node-type t2.small \

> --nodes 2 \

> --nodes-min 2 \

> --nodes-max 4

2022-11-07 11:51:28 [ℹ] eksctl version 0.117.0

2022-11-07 11:51:28 [ℹ] using region ap-northeast-2

2022-11-07 11:51:28 [✔] using existing VPC (vpc-02c5eabe0dca26507) and subnets (private:map[] public:map[ap-northeast-2a:{subnet-03643697f19b1c8f0 ap-northeast-2a 192.168.0.0/24 0 } ap-northeast-2b:{subnet-03952a6cbb23be8ca ap-northeast-2b 192.168.1.0/24 0 } ap-northeast-2c:{subnet-0e91bb713e439e552 ap-northeast-2c 192.168.2.0/24 0 }])

2022-11-07 11:51:28 [!] custom VPC/subnets will be used; if resulting cluster doesn't function as expected, make sure to review the configuration of VPC/subnets

2022-11-07 11:51:28 [ℹ] nodegroup "eks-work-nodegroup" will use "" [AmazonLinux2/1.20]

2022-11-07 11:51:28 [ℹ] using Kubernetes version 1.20

2022-11-07 11:51:28 [ℹ] creating EKS cluster "eks-work-cluster" in "ap-northeast-2" region with managed nodes

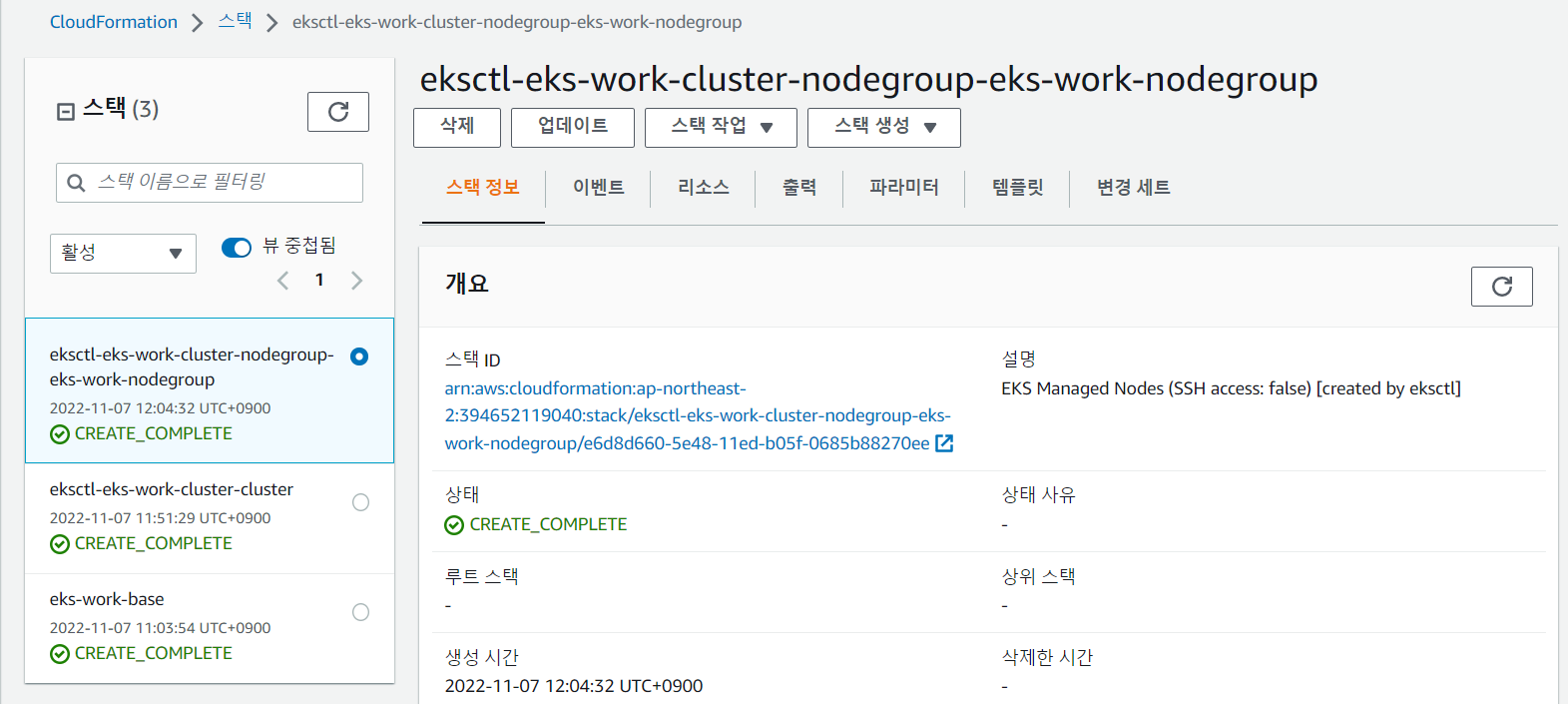

2022-11-07 11:51:28 [ℹ] will create 2 separate CloudFormation stacks for cluster itself and the initial managed nodegroup

2022-11-07 11:51:28 [ℹ] if you encounter any issues, check CloudFormation console or try 'eksctl utils describe-stacks --region=ap-northeast-2 --cluster=eks-work-cluster'

2022-11-07 11:51:28 [ℹ] Kubernetes API endpoint access will use default of {publicAccess=true, privateAccess=false} for cluster "eks-work-cluster" in "ap-northeast-2"

2022-11-07 11:51:28 [ℹ] CloudWatch logging will not be enabled for cluster "eks-work-cluster" in "ap-northeast-2"

2022-11-07 11:51:28 [ℹ] you can enable it with 'eksctl utils update-cluster-logging --enable-types={SPECIFY-YOUR-LOG-TYPES-HERE (e.g. all)} --region=ap-northeast-2 --cluster=eks-work-cluster'

2022-11-07 11:51:28 [ℹ]

2 sequential tasks: { create cluster control plane "eks-work-cluster",

2 sequential sub-tasks: {

wait for control plane to become ready,

create managed nodegroup "eks-work-nodegroup",

}

}

2022-11-07 11:51:28 [ℹ] building cluster stack "eksctl-eks-work-cluster-cluster"

2022-11-07 11:51:29 [ℹ] deploying stack "eksctl-eks-work-cluster-cluster"

2022-11-07 11:51:59 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-cluster"

2022-11-07 11:52:29 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-cluster"

2022-11-07 11:53:29 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-cluster"

2022-11-07 11:54:29 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-cluster"

2022-11-07 11:55:29 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-cluster"

2022-11-07 11:56:29 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-cluster"

2022-11-07 11:57:30 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-cluster"

2022-11-07 11:58:30 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-cluster"

2022-11-07 11:59:30 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-cluster"

2022-11-07 12:00:30 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-cluster"

2022-11-07 12:01:30 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-cluster"

2022-11-07 12:02:30 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-cluster"

2022-11-07 12:04:32 [ℹ] building managed nodegroup stack "eksctl-eks-work-cluster-nodegroup-eks-work-nodegroup"

2022-11-07 12:04:32 [ℹ] deploying stack "eksctl-eks-work-cluster-nodegroup-eks-work-nodegroup"

2022-11-07 12:04:32 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-nodegroup-eks-work-nodegroup"

2022-11-07 12:05:02 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-nodegroup-eks-work-nodegroup"

2022-11-07 12:05:54 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-nodegroup-eks-work-nodegroup"

2022-11-07 12:07:49 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-nodegroup-eks-work-nodegroup"

2022-11-07 12:09:35 [ℹ] waiting for CloudFormation stack "eksctl-eks-work-cluster-nodegroup-eks-work-nodegroup"

2022-11-07 12:09:35 [ℹ] waiting for the control plane to become ready

2022-11-07 12:09:36 [✔] saved kubeconfig as "/root/.kube/config"

2022-11-07 12:09:36 [ℹ] no tasks

2022-11-07 12:09:36 [✔] all EKS cluster resources for "eks-work-cluster" have been created

2022-11-07 12:09:36 [ℹ] nodegroup "eks-work-nodegroup" has 2 node(s)

2022-11-07 12:09:36 [ℹ] node "ip-192-168-0-166.ap-northeast-2.compute.internal" is ready

2022-11-07 12:09:36 [ℹ] node "ip-192-168-2-8.ap-northeast-2.compute.internal" is ready

2022-11-07 12:09:36 [ℹ] waiting for at least 2 node(s) to become ready in "eks-work-nodegroup"

2022-11-07 12:09:36 [ℹ] nodegroup "eks-work-nodegroup" has 2 node(s)

2022-11-07 12:09:36 [ℹ] node "ip-192-168-0-166.ap-northeast-2.compute.internal" is ready

2022-11-07 12:09:36 [ℹ] node "ip-192-168-2-8.ap-northeast-2.compute.internal" is ready

2022-11-07 12:09:38 [ℹ] kubectl command should work with "/root/.kube/config", try 'kubectl get nodes'

2022-11-07 12:09:38 [✔] EKS cluster "eks-work-cluster" in "ap-northeast-2" region is ready

- Verify the EKS cluster

root@master:~# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

ip-192-168-0-166.ap-northeast-2.compute.internal Ready <none> 5m59s v1.20.15-eks-ba74326 192.168.0.166 52.78.151.243 Amazon Linux 2 5.4.209-116.367.amzn2.x86_64 docker://20.10.17

ip-192-168-2-8.ap-northeast-2.compute.internal Ready <none> 5m55s v1.20.15-eks-ba74326 192.168.2.8 3.36.71.162 Amazon Linux 2 5.4.209-116.367.amzn2.x86_64 docker://20.10.17

- Verify the CloudFormation stacks

- nginx-pod.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx-ctn
          image: nginx
          ports:
            - containerPort: 80
              protocol: TCP

- Deploy nginx-pod

root@master:~# kubectl apply -f nginx-pod.yaml

- Check the pod

root@master:~# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

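nginx-pod 1/1 Running 0 17s 192.168.2.212 ip-192-168-2-8.ap-northeast-2.compute.internal <none> <none>

- Temporarily forward local port 8001 to the pod's port 80 with port forwarding (it binds to localhost, so the pod is reachable only from this machine)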

root@master:~# kubectl port-forward nginx-pod 8001:80

Forwarding from 127.0.0.1:8001 -> 80

Forwarding from [::1]:8001 -> 80

- Access localhost:8001

root@master:~# curl localhost:8001

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

nginx hosting confirmed

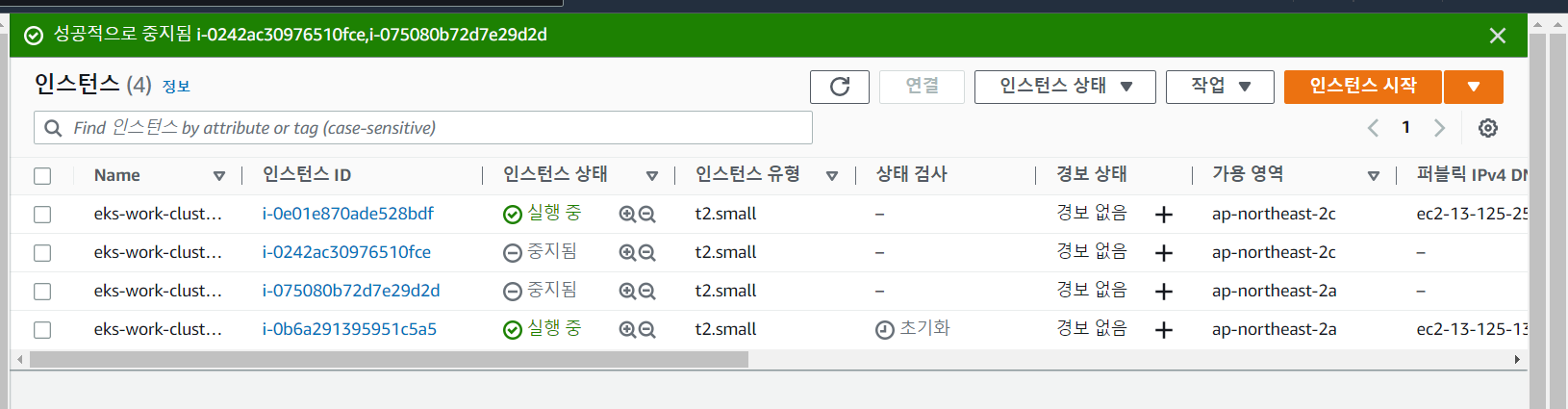

- Try stopping all 3 instances

Because the node group's minimum size was set to 2, two instances come back up.
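The recovery behaviour can be pictured with a tiny model. This is a simplified sketch, not the actual Auto Scaling algorithm: it only assumes that the group tries to keep the desired capacity, clamped between the minimum and maximum, and launches whatever is missing. The numbers mirror the `--nodes 2 --nodes-min 2 --nodes-max 4` flags used when the cluster was created.

```python
def replacements_needed(running: int, desired: int, min_size: int, max_size: int) -> int:
    """Return how many instances a group would launch to get back to its target.

    Simplified model: target = desired capacity clamped to [min_size, max_size].
    """
    target = max(min_size, min(max_size, desired))
    return max(0, target - running)


# All workers stopped, node group created with desired=2, min=2, max=4.
print(replacements_needed(running=0, desired=2, min_size=2, max_size=4))
```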

When deleting CloudFormation stacks

Delete them one at a time, starting from the top.
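One way to read "from the top" is newest stack first, assuming the console lists stacks by creation time in descending order. The sketch below sorts the stacks that way. The two eksctl stack names and their timestamps come from the log above; the base stack's name and creation time are hypothetical, since the notes do not record them.

```python
from datetime import datetime

# (stack name, creation time)
STACKS = [
    # Hypothetical name and time for the stack built from base_resource.yaml.
    ("eks-work-base", datetime(2022, 11, 7, 11, 0, 0)),
    ("eksctl-eks-work-cluster-cluster", datetime(2022, 11, 7, 11, 51, 29)),
    ("eksctl-eks-work-cluster-nodegroup-eks-work-nodegroup",
     datetime(2022, 11, 7, 12, 4, 32)),
]


def deletion_order(stacks):
    """Return stack names newest first, so dependents go before what they depend on."""
    return [name for name, _created in sorted(stacks, key=lambda s: s[1], reverse=True)]


for name in deletion_order(STACKS):
    print(name)
```

Deleting in this order removes the node group before the cluster, and the cluster before the VPC and subnets it runs in.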