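Setting up Kubernetes

- Log in as root

user1@manager:~$ su

Password:

root@manager:/home/user1#

- swapoff

- swapoff: turn off swap (virtual memory) on every node.

- By default the kubelet refuses to run on a node with swap enabled, so swap is kept off on all nodes, which also keeps the nodes consistent with each other.

- To keep swap off after every reboot, comment out the swap entry at the bottom of /etc/fstab (/swapfile).

- Trying to form a cluster while swap is still in use can fail with an error.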

root@manager:/home/user1# cat /etc/fstab

# /etc/fstab: static file system information.

#

# Use 'blkid' to print the universally unique identifier for a

# device; this may be used with UUID= as a more robust way to name devices

# that works even if disks are added and removed. See fstab(5).

#

# <file system> <mount point> <type> <options> <dump> <pass>

# / was on /dev/sda5 during installation

UUID=5535a33d-56e1-4b72-a7d6-dcb513bd1a4b / ext4 errors=remount-ro 0 1

# /boot/efi was on /dev/sda1 during installation

UUID=0889-C55E /boot/efi vfat umask=0077 0 1

#/swapfile none swap sw 0 0

-> The /swapfile entry must be commented out, as shown.
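The swap steps above could be scripted roughly as follows. This is a minimal sketch that works on a scratch copy instead of the real /etc/fstab; the sed expression and the scratch file are assumptions made for illustration and were not part of the original session.

```shell
# Build a scratch copy so the sketch is safe to run anywhere.
fstab_copy="$(mktemp)"
cat > "$fstab_copy" <<'EOF'
UUID=5535a33d-56e1-4b72-a7d6-dcb513bd1a4b / ext4 errors=remount-ro 0 1
/swapfile none swap sw 0 0
EOF

# Prefix '#' to every uncommented line whose third field is "swap".
result="$(sed -E 's|^([^#][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]])|#\1|' "$fstab_copy")"
printf '%s\n' "$result"

# On a real node, as root, the same idea would be:
#   swapoff -a                      # turn swap off now
#   then apply the sed expression above to /etc/fstab (after taking a backup)
```

Only the swap line is touched; the root filesystem entry is printed unchanged.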

step 1) Create the cluster

root@manager:/home/user1# kubeadm init --apiserver-advertise-address 211.183.3.100

I0905 09:37:30.443478 3328 version.go:254] remote version is much newer: v1.25.0; falling back to: stable-1.21

[init] Using Kubernetes version: v1.21.14

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

...

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 211.183.3.100:6443 --token txl691.qficfxf8ramv1wd2 \

--discovery-token-ca-cert-hash sha256:55985f2922219aa58dcc448638c2bf33231eead697c49faaf12951b8a741817e

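root@manager:/home/user1#

The commands printed above give the current user a copy of the file that holds the Kubernetes admin credentials (admin.conf). -> The current user is registered as the Kubernetes administrator.

After a reboot (for example tomorrow morning), kubectl commands against the cluster may fail, because an exported variable only lives as long as the shell session.

In that case, run export KUBECONFIG=/etc/kubernetes/admin.conf once more.

Alternatively, add export KUBECONFIG=/etc/kubernetes/admin.conf to .bashrc so that it is set automatically at every login.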
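Registering the variable in .bashrc can be done so that running it twice does not add the line twice. The sketch below is an assumption about how one might do it; it writes to a scratch file that stands in for ~/.bashrc.

```shell
# Scratch file standing in for ~/.bashrc (hypothetical; point rc_file at the real file on the node).
rc_file="$(mktemp)"
line='export KUBECONFIG=/etc/kubernetes/admin.conf'

# Append the export only when the exact line is not there yet.
add_once() {
  grep -qxF "$line" "$rc_file" || echo "$line" >> "$rc_file"
}

add_once
add_once   # second call is a no-op

cat "$rc_file"
```

The grep options -x (whole line) and -F (fixed string) keep the check from matching a similar but different line.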

- Admin configuration on the manager node

root@manager:/home/user1# mkdir -p $HOME/.kube

root@manager:/home/user1# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

root@manager:/home/user1# sudo chown $(id -u):$(id -g) $HOME/.kube/config

root@manager:/home/user1# export KUBECONFIG=/etc/kubernetes/admin.conf

- Join the worker nodes to the cluster

root@worker1:/home/user1# kubeadm join 211.183.3.100:6443 --token txl691.qficfxf8ramv1wd2 \

> --discovery-token-ca-cert-hash sha256:55985f2922219aa58dcc448638c2bf33231eead697c49faaf12951b8a741817e

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

- Check the nodes

root@manager:/home/user1# kubectl get node

NAME STATUS ROLES AGE VERSION

manager NotReady control-plane,master 10m v1.21.0

worker1 NotReady <none> 85s v1.21.0

worker2 NotReady <none> 80s v1.21.0

worker3 NotReady <none> 75s v1.21.0

step 2) Add the Calico add-on (sets up the overlay network between pods)

- docker login on the manager

root@manager:/home/user1# docker login

Login with your Docker ID to push and pull images from Docker Hub. If you don't have a Docker ID, head over to https://hub.docker.com to create one.

Username: ptah0414

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

- Add the Calico add-on

root@manager:/home/user1# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

- Check the pods

root@manager:/home/user1# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-867d8d6bd8-zjtvl 0/1 Pending 0 48s

calico-node-px2vp 0/1 Init:0/3 0 47s

calico-node-qmb6g 0/1 Init:0/3 0 48s

calico-node-r9p9m 0/1 Init:ImagePullBackOff 0 48s

calico-node-vd987 0/1 Init:0/3 0 47s

coredns-558bd4d5db-jqbrm 0/1 Pending 0 12m

coredns-558bd4d5db-v55cn 0/1 Pending 0 12m

etcd-manager 1/1 Running 0 13m

kube-apiserver-manager 1/1 Running 0 13m

kube-controller-manager-manager 1/1 Running 0 13m

kube-proxy-2ttkz 1/1 Running 0 3m46s

kube-proxy-f25xm 1/1 Running 0 3m41s

kube-proxy-t47dm 1/1 Running 0 12m

kube-proxy-x2z4k 1/1 Running 0 3m51s

kube-scheduler-manager 1/1 Running 0 13m

-> Installation is still in progress.

- Check kube-proxy

root@manager:/home/user1# kubectl get pod -n kube-system | grep proxy

kube-proxy-2ttkz 1/1 Running 0 21m

kube-proxy-f25xm 1/1 Running 0 21m

kube-proxy-t47dm 1/1 Running 0 31m

kube-proxy-x2z4k 1/1 Running 0 21m

-> There are four kube-proxy pods, one per node (manager, worker1, worker2, worker3).

- Check the scheduler

root@manager:/home/user1# kubectl get pod -n kube-system | grep scheduler

kube-scheduler-manager 1/1 Running 0 32m

-> There is a single scheduler, the one on the manager.

- Check CoreDNS

root@manager:/home/user1# kubectl get pod -n kube-system | grep coredns

coredns-558bd4d5db-jqbrm 1/1 Running 0 48m

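coredns-558bd4d5db-v55cn 1/1 Running 0 48m

Each pod created on a node gets its own independent address and a matching domain name. CoreDNS holds this information, so workloads can reach each other by name through it.

Both CoreDNS pods are on the worker2 node.

- Check Calico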

root@manager:/home/user1# kubectl get pod -n kube-system | grep calico

calico-kube-controllers-867d8d6bd8-zjtvl 1/1 Running 0 20m

calico-node-px2vp 1/1 Running 0 20m

calico-node-qmb6g 1/1 Running 0 20m

calico-node-r9p9m 1/1 Running 0 20m

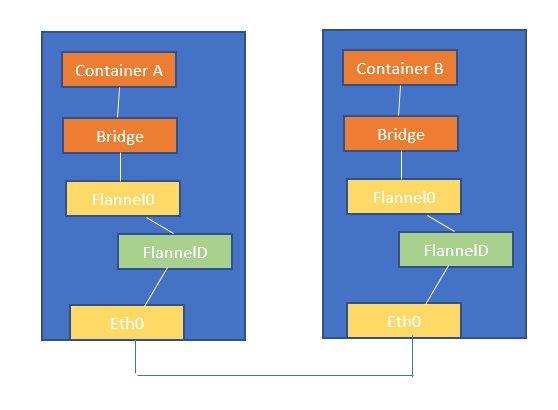

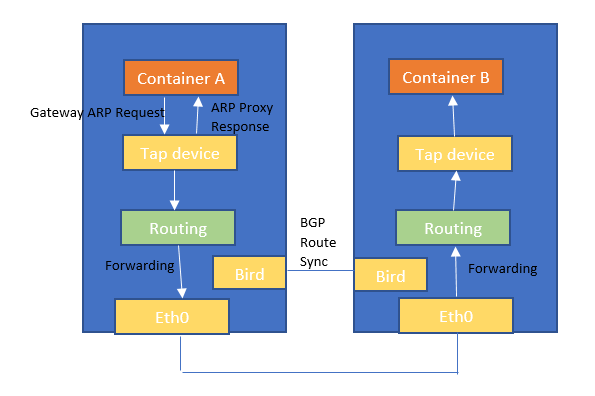

calico-node-vd987 1/1 Running 0 20mCNI(Container Network Interface)

CNI(Container Network Interface)는 컨테이너들과의 통신을 위해 개발된 인터페이스이며 표준화를 통해 어떠한 컨테이너든 상관 없이 연결이 가능하도록 해준다.

CNI의 종류는 크게 L2와 L3로 구분한다.

-

L2

- 각각의 노드에 나뉘어 배포된 모든 pod를 하나의 '가상 스위치'에 연결하여 통신시킬 수 있다.

- 각각의 노드에 나뉘어 배포된 모든 pod를 하나의 '가상 스위치'에 연결하여 통신시킬 수 있다.

-

L3

- 각 네트워크 간 통신을 가상의 라우터를 사용하여 라우팅한다. 또한 BGP를 제공하여 회사 내에서 보유하고 있는 독립된 공인 주소 대역을 ISP와의 통신에 활용할 수 있다.

- 각 네트워크 간 통신을 가상의 라우터를 사용하여 라우팅한다. 또한 BGP를 제공하여 회사 내에서 보유하고 있는 독립된 공인 주소 대역을 ISP와의 통신에 활용할 수 있다.

L2와 L3 중 어느 것을 사용하더라도 pod 간 통신에는 문제 없다.

이미지 출처: https://medium.com/@jain.sm/flannel-vs-calico-a-battle-of-l2-vs-l3-based-networking-5a30cd0a3ebd

manager에 있는 api, 스케줄러, 컨트롤러 등은 모드 pod 형태로 생성되어 서비스를 제공한다. 또한 CoreDNS, calico도 pod 형태로 서비스가 제공된다. 따라서 이미지 다운로드 후 pod 생성의 단계를 거쳐야 한다.

- master 노드의 모든 pod 준비 완료

root@manager:/home/user1# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-867d8d6bd8-zjtvl 1/1 Running 0 38m

calico-node-px2vp 1/1 Running 0 38m

calico-node-qmb6g 1/1 Running 0 38m

calico-node-r9p9m 1/1 Running 0 38m

calico-node-vd987 1/1 Running 0 38m

coredns-558bd4d5db-jqbrm 1/1 Running 0 50m

coredns-558bd4d5db-v55cn 1/1 Running 0 50m

etcd-manager 1/1 Running 0 50m

kube-apiserver-manager 1/1 Running 0 50m

kube-controller-manager-manager 1/1 Running 0 50m

kube-proxy-2ttkz 1/1 Running 0 41m

kube-proxy-f25xm 1/1 Running 0 41m

kube-proxy-t47dm 1/1 Running 0 50m

kube-proxy-x2z4k 1/1 Running 0 41m

kube-scheduler-manager 1/1 Running 0 50m

- A closer look at Calico

root@manager:/home/user1# wget https://docs.projectcalico.org/manifests/calico.yaml

--2022-09-05 10:36:42-- https://docs.projectcalico.org/manifests/calico.yaml

Resolving docs.projectcalico.org (docs.projectcalico.org)... 34.143.223.220, 52.220.193.16, 2406:da18:880:3802:371c:4bf1:923b:fc30, ...

Connecting to docs.projectcalico.org (docs.projectcalico.org)|34.143.223.220|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 234906 (229K) [text/yaml]

Saving to: ‘calico.yaml’

calico.yaml 100%[=====================================>] 229.40K 614KB/s in 0.4s

2022-09-05 10:36:43 (614 KB/s) - ‘calico.yaml’ saved [234906/234906]

root@manager:/home/user1# cat calico.yaml | grep image:

image: docker.io/calico/cni:v3.24.1

image: docker.io/calico/cni:v3.24.1

image: docker.io/calico/node:v3.24.1

image: docker.io/calico/node:v3.24.1

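image: docker.io/calico/kube-controllers:v3.24.1

-> The manifest is built from three images. They can be pulled ahead of time with docker pull:

docker pull docker.io/calico/cni:v3.24.1

docker pull docker.io/calico/node:v3.24.1

docker pull docker.io/calico/kube-controllers:v3.24.1

Setting up MetalLB

In a public cloud, a load balancer (LB) is readily available for connecting outside users to pods. On-premises there is no such LB service to call on. MetalLB solves this by providing LoadBalancer support inside the cluster.

step 1) Create the namespace (name: metallb-system)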

root@manager:/home/user1# kubectl create ns metallb-system

namespace/metallb-system created

- Check that the namespace was created (metallb-system)

root@manager:/home/user1# kubectl get ns

NAME STATUS AGE

default Active 61m

kube-node-lease Active 61m

kube-public Active 61m

kube-system Active 61m

metallb-system Active 37s

step 2) Deploy the manifest that sets up the controller and speaker

root@manager:/home/user1# kubectl apply -f \

> https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/metallb.yaml

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/controller created

podsecuritypolicy.policy/speaker created

serviceaccount/controller created

serviceaccount/speaker created

clusterrole.rbac.authorization.k8s.io/metallb-system:controller created

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created

role.rbac.authorization.k8s.io/config-watcher created

role.rbac.authorization.k8s.io/pod-lister created

role.rbac.authorization.k8s.io/controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created

rolebinding.rbac.authorization.k8s.io/config-watcher created

rolebinding.rbac.authorization.k8s.io/pod-lister created

rolebinding.rbac.authorization.k8s.io/controller created

daemonset.apps/speaker created

deployment.apps/controller created

step 3) Deploy the MetalLB ConfigMap (name: config)

root@manager:/home/user1# mkdir k8slab ; cd k8slab

root@manager:/home/user1/k8slab# vi metallb.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 211.183.3.201-211.183.3.211 # LB addresses are handed out from this range
      - 211.183.3.231-211.183.3.239 # LB addresses are handed out from this range

Range 1: 211.183.3.201 ~ .211

Range 2: 211.183.3.231 ~ .239
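The two ranges bound how many LoadBalancer services can receive an external IP at once. A small sketch of that arithmetic follows; the helper name range_size is made up for this example, and it assumes each range stays inside a single /24 as both ranges here do.

```shell
# Count the addresses in an inclusive range written as "a.b.c.X-a.b.c.Y".
# Assumes both ends share the first three octets.
range_size() {
  first="${1%-*}"   # text before the dash
  last="${1#*-}"    # text after the dash
  echo $(( ${last##*.} - ${first##*.} + 1 ))
}

pool1=$(range_size 211.183.3.201-211.183.3.211)
pool2=$(range_size 211.183.3.231-211.183.3.239)
echo "range 1: $pool1, range 2: $pool2, total: $((pool1 + pool2))"
# prints: range 1: 11, range 2: 9, total: 20
```

With this pool, the 21st LoadBalancer service would be left without an external IP until an address is freed.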

- Deploy the MetalLB ConfigMap (config)

root@manager:/home/user1/k8slab# kubectl apply -f metallb.yaml

configmap/config created

step 4) Deploy a LoadBalancer Service and an nginx Deployment

root@manager:/home/user1/k8slab# vi nginx-deploy-svc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec: # ReplicaSet settings below
  replicas: 3
  selector: # pods are managed by counting those that carry the labels below
    matchLabels:
      app: webserver
  template: # pod definition below
    metadata:
      name: my-webserver # pod name
      labels:
        app: webserver
    spec: # container definition below
      containers:
      - name: my-webserver # container name
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-lb
spec:
  ports:
  - name: web-port
    port: 80 # LB port
  selector:
    app: webserver
  type: LoadBalancer

- Deploy

root@manager:/home/user1/k8slab# kubectl apply -f nginx-deploy-svc.yaml

deployment.apps/nginx-deployment created

service/nginx-lb created

- Check the deployment

root@manager:/home/user1/k8slab# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-deployment-5fdcfffc56-5szpw 1/1 Running 0 67s

pod/nginx-deployment-5fdcfffc56-ctbhm 1/1 Running 0 67s

pod/nginx-deployment-5fdcfffc56-gqk7l 0/1 ContainerCreating 0 67s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 119m

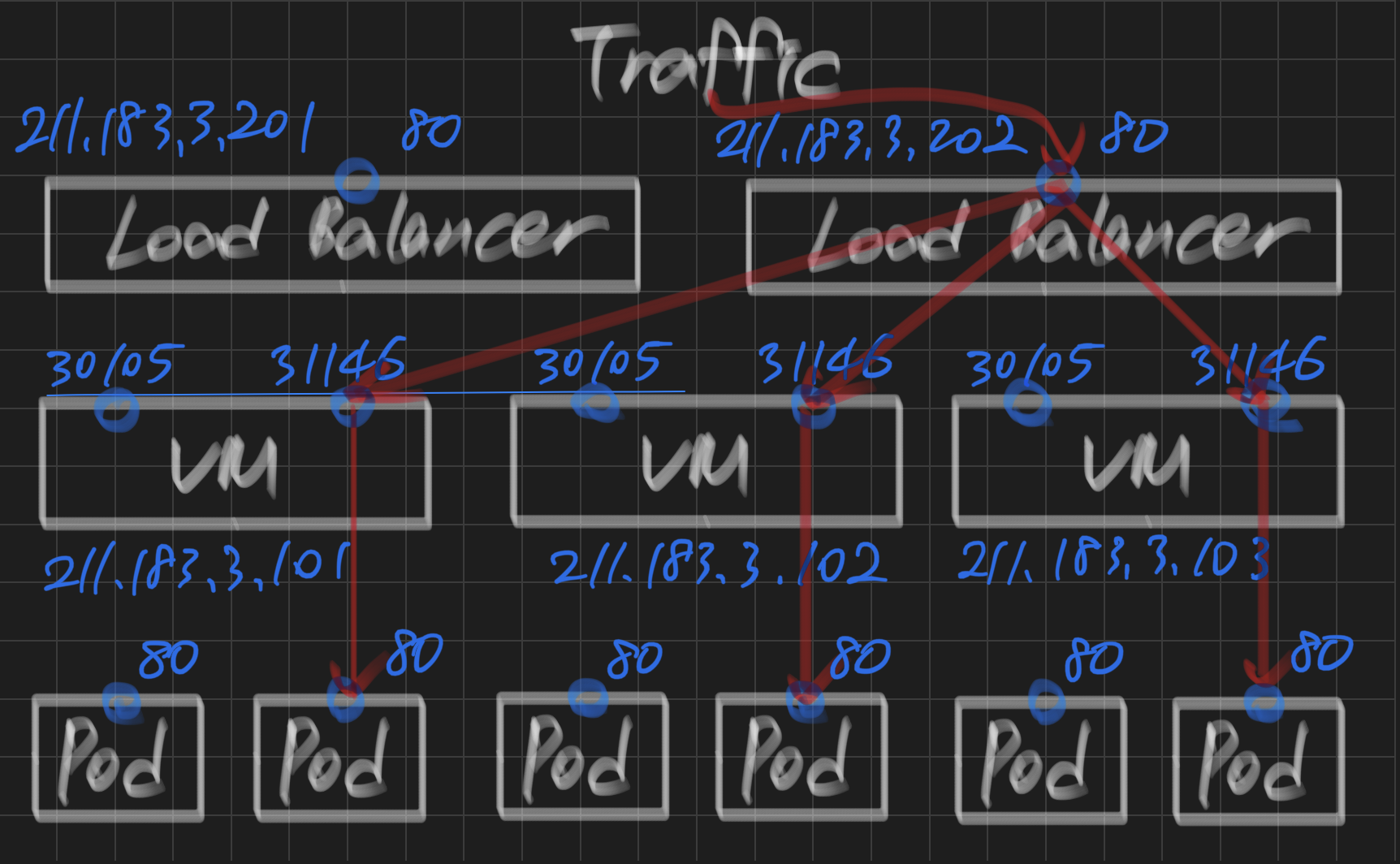

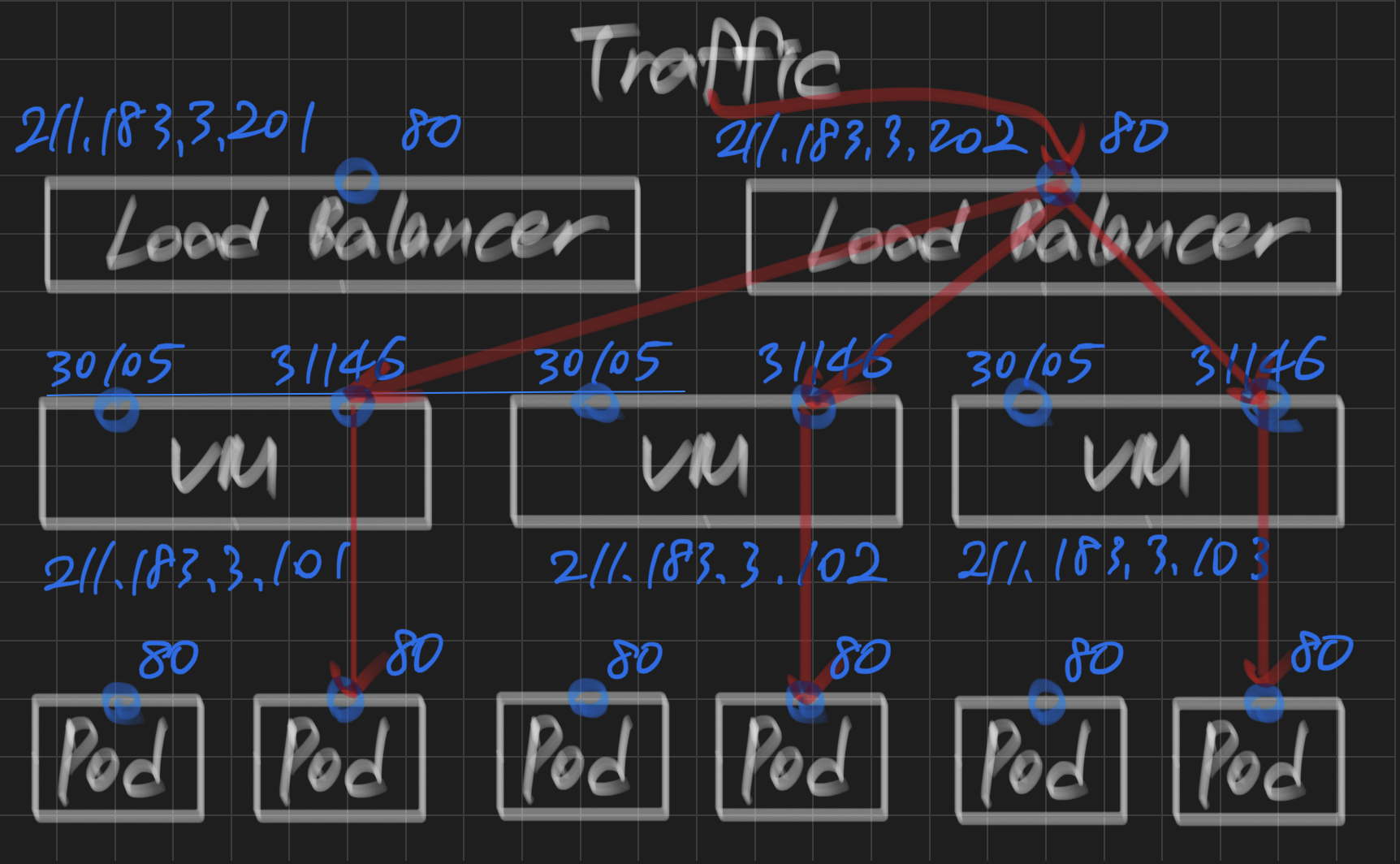

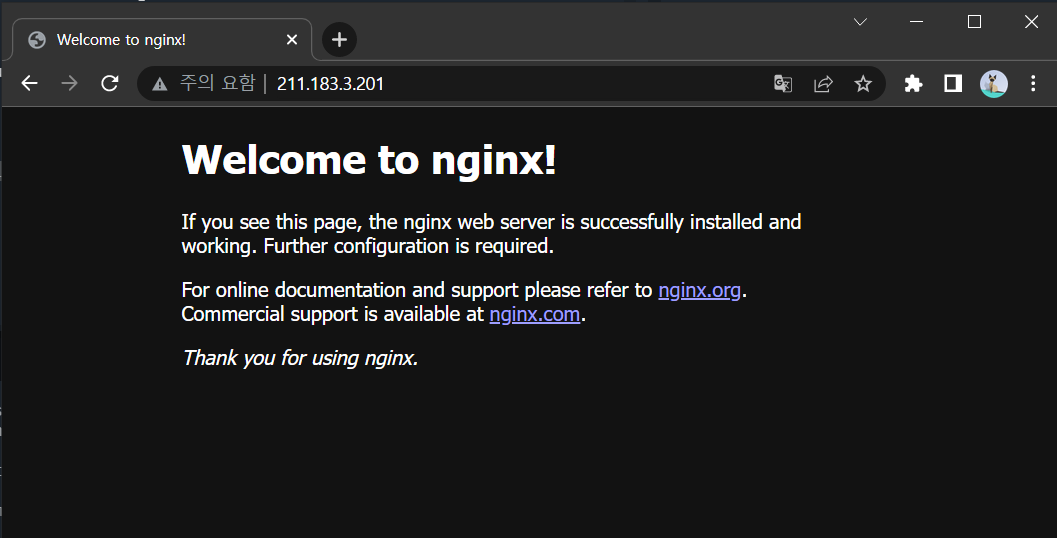

service/nginx-lb LoadBalancer 10.110.108.162 211.183.3.201 80:30105/TCP 67sLoadBalancer의 ip: 211.183.3.201

root@manager:/home/user1/k8slab# kubectl get pod -w

NAME READY STATUS RESTARTS AGE

nginx-deployment-5fdcfffc56-5szpw 1/1 Running 0 2m40s

nginx-deployment-5fdcfffc56-ctbhm 1/1 Running 0 2m40s

nginx-deployment-5fdcfffc56-gqk7l 1/1 Running 0 2m40s-> 생성이 완료되었다.

step 5) LoadBalancer 접속

- yaml 작성

root@manager:/home/user1/k8slab# cp nginx-deploy-svc.yaml nginx2-deploy-svc.yaml

root@manager:/home/user1/k8slab# vi nginx2-deploy-svc.yaml apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx2-deployment

spec: # 아래는 ReplicaSet 설정

replicas: 3

selector: # 아래의 label 개수를 확인하여 pod 관리

matchLabels:

app: webserver2

template: # 아래는 pod 구성

metadata:

name: my-webserver2 # Pod의 이름

labels:

app: webserver2

spec: # 아래 부분은 컨테이너 구성 내용

containers:

- name: my-webserver2 # 컨테이너의 이름

image: nginx

ports:

- containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

name: nginx2-lb

spec:

ports:

- name: web2-port

port: 80

selector:

app: webserver2

type: LoadBalancerstep 6) LoadBalancer와 Deployment 하나 더 배포

- 배포

root@manager:/home/user1/k8slab# k apply -f nginx2-deploy-svc.yaml

deployment.apps/nginx2-deployment created

service/nginx2-lb created

- Check the deployment

root@manager:/home/user1/k8slab# k get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 127m

nginx-lb LoadBalancer 10.110.108.162 211.183.3.201 80:30105/TCP 9m27s

nginx2-lb LoadBalancer 10.109.18.134 211.183.3.202 80:31146/TCP 15s새로 생성한 LoadBalancer의 ip: 211.183.3.202

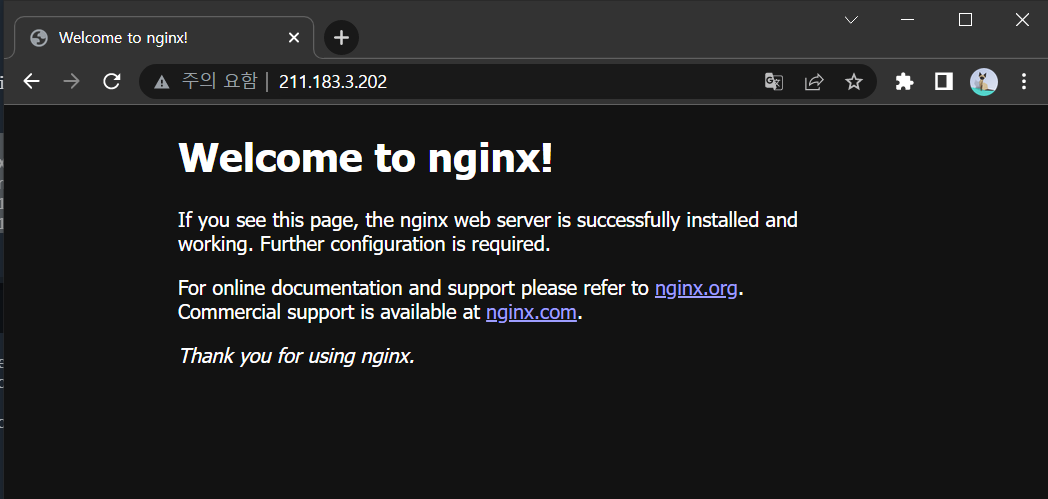

- 새로 생성한 LoadBalancer 접속

http://211.183.3.202

- 배포 삭제

root@manager:/home/user1/k8slab# k delete -f nginx2-deploy-svc.yaml

deployment.apps "nginx2-deployment" deleted

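service "nginx2-lb" deleted

Using Namespaces

- This means the namespace of Kubernetes objects, not the Linux namespaces used by containers.

- A namespace acts as a group that separates containers and their related resources by purpose.

- It is a virtual workspace that bundles pods, rs, deployments, svc, and other Kubernetes resources.

If our company managed pods for several customers, we would assign each customer its own namespace and provide the service through the pods, svc, and so on in that namespace.

kube-system is where the pods that run Kubernetes itself are managed.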

root@manager:/home/user1# k get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-867d8d6bd8-zjtvl 1/1 Running 0 3h47m

calico-node-px2vp 1/1 Running 0 3h47m

calico-node-qmb6g 1/1 Running 0 3h47m

calico-node-r9p9m 1/1 Running 0 3h47m

calico-node-vd987 1/1 Running 0 3h47m

coredns-558bd4d5db-jqbrm 1/1 Running 0 3h59m

coredns-558bd4d5db-v55cn 1/1 Running 0 3h59m

etcd-manager 1/1 Running 0 3h59m

kube-apiserver-manager 1/1 Running 0 3h59m

kube-controller-manager-manager 1/1 Running 0 3h59m

kube-proxy-2ttkz 1/1 Running 0 3h50m

kube-proxy-f25xm 1/1 Running 0 3h50m

kube-proxy-t47dm 1/1 Running 0 3h59m

kube-proxy-x2z4k 1/1 Running 0 3h50m

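kube-scheduler-manager 1/1 Running 0 3h59m

- Resources are looked up within their own namespace, so a resource in another namespace is not visible unless that namespace is named explicitly (for example with -n, or <service>.<namespace> for a Service). Pod network traffic between namespaces is not blocked by default; blocking it requires a NetworkPolicy.

  (This is similar to creating a separate project in GCP and placing VMs, VPCs, and so on inside it.)

- Some objects belong to a namespace and some do not. The NAMESPACED column of the following command shows which is which:

  kubectl api-resources

- If several people need to use one Kubernetes cluster at the same time, a separate namespace can be created for each user. (This is how an MSP manages its customers.)

- Resources in namespaces are separated logically, not isolated physically, so pods created in different namespaces can run on the same node.

- Namespace creation example

root@manager:/home/user1# kubectl create ns testns

When deploying from a manifest, the namespace has to be written in addition (under metadata).

- Check the deployment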

root@manager:/home/user1/k8slab# k get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deployment 3/3 3 3 135m

- Delete the existing deployment

root@manager:/home/user1/k8slab# k delete deploy nginx-deployment

deployment.apps "nginx-deployment" deleted

Deploy into a Namespace (name: rapa)

root@manager:/home/user1/k8slab# vi nginx-deploy-svc.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: rapa # namespace name
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: rapa # namespace name
spec: # ReplicaSet settings below
  replicas: 3
  selector: # pods are managed by counting those that carry the labels below
    matchLabels:
      app: webserver
  template: # pod definition below
    metadata:
      name: my-webserver # pod name
      labels:
        app: webserver
    spec: # container definition below
      containers:
      - name: my-webserver # container name
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-lb
  namespace: rapa # namespace name
spec:
  ports:
  - name: web-port
    port: 80
  selector:
    app: webserver
  type: LoadBalancer

The Namespace object (kind: Namespace, name: rapa) comes first, and the metadata of the Deployment and of the Service must each include the namespace as well.

- Deploy

root@manager:/home/user1/k8slab# k apply -f nginx-deploy-svc.yaml

namespace/rapa created

deployment.apps/nginx-deployment created

service/nginx-lb created

- Check the deployment in the rapa namespace

root@manager:/home/user1/k8slab# k get deploy,pod,svc -n rapa

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-deployment 3/3 3 3 28s

NAME READY STATUS RESTARTS AGE

pod/nginx-deployment-5fdcfffc56-8fk7x 1/1 Running 0 28s

pod/nginx-deployment-5fdcfffc56-mfmxn 1/1 Running 0 28s

pod/nginx-deployment-5fdcfffc56-t2g46 1/1 Running 0 28s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

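service/nginx-lb LoadBalancer 10.102.169.185 211.183.3.202 80:32710/TCP 28s

ConfigMap and Secret

- A Docker image is immutable once built, so settings baked into it cannot be changed flexibly. ConfigMaps and Secrets keep those settings outside the image.

- configmap: per-user settings (environment variables) and per-user files

- secret: ordinary username/password pairs, credentials for accessing a private registry

- Create a configmap (testmap)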

root@manager:/home/user1/k8slab# k create configmap testmap \

> --from-literal k8s=kubernetes \

> --from-literal container=docker

configmap/testmap created

- Check the configmap

root@manager:/home/user1/k8slab# k get cm

NAME DATA AGE

kube-root-ca.crt 1 4h32m

testmap 2 23s

- Look at the testmap configmap in detail

root@manager:/home/user1/k8slab# k describe cm testmap

Name: testmap

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

container:

----

docker

k8s:

----

kubernetes

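Events: <none>

- Using a configmap in a pod

  - The key:value data stored in a configmap can be used as key:value environment variables in the container, so a value can be checked from the shell with something like echo $k8s.

  - Mounting configmap values as files inside the pod

    When a cm is mounted at a path inside the pod, its contents can be read there with cat or a similar command.

    Each key becomes a file name, and the value is written inside that file.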
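The "key is the file name, value is the file content" layout can be pictured with a local stand-in. The sketch below does not talk to Kubernetes at all; it only recreates, in a scratch directory, the layout a ConfigMap volume would present, using sample key/value pairs chosen for illustration.

```shell
# Scratch directory standing in for the mountPath inside the container.
mount_dir="$(mktemp -d)"

# One file per key, holding the value with no trailing newline.
for pair in name=chulsoo age=25; do
  key="${pair%%=*}"     # text before the first '='
  value="${pair#*=}"    # text after the first '='
  printf '%s' "$value" > "$mount_dir/$key"
done

ls "$mount_dir"
cat "$mount_dir/age"
```

Because the value is stored without a trailing newline, cat output runs straight into the next shell prompt.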

Create the configmaps

root@manager:/home/user1/k8slab# k create cm cmtest1 \

> --from-literal name=gildong \

> --from-literal age=24

configmap/cmtest1 created

root@manager:/home/user1/k8slab#

root@manager:/home/user1/k8slab# k create cm cmtest2 \

> --from-literal name=chulsoo \

> --from-literal age=25

configmap/cmtest2 created

- Check that the configmaps were created

root@manager:/home/user1/k8slab# k get cm

NAME DATA AGE

cmtest1 2 56s

cmtest2 2 27s

kube-root-ca.crt 1 5h3m

testmap 2 31m

- Look at the configmap in detail

root@manager:/home/user1/k8slab# k describe cm cmtest1

Name: cmtest1

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

age:

----

24

name:

----

gildong

Events: <none>

Method 1) Deploy using configMapRef

- Write the yaml

root@manager:/home/user1/k8slab# vi cmtestpod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: cmtestpod
spec: # container settings
  containers:
  - name: cmtestpod-ctn
    image: busybox
    args: ['tail', '-f', '/dev/null'] # keeps following /dev/null so that the container does not exit
    envFrom:
    - configMapRef:
        name: cmtest1

- Deploy

root@manager:/home/user1/k8slab# k apply -f cmtestpod.yaml

pod/cmtestpod created

- Check the deployment

root@manager:/home/user1/k8slab# k get pod

NAME READY STATUS RESTARTS AGE

cmtestpod 0/1 ContainerCreating 0 19s

root@manager:/home/user1/k8slab#

- Check the environment variables of the deployed pod

root@manager:/home/user1/k8slab# k exec cmtestpod -- env

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

HOSTNAME=cmtestpod

age=24

name=gildong

KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443

KUBERNETES_PORT_443_TCP_PROTO=tcp

NGINX_LB_PORT_80_TCP_PORT=80

NGINX_LB_PORT_80_TCP_ADDR=10.110.108.162

KUBERNETES_SERVICE_PORT=443

KUBERNETES_SERVICE_PORT_HTTPS=443

NGINX_LB_PORT_80_TCP_PROTO=tcp

KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1

NGINX_LB_SERVICE_PORT_WEB_PORT=80

KUBERNETES_PORT=tcp://10.96.0.1:443

NGINX_LB_SERVICE_HOST=10.110.108.162

NGINX_LB_SERVICE_PORT=80

NGINX_LB_PORT=tcp://10.110.108.162:80

NGINX_LB_PORT_80_TCP=tcp://10.110.108.162:80

KUBERNETES_SERVICE_HOST=10.96.0.1

KUBERNETES_PORT_443_TCP_PORT=443

HOME=/root

-> The data from cmtest1, created as a configMap, is registered as environment variables (age, name).

Method 2) Use the configMap like a volume and mount it at a path in the pod

- Write the yaml

root@manager:/home/user1/k8slab# vi cmtestpodvol.yaml

apiVersion: v1
kind: Pod
metadata:
  name: cmtestpodvol
spec:
  containers:
  - name: cmtestpodvolctn
    image: busybox
    args: ['tail', '-f', '/dev/null']
    volumeMounts:
    - name: cmtestpod-volume # refers to the volume defined below
      mountPath: /etc/testcm # files named age and name will be created here
  volumes:
  - name: cmtestpod-volume
    configMap:
      name: cmtest2

- Deploy

root@manager:/home/user1/k8slab# k apply -f cmtestpodvol.yaml

pod/cmtestpodvol created

- Check the deployment

root@manager:/home/user1/k8slab# k get pod

NAME READY STATUS RESTARTS AGE

cmtestpod 1/1 Running 0 11m

cmtestpodvol 1/1 Running 0 31s

- Check the configMap files (age, name)

root@manager:/home/user1/k8slab# k exec cmtestpodvol -- ls /etc/testcm

age

name

- Check the contents of the configMap files

root@manager:/home/user1/k8slab# k exec cmtestpodvol -- cat /etc/testcm/age

25

25root@manager:/home/user1/k8slab# k exec cmtestpodvol -- cat /etc/testcm/name

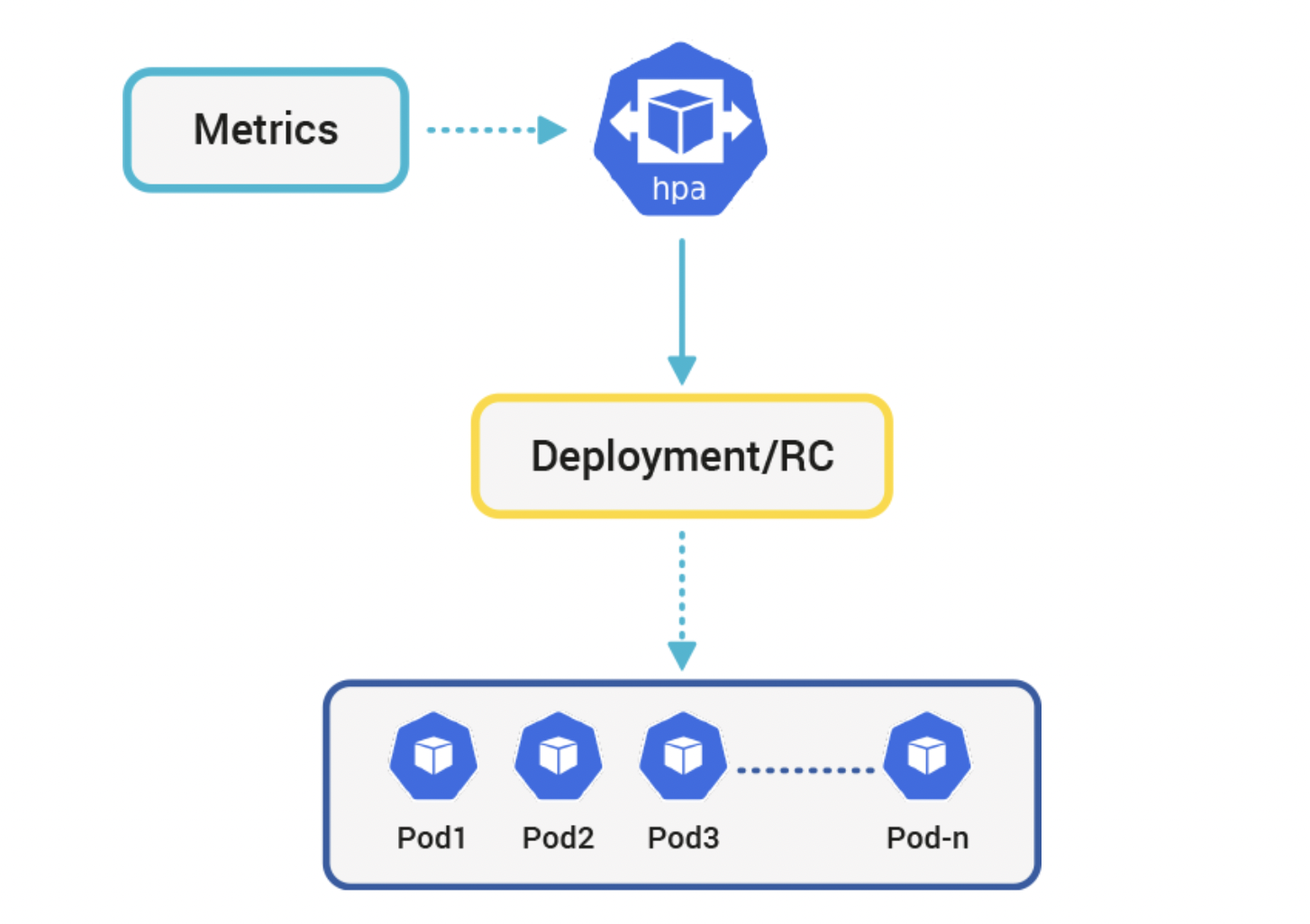

chulsoorootHPA(Horizontal Pod Autoscaling)

이미지 출처: https://www.kloia.com/blog/kubernetes-horizontal-pod-autoscaler

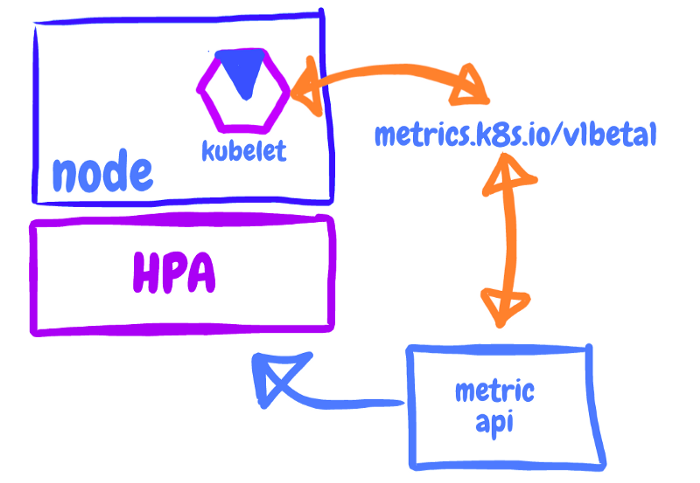

- 메트릭 서버: 각 pod의 자원 사용량 정보를 수집하기 위한 도구

이미지 출처: https://www.devopsschool.com/blog/what-is-metrics-server-and-how-to-install-metrics-server/

-

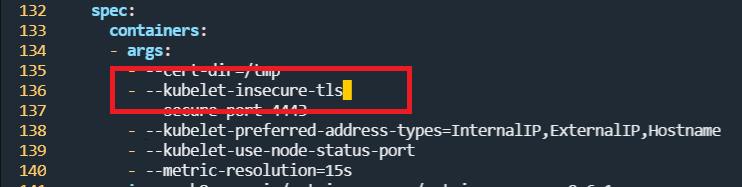

메트릭 서버를 인터넷으로부터 직접 설치하게 되면 공인 인증서를 통한 인증이 먼저 수행되어야 한다. 하지만 우리는 해당 인증서 발급을 하지 않은 상태이므로 이 단계를 bypass하더라도 문제 없도록 --kubelet-insecure-tls 를 작성해 둔다.

-

pod의 autoscale은 지정된 자원 사용량의 제한을 확인하고 이를 넘어서는 경우 수평적인 확장을 하게 된다. 따라서 pod가 어느 정도의 자원을 할당 받았는지 미리 지정해 두어야 한다.

-

- Note:

  1000m: 1 CPU

  500m: 0.5 CPU

  200m: 0.2 CPU

resources:
  limits:
    cpu: 500m
  requests:
    cpu: 200m

-> The guaranteed minimum is 0.2 CPU.

-> If other pods are not using the CPU and the physical resource has room to spare, the container may expand up to a maximum of 0.5 CPU.
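The millicore notation is just thousandths of a CPU. A short sketch of the conversion, with a helper name (millicores_to_cores) invented for this example:

```shell
# Convert a CPU quantity in millicores (for example "500m") to cores with three decimals.
millicores_to_cores() {
  m="${1%m}"                                    # drop the trailing "m"
  printf '%d.%03d\n' $(( m / 1000 )) $(( m % 1000 ))
}

request=200m
limit=500m
echo "request: $(millicores_to_cores "$request") cores"   # request: 0.200 cores
echo "limit: $(millicores_to_cores "$limit") cores"       # limit: 0.500 cores
```

The request is what the scheduler reserves for the container; the limit is the ceiling it may burst to.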

Quiz.

- Deploy an HPA

- Deploy a deployment + svc

  - deployment: replicas 3

  - svc: loadbalancer (http://211.183.3.203)

- Use an HPA so that when the pods' CPU usage exceeds 10%, the deployment scales out horizontally to at most 20 pods. Set min to 1.

  Quiz. In that case, how many replicas will there be? (3? 1?)

- Send traffic from outside.

  Use Apache Bench (ab) from outside to send traffic to http://211.183.3.203.

  How many pods does it grow to, and how many does it shrink to after ab stops?

step 1) Download the metrics-server manifest from GitHub

root@manager:/home/user1/k8slab# wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

--2022-09-05 15:51:47-- https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

Resolving github.com (github.com)... 20.200.245.247

Connecting to github.com (github.com)|20.200.245.247|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://github.com/kubernetes-sigs/metrics-server/releases/download/metrics-server-helm-chart-3.8.2/components.yaml [following]

--2022-09-05 15:51:48-- https://github.com/kubernetes-sigs/metrics-server/releases/download/metrics-server-helm-chart-3.8.2/components.yaml

Reusing existing connection to github.com:443.

HTTP request sent, awaiting response... 302 Found

Location: https://objects.githubusercontent.com/github-production-release-asset-2e65be/92132038/d85e100a-2404-4c5e-b6a9-f3814ad4e6e5?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20220905%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20220905T065148Z&X-Amz-Expires=300&X-Amz-Signature=f2be64e6059ec6ed0d4f8a265b92c8677c57a90bb482c6e6d415b3399b9e8bd8&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=92132038&response-content-disposition=attachment%3B%20filename%3Dcomponents.yaml&response-content-type=application%2Foctet-stream [following]

--2022-09-05 15:51:48-- https://objects.githubusercontent.com/github-production-release-asset-2e65be/92132038/d85e100a-2404-4c5e-b6a9-f3814ad4e6e5?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20220905%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20220905T065148Z&X-Amz-Expires=300&X-Amz-Signature=f2be64e6059ec6ed0d4f8a265b92c8677c57a90bb482c6e6d415b3399b9e8bd8&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=92132038&response-content-disposition=attachment%3B%20filename%3Dcomponents.yaml&response-content-type=application%2Foctet-stream

Resolving objects.githubusercontent.com (objects.githubusercontent.com)... 185.199.108.133, 185.199.109.133, 185.199.110.133, ...

Connecting to objects.githubusercontent.com (objects.githubusercontent.com)|185.199.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 4181 (4.1K) [application/octet-stream]

Saving to: ‘components.yaml’

components.yaml 100%[==================================================================================================================>] 4.08K --.-KB/s in 0s

2022-09-05 15:51:49 (37.2 MB/s) - ‘components.yaml’ saved [4181/4181]

- Edit the file (add --kubelet-insecure-tls to the arguments of the metrics-server container)

root@manager:/home/user1/k8slab# vi components.yaml

step 2) Deploy metrics-server

root@manager:/home/user1/k8slab# k apply -f components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

step 3) Deploy the nginx deployment and the loadbalancer service

root@manager:/home/user1/k8slab# vi autoscaletest.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: autoscaletest
spec:
  selector:
    matchLabels:
      color: black
  replicas: 3
  template: # pod definition
    metadata:
      labels:
        color: black
    spec: # container definition
      containers:
      - name: autoscaletest-nginx
        image: nginx
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 500m
          requests:
            cpu: 200m
---
apiVersion: v1
kind: Service
metadata:
  name: nginx3-lb
spec:
  ports:
  - name: web3-port
    port: 80
  selector:
    color: black # pod label
  type: LoadBalancer

The part that autoscaling relies on is the resources block:

resources:
  limits:
    cpu: 500m
  requests:
    cpu: 200m

- Deploy

root@manager:/home/user1/k8slab# k apply -f autoscaletest.yaml

deployment.apps/autoscaletest created

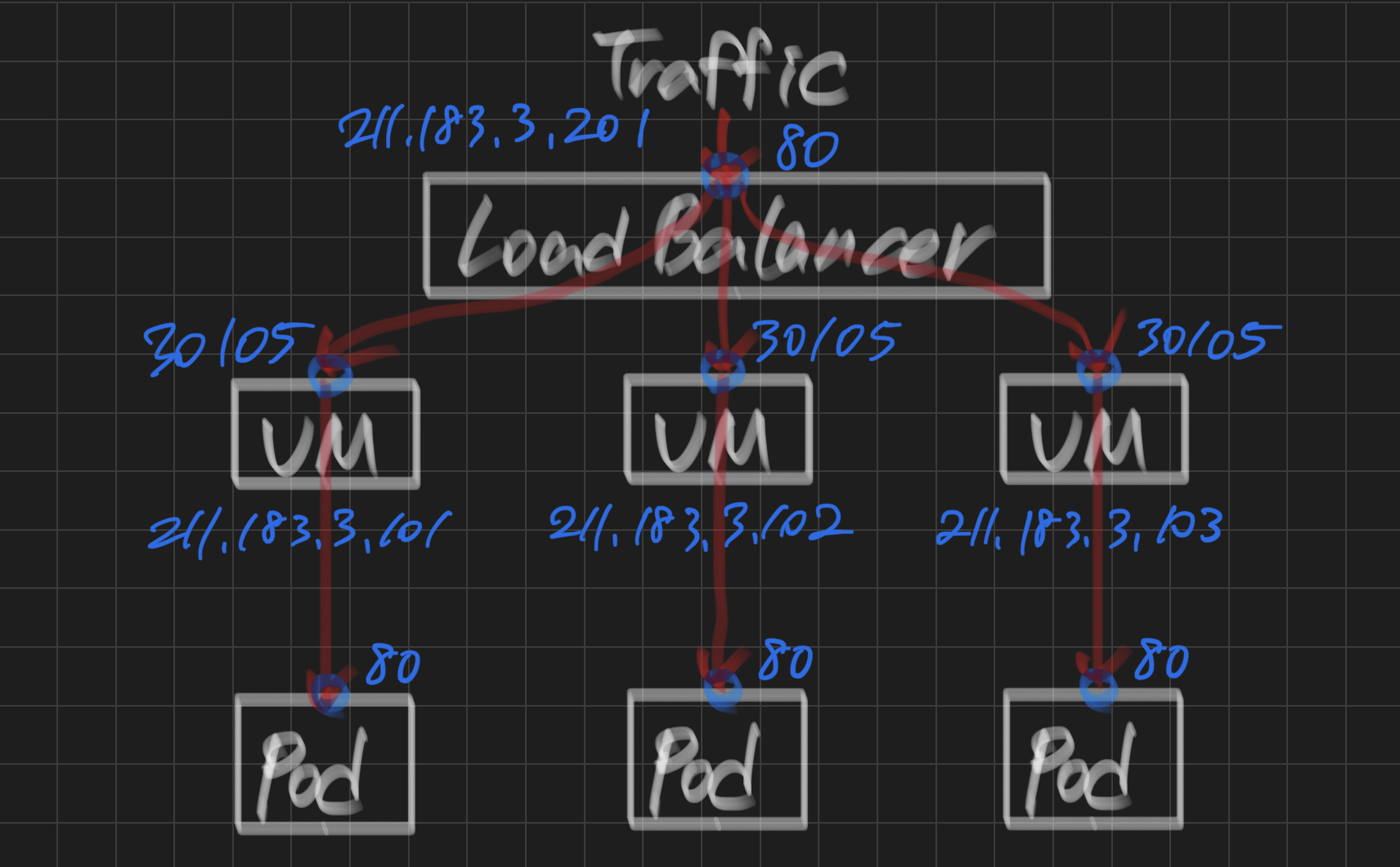

service/nginx3-lb created- 배포 확인 (nginx3-lb, 211.183.3.203:32370)

root@manager:/home/user1/k8slab# k get deploy,svc

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/autoscaletest 3/3 3 3 4m12s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 6h25m

service/nginx-lb LoadBalancer 10.110.108.162 211.183.3.201 80:30105/TCP 4h27m

service/nginx3-lb LoadBalancer 10.103.102.245 211.183.3.203 80:32370/TCP 4m12s

step 4) Configure the HPA

root@manager:/home/user1/k8slab# k autoscale deploy autoscaletest \

> --cpu-percent=10 \

> --min=1 \

> --max=20

horizontalpodautoscaler.autoscaling/autoscaletest autoscaled

- Check the deployment

root@manager:/home/user1/k8slab# k get deploy,svc,pod

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/autoscaletest 3/3 3 3 6m8s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 6h27m

service/nginx-lb LoadBalancer 10.110.108.162 211.183.3.201 80:30105/TCP 4h29m

service/nginx3-lb LoadBalancer 10.103.102.245 211.183.3.203 80:32370/TCP 6m8s

NAME READY STATUS RESTARTS AGE

pod/autoscaletest-7c7986bdcb-dm2m8 1/1 Running 0 6m8s

pod/autoscaletest-7c7986bdcb-fwsrl 1/1 Running 0 6m8s

pod/autoscaletest-7c7986bdcb-l5sz4 1/1 Running 0 6m8s

pod/cmtestpod 1/1 Running 0 77m

pod/cmtestpodvol 1/1 Running 0 66m

root@manager:/home/user1/k8slab# k get pod

NAME READY STATUS RESTARTS AGE

autoscaletest-7c7986bdcb-dm2m8 1/1 Running 0 7m36s

autoscaletest-7c7986bdcb-fwsrl 1/1 Running 0 7m36s

autoscaletest-7c7986bdcb-l5sz4 1/1 Running 0 7m36s

cmtestpod 1/1 Running 0 79m

cmtestpodvol 1/1 Running 0 68m

root@manager:/home/user1/k8slab# kubectl top no --use-protocol-buffers ; kubectl get hpa

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

manager 334m 8% 2096Mi 55%

worker1 189m 4% 968Mi 52%

worker2 204m 5% 1051Mi 57%

worker3 188m 4% 955Mi 51%

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

autoscaletest Deployment/autoscaletest 0%/10% 1 20 3 2m11s

-> Three replicas are running.

step 5) Generate load from outside with Apache Bench

[worker1]

root@worker1:/home/user1# ab -c 1000 -n 200 -t 60 http://211.183.3.203:80/

This is ApacheBench, Version 2.3 <$Revision: 1843412 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking 211.183.3.203 (be patient)

Completed 5000 requests

Completed 10000 requests

Completed 15000 requests

Completed 20000 requests

Completed 25000 requests

Completed 30000 requests

Completed 35000 requests

Completed 40000 requests

Completed 45000 requests

apr_pollset_poll: The timeout specified has expired (70007)

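Total of 49981 requests completed

-> ab's -t option implies -n 50000, and because -t 60 comes after -n 200 here, the request count of 200 is not what limits the run. That is why close to 50,000 requests were sent.

step 6) Check autoscaling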

root@manager:/home/user1/k8slab# kubectl top no --use-protocol-buffers ; kubectl get hpa -w

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

manager 376m 9% 2195Mi 57%

worker1 318m 7% 1030Mi 55%

worker2 258m 6% 1033Mi 56%

worker3 297m 7% 950Mi 51%

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

autoscaletest Deployment/autoscaletest 1%/10% 1 20 4 15m

autoscaletest Deployment/autoscaletest 0%/10% 1 20 8 15m

autoscaletest Deployment/autoscaletest 0%/10% 1 20 16 15m

autoscaletest Deployment/autoscaletest 0%/10% 1 20 17 15m

autoscaletest Deployment/autoscaletest 0%/10% 1 20 17 16m

autoscaletest Deployment/autoscaletest 0%/10% 1 20 17 21m

autoscaletest Deployment/autoscaletest 0%/10% 1 20 1 21m

Once the load arrived, the HPA scaled out and the number of pods grew to 17.

After some time without load, it scaled in and the number of pods dropped back to the min value of 1.
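The replica counts above follow from the rule given in the Kubernetes HPA documentation: desired = ceil(current replicas × current metric / target metric), kept within min and max. The sketch below applies only that rule; it leaves out the tolerance band, the scale-up rate limits, and the scale-down stabilization window, and the utilization readings passed to it are hypothetical, not values measured in this session.

```shell
# desired = ceil(current * usage_pct / target_pct), clamped to [min, max].
# Utilization is a percentage of the pod's CPU request.
desired_replicas() {
  current="$1"; usage_pct="$2"; target_pct="$3"; min="$4"; max="$5"
  desired=$(( (current * usage_pct + target_pct - 1) / target_pct ))   # integer ceiling
  [ "$desired" -lt "$min" ] && desired="$min"
  [ "$desired" -gt "$max" ] && desired="$max"
  echo "$desired"
}

# Target used in this lab: 10% of the 200m request.
echo "target per pod: $(( 200 * 10 / 100 ))m"    # target per pod: 20m

desired_replicas 3 50 10 1 20     # 3 pods averaging 50% -> 15
desired_replicas 17 0 10 1 20     # idle -> clamped to min, 1
```

This also answers the quiz: replicas starts at the Deployment's 3, and once the HPA takes over it moves between min (1) and max (20) according to load.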

Using NFS

manager: apt install -y nfs-server

worker: apt install -y nfs-common -> these are the clients

no_root_squash: a file written by root on an external node is treated on the local side (the nfs-server) as written by root, that is, owned by root.

- Set up nfs-server on 211.183.3.100.

- On the manager, create the /root/pvpvc/shared directory and set its permissions to 777.

- In /etc/exports, export /root/pvpvc/shared to the outside.

  It should be reachable only for use by pods. Which addresses have to be allowed?

  /root/pvpvc/shared [allowed addresses](rw,no_root_squash,sync)

- Enable and start nfs-server.

- Deploy one pod and mount the nfs-server's /root/pvpvc/shared at the pod's /mnt.

- Create a file in /root/pvpvc/shared on the manager and confirm with exec that it can be seen from the pod. It must be visible from every pod.

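step 1) Set up nfs-server on 211.183.3.100

- Install the nfs-server package

root@manager:~# apt install -y nfs-server

- Check that nfs-server is running

root@manager:~# systemctl status nfs-server | grep Active

Active: active (exited) since Mon 2022-09-05 17:30:08 KST; 1min 45s ago

step 2) Create the shared directory on the manager

root@manager:~# mkdir -p ~/pvpvc/shared

root@manager:~# chmod 777 pvpvc/shared/

step 3) Export the shared directory

(It should be reachable only for use by pods.)

root@manager:~# vi /etc/exports

/root/pvpvc/shared 211.183.3.*(rw,no_root_squash,sync)

Allowed IPs: 211.183.3.* (the node network, because the mount is made by the node rather than by the pod). exports(5) describes wildcards as intended for host names, so the CIDR form 211.183.3.0/24 is the more dependable way to express the same thing.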
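Since the node performs the NFS mount, the address the server sees is a node address, so the export has to cover the node network. The sketch below checks which addresses fall inside 211.183.3.0/24; the function names are made up for this example, and every address other than 211.183.3.100 is hypothetical.

```shell
# Turn a dotted IPv4 address into a single integer.
ip_to_int() {
  old_ifs="$IFS"; IFS=.
  set -- $1                      # split the address into its four octets
  IFS="$old_ifs"
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed when address $1 lies inside network $2 (written as a.b.c.d/bits).
in_cidr() {
  ip_int=$(ip_to_int "$1")
  net_int=$(ip_to_int "${2%/*}")
  bits="${2#*/}"
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( ip_int & mask )) -eq $(( net_int & mask )) ]
}

# 211.183.3.101 is a hypothetical worker address, 192.168.10.5 a hypothetical pod address.
for ip in 211.183.3.100 211.183.3.101 192.168.10.5; do
  if in_cidr "$ip" 211.183.3.0/24; then echo "$ip allowed"; else echo "$ip denied"; fi
done
```

A pod-network address is denied here, yet the pod can still use the volume because the request reaches the server from the node's address.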

step 4) Disable the firewall and restart nfs-server

root@manager:~# ufw disable

Firewall stopped and disabled on system startup

root@manager:~# systemctl restart nfs-server

step 5) Mount the nfs-server's shared directory at the pod's /mnt

root@manager:~/k8slab# vi nfs-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nfs-pod
spec:
  containers:
  - name: nfs-mount-container
    image: busybox
    args: [ "tail", "-f", "/dev/null" ]
    volumeMounts:
    - name: nfs-volume
      mountPath: /mnt # mounted at /mnt inside the pod's container
  volumes:
  - name: nfs-volume
    nfs: # mounts the NFS server's export into the pod's container
      path: /root/pvpvc/shared
      server: 211.183.3.100

- Deploy the pod

root@manager:~/k8slab# k apply -f nfs-pod.yaml

pod/nfs-pod created

step 6) Create a file in the shared directory on the manager

It must be visible from every pod.

- The pod does not connect to the NFS server directly; the node does. The NFS client package therefore has to be installed on worker1, 2, and 3.

[worker 1, 2, 3]

root@worker1:/home/user1# apt install -y nfs-common

- Create a file on the nfs-server (/root/pvpvc/shared)

root@manager:~/k8slab# touch /root/pvpvc/shared/test.txt

step 7) Check the mount from the pod

root@manager:~/k8slab# k exec nfs-pod -- ls /mnt

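test.txt

Miscellaneous

Writing yaml files

- Use the extension yaml or yml.

- Tabs are not accepted for indentation; use spaces.

- What about comments?

  JSON does not allow comments, but yaml provides them with #.

- To put two or more objects in one file, separate them with ---.

apiVersion: v1
---
apiVersion: apps/v1

- Lists are supported.

- Values in yaml are treated as strings unless they look like another type such as a number or a boolean.

'
abc
def
'

-> Result: abc def (line breaks inside a quoted value are folded into spaces rather than kept)

- What if multi-line content should be carried over exactly as written, like taking a photo of it?

  Use | (pipe). Each line then keeps the \n at its end.

  A blank line left under | is passed along as a blank line too.

config: |
  address-pools:
  - name: default
    protocol: layer2
    addresses:
    - 211.183.3.201-211.183.3.239

In addition, putting - right after | (that is, |-) removes the final line break, along with any blank lines at the end.

abc
def
ghi
(blank line)

⬇

abc
def
ghi

alias setup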

root@manager:/home/user1/k8slab# alias k='kubectl'

root@manager:/home/user1/k8slab# k get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-5fdcfffc56-5szpw 1/1 Running 0 3m45s

nginx-deployment-5fdcfffc56-ctbhm 1/1 Running 0 3m45s

nginx-deployment-5fdcfffc56-gqk7l 1/1 Running 0 3m45s

The class is moving way too fast T_T