mnist을 위한 모델 함수 생성

import keras

from keras import layers

def get_mnist_model():

inputs = keras.Input(shape=(28*28,))

features = layers.Dense(512, activation='relu')(inputs)

features = layers.Dropout(0.5)(features)

outputs = layers.Dense(10, activation='softmax')(features)

model = keras.Model(inputs,outputs)

return model 워크플로 커스터마이징

- 사용자 정의 측정 지표 전달

- fit()에 콜백(callback)을 전달: 특정 시점에 수행될 행동 예약

1. 사용자 정의 지표(metric) 만들기: keras.metrics.Metric

- metric: 모델의 성능을 측정하는 열쇠

- 일반적으로 사용되는 지표는 Keras에 포함

- compile에서 사용되는 metrics 모듈은 keras.metrics.Metric클래스를 상속한 클래스

- 일반적이지 않은 작업을 한다면 사용자 정의 지표를 만들 수 있어야 한다.

🔸 사용자 지표 함수 생성 코드: RMSE 계산

import tensorflow as tf

class RootMeanSquaredError(keras.metrics.Metric):

def __init__(self, name='rmse',**kwargs):

super().__init__(name=name, **kwargs)

self.mse_sum = self.add_weight(name='mse_sum', initializer='zeros')

self.total_samples = self.add_weight(name='total_sample', initializer='zeros', dtype='int32')

def update_state(self, y_true, y_pred, sample_weight=None):

y_true = tf.one_hot(y_true, depth=tf.shape(y_pred)[1])

mse=tf.reduce_sum(tf.square(y_true - y_pred))

self.mse_sum.assign_add(mse)

num_samples = tf.shape(y_pred)[0]

self.total_samples.assign_add(num_samples)

def result(self):

return tf.sqrt(self.mse_sum / tf.cast(self.total_samples, tf.float32))

def reset_state(self):

self.mse_sum.assign(0.)

self.total_samples.assign(0)__init__():add_weight: 상태변수정의

update_state():- y_true는 배치의 타깃, y_pred는 이에 해당하는 모델의 예측

- y_pred는 각 클래스에 대한 확률을 담고 있으므로 정수레이블인 y_true를 원핫 인코딩으로 변경한다.

result():tf.cast: total_samples를 float32타입으로 변형시켜줌

reset_state():- 객체를 다시 생성하지 않고 상태를 초기화 하는 방법 제시

- 객체를 다시 생성하지 않고 상태를 초기화 하는 방법 제시

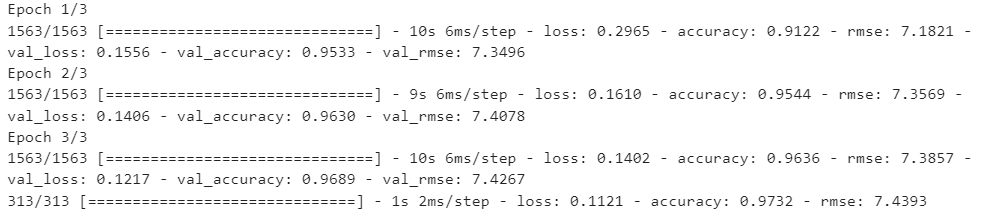

🔸 지표 테스트

from keras.datasets import mnist

(images, labels), (test_images, test_labels) = mnist.load_data()

images = images.reshape((60000, 28 * 28)).astype("float32") / 255

test_images = test_images.reshape((10000, 28 * 28)).astype("float32") / 255

train_images, val_images = images[10000:], images[:10000]

train_labels, val_labels = labels[10000:], labels[:10000]

model = get_mnist_model()

model.compile(optimizer="rmsprop",

loss="sparse_categorical_crossentropy",

metrics=["accuracy", RootMeanSquaredError()])

model.fit(train_images, train_labels,

epochs=3,

validation_data=(val_images, val_labels))

test_metrics = model.evaluate(test_images, test_labels)2. 콜백(callback) 사용하기

- fit()을 통해 여러번 에포크를 실행하는 것은 자원 낭비

- 콜백은 스스로 판단하고 동적으로 결정:

- 모델의 상태와 성능 정보에 접근하여 훈련중지, 모델 저장, 가중치 적재 등을 처리한다.

- 모델 체크포인트 저장: 모델의 현재 가중치 저장

- 조기종료: 검증손실이 향상되지 않을 때 훈련 중지

- 하이퍼파라미터 값 동적 조절: optimizer의 learning_rate 등

- 검증지표 로그에 기록 or 업데이트시 시각화: fit()메서드의 진행표시줄이 하나의 콜백

- 모델의 상태와 성능 정보에 접근하여 훈련중지, 모델 저장, 가중치 적재 등을 처리한다.

🔸 사용가능한 콜백: keras.callbacks.

ModelCheckpoint

BackupAndRestore

TensorBoard

EarlyStopping

LearningRateScheduler

ReduceLROnPlateau

RemoteMonitor

LambdaCallback

TerminateOnNaN

CSVLogger

ProgbarLogger

1) ModelCheckpoint, EarlyStopping

- EarlyStopping: 조기종료, 지표가 향상되지 않을 때 훈련 중지(과대적합이 시작되면 훈련 중지)

- ModelCheckpoint: 훈련하는 동안 모델을 계속 저장 (에포크 끝에서 최고의 성능을 낸 모델)

🔸 콜백리스트 작성

callbacks_list = [keras.callbacks.EarlyStopping(monitor="val_accuracy",

patience=2,),

keras.callbacks.ModelCheckpoint(filepath="checkpoint_path.keras",

monitor="val_loss",

save_best_only=True,)]- EarlyStopping

monitor="val_accuracy": 모델의 검증 정확도를 모니터링patience: n번의 에포크동안 정확도가 향상되지 않으면 훈련 중지

- ModelCheckpoint

filepath: 모델 파일의 저장 경로monitor="val_loss": 손실값이 좋아지지 않으면 모델파일을 덮어쓰지 않음.save_best_only=True훈련하는 동안 가장 좋은 모델 저장

🔸 fit()에 적용

(images, labels), (test_images, test_labels) = mnist.load_data()

images = images.reshape((60000, 28 * 28)).astype("float32") / 255

test_images = test_images.reshape((10000, 28 * 28)).astype("float32") / 255

train_images, val_images = images[10000:], images[:10000]

train_labels, val_labels = labels[10000:], labels[:10000]

model = get_mnist_model()

model.compile(optimizer="rmsprop",

loss="sparse_categorical_crossentropy",

metrics=["accuracy"])

model.fit(train_images, train_labels,

epochs=10,

callbacks=callbacks_list,

validation_data=(val_images, val_labels))- fit() 메서드의 callbacks 매개변수를 이용하여 콜백의 리스트를 모델로 전달

🔸 저장된 모델 호출

model = keras.models.load_model('checkpoint_path.keras')2) 사용자 정의 콜백 만들기: keras.callbacks.Callback

-

훈련도중 내장콜백에서 제공하지 않는 특정행동을 만들 수 있다.

-

keras.callbacks.Callback클래스를 상속

- on_epoch_begin(epoch, logs): 에포크가 시작할 때 호출

- on_epoch_end(epoch, logs): 에포크가 끝날 때 호출

- on_batch_begin(batch, logs): 배치처리가 시작하기 전

- on_batch_end(batch, logs): 배치처리가 끝난 후

- on_train_begin(logs): 훈련시작 시

- on_train_end(logs): 훈련 끝날 때

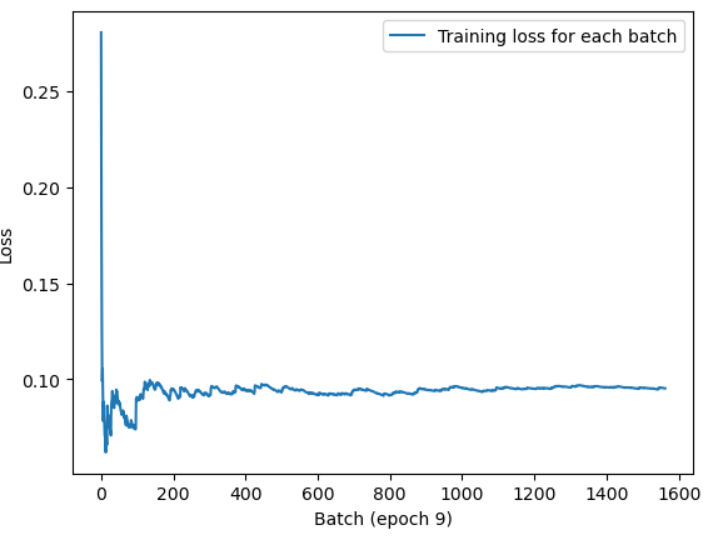

🔸 사용자 정의 콜백 함수 생성

from matplotlib import pyplot as plt

class LossHistory(keras.callbacks.Callback):

def on_train_begin(self, logs):

self.per_batch_losses = []

def on_batch_end(self, batch, logs):

self.per_batch_losses.append(logs.get("loss"))

def on_epoch_end(self, epoch, logs):

plt.clf()

plt.plot(range(len(self.per_batch_losses)), self.per_batch_losses,

label="Training loss for each batch")

plt.xlabel(f"Batch (epoch {epoch})")

plt.ylabel("Loss")

plt.legend()

plt.savefig(f"plot_at_epoch_{epoch}")

self.per_batch_losses = []설명:

훈련이 시작되면 손실값 리스트를 만들고,

각 배치처리가 끝날때 손실값을 리스트에 저장하고,

각 에포그가 끝날때 그래프를 그리기🔸 적용

(images, labels), (test_images, test_labels) = mnist.load_data()

images = images.reshape((60000, 28 * 28)).astype("float32") / 255

test_images = test_images.reshape((10000, 28 * 28)).astype("float32") / 255

train_images, val_images = images[10000:], images[:10000]

train_labels, val_labels = labels[10000:], labels[:10000]

model = get_mnist_model()

model.compile(optimizer="rmsprop",

loss="sparse_categorical_crossentropy",

metrics=["accuracy"])

model.fit(train_images, train_labels,

epochs=10,

callbacks=[LossHistory()],

validation_data=(val_images, val_labels))