LangChain Study #2

Template

Picking up where I left off!

```python
from langchain import LLMChain, OpenAI, PromptTemplate

OPENAI_API_KEY = "..."

# temperature=0 makes the output (nearly) deterministic
llm = OpenAI(temperature=0, openai_api_key=OPENAI_API_KEY)

template = """
I want you to act as a professional chef
What is a delicious meal from the country of {country}?
"""

prompt_template = PromptTemplate(
    input_variables=["country"],  # placeholder names used in the template
    template=template,
)

chain = LLMChain(llm=llm, prompt=prompt_template)

# With a single input variable, run() can take the value positionally
print(chain.run("Mexico"))
```

....

template = """

I want you to act as a professional chef

What is a delicious {type_of_dish} from the country of {country}?

"""

prompt_template = PromptTemplate(

input_variables=["country", "type_of_dish"],

template=template,

)

chain = LLMChain(llm=llm, prompt = prompt_template)

print(chain.run({

'country': "America",

'type_of_dish': "meat dish"

}))위와 같이 prompt가 있고, input_variables를 활용하면 하나의 템플릿에서 출발한 결과물들을 얻을 수 있음
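To see roughly what `PromptTemplate` does with `input_variables`, here is a minimal pure-Python sketch. It is not LangChain's implementation: it assumes plain `str.format`-style `{placeholders}` (LangChain's default template format is f-string style) and uses the standard library's `string.Formatter` to list the variable names. `input_variables` and `render` are hypothetical helper names.

```python
from __future__ import annotations

from string import Formatter

TEMPLATE = """
I want you to act as a professional chef
What is a delicious {type_of_dish} from the country of {country}?
"""


def input_variables(template: str) -> list[str]:
    """Return the placeholder names in a str.format-style template, in order of first appearance."""
    names: list[str] = []
    for _literal, field_name, _spec, _conversion in Formatter().parse(template):
        if field_name and field_name not in names:
            names.append(field_name)
    return names


def render(template: str, **values: str) -> str:
    """Fill the template, failing loudly if any variable is missing."""
    missing = set(input_variables(template)) - set(values)
    if missing:
        raise KeyError(f"missing input variables: {sorted(missing)}")
    return template.format(**values)


if __name__ == "__main__":
    print(input_variables(TEMPLATE))  # ['type_of_dish', 'country']
    # One template, many prompts
    for country, dish in [("America", "meat dish"), ("Mexico", "dessert")]:
        print(render(TEMPLATE, country=country, type_of_dish=dish).strip())
```

The point is that the template is written once and only the values change, which is exactly what the two `chain.run` calls above rely on.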

Memory

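- ConversationBufferWindowMemory: stores only the last K interactions. (I wondered what K meant: it's the `k` parameter, and it counts human/AI exchanges rather than individual messages.)

- ConversationSummaryMemory: stores only a summary of the conversation.

- VectorStore-Backed Memory: stores every message in a vector DB but can't track their exact order. In exchange, it can recall the pieces of information that are relevant to the current input.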
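To make K concrete, here is a minimal pure-Python sketch of the window idea. It is not LangChain's class: `WindowMemory` and `add_exchange` are hypothetical names. As in `ConversationBufferWindowMemory`, `k` here counts human/AI exchanges, so at most `2 * k` messages are kept, and the history is rendered with `Human:` / `AI:` prefixes.

```python
from __future__ import annotations

from collections import deque


class WindowMemory:
    """Hypothetical sketch of a windowed buffer: only the last k human/AI exchanges survive."""

    def __init__(self, k: int = 2, human_prefix: str = "Human", ai_prefix: str = "AI") -> None:
        self.human_prefix = human_prefix
        self.ai_prefix = ai_prefix
        # One entry per exchange; deque(maxlen=k) silently drops the oldest when full
        self.exchanges: deque[tuple[str, str]] = deque(maxlen=k)

    def add_exchange(self, human: str, ai: str) -> None:
        self.exchanges.append((human, ai))

    def history(self) -> str:
        # Render as "Human: ..." / "AI: ..." lines, i.e. the text that would go into the prompt
        lines: list[str] = []
        for human, ai in self.exchanges:
            lines.append(f"{self.human_prefix}: {human}")
            lines.append(f"{self.ai_prefix}: {ai}")
        return "\n".join(lines)


memory = WindowMemory(k=2)
memory.add_exchange("Hi there! I am Peter!", "Hi Peter! What can I do for you today?")
memory.add_exchange("What is my name?", "Your name is Peter.")
memory.add_exchange("I have a customer named Alice.", "Sure, what does Alice want?")
# k=2 keeps 2 exchanges (4 messages), so Peter's introduction has been dropped
print(memory.history())
```

The trade-off shows up right away: with a small `k` the prompt stays cheap, but the model "forgets" anything older than the window, like the user's name here.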

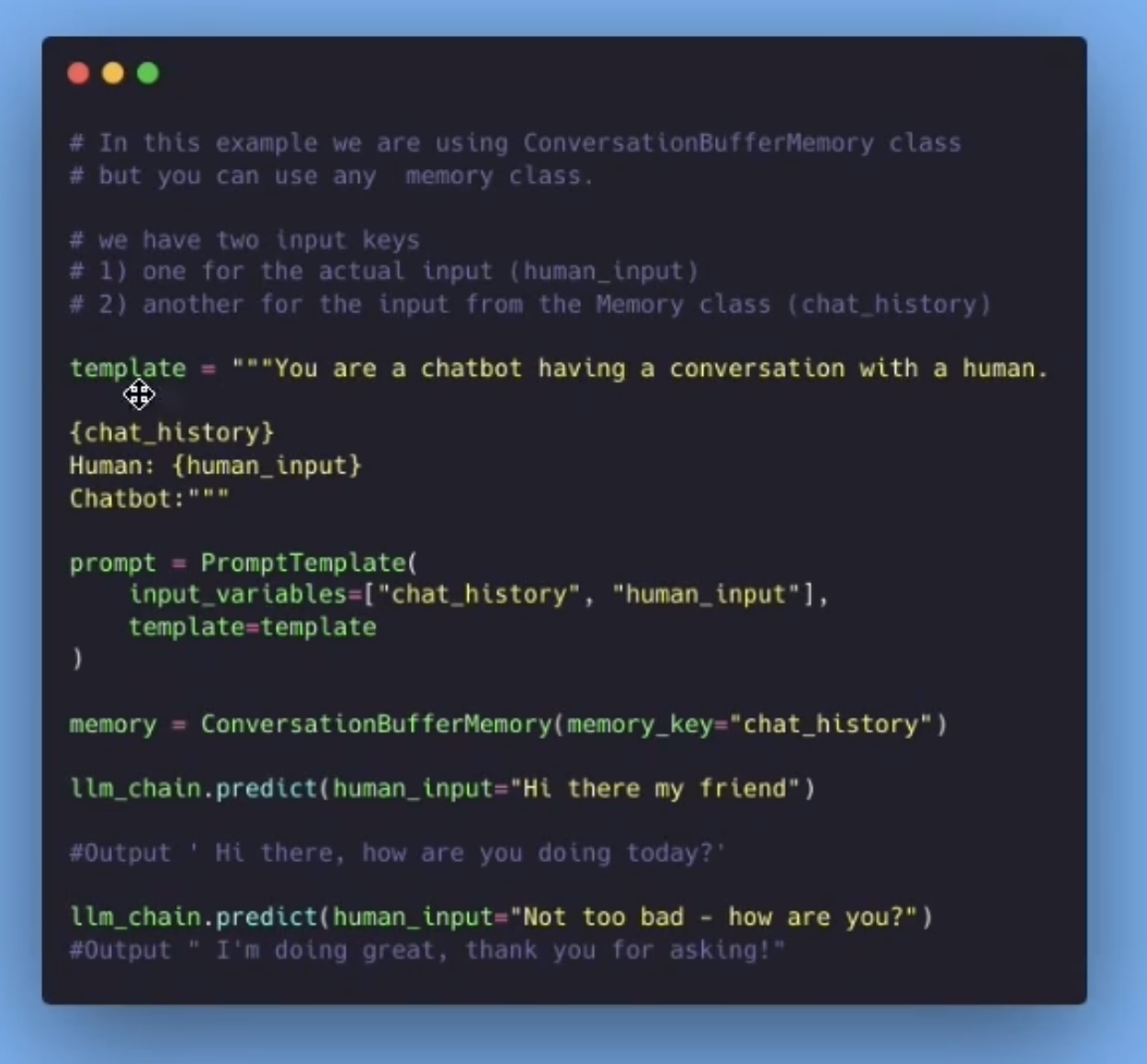

So how do you add memory to a chain?

1. Set up the prompt and the memory

2. Set up the LLMChain

3. Call the LLMChain!
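The three steps can be mimicked in plain Python to see where the memory plugs in. This is a hypothetical sketch, not LangChain's `LLMChain`: `SimpleMemory`, `SimpleChain`, and `fake_llm` are made-up names, and `fake_llm` stands in for a real model so it runs offline. The idea it illustrates is that the memory's text is substituted into a `{history}` variable in the prompt before each call, and the new exchange is saved afterwards.

```python
from __future__ import annotations

from typing import Callable

# Step 1: a prompt with a {history} slot, plus a memory object
TEMPLATE = """You are a helpful assistant.

{history}
Human: {input}
AI:"""


class SimpleMemory:
    """Hypothetical buffer memory: keeps every exchange as 'Human: ...' / 'AI: ...' lines."""

    def __init__(self) -> None:
        self.lines: list[str] = []

    def load(self) -> str:
        return "\n".join(self.lines)

    def save(self, human: str, ai: str) -> None:
        self.lines += [f"Human: {human}", f"AI: {ai}"]


# Step 2: a chain that ties together the prompt, the model, and the memory
class SimpleChain:
    def __init__(self, llm: Callable[[str], str], template: str, memory: SimpleMemory) -> None:
        self.llm = llm
        self.template = template
        self.memory = memory

    def run(self, user_input: str) -> str:
        prompt = self.template.format(history=self.memory.load(), input=user_input)
        answer = self.llm(prompt)
        self.memory.save(user_input, answer)  # remember this turn for next time
        return answer


def fake_llm(prompt: str) -> str:
    # Offline stand-in for a model: just reports how many human turns the prompt contains
    return f"(I can see {prompt.count('Human:')} human message(s) in my prompt)"


# Step 3: call the chain
chain = SimpleChain(fake_llm, TEMPLATE, SimpleMemory())
print(chain.run("Hi there! I am Peter!"))  # (I can see 1 human message(s) in my prompt)
print(chain.run("What is my name?"))       # (I can see 2 human message(s) in my prompt)
```

The second call "sees" the first turn only because the chain pasted the memory into the prompt, which is also why buffer memory gets more expensive with every turn.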

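You can also add memory to an Agent.

Agents normally don't have memory, but there is a way to give them one:

1. Create an LLMChain with memory

2. Use that LLMChain to build a custom Agent

I still can't figure out what an Agent actually is..

Let's look at an example.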

```python
# Notebook (Colab/Jupyter) cell: the "!" runs a shell command
!pip install -qU langchain openai tiktoken

from langchain import OpenAI, PromptTemplate
from langchain.chains import LLMChain, ConversationChain, LLMMathChain, TransformChain, SequentialChain, SimpleSequentialChain
from langchain.chains.conversation.memory import ConversationBufferMemory, ConversationSummaryBufferMemory

OPENAI_API_KEY = "..."

llm = OpenAI(temperature=0, openai_api_key=OPENAI_API_KEY)

# Buffer memory: stores the whole conversation verbatim
memory = ConversationBufferMemory()

conversation = ConversationChain(
    llm=llm,
    verbose=True,  # print each full prompt, including the stored history
    memory=memory,
)

conversation.predict(input="Hi there! I am Peter!")
conversation.predict(input="What is my name?")
conversation.predict(input="I have a customer named Alice that wants to buy one taco with a Diet Coke")

print(memory)  # inspect what the memory has stored so far
```

- The example above uses ConversationBufferMemory. Watch out, it can get expensive: the entire history is sent to the model on every call, so the token count (and the bill) grows as the conversation gets longer.

- Pass the BufferMemory you created into the ConversationChain.

- If you look inside, the memory looks like this:

```
chat_memory=ChatMessageHistory(messages=[HumanMessage(content='Hi there! I am Peter!', additional_kwargs={}, example=False), AIMessage(content=" Hi Peter! It's nice to meet you. My name is AI. What can I do for you today?", additional_kwargs={}, example=False), HumanMessage(content='What is my name?', additional_kwargs={}, example=False), AIMessage(content=' Your name is Peter. Is there anything else I can help you with?', additional_kwargs={}, example=False), HumanMessage(content='I have a customer named Alice that wants to buy one taco with a Diet Coke ', additional_kwargs={}, example=False), AIMessage(content=' Sure, I can help you with that. Do you know what type of taco Alice wants?', additional_kwargs={}, example=False)]) output_key=None input_key=None return_messages=False human_prefix='Human' ai_prefix='AI' memory_key='history'
```

- ConversationSummaryBufferMemory is nice because it saves money: it keeps only the recent messages verbatim and replaces the older ones with a summary.

With it, the prompt looks something like this (the earlier part of the conversation has already been condensed into the `System:` line):

```
Current conversation:
System:
The AI greets Peter and asks what it can do for him. Peter reveals he owns a restaurant, to which the AI responds positively and inquires what kind of restaurant it is.
Human: I have a customer that wants to buy 3 bowls of ramen
AI: Sure, I can help you with that. Do you need help with the preparation or the payment?
Human: In the ramen, make sure there are no veggies and its extra spicy
AI:
```

It then compresses a conversation like the one above into this:

```
The AI greets Peter and asks what it can do for him. Peter reveals he owns a restaurant, to which the AI responds positively and inquires what kind of restaurant it is. Peter then reveals he needs help with a customer wanting to buy 3 bowls of ramen, to which the AI responds positively and offers to help with either the preparation or the payment.
```
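Roughly how that compression works, as a pure-Python sketch (not LangChain's code): ConversationSummaryBufferMemory keeps recent messages verbatim and, once they exceed a token budget (`max_token_limit` in LangChain), pops the oldest ones and asks the LLM to fold them into the running summary. In this sketch `SummaryBufferMemory` is a hypothetical name, word count stands in for real token counting, and `naive_summarize` is a placeholder for the LLM summarization call that simply concatenates text so the example runs offline.

```python
from __future__ import annotations

from typing import Callable


def word_count(text: str) -> int:
    # Crude stand-in for a tokenizer (LangChain counts real model tokens)
    return len(text.split())


def naive_summarize(old_summary: str, pruned: list[str]) -> str:
    # Placeholder for an LLM call that rewrites the summary to include the pruned lines
    return " ".join(part for part in [old_summary, *pruned] if part)


class SummaryBufferMemory:
    """Hypothetical sketch: recent lines kept verbatim, older lines folded into a summary."""

    def __init__(
        self,
        max_tokens: int,
        summarize: Callable[[str, list[str]], str] = naive_summarize,
        count: Callable[[str], int] = word_count,
    ) -> None:
        self.max_tokens = max_tokens
        self.summarize = summarize
        self.count = count
        self.summary = ""
        self.buffer: list[str] = []

    def save(self, human: str, ai: str) -> None:
        self.buffer += [f"Human: {human}", f"AI: {ai}"]
        self._prune()

    def _prune(self) -> None:
        pruned: list[str] = []
        # Pop the oldest lines until the verbatim buffer fits the budget
        while self.buffer and sum(self.count(line) for line in self.buffer) > self.max_tokens:
            pruned.append(self.buffer.pop(0))
        if pruned:
            self.summary = self.summarize(self.summary, pruned)

    def history(self) -> str:
        # Summary first (as a "System:" line), then the recent messages verbatim
        lines = [f"System: {self.summary}"] if self.summary else []
        return "\n".join(lines + self.buffer)


memory = SummaryBufferMemory(max_tokens=12)
memory.save("Hi, I'm Peter and I own a restaurant.", "Nice! What kind of restaurant?")
memory.save("I have a customer that wants 3 bowls of ramen.", "Sure, I can help with that.")
print(memory.history())
```

With `max_tokens=12`, the first exchange (15 words) is already over budget, so its oldest line moves into the summary; after the second exchange only the last AI line is still verbatim. A real LLM summarizer would rewrite the summary into something short like the example above instead of just appending text.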

Watching this, it struck me that real human memory probably goes through a similar process of abstraction as it ages.. which honestly gave me chills.