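1. Overview of Naver movie review sentiment analysis

- Build a sentiment analysis API using ML/DL models trained on Naver movie review data

- Serve two models, one trained with naive Bayes and one with deep learning; a label of 0 means negative and 1 means positive

- Process

- Send the movie review text in a POST predict request, e.g. "영화가 재미 없어요" ("The movie is not fun")

- Predict with the ML or DL model depending on the do_fast value in the request

- Here, do_fast is a variable that the client includes in the request

(explained again below)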

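- Return the predicted results (i.e. the sentiment analysis results) bundled as key : value pairs

2. API definition

- Receives a request in key:value JSON format and returns a JSON result in which the result for each text index is stored as key:value

- The predict request is sent with the POST method

# POST, i.e. predict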

json = {
    'text' : ['review1', 'review2', ... ],
    'do_fast' : true  # or false
}

- do_fast variable :

- True --> infer with the machine learning model, which offers fast inference

- False --> infer with the deep learning model, which is slower but more accurate
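As a minimal sketch of the request body described above, the snippet below builds it in Python with the standard json module. The helper name build_request_body is hypothetical and not part of the original project. The point it illustrates is that json.dumps writes Python's True/False as the lowercase JSON literals true/false, which is the form a JSON parser on the server side accepts.

```python
import json


def build_request_body(texts, do_fast=True):
    """Serialize review texts and the do_fast flag into a JSON string.

    build_request_body is a hypothetical helper used only for illustration.
    """
    # Accept a single string as well as a list of strings.
    if isinstance(texts, str):
        texts = [texts]
    payload = {"text": list(texts), "do_fast": bool(do_fast)}
    # ensure_ascii=False keeps Korean text readable instead of \u escapes.
    return json.dumps(payload, ensure_ascii=False)


body = build_request_body(["review1", "review2"], do_fast=False)
print(body)  # {"text": ["review1", "review2"], "do_fast": false}
```

Passing a plain string instead of a list yields a one-element "text" list, so both input styles produce the same request shape.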

# response

{
    "idx0" : {
        "text" : 'review1',
        'label' : 'positive' or 'negative',
        'confidence' : float
    },
    ...
    "idxn" : {
        "text" : 'reviewn',
        'label' : 'positive' or 'negative',
        'confidence' : float
    }
    ...
}

3. Add Deep Learning model handler (1/2)

- Develop a handler that uses a pre-trained deep learning model and returns the same result format as the machine learning model handler for the same input

3-1. Import torch

import torch

3-2. initialize

def initialize(self):
    from transformers import AutoTokenizer, AutoModelForSequenceClassification
    self.model_name_or_path = 'sackoh/bert-base-multilingual-cased-nsmc'
    self.tokenizer = AutoTokenizer.from_pretrained(self.model_name_or_path)
    self.model = AutoModelForSequenceClassification.from_pretrained(self.model_name_or_path)
    self.model.to('cpu')  # run the model on the CPU only

3-3. Preprocess

def preprocess(self, text):
    # preprocess raw text
    cleaned_text = self._clean_text(text)
    # vectorize cleaned text (pass the cleaned text, not the raw input)
    model_input = self.tokenizer(cleaned_text, return_tensors='pt', padding=True)
    return model_input

3-4. Inference

def inference(self, model_input):
    with torch.no_grad():  # no gradient tracking; weights are not updated
        model_output = self.model(**model_input)[0].cpu()
        # The code below is covered later; no need to understand it yet.
        # It applies a sigmoid element-wise to the logits.
        model_output = 1.0 / (1.0 + torch.exp(-model_output))
        model_output = model_output.numpy().astype('float')
    return model_output

3-5. Postprocess
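To make this step concrete: for each review, postprocessing picks the class with the highest score and reports that score as the confidence. The following is a minimal pure-Python sketch of that logic, together with the sigmoid used in inference, without NumPy or PyTorch. The ID2LABEL mapping is an assumption based on the convention stated earlier (0 = negative, 1 = positive), and the logits are made-up numbers for illustration, not real model output.

```python
import math

# Assumed mapping, following the 0 = negative / 1 = positive convention.
ID2LABEL = {0: "negative", 1: "positive"}


def sigmoid(x):
    """Logistic function, 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def postprocess(scores):
    """Return (labels, confidences) from per-class scores, one row per review."""
    labels, confidences = [], []
    for row in scores:
        best_id = max(range(len(row)), key=lambda i: row[i])  # argmax over classes
        labels.append(ID2LABEL[best_id])
        confidences.append(row[best_id])  # max over classes
    return labels, confidences


# Illustrative logits for two reviews (made-up numbers).
logits = [[2.0, -1.0], [-0.5, 1.5]]
scores = [[sigmoid(v) for v in row] for row in logits]
labels, confidences = postprocess(scores)
print(labels)  # ['negative', 'positive']
```

Because the sigmoid is applied to each logit independently, the two scores for a review need not sum to 1.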

def postprocess(self, model_output):
    # process predictions to predicted label and output format
    predicted_probabilities = model_output.max(axis=1)
    predicted_ids = model_output.argmax(axis=1)
    predicted_labels = [self.id2label[id_] for id_ in predicted_ids]
    return predicted_labels, predicted_probabilities

3-6. Handle

def handle(self, data):

# do above processes

model_input = self.preprocess(data)

model_output = self.inference(model_input)

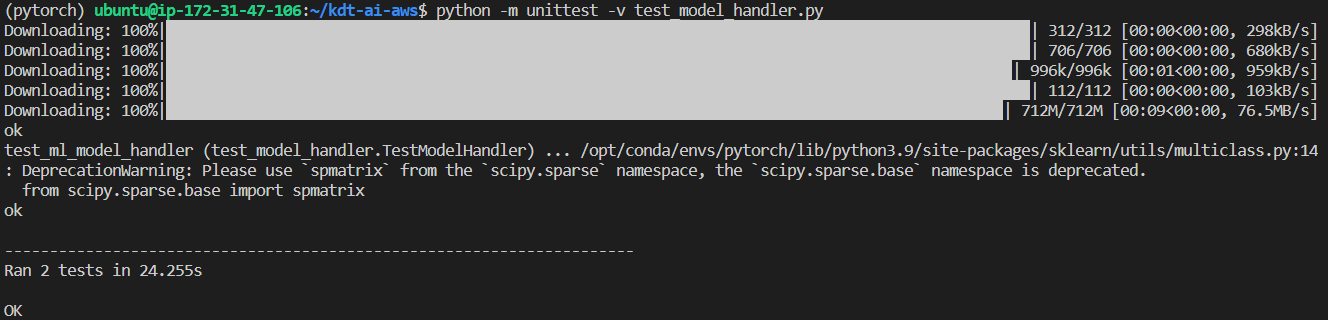

return self.postprocess(model_output)4. Unittest model handlers

- Run unit tests to check that the developed model handlers behave as intended

python -m unittest -v test_model_handler.py

--> unittest passed!

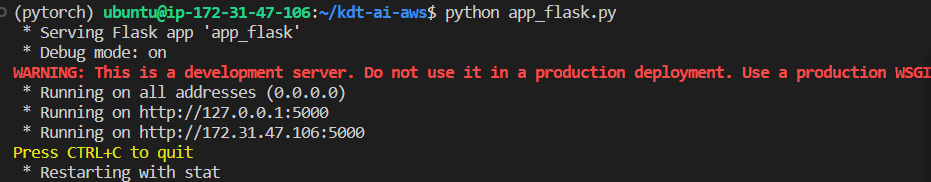
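The contents of test_model_handler.py are not shown here. As a rough sketch of what such a test might check, the example below uses a stand-in handler (StubHandler, a hypothetical class that is not part of the project and loads no model) and asserts that handle returns one label and one confidence per input text.

```python
import unittest


class StubHandler:
    """Hypothetical stand-in for a model handler; it does not load a real model."""

    def handle(self, data):
        texts = [data] if isinstance(data, str) else list(data)
        # Toy rule for illustration only: texts containing "good" are positive.
        labels = ["positive" if "good" in t else "negative" for t in texts]
        confidences = [0.9 for _ in texts]
        return labels, confidences


class TestModelHandler(unittest.TestCase):
    def setUp(self):
        self.handler = StubHandler()

    def test_handle_returns_label_and_confidence_per_text(self):
        texts = ["a good movie", "really boring"]
        labels, confidences = self.handler.handle(texts)
        self.assertEqual(len(labels), len(texts))
        self.assertEqual(len(confidences), len(texts))
        for label in labels:
            self.assertIn(label, ("positive", "negative"))
        for confidence in confidences:
            self.assertTrue(0.0 <= confidence <= 1.0)


def run_tests():
    """Run the test case programmatically and return the unittest result."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestModelHandler)
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_tests()
print(result.wasSuccessful())
```

In a real test file the stub would be replaced by the actual handlers, and the tests would be run with the python -m unittest command shown above rather than by calling run_tests directly.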

5. Flask API development & deployment

- Load the models as global variables and write code that returns predictions for the requested text

from flask import Flask, request, json
from model import MLModelHandler, DLModelHandler

app = Flask(__name__)

# assign model handler as global variable [2 LINES]
ml_handler = MLModelHandler()
dl_handler = DLModelHandler()


@app.route("/predict", methods=["POST"])
def predict():
    # handle request and body
    body = request.get_json()
    text = body.get('text', '')
    text = [text] if isinstance(text, str) else text
    do_fast = body.get('do_fast', True)

    # model inference [2 LINES]
    if do_fast:  # use the machine learning model
        predictions = ml_handler.handle(text)
    else:  # use the deep learning model
        predictions = dl_handler.handle(text)

    # response
    # predictions[0] : labels, predictions[1] : confidence for each label
    result = json.dumps({str(i): {'text': t, 'label': l, 'confidence': c}
                         for i, (t, l, c) in enumerate(zip(text, predictions[0], predictions[1]))})
    return result


if __name__ == "__main__":
    app.run(host='0.0.0.0', port=5000, debug=True)
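To show the shape of the JSON that this endpoint returns, here is a minimal sketch of the response-building step on its own, using only the standard library. build_response is a hypothetical helper name, and the labels and confidences are made-up values. Note that the keys produced by str(i) are "0", "1", and so on, rather than "idx0", "idx1".

```python
import json


def build_response(texts, labels, confidences):
    """Bundle each text with its label and confidence, keyed by its index."""
    return {
        str(i): {"text": t, "label": l, "confidence": float(c)}
        for i, (t, l, c) in enumerate(zip(texts, labels, confidences))
    }


# Made-up predictions for illustration; a real handler would supply these.
texts = ["review1", "review2"]
predictions = (["positive", "negative"], [0.97, 0.85])

response = build_response(texts, predictions[0], predictions[1])
print(json.dumps(response, ensure_ascii=False, indent=2))
```

Converting each confidence with float() is a defensive choice in this sketch so that the values serialize as plain JSON numbers regardless of the numeric type the handler returns.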

6. Test API on remote

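- Test by sending requests to the API on the server from a remote machine

- host : the public IP address received when the EC2 instance was created, or an Elastic IP address that you assigned yourself

- port : the port number configured when the EC2 instance was created (ex. 5000)

- Terminal method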

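curl -d '{"text" : ["영화 오랜만에 봤는데 괜찮은 영화였어", "정말 지루했어"], "do_fast" : false}' \
-H "Content-Type: application/json" \
-X POST \
http://43.201.30.132:5000/predict

- Python method

import requests

url = 'http://43.201.30.132:5000/predict'
# same payload as the curl example above
data = {'text': ['영화 오랜만에 봤는데 괜찮은 영화였어', '정말 지루했어'], 'do_fast': False}
response = requests.post(url, json=data)

--> I tried the Python method, but the following error occurred...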