Creating EKS with Terraform

The directory layout is as follows.

Terraform code

# Allow terraform to access k8s

provider "kubernetes" {

host = module.eks.cluster_endpoint

cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)

token = data.aws_eks_cluster_auth.eks.token

}

# Allow Helm to access k8s

provider "helm" {

kubernetes {

host = module.eks.cluster_endpoint

cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)

token = data.aws_eks_cluster_auth.eks.token

}

}

data "aws_availability_zones" "available" {}

data "aws_caller_identity" "current" {} # ${data.aws_caller_identity.current.account_id}

data "aws_eks_cluster_auth" "eks" {name = module.eks.cluster_name}

locals {

name = "infra"

cluster_version = "1.26"

region = "us-east-1"

vpc_id = data.terraform_remote_state.vpc.outputs.vpc_id

subnet_ids = data.terraform_remote_state.vpc.outputs.private_subnets

sg_common_id = data.terraform_remote_state.sg.outputs.yusa_bastion_id

external_dns_arn = "<public hosted zone ARN>"

external_cert_arn = "<ACM certificate ARN>"

tags = merge(

data.aws_default_tags.aws_dt.tags,

{ Owner = "yusa" }

)

}

# # # # # # # # # # # # # # # # # # # # # # # # # # #

# # EKS Module

# # # # # # # # # # # # # # # # # # # # # # # # # # #

# aws eks --region <region> update-kubeconfig --name <cluster name>

module "eks" {

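source = "terraform-aws-modules/eks/aws"

# manage_aws_auth_configmap and aws_auth_users below were removed in v20 of this module, so pin to 19.x

version = "~> 19.0"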

cluster_name = "eks-${local.name}-cluster"

cluster_version = local.cluster_version

cluster_endpoint_private_access = true

cluster_endpoint_public_access = true

# Started with a private-only endpoint, but it kept erroring out, so I turned public access on as well. Got too frustrated to keep fighting it... ^^

# aws-auth CM

manage_aws_auth_configmap = true

# Give the auth user system:masters so the IAM user can see the cluster too; grant something narrower if you prefer.

# (With masters, no extra RBAC setup is needed on the k8s side.)

aws_auth_users = [

{

userarn = "<ARN of the IAM user to add>"

username = "yusa"

groups = ["system:masters"]

}

]

vpc_id = local.vpc_id

subnet_ids = local.subnet_ids

# Saving money...

create_cloudwatch_log_group = false

# https://repost.aws/ko/knowledge-center/load-balancer-troubleshoot-creating

eks_managed_node_group_defaults = {

instance_types = ["t3.medium"]

iam_role_attach_cni_policy = true

use_name_prefix = false

use_custom_launch_template = false

block_device_mappings = {

xvda = {

device_name = "/dev/xvda"

ebs = {

volume_size = 20

volume_type = "gp2"

delete_on_termination = true

}

}

}

remote_access = {

ec2_ssh_key = "<name of your EC2 key pair>"

source_security_group_ids = [local.sg_common_id]

tags = {

"kubernetes.io/cluster/eks-infra-cluster" = "owned"

}

}

tags = local.tags

}

cluster_identity_providers = {

sts = {

client_id = "sts.amazonaws.com"

}

}

cluster_addons = {

coredns = {

most_recent = true

resolve_conflicts = "OVERWRITE"

}

kube-proxy = {

most_recent = true

}

vpc-cni = {

most_recent = true

resolve_conflicts = "OVERWRITE"

}

}

eks_managed_node_groups = {

infra-node-group = {

name = "eks-${local.name}-nodegroup"

labels = {

nodegroup = "infra"

}

desired_size = 1

min_size = 1

max_size = 2

}

}

}

# https://registry.terraform.io/modules/terraform-aws-modules/iam/aws/latest/examples/iam-role-for-service-accounts-eks#module_load_balancer_controller_targetgroup_binding_only_irsa_role

module "load_balancer_controller_irsa_role" {

source = "terraform-aws-modules/iam/aws//modules/iam-role-for-service-accounts-eks"

role_name = "${local.name}-lb-controller-irsa-role"

attach_load_balancer_controller_policy = true

oidc_providers = {

main = {

provider_arn = module.eks.oidc_provider_arn

namespace_service_accounts = ["kube-system:aws-load-balancer-controller"]

}

}

tags = local.tags

}

module "load_balancer_controller_targetgroup_binding_only_irsa_role" {

source = "terraform-aws-modules/iam/aws//modules/iam-role-for-service-accounts-eks"

role_name = "${local.name}-lb-controller-tg-binding-only-irsa-role"

attach_load_balancer_controller_targetgroup_binding_only_policy = true

oidc_providers = {

main = {

provider_arn = module.eks.oidc_provider_arn

namespace_service_accounts = ["kube-system:aws-load-balancer-controller"]

}

}

tags = local.tags

}

resource "kubernetes_service_account" "aws-load-balancer-controller" {

metadata {

name = "aws-load-balancer-controller"

namespace = "kube-system"

annotations = {

"eks.amazonaws.com/role-arn" = module.load_balancer_controller_irsa_role.iam_role_arn

}

labels = {

"app.kubernetes.io/component" = "controller"

"app.kubernetes.io/name" = "aws-load-balancer-controller"

}

}

depends_on = [module.load_balancer_controller_irsa_role]

}

resource "kubernetes_service_account" "external-dns" {

metadata {

name = "external-dns"

namespace = "kube-system"

annotations = {

"eks.amazonaws.com/role-arn" = module.external_dns_irsa_role.iam_role_arn

}

}

depends_on = [module.external_dns_irsa_role]

}

module "external_dns_irsa_role" {

source = "terraform-aws-modules/iam/aws//modules/iam-role-for-service-accounts-eks"

role_name = "${local.name}-externaldns-irsa-role"

attach_external_dns_policy = true

external_dns_hosted_zone_arns = [local.external_dns_arn]

oidc_providers = {

main = {

provider_arn = module.eks.oidc_provider_arn

namespace_service_accounts = ["kube-system:external-dns"]

}

}

tags = local.tags

}

# # # # # # # # # # # # # # # # # # # # # # # # # # #

# # HELM

# # # # # # # # # # # # # # # # # # # # # # # # # # #

# https://github.com/GSA/terraform-kubernetes-aws-load-balancer-controller/blob/main/main.tf

# https://registry.terraform.io/providers/hashicorp/helm/latest/docs/resources/release

# https://kubernetes-sigs.github.io/aws-load-balancer-controller/v2.5/

resource "helm_release" "aws-load-balancer-controller" {

name = "aws-load-balancer-controller"

namespace = "kube-system"

repository = "https://aws.github.io/eks-charts"

chart = "aws-load-balancer-controller"

set {

name = "clusterName"

value = module.eks.cluster_name

}

set {

name = "serviceAccount.create"

value = false

}

set {

name = "serviceAccount.name"

value = "aws-load-balancer-controller"

}

# Same as above

# dynamic "set" {

# for_each = {

# "clusterName" = module.eks.cluster_name

# }

# }

# depends_on = [kubernetes_service_account.aws-load-balancer-controller]

}

# https://tech.polyconseil.fr/external-dns-helm-terraform.html

# parameter https://github.com/kubernetes-sigs/external-dns/tree/master/charts/external-dns

resource "helm_release" "external_dns" {

name = "external-dns"

namespace = "kube-system"

repository = "https://charts.bitnami.com/bitnami"

chart = "external-dns"

wait = false

set {

name = "provider"

value = "aws"

}

set {

name = "serviceAccount.create"

value = false

}

set {

name = "serviceAccount.name"

value = "external-dns"

}

set {

name = "policy"

value = "sync"

}

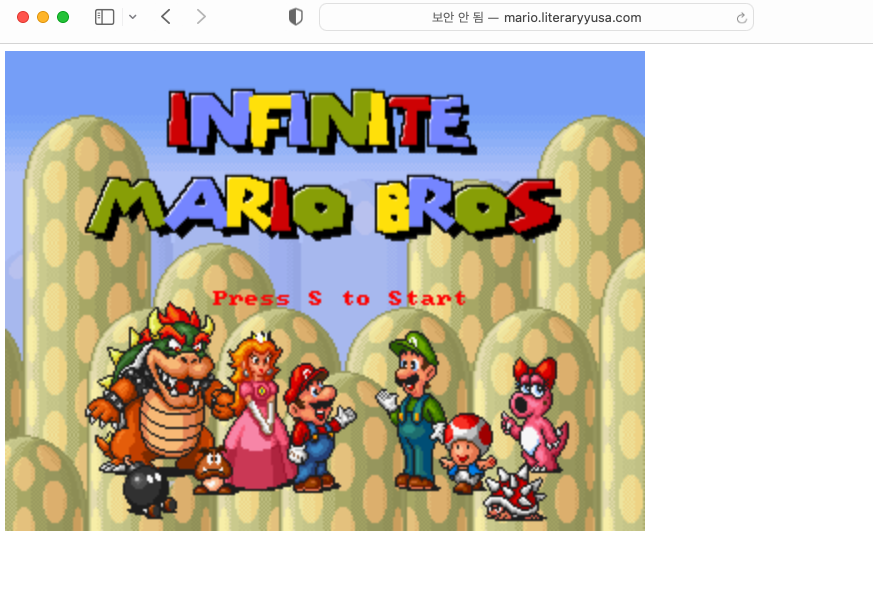

}결과 확인을 위한 mario

[root@ip-10-1-8-104 ~]# cat mario.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: mario
  name: mario
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mario
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: mario
    spec:
      containers:
      - image: pengbai/docker-supermario
        name: docker-supermario
---
apiVersion: v1
kind: Service
metadata:
  name: mario
spec:
  selector:
    app: mario
  ports:
  - port: 80
    targetPort: 8080
    protocol: TCP
  type: NodePort

[root@ip-10-1-8-104 ~]# kubectl apply -f mario.yaml

deployment.apps/mario created

service/mario created

[root@ip-10-1-8-104 ~]# cat mario-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: default
  name: mario-ingress
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}]'
    alb.ingress.kubernetes.io/load-balancer-name: yusa-mario
    alb.ingress.kubernetes.io/target-type: ip
spec:
  rules:
  - host: mario.literaryyusa.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: mario
            port:
              number: 80

[root@ip-10-1-8-104 ~]# kubectl get pods -A

NAMESPACE     NAME                                            READY   STATUS    RESTARTS   AGE
default       mario-6566bc5b4d-rmzdb                          1/1     Running   0          36m
kube-system   aws-load-balancer-controller-5b9f545d58-8kpx4   1/1     Running   0          16h
kube-system   aws-load-balancer-controller-5b9f545d58-pclzf   1/1     Running   0          16h
kube-system   aws-node-d2ndd                                  1/1     Running   0          50m
kube-system   coredns-7d484f769c-f5cqs                        1/1     Running   0          16h
kube-system   coredns-7d484f769c-w877z                        1/1     Running   0          16h
kube-system   external-dns-5b89cd4449-4svx5                   1/1     Running   0          45m
kube-system   kube-proxy-jb85k                                1/1     Running   0          50

잘 설정했다면 저렇게 올라오게 된다! https설정을 하려면 이렇게 바꾸면되더라!

alb.ingress.kubernetes.io/ssl-redirect: '443'

alb.ingress.kubernetes.io/certificate-arn: 내꺼 arn넣기

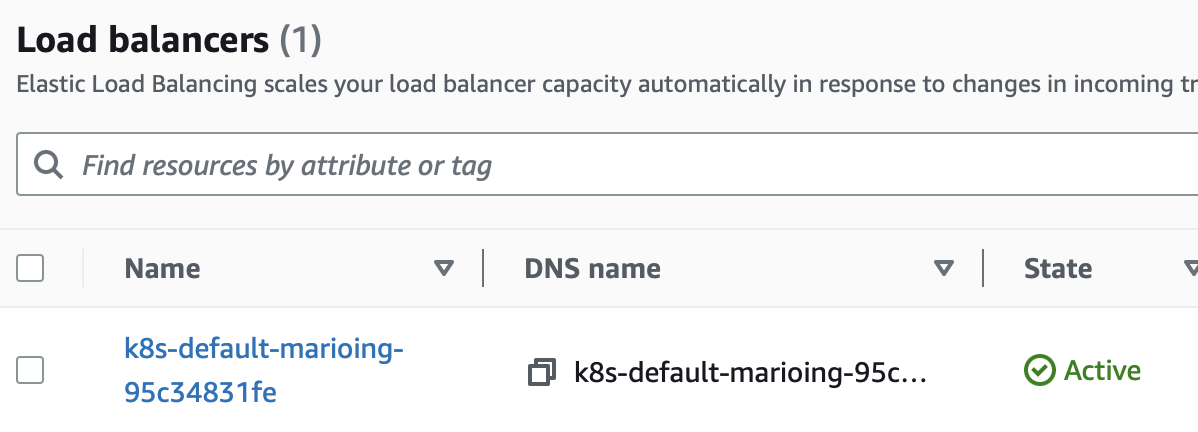

alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS":443}]'인그레스 까지 올리면 이렇게 아래와 같이 로드밸런서가 생성된다!

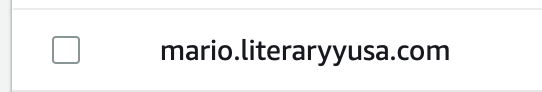
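I applied the Ingress with kubectl, but the same HTTPS setup could probably be kept in Terraform too. Below is a rough sketch using the `kubernetes_ingress_v1` resource from the kubernetes provider. It reuses `local.external_cert_arn` from the locals block and the host and service names from the YAML above; the resource name `mario` is just something I made up for the example, and I have not run this exact block.

```hcl
# Sketch only: the mario Ingress with the HTTPS annotations, managed by Terraform.
resource "kubernetes_ingress_v1" "mario" {
  metadata {
    name      = "mario-ingress"
    namespace = "default"

    annotations = {
      "kubernetes.io/ingress.class"           = "alb"
      "alb.ingress.kubernetes.io/scheme"      = "internet-facing"
      "alb.ingress.kubernetes.io/target-type" = "ip"

      # Listen on both ports and redirect HTTP to HTTPS.
      "alb.ingress.kubernetes.io/listen-ports"    = jsonencode([{ HTTP = 80 }, { HTTPS = 443 }])
      "alb.ingress.kubernetes.io/ssl-redirect"    = "443"
      "alb.ingress.kubernetes.io/certificate-arn" = local.external_cert_arn
    }
  }

  spec {
    rule {
      host = "mario.literaryyusa.com"

      http {
        path {
          path      = "/"
          path_type = "Prefix"

          backend {
            service {
              name = "mario"

              port {
                number = 80
              }
            }
          }
        }
      }
    }
  }
}
```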

Route 53 gets the records below added.

The A record points at the ALB address, and some TXT records are added alongside it!

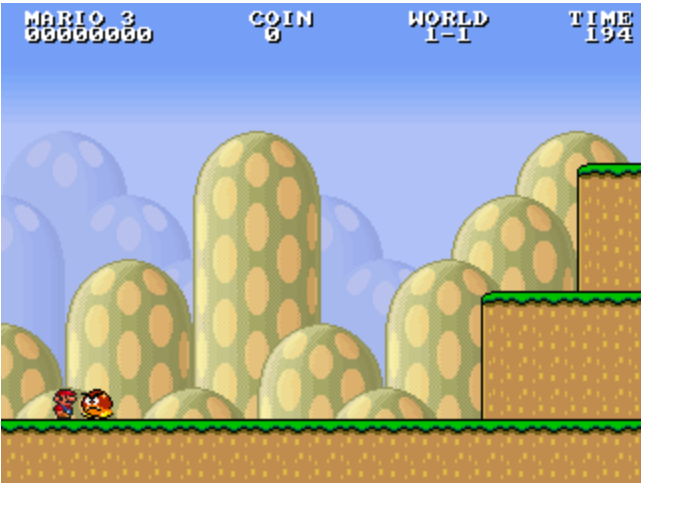

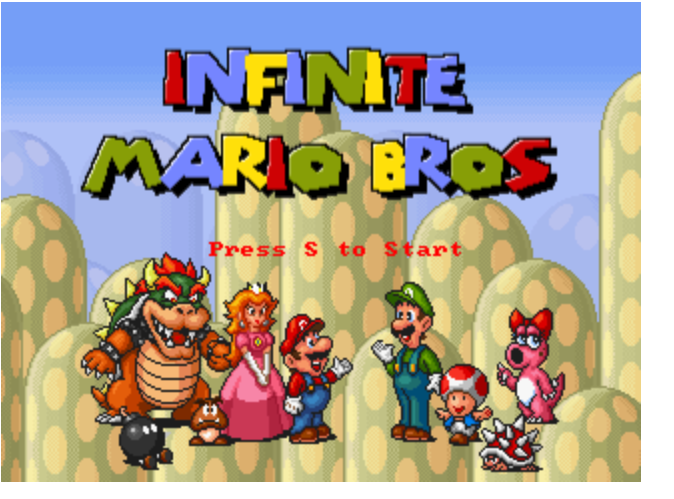

After that, opening the host in a browser brings up the page.

Errors I ran into

Ingress never gets an address

[root@ip-10-1-8-104 ~]# kubectl get ingress -A

NAMESPACE NAME CLASS HOSTS ADDRESS PORTS AGE

default ingress * 80 13s

If the ADDRESS stays empty, check whether the VPC subnets are tagged..!

The public subnets need the tag below; only then can the controller discover them and deploy the ALB there.

public_subnet_tags = {

"kubernetes.io/role/elb" = "1"

}

Helm errors

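Things only went through without errors in this order: nodes come up -> IRSA is set up -> Helm releases are installed. One apply was still not finished after 15 minutes, so I stopped it and ran it again, and then it worked. I still don't know why..;;

There seems to be a dependency between these steps.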
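If it really is an ordering problem, one thing to try is spelling the order out with `depends_on` instead of re-running the apply. The sketch below is the same load balancer controller release as in the code above, with only the `depends_on` at the bottom added. That it cures the 15-minute hang is my assumption, not something I verified.

```hcl
# Sketch only: same release as above, with the install order made explicit.
resource "helm_release" "aws-load-balancer-controller" {
  name       = "aws-load-balancer-controller"
  namespace  = "kube-system"
  repository = "https://aws.github.io/eks-charts"
  chart      = "aws-load-balancer-controller"

  set {
    name  = "clusterName"
    value = module.eks.cluster_name
  }

  set {
    name  = "serviceAccount.create"
    value = false
  }

  set {
    name  = "serviceAccount.name"
    value = "aws-load-balancer-controller"
  }

  # Assumption: the chart should only be installed once the cluster and its
  # node group exist and the IRSA-annotated service account has been created.
  depends_on = [
    module.eks,
    kubernetes_service_account.aws-load-balancer-controller,
  ]
}
```

The service account already depends on the IRSA role module, so this chains nodes -> IRSA -> Helm in the order that worked for me.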

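external-dns errors

When external-dns registers records, they have to go into the public hosted zone, because the host is served from a public zone;;;

Registering them in a private zone and then trying to reach the host from outside obviously doesn't work...

I was just being dumb on this one...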
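One way to avoid pasting the wrong zone ARN is to look the zone up and force it to be the public one. This is a small sketch with the `aws_route53_zone` data source; the zone name is taken from the Ingress host above, and the local name `external_dns_public_zone_arn` is made up for the example.

```hcl
# Sketch only: resolve the public hosted zone instead of hard-coding its ARN.
data "aws_route53_zone" "public" {
  name         = "literaryyusa.com" # assumed from the host mario.literaryyusa.com
  private_zone = false              # never match a private zone with the same name
}

locals {
  # Hypothetical local; it could be passed to external_dns_hosted_zone_arns
  # in the external_dns_irsa_role module in place of local.external_dns_arn.
  external_dns_public_zone_arn = data.aws_route53_zone.public.arn
}
```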

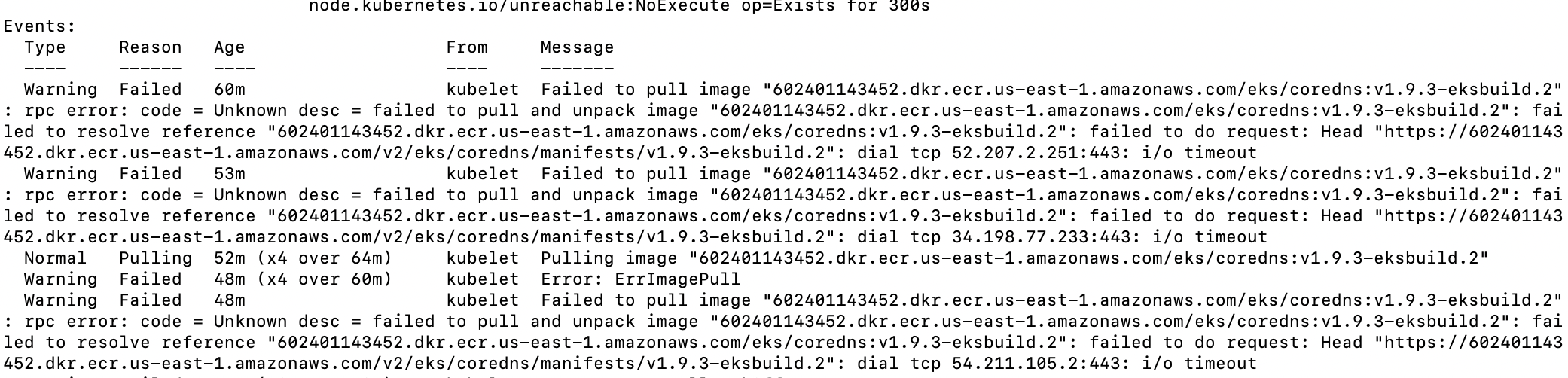

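Gave up on building a private EKS with Terraform.. it broke at the OIDC and IRSA setup ㅠ Maybe an endpoint problem ㅠ

I couldn't figure out why the nodes kept failing to register.

My guess for now: the private subnets have no NAT either, so VPC endpoints are a must.

Sigh... half my day is gone.. A private setup can't reach the internet, so the endpoints EKS needs have to be created. I did that every single time before and just forgot this time... ^^;;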

https://aws-ia.github.io/terraform-aws-eks-blueprints/node-groups/

Note: when EKS is private and there is no NAT (no internet connectivity), ECR endpoints have to be created as well, or image pulls won't work.

The images simply won't be fetched... ^^
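For reference, here is roughly what those endpoints could look like in Terraform. I did not get as far as applying this, so treat it as a sketch: it reuses `local.vpc_id`, `local.subnet_ids` and `local.region` from the locals above, while the two variables are hypothetical and stand for a security group that allows HTTPS from the nodes and the route tables of the private subnets. The service list (ec2, ecr.api, ecr.dkr, sts, plus the S3 gateway) is what I understand a private cluster to need at minimum; add-ons may need more.

```hcl
# Hypothetical inputs, not part of the code above.
variable "endpoint_sg_id" {
  type = string # security group allowing 443 from the nodes
}

variable "private_route_table_ids" {
  type = list(string) # route tables of the private subnets
}

# Interface endpoints: EC2 API, ECR (API and Docker registry), and STS for IRSA.
resource "aws_vpc_endpoint" "interface" {
  for_each = toset(["ec2", "ecr.api", "ecr.dkr", "sts"])

  vpc_id              = local.vpc_id
  service_name        = "com.amazonaws.${local.region}.${each.key}"
  vpc_endpoint_type   = "Interface"
  subnet_ids          = local.subnet_ids
  security_group_ids  = [var.endpoint_sg_id]
  private_dns_enabled = true
}

# Gateway endpoint for S3, where the ECR image layers are stored.
resource "aws_vpc_endpoint" "s3" {
  vpc_id            = local.vpc_id
  service_name      = "com.amazonaws.${local.region}.s3"
  vpc_endpoint_type = "Gateway"
  route_table_ids   = var.private_route_table_ids
}
```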

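After fighting with the private setup, my conclusion is that a private cluster is best created in one shot with eksctl.

Honestly, I also wonder who even runs this somewhere without internet access.. ^^;;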