This post summarizes what I studied and practiced in the third cohort of KANS (Kubernetes Advanced Networking Study). Week 8 covers the Cilium CNI. I also added a test I was personally curious about: installing Cilium on EKS in place of the VPC CNI.

1. Cilium CNI lab environment on a MacBook with VMware Fusion

Vanilla K8s environment

- MacBook Air (M1, 16GB) + Vagrant + VMware Fusion 13 Pro + Ubuntu 22.04 (arm64)

- K8s v1.30.6, node OS (Ubuntu 22.04.3), CNI (Cilium)

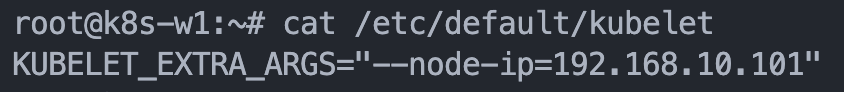

Why kube-proxy is not installed

kube-proxy is left out of this cluster for the following reasons.

To reach an application from inside or outside a Kubernetes cluster you need an endpoint, and the Kubernetes Service object is what provides it. A Service that exposes an application deployed on the cluster is usually configured as ClusterIP or NodePort.

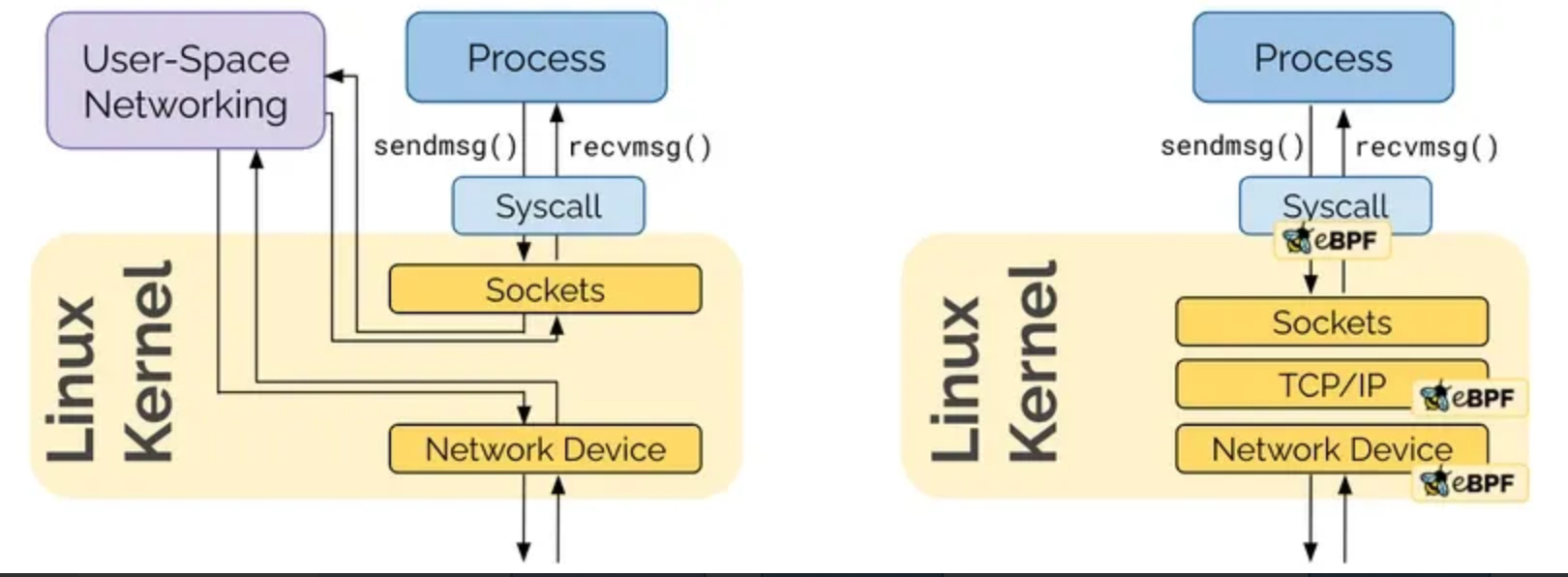

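For the Service types mentioned above, when a request arrives at the endpoint, the kube-proxy deployed on each node takes care of routing it to a target Pod.

More precisely, the routing is driven by the iptables rules that kube-proxy generates.

When a Service is created, iptables chains and rules are created to spread traffic across its target backends, and kube-proxy is responsible for generating and distributing those rules.

Because kube-proxy does this heavy lifting, creating and operating a Service takes no extra effort. In environments with many Services, however, an iptables-based data path can cause several problems:

- Performance: rules are evaluated one by one until a match is found.

- Time: applying the rules for a new Service means replacing the entire iptables rule list.

- Operations: the number of iptables rules grows rapidly, which makes them hard to manage.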
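To get a feel for how large the kube-proxy rule set is on a node, one rough approach is to count the Service-related chains in `iptables-save` output. The sketch below assumes kube-proxy runs in iptables mode, where its chains are prefixed `KUBE-SVC-` (one per Service port) and `KUBE-SEP-` (one per endpoint). The function name `count_kube_chains` is made up for this example.

```shell
#!/bin/sh
# count_kube_chains PREFIX
# Read `iptables-save` output on stdin and print how many chains were
# declared with the given prefix (for example KUBE-SVC or KUBE-SEP).
# Chain declarations look like ":KUBE-SVC-XXXXXXXXXXXXXXXX - [0:0]".
count_kube_chains() {
    prefix="$1"
    # grep -c prints 0 but exits non-zero when nothing matches,
    # so the exit status is ignored on purpose.
    grep -c "^:${prefix}-" || true
}
```

On a node that still runs kube-proxy, it could be used as `iptables-save -t nat | count_kube_chains KUBE-SVC`. On the kube-proxy-free cluster built in this post the count would be expected to be 0.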

Cilium, the subject of this study, is said to work without kube-proxy and without relying on iptables.

[Source] [Kubernetes/Networking] eBPF Basic | by kangdorr

Problem and fix during the initial Vanilla K8s setup

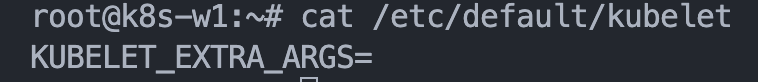

The Vagrantfile assigns each VM a private IP in 192.168.10.x, but the worker nodes joined the Vanilla K8s cluster with their eth0 (NAT) IP instead.

When a cluster is installed, a node's INTERNAL-IP often defaults to the IP assigned to eth0. To use eth1 as the INTERNAL-IP I wanted to pass --node-ip, but unlike k3s, kubeadm join does not accept a --node-ip option.

kubeadm join --token 123456.1234567890123456 --discovery-token-unsafe-skip-ca-verification 192.168.10.10:6443

The fix is to set --node-ip in the /etc/default/kubelet file on each worker node.

The k8s-w.sh script is changed as follows to set the kubelet option.

[Before]

# k8s Controlplane Join - API Server 192.168.10.10
kubeadm join --token 123456.1234567890123456 --discovery-token-unsafe-skip-ca-verification 192.168.10.10:6443

[After]

# k8s Controlplane Join - API Server 192.168.10.10
sed -i "s/KUBELET_EXTRA_ARGS=/KUBELET_EXTRA_ARGS=\"--node-ip=192.168.10.10$1\"/g" /etc/default/kubelet
systemctl daemon-reload
systemctl restart kubelet
kubeadm join --token 123456.1234567890123456 --discovery-token-unsafe-skip-ca-verification 192.168.10.10:6443
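The sed command above depends on a `KUBELET_EXTRA_ARGS=` line already being present, and running it twice would insert the flag twice. As a hedged alternative, the sketch below rewrites the line so that repeated runs give the same result. The function name `set_kubelet_node_ip` is hypothetical, and the file path is a parameter so it can be tried on a scratch copy before touching /etc/default/kubelet. Note that it replaces any other extra arguments already on that line.

```shell
#!/bin/sh
# set_kubelet_node_ip FILE IP
# Make sure FILE ends up with exactly one KUBELET_EXTRA_ARGS line that
# carries --node-ip=IP. Any previous KUBELET_EXTRA_ARGS line is dropped,
# so other extra arguments on that line are lost (acceptable for this lab).
set_kubelet_node_ip() {
    file="$1"
    ip="$2"
    touch "$file"
    # Copy every line except an existing KUBELET_EXTRA_ARGS assignment.
    # grep -v exits non-zero when it prints nothing, which is fine here.
    grep -v '^KUBELET_EXTRA_ARGS=' "$file" > "$file.tmp" || true
    printf 'KUBELET_EXTRA_ARGS="--node-ip=%s"\n' "$ip" >> "$file.tmp"
    mv "$file.tmp" "$file"
}
```

In k8s-w.sh this would be called as `set_kubelet_node_ip /etc/default/kubelet "192.168.10.10$1"` before restarting the kubelet.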

Downloading the source & building the Vanilla K8s cluster

I customized the AWS CloudFormation deployment source (kans-8w.yaml) provided by Gasida so that it also runs on an M-series MacBook. For details on Vagrant and VMware Fusion, see "KANS3 - Week 6 Ingress & Gateway API + CoreDNS, 1. Setting up a k3s lab environment on a Mac".

- Download the source

❯ git clone https://github.com/icebreaker70/kans-vanilla-k8s-cilium

❯ cd kans-vanilla-k8s-cilium

- Create the VMs

❯ vagrant up --provision

- Check VM status

❯ vagrant status

Current machine states:

k8s-s running (vmware_desktop)

k8s-w1 running (vmware_desktop)

k8s-w2 running (vmware_desktop)

testpc running (vmware_desktop)

- Connect to the VMs

❯ vagrant ssh k8s-s

❯ vagrant ssh k8s-w1

❯ vagrant ssh k8s-w2

❯ vagrant ssh testpc

- Check the K8s cluster

❯ vagrant ssh k8s-s

$ kc cluster-info # kc is an alias for a tool that colorizes kubectl output

$ kc get nodes -o wide

$ kc get pods -A

- Suspend the VMs

❯ vagrant suspend

- Resume the VMs

❯ vagrant resume

- Delete the VMs

❯ vagrant destroy -f

Accessing the K8s cluster from the local PC

- Copy the kube-config file from k8s-s to the local machine

# cat <<EOT >> /etc/hosts
#K8S
192.168.10.10 k8s-s
192.168.10.101 k8s-w1
192.168.10.102 k8s-w2
192.168.20.200 testpc
#End of K8S
EOT

❯ vagrant ssh k8s-s
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# exit
vagrant@k8s-s:~$ sudo cp ~root/.kube/config config
vagrant@k8s-s:~$ sudo chown vagrant:vagrant config
vagrant@k8s-s:~$ exit

❯ vagrant scp k8s-s:config ~/.kube/config
Warning: Permanently added '[127.0.0.1]:50010' (ED25519) to the list of known hosts.
config

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# kc get node -o wide # NotReady because no CNI is installed yet
NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME
k8s-s NotReady control-plane 21m v1.30.6 192.168.10.10 <none> Ubuntu 22.04.3 LTS 5.15.0-92-generic containerd://1.7.22
k8s-w1 NotReady <none> 19m v1.30.6 192.168.10.101 <none> Ubuntu 22.04.3 LTS 5.15.0-92-generic containerd://1.7.22
k8s-w2 NotReady <none> 17m v1.30.6 192.168.10.102 <none> Ubuntu 22.04.3 LTS 5.15.0-92-generic containerd://1.7.22

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# kc get pod -A -o wide
NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
kube-system coredns-55cb58b774-2dvq5 0/1 Pending 0 167m <none> <none> <none> <none>
kube-system coredns-55cb58b774-t64fc 0/1 Pending 0 167m <none> <none> <none> <none>
kube-system etcd-k8s-s 1/1 Running 0 167m 192.168.10.10 k8s-s <none> <none>
kube-system kube-apiserver-k8s-s 1/1 Running 0 167m 192.168.10.10 k8s-s <none> <none>
kube-system kube-controller-manager-k8s-s 1/1 Running 0 167m 192.168.10.10 k8s-s <none> <none>
kube-system kube-scheduler-k8s-s 1/1 Running 0 167m 192.168.10.10 k8s-s <none> <none>

# For Cilium to work properly, a kernel version of at least 5.8 is recommended
❯ uname -a
Linux k8s-s 5.15.0-92-generic #102-Ubuntu SMP Wed Jan 10 09:37:39 UTC 2024 aarch64 aarch64 aarch64 GNU/Linux

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# hostnamectl
Static hostname: k8s-s
Icon name: computer-vm
Chassis: vm
Machine ID: aa0d6ab8ce404638a3f1f84dd2101103
Boot ID: 17886a2311a24e99b4bfe35ec1ba034d
Virtualization: vmware
Operating System: Ubuntu 22.04.3 LTS
Kernel: Linux 5.15.0-92-generic
Architecture: arm64
Hardware Vendor: VMware, Inc.
Hardware Model: VMware20,1
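The kernel check above is done by eye. If it needs to be scripted, for example in a provisioning script, a comparison along these lines may help. This is a minimal sketch: the function name `kernel_at_least` is made up, the 5.8 threshold is the recommendation quoted in this post rather than an official hard requirement, and it relies on `sort -V` from GNU coreutils.

```shell
#!/bin/sh
# kernel_at_least CURRENT MINIMUM
# Exit 0 when CURRENT is the same as or newer than MINIMUM, non-zero otherwise.
kernel_at_least() {
    current="${1%%-*}"   # strip the distro suffix: 5.15.0-92-generic -> 5.15.0
    minimum="$2"
    # Version-sort both values; if MINIMUM sorts first (or they are equal),
    # CURRENT is new enough.
    lowest=$(printf '%s\n%s\n' "$current" "$minimum" | sort -V | head -n 1)
    [ "$lowest" = "$minimum" ]
}
```

Typical use on a node would be `kernel_at_least "$(uname -r)" 5.8 && echo "kernel OK"`.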

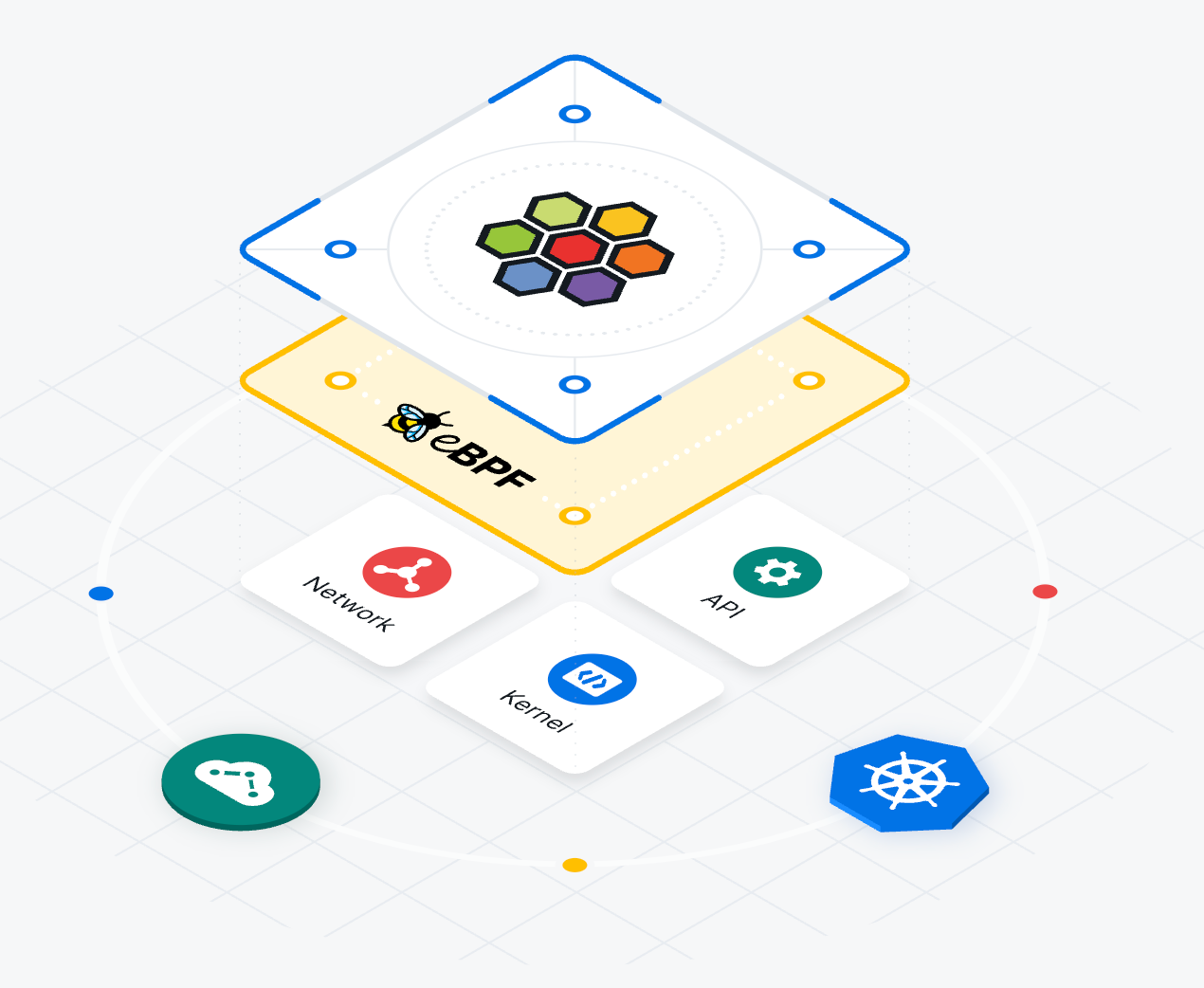

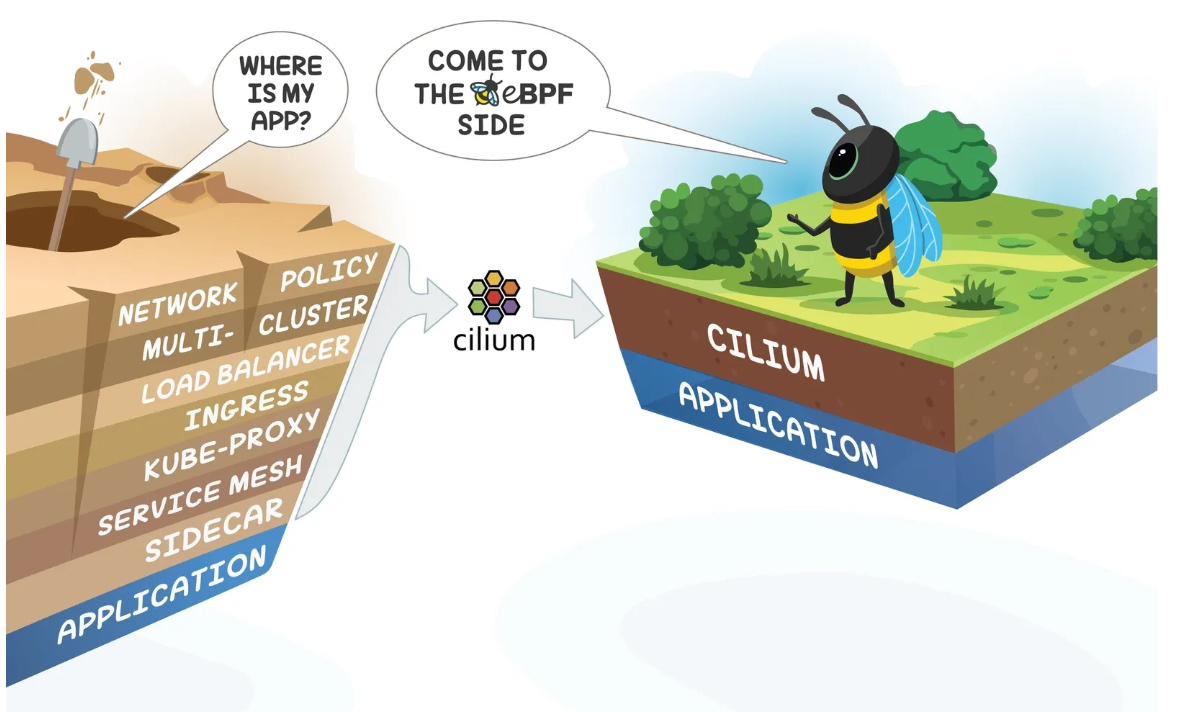

2. Introduction to Cilium

Cilium joined the CNCF in October 2021.

Google GKE Dataplane V2 and AWS EKS Anywhere also use Cilium as their default CNI.

100% kube-proxy replacement: works with almost no use of iptables, with datapath optimizations (iptables bypass).

However, some behaviors that depend on iptables features can run into issues, although these are being fixed continuously (e.g. Istio, FHRP/VRRP).

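Cilium is an open-source network plugin that provides networking and security for Kubernetes and other cloud-native environments. It uses the eBPF (extended Berkeley Packet Filter) technology of the Linux kernel to deliver high performance and scalability. eBPF allows user-defined code to run in kernel space, so packet processing and security policy enforcement can be done efficiently.

The main features of Cilium are:

- High-performance networking: packet filtering, load balancing, and traffic management run in kernel space through eBPF, which gives high performance.

- Fine-grained security policy: Cilium can enforce security policy at the application level as well as the network level, which allows tighter control over communication between microservices.

- Logging and monitoring: Cilium provides real-time traffic monitoring, logging, and tracing, making it easier to understand network traffic and resolve problems quickly.

- Scalability and cloud-native support: it is designed to run on Kubernetes and other cloud-native environments, so it can be used flexibly in multi-cluster and hybrid-cloud setups.

Cilium is used mainly where network security matters, and its eBPF-based dynamic security policy and network management features have made it one of the networking solutions many Kubernetes users choose.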

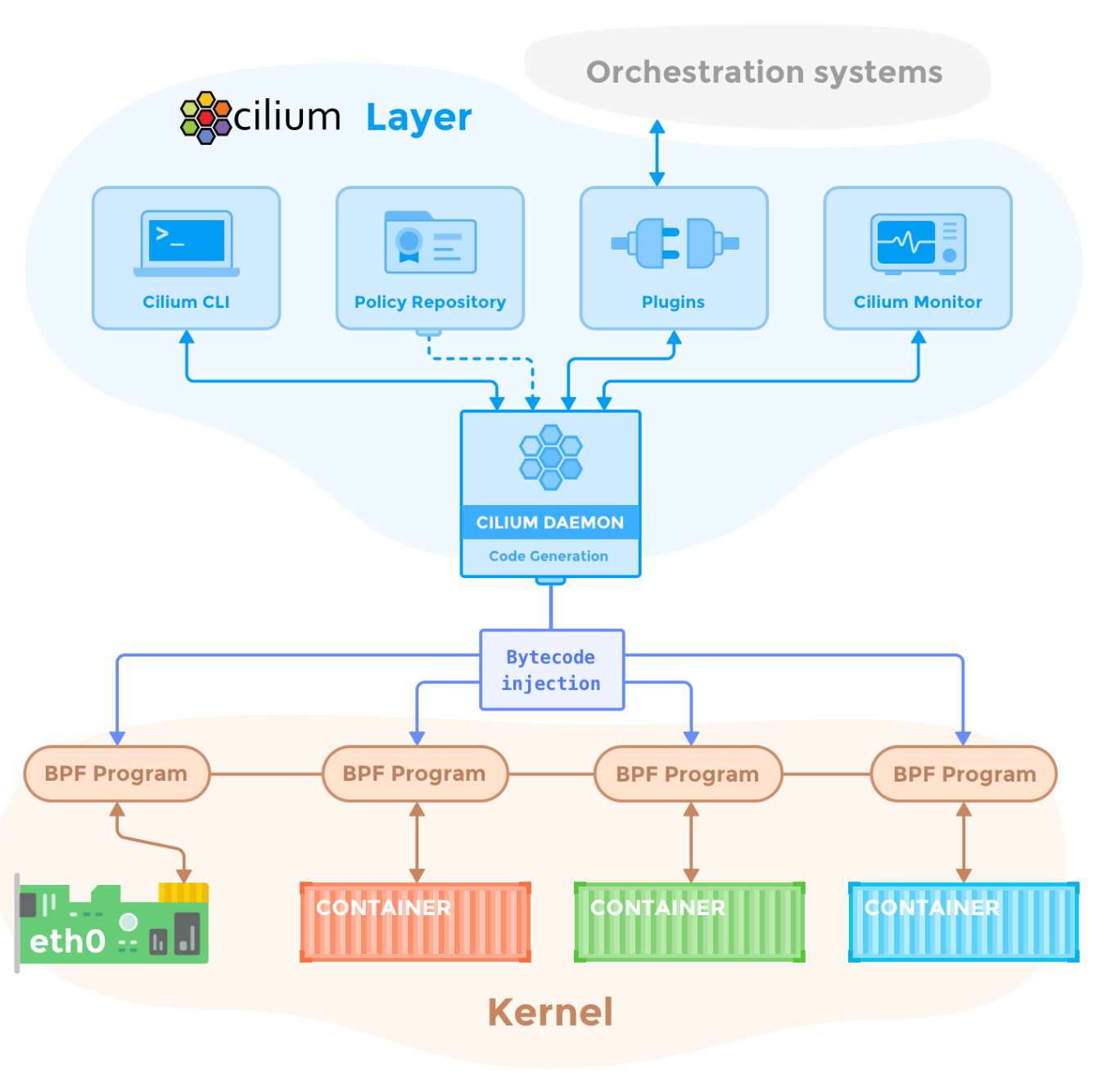

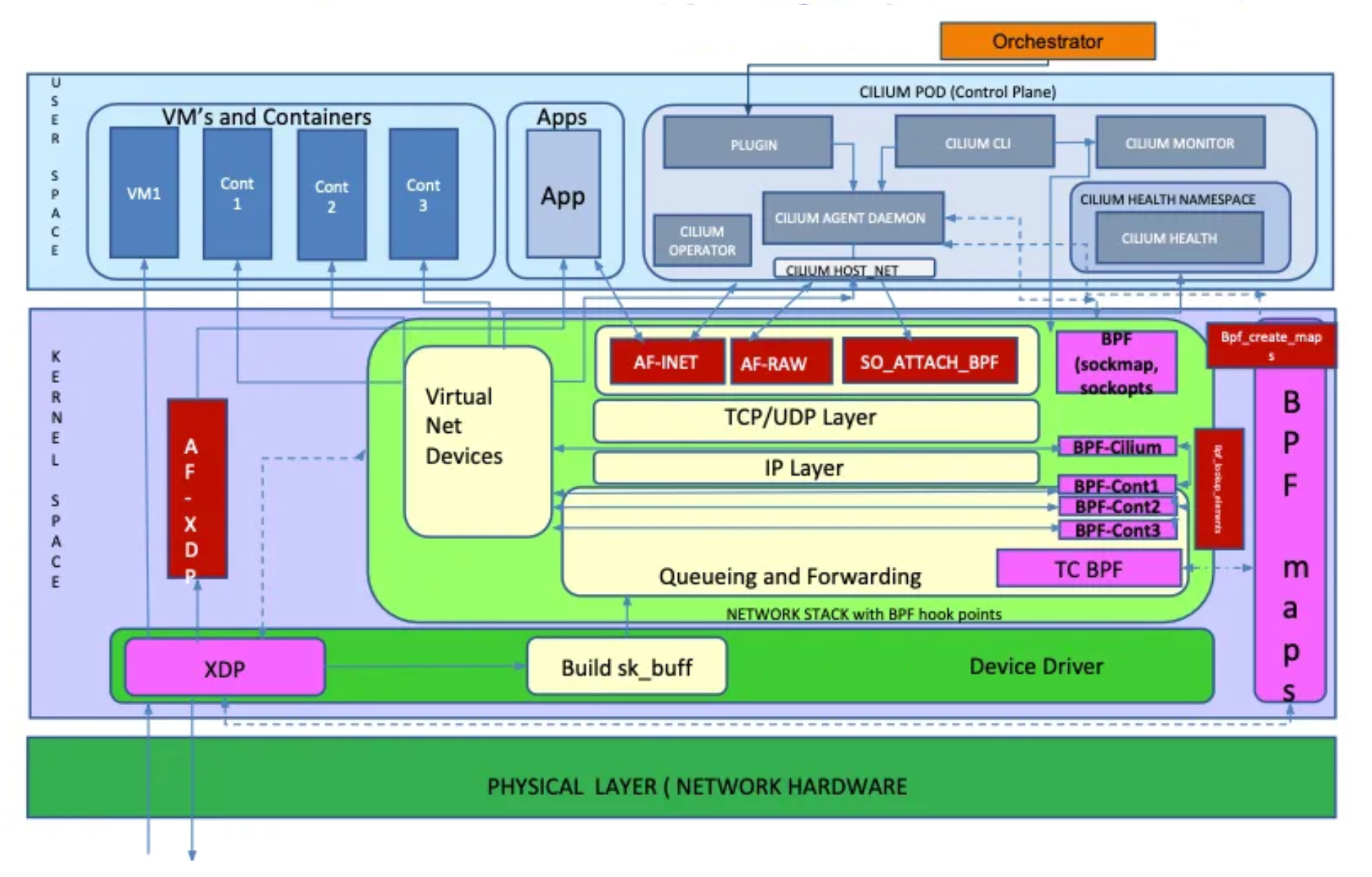

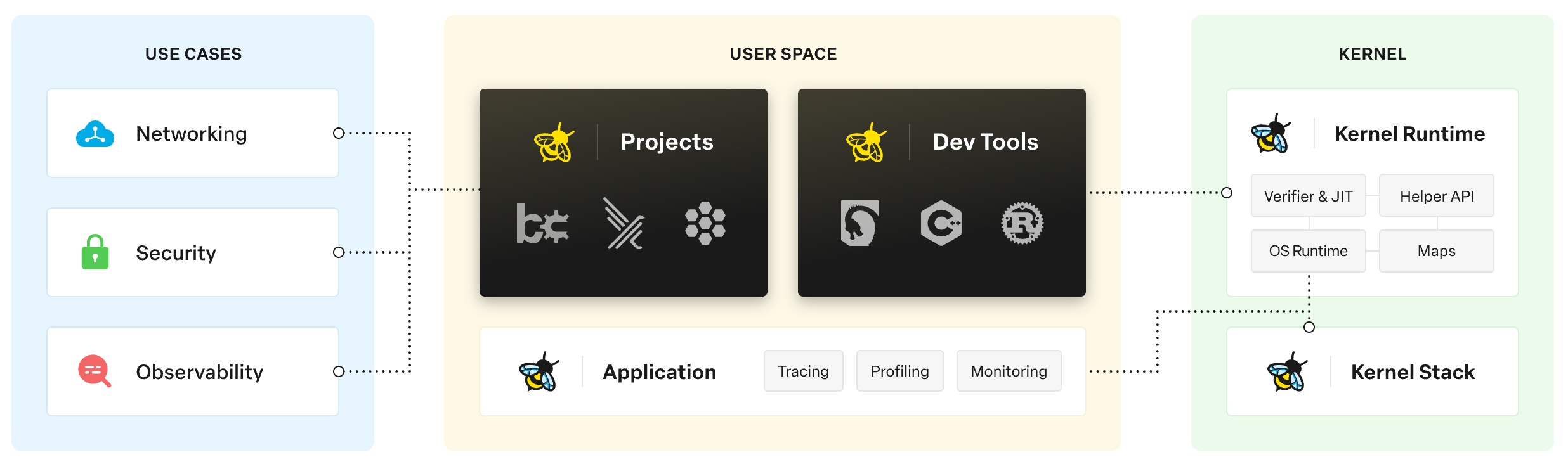

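Cilium architecture

Summary of how the Cilium architecture works

- A Cilium agent runs on each Kubernetes node and processes packets directly in kernel space through eBPF programs.

- Traffic monitoring, load balancing, and security policy enforcement are done in the kernel, so the network is controlled quickly and efficiently.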

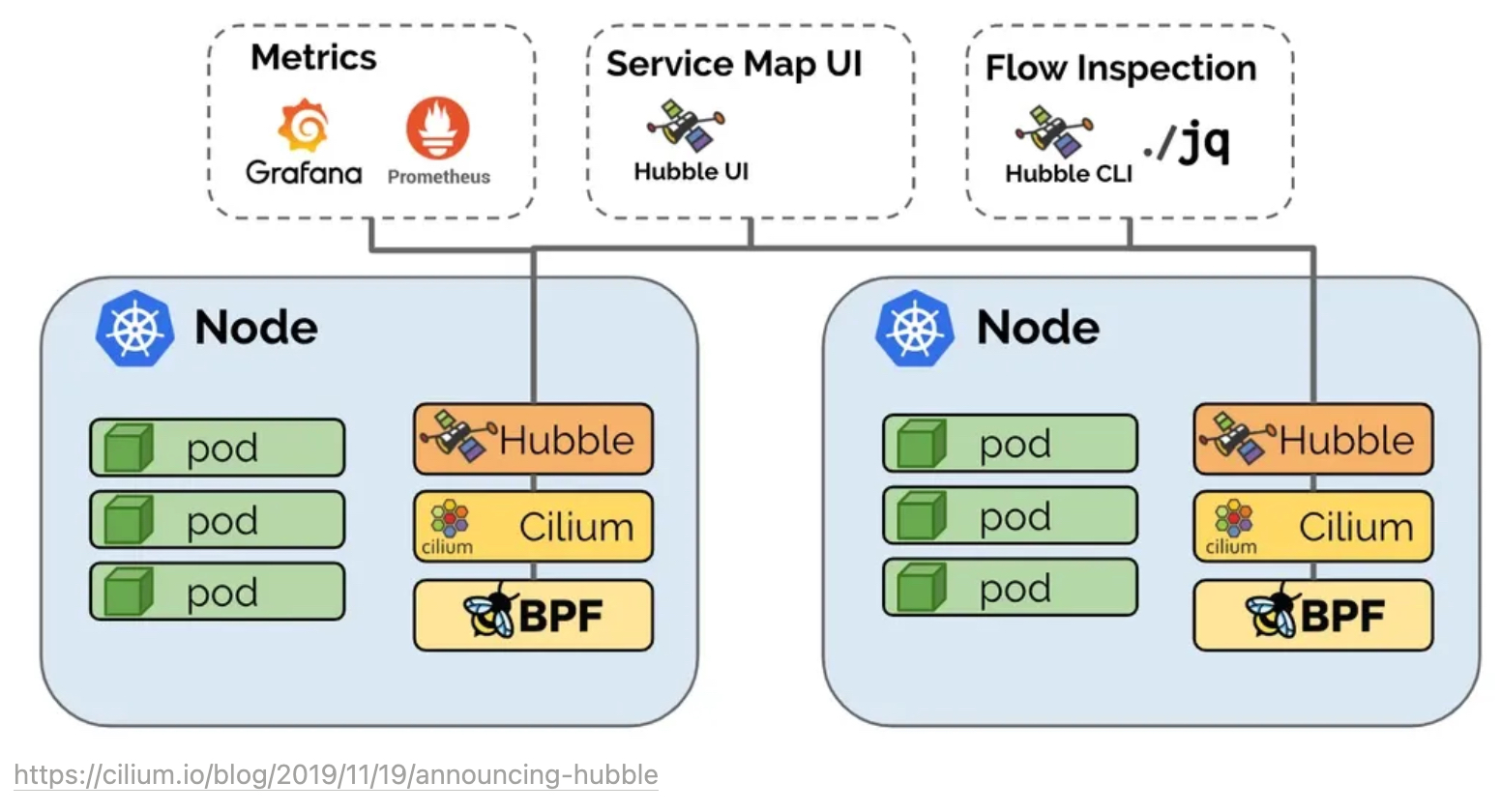

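- Monitoring tools such as Hubble can visualize network state and flows in real time.

Thanks to this structure, Cilium provides efficient and secure network communication even in large Kubernetes environments.

The Cilium architecture uses eBPF to provide fast and flexible networking and security services. Its main components are described below.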

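1. Cilium Agent

- Role: Runs on every Kubernetes node and interacts with eBPF to manage networking and security policy. On each node it monitors application network flows and creates and applies network policies.

- Key functions: Generates and installs the eBPF programs for network routing, service load balancing, traffic monitoring, and security policy.

- How it works: Communicates with the Kubernetes API server to pick up changes to network and security policy, so that dynamic connectivity policies between microservices are applied directly in the kernel through eBPF.

2. eBPF programs

- Role: The core technology of Cilium, letting it process each packet quickly in kernel space. eBPF programs inspect each packet and perform filtering, load balancing, network address translation (NAT), and so on.

- Characteristics: eBPF can process network packets without moving large amounts of data between user space and kernel space, which saves CPU and improves performance.

3. Cilium DaemonSet

- Cilium is deployed in the Kubernetes cluster as a DaemonSet. One Cilium agent runs on each node and controls that node's network interfaces through eBPF programs. Using a DaemonSet lets Kubernetes handle deployment and management of the agents.

4. Cilium Operator

- Role: Manages and orchestrates the Cilium agents and maintains state for network policies and endpoints.

- Additional functions: IP address allocation management, network connectivity between Cilium clusters, multi-cluster support, and so on.

5. Cilium CLI and UI

- CLI: Users can configure and manage Cilium's network and security policies from the command line. Policies can be applied with kubectl, which integrates with Kubernetes, and a dedicated cilium CLI tool is also provided.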

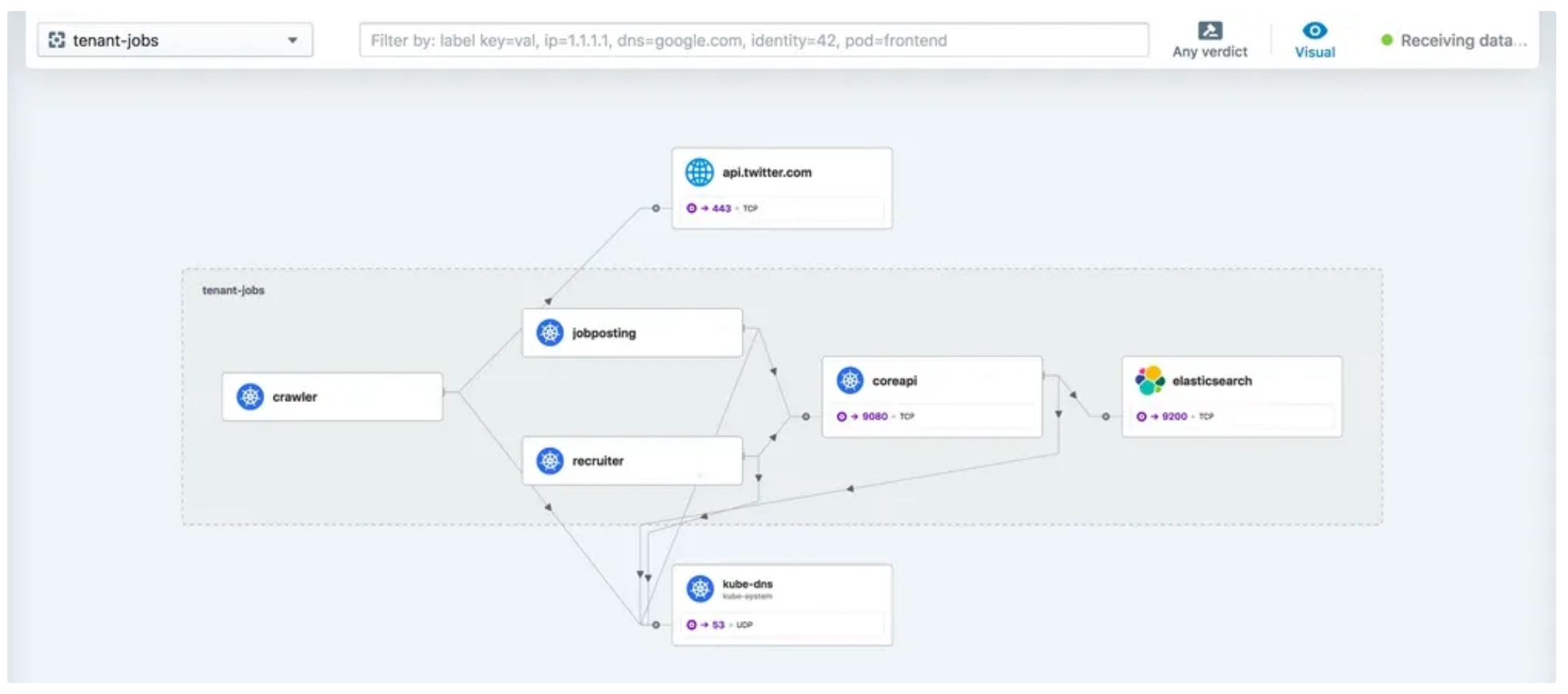

- Hubble: Hubble is the monitoring and tracing UI tool that ships with Cilium. It visualizes real-time network flows and security logs so that problems can be identified quickly.

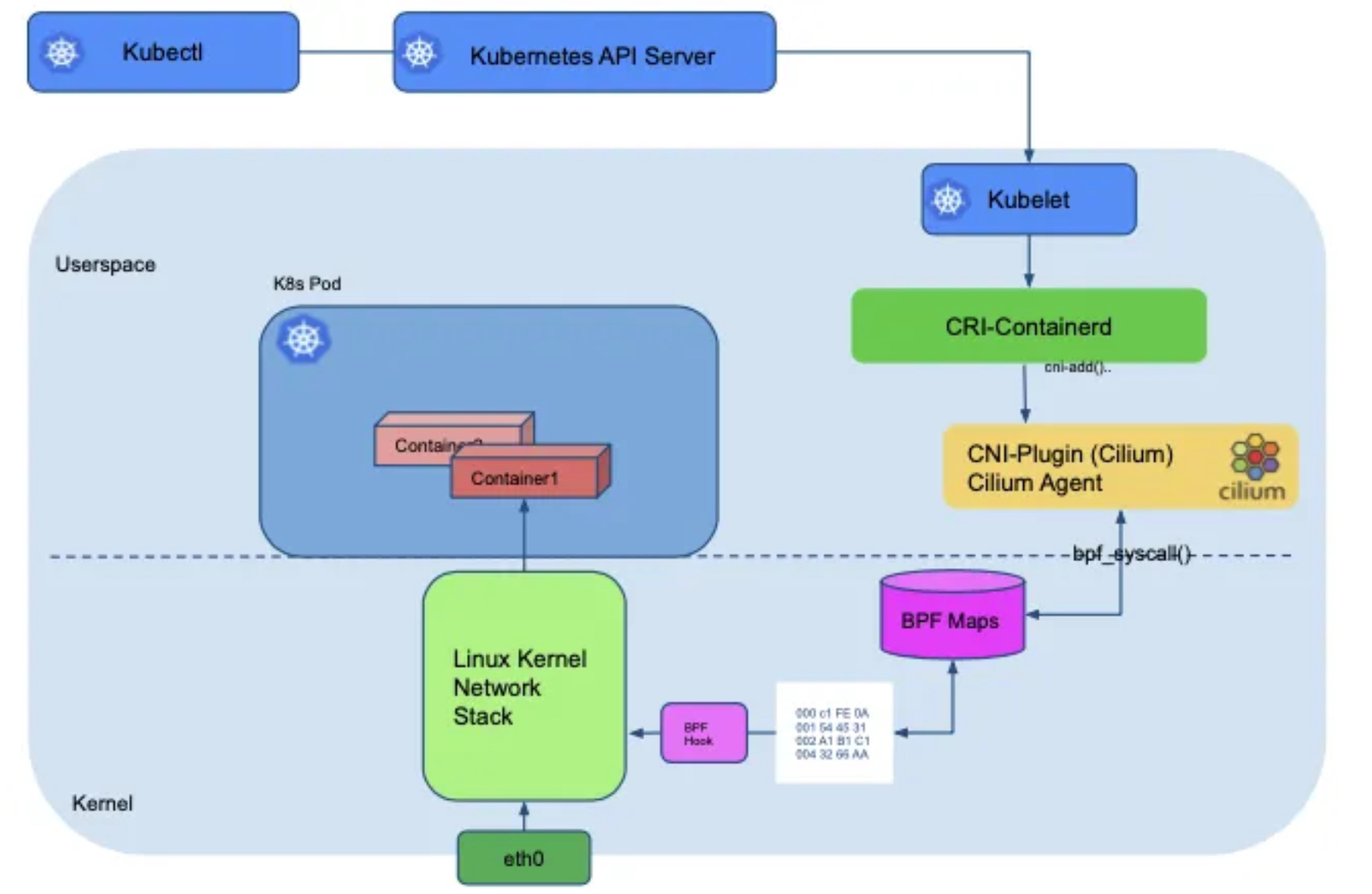

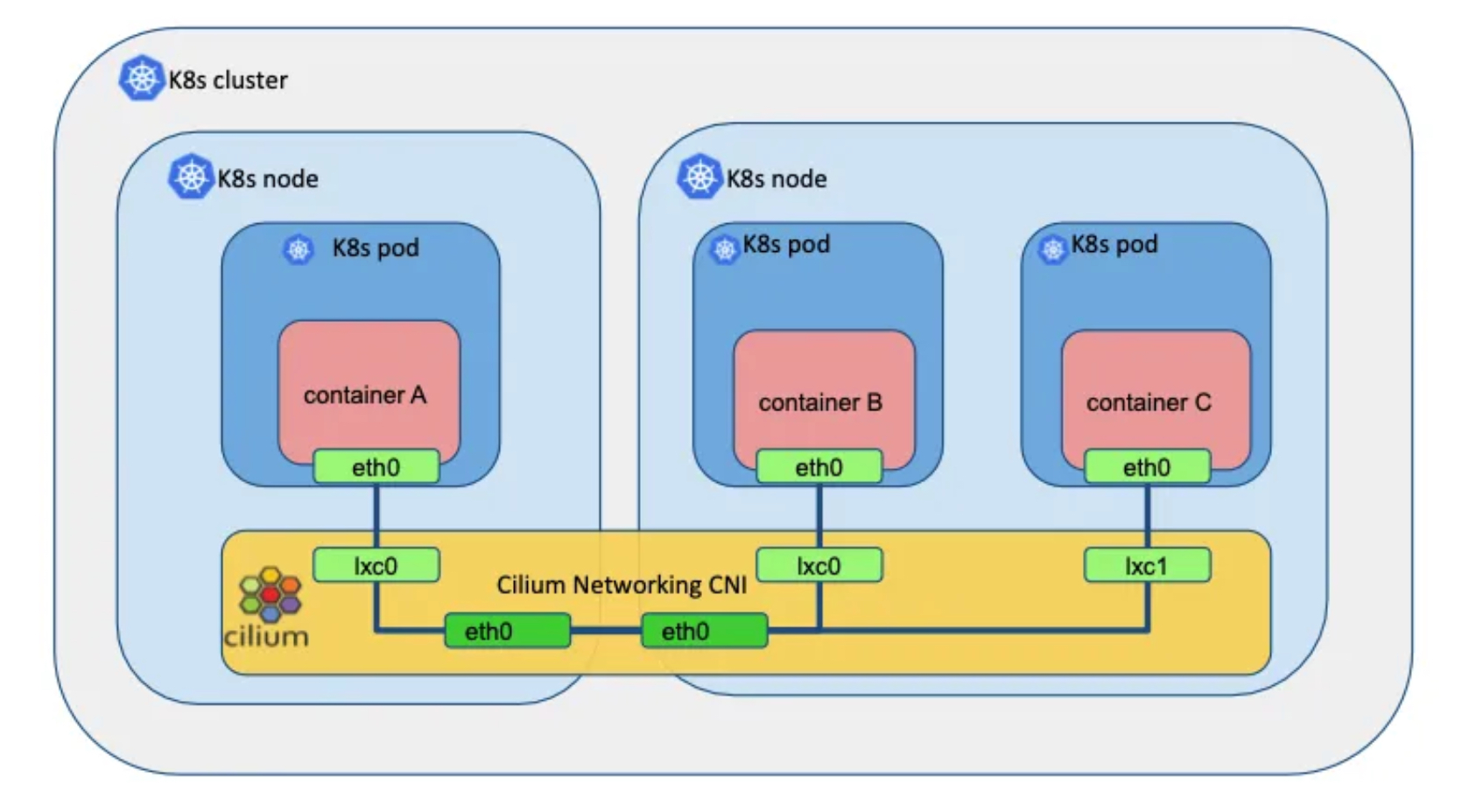

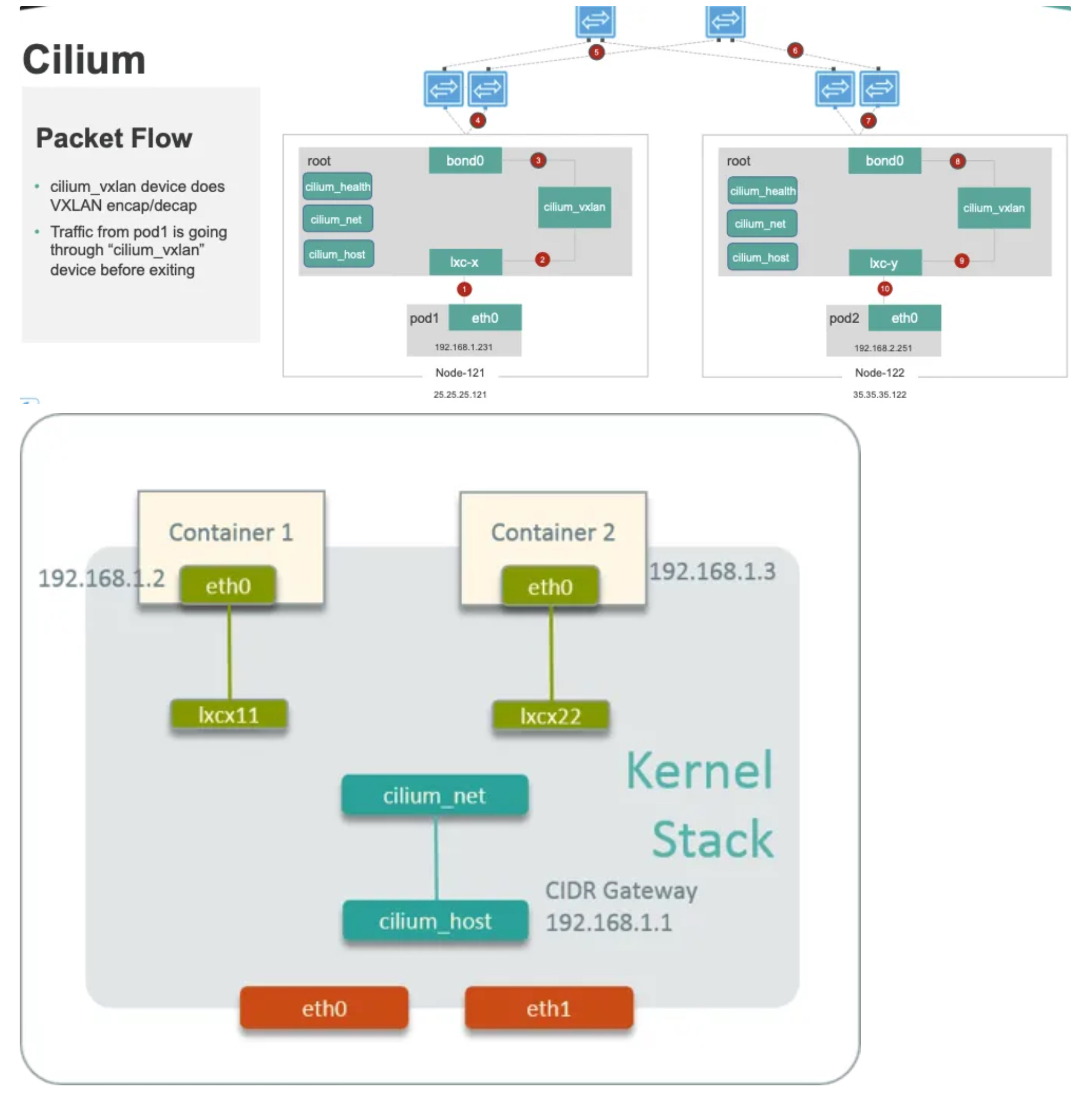

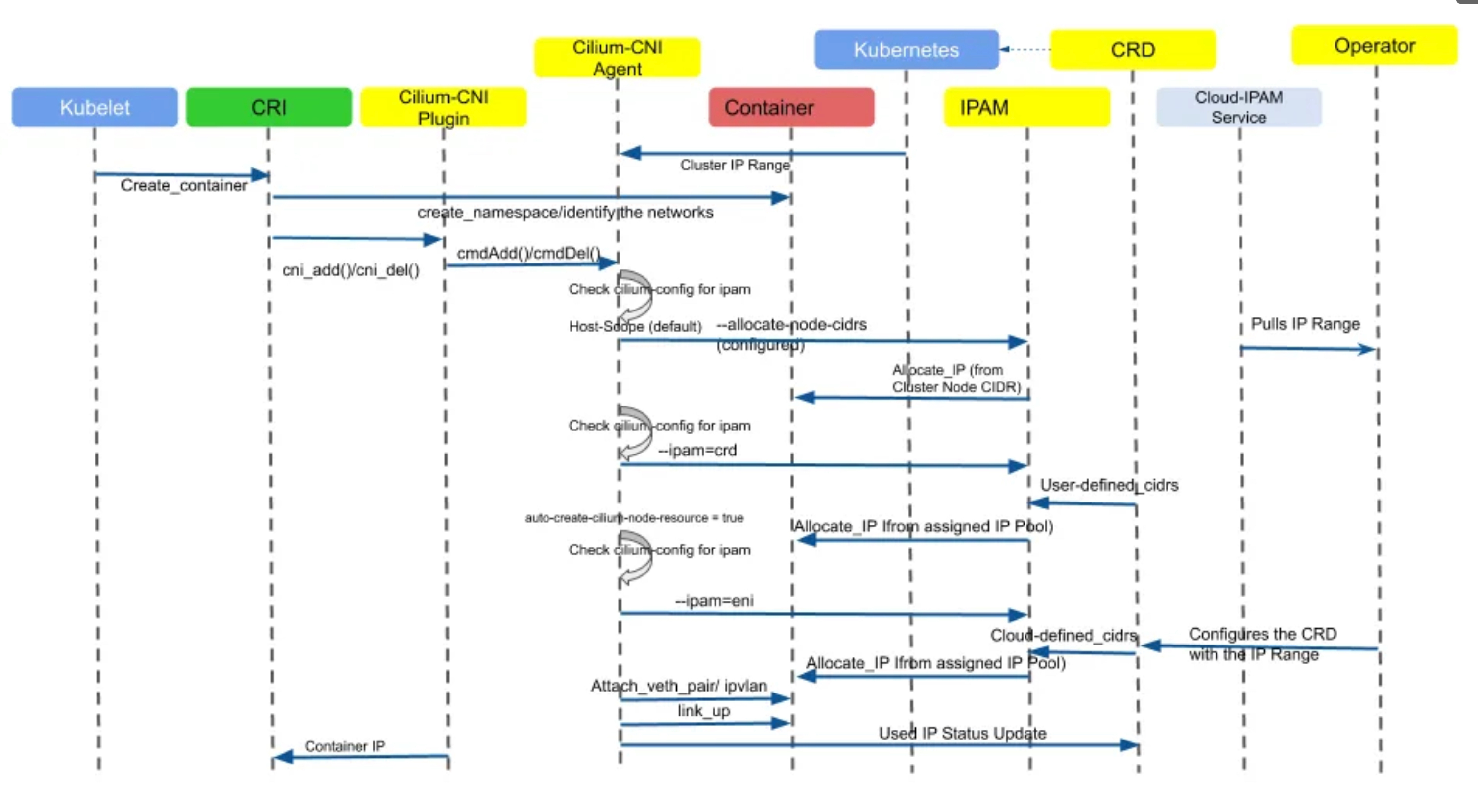

Cilium diagrams

- Cilium control flow

- Cilium Components with BPF hook points and BPF maps shown in Linux Stack

- Cilium as CNI Plugin

- Cilium Packet Flow

- Cilium Container Networking Control Flow

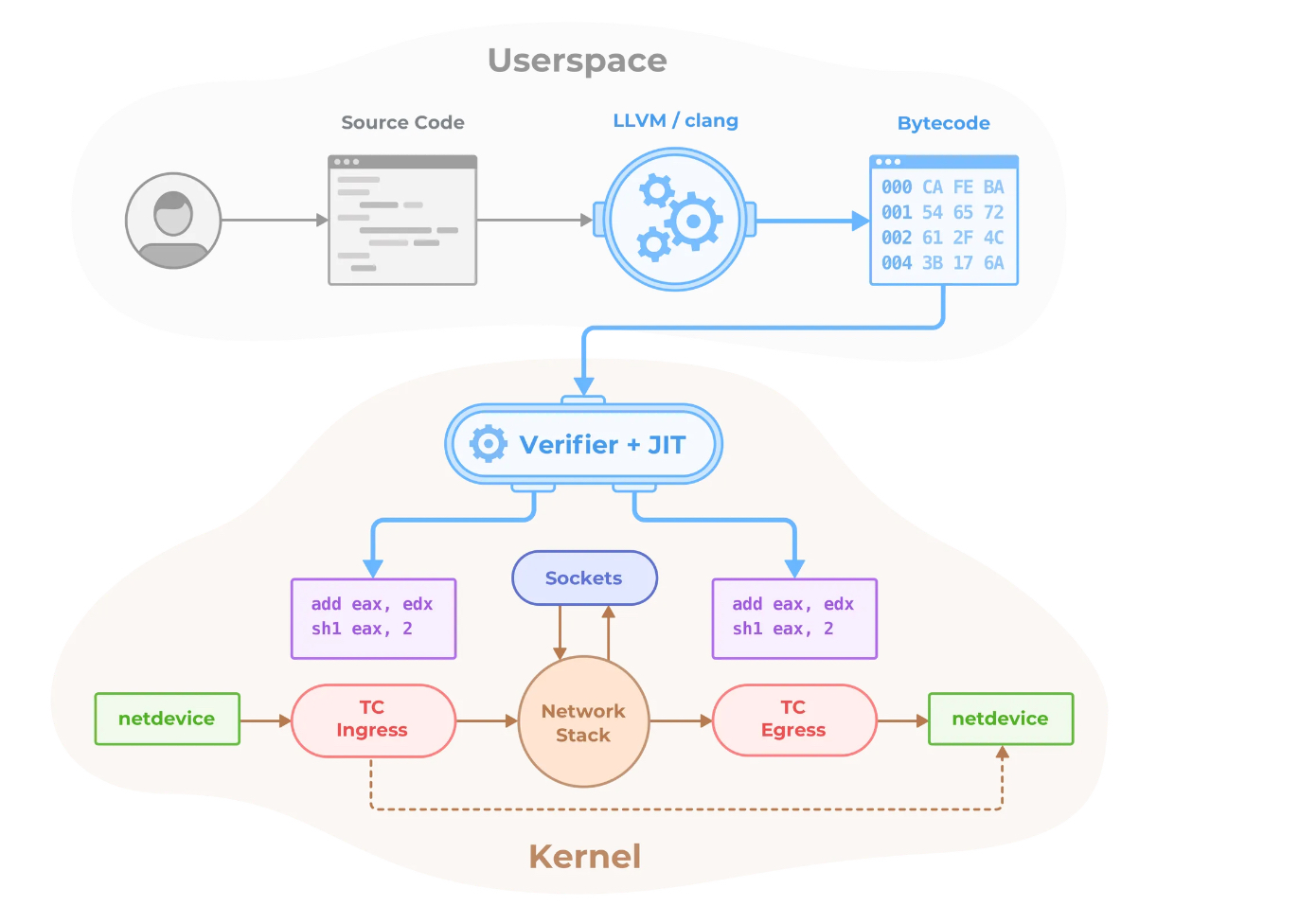

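3. Introduction to eBPF

eBPF (extended Berkeley Packet Filter) is a powerful technology that lets programs run safely and efficiently inside the Linux kernel. It was originally developed for network packet filtering, but it is now widely used for networking, security, tracing, and performance analysis.

Key characteristics of eBPF

- Runs in kernel space:

  - eBPF programs run in kernel space, so they avoid the overhead of handing data to a user-space program and are correspondingly fast. Programs can be loaded and run dynamically without recompiling the kernel.

- Safe execution environment:

  - eBPF programs are checked by the kernel's verifier before they run. This step rejects invalid code, infinite loops, and similar problems, which prevents kernel crashes.

- Wide range of use cases:

  - eBPF was created for network packet filtering, but today it is used in many areas such as security policy enforcement, performance monitoring, and system call tracing.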

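Main components of eBPF

- eBPF programs:

  - Small pieces of code that run when a specific event occurs (for example a packet arriving or a system call being made). Programs are usually written in C or another language with an eBPF backend and then compiled into eBPF bytecode that the kernel can execute.

- eBPF maps:

  - Key-value data structures that eBPF provides for storing data. They hold the data or state that eBPF programs work on, and they are useful for exchanging data between several programs or with user space.

- eBPF verifier:

  - Guarantees the safety and validity of eBPF programs inside the kernel. When a program is loaded, the verifier checks that it contains no infinite loops or harmful operations.

- Interaction with user space:

  - eBPF programs can interact with user-space programs. Programs are typically loaded into the kernel through the bpf() system call, and data is exchanged through maps or other resources.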

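Main capabilities of eBPF

- Network filtering and load balancing:

  - eBPF processes network packets directly in kernel space, so packet filtering, routing, and network address translation (NAT) are performed efficiently. This improves performance considerably.

  - Projects such as Cilium use eBPF to enforce network security policy in the kernel, which greatly improves Kubernetes network performance.

- Performance analysis and system monitoring:

  - eBPF can analyze system call traces, CPU usage, memory access, disk I/O, and more in real time to diagnose performance bottlenecks. Companies such as Facebook and Netflix use it in their performance monitoring tools for this reason.

- Security policy enforcement:

  - eBPF strengthens network security by controlling communication between specific applications. Enforcing security policy at the kernel level improves both performance and security.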

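Advantages and disadvantages of eBPF

- Advantages:

  - High performance and efficiency: data is processed directly in the kernel, so it uses few resources and runs fast.

  - Versatility: it can be used for many purposes, from network filtering to performance monitoring.

  - Dynamic updates: code can be loaded dynamically without recompiling a kernel module, which simplifies maintenance.

- Disadvantages:

  - Complex development: eBPF code runs inside the kernel, so development and debugging can be difficult.

  - Compatibility: it has to be kept up to date with recent kernel versions, and some eBPF features are not available on older kernels.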

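Examples of eBPF in use

- Cilium: uses eBPF to manage Kubernetes networking and security policy and to control communication between applications efficiently.

- bcc and bpftrace: system performance monitoring tools that collect performance data in real time to analyze bottlenecks.

- Falco: a security tool that uses eBPF to watch system calls and detect potential security threats in real time.

Because eBPF lets the Linux kernel perform a wide range of functions efficiently, it is especially useful for strengthening networking and security in cloud-native environments such as Kubernetes.

4. Installing Cilium & hands-on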

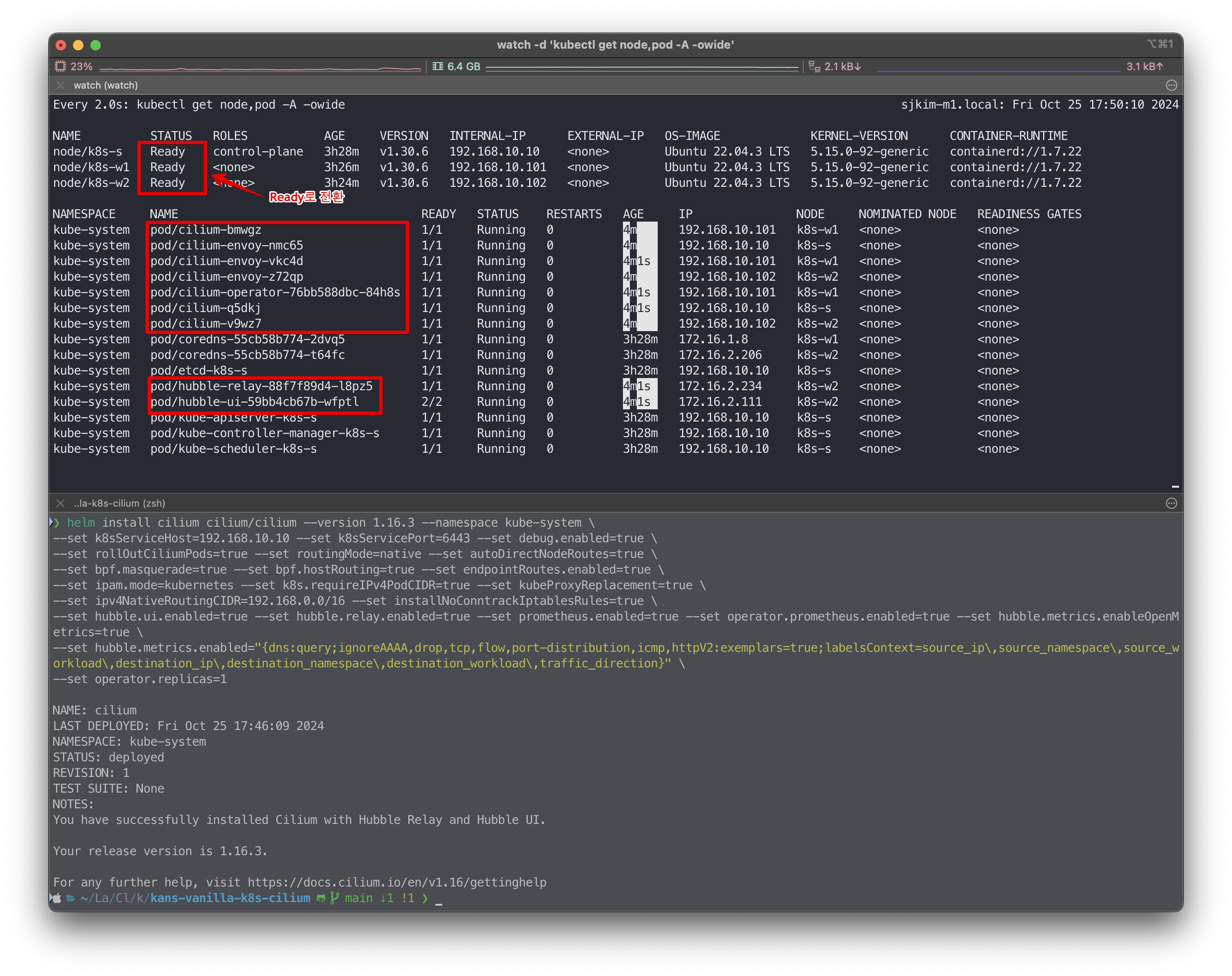

Deploying Cilium

- Cilium installation (with Helm) and verification

# Monitoring
watch -d kubectl get node,pod -A -owide

#
❯ helm repo add cilium https://helm.cilium.io/
"cilium" has been added to your repositories
❯ helm repo update
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "metrics-server" chart repository
...Successfully got an update from the "couchbase" chart repository
...Successfully got an update from the "ingress-nginx" chart repository
...Successfully got an update from the "eks" chart repository
...Successfully got an update from the "istio" chart repository
...Successfully got an update from the "cilium" chart repository
...Successfully got an update from the "argo" chart repository
...Successfully got an update from the "bitnami" chart repository
...Successfully got an update from the "geek-cookbook" chart repository
Update Complete. ⎈Happy Helming!⎈

#
helm install cilium cilium/cilium --version 1.16.3 --namespace kube-system \
--set k8sServiceHost=192.168.10.10 --set k8sServicePort=6443 --set debug.enabled=true \
--set rollOutCiliumPods=true --set routingMode=native --set autoDirectNodeRoutes=true \
--set bpf.masquerade=true --set bpf.hostRouting=true --set endpointRoutes.enabled=true \
--set ipam.mode=kubernetes --set k8s.requireIPv4PodCIDR=true --set kubeProxyReplacement=true \
--set ipv4NativeRoutingCIDR=192.168.0.0/16 --set installNoConntrackIptablesRules=true \
--set hubble.ui.enabled=true --set hubble.relay.enabled=true --set prometheus.enabled=true --set operator.prometheus.enabled=true --set hubble.metrics.enableOpenMetrics=true \
--set hubble.metrics.enabled="{dns:query;ignoreAAAA,drop,tcp,flow,port-distribution,icmp,httpV2:exemplars=true;labelsContext=source_ip\,source_namespace\,source_workload\,destination_ip\,destination_namespace\,destination_workload\,traffic_direction}" \
--set operator.replicas=1

## Key parameters
--set debug.enabled=true # set the log level of the cilium pods to debug
--set autoDirectNodeRoutes=true # nodes on the same subnet automatically get routes to each other's podCIDR
--set endpointRoutes.enabled=true # install a separate host route per endpoint (pod)
--set hubble.relay.enabled=true --set hubble.ui.enabled=true # enable Hubble
--set ipam.mode=kubernetes --set k8s.requireIPv4PodCIDR=true # use Kubernetes IPAM
--set kubeProxyReplacement=true # cover kube-proxy's job (as far as possible) without kube-proxy
--set ipv4NativeRoutingCIDR=192.168.0.0/16 # no IP masquerading for traffic to this range; usually set to the internal corporate network range
--set operator.replicas=1 # run a single cilium-operator pod
--set enableIPv4Masquerade=true --set bpf.masquerade=true # masquerade for pods, additionally handled in BPF >> bpf.masquerade=true can be applied on top of enableIPv4Masquerade=true
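The effect of ipv4NativeRoutingCIDR described above (destinations inside the range are not masqueraded) comes down to a CIDR membership test. As a rough illustration only, and not how Cilium implements it, the sketch below checks whether an IPv4 address falls inside a CIDR using shell arithmetic. The function names `ip_to_int` and `in_cidr` are made up for this example.

```shell
#!/bin/sh
# ip_to_int A.B.C.D
# Print the IPv4 address as a single unsigned 32-bit integer.
ip_to_int() {
    echo "$1" | {
        IFS=. read -r a b c d
        echo $(( ($a << 24) + ($b << 16) + ($c << 8) + $d ))
    }
}

# in_cidr IP NETWORK/PREFIXLEN
# Exit 0 when IP lies inside the given CIDR block, non-zero otherwise.
in_cidr() {
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")   # part before the slash
    bits="${2#*/}"               # prefix length after the slash
    # Build the netmask: the top $bits bits set, the rest cleared.
    mask=$(( (4294967295 << (32 - $bits)) & 4294967295 ))
    [ $(( $ip & $mask )) -eq $(( $net & $mask )) ]
}
```

With the values used in this post, `in_cidr 192.168.10.101 192.168.0.0/16` succeeds (a node address, inside the native routing range), while `in_cidr 172.16.1.8 192.168.0.0/16` fails.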

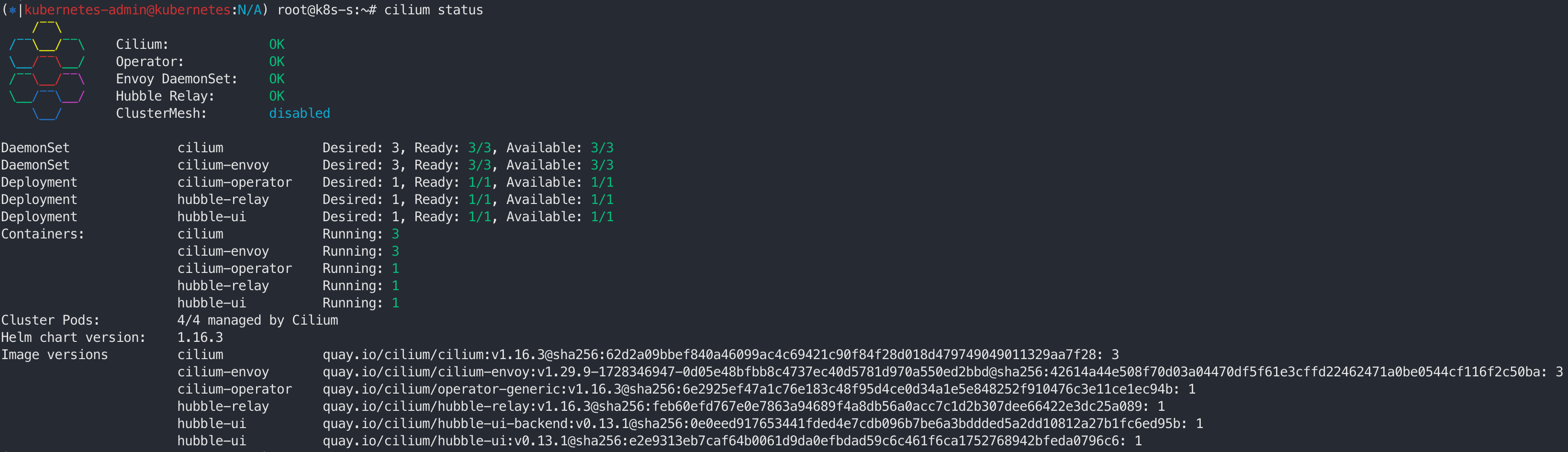

Verifying Cilium

- Cilium configuration and checks

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# ip -c addr
...
3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 00:0c:29:25:47:b4 brd ff:ff:ff:ff:ff:ff
    altname enp18s0
    altname ens224
    inet 192.168.10.10/24 brd 192.168.10.255 scope global eth1
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe25:47b4/64 scope link
       valid_lft forever preferred_lft forever
4: cilium_net@cilium_host: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 6a:e7:79:ee:51:07 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::68e7:79ff:feee:5107/64 scope link
       valid_lft forever preferred_lft forever
5: cilium_host@cilium_net: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether ee:04:f3:38:99:3e brd ff:ff:ff:ff:ff:ff
    inet 172.16.0.4/32 scope global cilium_host
       valid_lft forever preferred_lft forever
    inet6 fe80::ec04:f3ff:fe38:993e/64 scope link
       valid_lft forever preferred_lft forever
7: lxc_health@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 12:6e:5c:a8:7e:20 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::106e:5cff:fea8:7e20/64 scope link
       valid_lft forever preferred_lft forever

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# kc get node,pod,svc -A -owide
NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME
node/k8s-s Ready control-plane 26h v1.30.6 192.168.10.10 <none> Ubuntu 22.04.3 LTS 5.15.0-92-generic containerd://1.7.22
node/k8s-w1 Ready <none> 26h v1.30.6 192.168.10.101 <none> Ubuntu 22.04.3 LTS 5.15.0-92-generic containerd://1.7.22
node/k8s-w2 Ready <none> 26h v1.30.6 192.168.10.102 <none> Ubuntu 22.04.3 LTS 5.15.0-92-generic containerd://1.7.22

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
kube-system pod/cilium-bmwgz 1/1 Running 0 23h 192.168.10.101 k8s-w1 <none> <none>
kube-system pod/cilium-envoy-nmc65 1/1 Running 0 23h 192.168.10.10 k8s-s <none> <none>
kube-system pod/cilium-envoy-vkc4d 1/1 Running 0 23h 192.168.10.101 k8s-w1 <none> <none>
kube-system pod/cilium-envoy-z72qp 1/1 Running 0 23h 192.168.10.102 k8s-w2 <none> <none>
kube-system pod/cilium-operator-76bb588dbc-84h8s 1/1 Running 0 23h 192.168.10.101 k8s-w1 <none> <none>
kube-system pod/cilium-q5dkj 1/1 Running 0 23h 192.168.10.10 k8s-s <none> <none>
kube-system pod/cilium-v9wz7 1/1 Running 0 23h 192.168.10.102 k8s-w2 <none> <none>
kube-system pod/coredns-55cb58b774-2dvq5 1/1 Running 0 26h 172.16.1.8 k8s-w1 <none> <none>
kube-system pod/coredns-55cb58b774-t64fc 1/1 Running 0 26h 172.16.2.206 k8s-w2 <none> <none>
kube-system pod/etcd-k8s-s 1/1 Running 0 26h 192.168.10.10 k8s-s <none> <none>
kube-system pod/hubble-relay-88f7f89d4-l8pz5 1/1 Running 0 23h 172.16.2.234 k8s-w2 <none> <none>
kube-system pod/hubble-ui-59bb4cb67b-wfptl 2/2 Running 0 23h 172.16.2.111 k8s-w2 <none> <none>
kube-system pod/kube-apiserver-k8s-s 1/1 Running 0 26h 192.168.10.10 k8s-s <none> <none>
kube-system pod/kube-controller-manager-k8s-s 1/1 Running 0 26h 192.168.10.10 k8s-s <none> <none>
kube-system pod/kube-scheduler-k8s-s 1/1 Running 0 26h 192.168.10.10 k8s-s <none> <none>

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
default service/kubernetes ClusterIP 10.10.0.1 <none> 443/TCP 26h <none>
kube-system service/cilium-envoy ClusterIP None <none> 9964/TCP 23h k8s-app=cilium-envoy
kube-system service/hubble-metrics ClusterIP None <none> 9965/TCP 23h k8s-app=cilium
kube-system service/hubble-peer ClusterIP 10.10.44.26 <none> 443/TCP 23h k8s-app=cilium
kube-system service/hubble-relay ClusterIP 10.10.254.126 <none> 80/TCP 23h k8s-app=hubble-relay
kube-system service/hubble-ui ClusterIP 10.10.227.104 <none> 80/TCP 23h k8s-app=hubble-ui
kube-system service/kube-dns ClusterIP 10.10.0.10 <none> 53/UDP,53/TCP,9153/TCP 26h k8s-app=kube-dns

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# iptables -t nat -S
-P PREROUTING ACCEPT
-P INPUT ACCEPT
-P OUTPUT ACCEPT
-P POSTROUTING ACCEPT
-N CILIUM_OUTPUT_nat
-N CILIUM_POST_nat
-N CILIUM_PRE_nat
-N KUBE-KUBELET-CANARY
-A PREROUTING -m comment --comment "cilium-feeder: CILIUM_PRE_nat" -j CILIUM_PRE_nat
-A OUTPUT -m comment --comment "cilium-feeder: CILIUM_OUTPUT_nat" -j CILIUM_OUTPUT_nat
-A POSTROUTING -m comment --comment "cilium-feeder: CILIUM_POST_nat" -j CILIUM_POST_nat

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# iptables -t filter -S
-P INPUT ACCEPT
-P FORWARD ACCEPT
-P OUTPUT ACCEPT
-N CILIUM_FORWARD
-N CILIUM_INPUT
-N CILIUM_OUTPUT
-N KUBE-FIREWALL
-N KUBE-KUBELET-CANARY
-A INPUT -m comment --comment "cilium-feeder: CILIUM_INPUT" -j CILIUM_INPUT
-A INPUT -j KUBE-FIREWALL
-A FORWARD -m comment --comment "cilium-feeder: CILIUM_FORWARD" -j CILIUM_FORWARD
-A OUTPUT -m comment --comment "cilium-feeder: CILIUM_OUTPUT" -j CILIUM_OUTPUT
-A OUTPUT -j KUBE-FIREWALL
-A CILIUM_FORWARD -o cilium_host -m comment --comment "cilium: any->cluster on cilium_host forward accept" -j ACCEPT
-A CILIUM_FORWARD -i cilium_host -m comment --comment "cilium: cluster->any on cilium_host forward accept (nodeport)" -j ACCEPT
-A CILIUM_FORWARD -i lxc+ -m comment --comment "cilium: cluster->any on lxc+ forward accept" -j ACCEPT
-A CILIUM_FORWARD -i cilium_net -m comment --comment "cilium: cluster->any on cilium_net forward accept (nodeport)" -j ACCEPT
-A CILIUM_FORWARD -o lxc+ -m comment --comment "cilium: any->cluster on lxc+ forward accept" -j ACCEPT
-A CILIUM_FORWARD -i lxc+ -m comment --comment "cilium: cluster->any on lxc+ forward accept (nodeport)" -j ACCEPT
-A CILIUM_INPUT -m comment --comment "cilium: ACCEPT for proxy traffic" -j ACCEPT
-A CILIUM_OUTPUT -m comment --comment "cilium: ACCEPT for proxy traffic" -j ACCEPT
-A CILIUM_OUTPUT -m comment --comment "cilium: ACCEPT for l7 proxy upstream traffic" -j ACCEPT
-A CILIUM_OUTPUT -m comment --comment "cilium: host->any mark as from host" -j MARK --set-xmark 0xc00/0xf00
-A KUBE-FIREWALL ! -s 127.0.0.0/8 -d 127.0.0.0/8 -m comment --comment "block incoming localnet connections" -m conntrack ! --ctstate RELATED,ESTABLISHED,DNAT -j DROP

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# iptables -t raw -S
-P PREROUTING ACCEPT
-P OUTPUT ACCEPT
-N CILIUM_OUTPUT_raw
-N CILIUM_PRE_raw
-A PREROUTING -m comment --comment "cilium-feeder: CILIUM_PRE_raw" -j CILIUM_PRE_raw
-A OUTPUT -m comment --comment "cilium-feeder: CILIUM_OUTPUT_raw" -j CILIUM_OUTPUT_raw
-A CILIUM_OUTPUT_raw -d 192.168.0.0/16 -m comment --comment "cilium: NOTRACK for pod traffic" -j CT --notrack
-A CILIUM_OUTPUT_raw -s 192.168.0.0/16 -m comment --comment "cilium: NOTRACK for pod traffic" -j CT --notrack
-A CILIUM_OUTPUT_raw -o lxc+ -m comment --comment "cilium: NOTRACK for proxy return traffic" -j CT --notrack
-A CILIUM_OUTPUT_raw -o cilium_host -m comment --comment "cilium: NOTRACK for proxy return traffic" -j CT --notrack
-A CILIUM_OUTPUT_raw -o lxc+ -m comment --comment "cilium: NOTRACK for L7 proxy upstream traffic" -j CT --notrack
-A CILIUM_OUTPUT_raw -o cilium_host -m comment --comment "cilium: NOTRACK for L7 proxy upstream traffic" -j CT --notrack
-A CILIUM_PRE_raw -d 192.168.0.0/16 -m comment --comment "cilium: NOTRACK for pod traffic" -j CT --notrack
-A CILIUM_PRE_raw -s 192.168.0.0/16 -m comment --comment "cilium: NOTRACK for pod traffic" -j CT --notrack
-A CILIUM_PRE_raw -m comment --comment "cilium: NOTRACK for proxy traffic" -j CT --notrack

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# iptables -t mangle -S
-P PREROUTING ACCEPT
-P INPUT ACCEPT
-P FORWARD ACCEPT
-P OUTPUT ACCEPT
-P POSTROUTING ACCEPT
-N CILIUM_POST_mangle
-N CILIUM_PRE_mangle
-N KUBE-IPTABLES-HINT
-N KUBE-KUBELET-CANARY
-A PREROUTING -m comment --comment "cilium-feeder: CILIUM_PRE_mangle" -j CILIUM_PRE_mangle
-A POSTROUTING -m comment --comment "cilium-feeder: CILIUM_POST_mangle" -j CILIUM_POST_mangle
-A CILIUM_PRE_mangle ! -o lo -m socket --transparent -m comment --comment "cilium: any->pod redirect proxied traffic to host proxy" -j MARK --set-xmark 0x200/0xffffffff
-A CILIUM_PRE_mangle -p tcp -m comment --comment "cilium: TPROXY to host cilium-dns-egress proxy" -j TPROXY --on-port 38613 --on-ip 127.0.0.1 --tproxy-mark 0x200/0xffffffff
-A CILIUM_PRE_mangle -p udp -m comment --comment "cilium: TPROXY to host cilium-dns-egress proxy" -j TPROXY --on-port 38613 --on-ip 127.0.0.1 --tproxy-mark 0x200/0xffffffff

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# conntrack -L
tcp 6 73 TIME_WAIT src=127.0.0.1 dst=127.0.0.1 sport=39522 dport=9878 src=127.0.0.1 dst=127.0.0.1 sport=9878 dport=39522 [ASSURED] mark=0 use=1
...
tcp 6 431996 ESTABLISHED src=127.0.0.1 dst=127.0.0.1 sport=37734 dport=2379 src=127.0.0.1 dst=127.0.0.1 sport=2379 dport=37734 [ASSURED] mark=0 use=1
conntrack v1.4.6 (conntrack-tools): 121 flow entries have been shown.

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# kubectl get crd
NAME CREATED AT
ciliumcidrgroups.cilium.io 2024-10-25T08:47:38Z
ciliumclusterwidenetworkpolicies.cilium.io 2024-10-25T08:47:40Z
ciliumendpoints.cilium.io 2024-10-25T08:47:38Z
ciliumexternalworkloads.cilium.io 2024-10-25T08:47:38Z
ciliumidentities.cilium.io 2024-10-25T08:47:39Z
ciliuml2announcementpolicies.cilium.io 2024-10-25T08:47:39Z
ciliumloadbalancerippools.cilium.io 2024-10-25T08:47:39Z
ciliumnetworkpolicies.cilium.io 2024-10-25T08:47:39Z
ciliumnodeconfigs.cilium.io 2024-10-25T08:47:38Z
ciliumnodes.cilium.io 2024-10-25T08:47:38Z
ciliumpodippools.cilium.io 2024-10-25T08:47:38Z

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# kubectl get ciliumnodes # check the IP of the cilium_host interface: CILIUMINTERNALIP
NAME CILIUMINTERNALIP INTERNALIP AGE
k8s-s 172.16.0.4 192.168.10.10 23h
k8s-w1 172.16.1.85 192.168.10.101 23h
k8s-w2 172.16.2.76 192.168.10.102 23h

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# kubectl get ciliumendpoints -A
NAMESPACE NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6
kube-system coredns-55cb58b774-2dvq5 28955 ready 172.16.1.8
kube-system coredns-55cb58b774-t64fc 28955 ready 172.16.2.206
kube-system hubble-relay-88f7f89d4-l8pz5 36655 ready 172.16.2.234
kube-system hubble-ui-59bb4cb67b-wfptl 770 ready 172.16.2.111

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# kubectl get cm -n kube-system cilium-config -o json | jq
{
  "apiVersion": "v1",
  "data": {
    "agent-not-ready-taint-key": "node.cilium.io/agent-not-ready",
    "arping-refresh-period": "30s",
    "auto-direct-node-routes": "true",
    "bpf-events-drop-enabled": "true",
    "bpf-events-policy-verdict-enabled": "true",
    "bpf-events-trace-enabled": "true",
    "bpf-lb-acceleration": "disabled",
    "bpf-lb-external-clusterip": "false",
    "bpf-lb-map-max": "65536",
    "bpf-lb-sock": "false",
    "bpf-lb-sock-terminate-pod-connections": "false",
    "bpf-map-dynamic-size-ratio": "0.0025",
    "bpf-policy-map-max": "16384",
    "bpf-root": "/sys/fs/bpf",
    "cgroup-root": "/run/cilium/cgroupv2",
    "cilium-endpoint-gc-interval": "5m0s",
    "cluster-id": "0",
    "cluster-name": "default",
    "clustermesh-enable-endpoint-sync": "false",
    "clustermesh-enable-mcs-api": "false",
    "cni-exclusive": "true",
    "cni-log-file": "/var/run/cilium/cilium-cni.log",
    "controller-group-metrics": "write-cni-file sync-host-ips sync-lb-maps-with-k8s-services",
    "custom-cni-conf": "false",
    "datapath-mode": "veth",
    "debug": "true",
    "debug-verbose": "",
    "direct-routing-skip-unreachable": "false",
    "dnsproxy-enable-transparent-mode": "true",
    "dnsproxy-socket-linger-timeout": "10",
    "egress-gateway-reconciliation-trigger-interval": "1s",
    "enable-auto-protect-node-port-range": "true",
    "enable-bpf-clock-probe": "false",
    "enable-bpf-masquerade": "true",
    "enable-endpoint-health-checking": "true",
    "enable-endpoint-routes": "true",
    "enable-health-check-loadbalancer-ip": "false",
    "enable-health-check-nodeport": "true",
    "enable-health-checking": "true",
    "enable-hubble": "true",
    "enable-hubble-open-metrics": "true",
    "enable-ipv4": "true",
    "enable-ipv4-big-tcp": "false",
    "enable-ipv4-masquerade": "true",
    "enable-ipv6": "false",
    "enable-ipv6-big-tcp": "false",
    "enable-ipv6-masquerade": "true",
    "enable-k8s-networkpolicy": "true",
    "enable-k8s-terminating-endpoint": "true",
    "enable-l2-neigh-discovery": "true",
    "enable-l7-proxy": "true",
    "enable-local-redirect-policy": "false",
    "enable-masquerade-to-route-source": "false",
    "enable-metrics": "true",
    "enable-node-selector-labels": "false",
    "enable-policy": "default",
    "enable-runtime-device-detection": "true",
    "enable-sctp": "false",
    "enable-svc-source-range-check": "true",
    "enable-tcx": "true",
    "enable-vtep": "false",
    "enable-well-known-identities": "false",
    "enable-xt-socket-fallback": "true",
    "envoy-base-id": "0",
    "envoy-keep-cap-netbindservice": "false",
    "external-envoy-proxy": "true",
    "hubble-disable-tls": "false",
    "hubble-export-file-max-backups": "5",
    "hubble-export-file-max-size-mb": "10",
    "hubble-listen-address": ":4244",
    "hubble-metrics": "dns:query;ignoreAAAA drop tcp flow port-distribution icmp httpV2:exemplars=true;labelsContext=source_ip,source_namespace,source_workload,destination_ip,destination_namespace,destination_workload,traffic_direction",
    "hubble-metrics-server": ":9965",
    "hubble-metrics-server-enable-tls": "false",
    "hubble-socket-path": "/var/run/cilium/hubble.sock",
    "hubble-tls-cert-file": "/var/lib/cilium/tls/hubble/server.crt",
    "hubble-tls-client-ca-files": "/var/lib/cilium/tls/hubble/client-ca.crt",
    "hubble-tls-key-file": "/var/lib/cilium/tls/hubble/server.key",
    "identity-allocation-mode": "crd",
    "identity-gc-interval": "15m0s",
    "identity-heartbeat-timeout": "30m0s",
    "install-no-conntrack-iptables-rules": "true",
    "ipam": "kubernetes",
    "ipam-cilium-node-update-rate": "15s",
    "ipv4-native-routing-cidr": "192.168.0.0/16",
    "k8s-client-burst": "20",
    "k8s-client-qps": "10",
    "k8s-require-ipv4-pod-cidr": "true",
    "k8s-require-ipv6-pod-cidr": "false",
    "kube-proxy-replacement": "true",
    "kube-proxy-replacement-healthz-bind-address": "",
    "max-connected-clusters": "255",
    "mesh-auth-enabled": "true",
    "mesh-auth-gc-interval": "5m0s",
    "mesh-auth-queue-size": "1024",
    "mesh-auth-rotated-identities-queue-size": "1024",
    "monitor-aggregation": "medium",
    "monitor-aggregation-flags": "all",
    "monitor-aggregation-interval": "5s",
    "nat-map-stats-entries": "32",
    "nat-map-stats-interval": "30s",
    "node-port-bind-protection": "true",
    "nodeport-addresses": "",
    "nodes-gc-interval": "5m0s",
    "operator-api-serve-addr": "127.0.0.1:9234",
    "operator-prometheus-serve-addr": ":9963",
    "policy-cidr-match-mode": "",
    "preallocate-bpf-maps": "false",
    "procfs": "/host/proc",
    "prometheus-serve-addr": ":9962",
    "proxy-connect-timeout": "2",
    "proxy-idle-timeout-seconds": "60",
    "proxy-max-connection-duration-seconds": "0",
    "proxy-max-requests-per-connection": "0",
    "proxy-xff-num-trusted-hops-egress": "0",
    "proxy-xff-num-trusted-hops-ingress": "0",
    "remove-cilium-node-taints": "true",
    "routing-mode": "native",
    "service-no-backend-response": "reject",
    "set-cilium-is-up-condition": "true",
    "set-cilium-node-taints": "true",
    "synchronize-k8s-nodes": "true",
    "tofqdns-dns-reject-response-code": "refused",
    "tofqdns-enable-dns-compression": "true",
    "tofqdns-endpoint-max-ip-per-hostname": "50",
    "tofqdns-idle-connection-grace-period": "0s",
    "tofqdns-max-deferred-connection-deletes": "10000",
    "tofqdns-proxy-response-max-delay": "100ms",
    "unmanaged-pod-watcher-interval": "15",
    "vtep-cidr": "",
    "vtep-endpoint": "",
    "vtep-mac": "",
    "vtep-mask": "",
    "write-cni-conf-when-ready": "/host/etc/cni/net.d/05-cilium.conflist"
  },
  "kind": "ConfigMap",
  "metadata": {
    "annotations": {
      "meta.helm.sh/release-name": "cilium",
      "meta.helm.sh/release-namespace": "kube-system"
    },
    "creationTimestamp": "2024-10-25T08:46:10Z",
    "labels": {
      "app.kubernetes.io/managed-by": "Helm"
    },
    "name": "cilium-config",
    "namespace": "kube-system",
    "resourceVersion": "18860",
    "uid": "a01f7488-44f7-42ea-83f2-e120cf34b6df"
  }
}

kubetail -n kube-system -l k8s-app=cilium --since 1h
kubetail -n kube-system -l k8s-app=cilium-envoy --since 1h

# Check for NICs with native XDP support: https://docs.cilium.io/en/stable/bpf/progtypes/#xdp-drivers
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# ethtool -i eth1
driver: e1000e
version: 5.15.0-92-generic
firmware-version: 1.8-0
expansion-rom-version:
bus-info: 0000:12:00.0
supports-statistics: yes
supports-test: yes
supports-eeprom-access: yes
supports-register-dump: yes
supports-priv-flags: yes

# https://docs.cilium.io/en/stable/operations/performance/tuning/#bypass-iptables-connection-tracking
watch -d kubectl get pod -A # monitoring

❯ helm list -A
NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION
cilium kube-system 1 2024-10-25 17:46:09.898734 +0900 KST deployed cilium-1.16.3 1.16.3

❯ helm upgrade cilium cilium/cilium --namespace kube-system --reuse-values --set installNoConntrackIptablesRules=true
Release "cilium" has been upgraded. Happy Helming!
NAME: cilium
LAST DEPLOYED: Sat Oct 26 17:26:50 2024
NAMESPACE: kube-system
STATUS: deployed
REVISION: 2
TEST SUITE: None
NOTES:
You have successfully installed Cilium with Hubble Relay and Hubble UI.
Your release version is 1.16.3.
For any further help, visit https://docs.cilium.io/en/v1.16/gettinghelp

# Check: confirm the rules below are present in the raw table
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# iptables -t raw -S | grep notrack
-A CILIUM_OUTPUT_raw -d 192.168.0.0/16 -m comment --comment "cilium: NOTRACK for pod traffic" -j CT --notrack
-A CILIUM_OUTPUT_raw -s 192.168.0.0/16 -m comment --comment "cilium: NOTRACK for pod traffic" -j CT --notrack
-A CILIUM_OUTPUT_raw -o lxc+ -m comment --comment "cilium: NOTRACK for proxy return traffic" -j CT --notrack
-A CILIUM_OUTPUT_raw -o cilium_host -m comment --comment "cilium: NOTRACK for proxy return traffic" -j CT --notrack
-A CILIUM_OUTPUT_raw -o lxc+ -m comment --comment "cilium: NOTRACK for L7 proxy upstream traffic" -j CT --notrack
-A CILIUM_OUTPUT_raw -o cilium_host -m comment --comment "cilium: NOTRACK for L7 proxy upstream traffic" -j CT --notrack
-A CILIUM_PRE_raw -d 192.168.0.0/16 -m comment --comment "cilium: NOTRACK for pod traffic" -j CT --notrack
-A CILIUM_PRE_raw -s 192.168.0.0/16 -m comment --comment "cilium: NOTRACK for pod traffic" -j CT --notrack
-A CILIUM_PRE_raw -m comment --comment "cilium: NOTRACK for proxy traffic" -j CT --notrack

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# conntrack -F
conntrack v1.4.6 (conntrack-tools): connection tracking table has been emptied.

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# conntrack -L
tcp 6 431999 ESTABLISHED src=127.0.0.1 dst=127.0.0.1 sport=37588 dport=2379 src=127.0.0.1 dst=127.0.0.1 sport=2379 dport=37588 [ASSURED] mark=0 use=1
tcp 6 431999 ESTABLISHED src=127.0.0.1 dst=127.0.0.1 sport=2379 dport=37894 ...
tcp 6 431995 ESTABLISHED src=127.0.0.1 dst=127.0.0.1 sport=2379 dport=37682 src=127.0.0.1 dst=127.0.0.1 sport=37682 dport=2379 [ASSURED] mark=0 use=1
conntrack v1.4.6 (conntrack-tools): 75 flow entries have been shown.

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# conntrack -L |grep -v 2379
tcp 6 75 TIME_WAIT src=127.0.0.1 dst=127.0.0.1 sport=37304 dport=9879 src=127.0.0.1 dst=127.0.0.1 sport=9879 dport=37304 [ASSURED] mark=0 use=1
conntrack v1.4.6 (conntrack-tools): 99 flow entries have been shown.
tcp 6 1 CLOSE src=127.0.0.1 dst=127.0.0.1 sport=53872 dport=10257 src=127.0.0.1 dst=127.0.0.1 sport=10257 dport=53872 [ASSURED] mark=0 use=1
tcp 6 44 TIME_WAIT src=127.0.0.1 dst=127.0.0.1 sport=57902 dport=9878 src=127.0.0.1 dst=127.0.0.1 sport=9878 dport=57902 [ASSURED] mark=0 use=1
...
tcp 6 114 TIME_WAIT src=127.0.0.1 dst=127.0.0.1 sport=47908 dport=2381 src=127.0.0.1 dst=127.0.0.1 sport=2381 dport=47908 [ASSURED] mark=0 use=1

Installing the Cilium CLI: it is used to inspect the state of a Cilium installation and to enable/disable various features (e.g. clustermesh, Hubble).

# Install the Cilium CLI
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# CILIUM_CLI_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/cilium-cli/main/stable.txt)
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# CLI_ARCH=amd64
if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# echo $CLI_ARCH
arm64
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# curl -L --fail --remote-name-all https://github.com/cilium/cilium-cli/releases/download/${CILIUM_CLI_VERSION}/cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:-- --:--:-- --:--:--     0
100 44.4M  100 44.4M    0     0  8642k      0  0:00:05  0:00:05 --:--:-- 10.2M
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:-- --:--:-- --:--:--     0
100    92  100    92    0     0    132      0 --:--:-- --:--:-- --:--:--     0
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# sha256sum --check cilium-linux-${CLI_ARCH}.tar.gz.sha256sum
cilium-linux-arm64.tar.gz: OK
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# sudo tar xzvfC cilium-linux-${CLI_ARCH}.tar.gz /usr/local/bin
cilium
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# rm cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}

# Verify
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# cilium status --wait
    /¯¯\
 /¯¯\__/¯¯\    Cilium:             OK
 \__/¯¯\__/    Operator:           OK
 /¯¯\__/¯¯\    Envoy DaemonSet:    OK
 \__/¯¯\__/    Hubble Relay:       OK
    \__/       ClusterMesh:        disabled

DaemonSet   cilium           Desired: 3, Ready: 3/3, Available: 3/3
DaemonSet   cilium-envoy     Desired: 3, Ready: 3/3, Available: 3/3
Deployment  cilium-operator  Desired: 1, Ready: 1/1, Available: 1/1
Deployment  hubble-relay     Desired: 1, Ready: 1/1, Available: 1/1
Deployment  hubble-ui        Desired: 1, Ready: 1/1, Available: 1/1
Containers: cilium           Running: 3
            cilium-envoy     Running: 3
            cilium-operator  Running: 1
            hubble-relay     Running: 1
            hubble-ui        Running: 1
Cluster Pods: 4/4 managed by Cilium
Helm chart version: 1.16.3
Image versions
  cilium           quay.io/cilium/cilium:v1.16.3@sha256:62d2a09bbef840a46099ac4c69421c90f84f28d018d479749049011329aa7f28: 3
  cilium-envoy     quay.io/cilium/cilium-envoy:v1.29.9-1728346947-0d05e48bfbb8c4737ec40d5781d970a550ed2bbd@sha256:42614a44e508f70d03a04470df5f61e3cffd22462471a0be0544cf116f2c50ba: 3
  cilium-operator  quay.io/cilium/operator-generic:v1.16.3@sha256:6e2925ef47a1c76e183c48f95d4ce0d34a1e5e848252f910476c3e11ce1ec94b: 1
  hubble-relay     quay.io/cilium/hubble-relay:v1.16.3@sha256:feb60efd767e0e7863a94689f4a8db56a0acc7c1d2b307dee66422e3dc25a089: 1
  hubble-ui        quay.io/cilium/hubble-ui-backend:v0.13.1@sha256:0e0eed917653441fded4e7cdb096b7be6a3bddded5a2dd10812a27b1fc6ed95b: 1
  hubble-ui        quay.io/cilium/hubble-ui:v0.13.1@sha256:e2e9313eb7caf64b0061d9da0efbdad59c6c461f6ca1752768942bfeda0796c6: 1

# Check status with the cilium command inside the cilium DaemonSet pod
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# export CILIUMPOD0=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-s -o jsonpath='{.items[0].metadata.name}')
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# alias c0="kubectl exec -it $CILIUMPOD0 -n kube-system -c cilium-agent -- cilium"
c0 status --verbose
KVStore:                Ok   Disabled
Kubernetes:             Ok   1.30 (v1.30.6) [linux/arm64]
Kubernetes APIs:        ["EndpointSliceOrEndpoint", "cilium/v2::CiliumClusterwideNetworkPolicy", "cilium/v2::CiliumEndpoint", "cilium/v2::CiliumNetworkPolicy", "cilium/v2::CiliumNode", "cilium/v2alpha1::CiliumCIDRGroup", "core/v1::Namespace", "core/v1::Pods", "core/v1::Service", "networking.k8s.io/v1::NetworkPolicy"]
KubeProxyReplacement:   True   [eth0 192.168.212.210 fe80::20c:29ff:fe25:47aa, eth1 192.168.10.10 fe80::20c:29ff:fe25:47b4 (Direct Routing)]
Host firewall:          Disabled
SRv6:                   Disabled
CNI Chaining:           none
CNI Config file:        successfully wrote CNI configuration file to /host/etc/cni/net.d/05-cilium.conflist
Cilium:                 Ok   1.16.3 (v1.16.3-f2217191)
NodeMonitor:            Listening for events on 2 CPUs with 64x4096 of shared memory
Cilium health daemon:   Ok
IPAM:                   IPv4: 2/254 allocated from 172.16.0.0/24,
Allocated addresses:
  172.16.0.190 (health)
  172.16.0.4 (router)
IPv4 BIG TCP:           Disabled
IPv6 BIG TCP:           Disabled
BandwidthManager:       Disabled
Routing:                Network: Native   Host: BPF
Attach Mode:            Legacy TC
Device Mode:            veth
Masquerading:           BPF   [eth0, eth1]   192.168.0.0/16 [IPv4: Enabled, IPv6: Disabled]
Clock Source for BPF:   ktime
Controller Status:      20/20 healthy
  Name                                  Last success   Last error     Count   Message
  cilium-health-ep                      37s ago        1h19m39s ago   0       no error
  ct-map-pressure                       14s ago        never          0       no error
  daemon-validate-config                7s ago         never          0       no error
  dns-garbage-collector-job             46s ago        never          0       no error
  endpoint-1065-regeneration-recovery   never          never          0       no error
  endpoint-527-regeneration-recovery    never          never          0       no error
  endpoint-gc                           4m47s ago      never          0       no error
  ep-bpf-prog-watchdog                  15s ago        never          0       no error
  ipcache-inject-labels                 45s ago        never          0       no error
  k8s-heartbeat                         17s ago        never          0       no error
  link-cache                            14s ago        never          0       no error
  neighbor-table-refresh                15s ago        never          0       no error
  node-neighbor-link-updater            14s ago        never          0       no error
  resolve-identity-1065                 4m45s ago      never          0       no error
  resolve-identity-527                  4m44s ago      never          0       no error
  sync-lb-maps-with-k8s-services        23h56m29s ago  never          0       no error
  sync-policymap-1065                   9m43s ago      never          0       no error
  sync-policymap-527                    9m40s ago      never          0       no error
  sync-utime                            45s ago        never          0       no error
  write-cni-file                        23h56m30s ago  never          0       no error
Proxy Status:            OK, ip 172.16.0.4, 0 redirects active on ports 10000-20000, Envoy: external
Global Identity Range:   min 256, max 65535
Hubble:                  Ok   Current/Max Flows: 4095/4095 (100.00%), Flows/s: 0.84   Metrics: Ok
KubeProxyReplacement Details:
  Status:                 True
  Socket LB:              Enabled
  Socket LB Tracing:      Enabled
  Socket LB Coverage:     Full
  Devices:                eth0 192.168.212.210 fe80::20c:29ff:fe25:47aa, eth1 192.168.10.10 fe80::20c:29ff:fe25:47b4 (Direct Routing)
  Mode:                   SNAT
  Backend Selection:      Random
  Session Affinity:       Enabled
  Graceful Termination:   Enabled
  NAT46/64 Support:       Disabled
  XDP Acceleration:       Disabled
  Services:
  - ClusterIP:      Enabled
  - NodePort:       Enabled (Range: 30000-32767)
  - LoadBalancer:   Enabled
  - externalIPs:    Enabled
  - HostPort:       Enabled
BPF Maps:   dynamic sizing: on (ratio: 0.002500)
  Name                          Size
  Auth                          524288
  Non-TCP connection tracking   65536
  TCP connection tracking       131072
  Endpoint policy               65535
  IP cache                      512000
  IPv4 masquerading agent       16384
  IPv6 masquerading agent       16384
  IPv4 fragmentation            8192
  IPv4 service                  65536
  IPv6 service                  65536
  IPv4 service backend          65536
  IPv6 service backend          65536
  IPv4 service reverse NAT      65536
  IPv6 service reverse NAT      65536
  Metrics                       1024
  NAT                           131072
  Neighbor table                131072
  Global policy                 16384
  Session affinity              65536
  Sock reverse NAT              65536
  Tunnel                        65536
Encryption:       Disabled
Cluster health:   3/3 reachable   (2024-10-26T08:43:31Z)
  Name                IP               Node        Endpoints
  k8s-s (localhost)   192.168.10.10    reachable   reachable
  k8s-w1              192.168.10.101   reachable   reachable
  k8s-w2              192.168.10.102   reachable   reachable
Modules Health:
agent
├── controlplane
│   ├── auth
│   │   ├── observer-job-auth gc-identity-events [OK] Primed (23h, x1)
│   │   ├── observer-job-auth request-authentication [OK] Primed (23h, x1)
│   │   └── timer-job-auth gc-cleanup [OK] OK (828.437µs) (4m47s, x1)
│   ├── bgp-control-plane
│   │   ├── job-bgp-crd-status-initialize [OK] Running (23h, x1)
│   │   ├── job-bgp-crd-status-update-job [OK] Running (23h, x1)
│   │   ├── job-bgp-state-observer [OK] Running (23h, x1)
│   │   └── job-diffstore-events [OK] Running (23h, x2)
│   ├── daemon
│   │   ├── [OK] daemon-validate-config (7s, x99)
│   │   ├── ep-bpf-prog-watchdog
│   │   │   └── ep-bpf-prog-watchdog [OK] ep-bpf-prog-watchdog (15s, x200)
│   │   └── job-sync-hostips [OK] Synchronized (45s, x108)
│   ├── endpoint-manager
│   │   ├── cilium-endpoint-1065 (/)
│   │   │   ├── datapath-regenerate [OK] Endpoint regeneration successful (79m, x7)
│   │   │   └── policymap-sync [OK] sync-policymap-1065 (9m43s, x7)
│   │   ├── cilium-endpoint-527 (/)
│   │   │   ├── datapath-regenerate [OK] Endpoint regeneration successful (79m, x6)
│   │   │   └── policymap-sync [OK] sync-policymap-527 (9m40s, x7)
│   │   └── endpoint-gc [OK] endpoint-gc (4m47s, x20)
│   ├── envoy-proxy
│   │   └── timer-job-version-check [OK] OK (17.333384ms) (4m45s, x1)
│   ├── l2-announcer
│   │   └── job-l2-announcer lease-gc [OK] Running (23h, x1)
│   ├── nat-stats
│   │   └── timer-job-nat-stats [OK] OK (2.744645ms) (15s, x1)
│   ├── node-manager
│   │   ├── background-sync [OK] Node validation successful (21s, x72)
│   │   ├── neighbor-link-updater
│   │   │   ├── k8s-w1 [OK] Node neighbor link update successful (4s, x272)
│   │   │   └── k8s-w2 [OK] Node neighbor link update successful (4s, x273)
│   │   ├── node-checkpoint-writer [OK] node checkpoint written (23h, x3)
│   │   └── nodes-add [OK] Node adds successful (23h, x3)
│   ├── service-manager
│   │   └── job-ServiceReconciler [OK] 2 NodePort frontend addresses (79m, x1)
│   ├── service-resolver
│   │   └── job-service-reloader-initializer [OK] Running (23h, x1)
│   ├── stale-endpoint-cleanup
│   │   └── job-endpoint-cleanup [OK] Running (23h, x1)
│   └── timer-job-device-reloader [OK] OK (15m0.59932768s) (14m, x1)
├── datapath
│   ├── agent-liveness-updater
│   │   └── timer-job-agent-liveness-updater [OK] OK (38.791µs) (0s, x1)
│   ├── iptables
│   │   ├── ipset
│   │   │   ├── job-ipset-init-finalizer [OK] Running (23h, x1)
│   │   │   ├── job-reconcile [OK] OK, 0 object(s) (39m, x2)
│   │   │   └── job-refresh [OK] Next refresh in 30m0s (9m47s, x4)
│   │   └── job-iptables-reconciliation-loop [OK] iptables rules full reconciliation completed (79m, x3)
│   ├── l2-responder
│   │   └── job-l2-responder-reconciler [OK] Running (23h, x1)
│   ├── maps
│   │   └── bwmap
│   │       └── timer-job-pressure-metric-throttle [OK] OK (8.667µs) (15s, x1)
│   ├── node-address
│   │   └── job-node-address-update [OK] 172.16.0.4 (primary), fe80::ec04:f3ff:fe38:993e (primary) (79m, x1)
│   └── sysctl
│       ├── job-reconcile [OK] OK, 16 object(s) (9m36s, x138)
│       └── job-refresh [OK] Next refresh in 9m48.897284338s (9m36s, x1)
└── infra
    └── k8s-synced-crdsync
        └── job-sync-crds [OK] Running (23h, x1)

# Verify native routing: the 192.168.0.0/16 range is routed without IP masquerading
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 status | grep KubeProxyReplacement
KubeProxyReplacement: True [eth0 192.168.212.210 fe80::20c:29ff:fe25:47aa, eth1 192.168.10.10 fe80::20c:29ff:fe25:47b4 (Direct Routing)]

# Confirm enableIPv4Masquerade=true (default) and bpf.masquerade=true
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# cilium config view | egrep 'enable-ipv4-masquerade|enable-bpf-masquerade'
enable-bpf-masquerade    true
enable-ipv4-masquerade   true
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 status --verbose | grep Masquerading
Masquerading: BPF [eth0, eth1] 192.168.0.0/16 [IPv4: Enabled, IPv6: Disabled]

# Configure the eBPF-based ip-masq-agent
# https://docs.cilium.io/en/stable/network/concepts/masquerading/
❯ helm upgrade cilium cilium/cilium --namespace kube-system --reuse-values --set ipMasqAgent.enabled=true
Release "cilium" has been upgraded. Happy Helming!
NAME: cilium
LAST DEPLOYED: Sat Oct 26 17:47:03 2024
NAMESPACE: kube-system
STATUS: deployed
REVISION: 3
TEST SUITE: None
NOTES:
You have successfully installed Cilium with Hubble Relay and Hubble UI.
Your release version is 1.16.3.

#
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# cilium config view | grep -i masq
enable-bpf-masquerade               true
enable-ip-masq-agent                true
enable-ipv4-masquerade              true
enable-ipv6-masquerade              true
enable-masquerade-to-route-source   false
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# export CILIUMPOD0=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-s -o jsonpath='{.items[0].metadata.name}')
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# alias c0="kubectl exec -it $CILIUMPOD0 -n kube-system -c cilium-agent -- cilium"
c0 status --verbose | grep Masquerading
Masquerading: BPF (ip-masq-agent) [eth0, eth1] 192.168.0.0/16 [IPv4: Enabled, IPv6: Disabled]
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# kubectl get cm -n kube-system cilium-config -o yaml | grep ip-masq
enable-ip-masq-agent: "true"
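After ipMasqAgent.enabled=true is set, the agent still needs to know which destination CIDRs should be exempt from masquerading. The following is a minimal sketch, assuming the agent reads a ConfigMap named ip-masq-agent in kube-system with a config key (as described in the Cilium masquerading docs linked above). The helper name render_ip_masq_config and the example CIDRs are hypothetical and not part of the original lab.

```shell
#!/usr/bin/env bash
# Sketch only: print an ip-masq-agent ConfigMap manifest to stdout.
# Assumption: ConfigMap name "ip-masq-agent" and key "config" follow the Cilium docs.
# Arguments: the CIDRs that should NOT be masqueraded.
render_ip_masq_config() {
  printf 'apiVersion: v1\n'
  printf 'kind: ConfigMap\n'
  printf 'metadata:\n'
  printf '  name: ip-masq-agent\n'
  printf '  namespace: kube-system\n'
  printf 'data:\n'
  printf '  config: |\n'
  printf '    nonMasqueradeCIDRs:\n'
  for cidr in "$@"; do
    printf '    - %s\n' "$cidr"
  done
  printf '    masqLinkLocal: false\n'
}

# Usage (not executed here; the CIDRs are example values for this lab):
#   render_ip_masq_config 172.16.0.0/16 192.168.10.0/24 | kubectl apply -f -
#   c0 bpf ipmasq list   # inspect what the agent loaded into the BPF map
```

Rendering the manifest to stdout keeps the helper side-effect free, so the output can be reviewed before it is piped to kubectl.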

Checking basic Cilium information

- Variables & shortcuts

# cilium pod names

export CILIUMPOD0=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-s -o jsonpath='{.items[0].metadata.name}')

export CILIUMPOD1=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-w1 -o jsonpath='{.items[0].metadata.name}')

export CILIUMPOD2=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-w2 -o jsonpath='{.items[0].metadata.name}')

# Define shortcuts (aliases)

alias c0="kubectl exec -it $CILIUMPOD0 -n kube-system -c cilium-agent -- cilium"

alias c1="kubectl exec -it $CILIUMPOD1 -n kube-system -c cilium-agent -- cilium"

alias c2="kubectl exec -it $CILIUMPOD2 -n kube-system -c cilium-agent -- cilium"

alias c0bpf="kubectl exec -it $CILIUMPOD0 -n kube-system -c cilium-agent -- bpftool"

alias c1bpf="kubectl exec -it $CILIUMPOD1 -n kube-system -c cilium-agent -- bpftool"

alias c2bpf="kubectl exec -it $CILIUMPOD2 -n kube-system -c cilium-agent -- bpftool"

# Access the Hubble UI in a browser

kubectl patch -n kube-system svc hubble-ui -p '{"spec": {"type": "NodePort"}}'

HubbleUiNodePort=$(kubectl get svc -n kube-system hubble-ui -o jsonpath='{.spec.ports[0].nodePort}')

echo -e "Hubble UI URL = http://$(curl -s ipinfo.io/ip):$HubbleUiNodePort"

# Frequently used commands

helm upgrade cilium cilium/cilium --namespace kube-system --reuse-values --set

kubetail -n kube-system -l k8s-app=cilium --since 12h

kubetail -n kube-system -l k8s-app=cilium-envoy --since 12h
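One caveat with the aliases above: because they are defined in double quotes, the pod name is expanded once at definition time, so they go stale after the cilium DaemonSet is restarted. A minimal sketch of a function-based alternative that looks up the pod on every call is shown below; the function names cilium_pod and cilium_on are hypothetical, and -it is dropped so the output can be piped.

```shell
#!/usr/bin/env bash
# Sketch only: resolve the cilium agent pod per call instead of caching it in an alias.

# cilium_pod NODE : print the name of the cilium agent pod running on NODE.
cilium_pod() {
  kubectl get pods -n kube-system -l k8s-app=cilium \
    --field-selector "spec.nodeName=$1" \
    -o jsonpath='{.items[0].metadata.name}'
}

# cilium_on NODE ARGS... : run the in-pod cilium CLI on NODE with ARGS.
cilium_on() {
  local node=$1
  shift
  local pod
  pod=$(cilium_pod "$node") || return 1
  if [ -z "$pod" ]; then
    echo "no cilium pod found on node $node" >&2
    return 1
  fi
  kubectl exec -n kube-system "$pod" -c cilium-agent -- cilium "$@"
}

# Usage (not executed here):
#   cilium_on k8s-s  status --verbose
#   cilium_on k8s-w1 endpoint list
```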

-

Frequently used Cilium CLI commands

# Check cilium pods
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# kubectl get pod -n kube-system -l k8s-app=cilium -owide
NAME           READY   STATUS    RESTARTS   AGE   IP               NODE     NOMINATED NODE   READINESS GATES
cilium-klhm4   1/1     Running   0          16m   192.168.10.102   k8s-w2   <none>           <none>
cilium-nr85g   1/1     Running   0          16m   192.168.10.10    k8s-s    <none>           <none>
cilium-pbxch   1/1     Running   0          15m   192.168.10.101   k8s-w1   <none>           <none>

# Restart cilium pods
kubectl -n kube-system rollout restart ds/cilium
# or
kubectl delete pod -n kube-system -l k8s-app=cilium

# View cilium configuration
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# cilium config view
agent-not-ready-taint-key               node.cilium.io/agent-not-ready
arping-refresh-period                   30s
auto-direct-node-routes                 true
bpf-events-drop-enabled                 true
bpf-events-policy-verdict-enabled       true
bpf-events-trace-enabled                true
bpf-lb-acceleration                     disabled
bpf-lb-external-clusterip               false
bpf-lb-map-max                          65536
bpf-lb-sock                             false
bpf-lb-sock-terminate-pod-connections   false
bpf-map-dynamic-size-ratio              0.0025
bpf-policy-map-max                      16384
bpf-root                                /sys/fs/bpf
cgroup-root                             /run/cilium/cgroupv2
cilium-endpoint-gc-interval             5m0s
...
vtep-mask
write-cni-conf-when-ready               /host/etc/cni/net.d/05-cilium.conflist

# Check cilium status from inside the cilium pod
c0 status --verbose

# Check cilium endpoints
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# kubectl get ciliumendpoints -A
NAMESPACE     NAME                           SECURITY IDENTITY   ENDPOINT STATE   IPV4           IPV6
kube-system   coredns-55cb58b774-2dvq5       28955               ready            172.16.1.8
kube-system   coredns-55cb58b774-t64fc       28955               ready            172.16.2.206
kube-system   hubble-relay-88f7f89d4-l8pz5   36655               ready            172.16.2.234
kube-system   hubble-ui-59bb4cb67b-wfptl     770                 ready            172.16.2.111

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 endpoint list
ENDPOINT   POLICY (ingress)   POLICY (egress)   IDENTITY   LABELS (source:key[=value])   IPv6   IPv4   STATUS
           ENFORCEMENT        ENFORCEMENT
1065       Disabled           Disabled          1          k8s:node-role.kubernetes.io/control-plane   ready
                                                           k8s:node.kubernetes.io/exclude-from-external-load-balancers
                                                           reserved:host
1141       Disabled           Disabled          4          reserved:health   172.16.0.190   ready

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 bpf endpoint list
IP ADDRESS          LOCAL ENDPOINT INFO
172.16.0.4:0        (localhost)
172.16.0.190:0      id=1141 sec_id=4 flags=0x0000 ifindex=11 mac=B2:48:16:DA:AD:99 nodemac=92:06:B5:ED:73:C6
192.168.212.210:0   (localhost)
192.168.10.10:0     (localhost)

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 map get cilium_lxc
Key              Value                                                                                              State   Error
172.16.0.190:0   id=1141 sec_id=4 flags=0x0000 ifindex=11 mac=B2:48:16:DA:AD:99 nodemac=92:06:B5:ED:73:C6   sync

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 ip list
IP                   IDENTITY                                                                          SOURCE
0.0.0.0/0            reserved:world
172.16.0.4/32        reserved:host
                     reserved:kube-apiserver
172.16.0.190/32      reserved:health
172.16.1.8/32        k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system   custom-resource
                     k8s:io.cilium.k8s.policy.cluster=default
                     k8s:io.cilium.k8s.policy.serviceaccount=coredns
                     k8s:io.kubernetes.pod.namespace=kube-system
                     k8s:k8s-app=kube-dns
172.16.1.85/32       reserved:remote-node
172.16.1.201/32      reserved:health
172.16.2.76/32       reserved:remote-node
172.16.2.111/32      k8s:app.kubernetes.io/name=hubble-ui   custom-resource
                     k8s:app.kubernetes.io/part-of=cilium
                     k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system
                     k8s:io.cilium.k8s.policy.cluster=default
                     k8s:io.cilium.k8s.policy.serviceaccount=hubble-ui
                     k8s:io.kubernetes.pod.namespace=kube-system
                     k8s:k8s-app=hubble-ui
172.16.2.177/32      reserved:health
172.16.2.206/32      k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system   custom-resource
                     k8s:io.cilium.k8s.policy.cluster=default
                     k8s:io.cilium.k8s.policy.serviceaccount=coredns
                     k8s:io.kubernetes.pod.namespace=kube-system
                     k8s:k8s-app=kube-dns
172.16.2.234/32      k8s:app.kubernetes.io/name=hubble-relay   custom-resource
                     k8s:app.kubernetes.io/part-of=cilium
                     k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system
                     k8s:io.cilium.k8s.policy.cluster=default
                     k8s:io.cilium.k8s.policy.serviceaccount=hubble-relay
                     k8s:io.kubernetes.pod.namespace=kube-system
                     k8s:k8s-app=hubble-relay
192.168.10.10/32     reserved:host
                     reserved:kube-apiserver
192.168.10.101/32    reserved:remote-node
192.168.10.102/32    reserved:remote-node
192.168.212.210/32   reserved:host
                     reserved:kube-apiserver

# Manage the IPCache mappings for IP/CIDR <-> Identity
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 bpf ipcache list
IP PREFIX/ADDRESS    IDENTITY
172.16.1.201/32      identity=4 encryptkey=0 tunnelendpoint=192.168.10.101, flags=<none>
172.16.2.76/32       identity=6 encryptkey=0 tunnelendpoint=192.168.10.102, flags=<none>
172.16.2.234/32      identity=36655 encryptkey=0 tunnelendpoint=192.168.10.102, flags=<none>
0.0.0.0/0            identity=2 encryptkey=0 tunnelendpoint=0.0.0.0, flags=<none>
172.16.2.111/32      identity=770 encryptkey=0 tunnelendpoint=192.168.10.102, flags=<none>
172.16.2.206/32      identity=28955 encryptkey=0 tunnelendpoint=192.168.10.102, flags=<none>
192.168.10.10/32     identity=1 encryptkey=0 tunnelendpoint=0.0.0.0, flags=<none>
192.168.212.210/32   identity=1 encryptkey=0 tunnelendpoint=0.0.0.0, flags=<none>
172.16.0.4/32        identity=1 encryptkey=0 tunnelendpoint=0.0.0.0, flags=<none>
172.16.0.190/32      identity=4 encryptkey=0 tunnelendpoint=0.0.0.0, flags=<none>
172.16.1.85/32       identity=6 encryptkey=0 tunnelendpoint=192.168.10.101, flags=<none>
192.168.10.102/32    identity=6 encryptkey=0 tunnelendpoint=0.0.0.0, flags=<none>
172.16.1.8/32        identity=28955 encryptkey=0 tunnelendpoint=192.168.10.101, flags=<none>
172.16.2.177/32      identity=4 encryptkey=0 tunnelendpoint=192.168.10.102, flags=<none>
192.168.10.101/32    identity=6 encryptkey=0 tunnelendpoint=0.0.0.0, flags=<none>

# Check the Service/NAT lists
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 service list
ID   Frontend                Service Type   Backend
1    10.10.0.1:443           ClusterIP      1 => 192.168.10.10:6443 (active)
2    10.10.44.26:443         ClusterIP      1 => 192.168.10.10:4244 (active)
3    10.10.254.126:80        ClusterIP      1 => 172.16.2.234:4245 (active)
4    10.10.227.104:80        ClusterIP      1 => 172.16.2.111:8081 (active)
5    10.10.0.10:53           ClusterIP      1 => 172.16.1.8:53 (active)
                                            2 => 172.16.2.206:53 (active)
6    10.10.0.10:9153         ClusterIP      1 => 172.16.1.8:9153 (active)
                                            2 => 172.16.2.206:9153 (active)
7    0.0.0.0:32287           NodePort       1 => 172.16.2.111:8081 (active)
8    192.168.10.10:32287     NodePort       1 => 172.16.2.111:8081 (active)
9    192.168.212.210:32287   NodePort       1 => 172.16.2.111:8081 (active)

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 bpf lb list
SERVICE ADDRESS             BACKEND ADDRESS (REVNAT_ID) (SLOT)
192.168.10.10:32287 (1)     172.16.2.111:8081 (8) (1)
0.0.0.0:32287 (1)           172.16.2.111:8081 (7) (1)
10.10.0.10:53 (0)           0.0.0.0:0 (5) (0) [ClusterIP, non-routable]
10.10.0.1:443 (1)           192.168.10.10:6443 (1) (1)
10.10.254.126:80 (1)        172.16.2.234:4245 (3) (1)
10.10.0.10:53 (2)           172.16.2.206:53 (5) (2)
10.10.0.1:443 (0)           0.0.0.0:0 (1) (0) [ClusterIP, non-routable]
10.10.227.104:80 (0)        0.0.0.0:0 (4) (0) [ClusterIP, non-routable]
10.10.44.26:443 (0)         0.0.0.0:0 (2) (0) [ClusterIP, InternalLocal, non-routable]
10.10.44.26:443 (1)         192.168.10.10:4244 (2) (1)
10.10.0.10:53 (1)           172.16.1.8:53 (5) (1)
0.0.0.0:32287 (0)           0.0.0.0:0 (7) (0) [NodePort, non-routable]
192.168.212.210:32287 (0)   0.0.0.0:0 (9) (0) [NodePort]
192.168.212.210:32287 (1)   172.16.2.111:8081 (9) (1)
192.168.10.10:32287 (0)     0.0.0.0:0 (8) (0) [NodePort]
10.10.227.104:80 (1)        172.16.2.111:8081 (4) (1)
10.10.254.126:80 (0)        0.0.0.0:0 (3) (0) [ClusterIP, non-routable]
10.10.0.10:9153 (1)         172.16.1.8:9153 (6) (1)
10.10.0.10:9153 (2)         172.16.2.206:9153 (6) (2)
10.10.0.10:9153 (0)         0.0.0.0:0 (6) (0) [ClusterIP, non-routable]

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 bpf lb list --revnat
ID   BACKEND ADDRESS (REVNAT_ID) (SLOT)
7    0.0.0.0:32287
5    10.10.0.10:53
3    10.10.254.126:80
9    192.168.212.210:32287
6    10.10.0.10:9153
4    10.10.227.104:80
2    10.10.44.26:443
8    192.168.10.10:32287
1    10.10.0.1:443

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 bpf nat list
TCP IN 172.16.2.177:4240 -> 192.168.10.10:53974 XLATE_DST 192.168.10.10:53974 Created=583sec ago NeedsCT=1
...
TCP IN 172.16.2.111:8081 -> 192.168.10.10:53623 XLATE_DST 192.168.10.1:53623 Created=15sec ago NeedsCT=0

# List all open BPF maps
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 map list
Name                       Num entries   Num errors   Cache enabled
cilium_lb4_backends_v3     2             0            true
cilium_lb4_services_v2     20            0            true
cilium_lb4_source_range    0             0            true
cilium_lb4_affinity        0             0            true
cilium_lb4_reverse_nat     9             0            true
cilium_ipmasq_v4           0             0            true
cilium_ipcache             15            0            true
cilium_lxc                 1             0            true
cilium_lb4_reverse_sk      0             0            true
cilium_lb_affinity_match   0             0            true
cilium_policy_01141        3             0            true
cilium_policy_01065        2             0            true
cilium_runtime_config      256           0            true
cilium_metrics             0             0            false
cilium_skip_lb4            0             0            false
cilium_l2_responder_v4     0             0            false
cilium_node_map            0             0            false
cilium_auth_map            0             0            false
cilium_node_map_v2         0             0            false

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 map list --verbose
## Map: cilium_lb4_affinity
Cache is empty

## Map: cilium_lb4_source_range
Cache is empty

## Map: cilium_ipcache
Key                  Value                                                                      State   Error
0.0.0.0/0            identity=2 encryptkey=0 tunnelendpoint=0.0.0.0, flags=<none>               sync
192.168.10.10/32     identity=1 encryptkey=0 tunnelendpoint=0.0.0.0, flags=<none>               sync
192.168.10.101/32    identity=6 encryptkey=0 tunnelendpoint=0.0.0.0, flags=<none>               sync
172.16.1.8/32        identity=28955 encryptkey=0 tunnelendpoint=192.168.10.101, flags=<none>   sync
172.16.2.234/32      identity=36655 encryptkey=0 tunnelendpoint=192.168.10.102, flags=<none>   sync
172.16.1.85/32       identity=6 encryptkey=0 tunnelendpoint=192.168.10.101, flags=<none>       sync
172.16.1.201/32      identity=4 encryptkey=0 tunnelendpoint=192.168.10.101, flags=<none>       sync
172.16.2.177/32      identity=4 encryptkey=0 tunnelendpoint=192.168.10.102, flags=<none>       sync
172.16.0.4/32        identity=1 encryptkey=0 tunnelendpoint=0.0.0.0, flags=<none>               sync
172.16.2.111/32      identity=770 encryptkey=0 tunnelendpoint=192.168.10.102, flags=<none>     sync
172.16.0.190/32      identity=4 encryptkey=0 tunnelendpoint=0.0.0.0, flags=<none>               sync
192.168.10.102/32    identity=6 encryptkey=0 tunnelendpoint=0.0.0.0, flags=<none>               sync
172.16.2.206/32      identity=28955 encryptkey=0 tunnelendpoint=192.168.10.102, flags=<none>   sync
172.16.2.76/32       identity=6 encryptkey=0 tunnelendpoint=192.168.10.102, flags=<none>       sync
192.168.212.210/32   identity=1 encryptkey=0 tunnelendpoint=0.0.0.0, flags=<none>               sync

## Map: cilium_lb4_reverse_nat
Key   Value                   State   Error
2     10.10.44.26:443         sync
4     10.10.227.104:80        sync
8     192.168.10.10:32287     sync
9     192.168.212.210:32287   sync
7     0.0.0.0:32287           sync
6     10.10.0.10:9153         sync
5     10.10.0.10:53           sync
1     10.10.0.1:443           sync
3     10.10.254.126:80        sync

## Map: cilium_ipmasq_v4
Cache is empty

## Map: cilium_policy_01141
Key                  Value         State   Error
Ingress: 0 ANY       0 160 12916
Egress: 0 ANY        0 0 0
Ingress: 1 ANY       0 116 9953

## Map: cilium_policy_01065
Key                  Value         State   Error
Ingress: 0 ANY       0 0 0
Egress: 0 ANY        0 0 0

## Map: cilium_runtime_config
Key             Value              State   Error
UTimeOffset     3378737814453125
AgentLiveness   20332056741423
Unknown         0
Unknown         0
..

## Map: cilium_lxc
Key              Value                                                                                              State   Error
172.16.0.190:0   id=1141 sec_id=4 flags=0x0000 ifindex=11 mac=B2:48:16:DA:AD:99 nodemac=92:06:B5:ED:73:C6   sync

## Map: cilium_lb4_reverse_sk
Cache is empty

## Map: cilium_lb_affinity_match
Cache is empty

## Map: cilium_lb4_services_v2
Key                         Value                   State   Error
192.168.10.10:32287 (1)     13 0 (8) [0x0 0x0]      sync
192.168.10.10:32287 (0)     0 1 (8) [0x42 0x0]      sync
192.168.212.210:32287 (1)   13 0 (9) [0x0 0x0]      sync
10.10.0.10:9153 (1)         9 0 (6) [0x0 0x0]       sync
10.10.0.10:9153 (2)         12 0 (6) [0x0 0x0]      sync
10.10.0.10:53 (1)           10 0 (5) [0x0 0x0]      sync
10.10.0.10:53 (0)           0 2 (5) [0x0 0x0]       sync
10.10.44.26:443 (0)         0 1 (2) [0x0 0x10]      sync
192.168.212.210:32287 (0)   0 1 (9) [0x42 0x0]      sync
10.10.0.10:53 (2)           11 0 (5) [0x0 0x0]      sync
10.10.254.126:80 (1)        17 0 (3) [0x0 0x0]      sync
10.10.227.104:80 (0)        0 1 (4) [0x0 0x0]       sync
0.0.0.0:32287 (1)           13 0 (7) [0x0 0x0]      sync
0.0.0.0:32287 (0)           0 1 (7) [0x2 0x0]       sync
10.10.0.10:9153 (0)         0 2 (6) [0x0 0x0]       sync
10.10.0.1:443 (1)           1 0 (1) [0x0 0x0]       sync
10.10.0.1:443 (0)           0 1 (1) [0x0 0x0]       sync
10.10.44.26:443 (1)         16 0 (2) [0x0 0x0]      sync
10.10.254.126:80 (0)        0 1 (3) [0x0 0x0]       sync
10.10.227.104:80 (1)        13 0 (4) [0x0 0x0]      sync

## Map: cilium_lb4_backends_v3
Key   Value                 State   Error
16    ANY://192.168.10.10   sync
17    ANY://172.16.2.234    sync

## Map: cilium_node_map_v2
Cache is disabled

## Map: cilium_metrics
Cache is disabled

## Map: cilium_skip_lb4
Cache is disabled

## Map: cilium_l2_responder_v4
Cache is disabled

## Map: cilium_node_map
Cache is disabled

## Map: cilium_auth_map
Cache is disabled

# List contents of a policy BPF map : Dump all policy maps
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 bpf policy get --all
Endpoint ID: 1065
Path: /sys/fs/bpf/tc/globals/cilium_policy_01065
POLICY   DIRECTION   LABELS (source:key[=value])   PORT/PROTO   PROXY PORT   AUTH TYPE   BYTES   PACKETS   PREFIX
Allow    Ingress     reserved:unknown              ANY          NONE         disabled    0       0         0
Allow    Egress      reserved:unknown              ANY          NONE         disabled    0       0         0

Endpoint ID: 1141
Path: /sys/fs/bpf/tc/globals/cilium_policy_01141
POLICY   DIRECTION   LABELS (source:key[=value])   PORT/PROTO   PROXY PORT   AUTH TYPE   BYTES   PACKETS   PREFIX
Allow    Ingress     reserved:unknown              ANY          NONE         disabled    16006   198       0
Allow    Ingress     reserved:host                 ANY          NONE         disabled    12197   141       0
                     reserved:kube-apiserver
Allow    Egress      reserved:unknown              ANY          NONE         disabled    0       0         0

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 bpf policy get --all -n
Endpoint ID: 1065
Path: /sys/fs/bpf/tc/globals/cilium_policy_01065
POLICY   DIRECTION   IDENTITY   PORT/PROTO   PROXY PORT   AUTH TYPE   BYTES   PACKETS   PREFIX
Allow    Ingress     0          ANY          NONE         disabled    0       0         0
Allow    Egress      0          ANY          NONE         disabled    0       0         0

Endpoint ID: 1141
Path: /sys/fs/bpf/tc/globals/cilium_policy_01141
POLICY   DIRECTION   IDENTITY   PORT/PROTO   PROXY PORT   AUTH TYPE   BYTES   PACKETS   PREFIX
Allow    Ingress     0          ANY          NONE         disabled    16270   202       0
Allow    Ingress     1          ANY          NONE         disabled    12329   143       0
Allow    Egress      0          ANY          NONE         disabled    0       0         0

# cilium monitor
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 monitor -v
Listening for events on 2 CPUs with 64x4096 of shared memory
Press Ctrl-C to quit
CPU 01: [pre-xlate-rev] cgroup_id: 7945 sock_cookie: 27131, dst [192.168.10.10]:6443 tcp
CPU 01: [pre-xlate-rev] cgroup_id: 7637 sock_cookie: 27132, dst [192.168.10.10]:55188 tcp
CPU 01: [pre-xlate-rev] cgroup_id: 7791 sock_cookie: 30002, dst [127.0.0.1]:35354 tcp
CPU 01: [pre-xlate-rev] cgroup_id: 7945 sock_cookie: 27133, dst [127.0.0.1]:2381 tcp
CPU 01: [pre-xlate-rev] cgroup_id: 7637 sock_cookie: 30003, dst [192.168.10.10]:55202 tcp
CPU 01: [pre-xlate-rev] cgroup_id: 7945 sock_cookie: 27134, dst [192.168.10.10]:6443 tcp
CPU 01: [pre-xlate-rev] cgroup_id: 7637 sock_cookie: 27135, dst [192.168.10.10]:55206 tcp
CPU 01: [pre-xlate-rev] cgroup_id: 7945 sock_cookie: 30004, dst [192.168.10.10]:6443 tcp
CPU 01: [pre-xlate-rev] cgroup_id: 7637 sock_cookie: 30005, dst [192.168.10.10]:55210 tcp
CPU 01: [pre-xlate-rev] cgroup_id: 7945 sock_cookie: 27136, dst [192.168.10.10]:6443 tcp
^C
Received an interrupt, disconnecting from monitor...

(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# c0 monitor -v --type l7
Listening for events on 2 CPUs with 64x4096 of shared memory
Press Ctrl-C to quit
CPU 00: [pre-xlate-rev] cgroup_id: 7945 sock_cookie: 30036, dst [127.0.2.1]:9 udp
CPU 00: [pre-xlate-rev] cgroup_id: 7945 sock_cookie: 30037, dst [127.0.2.1]:9 udp
CPU 00: [pre-xlate-rev] cgroup_id: 7945 sock_cookie: 30038, dst [192.168.10.10]:9 udp
CPU 00: [pre-xlate-rev] cgroup_id: 7945 sock_cookie: 30039, dst [192.168.10.10]:6443 tcp
CPU 01: [pre-xlate-rev] cgroup_id: 7637 sock_cookie: 27170, dst [192.168.10.10]:51962 tcp
CPU 01: [pre-xlate-rev] cgroup_id: 7945 sock_cookie: 27171, dst [192.168.10.10]:6443 tcp
CPU 01: [pre-xlate-rev] cgroup_id: 7637 sock_cookie: 27172, dst [192.168.10.10]:51972 tcp
^C
Received an interrupt, disconnecting from monitor...
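The ipcache dump above is easier to read when grouped by the node address stored in each entry. The following is a minimal sketch that counts entries per tunnelendpoint value, assuming the line format printed by c0 bpf ipcache list in this post; the function name ipcache_by_node is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: count ipcache entries per tunnelendpoint value.
# Reads `cilium bpf ipcache list` output on stdin and prints "<endpoint> <count>" per line.
# Assumption: each entry contains a field of the form "tunnelendpoint=<ip>," as shown above.
ipcache_by_node() {
  awk '
    /tunnelendpoint=/ {
      for (i = 1; i <= NF; i++) {
        if ($i ~ /^tunnelendpoint=/) {
          ep = $i
          sub(/^tunnelendpoint=/, "", ep)   # drop the field name
          sub(/,$/, "", ep)                 # drop the trailing comma
          count[ep]++
        }
      }
    }
    END { for (ep in count) printf "%s %d\n", ep, count[ep] }
  ' | sort
}

# Usage (not executed here):
#   kubectl exec "$CILIUMPOD0" -n kube-system -c cilium-agent -- cilium bpf ipcache list | ipcache_by_node
```

Entries with 0.0.0.0 are the ones local to the node (or the world entry); the others carry the address of the node that hosts the remote pod.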

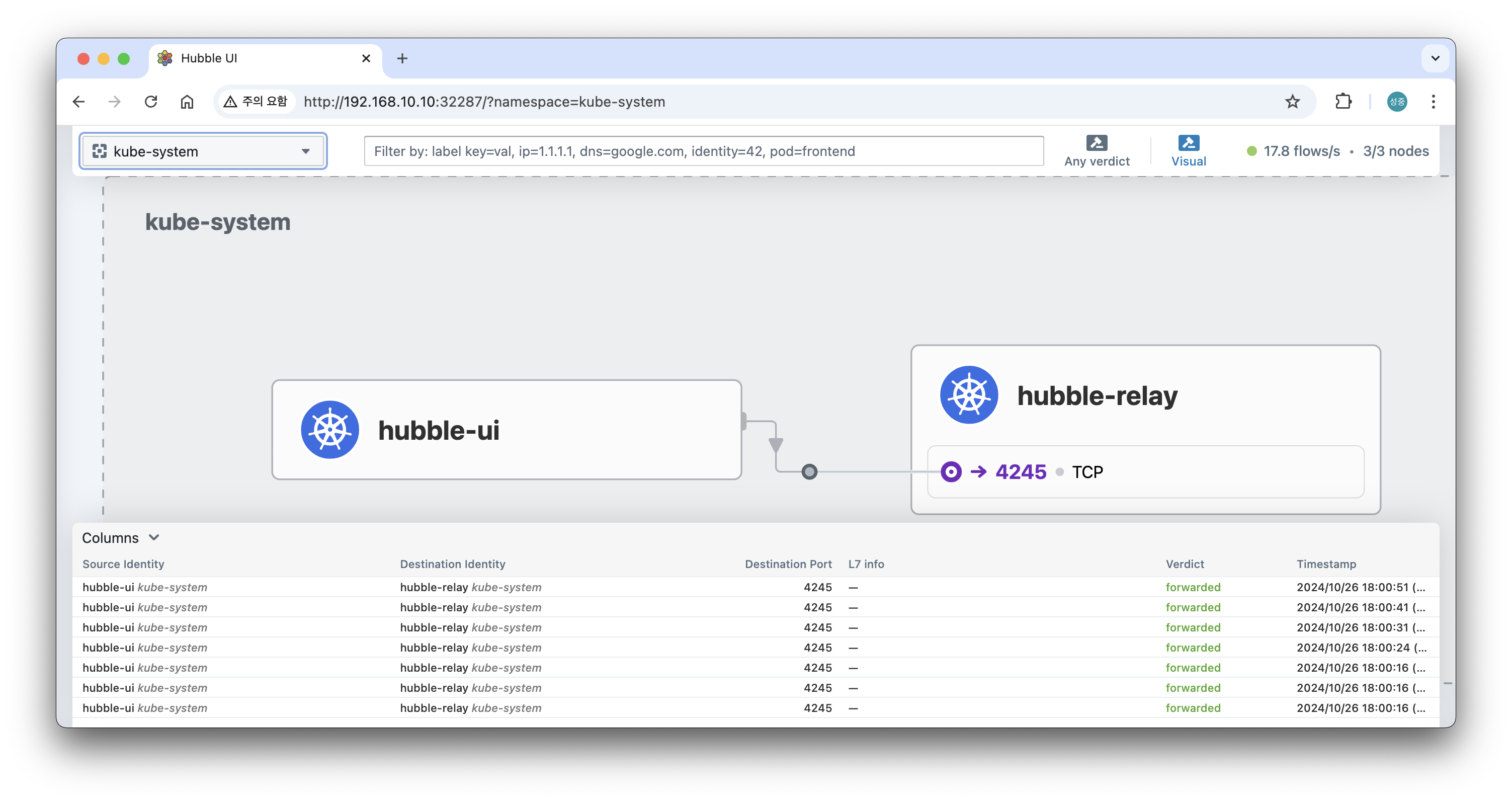

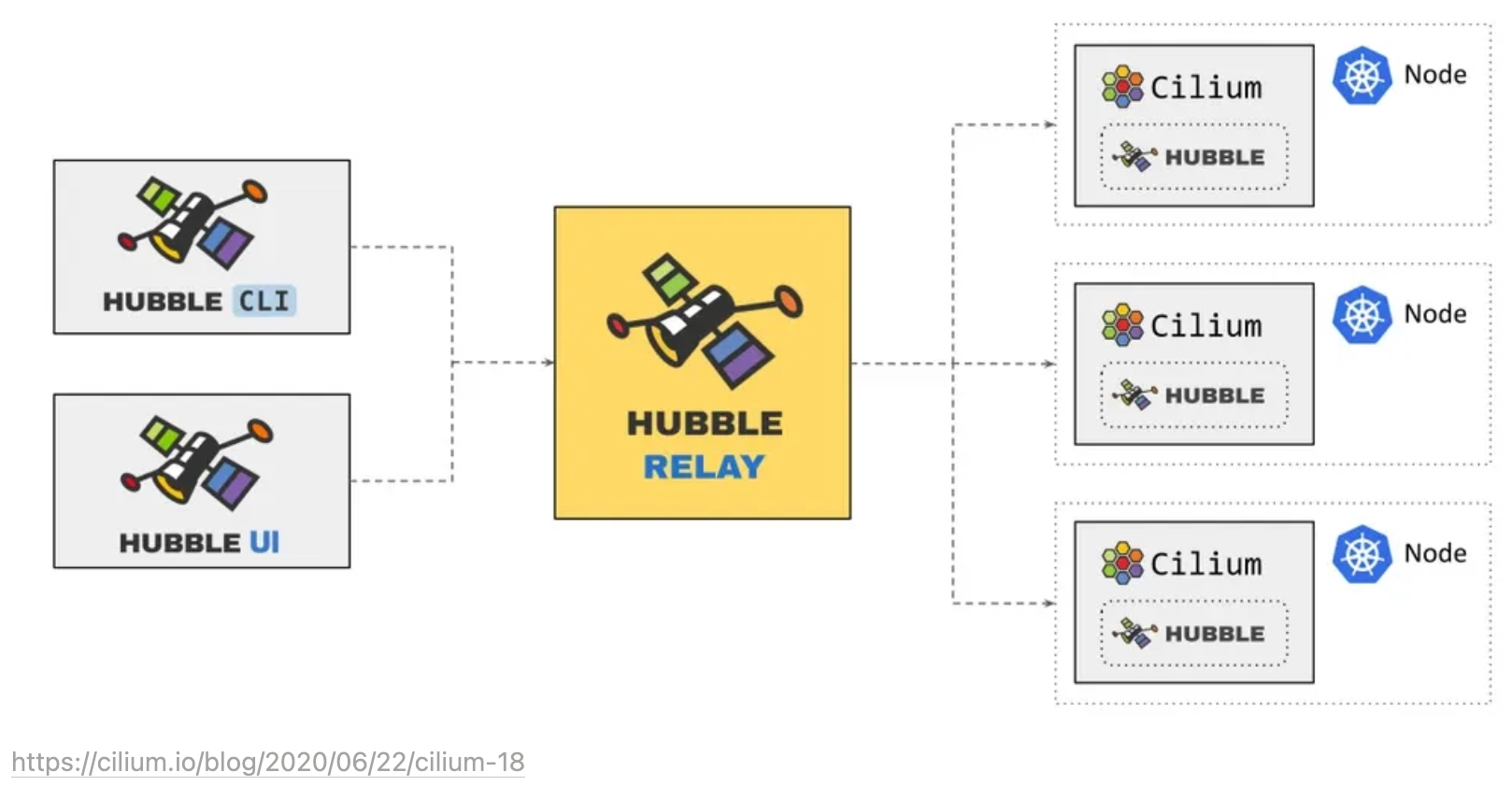

Hubble UI & CLI

-

Hubble provides deep visibility into the communication and behavior of services and the networking infrastructure, in a completely transparent manner.

-

Observability

- Network Observability with Hubble - Link

- Running Prometheus & Grafana - Link

- Monitoring & Metrics - Link

- Layer 7 Protocol Visibility - Link

- Hubble for Network Observability and Security (Part 3): Leveraging Hubble Data for Network Security - Link

- Hubble for Network Observability and Security (Part 2): Utilizing Hubble for Network Observability - Link

- Hubble for Network Observability and Security (Part 1): Introduction to Cilium and Hubble - Link

-

Metrics export via Prometheus: Key metrics are exported via Prometheus for integration with your existing dashboards.

-

Cluster-wide observability with Hubble Relay

-

Service dependency graph

-

Monitoring of various metrics (network, HTTP, DNS, etc.)

-

Policy enforcement examples

- Allow all HTTP requests with method GET and path /public/.*. Deny all other requests.

- Allow service1 to produce on Kafka topic topic1 and service2 to consume on topic1. Reject all other Kafka messages.

- Require the HTTP header X-Token: [0-9]+ to be present in all REST calls.
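The first example in the list above (allow GET on /public/.*, deny everything else) can be written as a CiliumNetworkPolicy with an L7 HTTP rule. The following is a minimal sketch that prints such a manifest; the helper name render_http_get_policy, the app label, and the port are hypothetical placeholders rather than values from this lab.

```shell
#!/usr/bin/env bash
# Sketch only: print a CiliumNetworkPolicy that allows HTTP GET /public/.* to pods
# labelled app=<APP> on TCP port <PORT>. Other HTTP requests to that port are rejected
# by the L7 proxy once this rule selects the endpoint.
# Arguments: APP (required), PORT (optional, defaults to 80). Both are illustrative.
render_http_get_policy() {
  local app=${1:?app label required}
  local port=${2:-80}
  cat <<EOF
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: allow-get-public-${app}
spec:
  endpointSelector:
    matchLabels:
      app: ${app}
  ingress:
  - toPorts:
    - ports:
      - port: "${port}"
        protocol: TCP
      rules:
        http:
        - method: "GET"
          path: "/public/.*"
EOF
}

# Usage (not executed here):
#   render_http_get_policy myservice 80 | kubectl apply -f -
```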

-

Accessing and verifying the Hubble UI/CLI

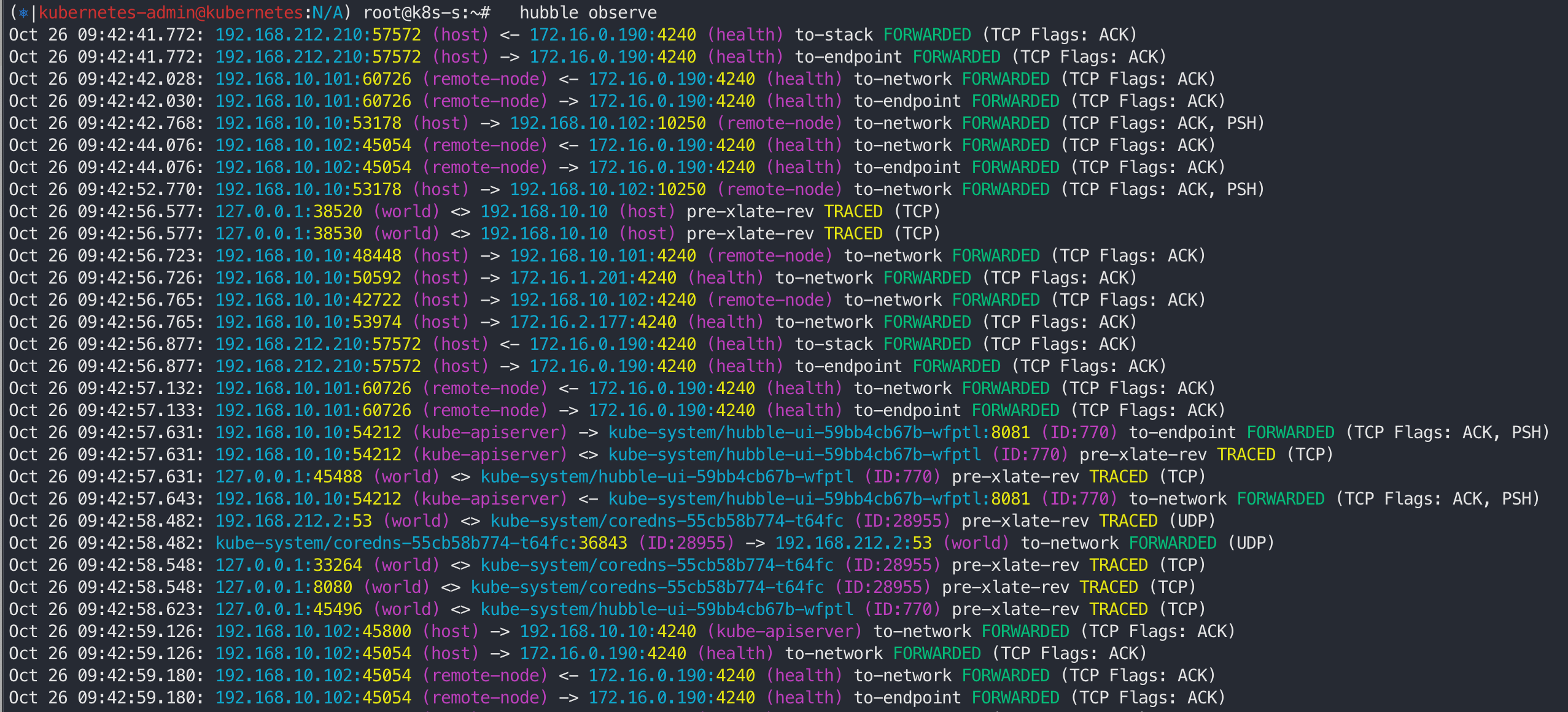

# Verify
cilium status

# Check the UI pod
kubectl get pod -n kube-system -l k8s-app=hubble-ui -o wide

# Access the Hubble UI in a browser
kubectl patch -n kube-system svc hubble-ui -p '{"spec": {"type": "NodePort"}}'
HubbleUiNodePort=$(kubectl get svc -n kube-system hubble-ui -o jsonpath='{.spec.ports[0].nodePort}')
echo -e "Hubble UI URL = http://$(curl -s ipinfo.io/ip):$HubbleUiNodePort"

## After the NodePort Service is created, check the following
iptables -t nat -S
conntrack -L
conntrack -L |grep -v 2379

# Install Hubble Client
HUBBLE_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/hubble/master/stable.txt)
HUBBLE_ARCH=amd64
if [ "$(uname -m)" = "aarch64" ]; then HUBBLE_ARCH=arm64; fi
curl -L --fail --remote-name-all https://github.com/cilium/hubble/releases/download/$HUBBLE_VERSION/hubble-linux-${HUBBLE_ARCH}.tar.gz{,.sha256sum}
sha256sum --check hubble-linux-${HUBBLE_ARCH}.tar.gz.sha256sum
sudo tar xzvfC hubble-linux-${HUBBLE_ARCH}.tar.gz /usr/local/bin
rm hubble-linux-${HUBBLE_ARCH}.tar.gz{,.sha256sum}

# Hubble API access : reached through the Relay on localhost TCP 4245; flows can then be queried with observe
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# cilium hubble port-forward &
[1] 70056

# Check the Hubble API status from the CLI
(⎈|kubernetes-admin@kubernetes:N/A) root@k8s-s:~# hubble status
Healthcheck (via localhost:4245): Ok
Current/Max Flows: 10,284/12,285 (83.71%)
Flows/s: 21.77
Connected Nodes: 3/3

# query the flow API and look for flows
hubble observe
# hubble observe --pod netpod
# hubble observe --namespace galaxy --http-method POST --http-path /v1/request-landing
# hubble observe --pod deathstar --protocol http
# hubble observe --pod deathstar --verdict DROPPED
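To get a quick overview instead of reading individual flows, the JSON output of hubble observe can be summarized per verdict. The following is a rough sketch, assuming each flow is emitted as one JSON object per line containing a "verdict" field (the sample lines in the usage note are simplified and hypothetical); the function name verdict_summary is also hypothetical. It uses only grep, sed, sort, uniq, and awk so that jq is not required.

```shell
#!/usr/bin/env bash
# Sketch only: count flows per verdict from `hubble observe -o json` output on stdin.
# Assumption: every flow line contains a field like "verdict":"FORWARDED".
verdict_summary() {
  grep -Eo '"verdict": *"[A-Z_]+"' |
    sed -E 's/.*"([A-Z_]+)"$/\1/' |
    sort | uniq -c | awk '{ print $2, $1 }'
}

# Usage (not executed here):
#   hubble observe --last 1000 -o json | verdict_summary
```

A high DROPPED count is a hint to follow up with hubble observe --verdict DROPPED as shown in the commands above.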

5. Installing Cilium on EKS instead of vpc-cni

Creating the EKS cluster with eksctl

- Setting up AWS credentials is not covered here.

    ❯ cat <<EOT > eks-cilium.yaml
    apiVersion: eksctl.io/v1alpha5
    kind: ClusterConfig
    metadata:
      name: eks-cilium
      region: ap-northeast-2
    nodeGroups:
    - name: ng-1
      instanceType: t3.medium
      desiredCapacity: 2
      taints:
      - key: "node.cilium.io/agent-not-ready"
        value: "true"
        effect: "NoExecute"
    EOT

❯ eksctl create cluster -f eks-cilium.yaml

2024-10-26 22:07:49 [ℹ] eksctl version 0.194.0

2024-10-26 22:07:49 [ℹ] using region ap-northeast-2

2024-10-26 22:07:49 [ℹ] setting availability zones to [ap-northeast-2d ap-northeast-2c ap-northeast-2b]

2024-10-26 22:07:49 [ℹ] subnets for ap-northeast-2d - public:192.168.0.0/19 private:192.168.96.0/19

2024-10-26 22:07:49 [ℹ] subnets for ap-northeast-2c - public:192.168.32.0/19 private:192.168.128.0/19

2024-10-26 22:07:49 [ℹ] subnets for ap-northeast-2b - public:192.168.64.0/19 private:192.168.160.0/19

2024-10-26 22:07:49 [ℹ] nodegroup "ng-1" will use "ami-0f90afdeb14a3a420" [AmazonLinux2/1.30]

2024-10-26 22:07:49 [ℹ] using Kubernetes version 1.30

2024-10-26 22:07:49 [ℹ] creating EKS cluster "eks-cilium" in "ap-northeast-2" region with un-managed nodes

2024-10-26 22:07:49 [ℹ] 1 nodegroup (ng-1) was included (based on the include/exclude rules)

2024-10-26 22:07:49 [ℹ] will create a CloudFormation stack for cluster itself and 1 nodegroup stack(s)

2024-10-26 22:07:49 [ℹ] will create a CloudFormation stack for cluster itself and 0 managed nodegroup stack(s)

2024-10-26 22:07:49 [ℹ] if you encounter any issues, check CloudFormation console or try 'eksctl utils describe-stacks --region=ap-northeast-2 --cluster=eks-cilium'

2024-10-26 22:07:49 [ℹ] Kubernetes API endpoint access will use default of {publicAccess=true, privateAccess=false} for cluster "eks-cilium" in "ap-northeast-2"

2024-10-26 22:07:49 [ℹ] CloudWatch logging will not be enabled for cluster "eks-cilium" in "ap-northeast-2"

2024-10-26 22:07:49 [ℹ] you can enable it with 'eksctl utils update-cluster-logging --enable-types={SPECIFY-YOUR-LOG-TYPES-HERE (e.g. all)} --region=ap-northeast-2 --cluster=eks-cilium'

2024-10-26 22:07:49 [ℹ] default addons kube-proxy, coredns, vpc-cni were not specified, will install them as EKS addons

    2024-10-26 22:07:49 [ℹ]
    2 sequential tasks: { create cluster control plane "eks-cilium",
        2 sequential sub-tasks: {
            2 sequential sub-tasks: {
                1 task: { create addons },
                wait for control plane to become ready,
            },
            create nodegroup "ng-1",
        }
    }

2024-10-26 22:07:49 [ℹ] building cluster stack "eksctl-eks-cilium-cluster"

2024-10-26 22:07:49 [ℹ] deploying stack "eksctl-eks-cilium-cluster"

2024-10-26 22:08:19 [ℹ] waiting for CloudFormation stack "eksctl-eks-cilium-cluster"

2024-10-26 22:08:49 [ℹ] waiting for CloudFormation stack "eksctl-eks-cilium-cluster"

2024-10-26 22:09:50 [ℹ] waiting for CloudFormation stack "eksctl-eks-cilium-cluster"

2024-10-26 22:10:50 [ℹ] waiting for CloudFormation stack "eksctl-eks-cilium-cluster"

2024-10-26 22:11:50 [ℹ] waiting for CloudFormation stack "eksctl-eks-cilium-cluster"

2024-10-26 22:12:50 [ℹ] waiting for CloudFormation stack "eksctl-eks-cilium-cluster"

2024-10-26 22:13:50 [ℹ] waiting for CloudFormation stack "eksctl-eks-cilium-cluster"

2024-10-26 22:14:50 [ℹ] waiting for CloudFormation stack "eksctl-eks-cilium-cluster"

2024-10-26 22:14:51 [ℹ] creating addon

2024-10-26 22:14:51 [ℹ] successfully created addon

2024-10-26 22:14:52 [ℹ] creating addon

2024-10-26 22:14:52 [ℹ] successfully created addon

2024-10-26 22:14:52 [!] recommended policies were found for "vpc-cni" addon, but since OIDC is disabled on the cluster, eksctl cannot configure the requested permissions; the recommended way to provide IAM permissions for "vpc-cni" addon is via pod identity associations; after addon creation is completed, add all recommended policies to the config file, under `addon.PodIdentityAssociations`, and run `eksctl update addon`

2024-10-26 22:14:52 [ℹ] creating addon

2024-10-26 22:14:53 [ℹ] successfully created addon

2024-10-26 22:16:53 [ℹ] building nodegroup stack "eksctl-eks-cilium-nodegroup-ng-1"

2024-10-26 22:16:53 [ℹ] --nodes-min=2 was set automatically for nodegroup ng-1

2024-10-26 22:16:53 [ℹ] --nodes-max=2 was set automatically for nodegroup ng-1

2024-10-26 22:16:54 [ℹ] deploying stack "eksctl-eks-cilium-nodegroup-ng-1"

2024-10-26 22:16:54 [ℹ] waiting for CloudFormation stack "eksctl-eks-cilium-nodegroup-ng-1"

2024-10-26 22:17:24 [ℹ] waiting for CloudFormation stack "eksctl-eks-cilium-nodegroup-ng-1"

2024-10-26 22:17:56 [ℹ] waiting for CloudFormation stack "eksctl-eks-cilium-nodegroup-ng-1"

2024-10-26 22:19:38 [ℹ] waiting for CloudFormation stack "eksctl-eks-cilium-nodegroup-ng-1"

2024-10-26 22:19:38 [ℹ] waiting for the control plane to become ready

2024-10-26 22:19:38 [✔] saved kubeconfig as "/Users/sjkim/.kube/config"

2024-10-26 22:19:38 [ℹ] no tasks

2024-10-26 22:19:38 [✔] all EKS cluster resources for "eks-cilium" have been created

2024-10-26 22:19:39 [ℹ] nodegroup "ng-1" has 2 node(s)

2024-10-26 22:19:39 [ℹ] node "ip-192-168-2-1.ap-northeast-2.compute.internal" is ready

2024-10-26 22:19:39 [ℹ] node "ip-192-168-55-63.ap-northeast-2.compute.internal" is ready

2024-10-26 22:19:39 [ℹ] waiting for at least 2 node(s) to become ready in "ng-1"

2024-10-26 22:19:39 [ℹ] nodegroup "ng-1" has 2 node(s)

2024-10-26 22:19:39 [ℹ] node "ip-192-168-2-1.ap-northeast-2.compute.internal" is ready

2024-10-26 22:19:39 [ℹ] node "ip-192-168-55-63.ap-northeast-2.compute.internal" is ready

2024-10-26 22:19:39 [✔] created 1 nodegroup(s) in cluster "eks-cilium"

2024-10-26 22:19:39 [✔] created 0 managed nodegroup(s) in cluster "eks-cilium"

2024-10-26 22:19:39 [ℹ] kubectl command should work with "/Users/sjkim/.kube/config", try 'kubectl get nodes'

2024-10-26 22:19:39 [✔] EKS cluster "eks-cilium" in "ap-northeast-2" region is ready

❯ kc cluster-info

Kubernetes control plane is running at https://D47F46C0E0DAE391C41F6460E8DFAA71.gr7.ap-northeast-2.eks.amazonaws.com

CoreDNS is running at https://D47F46C0E0DAE391C41F6460E8DFAA71.gr7.ap-northeast-2.eks.amazonaws.com/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

    ❯ kc get node -o wide
    NAME                                               STATUS   ROLES    AGE     VERSION               INTERNAL-IP     EXTERNAL-IP      OS-IMAGE         KERNEL-VERSION                  CONTAINER-RUNTIME
    ip-192-168-2-1.ap-northeast-2.compute.internal     Ready    <none>   3m45s   v1.30.4-eks-a737599   192.168.2.1     43.203.161.191   Amazon Linux 2   5.10.226-214.880.amzn2.x86_64   containerd://1.7.22
    ip-192-168-55-63.ap-northeast-2.compute.internal   Ready    <none>   3m58s   v1.30.4-eks-a737599   192.168.55.63   15.164.230.248   Amazon Linux 2   5.10.226-214.880.amzn2.x86_64   containerd://1.7.22

❯ kc get pod -o wide -A

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system aws-node-m4xcj 2/2 Running 0 3m55s 192.168.2.1 ip-192-168-2-1.ap-northeast-2.compute.internal <none> <none>

kube-system aws-node-ns8zm 2/2 Running 0 4m8s 192.168.55.63 ip-192-168-55-63.ap-northeast-2.compute.internal <none> <none>

kube-system coredns-5b9dfbf96-lv88s 0/1 Pending 0 7m5s <none> <none> <none> <none>

kube-system coredns-5b9dfbf96-v9c2l 0/1 Pending 0 7m6s <none> <none> <none> <none>

kube-system kube-proxy-758wg 1/1 Running 0 3m55s 192.168.2.1 ip-192-168-2-1.ap-northeast-2.compute.internal <none> <none>

kube-system kube-proxy-pxpgh 1/1 Running 0 4m8s 192.168.55.63 ip-192-168-55-63.ap-northeast-2.compute.internal <none> <none>```
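
The `coredns` pods in the listing stay `Pending` most likely because both nodes still carry the `node.cilium.io/agent-not-ready` taint from the eksctl config, while the `aws-node` and `kube-proxy` DaemonSets tolerate it and run anyway. The helper below is a small sketch (the function name is my own, not part of any tool) that lists the nodes still holding that taint. It should print nothing once the Cilium agent is running on every node and has removed the taint.

```shell
# Hypothetical helper: print the names of nodes that still carry the
# Cilium startup taint. Assumes kubectl is configured for the cluster.
cilium_tainted_nodes() {
  kubectl get nodes \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.taints[*].key}{"\n"}{end}' \
    | awk -F'\t' '$2 ~ /node\.cilium\.io\/agent-not-ready/ { print $1 }'
}

# Usage (against a live cluster):
# cilium_tainted_nodes
```

The taint keeps ordinary pods off a node until Cilium is ready to manage their networking, so workloads do not start under a different CNI first.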

## Create the nginx demo pod and svc

```bash

❯ kubectl expose deployments.apps web --name web-svc --type="LoadBalancer" --port=80

service/web-svc exposed

❯ kubectl get svc -A

NAMESPACE     NAME         TYPE           CLUSTER-IP       EXTERNAL-IP                                                                    PORT(S)                  AGE
default       kubernetes   ClusterIP      10.100.0.1       <none>                                                                         443/TCP                  24m
default       web-svc      LoadBalancer   10.100.131.217   a1dc20c2f46a1423289ad35b4c3ee76f-1657700691.ap-northeast-2.elb.amazonaws.com   80:31231/TCP             13s
kube-system   kube-dns     ClusterIP      10.100.0.10      <none>                                                                         53/UDP,53/TCP,9153/TCP   21m

# Check the node's NAT rules (while aws-node is in use)

[root@ip-192-168-178-222 ~]# iptables -t nat -S

-P PREROUTING ACCEPT

-P INPUT ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N AWS-CONNMARK-CHAIN-0

-N AWS-SNAT-CHAIN-0

-N KUBE-EXT-SRNXP4JNS2EQLOND

-N KUBE-KUBELET-CANARY

-N KUBE-MARK-MASQ

-N KUBE-NODEPORTS

-N KUBE-POSTROUTING

-N KUBE-PROXY-CANARY

-N KUBE-SEP-7GBQHEPOUJDLKLNY

-N KUBE-SEP-AXSRWV772VKSZHXC

-N KUBE-SEP-BOQZMUQGPD7WJ57D

-N KUBE-SEP-BRETL7OU7HEP264I

-N KUBE-SEP-CAEEGXVVWZ5RHPS5

-N KUBE-SEP-H7K4HLLDTE4VEEKF

-N KUBE-SEP-HHNNHAA274ESOYMK

-N KUBE-SEP-OYC5DLCVWJ77JK3J

-N KUBE-SEP-QNIGYUFXRPLJXBBP

-N KUBE-SEP-TDJUWXWYTTO7FOJP

-N KUBE-SEP-TJJ6CU5GT3UBV3IJ

-N KUBE-SERVICES

-N KUBE-SVC-ERIFXISQEP7F7OF4

-N KUBE-SVC-JD5MR3NA4I4DYORP

-N KUBE-SVC-NPX46M4PTMTKRN6Y

-N KUBE-SVC-SRNXP4JNS2EQLOND

-N KUBE-SVC-TCOU7JCQXEZGVUNU

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A PREROUTING -i eni+ -m comment --comment "AWS, outbound connections" -j AWS-CONNMARK-CHAIN-0

-A PREROUTING -m comment --comment "AWS, CONNMARK" -j CONNMARK --restore-mark --nfmask 0x80 --ctmask 0x80

-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -m comment --comment "AWS SNAT CHAIN" -j AWS-SNAT-CHAIN-0

-A AWS-CONNMARK-CHAIN-0 -d 192.168.0.0/16 -m comment --comment "AWS CONNMARK CHAIN, VPC CIDR" -j RETURN

-A AWS-CONNMARK-CHAIN-0 -m comment --comment "AWS, CONNMARK" -j CONNMARK --set-xmark 0x80/0x80

-A AWS-SNAT-CHAIN-0 -d 192.168.0.0/16 -m comment --comment "AWS SNAT CHAIN" -j RETURN

-A AWS-SNAT-CHAIN-0 ! -o vlan+ -m comment --comment "AWS, SNAT" -m addrtype ! --dst-type LOCAL -j SNAT --to-source 192.168.178.222 --random-fully

-A KUBE-EXT-SRNXP4JNS2EQLOND -m comment --comment "masquerade traffic for default/web-svc external destinations" -j KUBE-MARK-MASQ

-A KUBE-EXT-SRNXP4JNS2EQLOND -j KUBE-SVC-SRNXP4JNS2EQLOND

-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000

-A KUBE-NODEPORTS -p tcp -m comment --comment "default/web-svc" -m tcp --dport 31231 -j KUBE-EXT-SRNXP4JNS2EQLOND

-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN

-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully

-A KUBE-SEP-7GBQHEPOUJDLKLNY -s 192.168.171.111/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-7GBQHEPOUJDLKLNY -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 192.168.171.111:443

-A KUBE-SEP-AXSRWV772VKSZHXC -s 192.168.130.128/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-AXSRWV772VKSZHXC -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 192.168.130.128:9153

-A KUBE-SEP-BOQZMUQGPD7WJ57D -s 192.168.151.169/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-BOQZMUQGPD7WJ57D -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 192.168.151.169:53

-A KUBE-SEP-BRETL7OU7HEP264I -s 192.168.125.148/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-BRETL7OU7HEP264I -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 192.168.125.148:443

-A KUBE-SEP-CAEEGXVVWZ5RHPS5 -s 192.168.159.193/32 -m comment --comment "default/web-svc" -j KUBE-MARK-MASQ

-A KUBE-SEP-CAEEGXVVWZ5RHPS5 -p tcp -m comment --comment "default/web-svc" -m tcp -j DNAT --to-destination 192.168.159.193:80

-A KUBE-SEP-H7K4HLLDTE4VEEKF -s 192.168.151.169/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-H7K4HLLDTE4VEEKF -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 192.168.151.169:9153

-A KUBE-SEP-HHNNHAA274ESOYMK -s 192.168.190.218/32 -m comment --comment "default/web-svc" -j KUBE-MARK-MASQ

-A KUBE-SEP-HHNNHAA274ESOYMK -p tcp -m comment --comment "default/web-svc" -m tcp -j DNAT --to-destination 192.168.190.218:80

-A KUBE-SEP-OYC5DLCVWJ77JK3J -s 192.168.130.128/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-OYC5DLCVWJ77JK3J -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 192.168.130.128:53

-A KUBE-SEP-QNIGYUFXRPLJXBBP -s 192.168.130.128/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ

-A KUBE-SEP-QNIGYUFXRPLJXBBP -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 192.168.130.128:53

-A KUBE-SEP-TDJUWXWYTTO7FOJP -s 192.168.122.11/32 -m comment --comment "default/web-svc" -j KUBE-MARK-MASQ

-A KUBE-SEP-TDJUWXWYTTO7FOJP -p tcp -m comment --comment "default/web-svc" -m tcp -j DNAT --to-destination 192.168.122.11:80

-A KUBE-SEP-TJJ6CU5GT3UBV3IJ -s 192.168.151.169/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ