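This post is part of the hands-on Terraform T101 study group (4th cohort) led by gasida (가시다).

It draws on the book '테라폼으로 시작하는 IaC' and collects notes that I found useful during the study.

For week 3, I needed to upgrade Amazon MSK at work, so I wrote Terraform code that creates a VPC and an MSK cluster.

Considerations when writing the code

- Modularized the code with readability, reusability, resource creation frequency, and cross-resource impact in mind

- Stored the tfstate file in a versioned S3 bucket instead of locally so that it is managed safely

- Used DynamoDB for state locking to support shared development and maintenance

- Used variables, locals, data sources, loops (count, for_each), functions, and so on as a review of what was covered in weeks 1 to 3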
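
As a small illustration of the count / for_each item above, here is a minimal sketch of how the DynamoDB lock tables could be keyed by stage name with for_each. It is an alternative under stated assumptions, not the code used in this post (which uses count): the variable type `set(string)` and the output name `dynamodb_table_names` are hypothetical, and the table name prefix simply reuses the dev-t101-sjkim naming from this post.

```hcl
# Hypothetical variable: the set of stages that each need a lock table.
variable "stages" {
  type    = set(string)
  default = ["net", "backing"]
}

# for_each addresses each table by stage name (e.g. terraform_locks["net"]),
# so removing a stage does not shift the addresses of the remaining tables,
# which can happen with count and a list index.
resource "aws_dynamodb_table" "terraform_locks" {
  for_each = var.stages

  name         = "ddb-dev-t101-sjkim-terraform_locks_${each.key}"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}

# Hypothetical output: a map of stage name => table name.
output "dynamodb_table_names" {
  value = { for stage, table in aws_dynamodb_table.terraform_locks : stage => table.name }
}
```

With this shape, a single map output can replace one output per stage, at the cost of changing how downstream scripts read the table names.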

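This post also shares a problem that occurred when upgrading the Terraform-created Amazon MSK cluster from v2.8.1 to v3.5.1 through Terraform.

Preparing the lab environment

- Terminal setup

# When using (managing) multiple AWS accounts, a named profile is recommended for credentials (e.g., t101)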

$ aws configure --profile t101

AWS Access Key ID [None]: AKIA******DWVHK27PF2

AWS Secret Access Key [None]: fRQbW******KP

Default region name [None]: ap-northeast-2

Default output format [None]: json

# Pass the --profile <profile name> option when running aws cli commands

$ aws sts get-caller-identity --profile=t101

{

"UserId": "AKIA******DWVHK27PF2",

"Account": "5**********7",

"Arn":"arn:aws:iam::5**********7:user/terraform"

}

# Set the AWS_PROFILE environment variable to t101 to avoid STS-related errors

$ export AWS_PROFILE=t101

Terraform module hierarchy

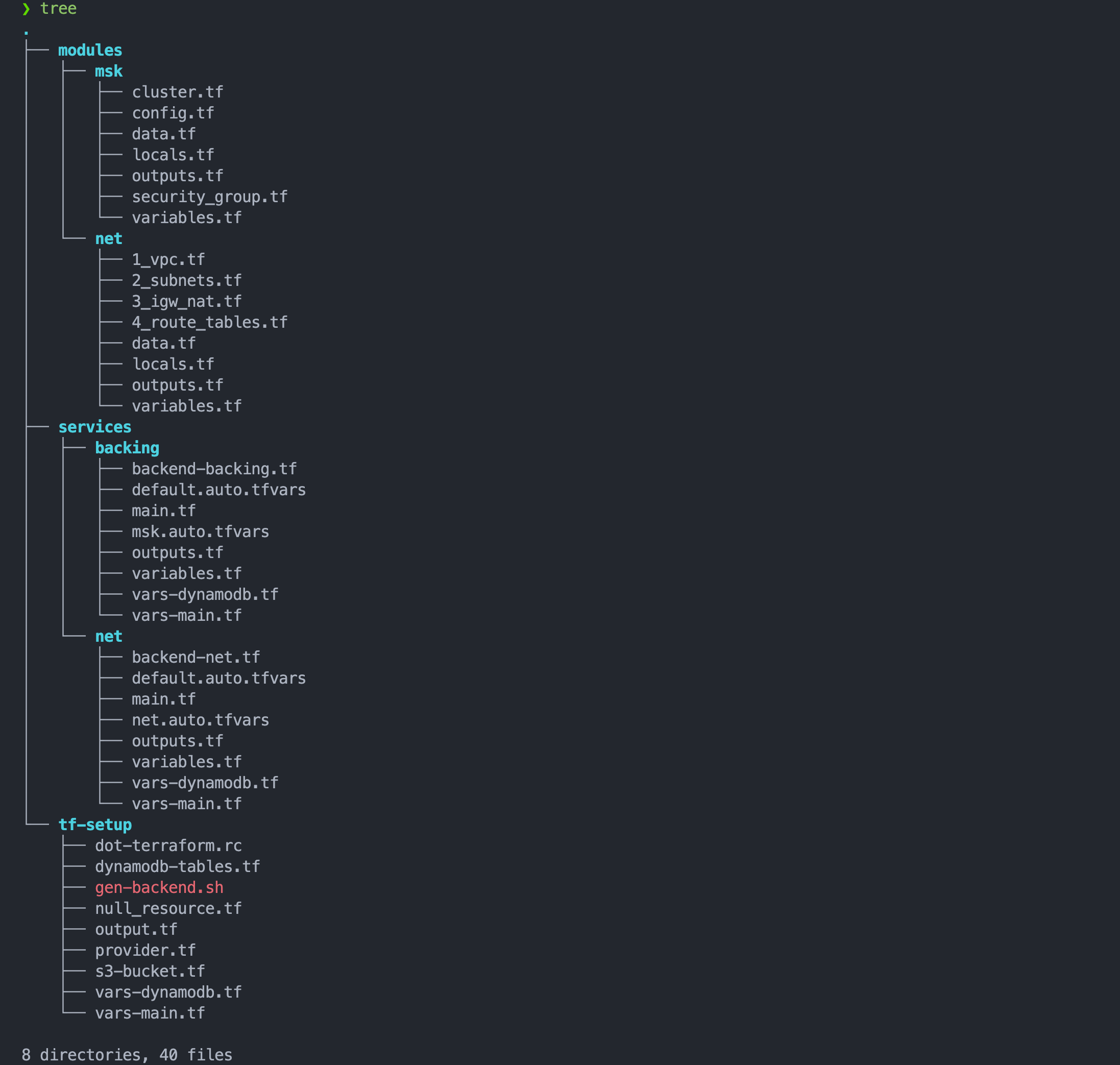

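- 1 tf-setup : creates the S3 bucket and DynamoDB tables, then copies the backend configuration into net and backing under services

- 2.1 services > net : requests creation of the vpc, subnets, igw, nat, and route tables

- 2.2 modules > net : performs creation of the vpc, subnets, igw, nat, and route tables

- 3.1 services > backing : requests creation of MSK

- 3.2 modules > backing : performs creation of MSK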

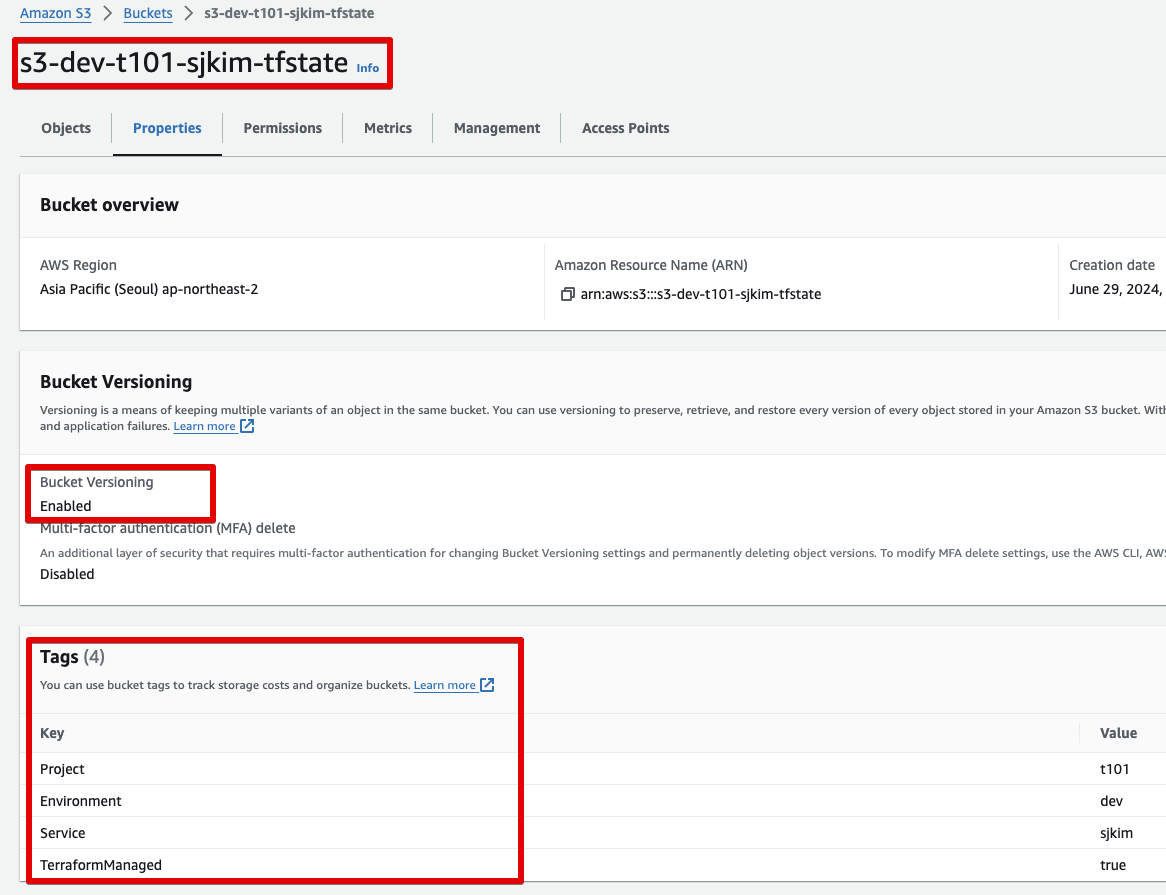

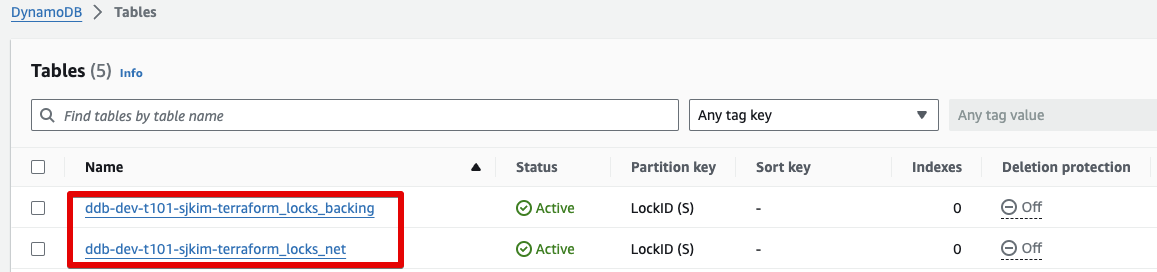

s3 생성 & tfstate 저장, DynamoDB Lock Table 생성 (tf-setup)

tfstate 원격 보관을 위한 S3 버킷 & DynamoDB 생성

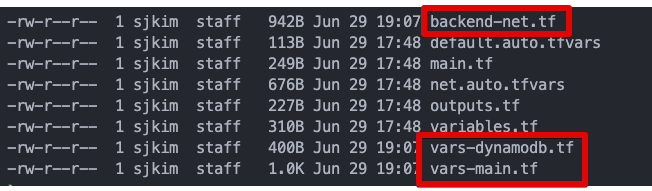

Provider & Backend(backend-net.tf, backend-backing.tf), vars-main.tf, vars-dynamodb.tf을 net과 backing 디렉토리로 복사

1.1 Terraform 코드

- provier.tf

terraform {

required_version = "1.8.5"

required_providers {

aws = {

source = "hashicorp/aws"

# Lock version to prevent unexpected problems

version = "5.56.0"

}

null = {

source = "hashicorp/null"

version = "~> 3.2.2"

}

external = {

source = "hashicorp/external"

version = "~> 2.3.3"

}

}

}

provider "aws" {

region = var.region

shared_credentials_files = ["~/.aws/credentials"]

profile = var.profile

default_tags {

tags = {

Environment = var.env

Project = var.pjt

Service = var.svc

TerraformManaged = true

}

}

}

provider "null" {}

provider "external" {}

- vars-main.tf

variable "profile" {

description = "The name of the AWS profile in the credentials file"

type = string

default = "t101"

}

variable "region" {

description = "The name of the AWS Default Region"

type = string

default = "ap-northeast-2"

}

variable "stages" {

type = list(string)

default = ["net", "backing"]

}

variable "stagecount" {

type = number

default = 2

}

# default tag

variable "env" {

default = "dev"

}

variable "pjt" {

default = "t101"

}

variable "svc" {

default = "sjkim"

}

variable "tags" {

description = "Default tags attached to all resources."

type = map(string)

default = {

Environment = "dev",

Project = "t101",

Service = "sjkim",

TerraformManaged = "true"

}

}

variable "s3-tfsetate" {

default = "s3-dev-t101-sjkim-tfstate"

}
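
The env, pjt, and svc variables above are declared without a type or description. As a minimal sketch (not part of the original code), a typed declaration with a validation block could replace the existing env variable to catch typos early. The allowed values dev / stg / prd are an assumption based on the environments mentioned in the comments of the net module.

```hcl
# Sketch of a stricter replacement for the existing "env" variable.
# The allowed list below is an assumption, not taken from the original code.
variable "env" {
  description = "Deployment environment used in resource names and default tags"
  type        = string
  default     = "dev"

  validation {
    condition     = contains(["dev", "stg", "prd"], var.env)
    error_message = "env must be one of: dev, stg, prd."
  }
}
```

Because several resources switch behavior on `var.env != "dev"`, rejecting unexpected values at plan time avoids silently creating the multi-zone variant.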

- vars-dynamodb.tf

variable "table_name_net" {

description = "The name of the DynamoDB table. Must be unique in this AWS account."

type = string

default = "ddb-dev-t101-sjkim-terraform_locks_net"

}

variable "table_name_backing" {

description = "The name of the DynamoDB table. Must be unique in this AWS account."

type = string

default = "ddb-dev-t101-sjkim-terraform_locks_backing"

}

- s3-bucket.tf

resource "aws_s3_bucket" "terraform_state" {

bucket = var.s3-tfsetate

# This is only here so we can destroy the bucket as part of automated tests.

# You should not copy this for production usage

force_destroy = true

lifecycle {

ignore_changes = [bucket]

}

}

resource "aws_s3_bucket_versioning" "terraform_state_versioning" {

bucket = aws_s3_bucket.terraform_state.id

versioning_configuration {

status = "Enabled"

}

}

resource "aws_s3_bucket_server_side_encryption_configuration" "terraform_state_sse" {

bucket = aws_s3_bucket.terraform_state.id

rule {

apply_server_side_encryption_by_default {

sse_algorithm = "AES256"

}

}

}

resource "aws_s3_bucket_public_access_block" "terraform_state_versioning_pab" {

bucket = aws_s3_bucket.terraform_state.id

block_public_acls = true

block_public_policy = true

ignore_public_acls = true

restrict_public_buckets = true

}

- dynamodb-tables.tf

resource "aws_dynamodb_table" "terraform_locks" {

count = var.stagecount

depends_on = [aws_s3_bucket.terraform_state]

name = format("ddb-%s-%s-%s-terraform_locks_%s", var.env, var.pjt, var.svc, var.stages[count.index])

billing_mode = "PAY_PER_REQUEST"

hash_key = "LockID"

attribute {

name = "LockID"

type = "S"

}

}

- null_resource.tf

resource "null_resource" "sleep" {

triggers = {

always_run = timestamp()

}

depends_on = [aws_dynamodb_table.terraform_locks]

provisioner "local-exec" {

when = create

command = "sleep 5"

}

}

resource "null_resource" "gen_backend" {

triggers = {

always_run = timestamp()

}

depends_on = [null_resource.sleep]

provisioner "local-exec" {

when = create

command = "./gen-backend.sh"

}

}

- gen-backend.sh

#!/bin/bash

cp dot-terraform.rc $HOME/.terraformrc

d=`pwd`

sleep 2

reg=`terraform output -json region | jq -r .[]`

if [[ -z ${reg} ]] ; then

echo "no terraform output variables - exiting ....."

echo "run terraform init/plan/apply in the init directory first"

exit

else

echo "region=${reg}"

fi

mkdir -p $HOME/.terraform.d/plugin-cache

mkdir -p generated

SECTIONS=('net' 'backing' 'packer-ami' 'app')

for section in "${SECTIONS[@]}"

do

tabn=`terraform output dynamodb_table_name_$section | tr -d '"'`

s3b=`terraform output -json s3_bucket | jq -r .[]`

echo $s3b $tabn

cd $d

of=`echo "generated/backend-${section}.tf"`

vf=`echo "generated/vars-${section}.tf"`

# write out the backend config

printf "" > $of

printf "terraform {\n" >> $of

printf " required_version = \"~> 1.8.5\"\n" >> $of

printf " required_providers {\n" >> $of

printf " aws = {\n" >> $of

printf " source = \"hashicorp/aws\"\n" >> $of

printf " version = \"~> 5.56\"\n" >> $of

printf " }\n\n" >> $of

printf " null = {\n" >> $of

printf " source = \"hashicorp/null\"\n" >> $of

printf " version = \"~> 3.2.2\"\n" >> $of

printf " }\n\n" >> $of

printf " }\n\n" >> $of

printf " backend \"s3\" {\n" >> $of

printf " bucket = \"%s\"\n" $s3b >> $of

printf " key = \"terraform/%s.tfstate\"\n" $tabn >> $of

printf " region = \"%s\"\n" $reg >> $of

printf " dynamodb_table = \"%s\"\n" $tabn >> $of

printf " encrypt = \"true\"\n" >> $of

printf " }\n" >> $of

printf "}\n\n" >> $of

## Provider

printf "provider \"aws\" {\n" >> $of

printf " region = var.region\n" >> $of

printf " shared_credentials_files = [\"~/.aws/credentials\"]\n" >> $of

printf " profile = var.profile\n" >> $of

printf " \n" >> $of

printf " default_tags {\n" >> $of

printf " tags = {\n" >> $of

printf " Environment = var.env\n" >> $of

printf " Project = var.pjt\n" >> $of

printf " Service = var.svc\n" >> $of

printf " TerraformManaged = true\n" >> $of

printf " }\n" >> $of

printf " }\n" >> $of

printf "}\n" >> $of

# make directory

mkdir -p ../services/$section

# copy the files into place

cp -v $of ../services/$section

cp -v vars-main.tf ../services/$section

done

# next generate the remote_state config files

cd $d

echo "**** REMOTE ****"

RSECTIONS=('net' 'backing' 'packer-ami' 'app')

for section in "${RSECTIONS[@]}"

do

tabn=`terraform output dynamodb_table_name_$section | tr -d '"'`

s3b=`terraform output -json s3_bucket | jq -r .[]`

echo $s3b $tabn

of=`echo "generated/remote-${section}.tf"`

printf "" > $of

# write out the remote_state terraform files

printf "data terraform_remote_state \"%s\" {\n" $section>> $of

printf " backend = \"s3\"\n" >> $of

printf " config = {\n" >> $of

printf " bucket = \"%s\"\n" $s3b >> $of

printf " region = var.region\n" >> $of

printf " key = \"terraform/%s.tfstate\"\n" $tabn >> $of

printf " }\n" >> $of

printf "}\n" >> $of

done

echo "remotes"

# put in place remote state access where required

cp -v generated/remote-net.tf ../services/backing

cp -v generated/remote-net.tf ../services/packer-ami

cp -v generated/remote-net.tf ../services/app

# Prepare "local state" for the sample app and extra activities

- output.tf

output "dynamodb_table_name_net" {

value = aws_dynamodb_table.terraform_locks[0].name

description = "The name of the DynamoDB table"

}

output "dynamodb_table_name_backing" {

value = aws_dynamodb_table.terraform_locks[1].name

description = "The name of the DynamoDB table"

}

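# NOTE: the two outputs below index terraform_locks[2] and [3], which exist only when
# var.stages / var.stagecount also cover packer-ami and app (the defaults create two tables).

output "dynamodb_table_name_packer-ami" {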

value = aws_dynamodb_table.terraform_locks[2].name

description = "The name of the DynamoDB table"

}

output "dynamodb_table_name_app" {

value = aws_dynamodb_table.terraform_locks[3].name

description = "The name of the DynamoDB table"

}

output "region" {

value = aws_s3_bucket.terraform_state[*].region

description = "The name of the region"

}

output "s3_bucket" {

value = aws_s3_bucket.terraform_state[*].bucket

description = "The name of the S3 bucket"

}

1.2 Running the code

- $ terraform init && terraform validate && terraform fmt

Initializing the backend...

Initializing provider plugins...

- Reusing previous version of hashicorp/external from the dependency lock file

- Reusing previous version of hashicorp/aws from the dependency lock file

- Reusing previous version of hashicorp/null from the dependency lock file

- Using previously-installed hashicorp/external v2.3.3

- Using previously-installed hashicorp/aws v5.56.0

- Using previously-installed hashicorp/null v3.2.2

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

Success! The configuration is valid.

- $ terraform plan -out tfplan

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# aws_dynamodb_table.terraform_locks[0] will be created

+ resource "aws_dynamodb_table" "terraform_locks" {

+ arn = (known after apply)

+ billing_mode = "PAY_PER_REQUEST"

+ hash_key = "LockID"

+ id = (known after apply)

+ name = "ddb-dev-t101-sjkim-terraform_locks_net"

+ read_capacity = (known after apply)

+ stream_arn = (known after apply)

+ stream_label = (known after apply)

+ stream_view_type = (known after apply)

+ tags_all = {

+ "Environment" = "dev"

+ "Project" = "t101"

+ "Service" = "sjkim"

+ "TerraformManaged" = "true"

}

+ write_capacity = (known after apply)

+ attribute {

+ name = "LockID"

+ type = "S"

}

}

# aws_dynamodb_table.terraform_locks[1] will be created

...

# aws_s3_bucket.terraform_state will be created

+ resource "aws_s3_bucket" "terraform_state" {

+ acceleration_status = (known after apply)

+ acl = (known after apply)

+ arn = (known after apply)

+ bucket = "s3-dev-t101-sjkim-tfstate"

+ bucket_domain_name = (known after apply)

+ bucket_prefix = (known after apply)

+ bucket_regional_domain_name = (known after apply)

+ force_destroy = true

+ hosted_zone_id = (known after apply)

+ id = (known after apply)

+ object_lock_enabled = (known after apply)

+ policy = (known after apply)

+ region = (known after apply)

+ request_payer = (known after apply)

+ tags_all = {

+ "Environment" = "dev"

+ "Project" = "t101"

+ "Service" = "sjkim"

+ "TerraformManaged" = "true"

}

+ website_domain = (known after apply)

+ website_endpoint = (known after apply)

}

# aws_s3_bucket_public_access_block.terraform_state_versioning_pab will be created

+ resource "aws_s3_bucket_public_access_block" "terraform_state_versioning_pab" {

+ block_public_acls = true

+ block_public_policy = true

+ bucket = (known after apply)

+ id = (known after apply)

+ ignore_public_acls = true

+ restrict_public_buckets = true

}

# aws_s3_bucket_server_side_encryption_configuration.terraform_state_sse will be created

+ resource "aws_s3_bucket_server_side_encryption_configuration" "terraform_state_sse" {

+ bucket = (known after apply)

+ id = (known after apply)

+ rule {

+ apply_server_side_encryption_by_default {

+ sse_algorithm = "AES256"

# (1 unchanged attribute hidden)

}

}

}

# aws_s3_bucket_versioning.terraform_state_versioning will be created

+ resource "aws_s3_bucket_versioning" "terraform_state_versioning" {

+ bucket = (known after apply)

+ id = (known after apply)

+ versioning_configuration {

+ mfa_delete = (known after apply)

+ status = "Enabled"

}

}

# null_resource.gen_backend will be created

+ resource "null_resource" "gen_backend" {

+ id = (known after apply)

+ triggers = {

+ "always_run" = (known after apply)

}

}

# null_resource.sleep will be created

+ resource "null_resource" "sleep" {

+ id = (known after apply)

+ triggers = {

+ "always_run" = (known after apply)

}

}

Plan: 8 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ dynamodb_table_name_backing = "ddb-dev-t101-sjkim-terraform_locks_backing"

+ dynamodb_table_name_net = "ddb-dev-t101-sjkim-terraform_locks_net"

+ region = [

+ (known after apply),

]

+ s3_bucket = [

+ "s3-dev-t101-sjkim-tfstate",

]

────────────────────────────────────────────────────────────────────────

Saved the plan to: tfplan

To perform exactly these actions, run the following command to apply:

terraform apply "tfplan"

- $ terraform apply tfplan

aws_s3_bucket.terraform_state: Creating...

aws_s3_bucket.terraform_state: Creation complete after 2s [id=s3-dev-t101-sjkim-tfstate]

aws_s3_bucket_public_access_block.terraform_state_versioning_pab: Creating...

aws_s3_bucket_server_side_encryption_configuration.terraform_state_sse: Creating...

aws_s3_bucket_versioning.terraform_state_versioning: Creating...

aws_dynamodb_table.terraform_locks[1]: Creating...

aws_dynamodb_table.terraform_locks[0]: Creating...

aws_s3_bucket_public_access_block.terraform_state_versioning_pab: Creation complete after 0s [id=s3-dev-t101-sjkim-tfstate]

aws_s3_bucket_server_side_encryption_configuration.terraform_state_sse: Creation complete after 1s [id=s3-dev-t101-sjkim-tfstate]

aws_s3_bucket_versioning.terraform_state_versioning: Creation complete after 2s [id=s3-dev-t101-sjkim-tfstate]

aws_dynamodb_table.terraform_locks[1]: Creation complete after 6s [id=ddb-dev-t101-sjkim-terraform_locks_backing]

aws_dynamodb_table.terraform_locks[0]: Still creating... [10s elapsed]

aws_dynamodb_table.terraform_locks[0]: Creation complete after 13s [id=ddb-dev-t101-sjkim-terraform_locks_net]

null_resource.sleep: Creating...

null_resource.sleep: Provisioning with 'local-exec'...

null_resource.sleep (local-exec): Executing: ["/bin/sh" "-c" "sleep 5"]

null_resource.sleep: Creation complete after 5s [id=2893517309727500101]

null_resource.gen_backend: Creating...

null_resource.gen_backend: Provisioning with 'local-exec'...

null_resource.gen_backend (local-exec): Executing: ["/bin/sh" "-c" "./gen-backend.sh"]

null_resource.gen_backend (local-exec): region=ap-northeast-2

null_resource.gen_backend (local-exec): s3-dev-t101-sjkim-tfstate ddb-dev-t101-sjkim-terraform_locks_net

null_resource.gen_backend (local-exec): generated/backend-net.tf -> ../services/net/backend-net.tf

null_resource.gen_backend (local-exec): vars-dynamodb.tf -> ../services/net/vars-dynamodb.tf

null_resource.gen_backend (local-exec): vars-main.tf -> ../services/net/vars-main.tf

null_resource.gen_backend (local-exec): s3-dev-t101-sjkim-tfstate ddb-dev-t101-sjkim-terraform_locks_backing

null_resource.gen_backend (local-exec): generated/backend-backing.tf -> ../services/backing/backend-backing.tf

null_resource.gen_backend (local-exec): vars-dynamodb.tf -> ../services/backing/vars-dynamodb.tf

null_resource.gen_backend (local-exec): vars-main.tf -> ../services/backing/vars-main.tf

null_resource.gen_backend (local-exec): **** REMOTE ****

null_resource.gen_backend (local-exec): s3-dev-t101-sjkim-tfstate ddb-dev-t101-sjkim-terraform_locks_net

null_resource.gen_backend (local-exec): s3-dev-t101-sjkim-tfstate ddb-dev-t101-sjkim-terraform_locks_backing

null_resource.gen_backend (local-exec): remotes

null_resource.gen_backend (local-exec): generated/remote-net.tf -> ../services/backing/remote-net.tf

null_resource.gen_backend (local-exec): Copy vars/aws.tf

null_resource.gen_backend: Creation complete after 5s [id=1579870088706299488]

Apply complete! Resources: 8 added, 0 changed, 0 destroyed.

Outputs:

dynamodb_table_name_backing = "ddb-dev-t101-sjkim-terraform_locks_backing"

dynamodb_table_name_net = "ddb-dev-t101-sjkim-terraform_locks_net"

region = [

"ap-northeast-2",

]

s3_bucket = [

"s3-dev-t101-sjkim-tfstate",

]

1.3 Results

- $ terraform output

dynamodb_table_name_backing = "ddb-dev-t101-sjkim-terraform_locks_backing"

dynamodb_table_name_net = "ddb-dev-t101-sjkim-terraform_locks_net"

region = [

"ap-northeast-2",

]

s3_bucket = [

"s3-dev-t101-sjkim-tfstate",

]

- $ terraform state list

aws_dynamodb_table.terraform_locks[0]

aws_dynamodb_table.terraform_locks[1]

aws_s3_bucket.terraform_state

aws_s3_bucket_public_access_block.terraform_state_versioning_pab

aws_s3_bucket_server_side_encryption_configuration.terraform_state_sse

aws_s3_bucket_versioning.terraform_state_versioning

null_resource.gen_backend

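null_resource.sleep

- S3 bucket created

Bucket name follows the Env-Project-Service format

Bucket versioning enabled

Default tags applied; the TerraformManaged tag key makes it easy to tell which resources are managed by Terraform

- DynamoDB lock tables created

- Three files copied into services > net and services > backing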
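
To show why remote-net.tf is copied into services/backing, here is a minimal sketch of how the backing stage could read the outputs published by the net stage. The data block mirrors what gen-backend.sh generates (with the region written literally for brevity); the locals `vpc_id` and `private_subnet_ids` are hypothetical names, and the sketch assumes the net stage publishes `vpc_id` and `private_subnet_id` outputs.

```hcl
# Read the net stage's state from the shared S3 bucket.
data "terraform_remote_state" "net" {
  backend = "s3"

  config = {
    bucket = "s3-dev-t101-sjkim-tfstate"
    region = "ap-northeast-2"
    key    = "terraform/ddb-dev-t101-sjkim-terraform_locks_net.tfstate"
  }
}

# Hypothetical locals: pick up values exported by services/net.
locals {
  vpc_id = data.terraform_remote_state.net.outputs.vpc_id

  # private_subnet_id is assumed to be a map (index => subnet id),
  # so values() turns it into a plain list of ids.
  private_subnet_ids = values(data.terraform_remote_state.net.outputs.private_subnet_id)
}
```

Only root-module outputs of the net stage are visible this way, so anything the backing stage needs must be declared as an output in services/net.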

VPC setup (net)

2.1.1 Terraform code (services/net)

- backend-net.tf

Configures S3 as the backend store for the state

terraform {

required_version = "~> 1.8.5"

required_providers {

aws = {

source = "hashicorp/aws"

# Lock version to avoid unexpected problems

version = "~> 5.56"

}

null = {

source = "hashicorp/null"

version = "~> 3.2.2"

}

}

backend "s3" {

bucket = "s3-dev-t101-sjkim-tfstate"

key = "terraform/ddb-dev-t101-sjkim-terraform_locks_net.tfstate"

region = "ap-northeast-2"

dynamodb_table = "ddb-dev-t101-sjkim-terraform_locks_net"

encrypt = "true"

}

}

provider "aws" {

region = var.region

shared_credentials_files = ["~/.aws/credentials"]

profile = var.profile

default_tags {

tags = {

Environment = var.env

Project = var.pjt

Service = var.svc

TerraformManaged = true

}

}

}

- main.tf

module "vpc" {

source = "../../modules/net"

vpc_cidr = var.vpc_cidr

env = var.env

pjt = var.pjt

svc = var.svc

public_subnets = var.public_subnets

private_subnets = var.private_subnets

zones = var.zones

}

- variables.tf

variable "zones" {

type = list(string)

}

# vpc

variable "vpc_cidr" {}

# subnets

variable "public_subnets" {

type = list(object({

name = string

zone = string

cidr = string

}))

}

variable "private_subnets" {

type = list(object({

name = string

zone = string

cidr = string

}))

}

- default.auto.tfvars

region = "ap-northeast-2"

zones = ["ap-northeast-2a", "ap-northeast-2c"]

env = "dev"

pjt = "t101"

svc = "sjkim"

- net.auto.tfvars

# vpc cidr

vpc_cidr = "172.16.0.0/23"

# public subnets

public_subnets = [

{

name = "pub-az2a"

zone = "ap-northeast-2a"

cidr = "172.16.0.0/26"

},

{

name = "pub-az2c"

zone = "ap-northeast-2c"

cidr = "172.16.0.64/26"

}

]

# private subnets

private_subnets = [

{

name = "pri-backing-az2a"

zone = "ap-northeast-2a"

cidr = "172.16.0.128/26"

},

{

name = "pri-backing-az2c"

zone = "ap-northeast-2c"

cidr = "172.16.0.192/26"

},

{

name = "pri-server-az2a"

zone = "ap-northeast-2a"

cidr = "172.16.1.0/25"

},

{

name = "pri-server-az2c"

zone = "ap-northeast-2c"

cidr = "172.16.1.128/25"

},

]

- output.tf

# The module is declared as module "vpc" in main.tf, so outputs are read from module.vpc

output "vpc_name" {

value = try(module.vpc.vpc_name, "")

}

output "vpc_id" {

value = try(module.vpc.vpc_id, "")

}

output "public_subnet_name" {

value = try(module.vpc.public_subnet_name, "")

}

output "public_subnet_id" {

value = try(module.vpc.public_subnet_id, "")

}

output "private_subnet_name" {

value = try(module.vpc.private_subnet_name, "")

}

output "private_subnet_id" {

value = try(module.vpc.private_subnet_id, "")

}
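
The subnet CIDRs in net.auto.tfvars are written out by hand. As an illustrative alternative (an assumption, not what this post uses), the same six ranges can be derived from the VPC CIDR with Terraform's built-in cidrsubnets function. All local names below are hypothetical.

```hcl
locals {
  # Hypothetical local: the same range as vpc_cidr in net.auto.tfvars.
  vpc_cidr = "172.16.0.0/23"

  # Adding 3 bits to a /23 yields a /26; adding 2 bits yields a /25.
  # Ranges are allocated consecutively in the order requested.
  subnet_cidrs = cidrsubnets(local.vpc_cidr, 3, 3, 3, 3, 2, 2)

  public_cidrs          = slice(local.subnet_cidrs, 0, 2) # 172.16.0.0/26, 172.16.0.64/26
  private_backing_cidrs = slice(local.subnet_cidrs, 2, 4) # 172.16.0.128/26, 172.16.0.192/26
  private_server_cidrs  = slice(local.subnet_cidrs, 4, 6) # 172.16.1.0/25, 172.16.1.128/25
}
```

Hand-written CIDRs are easier to read in a tfvars file, while the derived form avoids overlap mistakes when the VPC range changes; which is preferable depends on how often the layout is expected to change.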

2.1.2 Terraform code (modules/net)

- 1_vpc.tf

# VPC configuration

resource "aws_vpc" "vpc" {

cidr_block = var.vpc_cidr

enable_dns_hostnames = true

enable_dns_support = true

tags = {

Name = "vpc-${local.default_tag}"

}

}

- 2_subnets.tf

- Creates the public and private subnets with a count loop

# Public Subnet

resource "aws_subnet" "public" {

count = length(var.public_subnets) > 0 ? length(var.public_subnets) : 0

vpc_id = aws_vpc.vpc.id

availability_zone = var.public_subnets[count.index].zone

cidr_block = var.public_subnets[count.index].cidr

map_public_ip_on_launch = true

tags = merge({ Name = format("sbn-${local.default_tag}-%s", var.public_subnets[count.index].name) },

)

}

# Private Subnet

resource "aws_subnet" "private" {

count = length(var.private_subnets) > 0 ? length(var.private_subnets) : 0

vpc_id = aws_vpc.vpc.id

availability_zone = var.private_subnets[count.index].zone

cidr_block = var.private_subnets[count.index].cidr

tags = merge({ Name = format("sbn-${local.default_tag}-%s", var.private_subnets[count.index].name) },

)

}

- 3_igw_nat.tf

- Creates the igw, eip, and nat

# Create the internet gateway

resource "aws_internet_gateway" "igw" {

count = length(var.public_subnets) > 0 ? 1 : 0

vpc_id = aws_vpc.vpc.id

tags = {

Name = "igw-${local.default_tag}"

}

depends_on = [

aws_vpc.vpc

]

}

# Create the EIP for the NAT gateway

resource "aws_eip" "eip_nat" {

count = (var.env != "dev") ? length(var.zones) : 1 // stg, prd => one per zone; dev => 1

depends_on = [aws_internet_gateway.igw[0]]

tags = {

Name = format("eip-${local.default_tag}-nat-az%s",

substr(var.zones[count.index], length(var.zones[count.index]) - 2, length(var.zones[count.index])))

}

}

# Create the NAT gateway

resource "aws_nat_gateway" "nat" {

count = (var.env != "dev") ? length(var.zones) : 1

subnet_id = aws_subnet.public[count.index].id

allocation_id = aws_eip.eip_nat[count.index].id

depends_on = [aws_internet_gateway.igw[0]]

tags = {

Name = format("nat-${local.default_tag}-az%s",

substr(var.zones[count.index], length(var.zones[count.index]) - 2, length(var.zones[count.index])))

}

}

- 4_route_tables.tf

# Create the public route table

resource "aws_route_table" "public" {

count = length(var.public_subnets) > 0 ? 1 : 0

vpc_id = aws_vpc.vpc.id

tags = {

Name = "rt-${local.default_tag}-public",

Service = "Public Route Table"

}

}

resource "aws_route" "public" {

route_table_id = aws_route_table.public[0].id

destination_cidr_block = "0.0.0.0/0"

gateway_id = aws_internet_gateway.igw[0].id

}

# Create the private route table

resource "aws_route_table" "private" {

count = (var.env != "dev") ? length(var.zones) : 1

vpc_id = aws_vpc.vpc.id

tags = {

Name = (var.env != "dev") ? "rt-${local.default_tag}-private${count.index}" : "rt-${local.default_tag}-private",

Service = "Private Route Table"

}

}

resource "aws_route" "private" {

count = (var.env != "dev") ? length(var.zones) : 1

route_table_id = aws_route_table.private[count.index].id

destination_cidr_block = "0.0.0.0/0"

nat_gateway_id = aws_nat_gateway.nat[count.index].id

}

# Associate the public route table with the public subnets

resource "aws_route_table_association" "public" {

count = length(var.public_subnets) > 0 ? length(var.public_subnets) : 0

subnet_id = aws_subnet.public[count.index].id

route_table_id = aws_route_table.public[0].id

}

# Associate the private route table with the private subnets

resource "aws_route_table_association" "private" {

count = length(var.private_subnets)

subnet_id = aws_subnet.private[count.index].id

route_table_id = (var.env == "dev") ? aws_route_table.private[0].id : aws_route_table.private[count.index % length(var.zones)].id

}

- data.tf

data "aws_region" "this" {}

- locals.tf

locals {

default_tag = "${var.env}-${var.pjt}-${var.svc}"

subnet_tag = "${var.env}-${var.pjt}"

}

- variables.tf

variable "vpc_cidr" {}

variable "env" {}

variable "pjt" {}

variable "svc" {}

variable "public_subnets" {}

variable "private_subnets" {}

variable "zones" {}

- output.tf

output "vpc_id" {

value = aws_vpc.vpc.id

}

output "vpc_name" {

value = "vpc-${local.default_tag}"

}

output "public_subnet_name" {

value = { for k, v in var.public_subnets : k => format("sbn-${local.default_tag}-%s", v.name) }

}

output "public_subnet_id" {

value = { for k, v in aws_subnet.public : k => format("%s", v.id) }

}

output "private_subnet_name" {

value = { for k, v in var.private_subnets : k => format("sbn-${local.default_tag}-%s", v.name) }

}

output "private_subnet_id" {

value = { for k, v in aws_subnet.private : k => format("%s", v.id) }

}

2.2 Running the code (run from the services/net directory)

- $ terraform init && terraform validate && terraform fmt

- $ terraform plan -out tfplan

module.vpc.data.aws_region.this: Reading...

module.vpc.data.aws_region.this: Read complete after 0s [id=ap-northeast-2]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# module.vpc.aws_eip.eip_nat[0] will be created

+ resource "aws_eip" "eip_nat" {

+ allocation_id = (known after apply)

+ arn = (known after apply)

...

+ public_ip = (known after apply)

+ public_ipv4_pool = (known after apply)

+ tags = {

+ "Name" = "eip-dev-t101-sjkim-nat-az2a"

}

+ tags_all = {

+ "Environment" = "dev"

+ "Name" = "eip-dev-t101-sjkim-nat-az2a"

+ "Project" = "t101"

+ "Service" = "sjkim"

+ "TerraformManaged" = "true"

}

+ vpc = (known after apply)

}

# module.vpc.aws_internet_gateway.igw[0] will be created

+ resource "aws_internet_gateway" "igw" {

+ arn = (known after apply)

+ id = (known after apply)

+ owner_id = (known after apply)

+ tags = {

+ "Name" = "igw-dev-t101-sjkim"

}

+ tags_all = {

+ "Environment" = "dev"

+ "Name" = "igw-dev-t101-sjkim"

+ "Project" = "t101"

+ "Service" = "sjkim"

+ "TerraformManaged" = "true"

}

+ vpc_id = (known after apply)

}

# module.vpc.aws_nat_gateway.nat[0] will be created

+ resource "aws_nat_gateway" "nat" {

...

# module.vpc.aws_route.private[0] will be created

+ resource "aws_route" "private" {

+ destination_cidr_block = "0.0.0.0/0"

+ id = (known after apply)

+ instance_id = (known after apply)

+ instance_owner_id = (known after apply)

+ nat_gateway_id = (known after apply)

+ network_interface_id = (known after apply)

+ origin = (known after apply)

+ route_table_id = (known after apply)

+ state = (known after apply)

}

# module.vpc.aws_route.public will be created

...

# module.vpc.aws_route_table.private[0] will be created

...

# module.vpc.aws_vpc.vpc will be created

+ resource "aws_vpc" "vpc" {

+ arn = (known after apply)

+ cidr_block = "172.16.0.0/23"

+ default_network_acl_id = (known after apply)

+ default_route_table_id = (known after apply)

+ default_security_group_id = (known after apply)

+ dhcp_options_id = (known after apply)

+ enable_dns_hostnames = true

+ enable_dns_support = true

+ enable_network_address_usage_metrics = (known after apply)

+ id = (known after apply)

+ instance_tenancy = "default"

+ ipv6_association_id = (known after apply)

+ ipv6_cidr_block = (known after apply)

+ ipv6_cidr_block_network_border_group = (known after apply)

+ main_route_table_id = (known after apply)

+ owner_id = (known after apply)

+ tags = {

+ "Name" = "vpc-dev-t101-sjkim"

}

+ tags_all = {

+ "Environment" = "dev"

+ "Name" = "vpc-dev-t101-sjkim"

+ "Project" = "t101"

+ "Service" = "sjkim"

+ "TerraformManaged" = "true"

}

}

Plan: 20 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ private_subnet_name = {

+ "0" = "sbn-dev-t101-sjkim-pri-backing-az2a"

+ "1" = "sbn-dev-t101-sjkim-pri-backing-az2c"

+ "2" = "sbn-dev-t101-sjkim-pri-server-az2a"

+ "3" = "sbn-dev-t101-sjkim-pri-server-az2c"

}

+ public_subnet_name = {

+ "0" = "sbn-dev-t101-sjkim-pub-az2a"

+ "1" = "sbn-dev-t101-sjkim-pub-az2c"

}

+ vpc_name = "vpc-dev-t101-sjkim"

────────────────────────────────────────────────────────────────────────

Saved the plan to: tfplan

To perform exactly these actions, run the following command to apply:

terraform apply "tfplan"

- $ terraform apply tfplan

module.vpc.aws_vpc.vpc: Creating...

module.vpc.aws_vpc.vpc: Still creating... [10s elapsed]

module.vpc.aws_vpc.vpc: Creation complete after 12s [id=vpc-066f0659402af2745]

module.vpc.aws_subnet.private[2]: Creating...

module.vpc.aws_internet_gateway.igw[0]: Creating...

module.vpc.aws_subnet.private[0]: Creating...

module.vpc.aws_subnet.private[3]: Creating...

module.vpc.aws_subnet.public[0]: Creating...

module.vpc.aws_subnet.private[1]: Creating...

module.vpc.aws_route_table.public[0]: Creating...

module.vpc.aws_route_table.private[0]: Creating...

module.vpc.aws_subnet.public[1]: Creating...

module.vpc.aws_route_table.private[0]: Creation complete after 0s [id=rtb-06983c29469cc8a1e]

module.vpc.aws_internet_gateway.igw[0]: Creation complete after 0s [id=igw-03c8a1e207cd6a86a]

module.vpc.aws_eip.eip_nat[0]: Creating...

module.vpc.aws_route_table.public[0]: Creation complete after 0s [id=rtb-0e040a47906c28181]

module.vpc.aws_route.public: Creating...

module.vpc.aws_subnet.private[1]: Creation complete after 0s [id=subnet-03a6846f3a9853f64]

module.vpc.aws_subnet.private[0]: Creation complete after 0s [id=subnet-0a2afdbfe288a817a]

module.vpc.aws_subnet.private[3]: Creation complete after 0s [id=subnet-06facceb61473829b]

module.vpc.aws_subnet.private[2]: Creation complete after 1s [id=subnet-03a430a9a2381aa61]

module.vpc.aws_route_table_association.private[1]: Creating...

module.vpc.aws_route_table_association.private[0]: Creating...

module.vpc.aws_route_table_association.private[3]: Creating...

module.vpc.aws_route_table_association.private[2]: Creating...

module.vpc.aws_route.public: Creation complete after 1s [id=r-rtb-0e040a47906c281811080289494]

module.vpc.aws_eip.eip_nat[0]: Creation complete after 1s [id=eipalloc-036f9635b3aef4ea6]

module.vpc.aws_route_table_association.private[3]: Creation complete after 0s [id=rtbassoc-0c3dbcdd539484ef0]

module.vpc.aws_route_table_association.private[2]: Creation complete after 0s [id=rtbassoc-01b9fd00cbc44f4b6]

module.vpc.aws_route_table_association.private[0]: Creation complete after 0s [id=rtbassoc-09a87ba973d1ae145]

module.vpc.aws_route_table_association.private[1]: Creation complete after 0s [id=rtbassoc-0c2ce644941ad9d41]

module.vpc.aws_subnet.public[0]: Still creating... [10s elapsed]

module.vpc.aws_subnet.public[1]: Still creating... [10s elapsed]

module.vpc.aws_subnet.public[0]: Creation complete after 11s [id=subnet-041904318c6b486a4]

module.vpc.aws_subnet.public[1]: Creation complete after 11s [id=subnet-031f411d2815577cf]

module.vpc.aws_route_table_association.public[1]: Creating...

module.vpc.aws_route_table_association.public[0]: Creating...

module.vpc.aws_nat_gateway.nat[0]: Creating...

module.vpc.aws_route_table_association.public[1]: Creation complete after 0s [id=rtbassoc-0b9d85ab67a0bc6f0]

module.vpc.aws_route_table_association.public[0]: Still creating... [10s elapsed]

module.vpc.aws_nat_gateway.nat[0]: Still creating... [10s elapsed]

module.vpc.aws_route_table_association.public[0]: Still creating... [20s elapsed]

module.vpc.aws_nat_gateway.nat[0]: Still creating... [20s elapsed]

module.vpc.aws_route_table_association.public[0]: Creation complete after 23s [id=rtbassoc-0d17ba67232e9f6c8]

module.vpc.aws_nat_gateway.nat[0]: Still creating... [30s elapsed]

...

module.vpc.aws_nat_gateway.nat[0]: Still creating... [2m0s elapsed]

module.vpc.aws_nat_gateway.nat[0]: Creation complete after 2m4s [id=nat-07e01bee281137cf0]

module.vpc.aws_route.private[0]: Creating...

module.vpc.aws_route.private[0]: Creation complete after 1s [id=r-rtb-06983c29469cc8a1e1080289494]

Apply complete! Resources: 20 added, 0 changed, 0 destroyed.

Outputs:

private_subnet_name = {

"0" = "sbn-dev-t101-sjkim-pri-backing-az2a"

"1" = "sbn-dev-t101-sjkim-pri-backing-az2c"

"2" = "sbn-dev-t101-sjkim-pri-server-az2a"

"3" = "sbn-dev-t101-sjkim-pri-server-az2c"

}

public_subnet_name = {

"0" = "sbn-dev-t101-sjkim-pub-az2a"

"1" = "sbn-dev-t101-sjkim-pub-az2c"

}

vpc_name = "vpc-dev-t101-sjkim"

2.3 Execution results

- $ terraform state list

module.vpc.data.aws_region.this

module.vpc.aws_eip.eip_nat[0]

module.vpc.aws_internet_gateway.igw[0]

module.vpc.aws_nat_gateway.nat[0]

module.vpc.aws_route.private[0]

module.vpc.aws_route.public

module.vpc.aws_route_table.private[0]

module.vpc.aws_route_table.public[0]

module.vpc.aws_route_table_association.private[0]

module.vpc.aws_route_table_association.private[1]

module.vpc.aws_route_table_association.private[2]

module.vpc.aws_route_table_association.private[3]

module.vpc.aws_route_table_association.public[0]

module.vpc.aws_route_table_association.public[1]

module.vpc.aws_subnet.private[0]

module.vpc.aws_subnet.private[1]

module.vpc.aws_subnet.private[2]

module.vpc.aws_subnet.private[3]

module.vpc.aws_subnet.public[0]

module.vpc.aws_subnet.public[1]

module.vpc.aws_vpc.vpc-

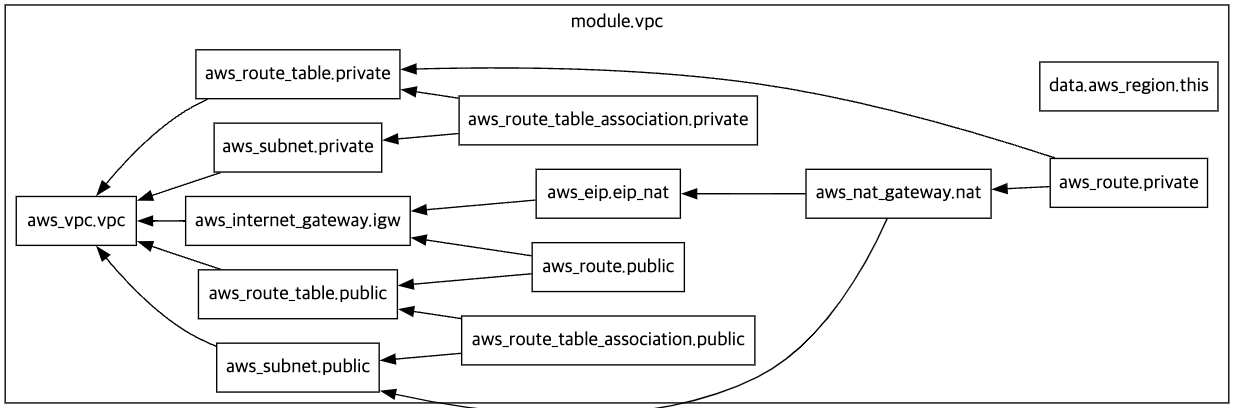

$ terraform graph > graph.dot

-

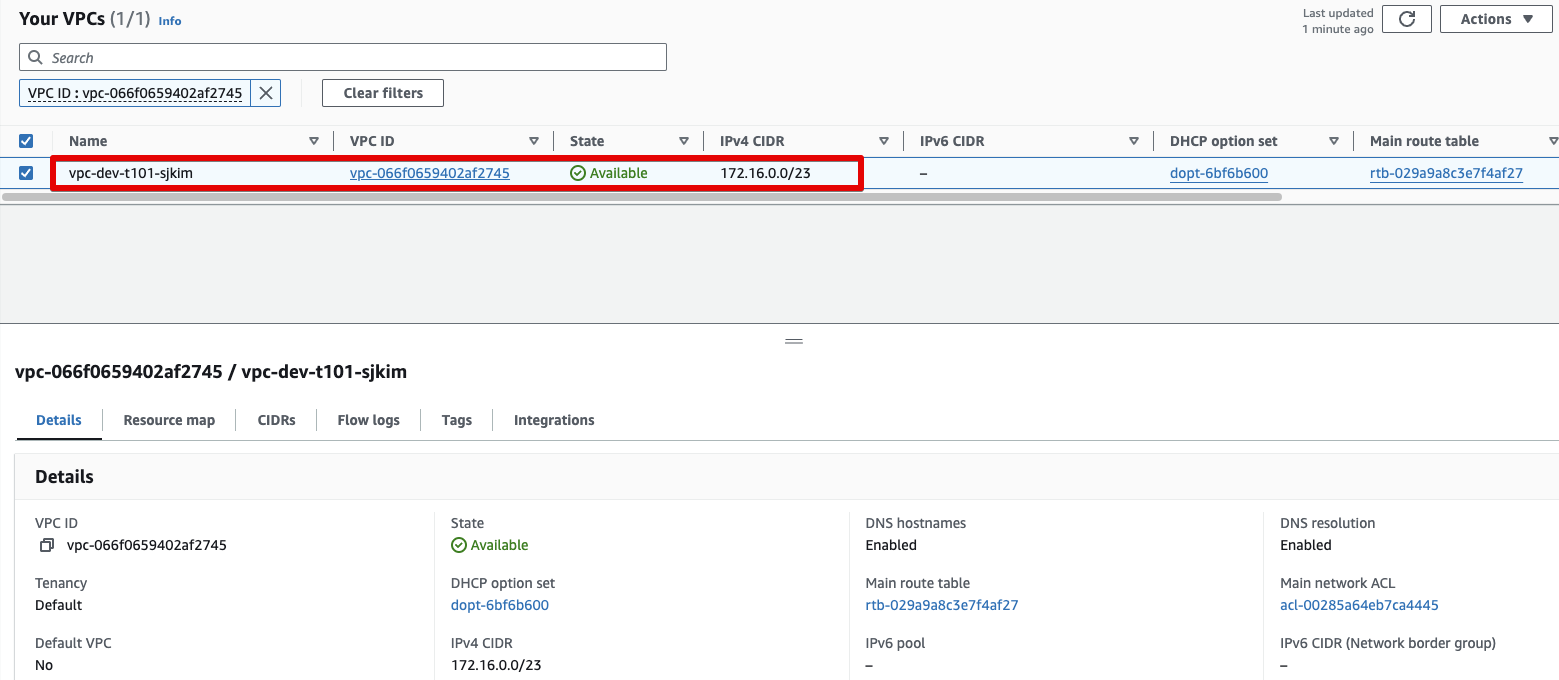

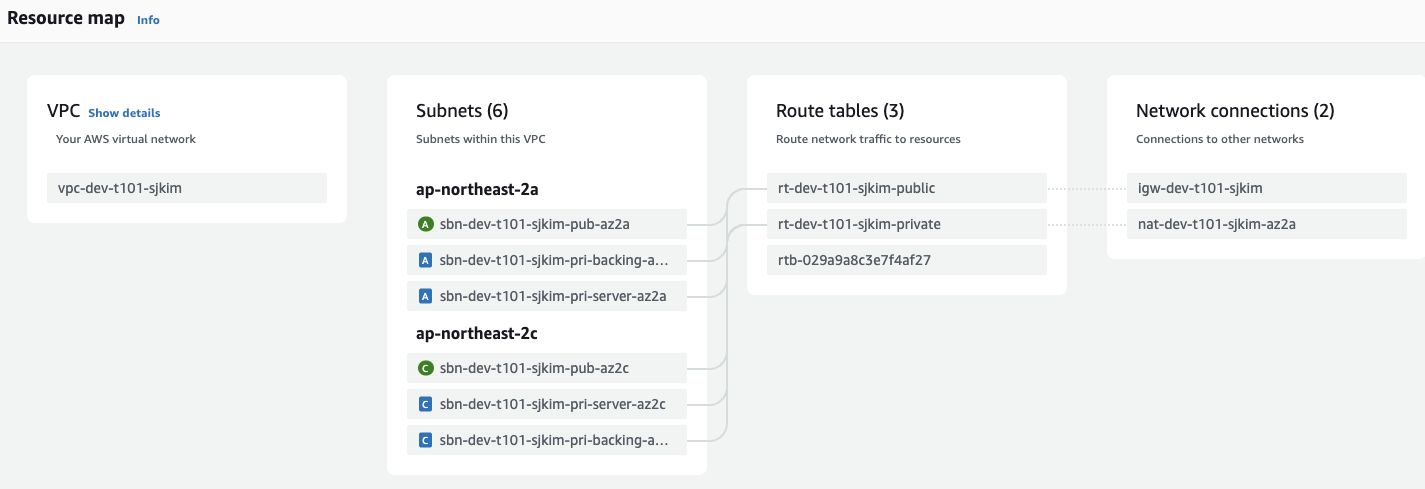

vpc

-

Resouce map

-

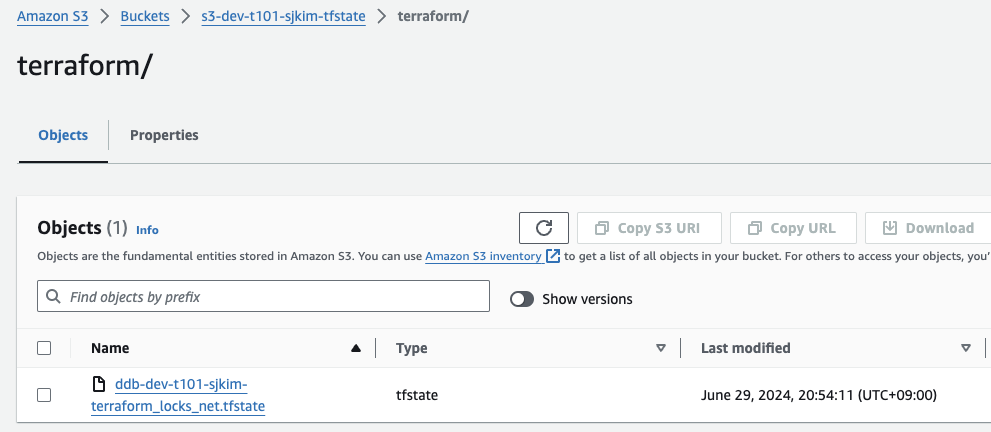

S3 terraform state 저장 내용

-

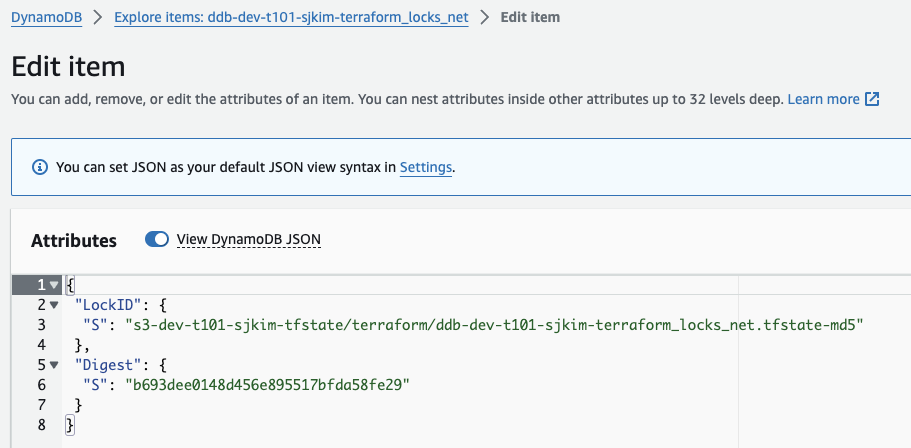

DynamoDB 저장된 내용

MSK configuration (msk)

3.1.1 Terraform code (services/backing)

- backend-backing.tf

terraform {

required_version = "~> 1.8.5"

required_providers {

aws = {

source = "hashicorp/aws"

# Lock version to avoid unexpected problems

version = "~> 5.56"

}

null = {

source = "hashicorp/null"

version = "~> 3.2.2"

}

}

backend "s3" {

bucket = "s3-dev-t101-sjkim-tfstate"

key = "terraform/ddb-dev-t101-sjkim-terraform_locks_backing.tfstate"

region = "ap-northeast-2"

dynamodb_table = "ddb-dev-t101-sjkim-terraform_locks_backing"

encrypt = true

}

}

provider "aws" {

region = var.region

shared_credentials_files = ["~/.aws/credentials"]

profile = var.profile

default_tags {

tags = {

Environment = var.env

Project = var.pjt

Service = var.svc

TerraformManaged = true

}

}

}

- main.tf

module "msk" {

source = "../../modules/msk"

vpc_name = var.vpc_name

msk_cluster = var.msk_cluster

msk_subnet_name = var.msk_subnet_name

msk_security_group = var.msk_security_group

msk_log_group = var.msk_log_group

# ebs_volume_size = var.ebs_volume_size

env = var.env

pjt = var.pjt

svc = var.svc

}

- variables.tf

# region

variable "zones" {

type = list(string)

}

# vpc

variable "vpc_name" {

default = "vpc-dev-t101-sjkim"

}

# msk

variable "msk_cluster" {}

variable "msk_subnet_name" {}

variable "msk_security_group" {}

variable "msk_log_group" {}

- default.auto.tfvars

env = "dev"

pjt = "t101"

svc = "sjkim"

region = "ap-northeast-2"

zones = ["ap-northeast-2a", "ap-northeast-2c"]

- msk.auto.tfvars

msk_cluster = {

"msk" = {

kafka_version = "2.8.1"

number_of_broker_nodes = 2

instance_type = "kafka.t3.small"

ebs_volume_size = 10

}

}

msk_subnet_name = ["sbn-dev-t101-sjkim-pri-backing-az2a", "sbn-dev-t101-sjkim-pri-backing-az2c"]

msk_security_group = {

"msk" = {

ingress = [

{

from_port = 2182

to_port = 2182

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

description = "zookeeper port"

},

{

from_port = 9092

to_port = 9092

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

description = "bootstrap - endpoint of broker"

},

{

from_port = 9094

to_port = 9094

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

description = "encryption of broker messeges replication"

},

{

from_port = 9096

to_port = 9096

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

description = ""

},

{

from_port = 9098

to_port = 9098

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

description = ""

}]

egress = [

{

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

description = ""

}]

}

}

msk_log_group = {

"msk" = {

name = "/aws/msk/msk-dev-t101-sejkim"

}

}

- output.tf

output "msk" {

value = try(module.msk, "")

}

3.1.2 Terraform code (modules/msk)

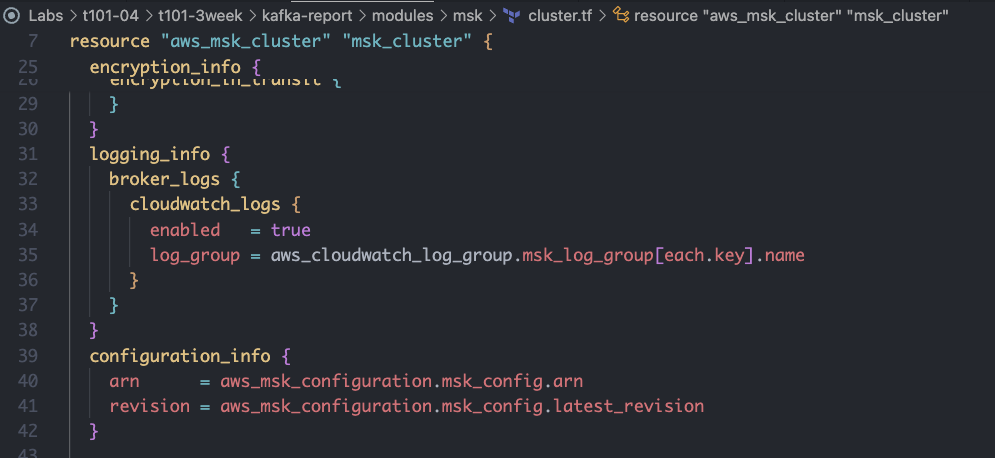

- cluster.tf

resource "aws_cloudwatch_log_group" "msk_log_group" {

for_each = var.msk_log_group

name = each.value.name

}

resource "aws_msk_cluster" "msk_cluster" {

for_each = var.msk_cluster

cluster_name = "msk-${local.default_tag}-${each.key}-cluster"

kafka_version = each.value.kafka_version

number_of_broker_nodes = each.value.number_of_broker_nodes

enhanced_monitoring = "PER_TOPIC_PER_BROKER"

broker_node_group_info {

instance_type = each.value.instance_type

client_subnets = tolist(data.aws_subnets.msk_subnets.ids)

security_groups = [aws_security_group.msk_security_group[each.key].id]

storage_info {

ebs_storage_info {

volume_size = each.value.ebs_volume_size

}

}

}

encryption_info {

encryption_in_transit {

client_broker = "TLS_PLAINTEXT"

in_cluster = true

}

}

logging_info {

broker_logs {

cloudwatch_logs {

enabled = true

log_group = aws_cloudwatch_log_group.msk_log_group[each.key].name

}

}

}

configuration_info {

arn = aws_msk_configuration.msk_config.arn

revision = aws_msk_configuration.msk_config.latest_revision

}

# configuration_info {

# arn = aws_msk_configuration.msk_config[each.key].arn

# revision = aws_msk_configuration.msk_config[each.key].latest_revision

# }

tags = {

Name = "msk-${local.default_tag}-${each.key}-cluster"

}

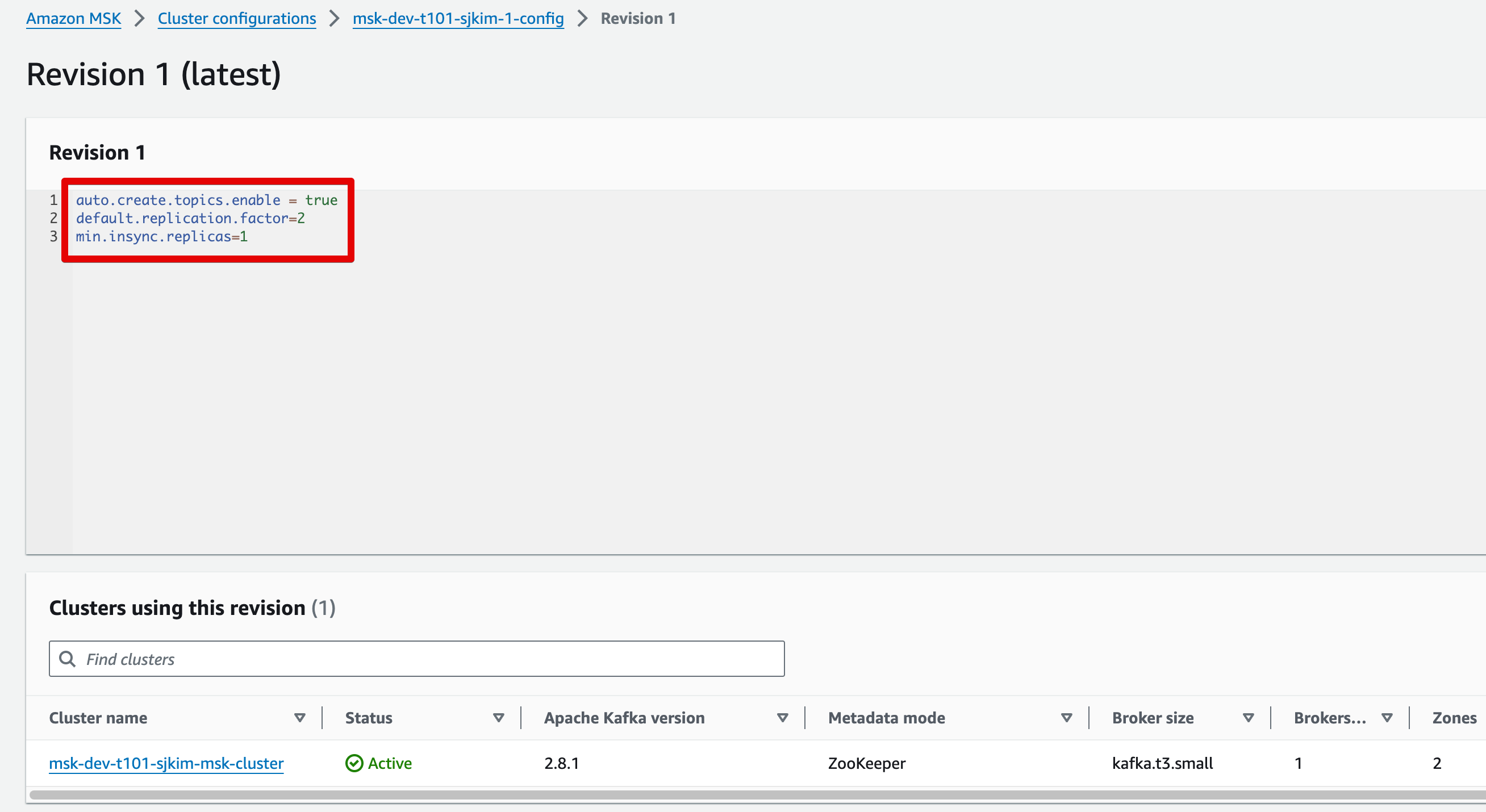

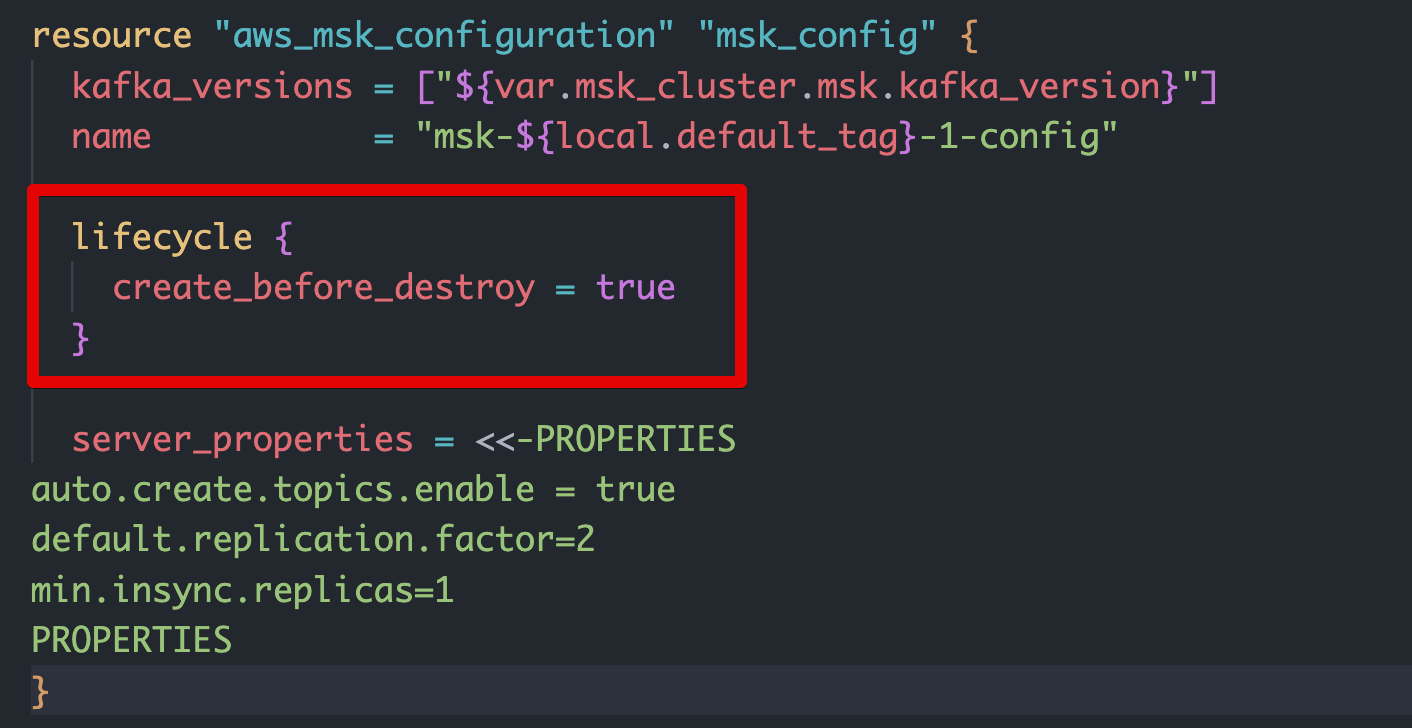

}- config.tf

resource "aws_msk_configuration" "msk_config" {

kafka_versions = [var.msk_cluster.msk.kafka_version]

name = "msk-${local.default_tag}-1-config"

lifecycle {

create_before_destroy = true

}

server_properties = <<-PROPERTIES

auto.create.topics.enable = true

default.replication.factor=2

min.insync.replicas=1

PROPERTIES

}

# resource "aws_msk_configuration" "msk_config" {

# for_each = var.msk_cluster

# kafka_versions = [each.value.kafka_version]

# name = "msk-${local.default_tag}-${each.key}-config"

# lifecycle {

# create_before_destroy = true

# }

# server_properties = <<-PROPERTIES

# auto.create.topics.enable = true

# default.replication.factor=2

# min.insync.replicas=1

# PROPERTIES

# }

- security_group.tf

# Security Group

resource "aws_security_group" "msk_security_group" {

for_each = var.msk_security_group

name = "sg_${local.default_tag}-${each.key}"

vpc_id = data.aws_vpc.vpc.id

tags = {

Name = "sg-${local.default_tag}-${each.key}"

}

lifecycle {

create_before_destroy = true

}

}

locals {

msk_ingress = flatten([for msk, types in var.msk_security_group : [for type, rules in types : [for rule in rules : merge(rule, { name = msk }) if type == "ingress"]]])

msk_egress = flatten([for msk, types in var.msk_security_group : [for type, rules in types : [for rule in rules : merge(rule, { name = msk }) if type == "egress"]]])

}

resource "aws_security_group_rule" "msk_ingress" {

for_each = { for k, v in local.msk_ingress : "${k}.${v.name}.${v.from_port}" => v }

# Required

security_group_id = aws_security_group.msk_security_group[each.value.name].id

from_port = each.value.from_port

to_port = each.value.to_port

protocol = each.value.protocol

type = "ingress"

# Optional

description = try(each.value.description, null)

cidr_blocks = try(each.value.cidr_blocks, null)

self = try(each.value.self, null)

source_security_group_id = try(each.value.source_security_group_id, null)

}

resource "aws_security_group_rule" "msk_egress" {

for_each = { for k, v in local.msk_egress : "${k}.${v.name}.${v.from_port}" => v }

# Required

security_group_id = aws_security_group.msk_security_group[each.value.name].id

protocol = each.value.protocol

from_port = each.value.from_port

to_port = each.value.to_port

type = "egress"

# Optional

description = try(each.value.description, null)

cidr_blocks = try(each.value.cidr_blocks, null)

self = try(each.value.self, null)

source_security_group_id = try(each.value.source_security_group_id, null)

}

- data.tf

data "aws_vpc" "vpc" {

filter {

name = "tag:Name"

values = [var.vpc_name]

}

}

data "aws_subnets" "msk_subnets" {

filter {

name = "tag:Name"

values = var.msk_subnet_name

}

}

- local.tf

locals {

default_tag = "${var.env}-${var.pjt}-${var.svc}"

}

- variable.tf

variable "vpc_name" {}

variable "msk_cluster" {}

variable "msk_subnet_name" {}

variable "msk_security_group" {}

variable "msk_log_group" {}

# variable "ebs_volume_size" {}

variable "env" {}

variable "pjt" {}

variable "svc" {}

- output.tf

output "zookeeper_connect_string" {

value = { for k, v in aws_msk_cluster.msk_cluster : k => v.zookeeper_connect_string }

}

output "bootstrap_brokers_tls" {

description = "TLS connection host:port pairs"

value = { for k, v in aws_msk_cluster.msk_cluster : k => v.bootstrap_brokers_tls }

}

output "msk_cluster_name" {

value = { for k, v in aws_msk_cluster.msk_cluster : k => v.cluster_name }

}

3.2 Running the code (run from the services/backing directory)

- $ terraform init && terraform validate && terraform fmt

- $ terraform plan -out tfplan

data.terraform_remote_state.net: Reading...

module.msk.data.aws_subnets.msk_subnets: Reading...

module.msk.data.aws_vpc.vpc: Reading...

data.terraform_remote_state.net: Read complete after 1s

module.msk.data.aws_subnets.msk_subnets: Read complete after 0s [id=ap-northeast-2]

module.msk.data.aws_vpc.vpc: Read complete after 0s [id=vpc-066f0659402af2745]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# module.msk.aws_cloudwatch_log_group.msk_log_group["msk"] will be created

+ resource "aws_cloudwatch_log_group" "msk_log_group" {

+ arn = (known after apply)

+ id = (known after apply)

+ log_group_class = (known after apply)

+ name = "/aws/msk/msk-dev-t101-sejkim"

+ name_prefix = (known after apply)

+ retention_in_days = 0

+ skip_destroy = false

+ tags_all = {

+ "Environment" = "dev"

+ "Project" = "t101"

+ "Service" = "sjkim"

+ "TerraformManaged" = "true"

}

}

# module.msk.aws_msk_cluster.msk_cluster["msk"] will be created

+ resource "aws_msk_cluster" "msk_cluster" {

+ arn = (known after apply)

+ bootstrap_brokers = (known after apply)

+ bootstrap_brokers_public_sasl_iam = (known after apply)

+ bootstrap_brokers_public_sasl_scram = (known after apply)

+ bootstrap_brokers_public_tls = (known after apply)

+ bootstrap_brokers_sasl_iam = (known after apply)

+ bootstrap_brokers_sasl_scram = (known after apply)

+ bootstrap_brokers_tls = (known after apply)

+ bootstrap_brokers_vpc_connectivity_sasl_iam = (known after apply)

+ bootstrap_brokers_vpc_connectivity_sasl_scram = (known after apply)

+ bootstrap_brokers_vpc_connectivity_tls = (known after apply)

+ cluster_name = "msk-dev-t101-sjkim-msk-cluster"

+ cluster_uuid = (known after apply)

+ current_version = (known after apply)

+ enhanced_monitoring = "PER_TOPIC_PER_BROKER"

+ id = (known after apply)

+ kafka_version = "2.8.1"

+ number_of_broker_nodes = 2

+ storage_mode = (known after apply)

+ tags = {

+ "Name" = "msk-dev-t101-sjkim-msk-cluster"

}

+ tags_all = {

+ "Environment" = "dev"

+ "Name" = "msk-dev-t101-sjkim-msk-cluster"

+ "Project" = "t101"

+ "Service" = "sjkim"

+ "TerraformManaged" = "true"

}

+ zookeeper_connect_string = (known after apply)

+ zookeeper_connect_string_tls = (known after apply)

+ broker_node_group_info {

+ az_distribution = "DEFAULT"

+ client_subnets = [

+ "subnet-03a6846f3a9853f64",

+ "subnet-0a2afdbfe288a817a",

]

+ instance_type = "kafka.t3.small"

+ security_groups = (known after apply)

+ storage_info {

+ ebs_storage_info {

+ volume_size = 10

}

}

}

+ configuration_info {

+ arn = (known after apply)

+ revision = (known after apply)

}

+ encryption_info {

+ encryption_at_rest_kms_key_arn = (known after apply)

+ encryption_in_transit {

+ client_broker = "TLS_PLAINTEXT"

+ in_cluster = true

}

}

+ logging_info {

+ broker_logs {

+ cloudwatch_logs {

+ enabled = true

+ log_group = "/aws/msk/msk-dev-t101-sejkim"

}

}

}

}

# module.msk.aws_msk_configuration.msk_config will be created

+ resource "aws_msk_configuration" "msk_config" {

+ arn = (known after apply)

+ id = (known after apply)

+ kafka_versions = [

+ "2.8.1",

]

+ latest_revision = (known after apply)

+ name = "msk-dev-t101-sjkim-1-config"

+ server_properties = <<-EOT

auto.create.topics.enable = true

default.replication.factor=2

min.insync.replicas=1

EOT

}

# module.msk.aws_security_group.msk_security_group["msk"] will be created

+ resource "aws_security_group" "msk_security_group" {

+ arn = (known after apply)

+ description = "Managed by Terraform"

+ egress = (known after apply)

+ id = (known after apply)

+ ingress = (known after apply)

+ name = "sg_dev-t101-sjkim-msk"

+ name_prefix = (known after apply)

+ owner_id = (known after apply)

+ revoke_rules_on_delete = false

+ tags = {

+ "Name" = "sg-dev-t101-sjkim-msk"

}

+ tags_all = {

+ "Environment" = "dev"

+ "Name" = "sg-dev-t101-sjkim-msk"

+ "Project" = "t101"

+ "Service" = "sjkim"

+ "TerraformManaged" = "true"

}

+ vpc_id = "vpc-066f0659402af2745"

}

# module.msk.aws_security_group_rule.msk_egress["0.msk.0"] will be created

+ resource "aws_security_group_rule" "msk_egress" {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ from_port = 0

+ id = (known after apply)

+ protocol = "-1"

+ security_group_id = (known after apply)

+ security_group_rule_id = (known after apply)

+ self = false

+ source_security_group_id = (known after apply)

+ to_port = 0

+ type = "egress"

# (1 unchanged attribute hidden)

}

# module.msk.aws_security_group_rule.msk_ingress["0.msk.2182"] will be created

+ resource "aws_security_group_rule" "msk_ingress" {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ description = "zookeeper port"

+ from_port = 2182

+ id = (known after apply)

+ protocol = "tcp"

+ security_group_id = (known after apply)

+ security_group_rule_id = (known after apply)

+ self = false

+ source_security_group_id = (known after apply)

+ to_port = 2182

+ type = "ingress"

}

# module.msk.aws_security_group_rule.msk_ingress["1.msk.9092"] will be created

+ resource "aws_security_group_rule" "msk_ingress" {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ description = "bootstrap - endpoint of broker"

+ from_port = 9092

+ id = (known after apply)

+ protocol = "tcp"

+ security_group_id = (known after apply)

+ security_group_rule_id = (known after apply)

+ self = false

+ source_security_group_id = (known after apply)

+ to_port = 9092

+ type = "ingress"

}

# module.msk.aws_security_group_rule.msk_ingress["2.msk.9094"] will be created

+ resource "aws_security_group_rule" "msk_ingress" {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ description = "encryption of broker messeges replication"

+ from_port = 9094

+ id = (known after apply)

+ protocol = "tcp"

+ security_group_id = (known after apply)

+ security_group_rule_id = (known after apply)

+ self = false

+ source_security_group_id = (known after apply)

+ to_port = 9094

+ type = "ingress"

}

# module.msk.aws_security_group_rule.msk_ingress["3.msk.9096"] will be created

+ resource "aws_security_group_rule" "msk_ingress" {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ from_port = 9096

+ id = (known after apply)

+ protocol = "tcp"

+ security_group_id = (known after apply)

+ security_group_rule_id = (known after apply)

+ self = false

+ source_security_group_id = (known after apply)

+ to_port = 9096

+ type = "ingress"

# (1 unchanged attribute hidden)

}

# module.msk.aws_security_group_rule.msk_ingress["4.msk.9098"] will be created

+ resource "aws_security_group_rule" "msk_ingress" {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ from_port = 9098

+ id = (known after apply)

+ protocol = "tcp"

+ security_group_id = (known after apply)

+ security_group_rule_id = (known after apply)

+ self = false

+ source_security_group_id = (known after apply)

+ to_port = 9098

+ type = "ingress"

# (1 unchanged attribute hidden)

}

Plan: 10 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ msk = (known after apply)

─────────────────────────────────────────────────────────────────────

Saved the plan to: tfplan

To perform exactly these actions, run the following command to apply:

terraform apply "tfplan"

- $ terraform apply tfplan

module.msk.aws_msk_configuration.msk_config: Creating...

module.msk.aws_cloudwatch_log_group.msk_log_group["msk"]: Creating...

module.msk.aws_security_group.msk_security_group["msk"]: Creating...

module.msk.aws_cloudwatch_log_group.msk_log_group["msk"]: Creation complete after 1s [id=/aws/msk/msk-dev-t101-sejkim]

module.msk.aws_security_group.msk_security_group["msk"]: Creation complete after 2s [id=sg-04a7279dd29838bfb]

module.msk.aws_security_group_rule.msk_ingress["1.msk.9092"]: Creating...

module.msk.aws_security_group_rule.msk_egress["0.msk.0"]: Creating...

module.msk.aws_security_group_rule.msk_ingress["2.msk.9094"]: Creating...

module.msk.aws_security_group_rule.msk_ingress["4.msk.9098"]: Creating...

module.msk.aws_security_group_rule.msk_ingress["3.msk.9096"]: Creating...

module.msk.aws_security_group_rule.msk_ingress["0.msk.2182"]: Creating...

module.msk.aws_security_group_rule.msk_ingress["4.msk.9098"]: Creation complete after 0s [id=sgrule-3106511744]

module.msk.aws_security_group_rule.msk_ingress["3.msk.9096"]: Creation complete after 1s [id=sgrule-3836010581]

module.msk.aws_security_group_rule.msk_ingress["0.msk.2182"]: Creation complete after 1s [id=sgrule-4055950905]

module.msk.aws_security_group_rule.msk_ingress["1.msk.9092"]: Creation complete after 2s [id=sgrule-3670809819]

module.msk.aws_msk_configuration.msk_config: Creation complete after 4s [id=arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2]

module.msk.aws_msk_cluster.msk_cluster["msk"]: Creating...

module.msk.aws_security_group_rule.msk_ingress["2.msk.9094"]: Creation complete after 2s [id=sgrule-4220558866]

module.msk.aws_security_group_rule.msk_egress["0.msk.0"]: Creation complete after 3s [id=sgrule-753378928]

module.msk.aws_msk_cluster.msk_cluster["msk"]: Still creating... [10s elapsed]

module.msk.aws_msk_cluster.msk_cluster["msk"]: Still creating... [20s elapsed]

...

module.msk.aws_msk_cluster.msk_cluster["msk"]: Still creating... [37m13s elapsed]

module.msk.aws_msk_cluster.msk_cluster["msk"]: Creation complete after 37m19s [id=arn:aws:kafka:ap-northeast-2:538558617837:cluster/msk-dev-t101-sjkim-msk-cluster/7692ba6f-8c9b-4eab-82d9-b98d0a006d8e-2]

Apply complete! Resources: 10 added, 0 changed, 0 destroyed.

Outputs:

msk = {

"bootstrap_brokers_tls" = {

"msk" = "b-1.mskdevt101sjkimms.ffq40c.c2.kafka.ap-northeast-2.amazonaws.com:9094,b-2.mskdevt101sjkimms.ffq40c.c2.kafka.ap-northeast-2.amazonaws.com:9094"

}

"msk_cluster_name" = {

"msk" = "msk-dev-t101-sjkim-msk-cluster"

}

"zookeeper_connect_string" = {

"msk" = "z-1.mskdevt101sjkimms.ffq40c.c2.kafka.ap-northeast-2.amazonaws.com:2181,z-2.mskdevt101sjkimms.ffq40c.c2.kafka.ap-northeast-2.amazonaws.com:2181,z-3.mskdevt101sjkimms.ffq40c.c2.kafka.ap-northeast-2.amazonaws.com:2181"

}

}

3.3 Execution results

- $ terraform state list

data.terraform_remote_state.net

module.msk.data.aws_subnets.msk_subnets

module.msk.data.aws_vpc.vpc

module.msk.aws_cloudwatch_log_group.msk_log_group["msk"]

module.msk.aws_msk_cluster.msk_cluster["msk"]

module.msk.aws_msk_configuration.msk_config

module.msk.aws_security_group.msk_security_group["msk"]

module.msk.aws_security_group_rule.msk_egress["0.msk.0"]

module.msk.aws_security_group_rule.msk_ingress["0.msk.2182"]

module.msk.aws_security_group_rule.msk_ingress["1.msk.9092"]

module.msk.aws_security_group_rule.msk_ingress["2.msk.9094"]

module.msk.aws_security_group_rule.msk_ingress["3.msk.9096"]

module.msk.aws_security_group_rule.msk_ingress["4.msk.9098"]-

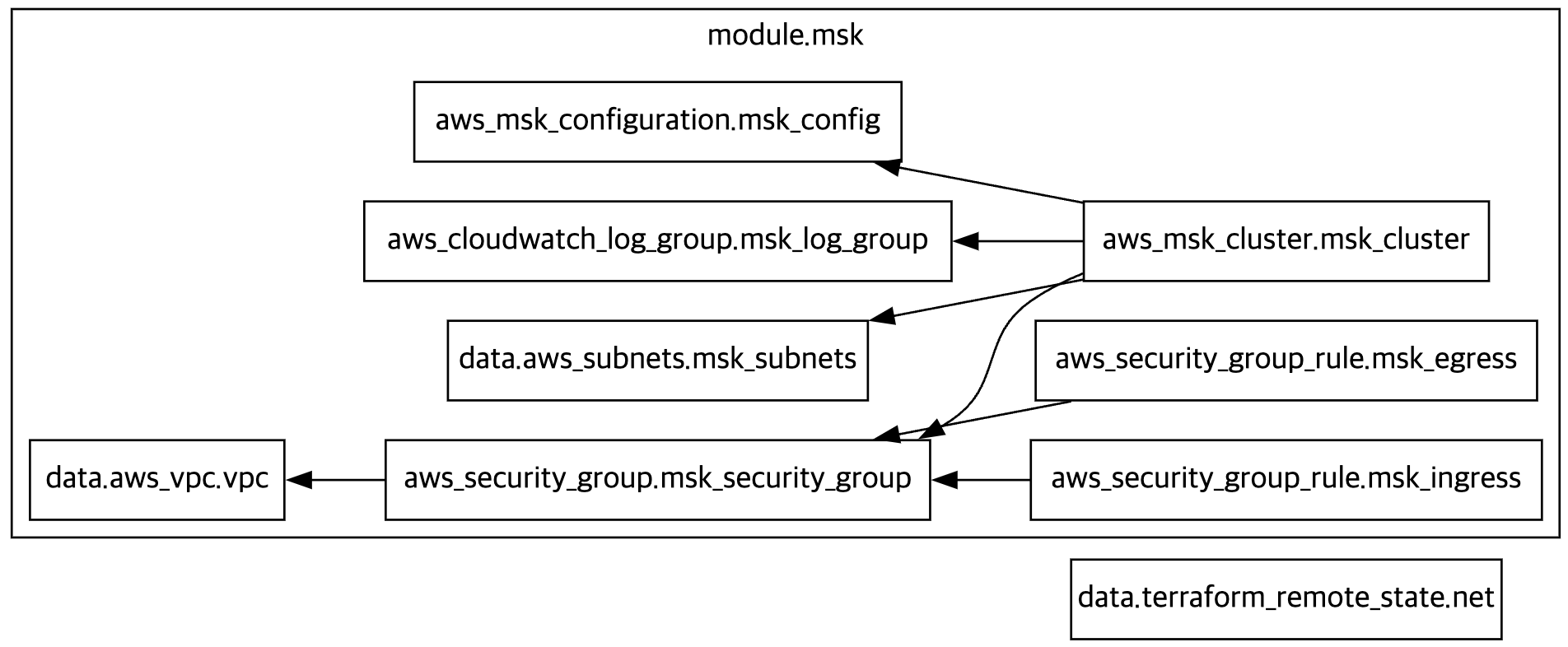

$ terraform graph > graph.dot

-

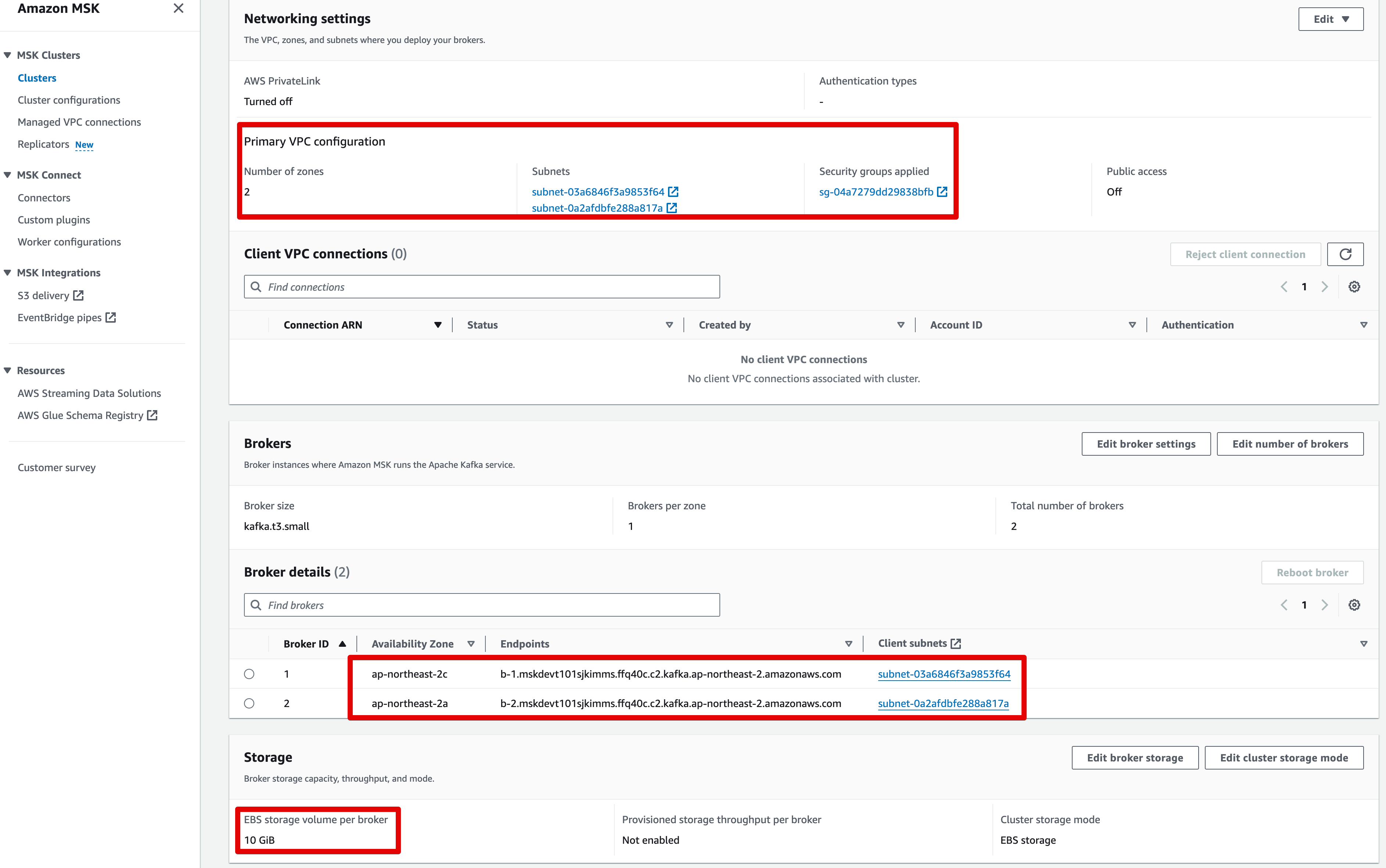

msk - cluster

-

msk -config

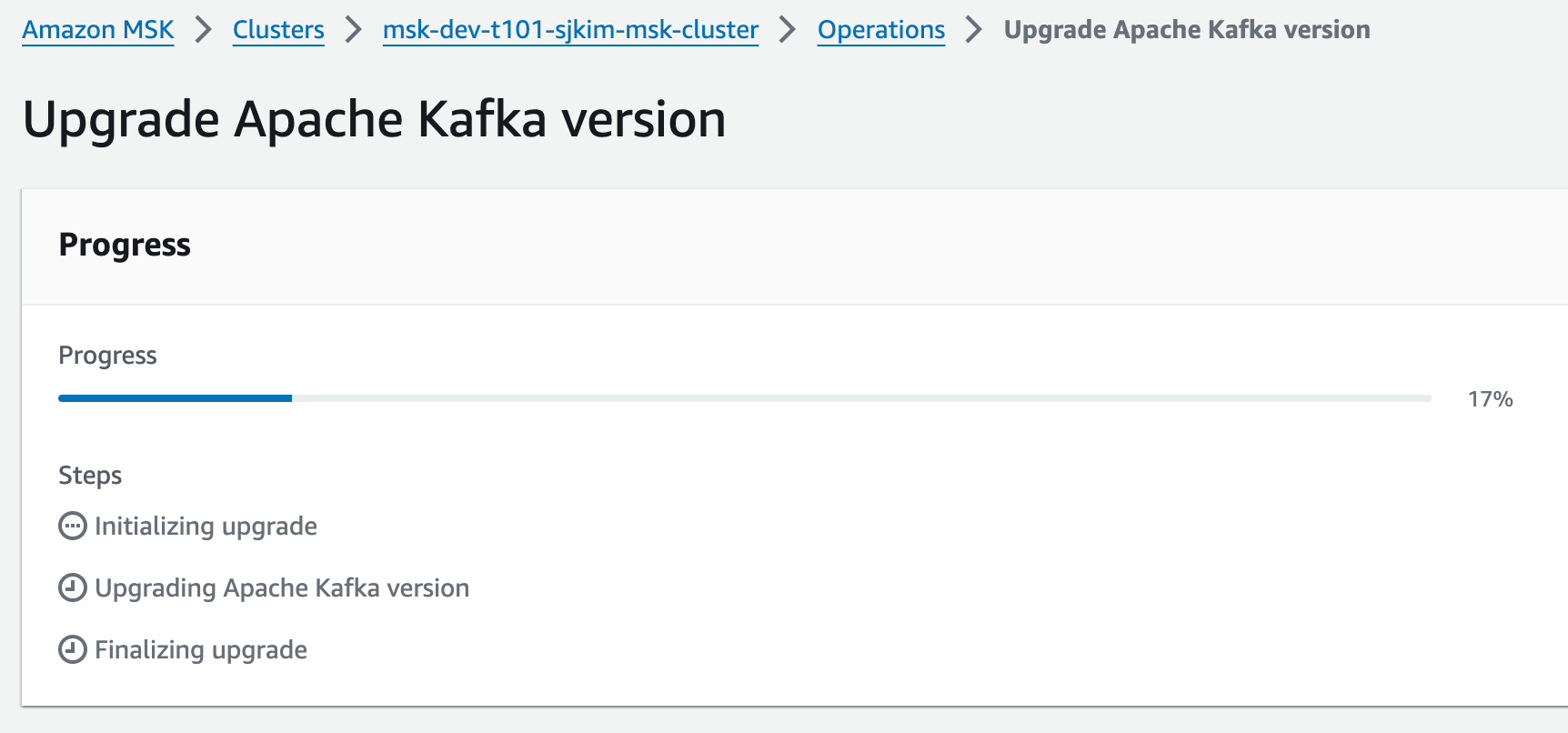

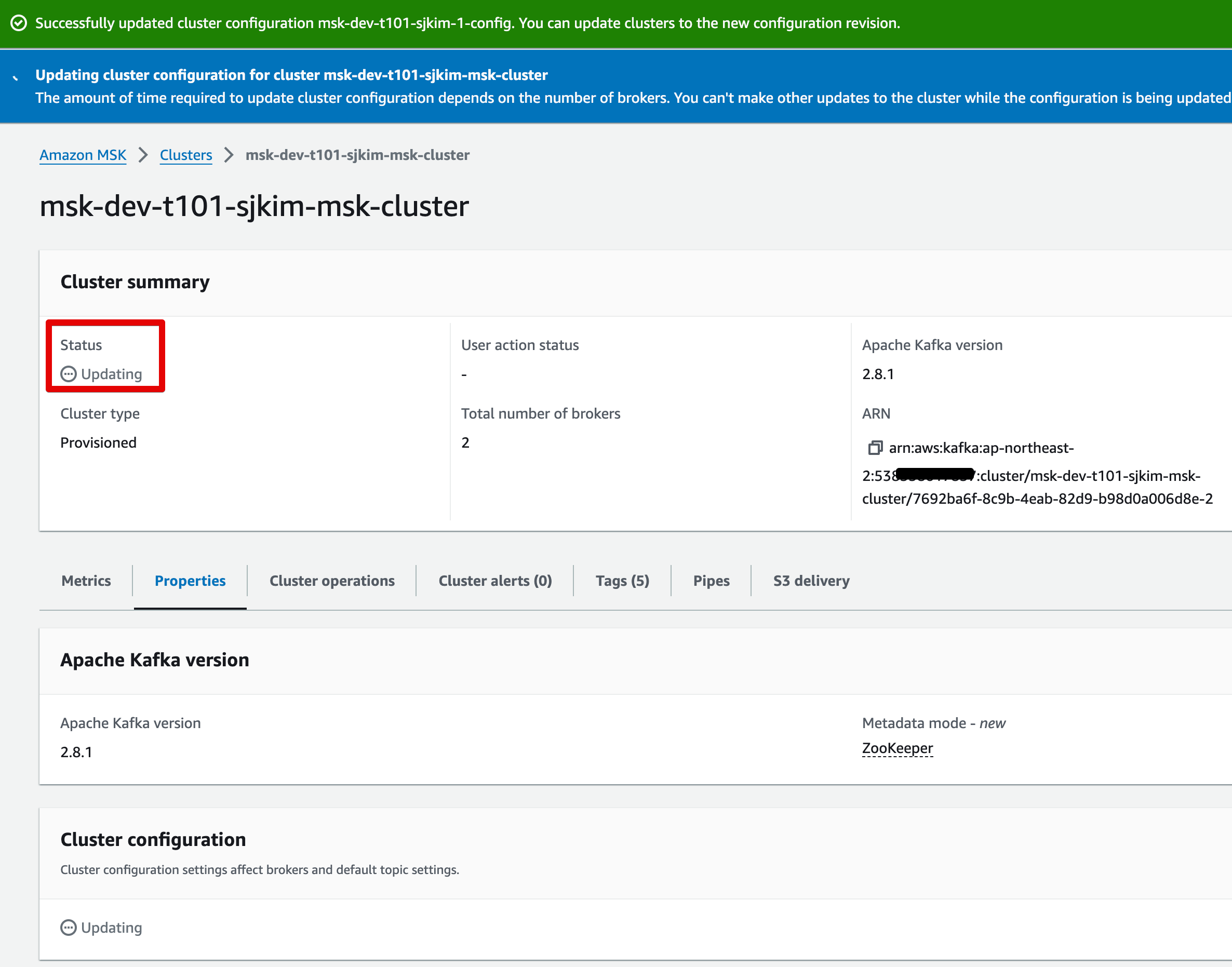

Upgrade test: MSK v2.8.1 to v3.5.1

- Change the kafka_version value in services/backing/msk.auto.tfvars

At this point, lifecycle > create_before_destroy on aws_msk_configuration is set to true.

msk_cluster = {

"msk" = {

kafka_version = "3.5.1"

number_of_broker_nodes = 2

instance_type = "kafka.t3.small"

ebs_volume_size = 10

}

}

Upgrade attempt

- $ terraform plan -out tfplan

The MSK cluster is updated in place, while msk_configuration is replaced in create-then-destroy order because of its lifecycle policy (create_before_destroy).

data.terraform_remote_state.net: Reading...

module.msk.data.aws_subnets.msk_subnets: Reading...

module.msk.data.aws_vpc.vpc: Reading...

module.msk.aws_cloudwatch_log_group.msk_log_group["msk"]: Refreshing state... [id=/aws/msk/msk-dev-t101-sejkim]

module.msk.aws_msk_configuration.msk_config: Refreshing state... [id=arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2]

data.terraform_remote_state.net: Read complete after 0s

module.msk.data.aws_subnets.msk_subnets: Read complete after 0s [id=ap-northeast-2]

module.msk.data.aws_vpc.vpc: Read complete after 0s [id=vpc-066f0659402af2745]

module.msk.aws_security_group.msk_security_group["msk"]: Refreshing state... [id=sg-04a7279dd29838bfb]

module.msk.aws_security_group_rule.msk_ingress["4.msk.9098"]: Refreshing state... [id=sgrule-3106511744]

module.msk.aws_security_group_rule.msk_ingress["0.msk.2182"]: Refreshing state... [id=sgrule-4055950905]

module.msk.aws_security_group_rule.msk_ingress["1.msk.9092"]: Refreshing state... [id=sgrule-3670809819]

module.msk.aws_security_group_rule.msk_ingress["2.msk.9094"]: Refreshing state... [id=sgrule-4220558866]

module.msk.aws_security_group_rule.msk_egress["0.msk.0"]: Refreshing state... [id=sgrule-753378928]

module.msk.aws_security_group_rule.msk_ingress["3.msk.9096"]: Refreshing state... [id=sgrule-3836010581]

module.msk.aws_msk_cluster.msk_cluster["msk"]: Refreshing state... [id=arn:aws:kafka:ap-northeast-2:538558617837:cluster/msk-dev-t101-sjkim-msk-cluster/7692ba6f-8c9b-4eab-82d9-b98d0a006d8e-2]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the

following symbols:

~ update in-place

+/- create replacement and then destroy

Terraform will perform the following actions:

# module.msk.aws_msk_cluster.msk_cluster["msk"] will be updated in-place

~ resource "aws_msk_cluster" "msk_cluster" {

id = "arn:aws:kafka:ap-northeast-2:538558617837:cluster/msk-dev-t101-sjkim-msk-cluster/7692ba6f-8c9b-4eab-82d9-b98d0a006d8e-2"

~ kafka_version = "2.8.1" -> "3.5.1"

tags = {

"Name" = "msk-dev-t101-sjkim-msk-cluster"

}

# (20 unchanged attributes hidden)

~ configuration_info {

~ arn = "arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2" -> (known after apply)

# (1 unchanged attribute hidden)

}

# (4 unchanged blocks hidden)

}

# module.msk.aws_msk_configuration.msk_config must be replaced

+/- resource "aws_msk_configuration" "msk_config" {

~ arn = "arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2" -> (known after apply)

~ id = "arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2" -> (known after apply)

~ kafka_versions = [ # forces replacement

- "2.8.1",

+ "3.5.1",

]

~ latest_revision = 1 -> (known after apply)

name = "msk-dev-t101-sjkim-1-config"

# (2 unchanged attributes hidden)

}

Plan: 1 to add, 1 to change, 1 to destroy.

────────────────────────────────────

Saved the plan to: tfplan

To perform exactly these actions, run the following command to apply:

terraform apply "tfplan"

- $ terraform apply tfplan

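module.msk.aws_msk_configuration.msk_config: Creating...

Upgrade error: changing only the MSK cluster version variable fails

The new msk_configuration has the same name as the existing one, so creation is rejected with a name conflict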

Error: creating MSK Configuration: operation error Kafka: CreateConfiguration, https response error StatusCode: 409, RequestID: 095d22bd-b945-4577-966e-408baeb68a14, ConflictException: A resource with this name already exists.

with module.msk.aws_msk_configuration.msk_config,

on ../../modules/msk/config.tf line 2, in resource "aws_msk_configuration" "msk_config":

2: resource "aws_msk_configuration" "msk_config" {
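The 409 conflict is consistent with create_before_destroy trying to create the replacement configuration while the old one, which has the same name, still exists. One possible way around this is to make the configuration name change together with the Kafka version. The following is only a sketch and has not been verified against a live cluster. The version suffix built with `replace()` is an assumption added for illustration (it mirrors the `-281` suffix that appears later in the manually created configuration); `local.default_tag` and `var.msk_cluster` come from the module as shown above.

```hcl
# Hypothetical variant of modules/msk/config.tf (not the author's final fix).
resource "aws_msk_configuration" "msk_config" {
  kafka_versions = [var.msk_cluster.msk.kafka_version]

  # Assumption: append the version without dots (e.g. "351" for 3.5.1),
  # so the replacement never reuses the name of the configuration it replaces.
  name = "msk-${local.default_tag}-1-config-${replace(var.msk_cluster.msk.kafka_version, ".", "")}"

  lifecycle {
    # Create the new configuration first, then delete the old one
    # once the cluster no longer references it.
    create_before_destroy = true
  }

  server_properties = <<-PROPERTIES
    auto.create.topics.enable = true
    default.replication.factor=2
    min.insync.replicas=1
  PROPERTIES
}
```

With a name that depends on the version, the expected order would be: create the new configuration, update the cluster to point to it, then delete the old configuration. Whether the provider performs the cluster update cleanly in this order should be confirmed with `terraform plan` in a test environment first.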

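Fix attempt 1: switching the configuration's lifecycle policy to destroy-then-create also fails

Set lifecycle > create_before_destroy on aws_msk_configuration to false and retry

The MSK cluster is still updated in place, and msk_configuration is now replaced in destroy-then-create order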
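For reference, the lifecycle change tried in this attempt would look roughly like the fragment below. It is a sketch based on the config.tf listing shown earlier, with only the lifecycle value changed.

```hcl
# modules/msk/config.tf with the lifecycle policy switched for attempt 1.
resource "aws_msk_configuration" "msk_config" {
  kafka_versions = [var.msk_cluster.msk.kafka_version]
  name           = "msk-${local.default_tag}-1-config"

  lifecycle {
    # false is also Terraform's default: destroy the old object first,
    # then create its replacement.
    create_before_destroy = false
  }

  server_properties = <<-PROPERTIES
    auto.create.topics.enable = true
    default.replication.factor=2
    min.insync.replicas=1
  PROPERTIES
}
```

Because the old configuration is deleted before the cluster is updated, the delete call is issued while the cluster still references that configuration.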

$ terraform plan -out tfplan

data.terraform_remote_state.net: Reading...

module.msk.data.aws_vpc.vpc: Reading...

module.msk.data.aws_subnets.msk_subnets: Reading...

module.msk.aws_cloudwatch_log_group.msk_log_group["msk"]: Refreshing state... [id=/aws/msk/msk-dev-t101-sejkim]

module.msk.aws_msk_configuration.msk_config: Refreshing state... [id=arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2]

data.terraform_remote_state.net: Read complete after 0s

module.msk.data.aws_subnets.msk_subnets: Read complete after 0s [id=ap-northeast-2]

module.msk.data.aws_vpc.vpc: Read complete after 1s [id=vpc-066f0659402af2745]

module.msk.aws_security_group.msk_security_group["msk"]: Refreshing state... [id=sg-04a7279dd29838bfb]

module.msk.aws_security_group_rule.msk_egress["0.msk.0"]: Refreshing state... [id=sgrule-753378928]

module.msk.aws_security_group_rule.msk_ingress["3.msk.9096"]: Refreshing state... [id=sgrule-3836010581]

module.msk.aws_security_group_rule.msk_ingress["1.msk.9092"]: Refreshing state... [id=sgrule-3670809819]

module.msk.aws_security_group_rule.msk_ingress["4.msk.9098"]: Refreshing state... [id=sgrule-3106511744]

module.msk.aws_security_group_rule.msk_ingress["2.msk.9094"]: Refreshing state... [id=sgrule-4220558866]

module.msk.aws_security_group_rule.msk_ingress["0.msk.2182"]: Refreshing state... [id=sgrule-4055950905]

module.msk.aws_msk_cluster.msk_cluster["msk"]: Refreshing state... [id=arn:aws:kafka:ap-northeast-2:538558617837:cluster/msk-dev-t101-sjkim-msk-cluster/7692ba6f-8c9b-4eab-82d9-b98d0a006d8e-2]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the

following symbols:

~ update in-place

-/+ destroy and then create replacement

Terraform will perform the following actions:

# module.msk.aws_msk_cluster.msk_cluster["msk"] will be updated in-place

~ resource "aws_msk_cluster" "msk_cluster" {

id = "arn:aws:kafka:ap-northeast-2:538558617837:cluster/msk-dev-t101-sjkim-msk-cluster/7692ba6f-8c9b-4eab-82d9-b98d0a006d8e-2"

~ kafka_version = "2.8.1" -> "3.5.1"

tags = {

"Name" = "msk-dev-t101-sjkim-msk-cluster"

}

# (20 unchanged attributes hidden)

~ configuration_info {

~ arn = "arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2" -> (known after apply)

# (1 unchanged attribute hidden)

}

# (4 unchanged blocks hidden)

}

# module.msk.aws_msk_configuration.msk_config must be replaced

-/+ resource "aws_msk_configuration" "msk_config" {

~ arn = "arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2" -> (known after apply)

~ id = "arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2" -> (known after apply)

~ kafka_versions = [ # forces replacement

- "2.8.1",

+ "3.5.1",

]

~ latest_revision = 1 -> (known after apply)

name = "msk-dev-t101-sjkim-1-config"

# (2 unchanged attributes hidden)

}

Plan: 1 to add, 1 to change, 1 to destroy.

────────────────────────────────────

Saved the plan to: tfplan

To perform exactly these actions, run the following command to apply:

terraform apply "tfplan"

The apply fails because the configuration is in use and cannot be deleted

module.msk.aws_msk_configuration.msk_config: Destroying... [id=arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2]

Error: deleting MSK Configuration (arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2): operation error Kafka: DeleteConfiguration, https response error StatusCode: 400, RequestID: 53e7ac12-567b-48f6-8db9-1ab0705a93e0, BadRequestException: Configuration is in use by one or more clusters. Dissociate the configuration from the clusters

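Fix attempt 2: fails because the configuration counts as in use regardless of revision

In resource aws_msk_cluster, change the configuration_info revision from latest_revision to the current value, 1

The apply fails again because the configuration is in use and cannot be deleted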

$ terraform apply tfplan

module.msk.aws_msk_configuration.msk_config: Destroying... [id=arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2]

Error: deleting MSK Configuration (arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2): operation error Kafka: DeleteConfiguration, https response error StatusCode: 400, RequestID: 1146cffe-01af-4bda-a96f-a0a336d34373, BadRequestException: Configuration is in use by one or more clusters. Dissociate the configuration from the clusters.
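The change tried in attempt 2 can be sketched as the fragment below. It shows only the configuration_info block inside resource "aws_msk_cluster" "msk_cluster" from cluster.tf; the rest of the resource is unchanged.

```hcl
# Fragment of modules/msk/cluster.tf as changed for attempt 2.
configuration_info {
  arn = aws_msk_configuration.msk_config.arn

  # Pinned to the revision currently in use instead of
  # aws_msk_configuration.msk_config.latest_revision.
  revision = 1
}
```

Pinning the revision does not change which configuration the cluster points to, since the ARN is the same. That matches the error above: the delete is rejected because the configuration is still attached to the cluster, whatever the revision.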

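Fix attempt 3: changing the configuration revision in the AWS console, with the create-then-destroy lifecycle policy, also fails

Manually create revision 2 of the configuration in the AWS console, switch to it, and retry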

$ terraform apply tfplan

module.msk.aws_msk_configuration.msk_config: Creating...

Error: creating MSK Configuration: operation error Kafka: CreateConfiguration, https response error StatusCode: 409, RequestID: ed60c08f-9c93-4ba9-9e12-58e6067b0424, ConflictException: A resource with this name already exists.

with module.msk.aws_msk_configuration.msk_config,

on ../../modules/msk/config.tf line 2, in resource "aws_msk_configuration" "msk_config":

2: resource "aws_msk_configuration" "msk_config" {

동일하게 에러 발생

문제해결 시도4 - AWS 콘솔에서 Configuration 신규 생성하여 변경 & LC 삭제 후 생성 정책으로 변경 후 업데이트 성공

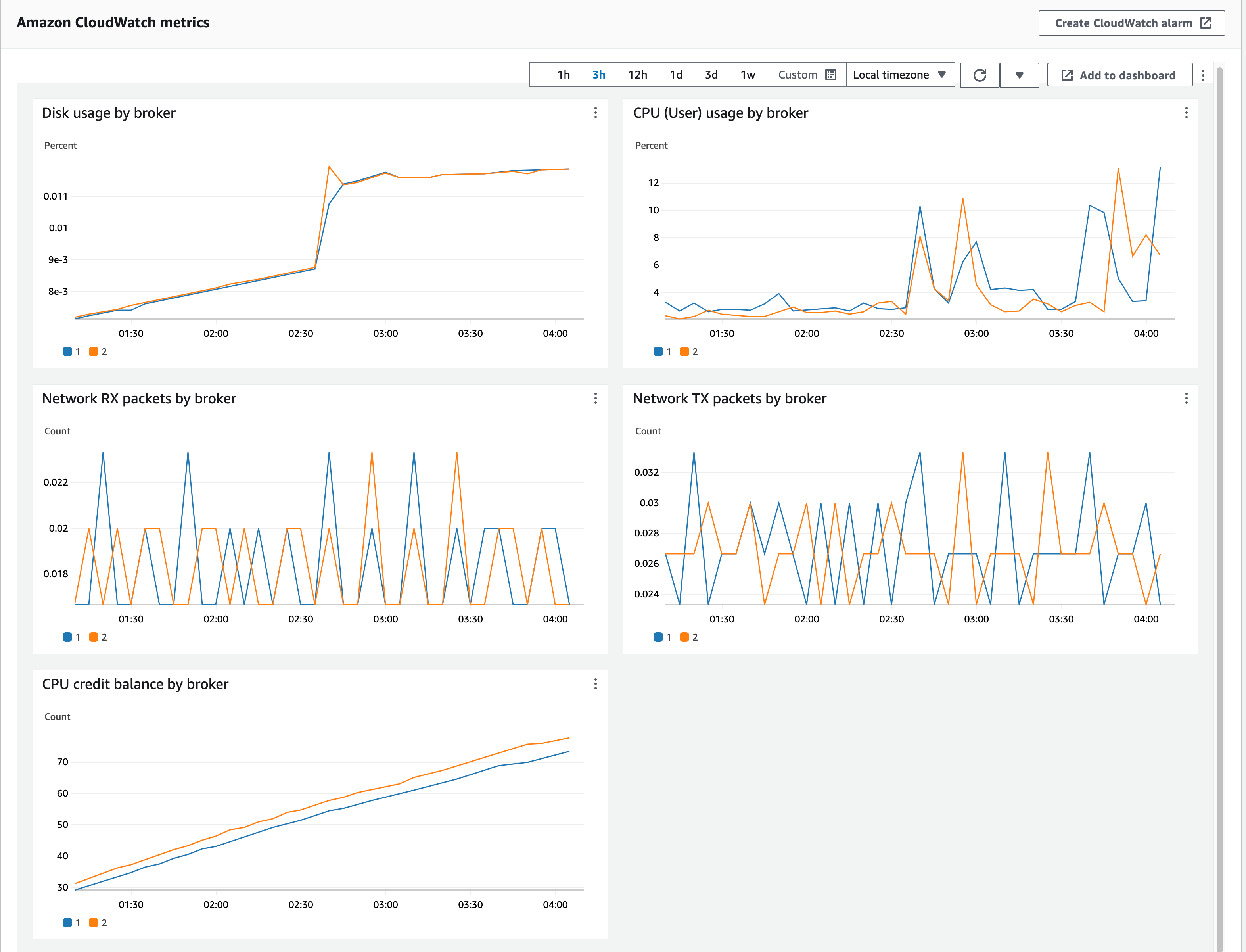

AWS 콘솔에서 수동으로 Configuration을 새로 생성 후 변경, Life Cycle을 삭제 후 생성으로 변경 후 업데이트 진행됨(1시간 6분 소요, MSK Broker Endpoint IP 변경되지 않음, 무중단으로 버전업 완료)

$ terraform plan -out tfplan

data.terraform_remote_state.net: Reading...

module.msk.data.aws_subnets.msk_subnets: Reading...

module.msk.data.aws_vpc.vpc: Reading...

module.msk.aws_msk_configuration.msk_config: Refreshing state... [id=arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2]

module.msk.aws_cloudwatch_log_group.msk_log_group["msk"]: Refreshing state... [id=/aws/msk/msk-dev-t101-sejkim]

module.msk.data.aws_subnets.msk_subnets: Read complete after 0s [id=ap-northeast-2]

module.msk.data.aws_vpc.vpc: Read complete after 0s [id=vpc-066f0659402af2745]

module.msk.aws_security_group.msk_security_group["msk"]: Refreshing state... [id=sg-04a7279dd29838bfb]

module.msk.aws_security_group_rule.msk_egress["0.msk.0"]: Refreshing state... [id=sgrule-753378928]

module.msk.aws_security_group_rule.msk_ingress["1.msk.9092"]: Refreshing state... [id=sgrule-3670809819]

module.msk.aws_security_group_rule.msk_ingress["4.msk.9098"]: Refreshing state... [id=sgrule-3106511744]

module.msk.aws_security_group_rule.msk_ingress["3.msk.9096"]: Refreshing state... [id=sgrule-3836010581]

module.msk.aws_security_group_rule.msk_ingress["2.msk.9094"]: Refreshing state... [id=sgrule-4220558866]

module.msk.aws_security_group_rule.msk_ingress["0.msk.2182"]: Refreshing state... [id=sgrule-4055950905]

module.msk.aws_msk_cluster.msk_cluster["msk"]: Refreshing state... [id=arn:aws:kafka:ap-northeast-2:538558617837:cluster/msk-dev-t101-sjkim-msk-cluster/7692ba6f-8c9b-4eab-82d9-b98d0a006d8e-2]

data.terraform_remote_state.net: Read complete after 1s

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the

following symbols:

~ update in-place

-/+ destroy and then create replacement

Terraform will perform the following actions:

# module.msk.aws_msk_cluster.msk_cluster["msk"] will be updated in-place

~ resource "aws_msk_cluster" "msk_cluster" {

id = "arn:aws:kafka:ap-northeast-2:538558617837:cluster/msk-dev-t101-sjkim-msk-cluster/7692ba6f-8c9b-4eab-82d9-b98d0a006d8e-2"

~ kafka_version = "2.8.1" -> "3.5.1"

tags = {

"Name" = "msk-dev-t101-sjkim-msk-cluster"

}

# (20 unchanged attributes hidden)

~ configuration_info {

~ arn = "arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config-281/27e062ef-53e5-40f8-a9af-ba85e6ceec59-2" -> (known after apply)

# (1 unchanged attribute hidden)

}

# (4 unchanged blocks hidden)

}

# module.msk.aws_msk_configuration.msk_config must be replaced

-/+ resource "aws_msk_configuration" "msk_config" {

~ arn = "arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2" -> (known after apply)

- description = "replica 값을 3으로 수정" -> null

~ id = "arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2" -> (known after apply)

~ kafka_versions = [ # forces replacement

- "2.8.1",

+ "3.5.1",

]

~ latest_revision = 2 -> (known after apply)

name = "msk-dev-t101-sjkim-1-config"

~ server_properties = <<-EOT

auto.create.topics.enable = true

- default.replication.factor=3

+ default.replication.factor=2

min.insync.replicas=1

EOT

}

Plan: 1 to add, 1 to change, 1 to destroy.

─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Saved the plan to: tfplan

To perform exactly these actions, run the following command to apply:

terraform apply "tfplan"

$ terraform apply tfplan

module.msk.aws_msk_configuration.msk_config: Destroying... [id=arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/f580504e-c026-40fb-ab8d-794490421365-2]

module.msk.aws_msk_configuration.msk_config: Destruction complete after 1s

module.msk.aws_msk_configuration.msk_config: Creating...

module.msk.aws_msk_configuration.msk_config: Creation complete after 0s [id=arn:aws:kafka:ap-northeast-2:538558617837:configuration/msk-dev-t101-sjkim-1-config/1e1891a1-ee50-45a1-b290-3b3e2c97117e-2]

module.msk.aws_msk_cluster.msk_cluster["msk"]: Modifying... [id=arn:aws:kafka:ap-northeast-2:538558617837:cluster/msk-dev-t101-sjkim-msk-cluster/7692ba6f-8c9b-4eab-82d9-b98d0a006d8e-2]

module.msk.aws_msk_cluster.msk_cluster["msk"]: Still modifying... [id=arn:aws:kafka:ap-northeast-2:5385586178...7692ba6f-8c9b-4eab-82d9-b98d0a006d8e-2, 10s elapsed]

...

module.msk.aws_msk_cluster.msk_cluster["msk"]: Still modifying... [id=arn:aws:kafka:ap-northeast-2:5385586178...7692ba6f-8c9b-4eab-82d9-b98d0a006d8e-2, 1h6m31s elapsed]

module.msk.aws_msk_cluster.msk_cluster["msk"]: Modifications complete after 1h6m32s [id=arn:aws:kafka:ap-northeast-2:538558617837:cluster/msk-dev-t101-sjkim-msk-cluster/7692ba6f-8c9b-4eab-82d9-b98d0a006d8e-2]

Apply complete! Resources: 1 added, 1 changed, 1 destroyed.

Outputs:

msk = {

"bootstrap_brokers_tls" = {

"msk" = "b-1.mskdevt101sjkimms.ffq40c.c2.kafka.ap-northeast-2.amazonaws.com:9094,b-2.mskdevt101sjkimms.ffq40c.c2.kafka.ap-northeast-2.amazonaws.com:9094"

}

"msk_cluster_name" = {

"msk" = "msk-dev-t101-sjkim-msk-cluster"

}

"zookeeper_connect_string" = {

"msk" = "z-1.mskdevt101sjkimms.ffq40c.c2.kafka.ap-northeast-2.amazonaws.com:2181,z-2.mskdevt101sjkimms.ffq40c.c2.kafka.ap-northeast-2.amazonaws.com:2181,z-3.mskdevt101sjkimms.ffq40c.c2.kafka.ap-northeast-2.amazonaws.com:2181"

}

}