[Oracle] 19c RAC Installation (5) - GRID Infrastructure Installation

GRID Infrastructure pre-installation tasks

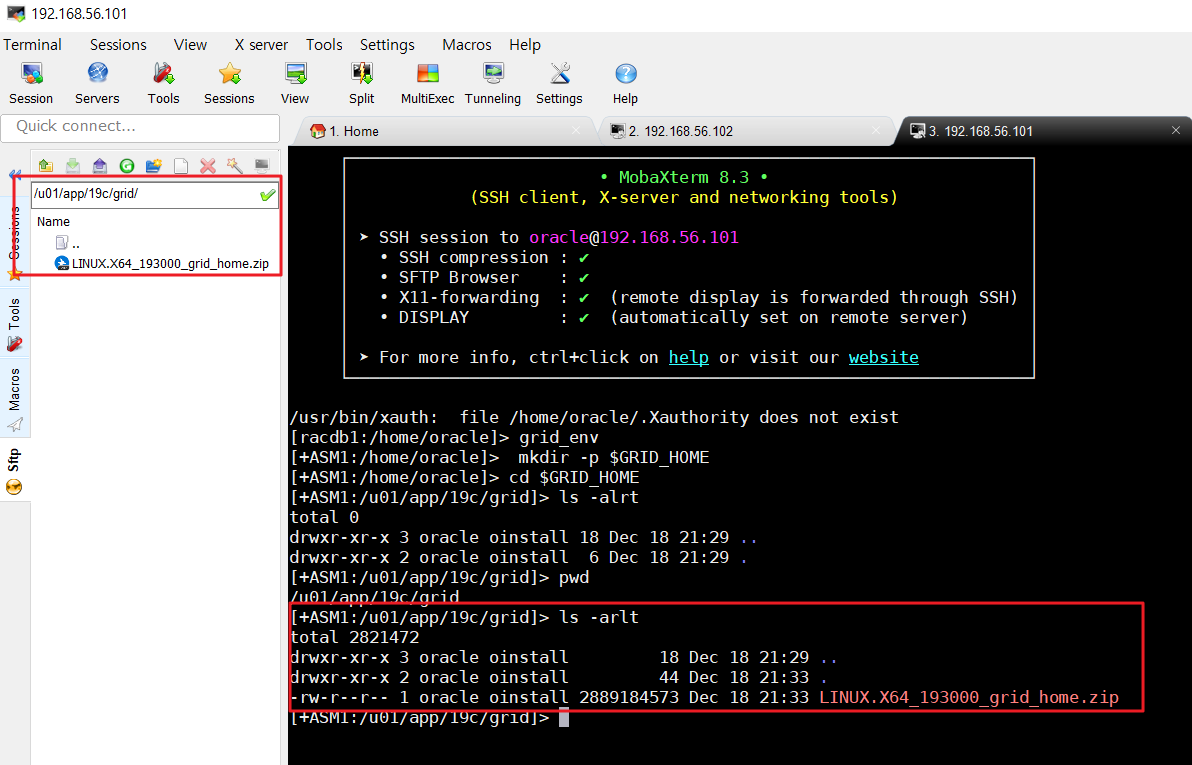

Create the GRID_HOME path

Log in to node 1 as the oracle user, then switch to the grid environment (grid_env).

Create the $GRID_HOME directory. Run this as the oracle user.

[racdb1:/home/oracle]> grid_env

[+ASM1:/home/oracle]> mkdir -p $GRID_HOME

Upload the GRID installation file

Copy the grid installation file into GRID_HOME.
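In 19c, Grid Infrastructure is image-based: the zip is extracted directly into GRID_HOME and gridSetup.sh is run from there, which is why the directory must exist before unzipping. The create and unzip steps in this section could be wrapped in a small guard like this one. It is a sketch that assumes GRID_HOME is exported by the grid_env alias from the earlier posts; `prepare_grid_home` is a hypothetical helper name.

```shell
# prepare_grid_home ZIP: hypothetical helper, not part of the Oracle software.
prepare_grid_home() {
  local zip_file="$1"
  # An empty GRID_HOME would make unzip extract into the current directory.
  if [ -z "${GRID_HOME:-}" ]; then
    echo "GRID_HOME is not set; run grid_env first" >&2
    return 1
  fi
  if [ ! -f "$zip_file" ]; then
    echo "installation file not found: $zip_file" >&2
    return 1
  fi
  mkdir -p "$GRID_HOME" || return 1
  # Image-based install: unpack straight into GRID_HOME.
  unzip -q "$zip_file" -d "$GRID_HOME"
}
```

Run it as the oracle user after grid_env, for example `prepare_grid_home /tmp/LINUX.X64_193000_grid_home.zip`, where /tmp is only an assumed upload location.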

Unzip the Grid installation file

[+ASM1:/u01/app/19c/grid]> unzip LINUX.X64_193000_grid_home.zip

Install the cvuqdisk package

If you install the package as a non-root user, rpm fails with "error: can't create transaction lock on /var/lib/rpm/.rpm.lock (Permission denied)". The error occurs because the command was not run as root, so run it as root.
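Because rpm must run as root on every node, the install, copy, and remote install steps that follow can be generated in one place. This sketch only prints the commands so they can be reviewed first; `require_root` and `cvuqdisk_install_cmds` are hypothetical helper names, and the RPM path and node name are the ones used in this post.

```shell
# require_root: fail early instead of hitting the rpm transaction lock error.
require_root() {
  if [ "$(id -u)" -ne 0 ]; then
    echo "run this as root: rpm needs write access to /var/lib/rpm" >&2
    return 1
  fi
}

# cvuqdisk_install_cmds RPM REMOTE_NODE...
# Prints the local install command, then a copy + install pair per remote node.
cvuqdisk_install_cmds() {
  local rpm_path="$1" rpm_name node
  shift
  rpm_name=$(basename "$rpm_path")
  echo "rpm -Uvh $rpm_path"
  for node in "$@"; do
    echo "scp $rpm_path root@$node:/tmp/"
    # -n stops ssh from reading stdin, so the list can be piped into sh.
    echo "ssh -n root@$node rpm -Uvh /tmp/$rpm_name"
  done
}
```

As root on rac1: `require_root && cvuqdisk_install_cmds /u01/app/19c/grid/cv/rpm/cvuqdisk-1.0.10-1.rpm rac2 | sh`. Drop the `| sh` to only review the commands; scp and ssh still ask for the rac2 root password, as in the transcript.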

[root@rac1 ~]# cd $GRID_HOME/cv/rpm/

[root@rac1 rpm]# pwd

/u01/app/19c/grid/cv/rpm

[root@rac1 rpm]# rpm -Uvh cvuqdisk-1.0.10-1.rpm

Preparing... ################################# [100%]

Using default group oinstall to install package

Updating / installing...

1:cvuqdisk-1.0.10-1 ################################# [100%]

Copy the rpm file to node 2

[root@rac1 rpm]# scp cvuqdisk-1.0.10-1.rpm rac2:/tmp

The authenticity of host 'rac2 (10.0.2.16)' can't be established.

ECDSA key fingerprint is SHA256:EK3z6HBw/h/hzU9PuLdTRTs+Nw0g/Air59rdctdyg90.

ECDSA key fingerprint is MD5:a0:51:d7:29:18:5b:84:16:0a:e5:82:c7:0b:e3:28:86.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'rac2,10.0.2.16' (ECDSA) to the list of known hosts.

root@rac2's password:

cvuqdisk-1.0.10-1.rpm 100% 11KB 11.6MB/s 00:00

SSH to node 2 and install the cvuqdisk package

Run as root.

[root@rac1 rpm]# ssh root@rac2

root@rac2's password:

Last login: Mon Dec 18 20:43:21 2023 from 192.168.56.1

[root@rac2 ~]# cd /tmp

[root@rac2 tmp]# rpm -Uvh cvuqdisk-1.0.10-1.rpm

Preparing... ################################# [100%]

Using default group oinstall to install package

Updating / installing...

1:cvuqdisk-1.0.10-1 ################################# [100%]

[root@rac2 tmp]# exit

logout

Connection to rac2 closed.

Set up passwordless SSH for the oracle user

Run as the oracle user. (When prompted, answer yes and enter the oracle password.)

grid_env

cd $GRID_HOME/oui/prov/resources/scripts

./sshUserSetup.sh -user oracle -hosts "rac1 rac2" -noPromptPassphrase -advanced
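After the script finishes, passwordless SSH can be re-checked at any time. With `BatchMode=yes`, ssh fails instead of prompting, so a node that still asks for a password (or whose host key is not yet in known_hosts) is reported as FAILED. This is a sketch: `check_ssh_equivalence` is a hypothetical helper, and the `SSH_CMD` variable exists only so the ssh command can be swapped out, for example in a test.

```shell
# check_ssh_equivalence NODE...: run `date` on each node without any prompt.
check_ssh_equivalence() {
  local node failed=0
  for node in "$@"; do
    # Unquoted on purpose so the default expands into ssh plus its options.
    if ${SSH_CMD:-ssh -o BatchMode=yes -o ConnectTimeout=5} "$node" date >/dev/null 2>&1; then
      echo "$node OK"
    else
      echo "$node FAILED"
      failed=1
    fi
  done
  return "$failed"
}
```

Run `check_ssh_equivalence rac1 rac2` as oracle on rac1 and again on rac2; the verification at the end of the sshUserSetup transcript only tests connections from rac1.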


[root@rac1 rpm]# grid_env

bash: grid_env: command not found...

[root@rac1 rpm]# pwd

/u01/app/19c/grid/cv/rpm

[root@rac1 rpm]# cd $GRID_HOME/oui/prov/resources/scripts

[root@rac1 scripts]# pwd

/u01/app/19c/grid/oui/prov/resources/scripts

[root@rac1 scripts]# su - oracle

Last login: Mon Dec 18 21:28:22 KST 2023 from 192.168.56.1 on pts/0

[racdb1:/home/oracle]> grid_env

[+ASM1:/home/oracle]> cd $GRID_HOME/oui/prov/resources/scripts

[+ASM1:/u01/app/19c/grid/oui/prov/resources/scripts]> ./sshUserSetup.sh -user oracle -hosts "rac1 rac2" -noPromptPassphrase -advanced

The output of this script is also logged into /tmp/sshUserSetup_2023-12-18-21-52-32.l

Hosts are rac1 rac2

user is oracle

Platform:- Linux

Checking if the remote hosts are reachable

PING rac1 (10.0.2.15) 56(84) bytes of data.

64 bytes from rac1 (10.0.2.15): icmp_seq=1 ttl=64 time=0.016 ms

64 bytes from rac1 (10.0.2.15): icmp_seq=2 ttl=64 time=0.028 ms

64 bytes from rac1 (10.0.2.15): icmp_seq=3 ttl=64 time=0.031 ms

64 bytes from rac1 (10.0.2.15): icmp_seq=4 ttl=64 time=0.029 ms

64 bytes from rac1 (10.0.2.15): icmp_seq=5 ttl=64 time=0.035 ms

--- rac1 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4073ms

rtt min/avg/max/mdev = 0.016/0.027/0.035/0.009 ms

PING rac2 (10.0.2.16) 56(84) bytes of data.

64 bytes from rac2 (10.0.2.16): icmp_seq=1 ttl=64 time=0.253 ms

64 bytes from rac2 (10.0.2.16): icmp_seq=2 ttl=64 time=0.227 ms

64 bytes from rac2 (10.0.2.16): icmp_seq=3 ttl=64 time=0.253 ms

64 bytes from rac2 (10.0.2.16): icmp_seq=4 ttl=64 time=0.265 ms

64 bytes from rac2 (10.0.2.16): icmp_seq=5 ttl=64 time=0.244 ms

--- rac2 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4243ms

rtt min/avg/max/mdev = 0.227/0.248/0.265/0.018 ms

Remote host reachability check succeeded.

The following hosts are reachable: rac1 rac2.

The following hosts are not reachable: .

All hosts are reachable. Proceeding further...

firsthost rac1

numhosts 2

The script will setup SSH connectivity from the host rac1 to all

the remote hosts. After the script is executed, the user can use SSH to run

commands on the remote hosts or copy files between this host rac1

and the remote hosts without being prompted for passwords or confirmations.

NOTE 1:

As part of the setup procedure, this script will use ssh and scp to copy

files between the local host and the remote hosts. Since the script does not

store passwords, you may be prompted for the passwords during the execution of

the script whenever ssh or scp is invoked.

NOTE 2:

AS PER SSH REQUIREMENTS, THIS SCRIPT WILL SECURE THE USER HOME DIRECTORY

AND THE .ssh DIRECTORY BY REVOKING GROUP AND WORLD WRITE PRIVILEGES TO THESE

directories.

Do you want to continue and let the script make the above mentioned changes (yes/no)?

yes

The user chose yes

User chose to skip passphrase related questions.

Creating .ssh directory on local host, if not present already

Creating authorized_keys file on local host

Changing permissions on authorized_keys to 644 on local host

Creating known_hosts file on local host

Changing permissions on known_hosts to 644 on local host

Creating config file on local host

If a config file exists already at /home/oracle/.ssh/config, it would be backed up toonfig.backup.

Removing old private/public keys on local host

Running SSH keygen on local host with empty passphrase

Generating public/private rsa key pair.

Your identification has been saved in /home/oracle/.ssh/id_rsa.

Your public key has been saved in /home/oracle/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:kT/wAbmC+2DNOsgHEYiXPv0rj8hqhT7iOmrakxd16Dc oracle@rac1

The key's randomart image is:

+---[RSA 1024]----+

|.. . .. |

|o + .o |

| o o . .+.. |

| + o + o= . |

| .o B oS + |

| ...= = E . |

|...= = o . |

|oB=.*.o |

|&+=+.+. |

+----[SHA256]-----+

Creating .ssh directory and setting permissions on remote host rac1

THE SCRIPT WOULD ALSO BE REVOKING WRITE PERMISSIONS FOR group AND others ON THE HOME . THIS IS AN SSH REQUIREMENT.

The script would create ~oracle/.ssh/config file on remote host rac1. If a config fil~oracle/.ssh/config, it would be backed up to ~oracle/.ssh/config.backup.

The user may be prompted for a password here since the script would be running SSH on

Warning: Permanently added 'rac1,10.0.2.15' (ECDSA) to the list of known hosts.

oracle@rac1's password:

Done with creating .ssh directory and setting permissions on remote host rac1.

Creating .ssh directory and setting permissions on remote host rac2

THE SCRIPT WOULD ALSO BE REVOKING WRITE PERMISSIONS FOR group AND others ON THE HOME . THIS IS AN SSH REQUIREMENT.

The script would create ~oracle/.ssh/config file on remote host rac2. If a config fil~oracle/.ssh/config, it would be backed up to ~oracle/.ssh/config.backup.

The user may be prompted for a password here since the script would be running SSH on

Warning: Permanently added 'rac2,10.0.2.16' (ECDSA) to the list of known hosts.

oracle@rac2's password:

Done with creating .ssh directory and setting permissions on remote host rac2.

Copying local host public key to the remote host rac1

The user may be prompted for a password or passphrase here since the script would be ac1.

oracle@rac1's password:

Done copying local host public key to the remote host rac1

Copying local host public key to the remote host rac2

The user may be prompted for a password or passphrase here since the script would be ac2.

oracle@rac2's password:

Done copying local host public key to the remote host rac2

Creating keys on remote host rac1 if they do not exist already. This is required to s1.

Creating keys on remote host rac2 if they do not exist already. This is required to s2.

Generating public/private rsa key pair.

Your identification has been saved in .ssh/id_rsa.

Your public key has been saved in .ssh/id_rsa.pub.

The key fingerprint is:

SHA256:5hQtpGxz2H0HS2/btYG424l4PiUkRtkK7CEvqRD+6W0 oracle@rac2

The key's randomart image is:

+---[RSA 1024]----+

| . . o o |

| . o O = o.+. |

|. . @ O +.o.+..|

| o + = * o.o o+|

| o o . S o. ...|

| + + ..+.. |

| . . .. +oo |

| . E o. |

| . .. |

+----[SHA256]-----+

Updating authorized_keys file on remote host rac1

Updating known_hosts file on remote host rac1

Updating authorized_keys file on remote host rac2

Updating known_hosts file on remote host rac2

cat: /home/oracle/.ssh/known_hosts.tmp: No such file or directory

cat: /home/oracle/.ssh/authorized_keys.tmp: No such file or directory

SSH setup is complete.

------------------------------------------------------------------------

Verifying SSH setup

===================

The script will now run the date command on the remote nodes using ssh

to verify if ssh is setup correctly. IF THE SETUP IS CORRECTLY SETUP,

THERE SHOULD BE NO OUTPUT OTHER THAN THE DATE AND SSH SHOULD NOT ASK FOR

PASSWORDS. If you see any output other than date or are prompted for the

password, ssh is not setup correctly and you will need to resolve the

issue and set up ssh again.

The possible causes for failure could be:

1. The server settings in /etc/ssh/sshd_config file do not allow ssh

for user oracle.

2. The server may have disabled public key based authentication.

3. The client public key on the server may be outdated.

4. ~oracle or ~oracle/.ssh on the remote host may not be owned by oracle.

5. User may not have passed -shared option for shared remote users or

may be passing the -shared option for non-shared remote users.

6. If there is output in addition to the date, but no password is asked,

it may be a security alert shown as part of company policy. Append the

additional text to the <OMS HOME>/sysman/prov/resources/ignoreMessages.txt file.

------------------------------------------------------------------------

--rac1:--

Running /usr/bin/ssh -x -l oracle rac1 date to verify SSH connectivity has been setuprac1.

IF YOU SEE ANY OTHER OUTPUT BESIDES THE OUTPUT OF THE DATE COMMAND OR IF YOU ARE PROMHERE, IT MEANS SSH SETUP HAS NOT BEEN SUCCESSFUL. Please note that being prompted for OK but being prompted for a password is ERROR.

Mon Dec 18 21:53:02 KST 2023

------------------------------------------------------------------------

--rac2:--

Running /usr/bin/ssh -x -l oracle rac2 date to verify SSH connectivity has been setuprac2.

IF YOU SEE ANY OTHER OUTPUT BESIDES THE OUTPUT OF THE DATE COMMAND OR IF YOU ARE PROMHERE, IT MEANS SSH SETUP HAS NOT BEEN SUCCESSFUL. Please note that being prompted for OK but being prompted for a password is ERROR.

Mon Dec 18 21:45:26 KST 2023

------------------------------------------------------------------------

------------------------------------------------------------------------

Verifying SSH connectivity has been setup from rac1 to rac1

IF YOU SEE ANY OTHER OUTPUT BESIDES THE OUTPUT OF THE DATE COMMAND OR IF YOU ARE PROMHERE, IT MEANS SSH SETUP HAS NOT BEEN SUCCESSFUL.

Mon Dec 18 21:53:02 KST 2023

------------------------------------------------------------------------

------------------------------------------------------------------------

Verifying SSH connectivity has been setup from rac1 to rac2

IF YOU SEE ANY OTHER OUTPUT BESIDES THE OUTPUT OF THE DATE COMMAND OR IF YOU ARE PROMHERE, IT MEANS SSH SETUP HAS NOT BEEN SUCCESSFUL.

Mon Dec 18 21:45:26 KST 2023

------------------------------------------------------------------------

-Verification from complete-

SSH verification complete.

[+ASM1:/u01/app/19c/grid/oui/prov/resources/scripts]>

Pre-verify the cluster environment

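Run the pre-installation verification script (it checks kernel parameters, packages, and so on).

The Swap Size check reports a failure; this is caused by insufficient swap space. (Fixing it requires setting up the partitions during the initial Linux installation.)

Since this is a test installation, we will ignore this failure later during the Grid installation and proceed.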
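To see how far short the swap space is, SwapTotal in /proc/meminfo can be compared with the recommended size for the installed RAM. The sizing table in this sketch is an assumption taken from Oracle's Linux installation guides (1.5 x RAM up to 2 GB, equal to RAM up to 16 GB, 16 GB above that); confirm it against the guide for your exact release. `required_swap_kb` and `check_swap` are hypothetical helper names.

```shell
# required_swap_kb RAM_KB: recommended swap in KB (assumed sizing table).
required_swap_kb() {
  local ram_kb="$1"
  if [ "$ram_kb" -le 2097152 ]; then        # up to 2 GB
    echo $(( ram_kb * 3 / 2 ))
  elif [ "$ram_kb" -le 16777216 ]; then     # up to 16 GB
    echo "$ram_kb"
  else
    echo 16777216                           # capped at 16 GB
  fi
}

# check_swap [MEMINFO]: compare SwapTotal with the recommendation.
check_swap() {
  local meminfo="${1:-/proc/meminfo}" ram swap need
  ram=$(awk '/^MemTotal:/ {print $2}' "$meminfo")
  swap=$(awk '/^SwapTotal:/ {print $2}' "$meminfo")
  need=$(required_swap_kb "$ram")
  echo "RAM=${ram}KB swap=${swap}KB required=${need}KB"
  [ "$swap" -ge "$need" ]
}
```

Called without an argument, `check_swap` reads /proc/meminfo on the current node and returns non-zero when swap is too small.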

[+ASM1:/home/oracle]> cd $GRID_HOME

[+ASM1:/u01/app/19c/grid]> ./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -osdba dba -orainv oinstall -fixup -method root -networks enp0s3/enp0s8 -verbose

Enter "ROOT" password:

.

.

Verifying Physical Memory ...FAILED

rac2: PRVF-7530 : Sufficient physical memory is not available on node "rac2"

[Required physical memory = 8GB (8388608.0KB)]

rac1: PRVF-7530 : Sufficient physical memory is not available on node "rac1"

[Required physical memory = 8GB (8388608.0KB)]

.

.

Fix: Group Existence: asmadmin

Node Name Status

------------------------------------ ------------------------

rac2 successful

rac1 successful

Result:

"Group Existence: asmadmin" was successfully fixed on all the applicable nodes

Fix up operations were successfully completed on all the applicable nodes

Grid Infrastructure installation

Start the GRID installation. (Logging in as root and then switching with su - oracle can cause errors, so log in directly as the oracle user and run the grid installation.)

grid_env

cd $GRID_HOME

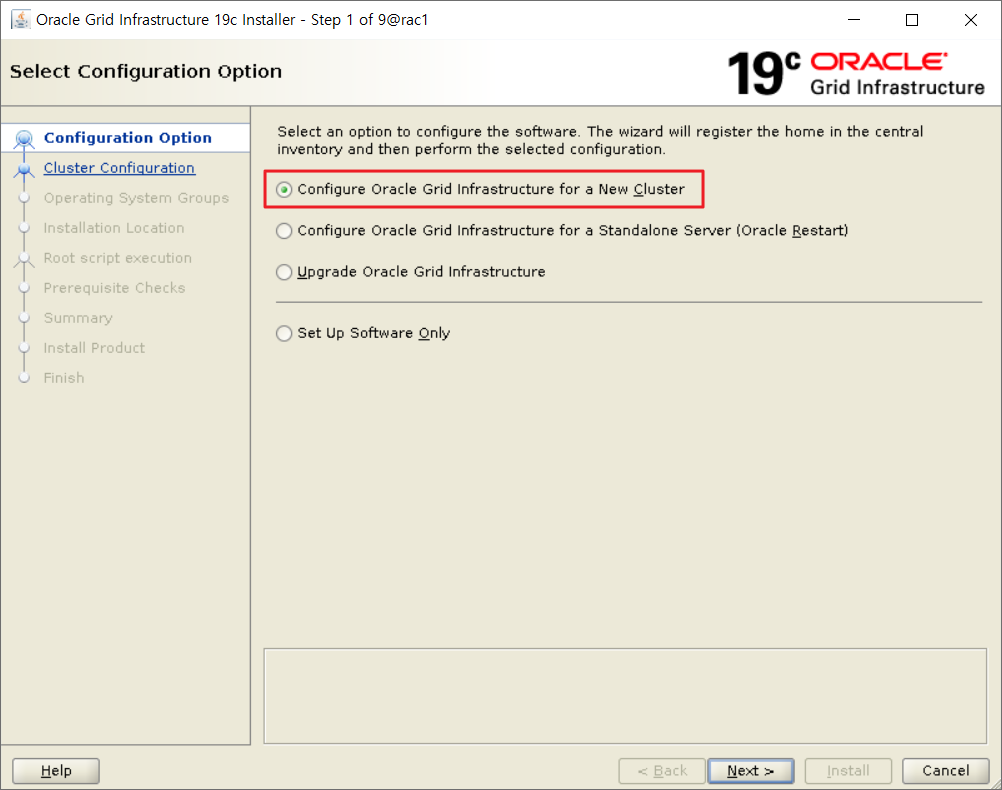

./gridSetup.sh

Select the configuration option

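- Configure Oracle Grid Infrastructure for a New Cluster (for RAC)

- Configure Oracle Grid Infrastructure for a Standalone Server (to configure Oracle ASM on a single-instance server)

- Upgrade Oracle Grid Infrastructure (for upgrading the Grid version; in practice, Grid is usually removed and reinstalled instead)

- Set Up Software Only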

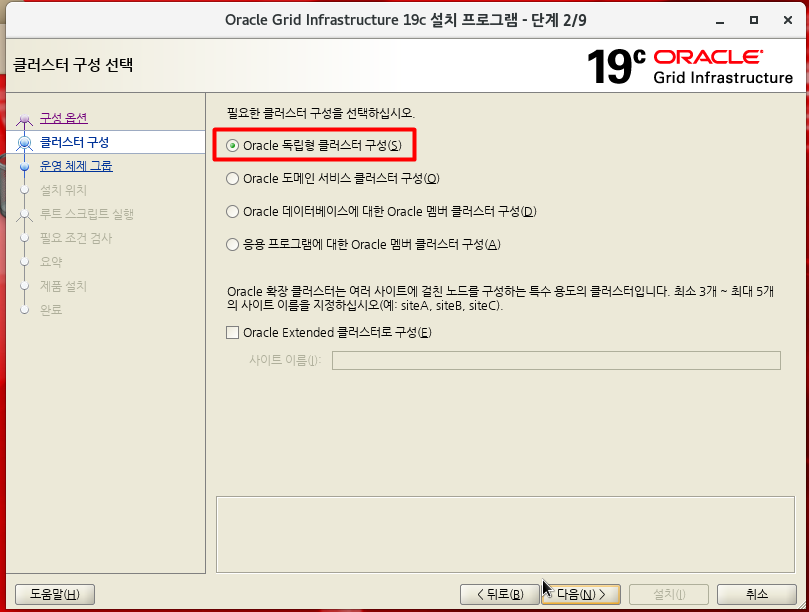

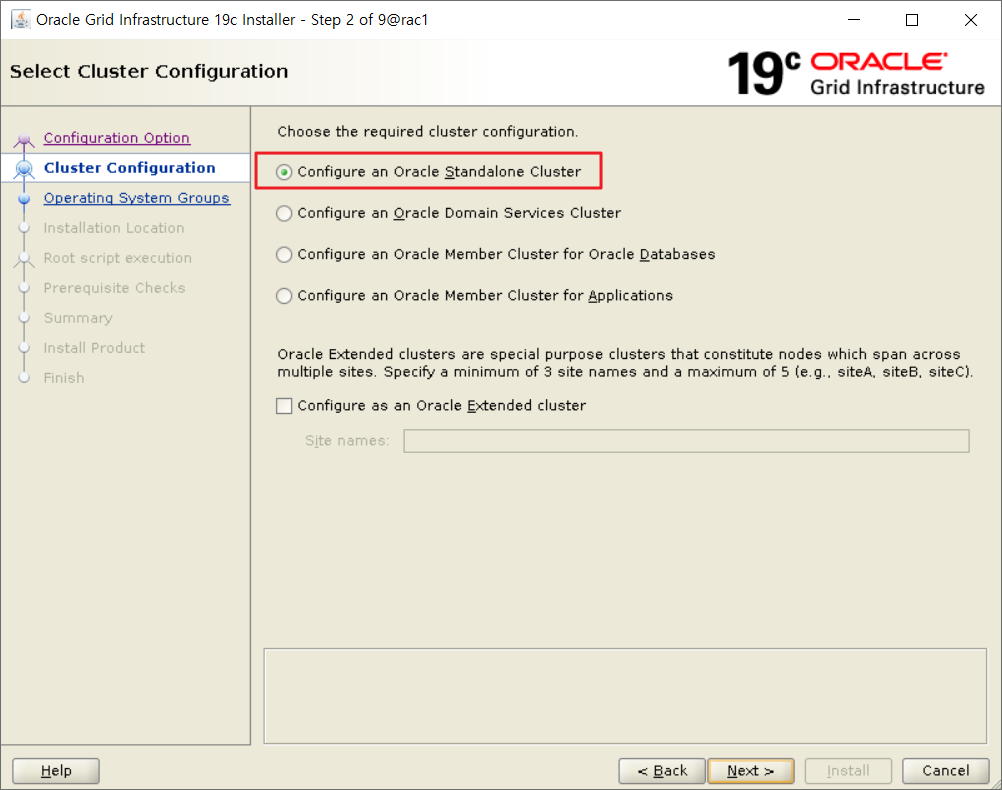

Select the cluster configuration

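Documentation on cluster types

Select the first option (Oracle Standalone Cluster). Terminology:

- An Oracle Standalone Cluster hosts all Oracle Grid Infrastructure services and Oracle ASM locally and requires direct access to shared storage.

- Oracle Cluster Domain is a deployment architecture for new clusters introduced in 12c Release 2. It lets you standardize, centralize, and optimize Oracle Real Application Clusters (Oracle RAC) deployments for a private database cloud. Multiple cluster configurations are grouped under an Oracle Cluster Domain for management purposes and use the shared services available within that Oracle Cluster Domain.

- An Oracle Member Cluster uses the centralized services of an Oracle Domain Services Cluster and can host databases or applications.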

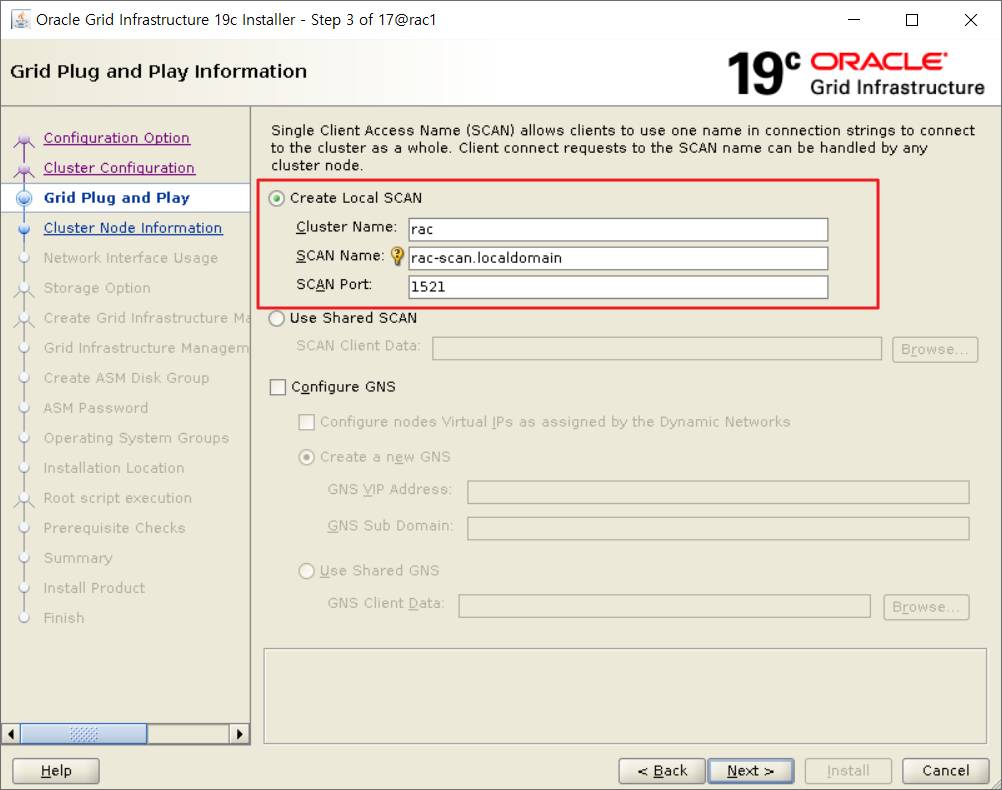

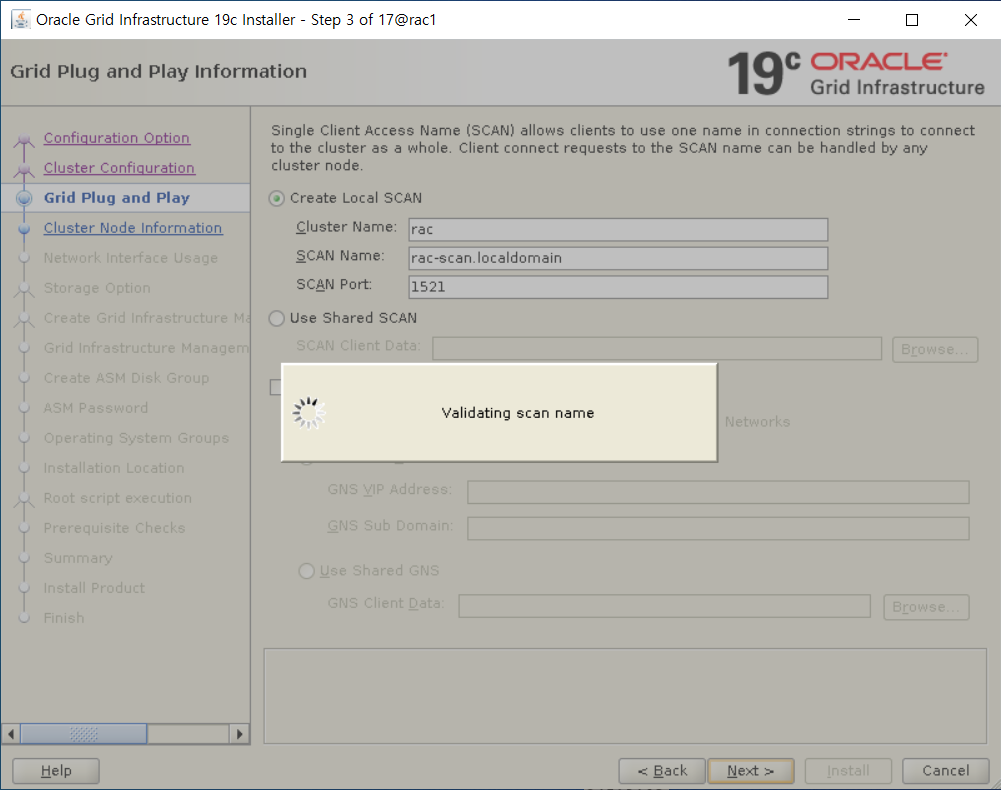

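Enter the cluster configuration information

Enter the cluster name, SCAN name, and SCAN port number.

For the SCAN name, enter the name defined in /etc/hosts, including the domain, exactly as tested with nslookup.

The cluster name can be anything you choose. The SCAN fields below it must match your configuration, or the installer will not let you proceed.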
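Before typing the SCAN name into the installer, it helps to confirm the name resolves the same way on every node. This sketch counts the distinct IPv4 addresses returned by `getent ahostsv4`, which goes through /etc/hosts and DNS as configured in nsswitch.conf (nslookup, by contrast, only queries DNS). The crsctl output at the end of this post shows three SCAN VIPs, so a count of 3 would be expected here. `count_resolved_ips` and `check_scan_name` are hypothetical helpers, and `rac-scan.localdomain` is a placeholder for your own SCAN name.

```shell
# count_resolved_ips: count distinct addresses in `getent ahostsv4` output,
# whose lines look like "10.0.2.21  STREAM  name".
count_resolved_ips() {
  awk '{print $1}' | sort -u | awk 'END {print NR}'
}

# check_scan_name NAME: report how many IPv4 addresses NAME resolves to.
check_scan_name() {
  local name="$1" n
  n=$(getent ahostsv4 "$name" | count_resolved_ips)
  echo "$name resolves to $n address(es)"
  [ "$n" -ge 1 ]
}
```

Run `check_scan_name rac-scan.localdomain` on rac1 and rac2; both nodes should report the same count.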

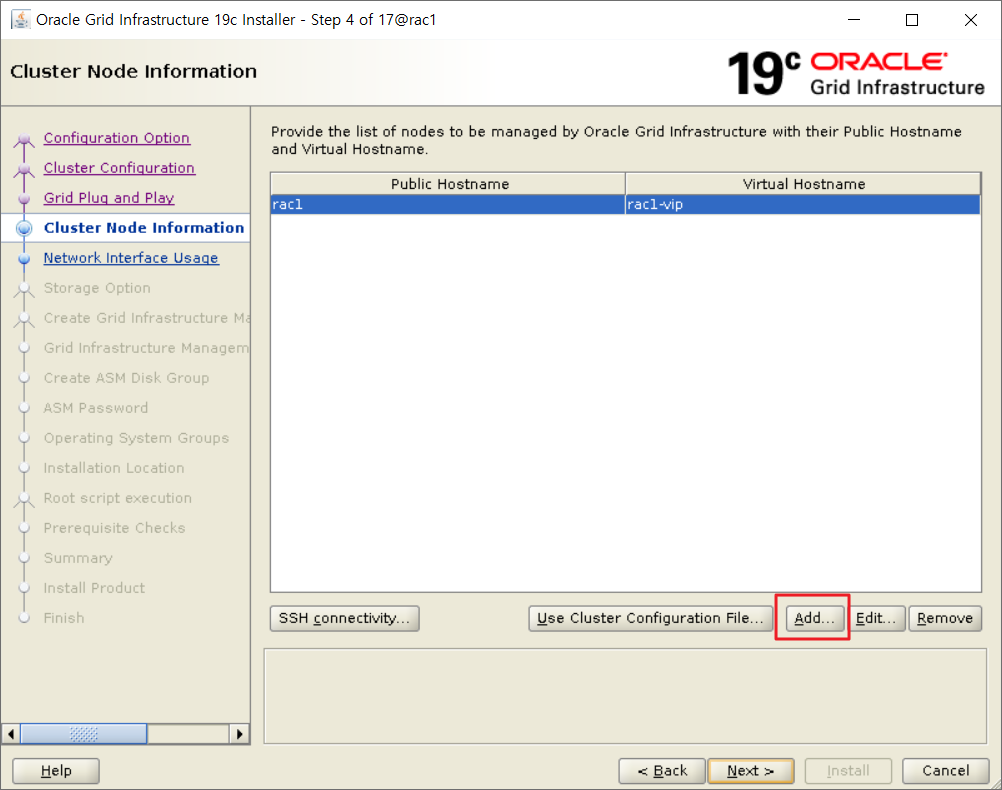

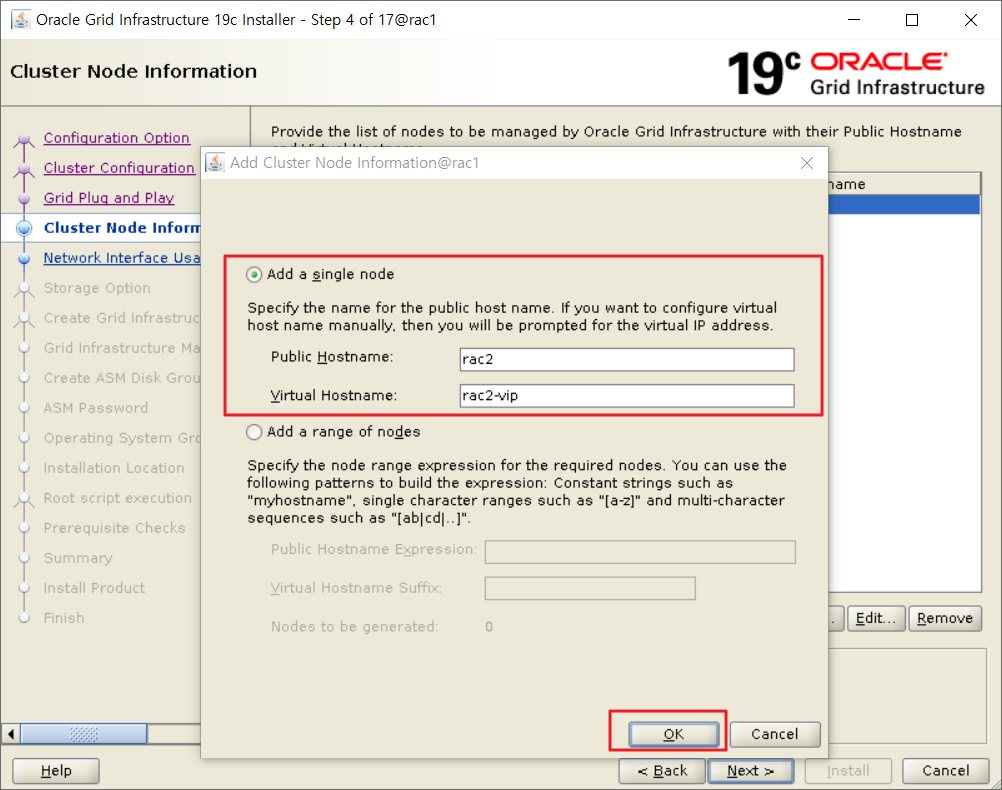

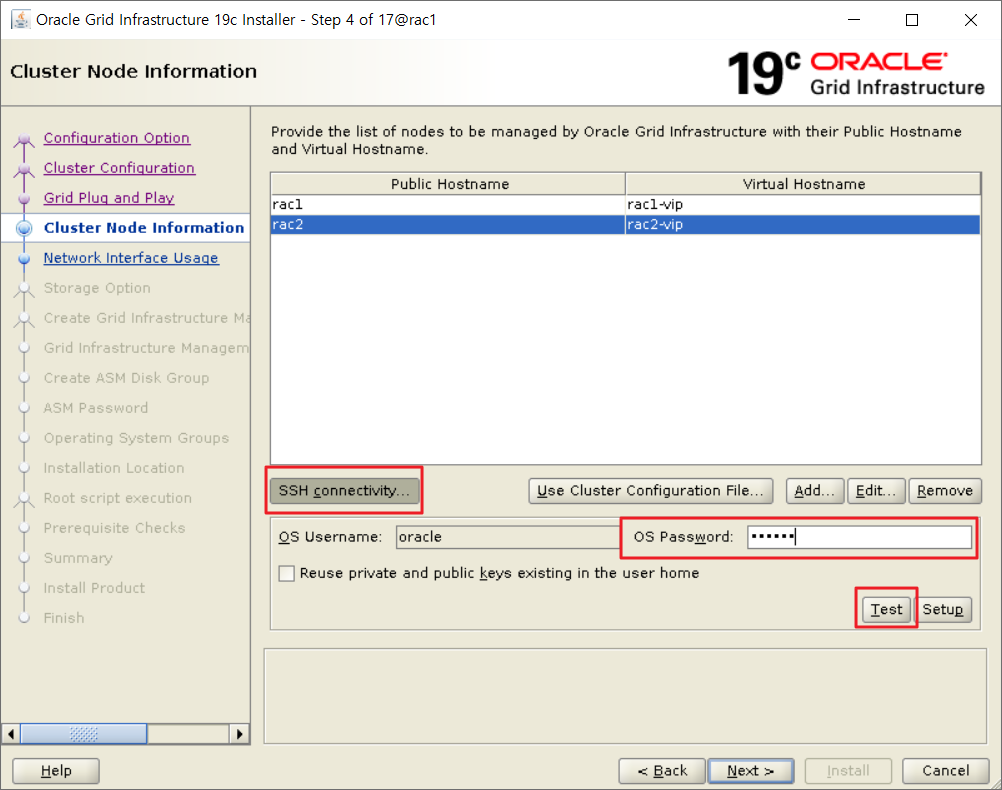

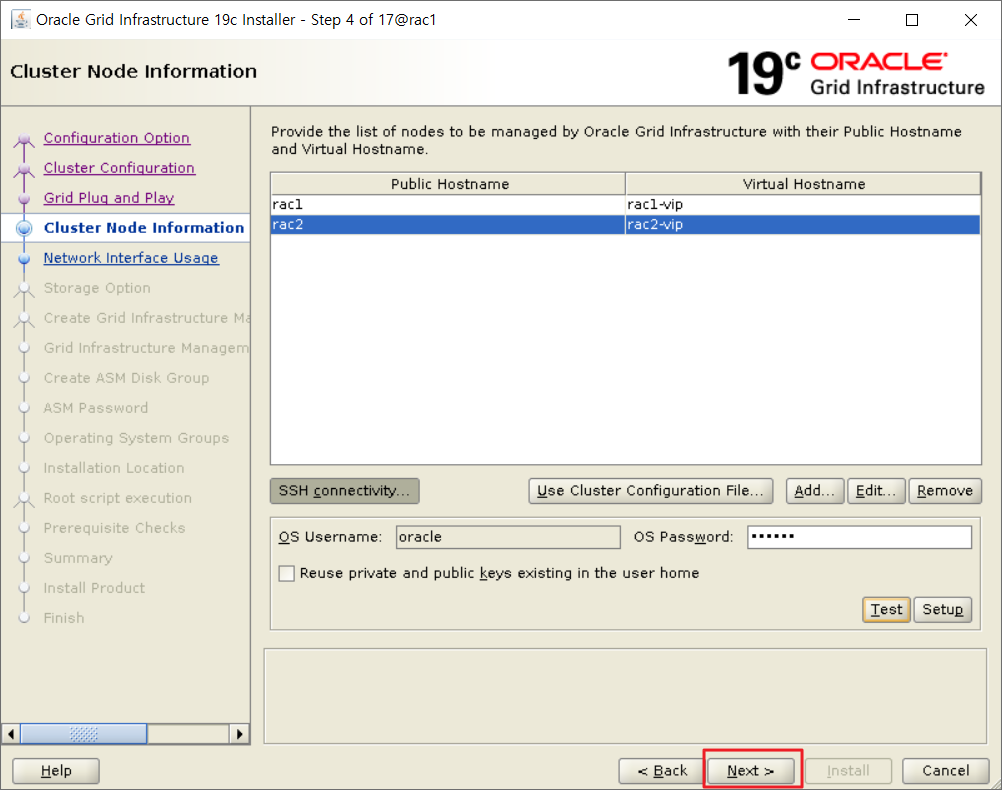

Configure the cluster node information

Click Add.

Since we are adding only one node, keep Add a single node selected, enter the Public Hostname and Virtual Hostname of node 2, and click OK.

✅ To configure passwordless SSH between the cluster nodes, click the SSH connectivity button.

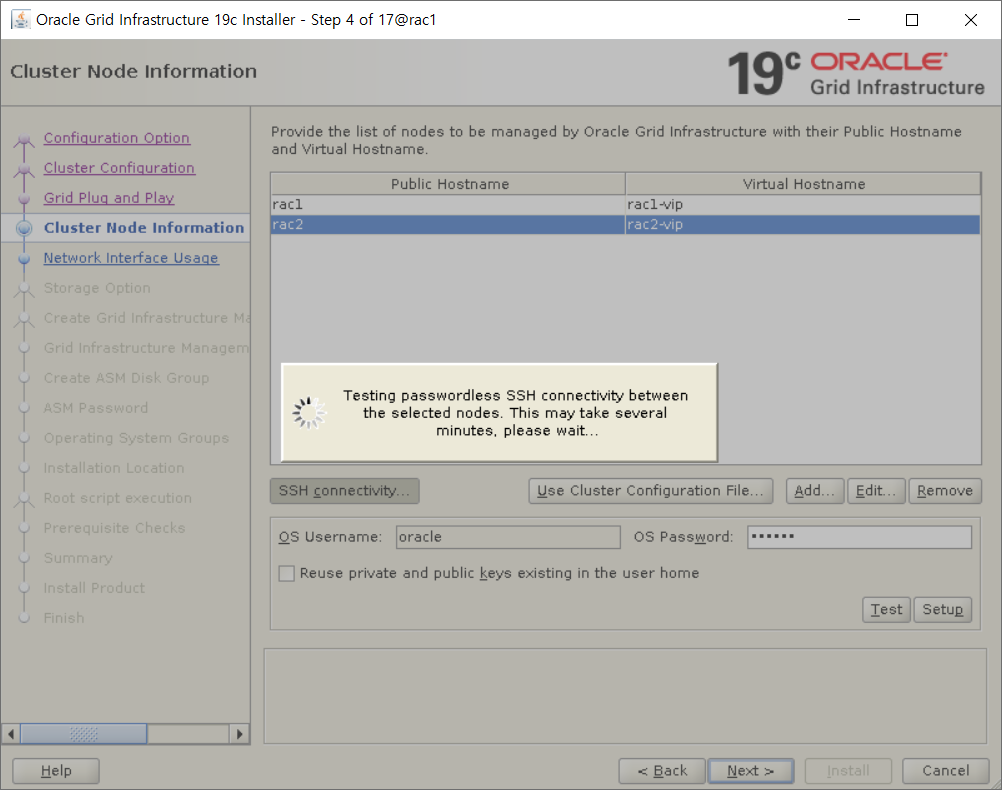

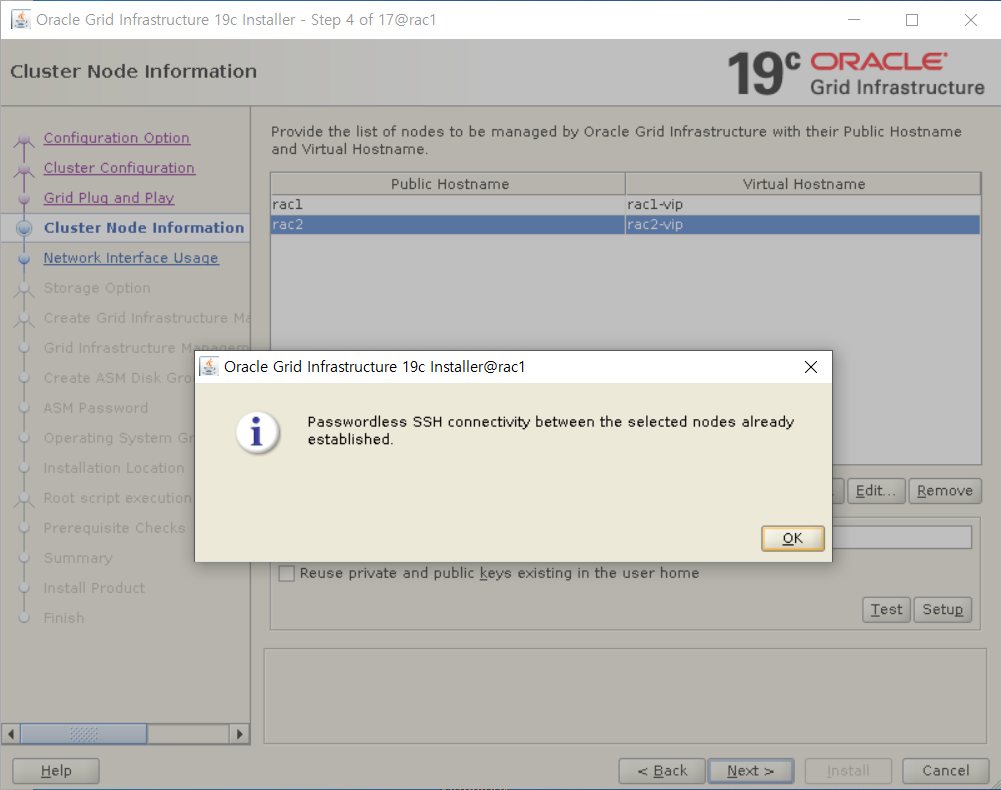

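✅ If passwordless SSH for the oracle user is already configured, enter the oracle user's password and click Test.

✅ If passwordless SSH for the oracle user is not configured, enter the oracle user's OS password and click Setup; this completes the passwordless SSH setup between the nodes.

This is required because Grid and Oracle must transfer files between the nodes without being prompted for a password.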

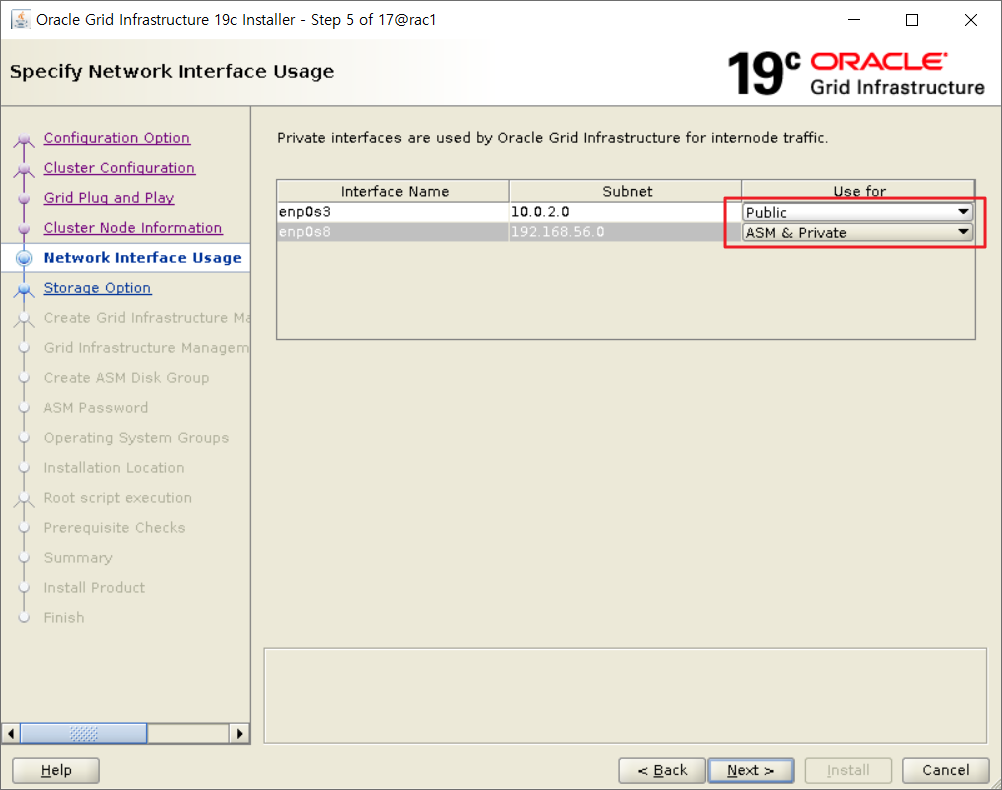

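Specify network interface usage

Check the interfaces with ifconfig. (This setting is for Flex ASM.)

✅ This step checks the network interfaces of the nodes.

✅ The Public interface is used for external communication through the public IP and VIP, while the ASM & Private interface is used only for ASM and for communication between the RAC nodes.

✅ The interface names and subnets must be the same on every node, for both the Public and the Private networks.

✅ When the settings are complete, click Next.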
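Because the installer requires the same interface names and subnets on every node, it can be worth comparing them before this screen. This sketch turns `ip -o -4 addr show` output into sorted "interface network" pairs (loopback excluded) so the lists from two nodes can be diffed. `cidr_network` and `iface_subnets` are hypothetical helpers; ifconfig shows the same information in a less script-friendly layout.

```shell
# cidr_network ADDR/PREFIX: network address, e.g. 10.0.2.15/24 -> 10.0.2.0/24
cidr_network() {
  local ip prefix a b c d n mask
  ip=${1%/*}
  prefix=${1#*/}
  a=${ip%%.*}; ip=${ip#*.}
  b=${ip%%.*}; ip=${ip#*.}
  c=${ip%%.*}; d=${ip#*.}
  n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  n=$(( n & mask ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))/$prefix"
}

# iface_subnets: read `ip -o -4 addr show` output on stdin and print
# unique "interface network" pairs, skipping the loopback interface.
iface_subnets() {
  local _idx iface _family addr _rest
  while read -r _idx iface _family addr _rest; do
    [ "$iface" = "lo" ] && continue
    echo "$iface $(cidr_network "$addr")"
  done | sort -u
}
```

`diff <(ssh rac1 ip -o -4 addr show | iface_subnets) <(ssh rac2 ip -o -4 addr show | iface_subnets)` prints nothing when the nodes match (process substitution requires bash). Duplicate pairs are dropped, so extra addresses on the same subnet, such as VIPs after installation, do not show up as differences.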

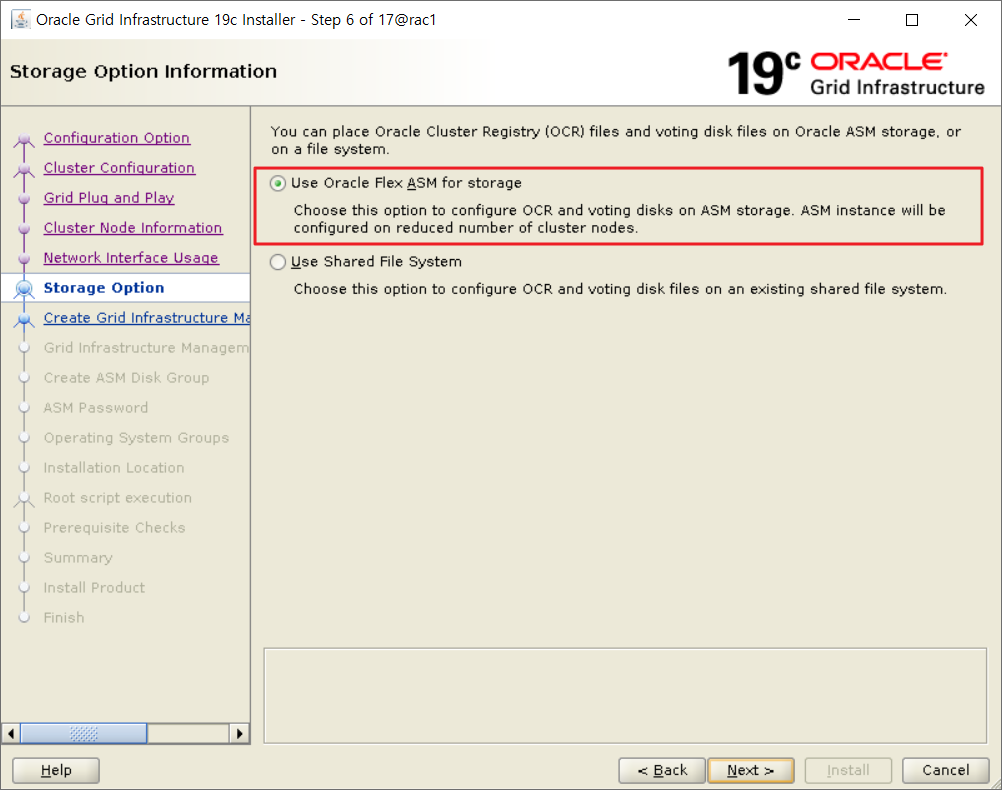

Select the storage option.

Choose whether to use ASM or a shared file system. Since we are on Linux machines and will place the OCR and voting disks in ASM, select Use Oracle Flex ASM for storage and click Next.

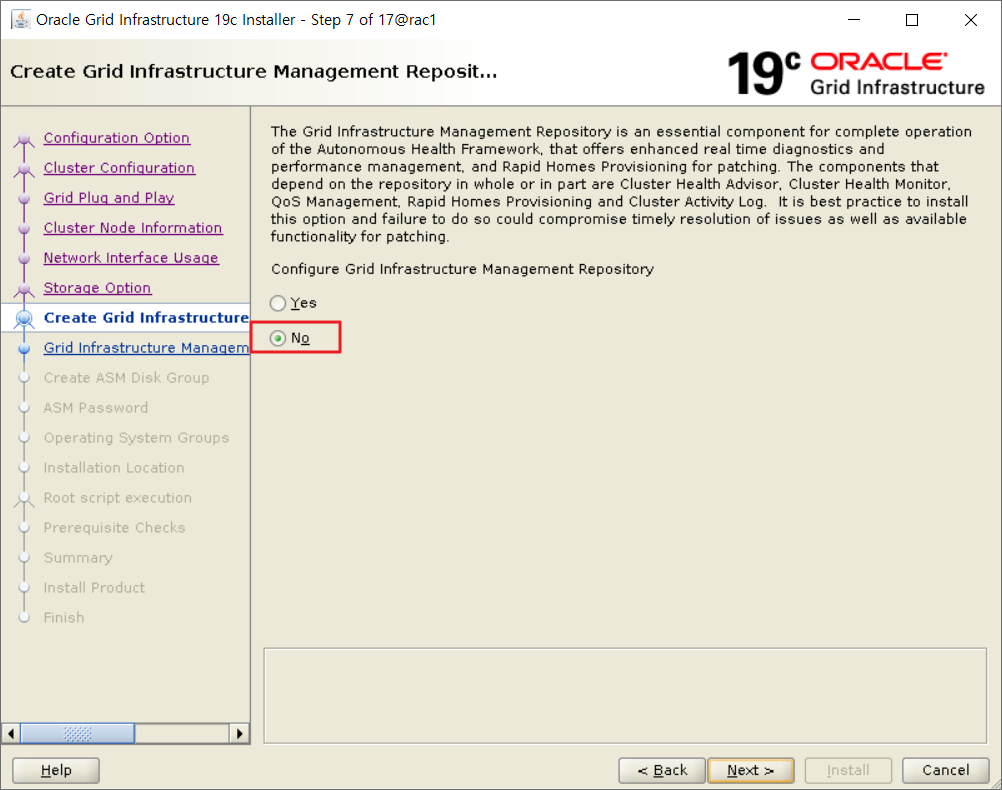

Select the Grid Infrastructure Management Repository (GIMR) option.

An Oracle Standalone Cluster hosts the GIMR (Grid Infrastructure Management Repository) locally. The GIMR is a multitenant database that stores information about the cluster, including real-time performance data collected by Cluster Health Monitor and the metadata required by Rapid Home Provisioning.

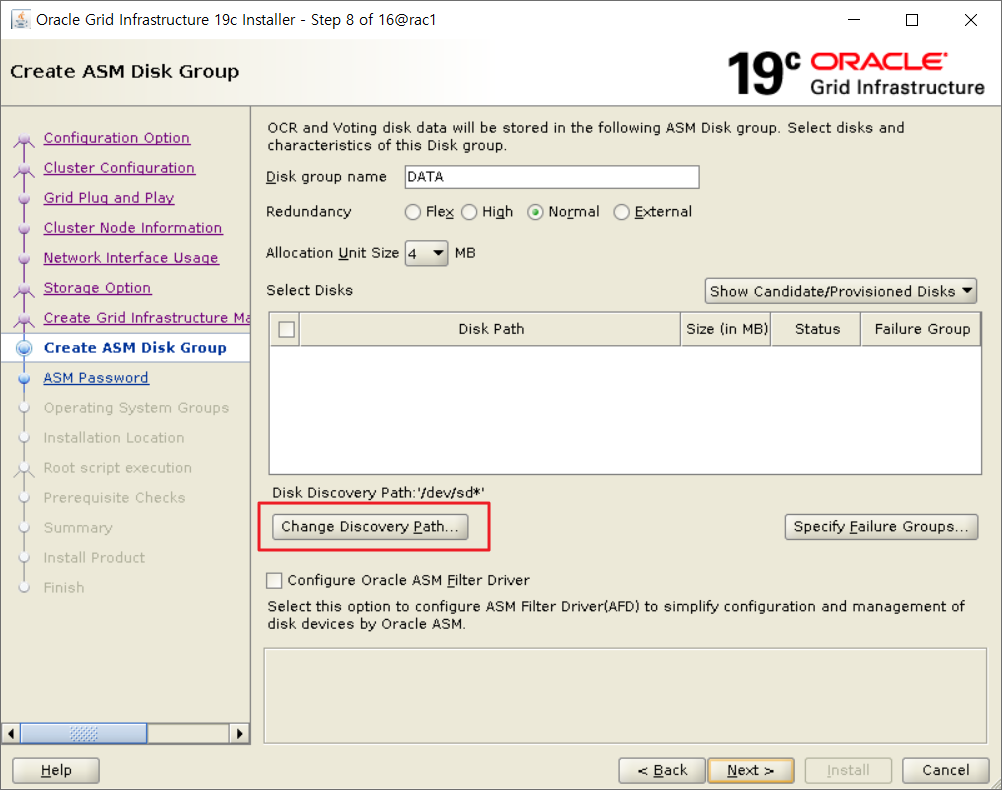

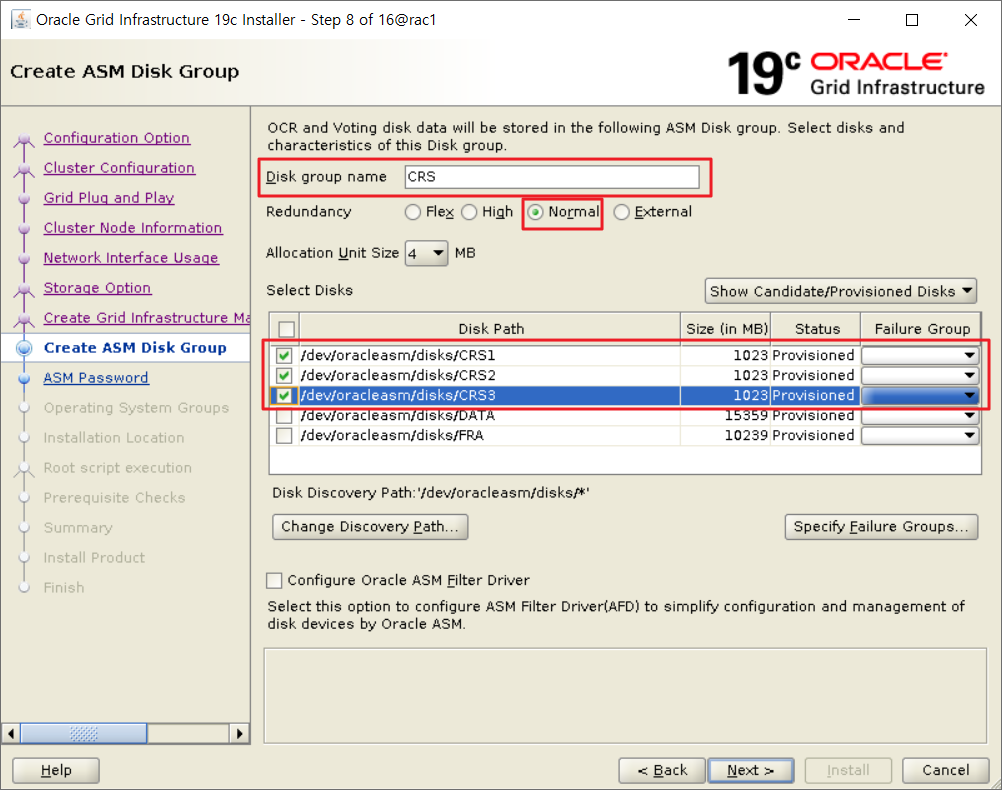

Create the ASM disk group that stores the OCR and voting files.

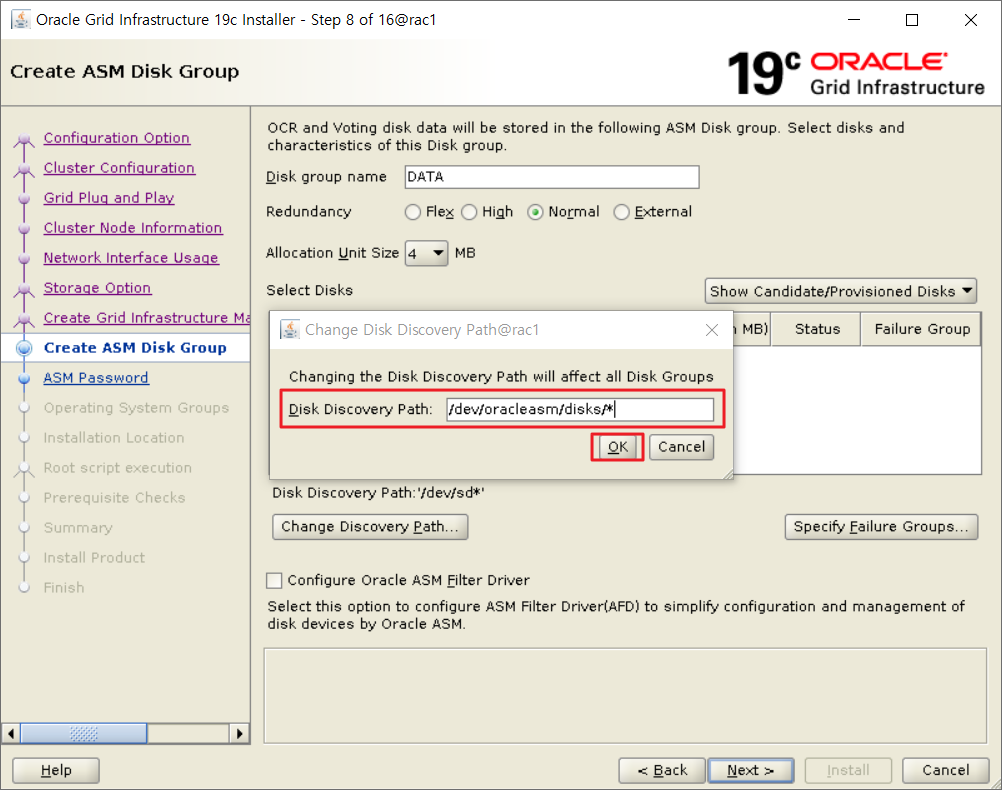

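Click the Change Discovery Path button to find the disks registered with ASMLib.

Why use ASMLib? On Linux, device names, ownership, and permissions can change when disks are added or removed, and ASMLib was recommended to work around this limitation. It writes a label to each disk, so the disk path is resolved through the label name regardless of the device name.

Enter /dev/oracleasm/disks/* as the disk discovery path and click Next.

This path is the default location. To multiplex CRS (redundant OCR and voting files), redo logs, and control files...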
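The discovery path can be checked from the shell before opening the installer. This sketch lists the entries under /dev/oracleasm/disks (roughly what `oracleasm listdisks` reports on an ASMLib system) and fails when nothing is found. `list_asm_disks` is a hypothetical helper.

```shell
# list_asm_disks [DIR]: print the disk labels found under the discovery path.
list_asm_disks() {
  local dir="${1:-/dev/oracleasm/disks}" disk found=0
  for disk in "$dir"/*; do
    [ -e "$disk" ] || continue   # the glob matched nothing
    echo "${disk##*/}"
    found=1
  done
  [ "$found" -eq 1 ]
}
```

On this setup, `list_asm_disks` should print at least CRS001, CRS002, and CRS003 on both nodes.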

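Change the disk group name and select the disks

Normal redundancy wastes a lot of space, so choose External for the DATA disk group.

Disks in the same disk group should be the same size. ASM spreads data across all the disks in the group for performance, so their sizes should match.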
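The space cost of redundancy can be estimated with the formula Oracle documents for the USABLE_FILE_MB column of V$ASM_DISKGROUP: (FREE_MB - REQUIRED_MIRROR_FREE_MB) divided by 1 for External, 2 for Normal, or 3 for High redundancy. This sketch is a plain calculator for that formula, not a query against a live ASM instance; `asm_usable_mb` is a hypothetical helper name.

```shell
# asm_usable_mb REDUNDANCY FREE_MB [REQUIRED_MIRROR_FREE_MB]
asm_usable_mb() {
  local redundancy="$1" free_mb="$2" mirror_free_mb="${3:-0}" factor
  case "$redundancy" in
    EXTERNAL|external) factor=1 ;;   # no ASM mirroring
    NORMAL|normal)     factor=2 ;;   # two-way mirroring
    HIGH|high)         factor=3 ;;   # three-way mirroring
    *) echo "unknown redundancy: $redundancy" >&2; return 1 ;;
  esac
  echo $(( (free_mb - mirror_free_mb) / factor ))
}
```

With three hypothetical 10 GB disks (30720 MB free in total), `asm_usable_mb NORMAL 30720` prints 15360 while `asm_usable_mb EXTERNAL 30720` prints 30720, which is why Normal redundancy is described as wasteful for DATA.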

✅ Change the Disk group name to CRS and keep the default Redundancy, Normal.

✅ From the list of discovered disks, select CRS001, CRS002, and CRS003, then click Next.

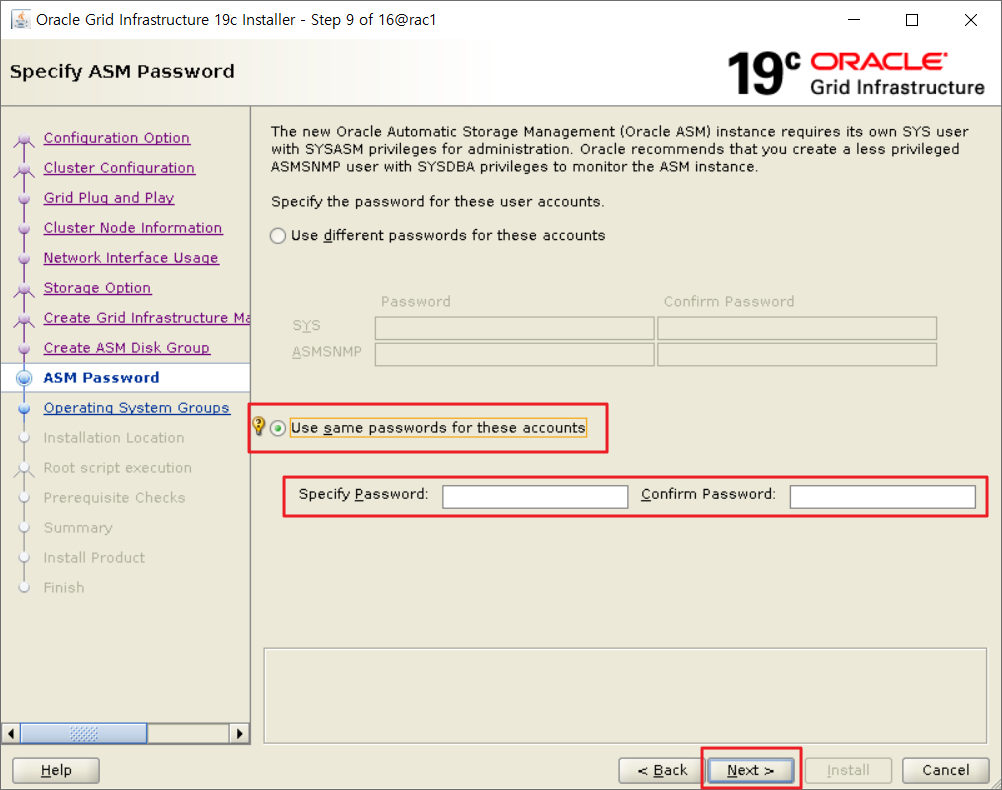

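Enter the ASM passwords

The password used in this lab is oracle.

✅ Set the password for the users with the SYSASM privilege.

✅ For this lab, one password is used for both the SYS and ASMSNMP accounts, so select Use same passwords for these accounts and enter the password.

✅ After entering the password, click Next.

✅ If the password does not meet the complexity rules, a warning like the one below appears. Since this is a test installation, click Yes.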

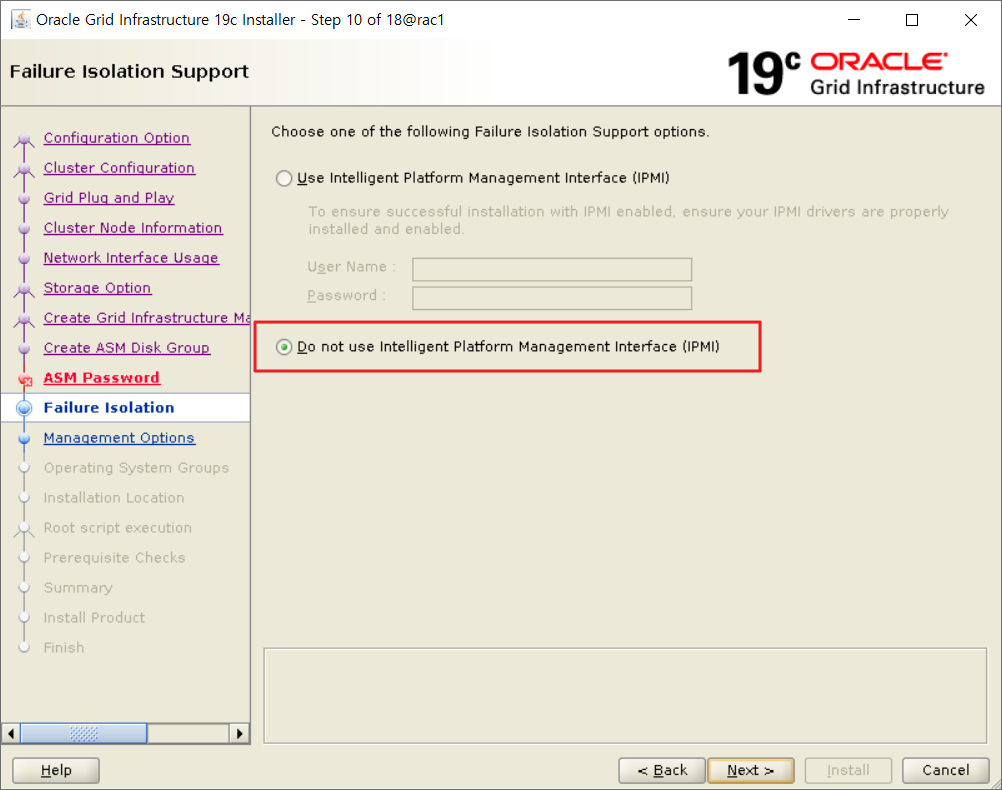

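Configure failure isolation support

Select Do not use Intelligent Platform Management Interface (IPMI) and click Next.

IPMI is a hardware management interface. IPMItool provides flexible communication features for monitoring and managing servers on the LAN where the IPMI hardware resides, as well as other servers.

Specify management options

If you are not registering with a separate EM system, leave Register with Enterprise Manager (EM) Cloud Control unchecked (the default) and click Next.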

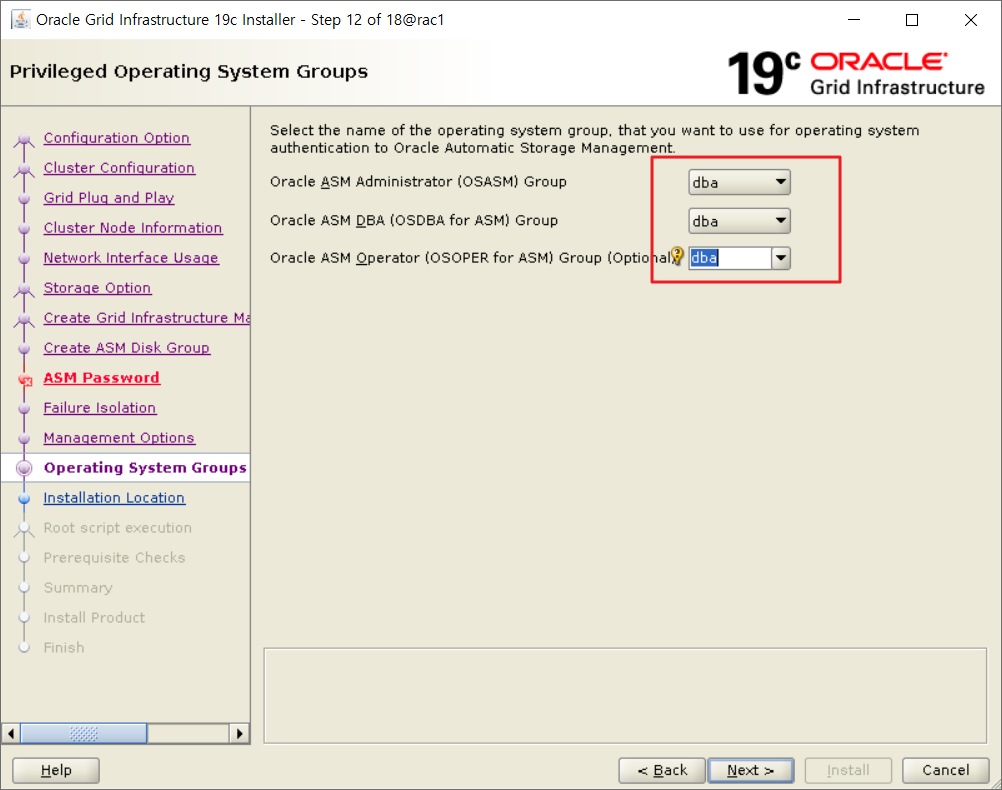

Configure privileged operating system groups

This step selects the OS groups used for OS authentication of ASM privileges.

For convenience, only dba is used for all groups.

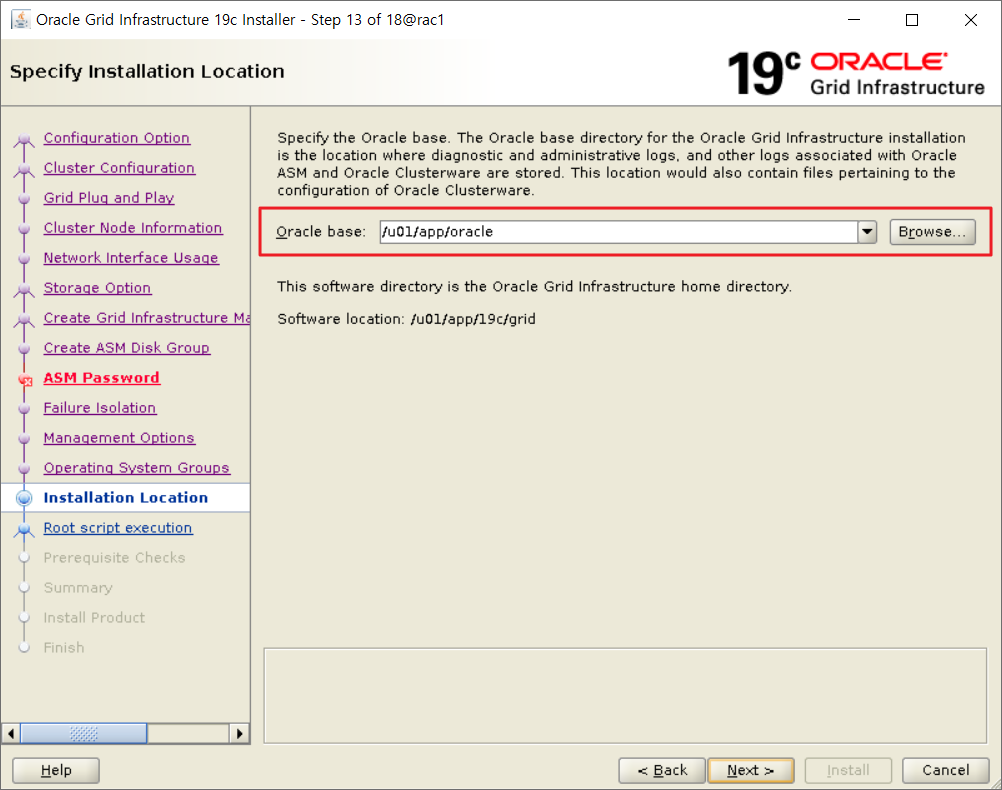

Specify the installation location

Verify the Oracle base path.

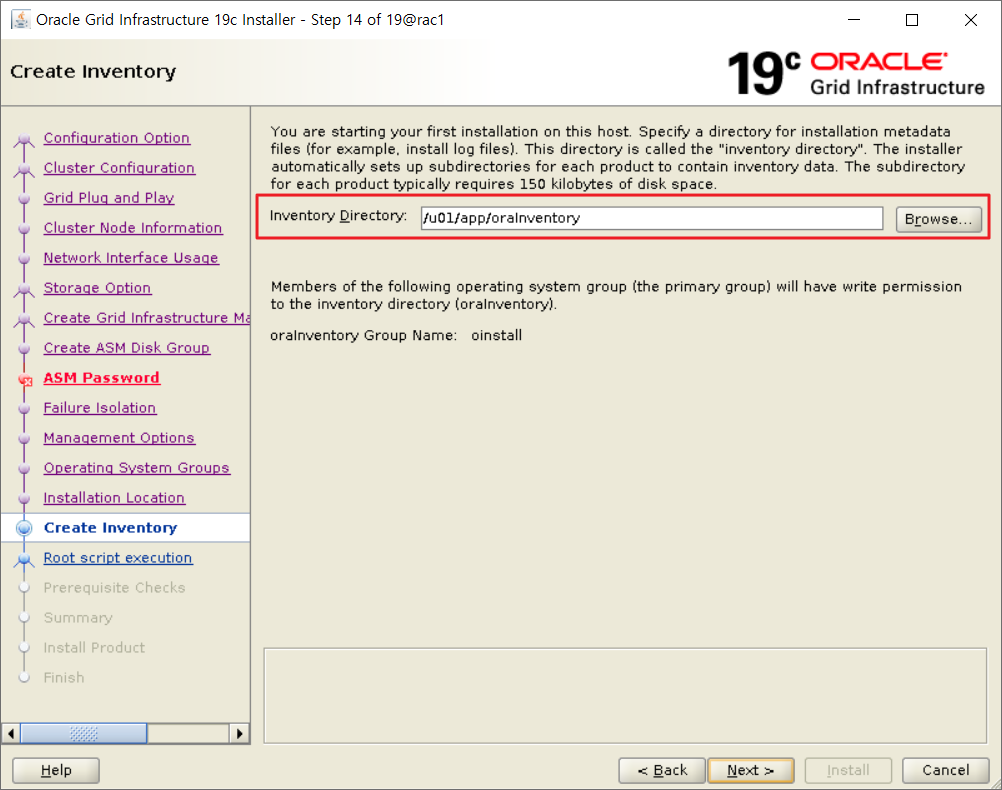

Create the inventory

The Oracle Inventory (oraInventory) stores installation information for Oracle software.

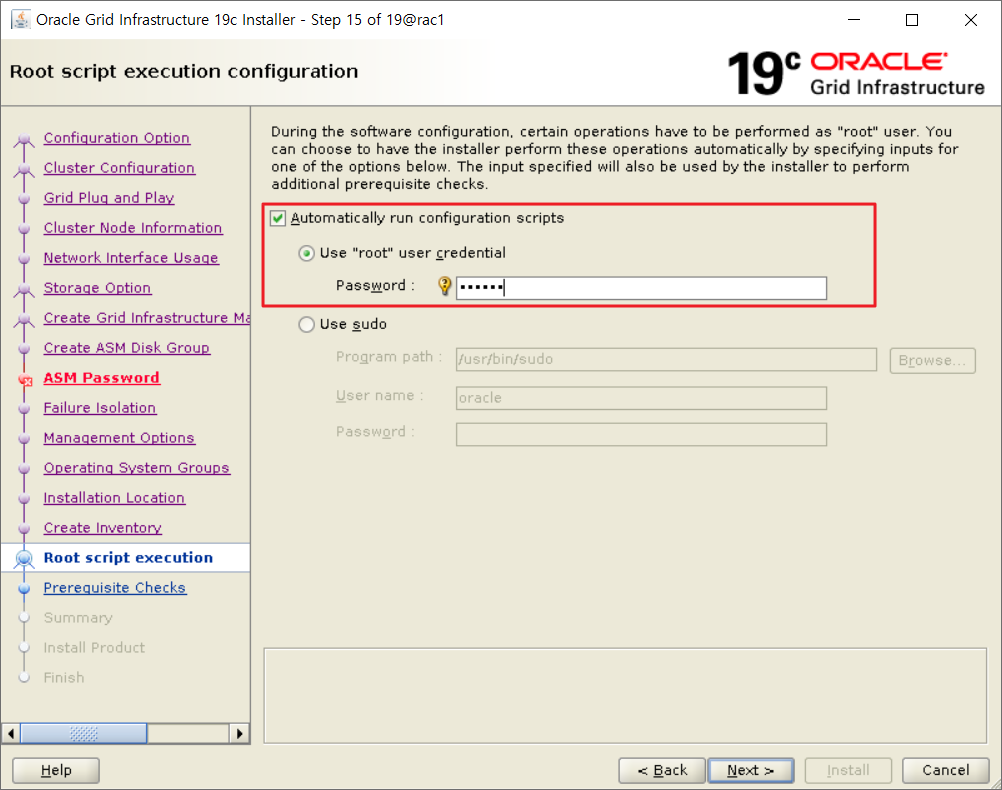

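Root script execution configuration

✅ During cluster configuration, some scripts must be run with root privileges.

✅ To run the configuration scripts automatically, the root password must be the same on all nodes.

✅ Check Automatically run configuration scripts.

✅ Select Use "root" user credential, enter the root password, and click Next.

✅ If the root passwords differ between nodes, or if you want to run the scripts yourself, leave these options unselected and continue; you can then run the scripts manually.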

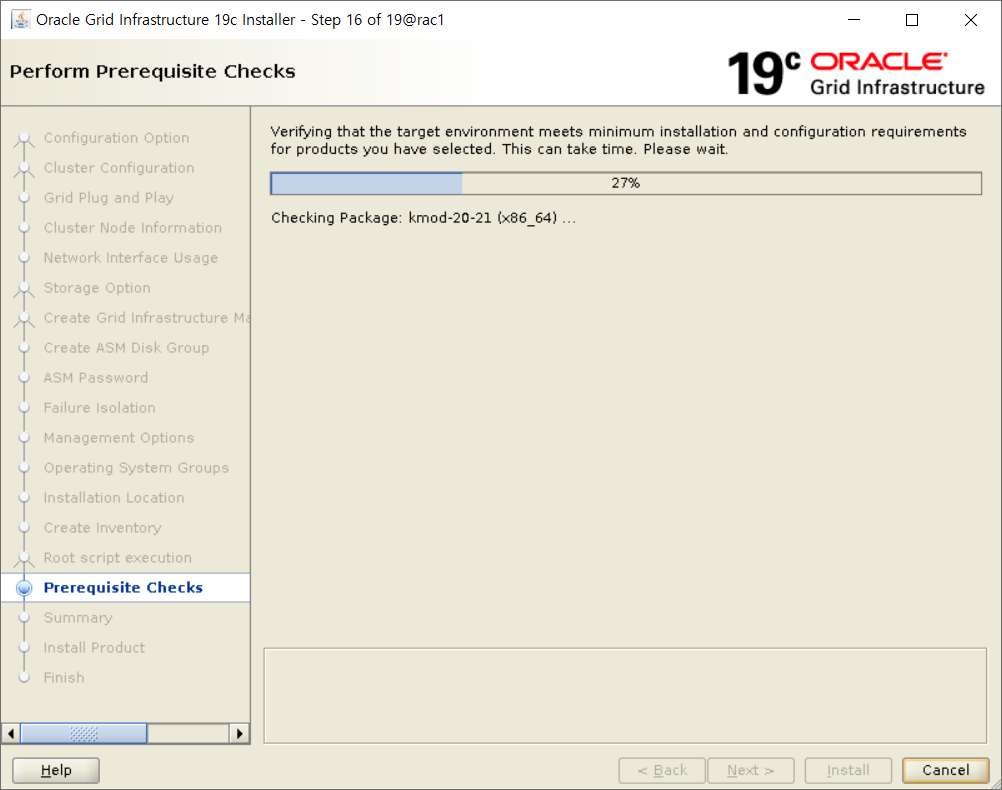

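✅ Before installation, prerequisite checks are run against the servers.

✅ Any missing or incorrect settings found in this step must be fixed before you continue with the installation.

✅ If no issues are found, the installer moves on to the next step automatically.

At customer sites the root account is usually locked; the person in charge typically enters the root password in a separate window at the end. Even DBAs often do not have access to the root account.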

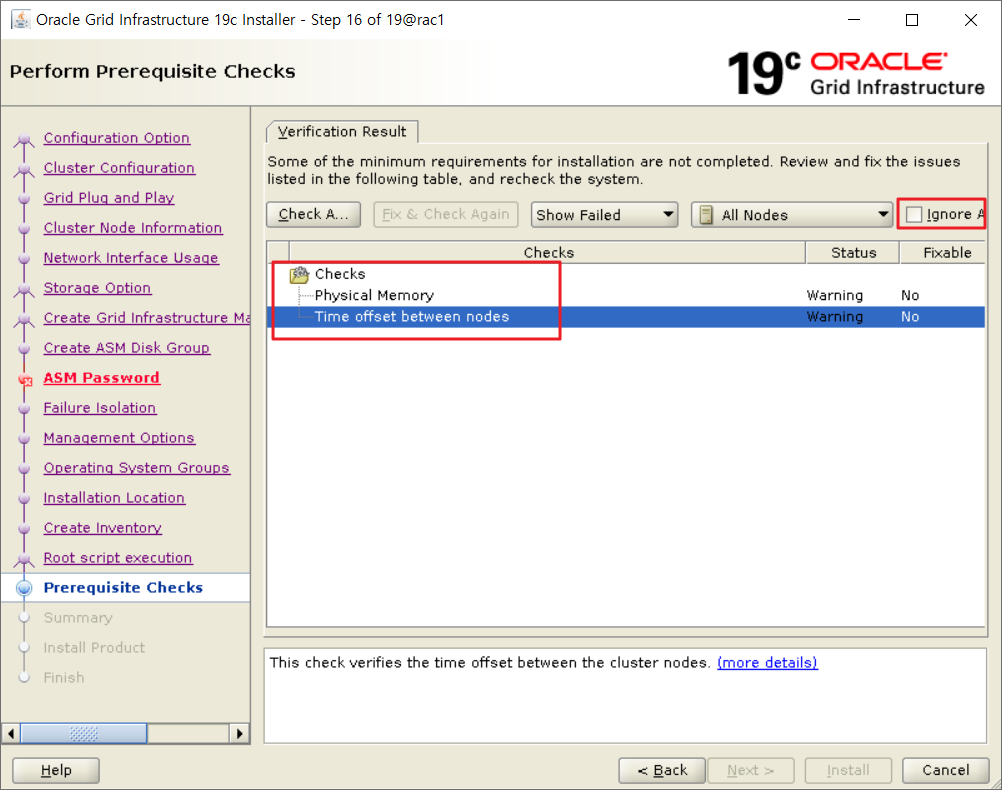

- NTP settings! Memory must be at least 8 GB, but the nodes currently have only about 3.8 GB.

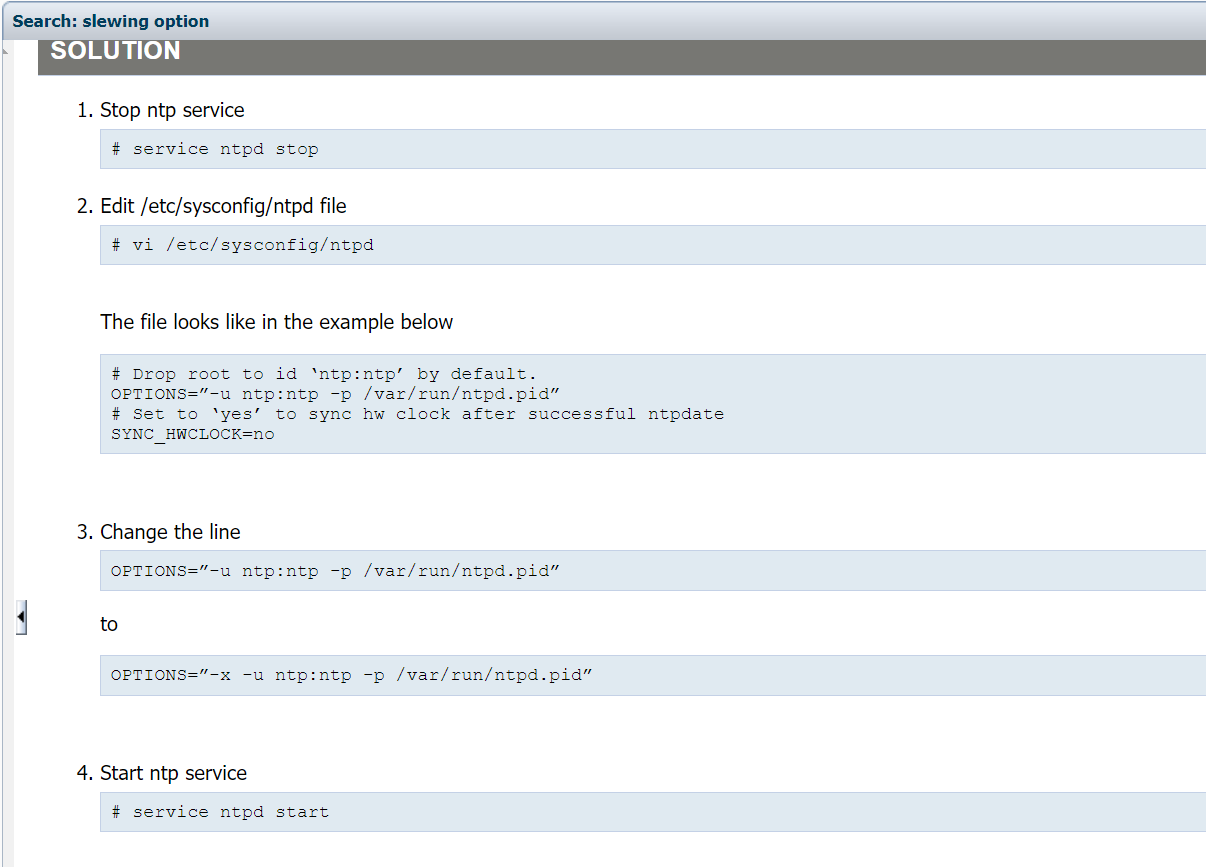
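Before changing the NTP settings, the clock offset that the prerequisite check complains about (PRVG-12103, with a permissible offset of 60 seconds) can be measured by hand. This sketch compares `date +%s` on each node with a reference node over SSH; each round trip adds a little error, so the result is approximate. `clock_offset` and `check_clock_offsets` are hypothetical helpers, and `SSH_CMD` only exists so the ssh command can be replaced in a test.

```shell
# clock_offset T1 T2: absolute difference in seconds between two epoch times.
clock_offset() {
  local d=$(( $1 - $2 ))
  if [ "$d" -lt 0 ]; then d=$(( -d )); fi
  echo "$d"
}

# check_clock_offsets LIMIT REF_NODE NODE...: compare each node with REF_NODE.
check_clock_offsets() {
  local limit="$1" ref_node="$2" ref t node off rc=0
  shift 2
  ref=$(${SSH_CMD:-ssh} "$ref_node" date +%s) || return 1
  for node in "$@"; do
    if ! t=$(${SSH_CMD:-ssh} "$node" date +%s); then
      echo "$node: unreachable"
      rc=1
      continue
    fi
    off=$(clock_offset "$ref" "$t")
    if [ "$off" -gt "$limit" ]; then
      echo "$node: offset ${off}s exceeds ${limit}s"
      rc=1
    else
      echo "$node: offset ${off}s OK"
    fi
  done
  return "$rc"
}
```

Run `check_clock_offsets 60 rac1 rac2` as oracle once passwordless SSH works. In the sshUserSetup transcript, `date` printed 21:53:02 on rac1 and 21:45:26 on rac2, which is 456 seconds apart, the same offset PRVG-12103 reports.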

NTP configuration

Oracle Linux: How to Start NTP Service With Slewing Enabled (Doc ID 2422934.1)

Stop ntp service

# service ntpd stop

Edit /etc/sysconfig/ntpd file

# vi /etc/sysconfig/ntpd

The file looks like the example below:

# Drop root to id 'ntp:ntp' by default.

OPTIONS="-u ntp:ntp -p /var/run/ntpd.pid"

# Set to 'yes' to sync hw clock after successful ntpdate

SYNC_HWCLOCK=no

Change the line

OPTIONS="-u ntp:ntp -p /var/run/ntpd.pid"

to

OPTIONS="-x -u ntp:ntp -p /var/run/ntpd.pid"
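The OPTIONS change can also be made without vi. This sketch prepends -x (slew mode) to the OPTIONS line with sed, keeps a .bak copy of the original file, and does nothing if -x is already present, so it is safe to run twice. `enable_ntp_slew` is a hypothetical helper; stop ntpd before running it and start it again afterwards, as in the steps from Doc ID 2422934.1.

```shell
# enable_ntp_slew [FILE]: add -x to OPTIONS in an ntpd sysconfig file.
enable_ntp_slew() {
  local conf="${1:-/etc/sysconfig/ntpd}"
  grep -q '^OPTIONS=' "$conf" || { echo "no OPTIONS line in $conf" >&2; return 1; }
  # Already slewing: leave the file untouched.
  if grep -Eq '^OPTIONS="([^"]* )?-x( |")' "$conf"; then
    return 0
  fi
  # Insert -x right after the opening quote; the original is kept as FILE.bak.
  sed -i.bak 's/^OPTIONS="/OPTIONS="-x /' "$conf"
}
```

Run it as root on every node: `service ntpd stop; enable_ntp_slew; service ntpd start`.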

Start ntp service

# service ntpd start

Time offset between nodes - This check verifies the time offset between the cluster nodes. Error:

- PRVG-12103 : The current time offset of "456" seconds on node "rac1" is greater than the permissible offset of "60" when compared to the time on node "rac2" - Cause: The Cluster Verification Utility detected a time offset between the indicated nodes was more than the permissible value. - Action: Ensure that the time difference between the indicated nodes is less than the indicated permissible value.

- PRVG-12102 : The time difference between nodes "rac1" and "rac2" is beyond the permissible offset of "60" seconds - Cause: The Cluster Verification Utility detected a time offset between the indicated nodes was more than the indicated permissible value. - Action: Ensure that the time difference between the indicated nodes is less than the indicated permissible value.

Verification WARNING result on node: rac1

Verification result of succeeded node: rac2

Physical Memory - This is a prerequisite condition to test whether the system has at least 8GB (8388608.0KB) of total physical memory.

Check Failed on Nodes: [rac2, rac1]

Verification result of failed node: rac2

Expected Value: 8GB (8388608.0KB)

Actual Value: 3.8354GB (4021664.0KB)

Details:

- PRVF-7530 : Sufficient physical memory is not available on node "rac2" [Required physical memory = 8GB (8388608.0KB)] - Cause: Amount of physical memory (RAM) found does not meet minimum memory requirements. - Action: Add physical memory (RAM) to the node specified.

Verification result of failed node: rac1

Expected Value: 8GB (8388608.0KB)

Actual Value: 3.8354GB (4021680.0KB)

Details:

- PRVF-7530 : Sufficient physical memory is not available on node "rac1" [Required physical memory = 8GB (8388608.0KB)] - Cause: Amount of physical memory (RAM) found does not meet minimum memory requirements. - Action: Add physical memory (RAM) to the node specified.
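The Physical Memory check can be repeated on each node without rerunning cluvfy, for example after adding RAM to the VMs. This sketch reads MemTotal (in KB) from /proc/meminfo and compares it with the 8388608 KB minimum from the report; that the check measures MemTotal is an assumption. `check_physical_memory` is a hypothetical helper.

```shell
# check_physical_memory [MEMINFO] [MIN_KB]: compare MemTotal with a minimum.
check_physical_memory() {
  local meminfo="${1:-/proc/meminfo}" min_kb="${2:-8388608}" total_kb
  total_kb=$(awk '/^MemTotal:/ {print $2}' "$meminfo")
  if [ -z "$total_kb" ]; then
    echo "MemTotal not found in $meminfo" >&2
    return 1
  fi
  if [ "$total_kb" -lt "$min_kb" ]; then
    echo "FAILED: ${total_kb}KB < ${min_kb}KB"
    return 1
  fi
  echo "PASSED: ${total_kb}KB >= ${min_kb}KB"
}
```

With no arguments it checks the current node against the 8 GB minimum.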


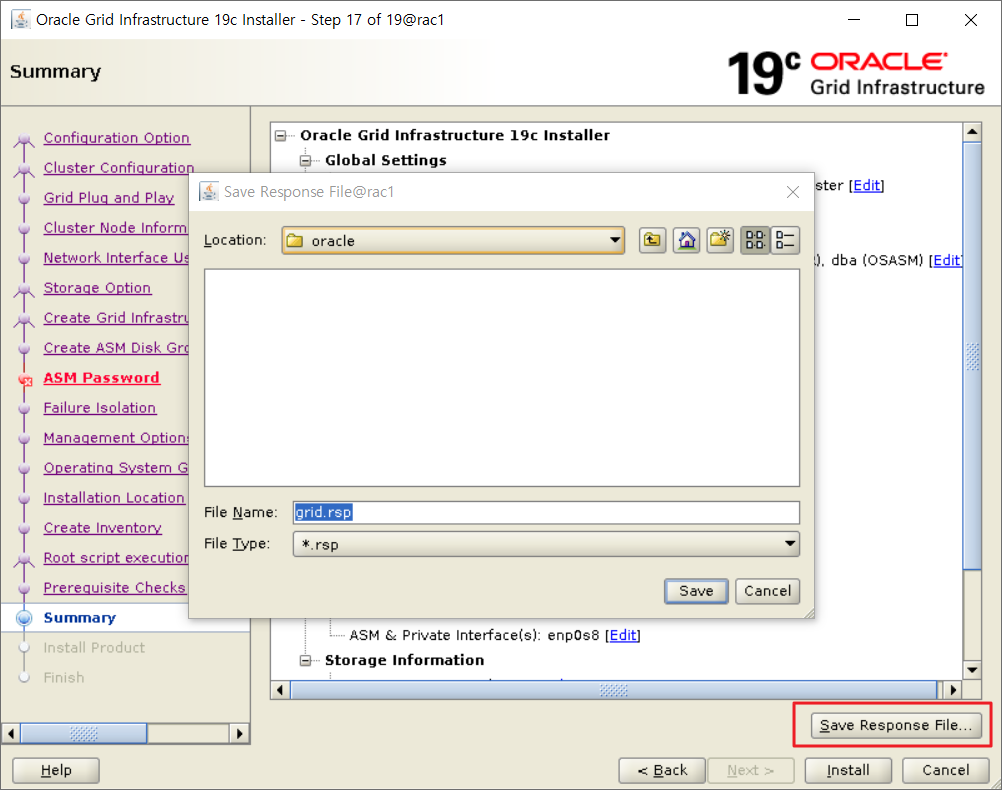

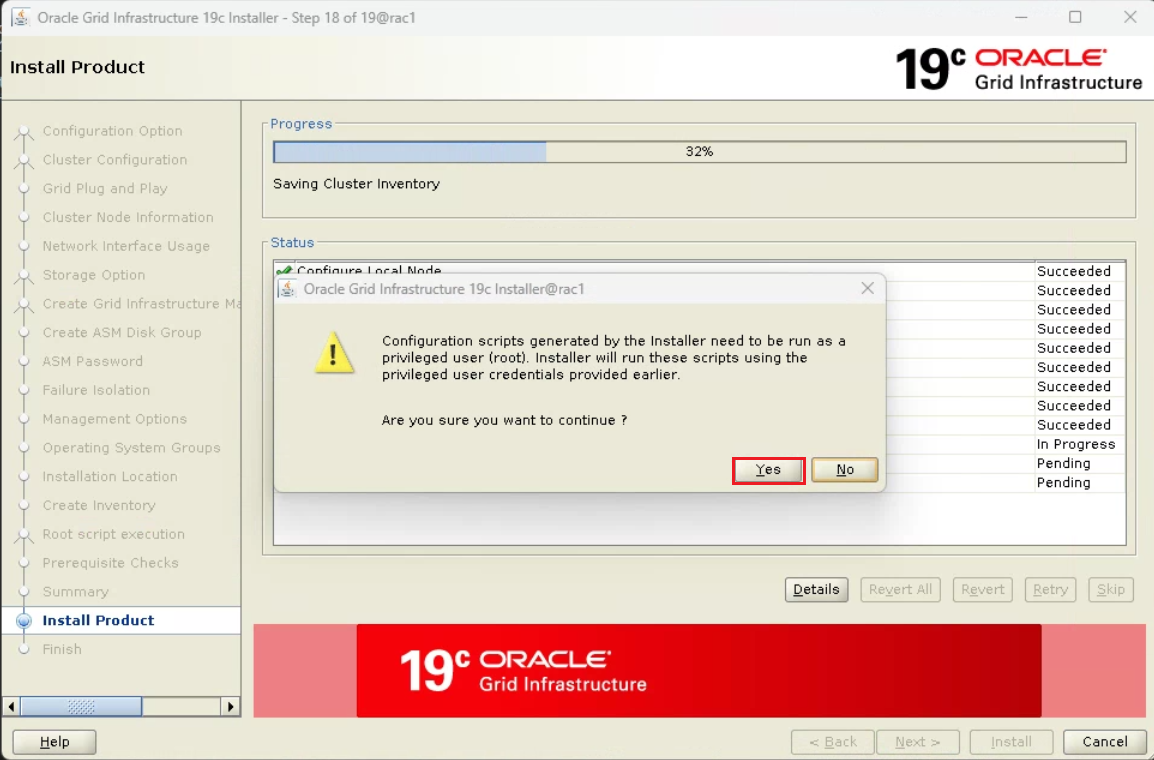

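Install

• Grid is installed on the local and remote nodes.

• On the local node, the installation files have already been extracted into GRID_HOME, so the file copy step is skipped.

• On the remote node, the installation files are transferred over an SSH connection on the private network and copied into GRID_HOME.

• When a window asks whether to run the cluster configuration scripts automatically, click Yes.

• The scripts run automatically with the root password entered earlier in the installer.

• Click the Details button to follow the installation progress.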

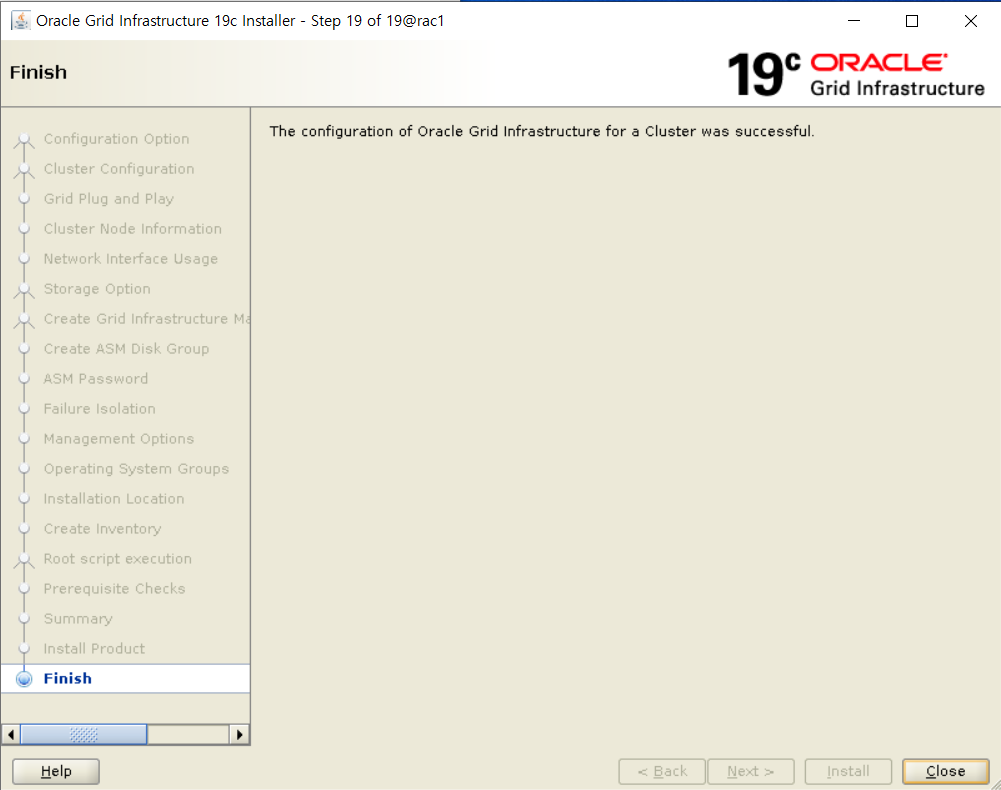

When the grid installation and configuration are complete, click Close to exit the installer.

Verify that the cluster was configured correctly

crsctl stat res -t
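Rather than scanning the whole table by eye, the output can be filtered for resource instances whose Target is ONLINE but whose State is not. Rows whose Target is OFFLINE, such as instance 3 of the ora.asmgroup resources in the output that follows, are skipped because the cluster is not trying to run them. This sketch parses the `crsctl stat res -t` text layout; `crs_not_online` is a hypothetical helper.

```shell
# crs_not_online: read `crsctl stat res -t` output on stdin and print every
# resource instance whose Target is ONLINE but whose State is something else.
crs_not_online() {
  awk '
    /^ora\./ { res = $1; next }          # resource name line
    NF >= 2 {
      # Cluster resources start with an instance number, local ones do not.
      off = ($1 ~ /^[0-9]+$/) ? 1 : 0
      target = $(1 + off)
      state = $(2 + off)
      if (target == "ONLINE" && state != "ONLINE") {
        $1 = $1                          # squeeze the column padding
        print res ": " $0
      }
    }
  '
}
```

`crsctl stat res -t | crs_not_online` prints nothing on a healthy cluster.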

[+ASM1:/u01/app/19c/grid]> crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.chad

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.net1.network

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.ons

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE ONLINE rac2 STABLE

3 OFFLINE OFFLINE STABLE

ora.CRS.dg(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE ONLINE rac2 STABLE

3 OFFLINE OFFLINE STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac2 STABLE

ora.LISTENER_SCAN2.lsnr

1 ONLINE ONLINE rac1 STABLE

ora.LISTENER_SCAN3.lsnr

1 ONLINE ONLINE rac1 STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE rac1 Started,STABLE

2 ONLINE ONLINE rac2 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE ONLINE rac2 STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE rac1 STABLE

ora.qosmserver

1 ONLINE ONLINE rac1 STABLE

ora.rac1.vip

1 ONLINE ONLINE rac1 STABLE

ora.rac2.vip

1 ONLINE ONLINE rac2 STABLE

ora.scan1.vip

1 ONLINE ONLINE rac2 STABLE

ora.scan2.vip

1 ONLINE ONLINE rac1 STABLE

ora.scan3.vip

1 ONLINE ONLINE rac1 STABLE

--------------------------------------------------------------------------------

Starting and stopping CRS (commands; run as root)

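crsctl start crs # start CRS

crsctl stop crs # stop CRS

crsctl stop crs -f # force-stop CRS

Originally, in 11g:

/orainstRoot.sh : registers the installation information.

/root.sh : formats the voting disk, then starts the daemons once and shuts them down again.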
