0720-Kubernetes

ConfigMap

- Use my Docker Hub credentials by default when pulling images (attach an imagePullSecret to the default ServiceAccount)

# kubectl create secret generic seozzang3 --from-file=.dockerconfigjson=/root/.docker/config.json --type=kubernetes.io/dockerconfigjson

# kubectl patch -n default serviceaccount/default -p '{"imagePullSecrets":[{"name": "seozzang3"}]}'
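The two commands above store the Docker login file in a Secret and attach it to the default ServiceAccount. As a rough sketch of what that Secret carries, the snippet below builds the same kind of payload in Python. The registry URL, user name and password are placeholders, and a real `config.json` may use a credential helper instead of an inline `auth` field.

```python
import base64
import json


def build_dockerconfigjson(registry: str, username: str, password: str) -> str:
    """Return JSON text shaped like the config.json written by `docker login`."""
    # The "auth" field is base64("username:password").
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    return json.dumps({"auths": {registry: {"auth": auth}}})


def secret_data(config_json: str) -> dict:
    """Return the `data` map of a kubernetes.io/dockerconfigjson Secret."""
    # Secret values are stored base64-encoded under the .dockerconfigjson key.
    encoded = base64.b64encode(config_json.encode()).decode()
    return {".dockerconfigjson": encoded}


if __name__ == "__main__":
    # Placeholder credentials, not taken from these notes.
    cfg = build_dockerconfigjson(
        "https://index.docker.io/v1/", "example-user", "example-pass"
    )
    print(secret_data(cfg))
```

Because the ServiceAccount lists the Secret under `imagePullSecrets`, Pods that use that ServiceAccount pick it up without naming it in their own spec.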

# kubectl describe serviceaccount default -n default

Name: default

Namespace: default

Labels: <none>

Annotations: <none>

Image pull secrets: seozzang3

Mountable secrets: default-token-8zt2f

Tokens: default-token-8zt2f

Events: <none>

Create deployment-config01.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: configapp
  labels:
    app: configapp
spec:
  replicas: 1
  selector:
    matchLabels:
      app: configapp
  template:
    metadata:
      labels:
        app: configapp
    spec:
      containers:
      - name: testapp
        image: nginx
        ports:
        - containerPort: 8080
        env:
        - name: DEBUG_LEVEL
          valueFrom:
            configMapKeyRef:
              name: config-dev
              key: DEBUG_INFO
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: configapp
  name: configapp-svc
  namespace: default
spec:
  type: NodePort
  ports:
  - nodePort: 30800
    port: 8080
    protocol: TCP
    targetPort: 80
  selector:
    app: configapp

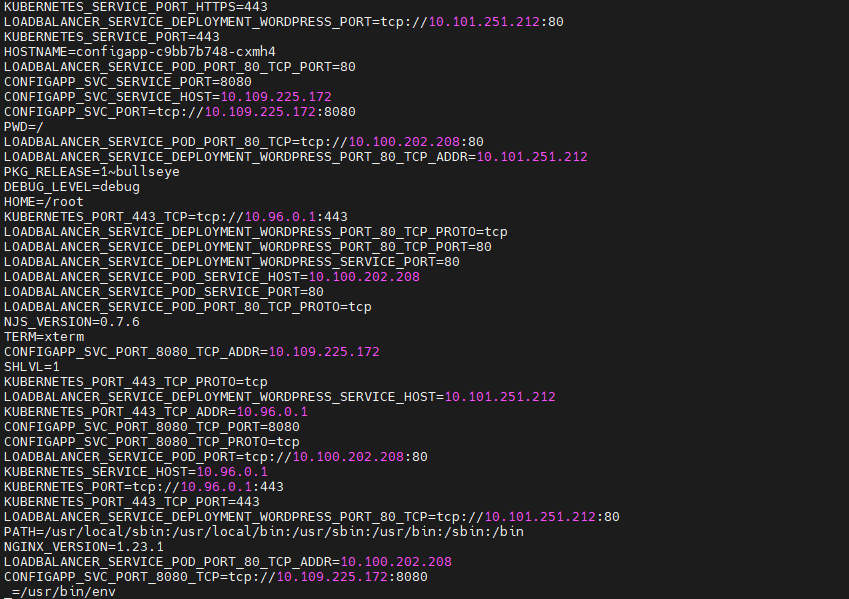

# kubectl apply -f deployment-config01.yaml

- Check the environment variables

[root@master1 configmap]# kubectl exec -it configapp-c9bb7b748-cxmh4 -- bash

root@configapp-c9bb7b748-cxmh4:/# env
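The Deployment above fills `DEBUG_LEVEL` from the `DEBUG_INFO` key of a ConfigMap named `config-dev`. The sketch below imitates that lookup in plain Python. The ConfigMap content (`"debug"`) is a made-up value, since these notes do not show how `config-dev` was created.

```python
def resolve_env(env_entries: list, configmaps: dict) -> dict:
    """Resolve a container's `env` list against in-memory ConfigMap data."""
    resolved = {}
    for entry in env_entries:
        if "value" in entry:
            # Literal value written directly in the manifest.
            resolved[entry["name"]] = entry["value"]
            continue
        ref = entry["valueFrom"]["configMapKeyRef"]
        data = configmaps.get(ref["name"])
        if data is None or ref["key"] not in data:
            if ref.get("optional", False):
                continue  # optional references are skipped when missing
            # A real cluster would refuse to start the container here.
            raise KeyError(f"{ref['name']}/{ref['key']} not found")
        resolved[entry["name"]] = data[ref["key"]]
    return resolved


if __name__ == "__main__":
    configmaps = {"config-dev": {"DEBUG_INFO": "debug"}}  # hypothetical content
    env = [{
        "name": "DEBUG_LEVEL",
        "valueFrom": {"configMapKeyRef": {"name": "config-dev", "key": "DEBUG_INFO"}},
    }]
    print(resolve_env(env, configmaps))
```

Note that the variable name inside the container (`DEBUG_LEVEL`) can differ from the ConfigMap key (`DEBUG_INFO`).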

Installing WordPress

Create configmap-wordpress.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: config-wordpress
  namespace: default
data:
  MYSQL_ROOT_HOST: '%'
  MYSQL_ROOT_PASSWORD: kosa0401
  MYSQL_DATABASE: wordpress
  MYSQL_USER: wpuser
  MYSQL_PASSWORD: wppass

[root@master1 configmap]# kubectl apply -f configmap-wordpress.yaml

configmap/config-wordpress created

- Verify

[root@master1 configmap]# kubectl describe configmaps config-wordpress

Name: config-wordpress

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

MYSQL_ROOT_PASSWORD:

----

kosa0401

MYSQL_USER:

----

wpuser

MYSQL_DATABASE:

----

wordpress

MYSQL_PASSWORD:

----

wppass

MYSQL_ROOT_HOST:

----

%

Events: <none>

- DB server setup (mysql-pod-svc.yaml)

apiVersion: v1
kind: Pod
metadata:
  name: mysql-pod
  labels:
    app: mysql-pod
spec:
  containers:
  - name: mysql-container
    image: mysql:5.7
    envFrom: # load every key of the ConfigMap as environment variables at once
    - configMapRef:
        name: config-wordpress
    ports:
    - containerPort: 3306
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-svc
spec:
  type: ClusterIP
  selector:
    app: mysql-pod
  ports:
  - protocol: TCP
    port: 3306
    targetPort: 3306
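Unlike `configMapKeyRef`, which picks one key, `envFrom` with `configMapRef` turns every key of the ConfigMap into an environment variable. A minimal sketch of that expansion, using the `config-wordpress` data from these notes (the function name is only illustrative):

```python
def expand_env_from(env_from: list, configmaps: dict) -> dict:
    """Expand `envFrom` sources into a flat name -> value mapping."""
    env = {}
    for source in env_from:
        ref = source["configMapRef"]
        prefix = source.get("prefix", "")  # optional prefix added to every key
        for key, value in configmaps[ref["name"]].items():
            # A later source overwrites an earlier one on duplicate names.
            env[prefix + key] = value
    return env


if __name__ == "__main__":
    configmaps = {
        "config-wordpress": {
            "MYSQL_ROOT_HOST": "%",
            "MYSQL_ROOT_PASSWORD": "kosa0401",
            "MYSQL_DATABASE": "wordpress",
            "MYSQL_USER": "wpuser",
            "MYSQL_PASSWORD": "wppass",
        }
    }
    env_from = [{"configMapRef": {"name": "config-wordpress"}}]
    for name, value in expand_env_from(env_from, configmaps).items():
        print(f"{name}={value}")
```

This works here because the keys already match the variable names the `mysql:5.7` image reads, so no per-key mapping is needed.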

[root@master1 configmap]# kubectl apply -f mysql-pod-svc.yaml

- Web server setup (wordpress-pod-svc.yaml)

apiVersion: v1
kind: Pod
metadata:
  name: wordpress-pod
  labels:
    app: wordpress-pod
spec:
  containers:
  - name: wordpress-container
    image: wordpress
    env:
    - name: WORDPRESS_DB_HOST
      value: mysql-svc:3306
    - name: WORDPRESS_DB_USER
      valueFrom:
        configMapKeyRef:
          name: config-wordpress
          key: MYSQL_USER
    - name: WORDPRESS_DB_PASSWORD
      valueFrom:
        configMapKeyRef:
          name: config-wordpress
          key: MYSQL_PASSWORD
    - name: WORDPRESS_DB_NAME
      valueFrom:
        configMapKeyRef:
          name: config-wordpress
          key: MYSQL_DATABASE
    ports:
    - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: wordpress-svc
spec:
  type: LoadBalancer
  # externalIPs:
  # - 192.168.2.0
  selector:
    app: wordpress-pod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@master1 configmap]# kubectl apply -f wordpress-pod-svc.yaml

pod/wordpress-pod created

service/wordpress-svc created

-> externalIPs is commented out because MetalLB assigns the external IP
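WordPress reaches the database through `WORDPRESS_DB_HOST: mysql-svc:3306`, which relies on cluster DNS resolving the Service name. The sketch below lists the names a Service is normally reachable under. The `cluster.local` suffix is the common default and is an assumption here, since these notes do not show the cluster's DNS settings.

```python
def service_dns_names(service: str, namespace: str,
                      cluster_domain: str = "cluster.local") -> list:
    """Return the DNS names for a Service, from shortest to fully qualified."""
    return [
        service,                                         # same namespace only
        f"{service}.{namespace}",                        # from any namespace
        f"{service}.{namespace}.svc",
        f"{service}.{namespace}.svc.{cluster_domain}",   # fully qualified
    ]


if __name__ == "__main__":
    for name in service_dns_names("mysql-svc", "default"):
        print(f"{name}:3306")
```

The short form `mysql-svc` is enough in these notes because the WordPress Pod and the MySQL Service both live in the `default` namespace.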

- Verify

[root@master1 configmap]# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/mysql-pod 1/1 Running 0 11m

pod/wordpress-pod 1/1 Running 0 42s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 24m

service/mysql-svc ClusterIP 10.102.19.168 <none> 3306/TCP 11m

service/wordpress-svc LoadBalancer 10.104.244.194 192.168.56.104 80:32735/TCP 42s

Using a Deployment (mysql-deploy-svc.yaml)

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-deploy
  labels:
    app: mysql-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql-deploy
  template:
    metadata:
      labels:
        app: mysql-deploy
    spec:
      containers:
      - name: mysql-container
        image: mysql:5.7
        envFrom:
        - configMapRef:
            name: config-wordpress
        ports:
        - containerPort: 3306
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-svc
spec:
  type: ClusterIP
  selector:
    app: mysql-deploy
  ports:
  - protocol: TCP
    port: 3306
    targetPort: 3306

[root@master1 configmap]# kubectl apply -f mysql-deploy-svc.yaml

deployment.apps/mysql-deploy created

service/mysql-svc configured

- Web server setup (wordpress-deploy-svc.yaml)

apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress-deploy
  labels:
    app: wordpress-deploy
spec:
  replicas: 3
  selector:
    matchLabels:
      app: wordpress-deploy
  template:
    metadata:
      labels:
        app: wordpress-deploy
    spec:
      containers:
      - name: wordpress-container
        image: wordpress
        env:
        - name: WORDPRESS_DB_HOST
          value: mysql-svc:3306
        - name: WORDPRESS_DB_USER
          valueFrom:
            configMapKeyRef:
              name: config-wordpress
              key: MYSQL_USER
        - name: WORDPRESS_DB_PASSWORD
          valueFrom:
            configMapKeyRef:
              name: config-wordpress
              key: MYSQL_PASSWORD
        - name: WORDPRESS_DB_NAME
          valueFrom:
            configMapKeyRef:
              name: config-wordpress
              key: MYSQL_DATABASE
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: wordpress-svc
spec:
  type: LoadBalancer
  # externalIPs:
  # - 192.168.2.0
  selector:
    app: wordpress-deploy
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@master1 configmap]# vi wordpress-deploy-svc.yaml

[root@master1 configmap]# kubectl apply -f wordpress-deploy-svc.yaml

deployment.apps/wordpress-deploy created

service/wordpress-svc configured

- Verify

[root@master1 configmap]# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/mysql-deploy-5f9c8f66d4-8nqdn 1/1 Running 0 5m47s

pod/wordpress-deploy-57787cfd48-9c8vc 1/1 Running 0 31s

pod/wordpress-deploy-57787cfd48-d9mhm 1/1 Running 0 31s

pod/wordpress-deploy-57787cfd48-xmv4v 1/1 Running 0 31s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 49m

service/mysql-svc ClusterIP 10.102.19.168 <none> 3306/TCP 36m

service/wordpress-svc LoadBalancer 10.104.244.194 192.168.56.104 80:32735/TCP 25m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/mysql-deploy 1/1 1 1 5m47s

deployment.apps/wordpress-deploy 3/3 3 3 31s

NAME DESIRED CURRENT READY AGE

replicaset.apps/mysql-deploy-5f9c8f66d4 1 1 1 5m47s

replicaset.apps/wordpress-deploy-57787cfd48 3 3 3 31s

[root@master1 configmap]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mysql-deploy-5f9c8f66d4-8nqdn 1/1 Running 0 6m12s 10.244.1.7 worker1 <none> <none>

wordpress-deploy-57787cfd48-9c8vc 1/1 Running 0 56s 10.244.1.8 worker1 <none> <none>

wordpress-deploy-57787cfd48-d9mhm 1/1 Running 0 56s 10.244.2.20 worker2 <none> <none>

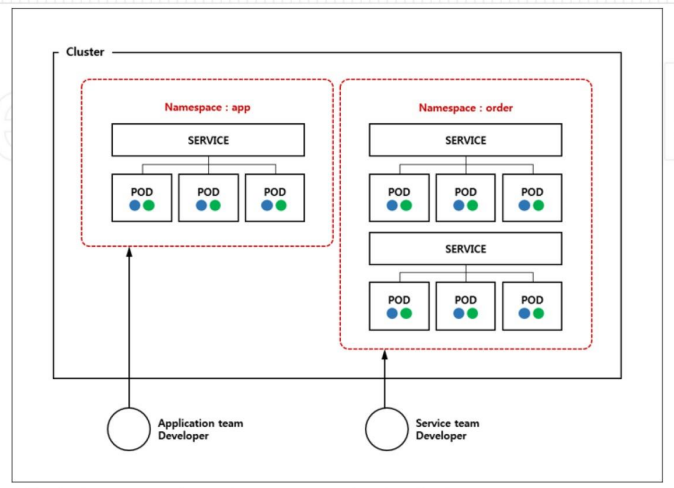

wordpress-deploy-57787cfd48-xmv4v 1/1 Running 0 56s 10.244.2.19 worker2 <none> <none>namespace

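- A namespace can be created per team

- Works somewhat like partitioning

- Splits the cluster into logical units

- Groups the resources a team uses for the same purpose

- Creating too many namespaces causes confusion

- Resource management

- Keeps related Services and Pods in one place

- It is convenient to make a frequently used namespace the default for the context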

[root@master1 ~]# kubectl get namespaces

NAME STATUS AGE

default Active 24h

kube-flannel Active 24h

kube-node-lease Active 24h

kube-public Active 24h

kube-system Active 24h

metallb-system Active 19h

[root@master1 ~]# kubectl get all -n metallb-system

NAME READY STATUS RESTARTS AGE

pod/controller-5d6c599dc5-zw5rq 1/1 Running 3 19h

pod/speaker-hwt5d 1/1 Running 2 19h

pod/speaker-nhnnd 1/1 Running 3 19h

pod/speaker-rfpzx 1/1 Running 3 19h

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/speaker 3 3 3 3 3 beta.kubernetes.io/os=linux 19h

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/controller 1/1 1 1 19h

NAME DESIRED CURRENT READY AGE

replicaset.apps/controller-594f59545d 0 0 0 19h

replicaset.apps/controller-5d6c599dc5 1 1 1 19h

replicaset.apps/controller-675d6c9976 0 0 0 19h

[root@master1 ~]# kubectl config get-contexts kubernetes-admin@kubernetes

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* kubernetes-admin@kubernetes kubernetes kubernetes-admin

- Create a namespace

[root@master1 ~]# kubectl create namespace test-namespace

namespace/test-namespace created

[root@master1 ~]# kubectl get namespaces

NAME STATUS AGE

default Active 24h

kube-flannel Active 24h

kube-node-lease Active 24h

kube-public Active 24h

kube-system Active 24h

metallb-system Active 20h

test-namespace Active 6s

[root@master1 ~]# kubectl run nginx-pod1 --image=nginx -n test-namespace

pod/nginx-pod1 created

[root@master1 ~]# kubectl get pod

[root@master1 ~]# kubectl get pod -n test-namespace

NAME READY STATUS RESTARTS AGE

nginx-pod1 1/1 Running 0 25s
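The plain `kubectl get pod` above shows nothing while `-n test-namespace` finds the Pod, because kubectl has to decide which namespace a command targets. The order can be sketched as follows (a simplified model that ignores other sources such as in-cluster configuration):

```python
def effective_namespace(flag_namespace=None, context_namespace="") -> str:
    """Pick the namespace a kubectl command would act on."""
    if flag_namespace:
        return flag_namespace        # -n / --namespace wins
    if context_namespace:
        return context_namespace     # namespace stored in the current context
    return "default"                 # fallback when neither is set


if __name__ == "__main__":
    print(effective_namespace())                                     # default
    print(effective_namespace(flag_namespace="test-namespace"))      # test-namespace
    print(effective_namespace(context_namespace="test-namespace"))   # test-namespace
```

Storing the namespace in the context with `kubectl config set-context ... --namespace=` moves a command from the first case to the third, so the `-n` flag is no longer needed.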

- Set it as the default namespace

[root@master1 ~]# kubectl config set-context kubernetes-admin@kubernetes --namespace=test-namespace

Context "kubernetes-admin@kubernetes" modified.

[root@master1 ~]# kubectl config get-contexts kubernetes-admin@kubernetes

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* kubernetes-admin@kubernetes kubernetes kubernetes-admin test-namespace

- Verify (the Pod is listed without -n because test-namespace is now the default)

[root@master1 ~]# kubectl get po

NAME READY STATUS RESTARTS AGE

nginx-pod1 1/1 Running 0 4m6s

- Service

[root@master1 ~]# kubectl expose pod nginx-pod1 --name loadbalancer --type=LoadBalancer --port 80

service/loadbalancer exposed

[root@master1 ~]# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/nginx-pod1 1/1 Running 0 7m28s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/loadbalancer LoadBalancer 10.110.28.105 192.168.56.104 80:32533/TCP 2s

- Restore the default

[root@master1 ~]# kubectl config set-context kubernetes-admin@kubernetes --namespace=

Context "kubernetes-admin@kubernetes" modified.

[root@master1 ~]# kubectl config get-contexts kubernetes-admin@kubernetes

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* kubernetes-admin@kubernetes kubernetes kubernetes-admin

[root@master1 ~]# kubectl get all

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 136m

Create my-ns

[root@master1 ~]# kubectl create ns my-ns

namespace/my-ns created

[root@master1 ~]# kubectl get ns

NAME STATUS AGE

default Active 26h

kube-flannel Active 26h

kube-node-lease Active 26h

kube-public Active 26h

kube-system Active 26h

metallb-system Active 21h

my-ns Active 5s

---

ResourceQuota

Create sample-resourcequota.yaml

apiVersion: v1
kind: ResourceQuota
metadata:
  name: sample-resourcequota
  namespace: my-ns
spec:
  hard:
    count/pods: 5

[root@master1 resourcequota]# kubectl apply -f sample-resourcequota.yaml

resourcequota/sample-resourcequota configured

- Verify

[root@master1 resourcequota]# kubectl describe resourcequotas sample-resourcequota -n my-ns

Name: sample-resourcequota

Namespace: my-ns

Resource Used Hard

-------- ---- ----

count/pods 0 5
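The `Used` and `Hard` columns above are what the quota check compares whenever a new Pod is requested in the namespace. The following is a simplified sketch of that `count/pods` check, not the real admission code:

```python
def create_pods(names: list, hard_pod_count: int, used: int = 0):
    """Simulate creating Pods in a namespace limited by count/pods."""
    created, rejected = [], []
    for name in names:
        if used + 1 > hard_pod_count:
            # The API server refuses the request; no Pod object is stored.
            rejected.append(name)
        else:
            created.append(name)
            used += 1
    return created, rejected


if __name__ == "__main__":
    names = [f"new-nginx{i}" for i in range(1, 7)]  # six requests against a limit of 5
    created, rejected = create_pods(names, hard_pod_count=5)
    print("created:", created)
    print("rejected:", rejected)
```

A rejected Pod never reaches the scheduler, which is different from a Pod that is admitted and then stays `Pending`.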

[root@master1 resourcequota]# kubectl run new-nginx1 --image=nginx -n my-ns

pod/new-nginx1 created

[root@master1 resourcequota]# kubectl run new-nginx2 --image=nginx -n my-ns

pod/new-nginx2 created

[root@master1 resourcequota]# kubectl run new-nginx3 --image=nginx -n my-ns

pod/new-nginx3 created

[root@master1 resourcequota]# kubectl run new-nginx4 --image=nginx -n my-ns

pod/new-nginx4 created

[root@master1 resourcequota]# kubectl run new-nginx5 --image=nginx -n my-ns

pod/new-nginx5 created

[root@master1 resourcequota]# kubectl describe resourcequotas sample-resourcequota -n my-ns

Name: sample-resourcequota

Namespace: my-ns

Resource Used Hard

-------- ---- ----

count/pods 10 10

- Set my-ns as the default namespace

[root@master1 resourcequota]# kubectl config set-context kubernetes-admin@kubernetes --namespace=my-ns

Context "kubernetes-admin@kubernetes" modified.

[root@master1 resourcequota]# kubectl config get-contexts kubernetes-admin@kubernetes

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* kubernetes-admin@kubernetes kubernetes kubernetes-admin my-ns

- Create sample-resourcequota-usable.yaml

apiVersion: v1
kind: ResourceQuota
metadata:
  name: sample-resourcequota-usable
spec:
  hard:
    requests.memory: 2Gi
    requests.storage: 5Gi
    sample-storageclass.storageclass.storage.k8s.io/requests.storage: 5Gi
    requests.ephemeral-storage: 5Gi
    requests.nvidia.com/gpu: 2
    limits.cpu: 4
    limits.ephemeral-storage: 10Gi
    limits.nvidia.com/gpu: 4

[root@master1 resourcequota]# kubectl apply -f sample-resourcequota-usable.yaml

resourcequota/sample-resourcequota-usable created

- Verify

[root@master1 resourcequota]# kubectl get resourcequotas

NAME AGE REQUEST LIMIT

sample-resourcequota 17m count/pods: 8/8

sample-resourcequota-usable 29s requests.ephemeral-storage: 0/5Gi, requests.memory: 0/2Gi, requests.nvidia.com/gpu: 0/2, requests.storage: 0/5Gi, sample-storageclass.storageclass.storage.k8s.io/requests.storage: 0/5Gi limits.cpu: 0/4, limits.ephemeral-storage: 0/10Gi, limits.nvidia.com/gpu: 0/4

- Create sample-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: sample-pod
spec:
  containers:
  - name: nginx-container
    image: nginx:1.16
    resources:
      requests: # minimum
        memory: "64Mi"
        cpu: "50m"
      limits: # maximum
        memory: "128Mi"
        cpu: "100m" # 1000 millicores = 1 core

[root@master1 resourcequota]# kubectl apply -f sample-pod.yaml

-> The Pod is not created

-> because sample-resourcequota caps the Pod count at 8 and all 8 are already in use

-> Raise the count, then run the command again

[root@master1 resourcequota]# kubectl edit resourcequotas sample-resourcequota

resourcequota/sample-resourcequota edited

[root@master1 resourcequota]# kubectl apply -f sample-pod.yaml

pod/sample-pod created

LimitRange

Create sample-limitrange-container.yaml

apiVersion: v1
kind: LimitRange
metadata:
  name: sample-limitrange-container
  namespace: my-ns # the describe output below reports Namespace: my-ns
spec:
  limits:
  - type: Container
    default:
      memory: 512Mi
      cpu: 500m
    defaultRequest:
      memory: 256Mi
      cpu: 250m
    max:
      memory: 1024Mi
      cpu: 1000m
    min:
      memory: 128Mi
      cpu: 125m
    maxLimitRequestRatio:
      memory: 2
      cpu: 2

[root@master1 limitrange]# kubectl apply -f sample-limitrange-container.yaml

- Verify

[root@master1 limitrange]# kubectl describe limitranges sample-limitrange-container

Name: sample-limitrange-container

Namespace: my-ns

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container memory 128Mi 1Gi 256Mi 512Mi 2

Container cpu 125m 1 250m 500m 2
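To see how the CPU row of this table is applied to a container, the sketch below fills in missing values and then checks min, max and the limit/request ratio. It is a simplified model covering CPU only; the real admission plugin handles more cases. The last two inputs in the demo are hypothetical values chosen to trigger a violation.

```python
def parse_cpu(quantity) -> int:
    """Convert a CPU quantity such as "125m" or "1" to millicores."""
    text = str(quantity)
    if text.endswith("m"):
        return int(text[:-1])
    return round(float(text) * 1000)


# CPU settings copied from sample-limitrange-container.
CPU_RANGE = {"min": "125m", "max": "1000m", "default": "500m",
             "defaultRequest": "250m", "maxLimitRequestRatio": 2}


def check_cpu(resources: dict, limit_range: dict = CPU_RANGE) -> list:
    """Return a list of violations; an empty list means the container passes."""
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    # Defaulting: a missing limit takes `default`; a missing request takes
    # the explicit limit if there is one, otherwise `defaultRequest`.
    limit = limits.get("cpu", limit_range["default"])
    if "cpu" in requests:
        request = requests["cpu"]
    elif "cpu" in limits:
        request = limits["cpu"]
    else:
        request = limit_range["defaultRequest"]
    req_m, lim_m = parse_cpu(request), parse_cpu(limit)
    violations = []
    if req_m < parse_cpu(limit_range["min"]):
        violations.append(f"request {req_m}m is below min")
    if lim_m > parse_cpu(limit_range["max"]):
        violations.append(f"limit {lim_m}m is above max")
    if lim_m > req_m * limit_range["maxLimitRequestRatio"]:
        violations.append(f"limit/request ratio {lim_m / req_m:.1f} is too high")
    return violations


if __name__ == "__main__":
    print(check_cpu({}))  # no resources given: defaults are applied
    print(check_cpu({"requests": {"cpu": "125m"}, "limits": {"cpu": "250m"}}))
    print(check_cpu({"requests": {"cpu": "100m"}, "limits": {"cpu": "100m"}}))  # hypothetical
    print(check_cpu({"requests": {"cpu": "125m"}, "limits": {"cpu": "500m"}}))  # hypothetical
```

A request of 125m with a limit of 250m sits exactly on the ratio of 2 and on the minimum, so it passes; only values beyond those boundaries are refused.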

[root@master1 limitrange]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

new-pod 1/1 Running 0 29m 10.244.2.27 worker2 <none> <none>

sample-pod 1/1 Running 0 43m 10.244.1.17 worker1 <none> <none>

sample-pod-limitrange 1/1 Running 0 22s 10.244.2.28 worker2 <none> <none>

sample-resource-76fb4d9889-254hg 1/1 Running 0 33m 10.244.1.19 worker1 <none> <none>

sample-resource-76fb4d9889-7nmcl 1/1 Running 0 33m 10.244.2.26 worker2 <none> <none>

sample-resource-76fb4d9889-snbcz 1/1 Running 0 33m 10.244.1.18 worker1 <none> <none>

sample-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: sample-pod-limitrange
spec:
  containers:
  - name: nginx-container
    image: nginx:1.16

sample-pod-overrequest.yaml

apiVersion: v1
kind: Pod
metadata:
  name: sample-pod-overrequest
spec:
  containers:
  - name: nginx-container
    image: nginx:1.16
    resources:
      requests:
        cpu: 125m
      limits:
        cpu: 125m

sample-pod-overratio.yaml

apiVersion: v1
kind: Pod
metadata:
  name: sample-pod-overratio
spec:
  containers:
  - name: nginx-container
    image: nginx:1.16
    resources:
      requests:
        cpu: 125m
      limits:
        cpu: 250m

- Verify

[root@master1 limitrange]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

new-pod 1/1 Running 0 45m 10.244.2.27 worker2 <none> <none>

sample-pod 1/1 Running 0 58m 10.244.1.17 worker1 <none> <none>

sample-pod-limitrange 1/1 Running 0 15m 10.244.2.28 worker2 <none> <none>

sample-pod-overratio 0/1 Pending 0 7s <none> <none> <none> <none>

sample-pod-overrequest 1/1 Running 0 5m43s 10.244.2.29 worker2 <none> <none>

sample-resource-76fb4d9889-254hg 1/1 Running 0 48m 10.244.1.19 worker1 <none> <none>

sample-resource-76fb4d9889-7nmcl 1/1 Running 0 48m 10.244.2.26 worker2 <none> <none>

sample-resource-76fb4d9889-snbcz 1/1 Running 0 48m 10.244.1.18 worker1 <none> <none>

Pod scheduling (automatic placement)

Create pod-schedule.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-schedule-metadata
  labels:
    app: pod-schedule-labels
spec:
  containers:
  - name: pod-schedule-containers
    image: nginx
    ports:
    - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: pod-schedule-service
spec:
  type: NodePort
  selector:
    app: pod-schedule-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@master1 schedule]# kubectl apply -f pod-schedule.yaml

pod/pod-schedule-metadata created

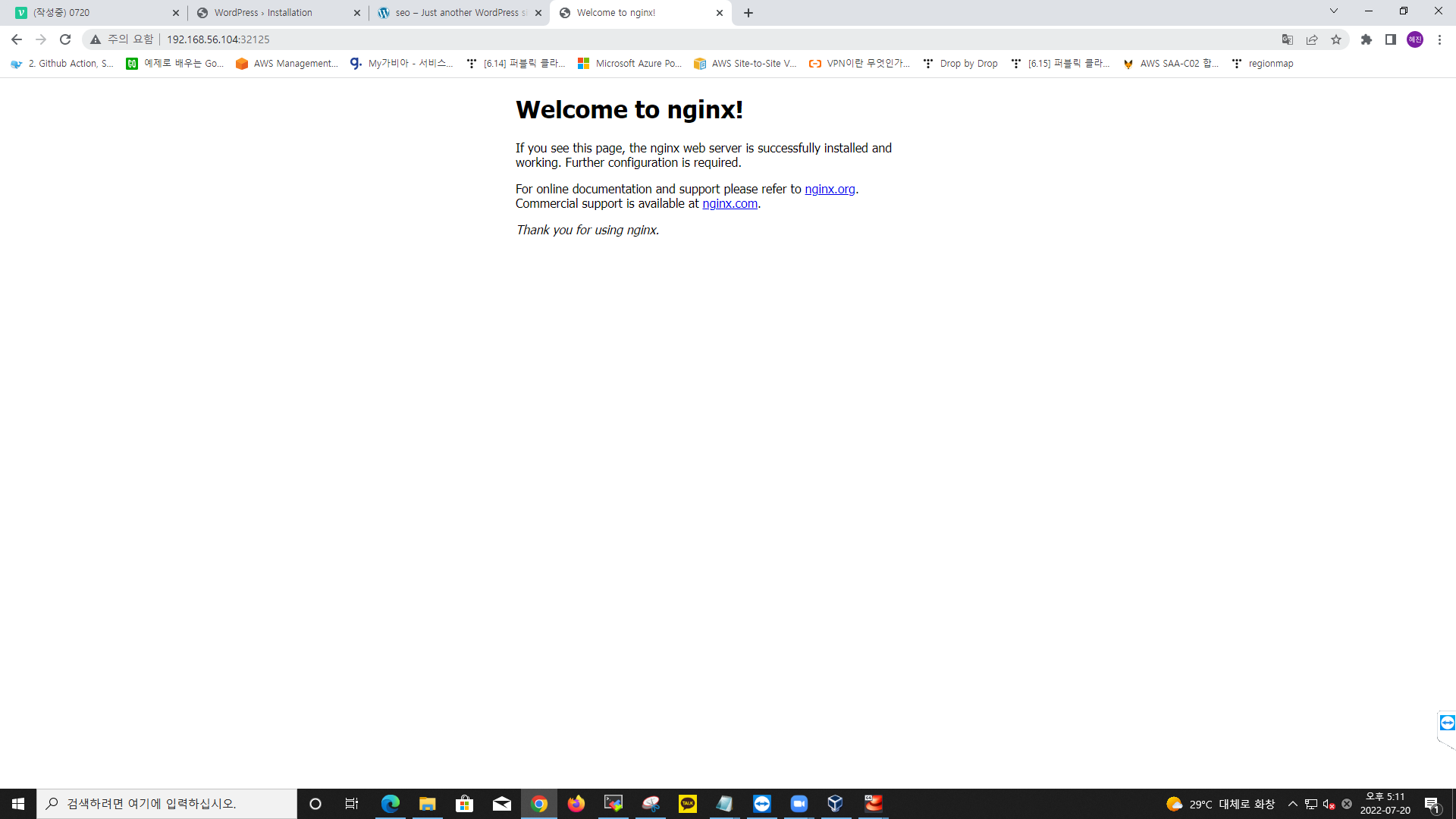

service/pod-schedule-service created- 확인

[root@master1 schedule]# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/pod-schedule-metadata 1/1 Running 0 29s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/pod-schedule-service NodePort 10.97.163.213 <none> 80:32125/TCP 29s

- Open 192.168.56.104:32125 in a browser

Pod nodeName (manual placement)

Create pod-nodename.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-nodename-metadata
  labels:
    app: pod-nodename-labels
spec:
  containers:
  - name: pod-nodename-containers
    image: nginx
    ports:
    - containerPort: 80
  nodeName: worker2
---
apiVersion: v1
kind: Service
metadata:
  name: pod-nodename-service
spec:
  type: NodePort
  selector:
    app: pod-nodename-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@master1 schedule]# kubectl apply -f pod-nodename.yaml

pod/pod-nodename-metadata created

service/pod-nodename-service created

- Verify

[root@master1 schedule]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ad-hoc1 1/1 Running 0 5m4s 10.244.2.32 worker2 <none> <none>

pod-nodename-metadata 1/1 Running 0 17s 10.244.2.33 worker2 <none> <none>

pod-schedule-metadata 1/1 Running 0 8m29s 10.244.1.21 worker1 <none> <none>

-> The Pod was created on worker2 because the manifest sets nodeName: worker2

Node selector (manual placement)

Label the node

[root@master1 schedule]# kubectl label node worker1 tier=dev

node/worker1 labeled

[root@master1 schedule]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

master1 Ready master 29h v1.19.16 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master1,kubernetes.io/os=linux,node-role.kubernetes.io/master=

worker1 Ready <none> 29h v1.19.16 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=worker1,kubernetes.io/os=linux,tier=dev

worker2 Ready <none> 29h v1.19.16 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=worker2,kubernetes.io/os=linux

- Create pod-nodeselector.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-nodeselector-metadata
  labels:
    app: pod-nodeselector-labels
spec:
  containers:
  - name: pod-nodeselector-containers
    image: nginx
    ports:
    - containerPort: 80
  nodeSelector:
    tier: dev
---
apiVersion: v1
kind: Service
metadata:
  name: pod-nodeselector-service
spec:
  type: NodePort
  selector:
    app: pod-nodeselector-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
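`nodeSelector` restricts the Pod to nodes whose labels contain every listed key/value pair. A minimal sketch of that filter, with node labels trimmed to the ones relevant here:

```python
def matches_node_selector(node_labels: dict, node_selector: dict) -> bool:
    """True when the node carries every label required by the selector."""
    return all(node_labels.get(key) == value
               for key, value in node_selector.items())


def candidate_nodes(nodes: dict, node_selector: dict) -> list:
    """Return the names of nodes that satisfy the selector."""
    return [name for name, labels in nodes.items()
            if matches_node_selector(labels, node_selector)]


if __name__ == "__main__":
    nodes = {
        "worker1": {"kubernetes.io/hostname": "worker1", "tier": "dev"},
        "worker2": {"kubernetes.io/hostname": "worker2"},
    }
    print(candidate_nodes(nodes, {"tier": "dev"}))  # ['worker1']
```

If no node carries the label, the list is empty and the Pod stays `Pending` until a matching node appears. Unlike `nodeName`, the scheduler still makes the final choice among the matching nodes.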

[root@master1 schedule]# vi pod-nodeselector.yaml

[root@master1 schedule]# kubectl apply -f pod-nodeselector.yaml

pod/pod-nodeselector-metadata created

service/pod-nodeselector-service created

- Verify

[root@master1 schedule]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ad-hoc1 1/1 Running 0 16m 10.244.2.32 worker2 <none> <none>

pod-nodename-metadata 1/1 Running 0 11m 10.244.2.33 worker2 <none> <none>

pod-nodeselector-metadata 1/1 Running 0 2m9s 10.244.1.22 worker1 <none> <none>

pod-schedule-metadata 1/1 Running 0 19m 10.244.1.21 worker1 <none> <none>

- Remove the label

[root@master1 schedule]# kubectl label nodes worker1 tier-

node/worker1 labeled

[root@master1 schedule]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

master1 Ready master 29h v1.19.16 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master1,kubernetes.io/os=linux,node-role.kubernetes.io/master=

worker1 Ready <none> 29h v1.19.16 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=worker1,kubernetes.io/os=linux

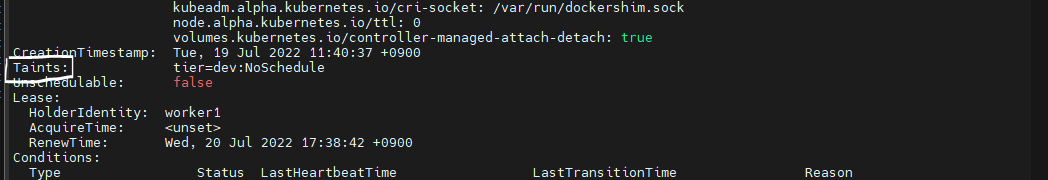

worker2 Ready <none> 29h v1.19.16 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=worker2,kubernetes.io/os=linuxtaint와 toleration

[root@master1 schedule]# kubectl taint node worker1 tier=dev:NoSchedule

node/worker1 tainted
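With `tier=dev:NoSchedule` on worker1, only Pods that tolerate that taint can be scheduled there. The sketch below models the matching rule. The toleration dictionaries are illustrative examples and do not come from these notes.

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Check whether one toleration matches one taint."""
    effect = toleration.get("effect", "")
    if effect and effect != taint["effect"]:
        return False                      # an empty effect matches any effect
    operator = toleration.get("operator", "Equal")
    key = toleration.get("key", "")
    if not key:
        return operator == "Exists"       # empty key + Exists matches every taint
    if key != taint["key"]:
        return False
    if operator == "Exists":
        return True                       # the value is ignored
    return toleration.get("value", "") == taint.get("value", "")


def schedulable(taints: list, tolerations: list) -> bool:
    """A node is usable when every blocking taint has a matching toleration."""
    blocking = [t for t in taints if t["effect"] in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, taint) for tol in tolerations)
               for taint in blocking)


if __name__ == "__main__":
    worker1_taints = [{"key": "tier", "value": "dev", "effect": "NoSchedule"}]
    print(schedulable(worker1_taints, []))  # False: no toleration at all
    print(schedulable(worker1_taints, [
        {"key": "tier", "operator": "Equal", "value": "dev", "effect": "NoSchedule"},
    ]))  # True
```

A toleration only permits placement on the tainted node; it does not pull the Pod toward it. Combining it with `nodeSelector` is one way to both allow and require worker1.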