Why do we need MLOps?

To keep the model (and the service built on it) agile in a constantly changing environment.

Components

- Server infra: Cloud / On-premises (private server)

- GPU infra: Cloud GPU / Local GPU

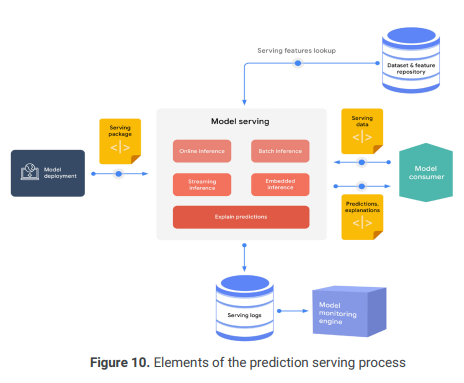

- Serving

- Batch serving: Provide service periodically

- use stored data

- Online serving: Provide service constantly

- use stream of data

- take care to avoid bottlenecks

- scalability required

- e.g. Cortex (Cortex Labs), TensorFlow Serving


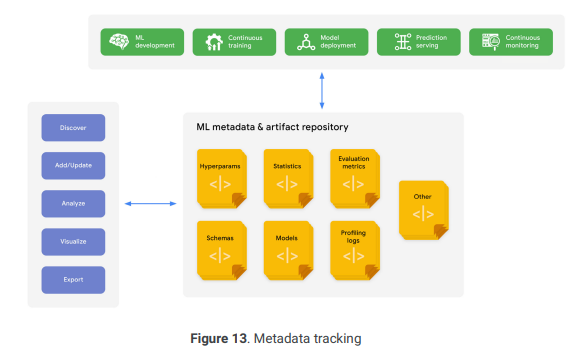
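The difference between the two serving modes above can be sketched in a few lines of Python. This is only an illustration under assumptions: `predict` is a hypothetical stand-in model, and `batch_serve` / `online_serve` are made-up names, not the API of Cortex or TensorFlow Serving.

```python
from typing import Iterable, Iterator, List


def predict(x: float) -> float:
    """Hypothetical stand-in for a trained model: a fixed linear function."""
    return 2.0 * x + 1.0


def batch_serve(stored_rows: List[float]) -> List[float]:
    """Batch serving: score all stored data in one periodic job."""
    return [predict(x) for x in stored_rows]


def online_serve(stream: Iterable[float]) -> Iterator[float]:
    """Online serving: score each request as soon as it arrives."""
    for x in stream:
        yield predict(x)


# Batch: the whole stored dataset is scored at once (e.g. once a night).
batch_results = batch_serve([1.0, 2.0, 3.0])

# Online: results are produced one by one while the stream is consumed.
online_results = list(online_serve(iter([1.0, 2.0, 3.0])))
```

In the online case every request passes through `predict` individually, which is why bottlenecks and scalability matter more there than in the batch case.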

- Experiment, Model Management

- Storing the by-products of experiments (model artifacts, metrics, metadata, images, hyperparameters, etc.)

- e.g. MLflow

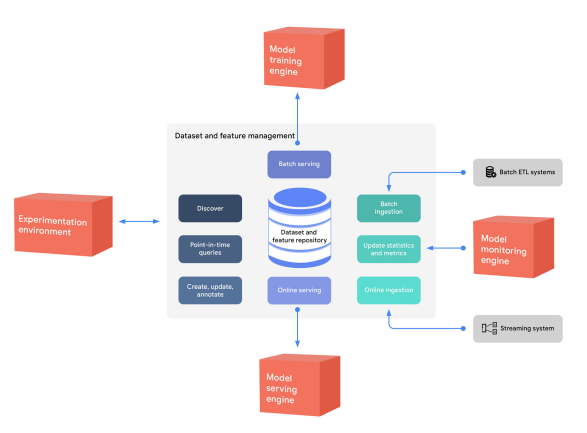
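To make "storing the by-products of experiments" concrete, here is a minimal sketch using only the Python standard library. It is not the MLflow API; `log_run`, `best_run`, and the `run.json` layout are assumptions made up for this example.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List


def log_run(root: Path, run_id: str, params: Dict[str, float],
            metrics: Dict[str, float], artifacts: List[str]) -> Path:
    """Write one experiment's hyperparameters, metrics and artifact names to disk."""
    run_dir = root / run_id
    run_dir.mkdir(parents=True, exist_ok=True)
    record = {"run_id": run_id, "params": params,
              "metrics": metrics, "artifacts": artifacts}
    path = run_dir / "run.json"
    path.write_text(json.dumps(record, indent=2))
    return path


def best_run(root: Path, metric: str) -> dict:
    """Return the stored run with the highest value of the given metric."""
    runs = [json.loads(p.read_text()) for p in root.glob("*/run.json")]
    return max(runs, key=lambda r: r["metrics"][metric])


# Hypothetical experiments; the numbers are illustrative only.
root = Path(tempfile.mkdtemp())
log_run(root, "run-1", {"lr": 0.1}, {"accuracy": 0.81}, ["model.pkl"])
log_run(root, "run-2", {"lr": 0.01}, {"accuracy": 0.86}, ["model.pkl"])
best = best_run(root, "accuracy")
```

Because every run is stored with its parameters, the best model can later be traced back to the exact settings that produced it.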

- Feature Store

- Storing ML features

- e.g. Feast (feast-dev)

- Data Validation

- To ensure that production data is similar to research-stage data

- TFDV (TensorFlow Data Validation): feature validation

- AWS Deequ: data quality metrics, unit tests for data

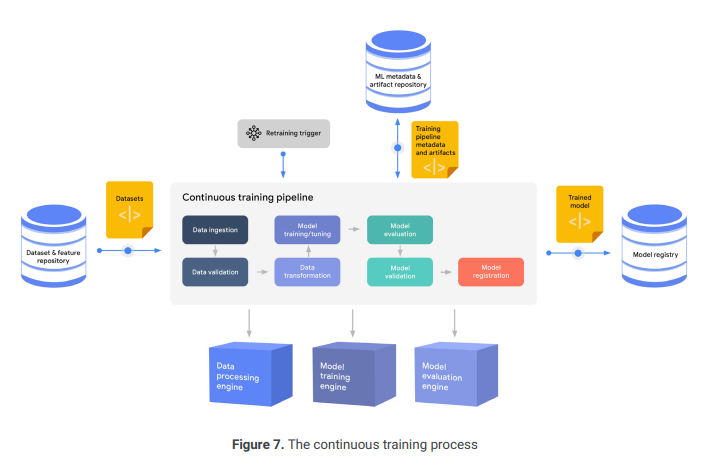
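The idea behind data validation, checking that production data still looks like research-stage data, can be sketched as follows. This is a simplified stand-in, not the TFDV or Deequ API: `infer_schema` and `validate` are hypothetical helpers, and the "schema" here is just a min/max range per feature.

```python
from typing import Dict, List, Tuple

Row = Dict[str, float]
Schema = Dict[str, Tuple[float, float]]


def infer_schema(rows: List[Row]) -> Schema:
    """Learn the observed (min, max) range of every feature from research data."""
    schema: Schema = {}
    for row in rows:
        for name, value in row.items():
            lo, hi = schema.get(name, (value, value))
            schema[name] = (min(lo, value), max(hi, value))
    return schema


def validate(rows: List[Row], schema: Schema) -> List[str]:
    """Report rows with missing features or values outside the learned range."""
    anomalies: List[str] = []
    for i, row in enumerate(rows):
        for name, (lo, hi) in schema.items():
            if name not in row:
                anomalies.append(f"row {i}: missing feature '{name}'")
            elif not lo <= row[name] <= hi:
                anomalies.append(f"row {i}: '{name}'={row[name]} outside [{lo}, {hi}]")
    return anomalies


# Illustrative data only.
research = [{"age": 20, "income": 30.0}, {"age": 60, "income": 90.0}]
production = [{"age": 35, "income": 50.0},
              {"age": 140, "income": 40.0},   # out-of-range age
              {"income": 70.0}]               # age is missing

schema = infer_schema(research)
anomalies = validate(production, schema)
```

Real tools check much more (types, distributions, drift), but the pattern is the same: derive expectations from trusted data, then test new data against them.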

- Continuous Training

- Retrain the model

- triggers: new data, a simple periodic schedule, a change in metrics, etc.

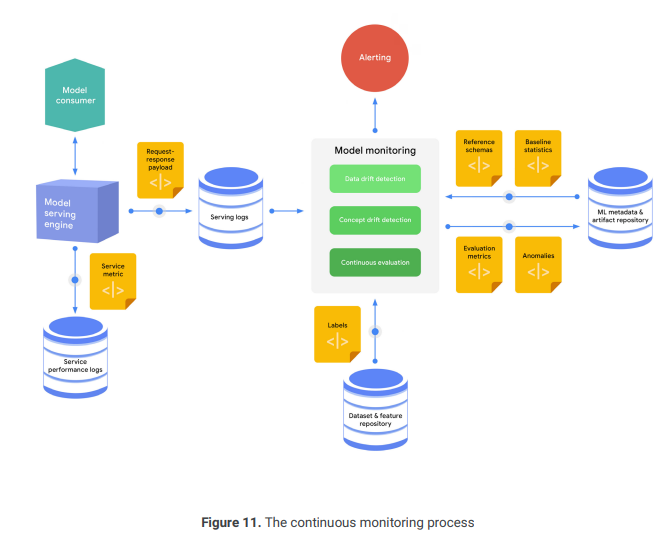
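The retraining triggers listed above (new data, a periodic schedule, a metric change) can be combined into a single check. The sketch below is an assumption-laden illustration: `RetrainPolicy`, `should_retrain`, and all threshold values are hypothetical and would have to be tuned per project.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RetrainPolicy:
    """Hypothetical thresholds; the defaults are placeholders, not recommendations."""
    min_new_rows: int = 1000
    max_days_since_training: int = 7
    min_accuracy: float = 0.80


def should_retrain(policy: RetrainPolicy, new_rows: int,
                   days_since_training: int, current_accuracy: float) -> List[str]:
    """Return the reasons to retrain; an empty list means no retraining is needed."""
    reasons: List[str] = []
    if new_rows >= policy.min_new_rows:
        reasons.append("new data")
    if days_since_training >= policy.max_days_since_training:
        reasons.append("schedule")
    if current_accuracy < policy.min_accuracy:
        reasons.append("metric drop")
    return reasons


policy = RetrainPolicy()
quiet = should_retrain(policy, new_rows=50, days_since_training=2, current_accuracy=0.90)
busy = should_retrain(policy, new_rows=1500, days_since_training=2, current_accuracy=0.75)
```

Returning the reasons rather than a bare boolean makes it easy to log why a retraining job was started.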

- Monitoring

- Monitoring the effectiveness and efficiency of a model in production, to prevent performance decay

- All together

My top 3 picks among the components

- Serving

- Isn't this the whole point of this system?

- Monitoring

- Monitoring is essential for providing consistent performance. I think this is one of the most important conditions for retaining customers.

- Feature storing

- To fulfill customers' needs, it is important to update the service (model) rapidly. This can be done by continuously storing the latest features and using them to adjust the model.
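The feature-storing idea, always keeping the latest features available for the model, can be sketched as a tiny in-memory store. This is not the Feast API; `LatestFeatureStore`, its methods, and the feature name `clicks_7d` are hypothetical names chosen for this example.

```python
from typing import Dict, Optional, Tuple


class LatestFeatureStore:
    """Keeps only the most recent feature values per entity (in memory)."""

    def __init__(self) -> None:
        # entity_id -> (event timestamp, feature values)
        self._rows: Dict[str, Tuple[int, Dict[str, float]]] = {}

    def put(self, entity_id: str, timestamp: int, features: Dict[str, float]) -> None:
        """Store features unless a newer record for this entity already exists."""
        current = self._rows.get(entity_id)
        if current is None or timestamp >= current[0]:
            self._rows[entity_id] = (timestamp, dict(features))

    def get(self, entity_id: str) -> Optional[Dict[str, float]]:
        """Return a copy of the latest features, or None for an unknown entity."""
        row = self._rows.get(entity_id)
        return dict(row[1]) if row else None


store = LatestFeatureStore()
store.put("user-1", 100, {"clicks_7d": 3.0})
store.put("user-1", 200, {"clicks_7d": 5.0})
store.put("user-1", 150, {"clicks_7d": 4.0})  # late-arriving older event, ignored
latest = store.get("user-1")
```

Training and serving can then read from the same store, so the model is adjusted with the same fresh features it will see in production.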

All images are from "practitioners_guide_to_mlops_whitepaper" (Google, 2021).