Introduction

Recently, my company required time synchronization with PTP-like precision for VMs on ESXi, so I had the opportunity to test out ESXi's PTP implementation, which I had known about only conceptually.

In this post, I'll cover in detail what ESXi's PTP implementation consists of and how you can manipulate its configuration parameters, including some undocumented analysis.

Disclaimer: The workarounds in this article are not officially supported by VMware, and you are responsible for any issues that arise from using them.

So, What is PTP?

For people who are not familiar with time synchronization technology, Precision Time Protocol (PTP) may sound alien.

Precision Time Protocol (PTP), standardized as IEEE 1588, can be thought of as a more precise counterpart to the popular NTP protocol: it allows precise compensation for path delay.

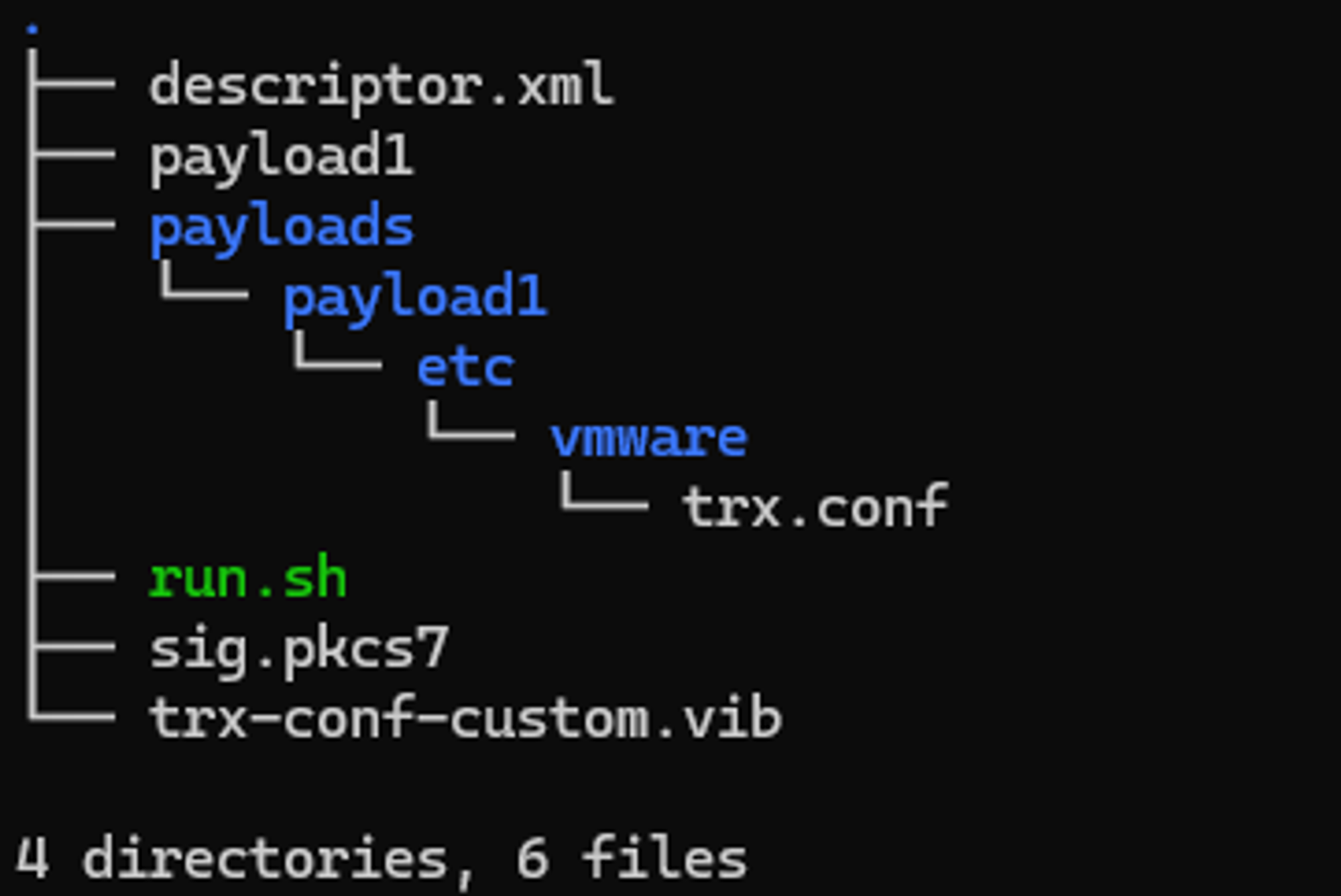

[Figure 1. PTP's Path Delay calculation mechanism]

The mechanics of PTP are beyond the scope of this article, so I won't go into detail, but basically, the Delay Request/Delay Response mechanism above lets the Slave measure the path delay between Master and Slave precisely and compensate for it.
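For readers who want to see the arithmetic behind Figure 1, here is a minimal sketch of the standard end-to-end (E2E) calculation from the four timestamps of one Sync/Delay_Req exchange. It assumes the path delay is symmetric in both directions, which is the core assumption of this mechanism; the names and sample numbers are illustrative and not taken from ESXi.

```python
from dataclasses import dataclass


@dataclass
class E2EExchange:
    """Timestamps (nanoseconds) from one Sync / Delay_Req exchange."""
    t1: int  # Master sends Sync (Master clock)
    t2: int  # Slave receives Sync (Slave clock)
    t3: int  # Slave sends Delay_Req (Slave clock)
    t4: int  # Master receives Delay_Req (Master clock)


def mean_path_delay(x: E2EExchange) -> float:
    # Averaging both directions cancels the clock offset,
    # assuming the path delay is symmetric.
    return ((x.t2 - x.t1) + (x.t4 - x.t3)) / 2


def offset_from_master(x: E2EExchange) -> float:
    # Positive result: the Slave clock is ahead of the Master clock.
    return ((x.t2 - x.t1) - (x.t4 - x.t3)) / 2


if __name__ == "__main__":
    # Hypothetical exchange: one-way delay 500 ns, Slave 1,000 ns ahead.
    ex = E2EExchange(t1=0, t2=1_500, t3=10_000, t4=9_500)
    print(mean_path_delay(ex), offset_from_master(ex))  # 500.0 1000.0
```

The Slave then steers its clock by the computed offset; the precision of the result depends directly on how close t1..t4 are to the true send/receive instants, which is why hardware timestamping matters later in this article.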

VMware has supported PTP-based time synchronization since vSphere 7.0.2, because telcos require it to run VNFs on ESXi.

[Figure 2. Official blog introducing PTP support in ESXi (Source)]

PTP Support on ESXi

VMware requires Intel X710 or E810 NICs for PTP on ESXi. As we'll see later, there's a good reason for this limitation, and we'll also see how to find NICs that are supported but not documented.

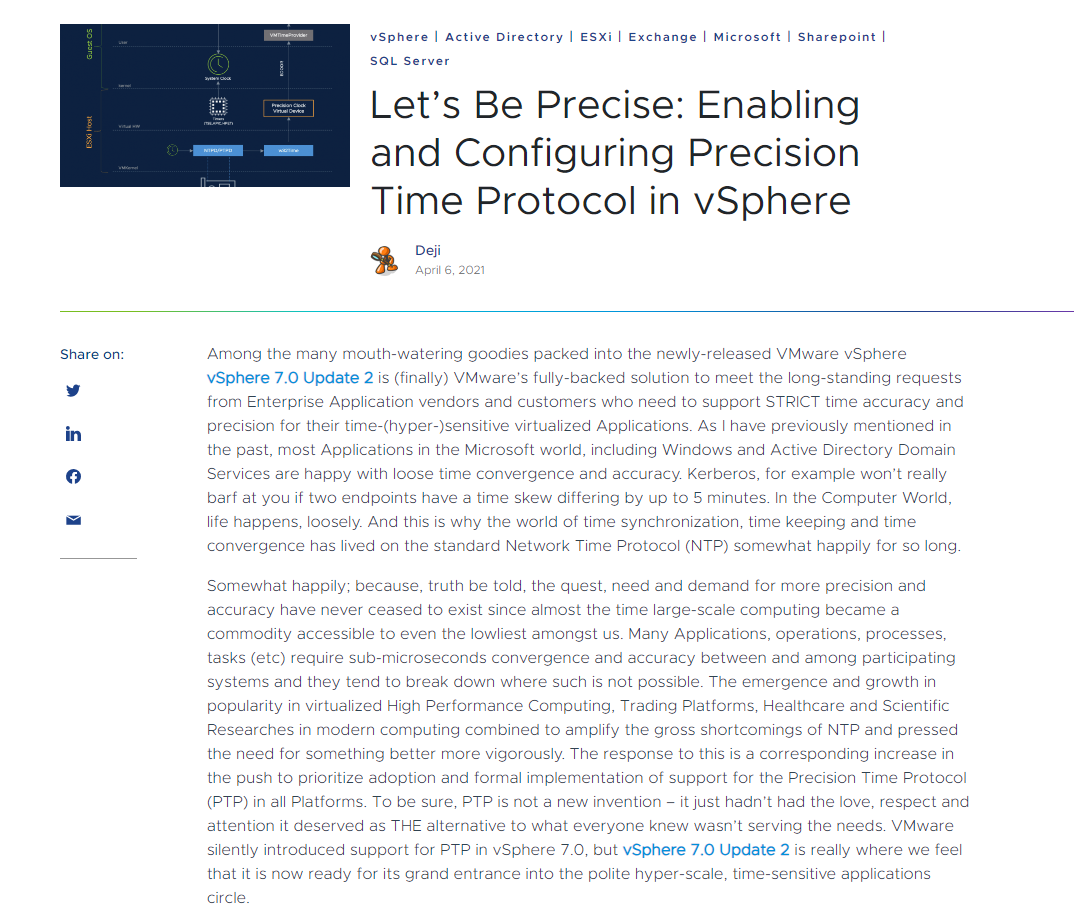

The list of NICs officially supported by VMware can be found in the Compatibility Guide.

If you select IO Devices > ESXi Hardware Timestamp based PTP, you can see that three NICs are supported as of vSphere 8.0 U3.

[Figure 3. NICs officially supported by vSphere]

Since all three NICs use the E810 chipset, it seems safe to assume that the Intel E810 is the only NIC officially supported by vSphere 8.

ESXi provides two methods of PTP time synchronization: one uses a VMkernel adapter with software timestamping, and the other uses hardware timestamping by passing through the physical NIC.

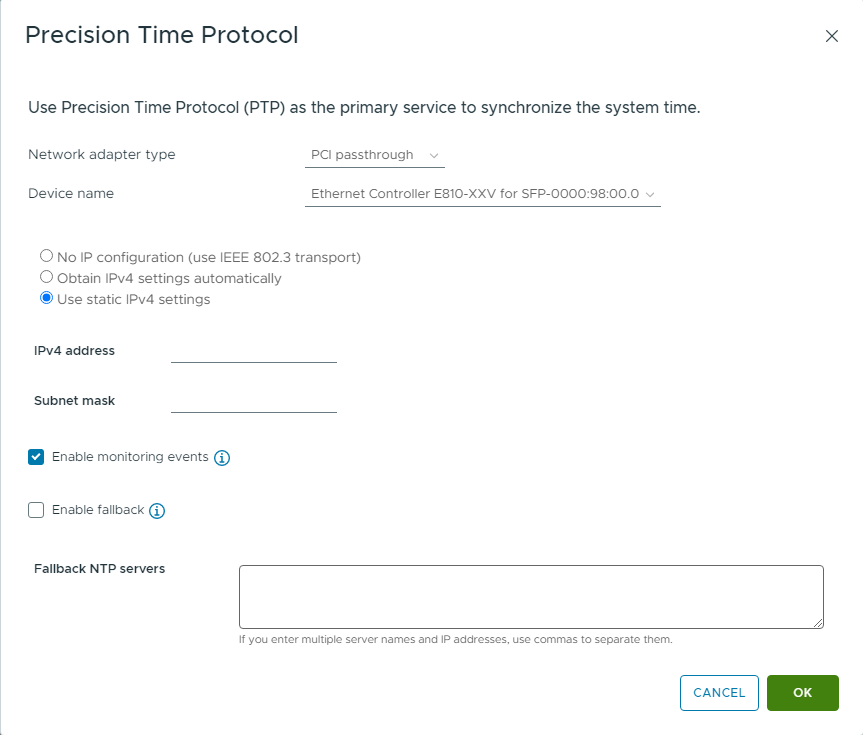

[Figure 4. PCI-E Passthrough-based PTP Configuration]

Because software timestamping does not achieve PTP's goal of precise time synchronization, you will typically want to use a PTP implementation over PCI-E Passthrough.

To configure PTP in more detail, I checked which commands esxcli provides.

ESXCLI can change PTP-related configurations through the esxcli system ptp namespace.

However, the only things you can change in ESXCLI that are not in the GUI are domainNumber and calibration_delay.

We can use configstorecli to dig deeper into the configuration. As mentioned in the previous post, starting with vSphere 7.0.3, ESXi began consolidating all configuration files into a database called the Config Store. You can see which files are included in the Config Store at the following link.

Because ESXCLI modifies the Config Store through VMOMI, changing settings in ESXi comes down to answering the question, “What values can I put in the Config Store?”

You can answer this question with the command configstorecli schema get -c esx -g system -k system_time.

This command shows the format of the NTP and PTP settings that can be stored in the Config Store. As of 8.0.2, only four PTP-related settings are stored in the Config Store:

log_level, calibration_delay, ports, domain_number

Should we be thankful that, unlike in 7.0.3, we can at least set domain_number and calibration_delay?

Unfortunately, this is not enough to properly configure PTP, as PTP parameters can vary greatly depending on your network structure and usage.

Until 7.0.2, this could be handled by modifying the /etc/ptpd.conf file, but since 7.0.3, the daemon references the Config Store configuration, making this impossible.

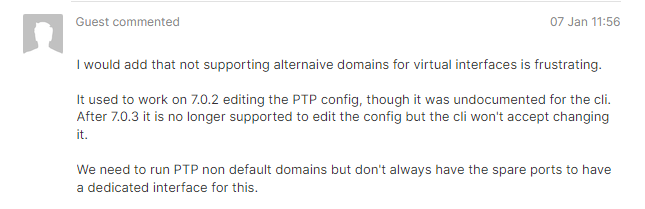

[Figure 5. An anonymous user is upset that they can't change their PTP Domain settings]

I found other people complaining about this issue, and while vSphere 8 addressed some of the complaints, it didn't meet our company's needs.

In other words, VMware does not officially support configuration changes beyond this.

Instead of stopping my analysis here, I decided to dig deeper.

PTP Implementations on ESXi

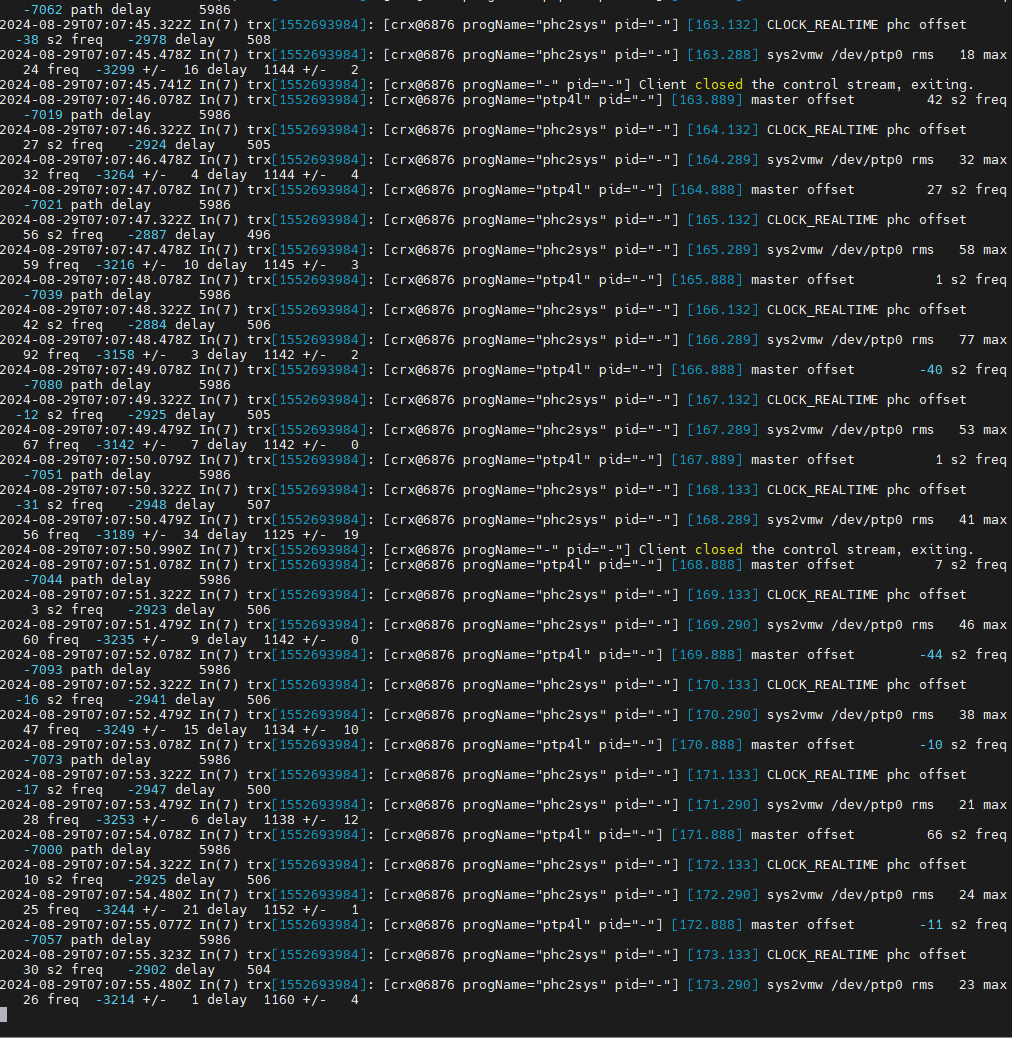

The best way to understand what we can do with ESXi's PTP implementation is to look at the logs.

After a quick look at vmkernel.log and hostd.log, I found that the PTP service logs to /var/run/log/trx.log, and the contents of trx.log show that the PTP service relies on Container Runtime for ESXi (CRX).

Container Runtime for ESXi

CRX is a lightweight Linux container implementation for ESXi, developed for vSphere Pods. You can read more about it here; it is unique in that it runs on top of VMX. This also explains why a pNIC must be passed through when setting up PTP as described above.

TRX, ESXi's hardware timestamp PTP implementation, runs in a container on top of VMX and requires passthrough to connect PCI-E devices directly to VMX.

Now that we know that TRX is dependent on CRX, what more can we do with it?

CRX-CLI

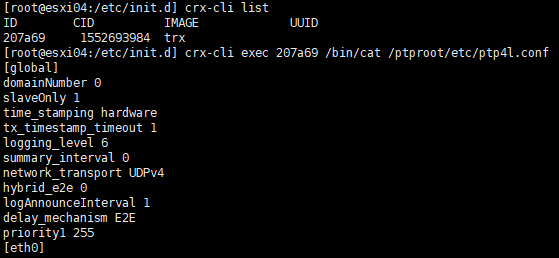

crx-cli is a command-line tool for managing containers running on ESXi. It is not much different from docker-cli, and I was able to use it to see what was going on inside a running TRX container.

The Architecture of TRX

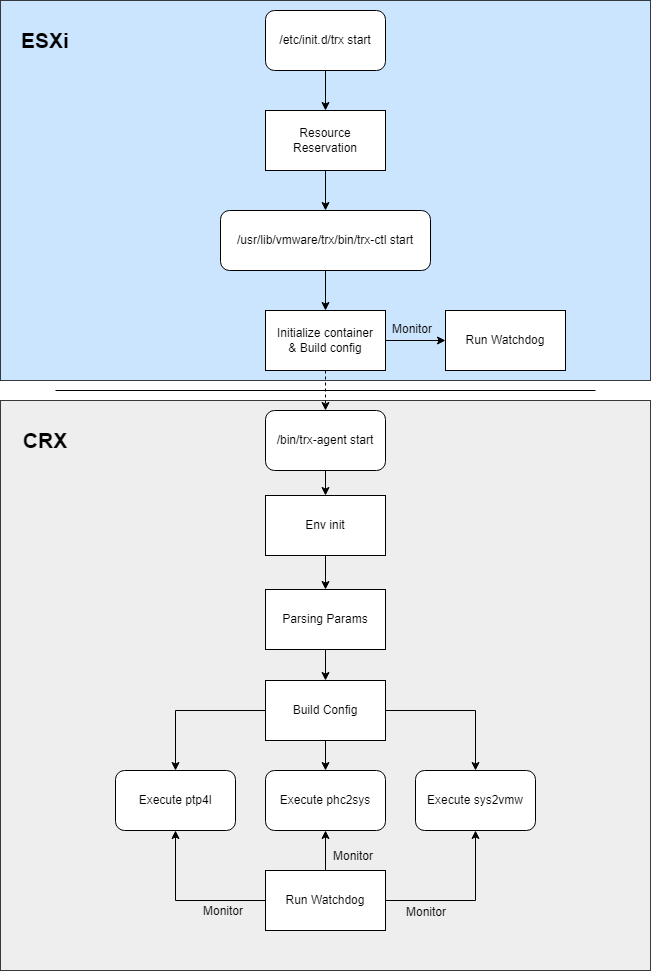

After investing a little time and effort, here's how TRX's execution flow is structured.

[Figure 6. Execution flow of TRX]

Since CRX is a lightweight Linux container, TRX can use the standard Linux ptp4l and phc2sys daemons (from the linuxptp project) as-is.

You can imagine that the vSphere team must have put a lot of thought into how to best support PTP on ESXi without requiring additional development.

Digging a little deeper into the files above, I found some interesting things.

List of devices supported by TRX

In the /etc/trx directory of the container, I found a file called device.list, which lists the VID/DIDs and driver modules for the devices supported by TRX.

This file can also be found in the /usr/lib/vmware/trx/etc directory on the ESXi host.

If you check the contents, you'll see that, unlike the official VMware Compatibility Guide, it lists both the X710 and the E810.

Of particular interest in this file is the vmxnet3 entry. Given that it is described as a mock device, I assume it is there so that testing and validation can be done on a nested testbed.
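To illustrate how a VID/DID-to-driver table like device.list is used, here is a minimal lookup sketch. The real file layout is not reproduced in this article, so the parser assumes a hypothetical format of one "<vid> <did> <module> [description]" entry per line with hex IDs. The sample PCI IDs are real Intel/VMware IDs, but the entries and the Linux module names (ice, i40e) are illustrative assumptions, not copied from ESXi.

```python
# Sketch: look up a NIC's PCI VID/DID in a device.list-style table.
# ASSUMPTION: hypothetical "<vid> <did> <module> [description]" layout,
# hex IDs, '#' comment lines. Adapt to the actual /etc/trx/device.list.
from typing import Dict, Optional, Tuple

DeviceTable = Dict[Tuple[int, int], str]


def parse_device_list(text: str) -> DeviceTable:
    """Map (vendor_id, device_id) -> driver module name."""
    table: DeviceTable = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):  # assumed comment syntax
            continue
        fields = line.split()
        if len(fields) < 3:
            raise ValueError(f"malformed entry: {raw!r}")
        vid, did = int(fields[0], 16), int(fields[1], 16)
        table[(vid, did)] = fields[2]
    return table


def driver_for(table: DeviceTable, vid: int, did: int) -> Optional[str]:
    """Return the module that would be loaded, or None if the NIC is unlisted."""
    return table.get((vid, did))


# Illustrative entries only: real PCI IDs, hypothetical file layout.
SAMPLE = """
# vid  did  module   description
15ad 07b0 vmxnet3  mock device for nested testing
8086 1572 i40e     X710 10GbE SFP+
8086 1592 ice      E810-C QSFP
"""

if __name__ == "__main__":
    table = parse_device_list(SAMPLE)
    print(driver_for(table, 0x8086, 0x1592))  # ice
    print(driver_for(table, 0x15B3, 0x1017))  # None: Mellanox (vendor 15b3) is not listed
```

The None case corresponds to the Mellanox scenario described under the undocumented limitations: if a NIC's IDs are not in the table, there is no driver module to load.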

Pushing arbitrary ptp4l settings to TRX

Before we get started, I want to disclose that this configuration is not officially supported by VMware, that using ESXi in ways VMware did not intend, such as those in this section, can have unexpected side effects, and that VMware's engineers may refuse service requests for failures that result from it.

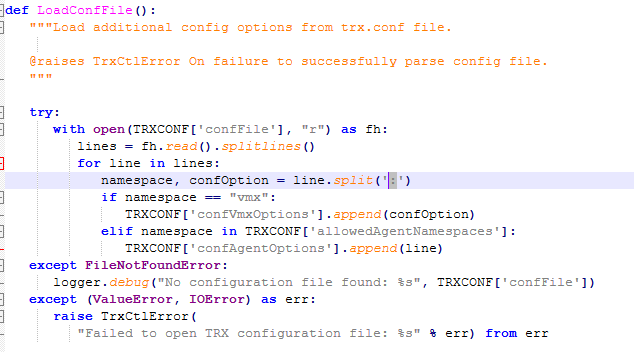

While looking at the source code of trx-ctl, I noticed something interesting.

trx-ctl is designed to pass an arbitrary string to trx-agent when the -c option is used, which is perfect for applying arbitrary settings to an internal service for debugging purposes, right?

So I dug a little deeper into the code of trx-ctl and trx-agent and found the functionality I needed.

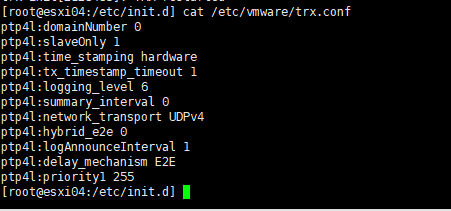

/etc/vmware/trx.conf

This file does not exist by default, but if it exists when TRX is run, trx-ctl reads the contents of the file line by line, parses it, and passes the values to trx-agent as appropriate.

The syntax of this file is as follows:

<namespace>:<conf_key> <conf_value>

The namespace and the config key are separated by a colon (:), and the config key and its value are separated by a space.

As of 8.0.2, the namespace can be one of three values:

ptp4l, phc2sys, sys2vmw

If you write syntactically correct settings to /etc/vmware/trx.conf and then start the TRX container, you should get the following results.

[Figure 7-8. Using trx.conf to apply arbitrary settings to ptp4l on TRX]

I also confirmed that synchronization over PTP works fine.

[Figure 9. Execution log of TRX with arbitrary settings]
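To make the trx.conf syntax concrete, here is a minimal parser sketch that follows the rules described above: the namespace and key are split on ":", the key and value on the first space. It is an illustrative re-implementation, not the actual trx-ctl code, and ignoring blank lines is an assumption. The example options (delay_mechanism, logSyncInterval) are real ptp4l settings, but the values are only examples.

```python
# Sketch of a parser for the trx.conf syntax:
#   <namespace>:<conf_key> <conf_value>
# Illustrative re-implementation, NOT the actual trx-ctl code.
from typing import Dict, List, Tuple

NAMESPACES = ("ptp4l", "phc2sys", "sys2vmw")  # valid namespaces as of 8.0.2


def parse_trx_conf(text: str) -> Dict[str, List[Tuple[str, str]]]:
    """Group (key, value) pairs by namespace, preserving file order."""
    settings: Dict[str, List[Tuple[str, str]]] = {ns: [] for ns in NAMESPACES}
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line:  # assumption: blank lines are ignored
            continue
        head, sep, value = line.partition(" ")  # key and value: first space
        if not sep or not value.strip():
            raise ValueError(f"line {lineno}: missing value: {raw!r}")
        parts = head.split(":")  # namespace and key: ':'
        if len(parts) != 2:
            raise ValueError(f"line {lineno}: expected <namespace>:<key>: {raw!r}")
        namespace, key = parts
        if namespace not in settings:
            raise ValueError(f"line {lineno}: unknown namespace {namespace!r}")
        settings[namespace].append((key, value.strip()))
    return settings


EXAMPLE = """\
ptp4l:delay_mechanism P2P
ptp4l:logSyncInterval -3
"""

if __name__ == "__main__":
    for ns, pairs in parse_trx_conf(EXAMPLE).items():
        print(ns, pairs)
```

Note that a per-port line such as ptp4l:port0:delay_mechanism P2P is rejected by this sketch, because splitting on ":" yields three parts; Appendix B describes the same problem in the real trx-ctl.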

Undocumented limitations of TRX

I've summarized the undocumented constraints that I found during my source code analysis.

- ESXi's PTP implementation only supports E2E as the delay mechanism - this is the limitation that led me to analyze TRX, and it is addressed by the workaround in this article.

- Passing through NICs other than E810/X710/VMXNET3 does not work with TRX - TRX dynamically loads the NIC driver's kernel modules during initialization. If you set up a Mellanox NIC, it will not work because the driver is not loaded.

- Pass-through targets must be PF 0 - there are validation routines that prevent passing through PF 1 from working (This may be a limitation of the E810 only.)
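The second and third constraints can be expressed as a simple pre-flight check before you configure passthrough. This is a hypothetical helper, not something TRX ships: it assumes the NIC's PCI address is in the usual domain:bus:device.function form (for example 0000:3b:00.0), that "PF 0" corresponds to PCI function number 0, and it takes the NIC family as a plain string rather than reading real PCI IDs.

```python
# Hypothetical pre-flight check for the TRX constraints listed above.
# Assumptions: PCI address in "[domain:]bus:device.function" form, and
# "PF 0" == PCI function 0 (may only matter for the E810).
import re
from typing import List

BDF_RE = re.compile(r"^(?:[0-9a-fA-F]{4}:)?[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.([0-7])$")
SUPPORTED_FAMILIES = {"E810", "X710", "VMXNET3"}


def pci_function(address: str) -> int:
    """Extract the PCI function number from a BDF address."""
    m = BDF_RE.match(address)
    if not m:
        raise ValueError(f"not a PCI BDF address: {address!r}")
    return int(m.group(1))


def check_candidate(address: str, family: str) -> List[str]:
    """Return a list of problems; an empty list means no known constraint is violated."""
    problems: List[str] = []
    if family.upper() not in SUPPORTED_FAMILIES:
        problems.append(f"{family}: TRX will not load a driver for this NIC")
    if pci_function(address) != 0:
        problems.append(f"{address}: not PF 0, TRX validation is expected to reject it")
    return problems


if __name__ == "__main__":
    for addr, fam in [("0000:3b:00.0", "E810"), ("0000:3b:00.1", "E810"), ("3b:00.0", "ConnectX-5")]:
        print(addr, fam, check_candidate(addr, fam) or "OK")
```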

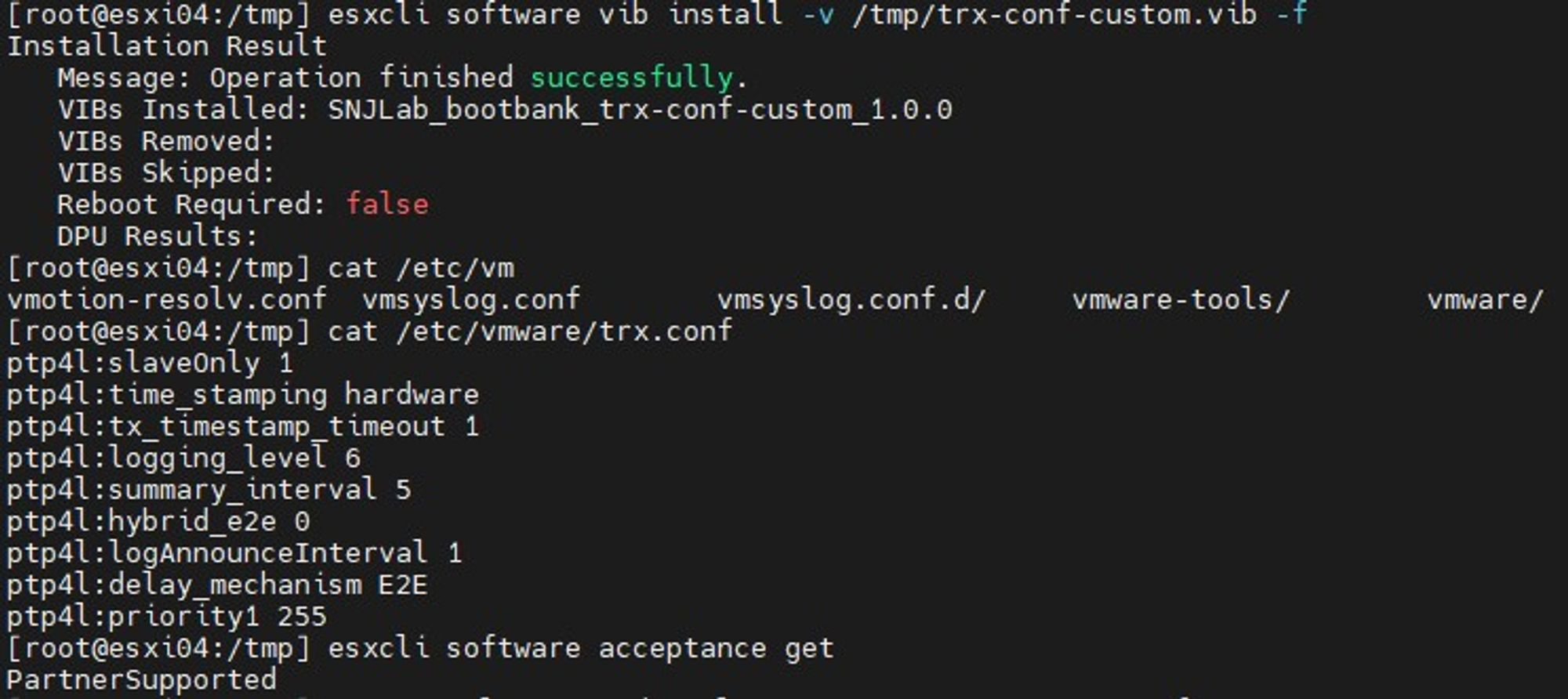

Appendix A. Make /etc/vmware/trx.conf persistent

There's still one last thing left to do. Because ESXi reverts any files we've written or modified when it reboots, we need to take steps to preserve trx.conf so that our settings survive a reboot.

We can get around this by writing a custom VIB, and William Lam has written a nice script to do this.

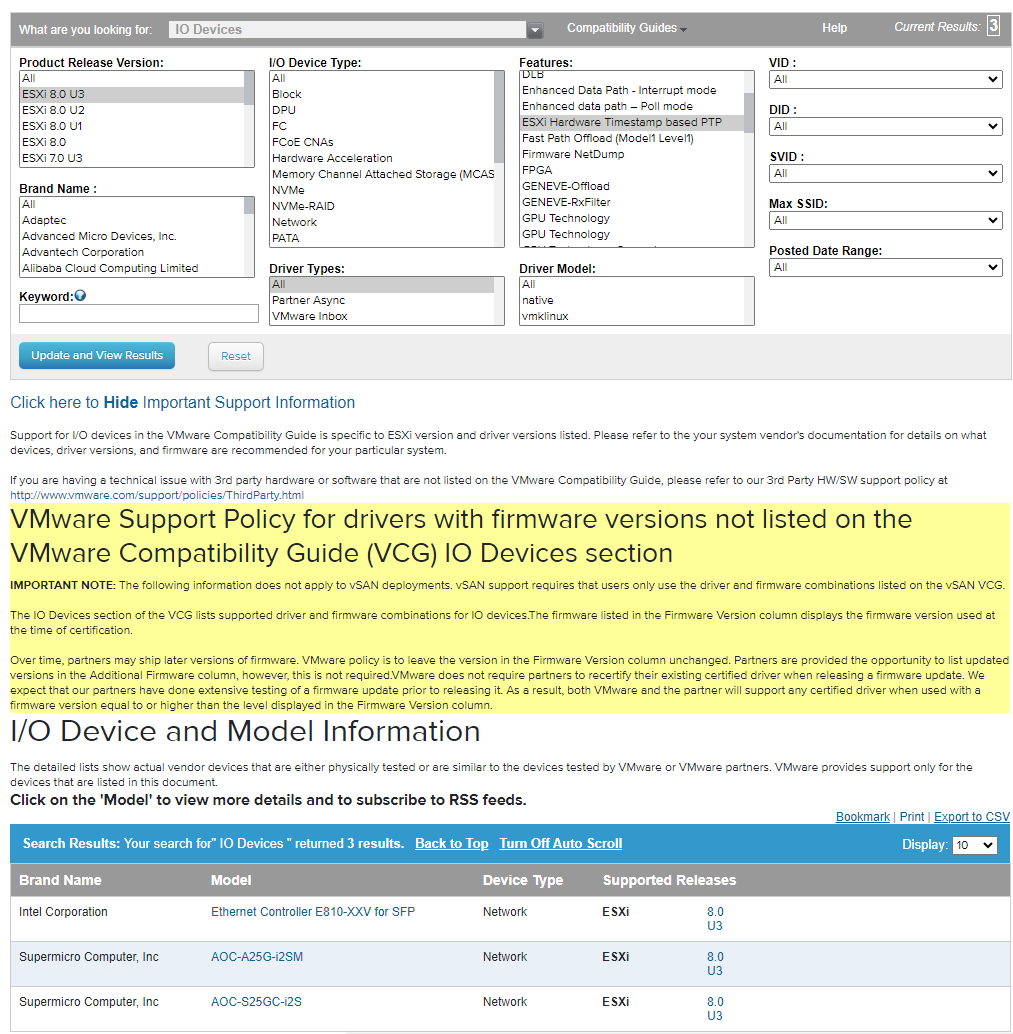

The initial directory structure for creating the VIB looks like this:

/tmp/vib-trx-conf
├── payloads
│   └── payload1
│       └── etc
│           └── vmware
│               └── trx.conf
└── run.sh

After running the VIB build script, the final directory structure looks like this:

/tmp/vib-trx-conf
├── payloads
│   └── payload1
│       └── etc
│           └── vmware
│               └── trx.conf
├── run.sh
├── sig.pkcs7
└── trx-conf-custom.vib

After installing the newly created VIB file on ESXi, the result is as follows.

Note that an Acceptance Level of PartnerSupported or lower is required, and that the -f option must be used when installing the VIB to avoid the "VIB violates extensibility rule checks" error.

[Figure 10. Custom VIB installation for trx.conf]
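If you prefer to prepare the payload tree from a script before running the VIB build script, here is a minimal sketch that recreates the initial layout shown above (payloads/payload1/etc/vmware/trx.conf under the build directory). It only stages files; building and signing the VIB is still left to the build script, and the trx.conf content is an example setting, not a recommendation.

```python
# Sketch: stage the payload tree for a trx.conf VIB.
# Layout follows the article: <root>/payloads/payload1/etc/vmware/trx.conf
# The trx.conf content below is only an example.
from pathlib import Path

TRX_CONF = "ptp4l:delay_mechanism P2P\n"


def stage_payload(root: Path, conf_text: str = TRX_CONF) -> Path:
    """Create the payload directories and write trx.conf; return its path."""
    conf_path = root / "payloads" / "payload1" / "etc" / "vmware" / "trx.conf"
    conf_path.parent.mkdir(parents=True, exist_ok=True)
    conf_path.write_text(conf_text)
    return conf_path
```

Calling stage_payload(Path("/tmp/vib-trx-conf")) produces the trx.conf part of the tree; run.sh still has to be placed next to the payloads directory.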

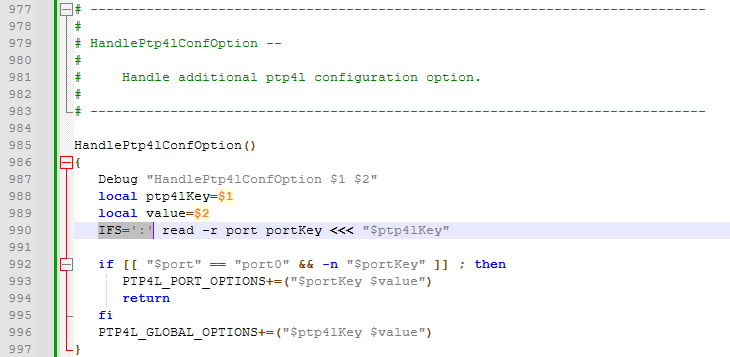

Appendix B. A bug of trx-ctl

As I was finalizing this analysis, I noticed that all of the ptp4l options were going into Global.

Of course, since there is only one network interface in the TRX container, it doesn't matter that the options go into Global. However, the source code does have a routine for parsing per-port configuration, and when I tried to use it, TRX failed during initialization.

[Figure 11-12. TRX's Config file parsing routine]

This was caused by trx-ctl and trx-agent using the same delimiter, :. When entering a parameter like ptp4l:port0:delay_mechanism P2P to match the syntax required by trx-agent, trx-ctl would parse the line and return three objects, causing an exception.

This can be easily fixed by using = instead of : as a delimiter in trx-ctl.
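The delimiter clash and the proposed fix can be sketched as follows. This is a hypothetical re-implementation for illustration only; the real trx-ctl and trx-agent code may differ, and the "ptp4l=port0:delay_mechanism P2P" syntax is just what the "=" fix would look like, not an existing format.

```python
# Hypothetical sketch of the trx-ctl / trx-agent delimiter clash and the '=' fix.
from typing import Optional, Tuple


def trx_ctl_split_buggy(line: str) -> Tuple[str, str, str]:
    """Current behavior: ':' separates namespace and key."""
    head, _, value = line.strip().partition(" ")
    namespace, key = head.split(":")  # ValueError on "ptp4l:port0:key" (3 parts)
    return namespace, key, value


def trx_ctl_split_fixed(line: str) -> Tuple[str, str, str]:
    """Proposed fix: '=' separates namespace and key, leaving ':' for trx-agent."""
    head, _, value = line.strip().partition(" ")
    namespace, sep, key = head.partition("=")
    if not sep:
        raise ValueError(f"expected <namespace>=<key>: {line!r}")
    return namespace, key, value


def trx_agent_split(key: str) -> Tuple[Optional[str], str]:
    """'<port>:<option>' is a per-port option; a bare option is global."""
    port, sep, option = key.partition(":")
    return (port, option) if sep else (None, key)


if __name__ == "__main__":
    ns, key, value = trx_ctl_split_fixed("ptp4l=port0:delay_mechanism P2P")
    print(ns, trx_agent_split(key), value)  # ptp4l ('port0', 'delay_mechanism') P2P
```

Splitting on a different character at each layer means neither parser has to know how the other interprets its part of the string.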

This bug was not fixed because it was deemed unnecessary for debugging purposes, but it would be interesting to see it fixed in the next release.