What is GitOps

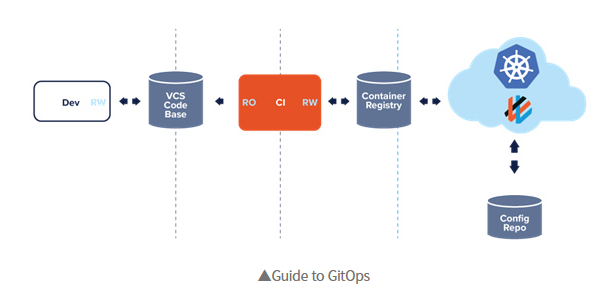

The core of GitOps is to codify every element related to deploying and operating an application and to manage it in Git.

Source: https://www.samsungsds.com/kr/insights/gitops.html

Why use it

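- Consistency

All infrastructure and application state is declared in Git, which prevents configuration drift between environments.

- Automated deployment and recovery

Deployments and rollbacks happen automatically from a Git change alone.

- Change history

Every change is recorded as a Git commit and can be tracked.

- Improved security

Operations are controlled through Git instead of by accessing production servers directly, which reduces security risk.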

Key concepts

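- Declarative model

Define the desired end state.

Pro: Git is used as the Single Source of Truth.

Con: Immediate, ad-hoc control is difficult.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web-app
  template:
    metadata:
      labels:
        app: web-app
    spec:
      containers:
      - name: web
        image: nginx:1.25

- Imperative model

The state is changed by running commands directly.

kubectl create deployment web-app --image=nginx:1.25

kubectl scale deployment web-app --replicas=3

Pro: Immediate control.

Con: The change history is scattered and hard to trace.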
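A toy Python model of why the declarative style fits Git: re-applying the same declared state is idempotent, while replaying an imperative command depends on what already happened. Plain dicts stand in for Kubernetes objects, and `apply_declarative` / `scale_by` are hypothetical helpers, not kubectl behavior.

```python
from copy import deepcopy


def apply_declarative(live: dict, desired: dict) -> dict:
    """Return the new live state: every field declared in `desired` wins."""
    new_state = deepcopy(live)
    new_state.update(deepcopy(desired))
    return new_state


def scale_by(live: dict, delta: int) -> dict:
    """Imperative-style command: change replicas relative to the current value."""
    new_state = deepcopy(live)
    new_state["replicas"] = new_state.get("replicas", 0) + delta
    return new_state


desired = {"image": "nginx:1.25", "replicas": 3}
live = {"image": "nginx:1.24", "replicas": 1}

once = apply_declarative(live, desired)
twice = apply_declarative(once, desired)
print(once == twice)  # re-applying the same declaration changes nothing

cmd_once = scale_by(live, 2)
cmd_twice = scale_by(cmd_once, 2)
print(cmd_once["replicas"], cmd_twice["replicas"])  # replaying the command drifts
```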

Deployment strategies

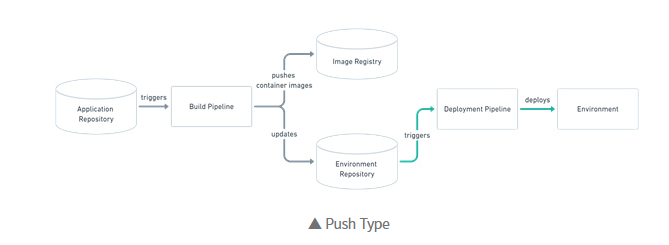

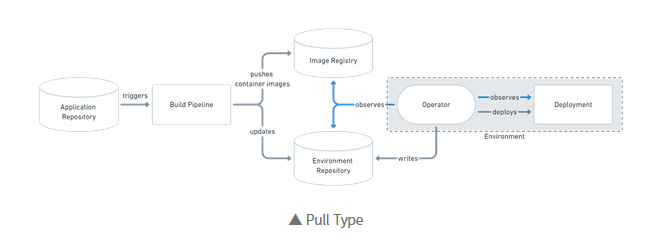

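GitOps describes two deployment strategies: the push type and the pull type.

The difference between them is how they keep the manifests in the repository and the state of the deployment environment in sync.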

push type

Source: https://www.samsungsds.com/kr/insights/gitops.html

A change to a manifest in the repository triggers the deployment pipeline, which pushes the change to the deployment environment.
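A minimal sketch of the push-type trigger, assuming the pipeline should run only when the manifest content actually changes. `PushTrigger` and its `pipeline` callback are hypothetical; in practice the CI system receives a webhook per commit and the pipeline itself runs something like `kubectl apply`.

```python
import hashlib


def manifest_digest(text: str) -> str:
    """Content hash used to detect whether the manifest changed."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


class PushTrigger:
    """Run the deployment pipeline only when the manifest content changes."""

    def __init__(self, pipeline):
        self.pipeline = pipeline      # e.g. a CI job that applies the manifest
        self.last_digest = None

    def on_commit(self, manifest_text: str) -> bool:
        digest = manifest_digest(manifest_text)
        if digest == self.last_digest:
            return False              # no manifest change: nothing to deploy
        self.last_digest = digest
        self.pipeline(manifest_text)  # push the change to the environment
        return True
```

Usage: `PushTrigger(deployed.append).on_commit("image: nginx:1.25")` returns `True` the first time and `False` for an identical commit.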

pull type

Source: https://www.samsungsds.com/kr/insights/gitops.html

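An operator running inside the deployment environment takes the place of the deployment pipeline.

The operator continuously compares the environment with the manifests in the repository; when it detects a difference, it brings the deployment environment back in line with the repository manifests.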
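A toy reconcile pass in Python showing what the pull-type operator does on each cycle. Dicts keyed by resource name stand in for manifests and live objects; `diff` and `reconcile` are hypothetical names. Real operators such as Argo CD or Flux run a loop like this periodically, and deleting extra resources (pruning) may be opt-in there.

```python
def diff(desired: dict, live: dict) -> dict:
    """Compute the actions needed to make `live` match `desired`."""
    actions = {"create": [], "update": [], "delete": []}
    for name, spec in desired.items():
        if name not in live:
            actions["create"].append(name)
        elif live[name] != spec:
            actions["update"].append(name)   # drift, e.g. a manual kubectl scale
    for name in live:
        if name not in desired:
            actions["delete"].append(name)   # not declared in Git
    return actions


def reconcile(desired: dict, live: dict) -> dict:
    """One sync pass: the repository is the source of truth, live is mutated."""
    actions = diff(desired, live)
    for name in actions["create"] + actions["update"]:
        live[name] = dict(desired[name])
    for name in actions["delete"]:
        del live[name]
    return actions
```

Running `reconcile` a second time on an already-synced environment returns empty action lists, which is the steady state an operator aims for.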

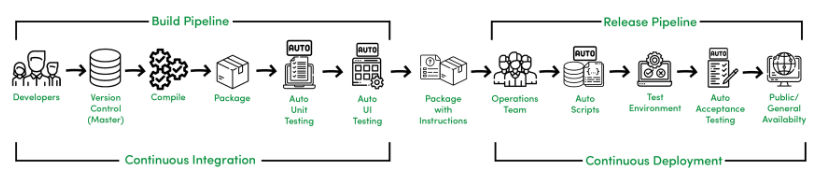

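CI/CD

Source: https://www.geeksforgeeks.org/ci-cd-continuous-integration-and-continuous-delivery

CI (Continuous Integration): the process of continuously merging code changes into a central repository and automatically building and testing them every time they are merged.

CD (Continuous Delivery): keeping code changes that have passed CI in a state where they are ready to be deployed to production. Continuous Deployment goes one step further and releases them to production automatically.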
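A minimal Python sketch of the CI idea: stages run in order on every change, and a failing stage stops the run so a broken commit never reaches the push step. The stage names and the `run_pipeline` helper are hypothetical.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure, like a CI job."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            break  # later stages (e.g. pushing the image) never run
    return log
```

For example, `run_pipeline([("build", ...), ("test", ...), ("push", ...)])` stops after `test` if the tests fail.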

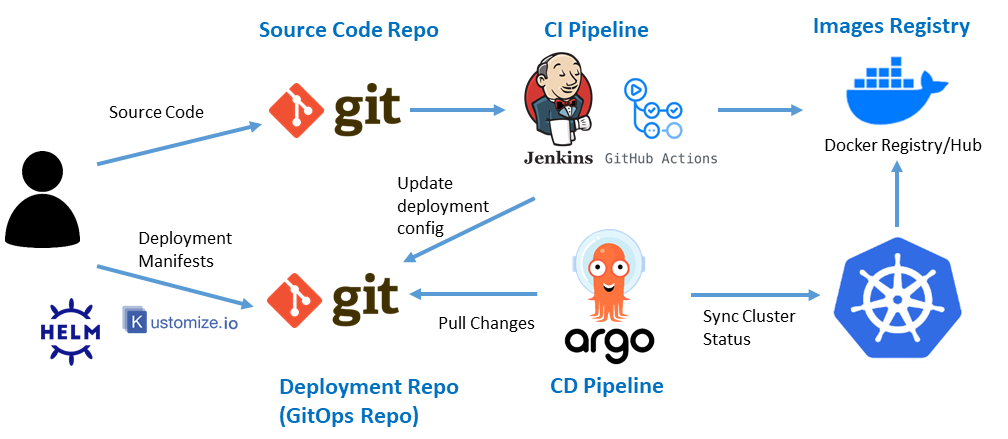

CI & CD on K8s

CI (Continuous Integration)

When a developer commits code, the CI server (GitHub Actions or Jenkins) automatically runs the build.

In this process it creates a container image and pushes it to a Docker registry (ECR, GCR, etc.).

Flow: code commit → automatic build on the CI server → container image created → pushed to the registry

CD (Continuous Deployment / Delivery)

GitOps-based

Changes in the Git repository are detected and automatically applied to the Kubernetes cluster (e.g., ArgoCD, Flux).

Pipeline-based

The CI server itself runs kubectl apply or Helm commands to perform the deployment (e.g., Jenkins, GitLab CI, GitHub Actions).

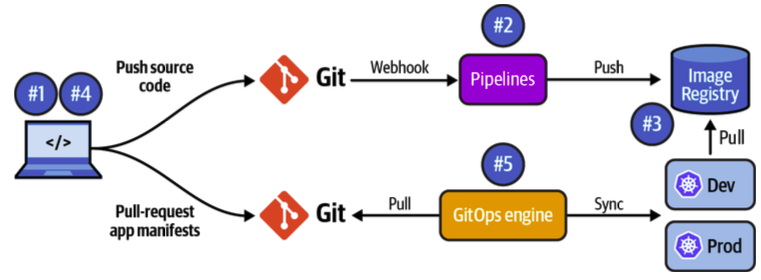

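Applying GitOps to k8s

- #1 Developer

- The developer writes source code and pushes it to a Git repository.

- This repository serves as the application code repository (App Repo).

- #2 CI pipeline (Pipelines)

- On a Git push (webhook trigger), the CI server (Jenkins, GitHub Actions, etc.) runs automatically.

- It builds the code → creates a container image → pushes it to an image registry (ECR, GCR, etc.).

- #3 Image registry → Kubernetes

- The Kubernetes clusters (Dev and Prod environments) pull the new image version from this registry and run it.

- #4 Deployment configuration change (Pull Request)

- The developer edits the application manifests (YAML, Helm, etc.) in the operations repository (Ops Repo).

- Example:

change the version to image: my-app:v2.0 and open a Pull Request.

- #5 GitOps engine (ArgoCD, Flux, etc.)

- The GitOps engine periodically pulls the operations repository to detect changes.

- It automatically syncs the changed configuration to the Kubernetes clusters (Dev/Prod).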
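Step #4 usually boils down to rewriting the image tag in a manifest in the Ops repo. A minimal text-based sketch, assuming plain YAML manifests with one `image:` per line; `set_image_tag` is a hypothetical helper, and tools such as `kustomize edit set image` do this more robustly.

```python
import re


def set_image_tag(manifest: str, image_name: str, new_tag: str) -> str:
    """Rewrite every `image: <image_name>[:tag]` line to use `new_tag`."""
    pattern = re.compile(
        r"^(?P<prefix>[ \t]*(?:-[ \t]*)?image:[ \t]*)"  # indentation, optional "- ", key
        + re.escape(image_name)
        + r"(?::[\w.\-]+)?[ \t]*$",                     # optional existing tag
        re.MULTILINE,
    )
    # Using a function avoids backreference surprises in the replacement text.
    return pattern.sub(lambda m: f"{m.group('prefix')}{image_name}:{new_tag}", manifest)
```

Only exact image names match, so `my-app-worker` is left alone when `my-app` is bumped; the edited file is then committed and proposed as a Pull Request.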

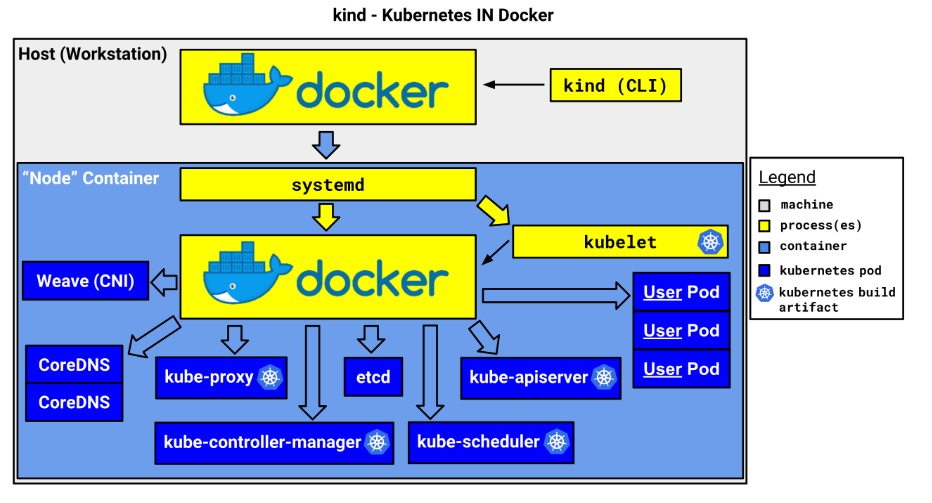

kind

A tool for running a k8s cluster in a local environment

Source: https://kind.sigs.k8s.io/docs/design/initial/

It builds a k8s cluster inside Docker containers.

Each node runs as a Docker container.

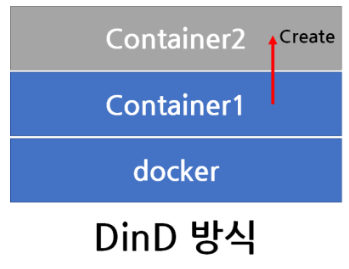

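Docker in Docker (DinD)

Source: https://m.blog.naver.com/PostView.naver?isHttpsRedirect=true&blogId=isc0304&logNo=222274955992

DinD is a setup in which Docker is used again inside a container.

A second Docker daemon runs inside the Docker container. Because a real daemon has to run, the container is given extra privileges with --privileged.

docker run --privileged -d docker:dind

The --privileged option grants the container all capabilities and access to all devices on the Docker host, so that the inner Docker daemon can work properly.

kind relies on the same nested-container idea: each node is a container that runs its own container runtime inside it (current kind node images use containerd rather than a full Docker daemon).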

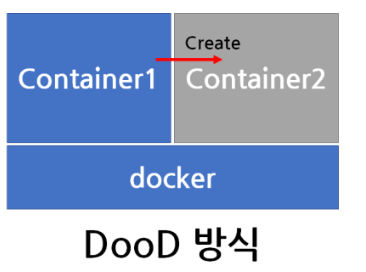

docker out of docker

출처 : https://m.blog.naver.com/PostView.naver?isHttpsRedirect=true&blogId=isc0304&logNo=222274955992

DooD는 기존의 사용하던 컨테이너를 추가로 생성한다.

컨테이너 안에서 도커 명령어를 실행하지만, 실제 컨테이너 생성은 호스트 도커가 수행하는 구조

docker run -it -v /var/run/docker.sock:/var/run/docker.sock docker:latest컨테이너에 /var/run/docker.sock 을 마운트하여 내부 docker 명령이 해당 소켓을 통해 호스트 도커를 제어한다.

출처 https://www.tiuweehan.com/blog/2020-09-10-docker-in-jenkins-in-docker/

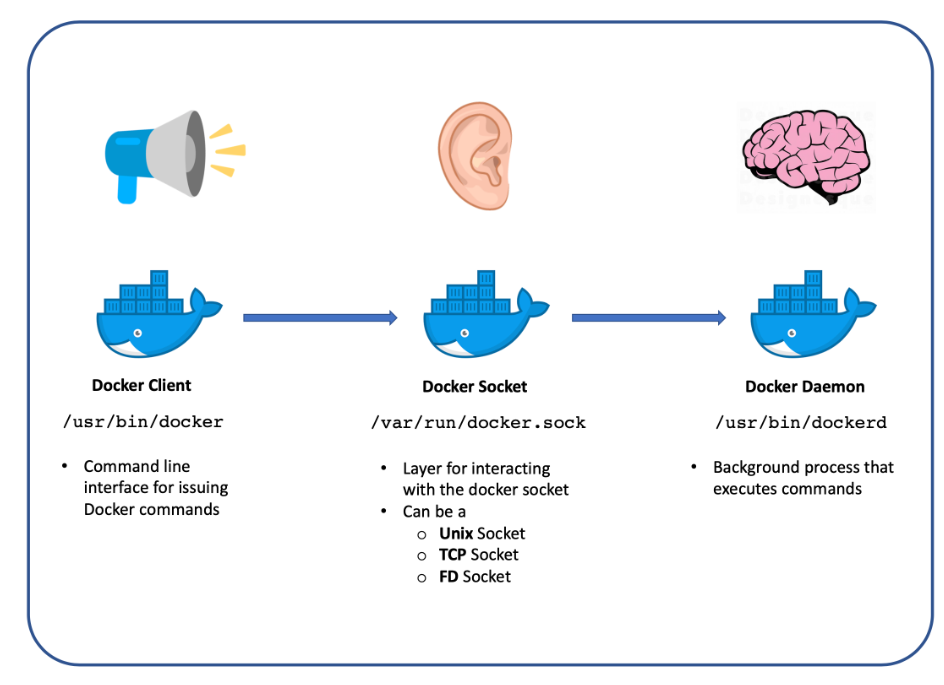

docker client : 사용자가 명령어를 입력하는 CLI 도구. 명령어를 입력하면, 클라이언트는 요청을 socket으로 전달한다.

docker socket: 클라이언트와 daemon 사이의 통로

docker daemon: 실제로 컨테이너를 생성*관리하는 백그라운드 프로세스

DooD 방식에서는 컨테이너 내부의 docker client가 호스트의 docker socket을 공유한다.

따라서 컨테이너 내부에서 실행한 명령(docker run) 은 호스트의 docker daemon으로 전달되어, 호스트에서 직접 컨테이너를 실행한다.
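To make the client/socket/daemon split concrete, here is a Python sketch that does what the docker CLI does in DooD: it sends an HTTP request to the Docker Engine API over the mounted Unix socket. It assumes the socket is at `/var/run/docker.sock` (as in the `-v` example above) and that the caller may read and write it; `build_request` and `docker_get` are hypothetical helpers, while `/containers/json` is the Engine API endpoint behind `docker ps`.

```python
import json
import socket

DOCKER_SOCK = "/var/run/docker.sock"


def build_request(path: str) -> bytes:
    """Raw HTTP/1.0 GET, so the daemon closes the connection after replying."""
    return (f"GET {path} HTTP/1.0\r\n"
            "Host: docker\r\n"
            "\r\n").encode("ascii")


def docker_get(path: str, sock_path: str = DOCKER_SOCK):
    """Send the request over the Unix socket and decode the JSON body."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as s:
        s.connect(sock_path)
        s.sendall(build_request(path))
        chunks = []
        while True:
            data = s.recv(65536)
            if not data:
                break
            chunks.append(data)
    raw = b"".join(chunks)
    _headers, _, body = raw.partition(b"\r\n\r\n")
    return json.loads(body)
```

Calling `docker_get("/containers/json")` inside a DooD container lists the host's running containers, because the request lands on the host daemon.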

Deploying k8s with kind (on Windows WSL2)

Check before deploying the cluster

docker ps

Creating a cluster with kind

kind create cluster --name myk8s --image kindest/node:v1.32.8 --config - <<EOF
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: 30000
    hostPort: 30000
  - containerPort: 30001
    hostPort: 30001
- role: worker
EOF

kind create cluster --name myk8s --image kindest/node:v1.32.8 --config -

- Creates a new cluster with the kind command

- --config - passes the cluster YAML through standard input (the heredoc)

- kind: the kind of resource (Cluster)

- apiVersion: the API version of the kind cluster configuration

- nodes: the nodes to include in the cluster

- role: control-plane or worker

- extraPortMappings: additional port mappings that connect host ports to ports on the node container
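The config above is small enough to generate. A sketch that produces the same YAML for any number of workers and exposed ports; `kind_config` is a hypothetical helper, and its output is meant to be piped into `kind create cluster --name myk8s --config -`.

```python
def kind_config(workers: int, host_ports: list) -> str:
    """Build a kind Cluster config with one control-plane and N workers."""
    lines = [
        "kind: Cluster",
        "apiVersion: kind.x-k8s.io/v1alpha4",
        "nodes:",
        "- role: control-plane",
    ]
    if host_ports:
        lines.append("  extraPortMappings:")
        for port in host_ports:
            # Map the same port number on the host and on the node container.
            lines.append(f"  - containerPort: {port}")
            lines.append(f"    hostPort: {port}")
    lines += ["- role: worker"] * workers
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(kind_config(1, [30000, 30001]), end="")
```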

Checking cluster information

kind get nodes --name myk8s

kubens default

- kubens: a tool built on top of kubectl that helps you switch between and inspect namespaces in a k8s cluster.

kind creates its own Docker network (named kind) and uses it

docker network ls

docker inspect kind | jq

Check the k8s API address

kubectl cluster-info

Check node information

kubectl get node -o wide

Check pod information

kubectl get pod -A -o wide

Check namespaces

kubectl get namespaces

Check the control-plane and worker node containers

docker ps

docker images

Check that credentials are loaded from ~/.kube/config

kubectl get pod -v6

Containers

Containers are the standard format widely used to package applications for deployment.

OCI

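OCI (Open Container Initiative) is an open-source project that defines container-related standards so that different containerization tools are compatible with one another.

Initially, Docker defined its own format and specification for building and running container images. Images worked smoothly in Docker environments but were hard to use with other tools: because images built by Docker did not follow a standardized format that other container runtimes could run, compatibility problems arose.

To solve this, OCI defined standards for container images and runtimes. As a result, not only Docker but also other container runtimes and tools (containerd, runc, Podman, etc.) can use and run the same images, which greatly improved the portability and interoperability of containerized applications.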

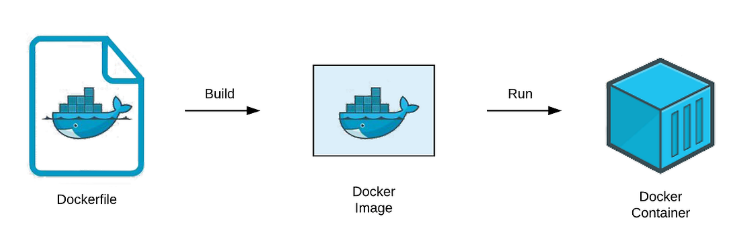

도커를 사용한 컨테이너 빌드

docker file : 이미지를 만들기 위해 모든 명령을 담은 텍스트 문서

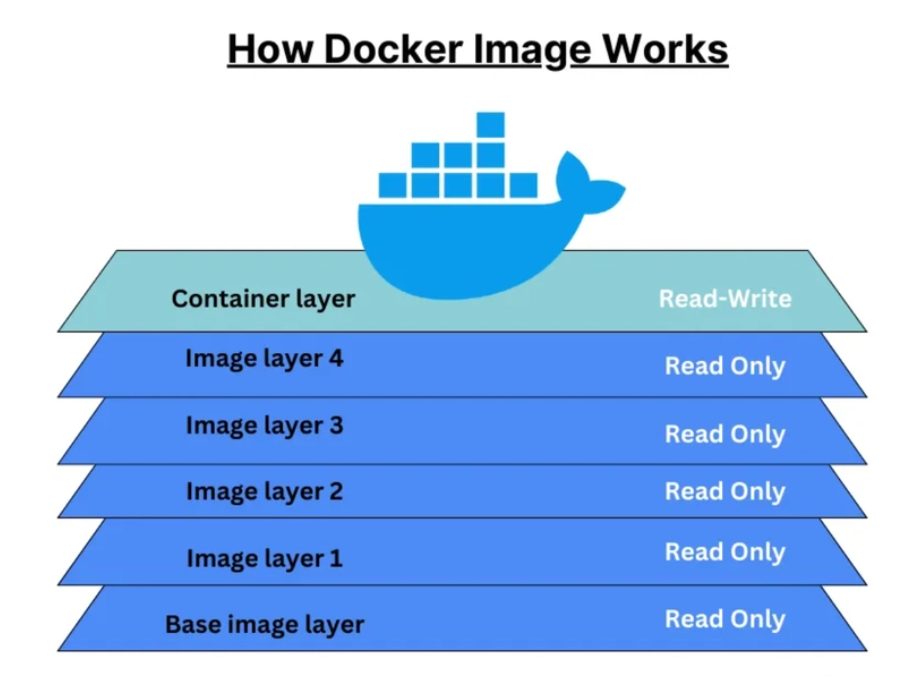

containter 이미지 구조

base image layer : 기본 os 이미지 또는 런타임 환경이 포함된 첫 번째 레이어

base image layer 이후의 레이어는 파일 시스템의 차이점을 기록한다.

이러한 layer들이 모여서 최종적인 이미지를 형성한다.

이미지 layer로 구성하는 이유는 각 레이어는 중복을 최소화 하기 위해 docker는 동일한 레이어를 여러 이미지에서 재사용할 수 있다. 이를 통해 효율적인 이미지 관리가 가능하다.
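Layer reuse works because layers are content-addressed: a layer is identified by the SHA-256 digest of its content, so identical content gets the same ID and is stored once. A toy sketch of that idea; `LayerStore` is hypothetical, and plain byte strings stand in for the tar archives Docker actually hashes.

```python
import hashlib


class LayerStore:
    """Toy content-addressed store: each unique digest is stored once."""

    def __init__(self):
        self.blobs = {}

    def add(self, content: bytes) -> str:
        digest = "sha256:" + hashlib.sha256(content).hexdigest()
        self.blobs.setdefault(digest, content)  # identical content is not stored again
        return digest


store = LayerStore()
base = b"ubuntu:18.04 root filesystem"
image_a = [store.add(base), store.add(b"python3 packages"), store.add(b"app A code")]
image_b = [store.add(base), store.add(b"python3 packages"), store.add(b"app B code")]

print(len(store.blobs))          # two images, but only 4 distinct layers
print(image_a[0] == image_b[0])  # both images point at the same base layer
```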

Source: https://dev.to/mayankcse/understanding-docker-image-layers-optimizing-build-performance-1obb

Layer 1 (Base Image): ubuntu:18.04

Layer 2 (Application Dependencies): RUN apt-get update && apt-get install -y python3

Layer 3 (Application Code): COPY ./my_app /app

Layer 4 (Configuration): ENV APP_ENV=production

Layer 5 (Execution Command): CMD ["python3", "/app/main.py"]

Hands-on: building a container with Docker

git clone https://github.com/gitops-cookbook/chapters

app.py

from flask import Flask

app = Flask(__name__)


@app.route("/")
def home():
    return "Hello, World!"


if __name__ == "__main__":
    app.run(host='0.0.0.0', port=8080, debug=True)

Dockerfile

FROM registry.access.redhat.com/ubi8/python-39

ENV PORT 8080

EXPOSE 8080

WORKDIR /usr/src/app

COPY requirements.txt ./

RUN pip install --no-cache-dir -r requirements.txt

COPY . .

ENTRYPOINT ["python"]

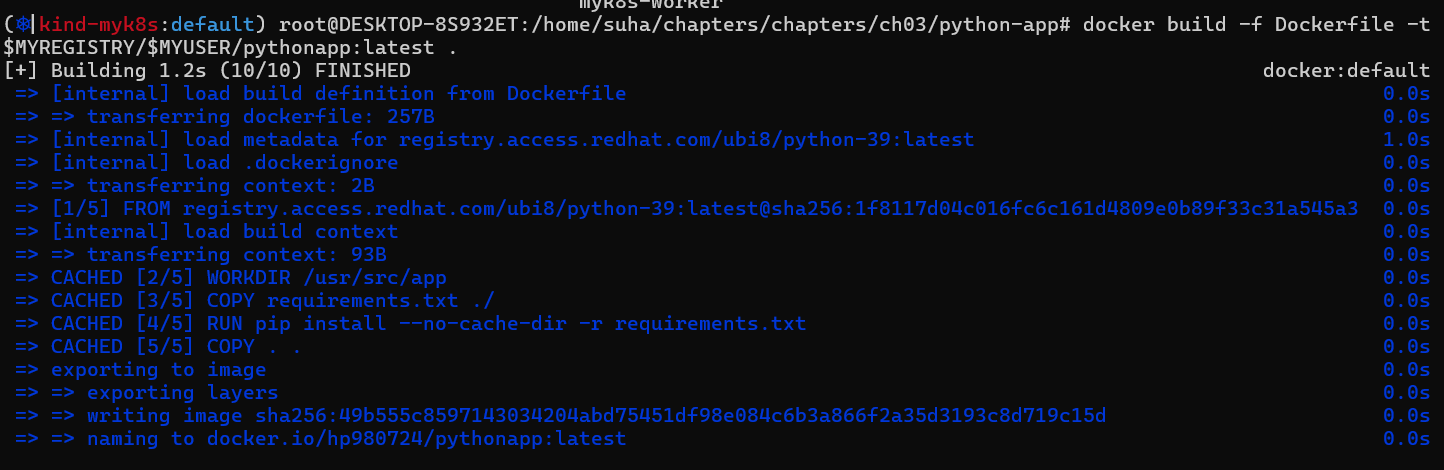

CMD ["app.py"]FROM 이후 부터니깐 총 9개의 layer 구성

Setting variables

# Set variables: account information for using the registry

MYREGISTRY=docker.io

or

MYREGISTRY=quay.io

MYUSER=<your own account name>

MYUSER=gasida

docker build -f Dockerfile -t $MYREGISTRY/$MYUSER/pythonapp:latest .

"RootFS": {

"Type": "layers",

"Layers": [

"sha256:efbb01c414da9dbe80503875585172034d618260b0179622a67440af141ada49",

"sha256:0e770dacd8dd8e0f783addb2f8e2889360ecc1443acc1ca32f03158f8b459b14",

"sha256:03b1af2d2f1752f587c99bf9afca0a564054b79f46cf22cef211f86f1d4a4497",

"sha256:ae81327beceb885cbdb2663e2f89e6e55aaa614f5ce2d502f772420d6fe37f2f",

"sha256:4b332b986f09d49e2a20bc8602d21ca54584b29b2f23824d22885cfda2ba2560",

"sha256:d48133e6e3147aac4861d37ab6e6ecccc7f5bf6bfd8142d44ec98a177f1d59d9",

"sha256:0509aa0684a474f3a7962edb8cb5ea3a8b1406f53783fe5175ba954d8c57e6d4",

"sha256:201be77ae36e365a1576c316457bda391a12b53b4d595f2910eca8df4fcd7412"

]

},

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/chapters/chapters/ch03/python-app# docker history $MYUSER/pythonapp:latest

IMAGE CREATED CREATED BY SIZE COMMENT

49b555c85971 11 minutes ago CMD ["app.py"] 0B buildkit.dockerfile.v0

<missing> 11 minutes ago ENTRYPOINT ["python"] 0B buildkit.dockerfile.v0

<missing> 11 minutes ago COPY . . # buildkit 404B buildkit.dockerfile.v0

<missing> 11 minutes ago RUN /bin/sh -c pip install --no-cache-dir -r… 4.05MB buildkit.dockerfile.v0

<missing> 11 minutes ago COPY requirements.txt ./ # buildkit 5B buildkit.dockerfile.v0

<missing> 11 minutes ago WORKDIR /usr/src/app 0B buildkit.dockerfile.v0

<missing> 11 minutes ago EXPOSE [8080/tcp] 0B buildkit.dockerfile.v0

<missing> 11 minutes ago ENV PORT=8080 0B buildkit.dockerfile.v0

Pulling only the base image

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/chapters/chapters/ch03/python-app# docker pull registry.access.redhat.com/ubi8/python-39:latest

latest: Pulling from ubi8/python-39

90a82be6f2a4: Already exists

8bd6f3302451: Already exists

ece6e387048b: Already exists

9cea5b67778f: Already exists

Digest: sha256:1f8117d04c016fc6c161d4809e0b89f33c31a545a3217573bf1edbca30d105da

Status: Downloaded newer image for registry.access.redhat.com/ubi8/python-39:latest

registry.access.redhat.com/ubi8/python-39:latest

All 4 base-image layers already exist in the local cache and are reused.

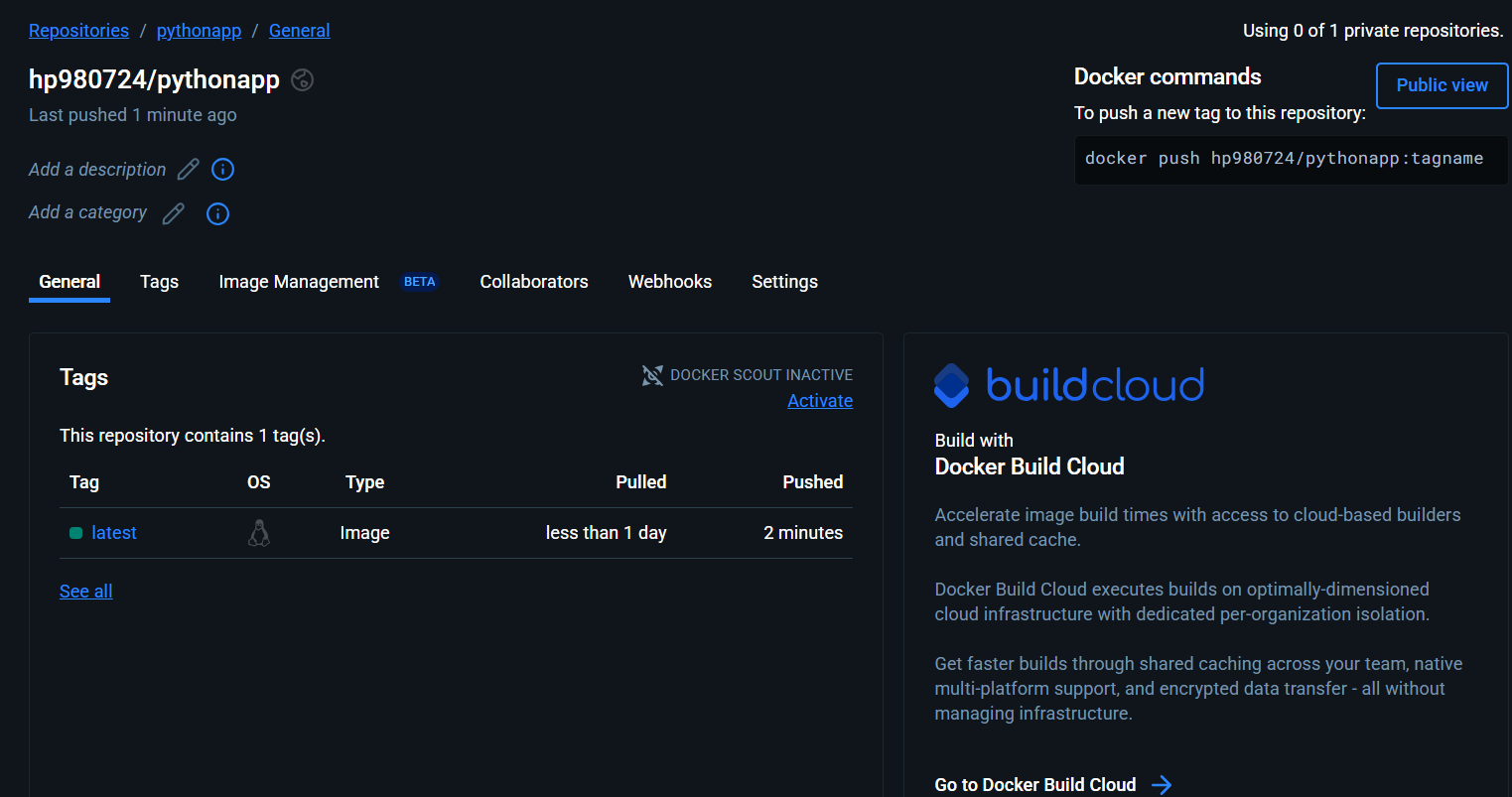

Pushing to Docker Hub

docker login

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/chapters/chapters/ch03/python-app# docker login $MYREGISTRY

USING WEB-BASED LOGIN

i Info → To sign in with credentials on the command line, use 'docker login -u <username>'

Your one-time device confirmation code is: WZZH-WVGS

Press ENTER to open your browser or submit your device code here: https://login.docker.com/activate

Waiting for authentication in the browser…

WARNING! Your credentials are stored unencrypted in '/root/.docker/config.json'.

Configure a credential helper to remove this warning. See

https://docs.docker.com/go/credential-store/

Login Succeeded

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/chapters/chapters/ch03/python-app# docker push $MYREGISTRY/$MYUSER/pythonapp:latest

The push refers to repository [docker.io/hp980724/pythonapp]

201be77ae36e: Pushed

0509aa0684a4: Pushed

d48133e6e314: Pushed

4b332b986f09: Pushed

ae81327beceb: Pushed

03b1af2d2f17: Pushed

0e770dacd8dd: Pushed

efbb01c414da: Pushed

latest: digest: sha256:bf3b00fcbf448bf2135560ca2c6556de1f5f97bf492a7cd8ca650a8a4575ffd6 size: 1999
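The push output shows that `$MYREGISTRY/$MYUSER/pythonapp:latest` refers to `docker.io/hp980724/pythonapp`, and `docker history $MYUSER/pythonapp:latest` found the same image without the registry prefix. That works because short names are expanded with Docker Hub defaults. A simplified sketch of those rules; `normalize_image_ref` is hypothetical and ignores digests (`@sha256:...`) and other edge cases.

```python
def normalize_image_ref(ref: str) -> str:
    """Expand a short image reference to registry/repository:tag."""
    name, tag = ref, "latest"
    last_component = ref.rsplit("/", 1)[-1]
    if ":" in last_component:              # a tag can only be in the last component
        name, tag = ref.rsplit(":", 1)
    first_component = name.split("/", 1)[0]
    has_registry = "/" in name and (
        "." in first_component or ":" in first_component or first_component == "localhost"
    )
    if not has_registry:
        if "/" not in name:
            name = "library/" + name       # official images live under library/
        name = "docker.io/" + name         # default registry
    return f"{name}:{tag}"
```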

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/chapters/chapters/ch03/python-app# curl 127.0.0.1:8080

Hello, World!

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/chapters/chapters/ch03/python-app# docker logs myweb

* Serving Flask app 'app'

* Debug mode: on

WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.

* Running on all addresses (0.0.0.0)

* Running on http://127.0.0.1:8080

* Running on http://172.17.0.2:8080

Press CTRL+C to quit

* Restarting with stat

* Debugger is active!

* Debugger PIN: 697-100-641

172.17.0.1 - - [15/Oct/2025 13:21:19] "GET / HTTP/1.1" 200 -

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/chapters/chapters/ch03/python-app#

Building containers with Jib (no Docker required)

Jib builds images without Docker. It is configured simply through the pom.xml or build.gradle file.

- Optimized image layering: Jib puts only what changed into new layers. For example, when the application code changes, only the code layer is rebuilt and the library files are not, so image builds are considerably faster.

- Automatic layer separation: Jib splits the application code, dependencies, and application resources into separate layers to make caching efficient.

- Java-focused: Jib is optimized for Java applications and is hard to use for applications written in other languages.
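A toy sketch of the layering idea: files that change at different rates go into different layers, so a code-only change re-uploads only the small classes layer. The file-extension rule and helper names are hypothetical simplifications; Jib's real layer set is more detailed (for example, it separates snapshot dependencies).

```python
from typing import Dict, List


def assign_layer(path: str) -> str:
    """Hypothetical rule: jars rarely change, classes change on every commit."""
    if path.endswith(".jar"):
        return "dependencies"
    if path.endswith(".class"):
        return "classes"
    return "resources"


def build_layers(files: Dict[str, str]) -> Dict[str, Dict[str, str]]:
    """Group file path -> content into named layers."""
    layers: Dict[str, Dict[str, str]] = {}
    for path, content in files.items():
        layers.setdefault(assign_layer(path), {})[path] = content
    return layers


def changed_layers(old: Dict[str, str], new: Dict[str, str]) -> List[str]:
    """Layers whose contents differ between two builds (only these are re-pushed)."""
    a, b = build_layers(old), build_layers(new)
    return sorted(name for name in set(a) | set(b) if a.get(name) != b.get(name))
```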

Jib hands-on

Open a bash shell

docker exec -it myk8s-worker bash

Installation

# Install OpenJDK
apt update
mkdir -p /usr/share/man/man1
apt install perl-modules-5.36 -y
apt install openjdk-17-jdk -y

# Check the Java version
java -version

# Install Maven
apt install maven -y

# Check the Maven version
mvn -version

# Install tools
apt install git tree wget curl jq -y

# Get the source code
git clone https://github.com/gitops-cookbook/chapters
cd /chapters/chapters
tree

root@myk8s-worker:/chapters/chapters/ch03/springboot-app# tree | tee -a before.txt

.
|-- mvnw
|-- mvnw.cmd
|-- pom.xml
`-- src
    |-- main
    |   |-- java
    |   |   `-- com
    |   |       `-- redhat
    |   |           `-- hello
    |   |               |-- Greeting.java
    |   |               |-- GreetingController.java
    |   |               `-- HelloApplication.java
    |   `-- resources
    |       `-- application.properties
    `-- test
        `-- java
            `-- com
                `-- redhat
                    `-- hello
                        `-- HelloApplicationTests.java

13 directories, 8 files

root@myk8s-worker:/chapters/chapters/ch03/springboot-app#

root@myk8s-worker:/chapters/chapters/ch03/springboot-app# docker info

bash: docker: command not found

Docker is not installed in this container.

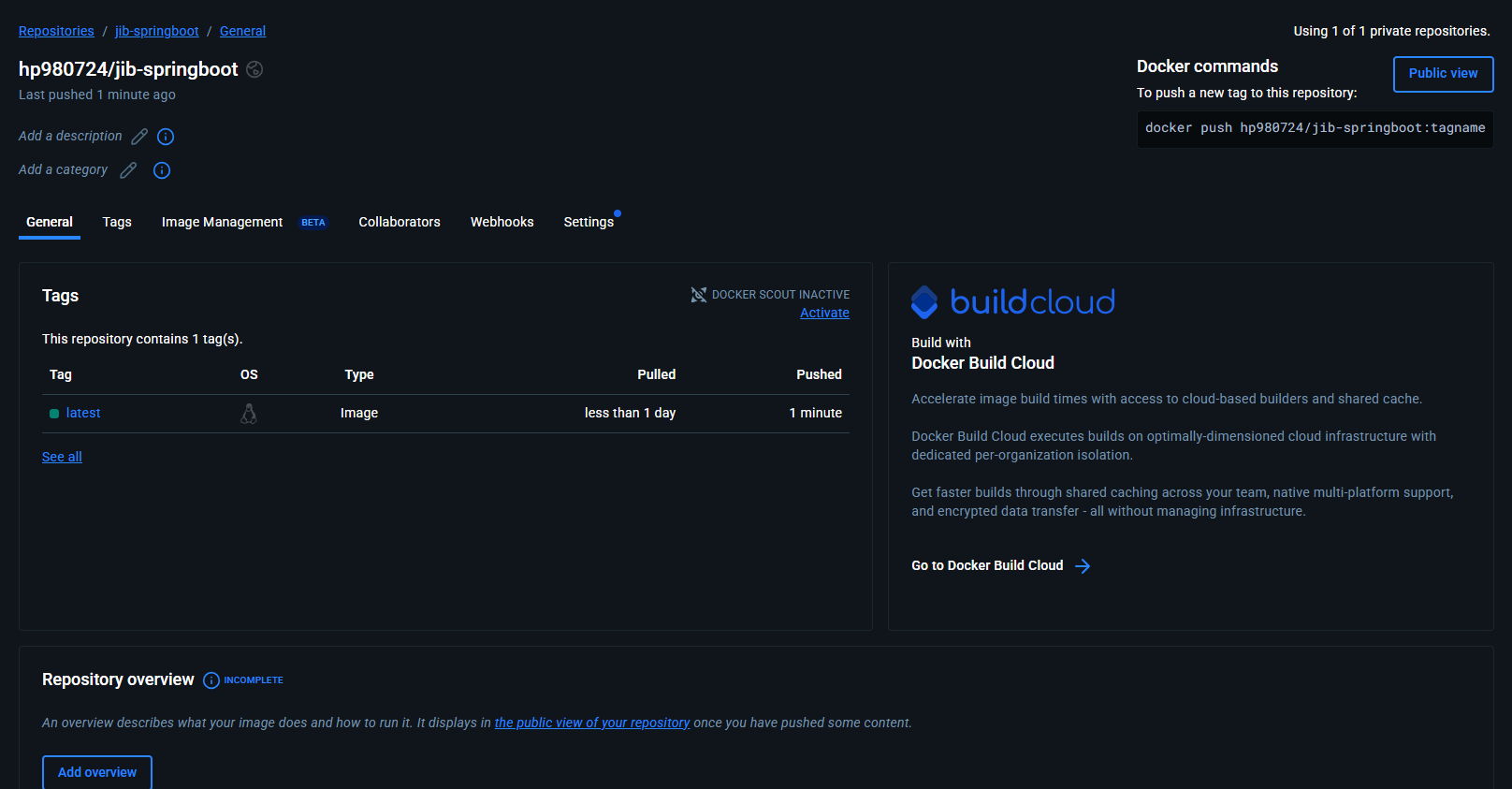

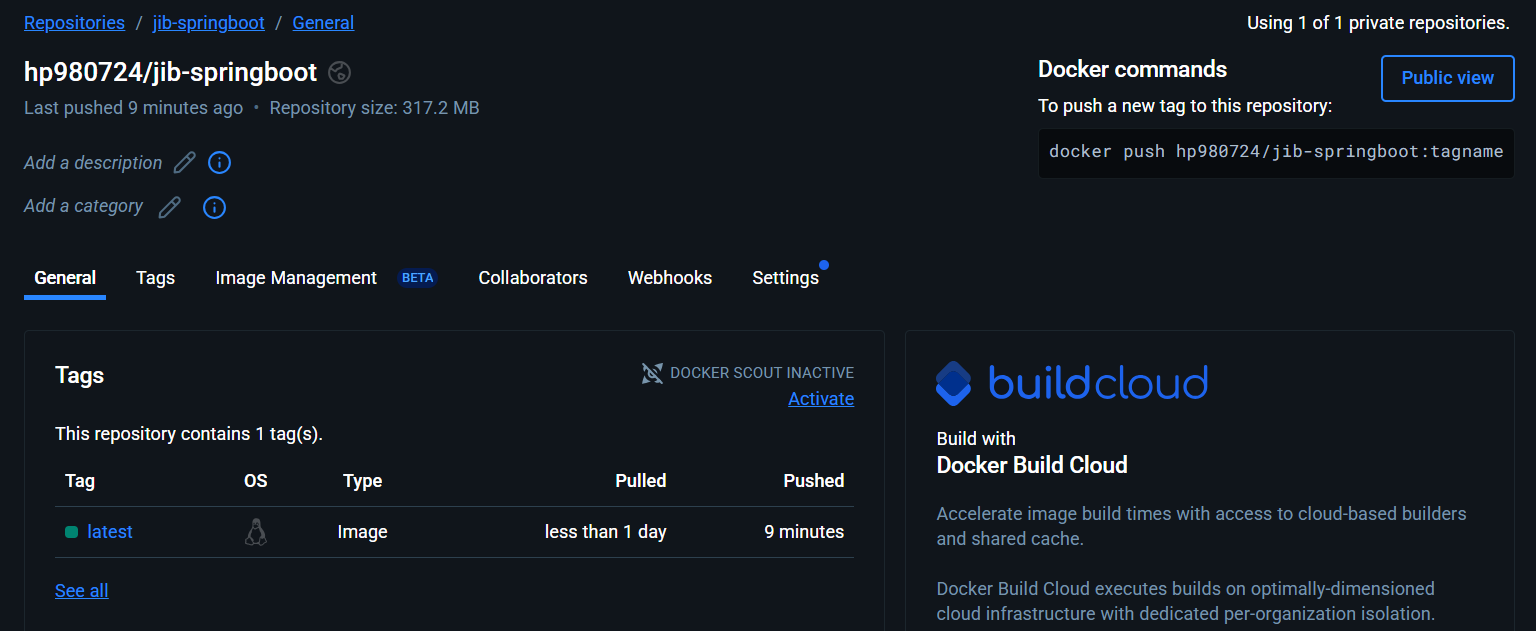

mvn compile com.google.cloud.tools:jib-maven-plugin:3.4.6:build \
  -Dimage=docker.io/hp980724/jib-example:latest \
  -Djib.to.auth.username=hp980724 \
  -Djib.to.auth.password="<access token>" \
  -Djib.from.platforms=linux/amd64

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/chapters/chapters/ch03/python-app# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

96606f95d46f hp980724/jib-example "java -cp @/app/jib-…" 5 seconds ago Up 3 seconds 0.0.0.0:8080->8080/tcp, [::]:8080->8080/tcp myweb2

eab624284ce3 kindest/node:v1.32.8 "/usr/local/bin/entr…" 2 hours ago Up 2 hours 0.0.0.0:30000-30001->30000-30001/tcp, 127.0.0.1:39183->6443/tcp myk8s-control-plane

b9fc82f7d8a9 kindest/node:v1.32.8 "/usr/local/bin/entr…" 2 hours ago Up 2 hours myk8s-worker

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/chapters/chapters/ch03/python-app# curl -s 127.0.0.1:8080/hello | jq

{

"id": 1,

"content": "Hello, World!"

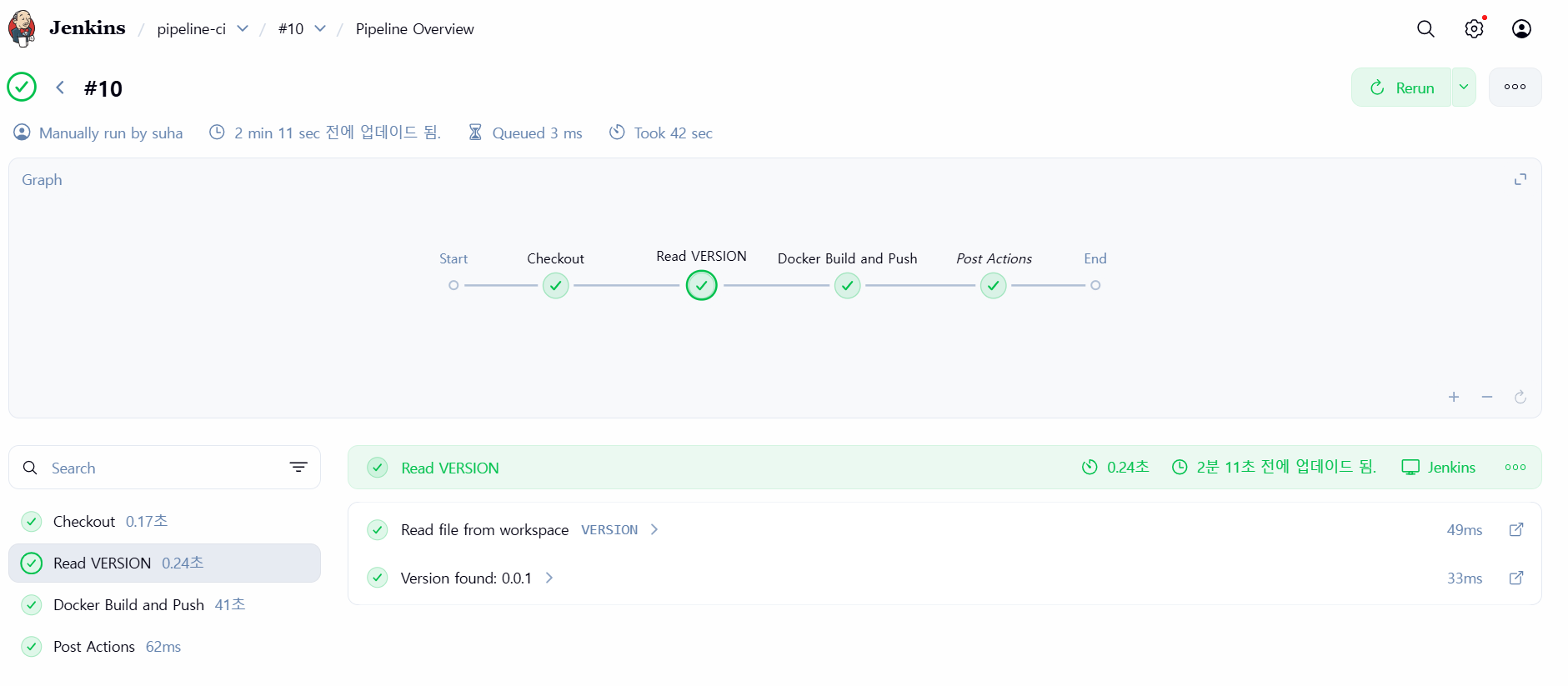

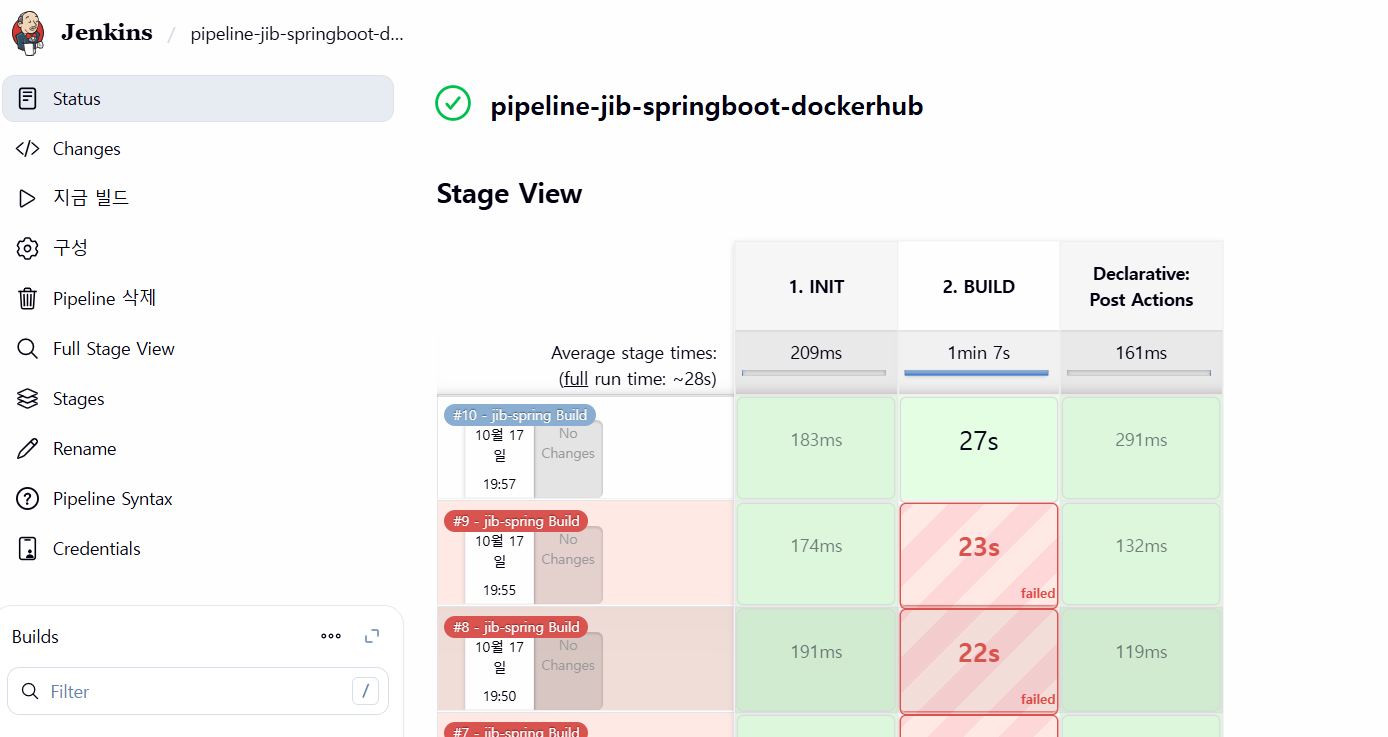

}jib를 활용하여 젠킨스 파이프라인 구성하기(GitOps + SpringBoot + Gradle )

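Comparing Jenkinsfiles (Docker vs Jib)

Before adopting Jib, developers frequently made mistakes (human error) while writing Dockerfiles, and because the CI server (Jenkins) had to use Docker-in-Docker (DinD), there were various dependency problems such as permission issues and version conflicts. Alternative tools such as Kaniko also frequently ran into version-compatibility conflicts.

After adopting Jib, images could be built from the Maven/Gradle configuration without a Dockerfile, which reduced human error, and removing the dependency on the Docker daemon solved the permission and version problems in the CI/CD pipeline. In addition, managing the image and profile settings explicitly in the Jenkinsfile improved readability and consistency and considerably improved the stability and maintainability of the pipeline.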

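Jenkins

Basic concepts

- Trigger: when a job runs

- Decides when a task starts.

- Build step: the step-by-step tasks that make up a job

- The tasks needed to reach a specific goal can be organized into steps.

- Jenkins calls these build steps.

- Post-build action: what runs after the task finishes

- For example, a follow-up action that notifies users of the job result (success or failure), or copying the class files produced by compiling Java code to a specific location.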
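A minimal Python model of how these three pieces fit together: a trigger (for example, a webhook handler) calls the job, build steps run in order, and post-build actions always run with the final result, much like `post { always { ... } }` in the Jenkinsfile later in this note. `run_job` is a hypothetical helper.

```python
from typing import Callable, List


def run_job(steps: List[Callable[[], None]],
            post_actions: List[Callable[[str], None]]) -> str:
    """Run build steps in order; post-build actions always see the result."""
    result = "SUCCESS"
    try:
        for step in steps:
            step()                 # a raised exception marks the build as failed
    except Exception:
        result = "FAILURE"
    finally:
        for action in post_actions:
            action(result)         # e.g. notify users, archive artifacts
    return result
```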

젠킨스 파이프라인 구성

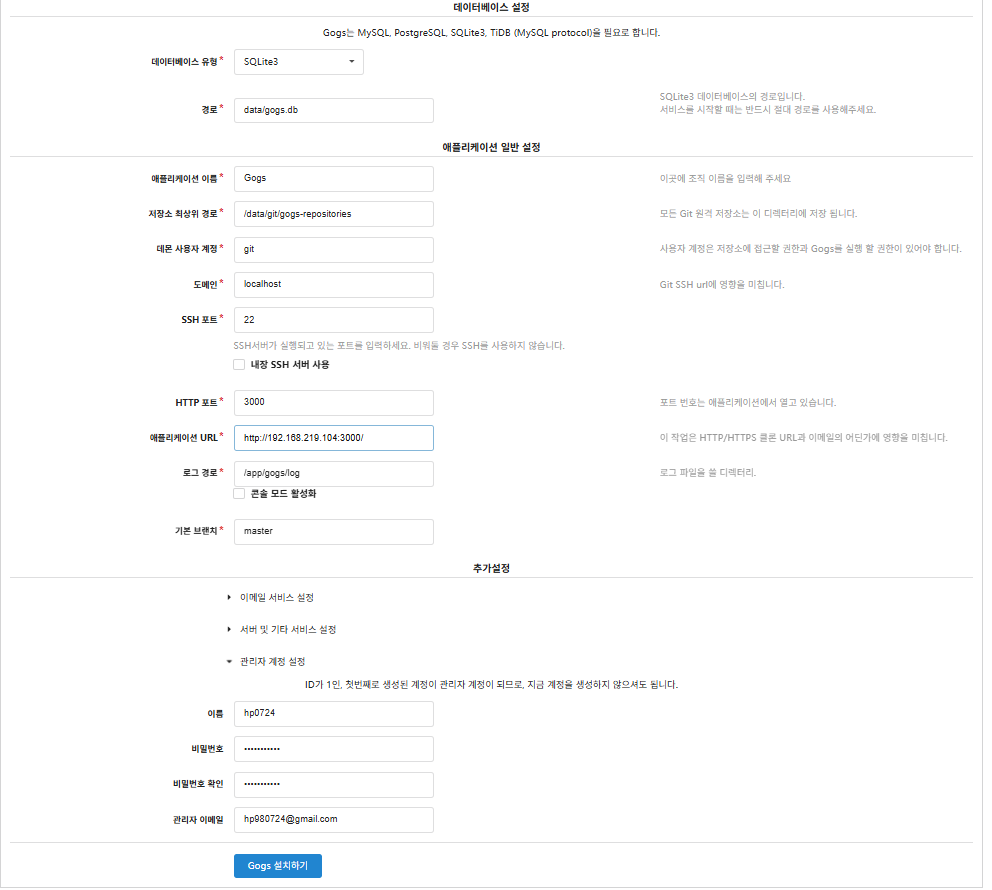

docker compose 활용

services:

gogs:

image: gogs/gogs:latest

container_name: gogs

environment:

- USER_UID=1000

- USER_GID=1000

ports:

- "3000:3000" # Gogs UI 접속

- "2222:22" # Gogs SSH 접속

volumes:

- gogs_data:/data # Gogs 데이터를 지속적으로 저장

networks:

- jenkins_network

jenkins:

image: jenkins/jenkins:lts

container_name: jenkins

environment:

- JENKINS_OPTS=--prefix=/jenkins

ports:

- "8080:8080" # Jenkins UI 접속

- "50000:50000" # Jenkins 에이전트와 통신

volumes:

- jenkins_home:/var/jenkins_home # Jenkins 데이터를 지속적으로 저장

- /var/run/docker.sock:/var/run/docker.sock

networks:

- jenkins_network

depends_on:

- gogs

volumes:

gogs_data:

driver: local

jenkins_home:

driver: local

networks:

jenkins_network:

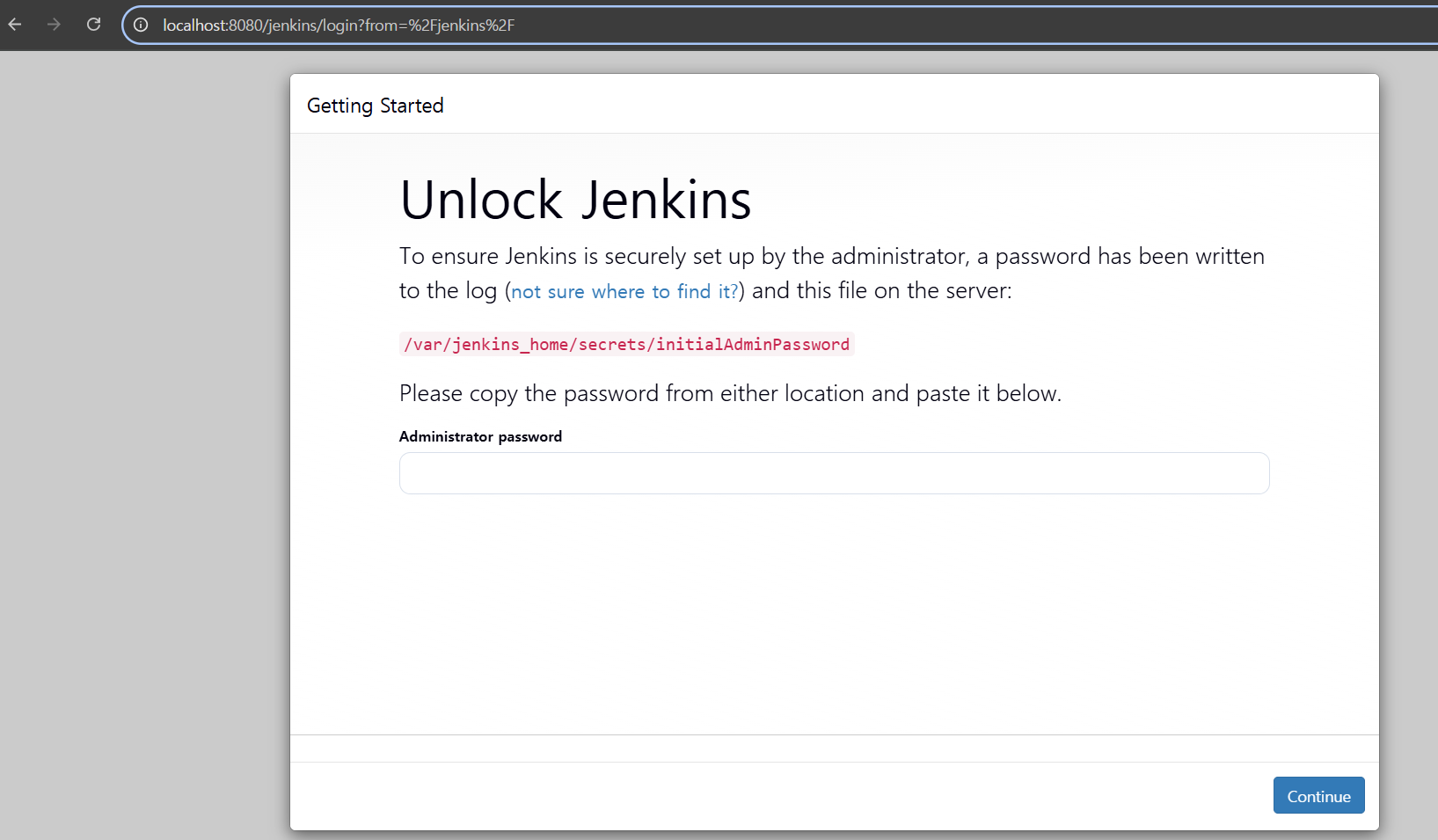

driver: bridgedocker compose up -d docker compose ps (⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha# docker compose ps

WARN[0000] /home/suha/docker-compose.yaml: the attribute `version` is obsolete, it will be ignored, please remove it to avoid potential confusion

NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS

gogs gogs/gogs:latest "/app/gogs/docker/st…" gogs About a minute ago Up About a minute (healthy) 0.0.0.0:22->22/tcp, [::]:22->22/tcp, 0.0.0.0:3000->3000/tcp, [::]:3000->3000/tcp

jenkins jenkins/jenkins:lts "/usr/bin/tini -- /u…" jenkins About a minute ago Up About a minute 0.0.0.0:8080->8080/tcp, [::]:8080->8080/tcp, 0.0.0.0:50000->50000/tcp, [::]:50000->50000/tcp

The initial admin password can be read from /var/jenkins_home/secrets/initialAdminPassword inside the jenkins container.

cat /var/jenkins_home/secrets/initialAdminPassword

• Run a bash shell as the root user inside the jenkins container and install the Docker CLI.

# Install the Docker executable inside the Jenkins container

docker compose exec --privileged -u root jenkins bash

-----------------------------------------------------

id

install -m 0755 -d /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/debian/gpg -o /etc/apt/keyrings/docker.asc

chmod a+r /etc/apt/keyrings/docker.asc

echo \

"deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/debian \

$(. /etc/os-release && echo "$VERSION_CODENAME") stable" | \

tee /etc/apt/sources.list.d/docker.list > /dev/null

apt-get update && apt install docker-ce-cli curl tree jq -y

docker info

docker ps

which docker

# Let the jenkins user (not only root) inside the Jenkins container run docker

groupadd -g 2000 -f docker

chgrp docker /var/run/docker.sock

ls -l /var/run/docker.sock

usermod -aG docker jenkins

cat /etc/group | grep docker

exit

--------------------------------------------

# Running a Jenkins item fails with a docker permission error: restart the Jenkins container so the settings above also apply to the Jenkins app

docker compose restart jenkins

sudo docker compose restart jenkins # On Windows, prefix this and the following commands with sudo

# Verify that the jenkins user can run docker commands

docker compose exec jenkins id

docker compose exec jenkins docker info

docker compose exec jenkins docker ps

Server:

Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

root@bab10236ca42:/# docker ps

Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

root@bab10236ca42:/#
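"Cannot connect to the Docker daemon" usually means the client found no daemon behind the socket path (for example, the socket is not mounted or the daemon is down); a pure permission problem typically shows up as "permission denied" instead. A small diagnostic sketch, assuming the default socket path; `check_docker_socket` and its messages are hypothetical.

```python
import os
import stat


def check_docker_socket(path: str = "/var/run/docker.sock") -> str:
    """Diagnose common reasons a containerized docker CLI cannot reach the daemon."""
    if not os.path.exists(path):
        return "missing: socket is not mounted into this container"
    if not stat.S_ISSOCK(os.stat(path).st_mode):
        return "not a socket"
    if not os.access(path, os.R_OK | os.W_OK):
        return "no permission: add the user to the socket's group"
    return "ok"
```

Note that a socket that exists and is accessible can still fail to connect if no daemon is listening on the other end.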

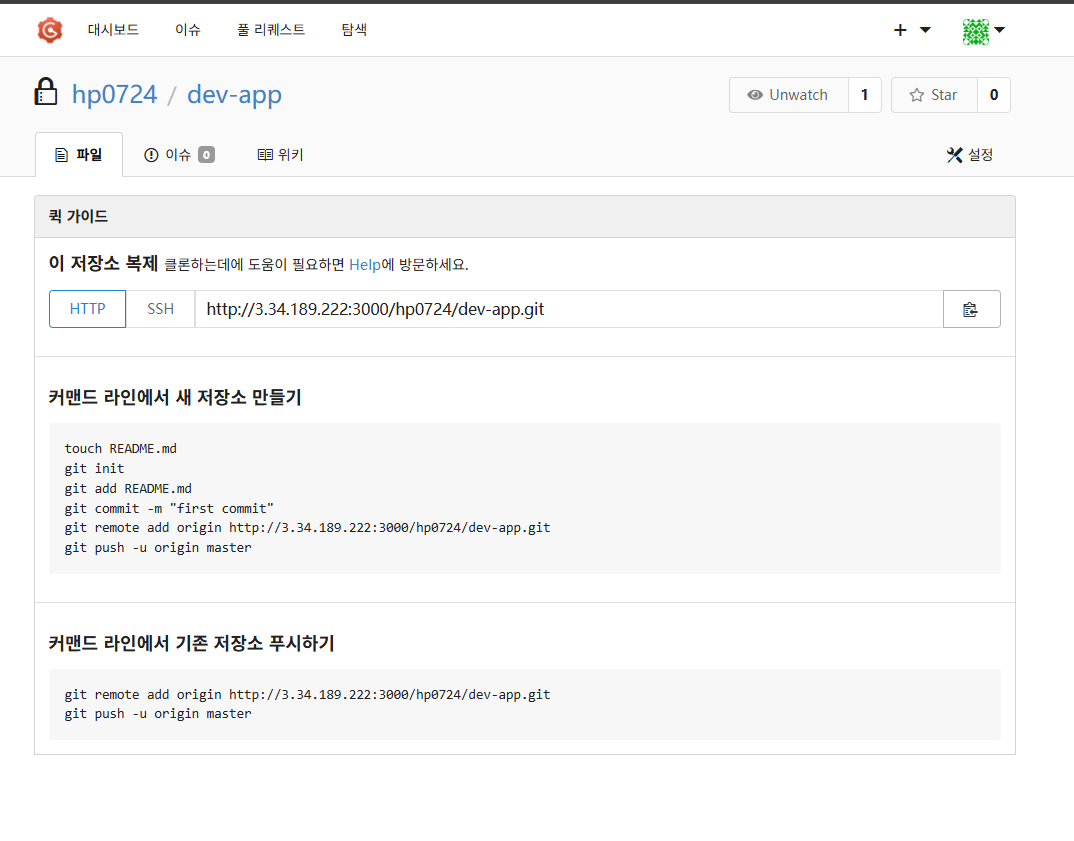

root@ip-172-31-12-75:/home/ubuntu# git clone http://3.34.189.222:3000/hp0724/dev-app.git

Cloning into 'dev-app'...

Username for 'http://3.34.189.222:3000': hp0724

Password for 'http://hp0724@3.34.189.222:3000':

warning: You appear to have cloned an empty repository.

root@ip-172-31-12-75:/home/ubuntu# tree dev-app/

dev-app/

0 directories, 0 files

root@ip-172-31-12-75:/home/ubuntu# cd dev-app/

root@ip-172-31-12-75:/home/ubuntu/dev-app# git branch

root@ip-172-31-12-75:/home/ubuntu/dev-app# git remote -v

origin http://3.34.189.222:3000/hp0724/dev-app.git (fetch)

origin http://3.34.189.222:3000/hp0724/dev-app.git (push)

root@ip-172-31-12-75:/home/ubuntu/dev-app# cat > server.py <<EOF
from http.server import ThreadingHTTPServer, BaseHTTPRequestHandler
from datetime import datetime


class RequestHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        self.send_response(200)
        self.send_header('Content-type', 'text/plain')
        self.end_headers()
        now = datetime.now()
        response_string = now.strftime("The time is %-I:%M:%S %p, CloudNeta Study.\n")
        self.wfile.write(bytes(response_string, "utf-8"))


def startServer():
    try:
        server = ThreadingHTTPServer(('', 80), RequestHandler)
        print("Listening on " + ":".join(map(str, server.server_address)))
        server.serve_forever()
    except KeyboardInterrupt:
        server.shutdown()


if __name__ == "__main__":
    startServer()
EOF

root@ip-172-31-12-75:/home/ubuntu/dev-app# ls

server.py

root@ip-172-31-12-75:/home/ubuntu/dev-app# cat > Dockerfile <<EOF
FROM python:3.12
ENV PYTHONUNBUFFERED=1
COPY . /app
WORKDIR /app
CMD ["python3", "server.py"]
EOF

root@ip-172-31-12-75:/home/ubuntu/dev-app# ls

Dockerfile server.py

root@ip-172-31-12-75:/home/ubuntu/dev-app# echo "0.0.1" > VERSION

root@ip-172-31-12-75:/home/ubuntu/dev-app# ls

Dockerfile VERSION server.py

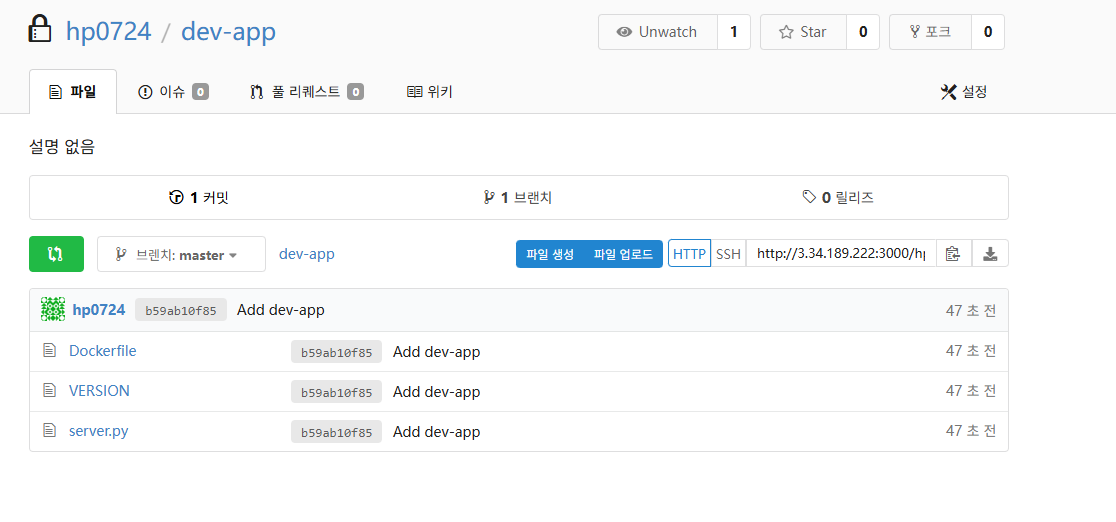

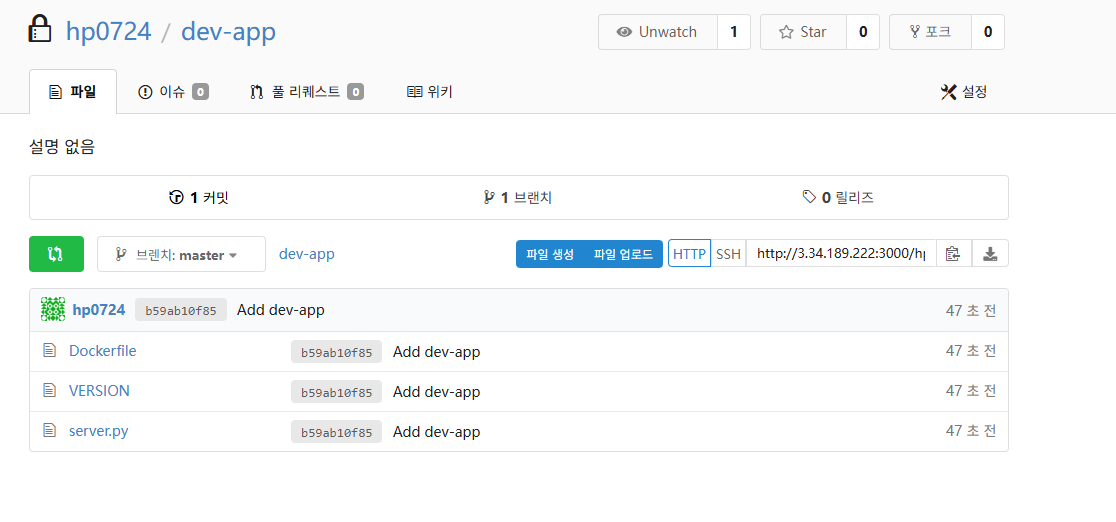

root@ip-172-31-12-75:/home/ubuntu/dev-app# git add .

root@ip-172-31-12-75:/home/ubuntu/dev-app# git commit -m "Add dev-app"

[master (root-commit) b59ab10] Add dev-app

3 files changed, 28 insertions(+)

create mode 100644 Dockerfile

create mode 100644 VERSION

create mode 100644 server.py

root@ip-172-31-12-75:/home/ubuntu/dev-app# git push -u origin main

error: src refspec main does not match any

error: failed to push some refs to 'http://3.34.189.222:3000/hp0724/dev-app.git'

root@ip-172-31-12-75:/home/ubuntu/dev-app# git push -u origin master

Username for 'http://3.34.189.222:3000': hp0724

Password for 'http://hp0724@3.34.189.222:3000':

Enumerating objects: 5, done.

Counting objects: 100% (5/5), done.

Delta compression using up to 2 threads

Compressing objects: 100% (4/4), done.

Writing objects: 100% (5/5), 765 bytes | 765.00 KiB/s, done.

Total 5 (delta 0), reused 0 (delta 0), pack-reused 0

To http://3.34.189.222:3000/hp0724/dev-app.git

* [new branch] master -> master

branch 'master' set up to track 'origin/master'.

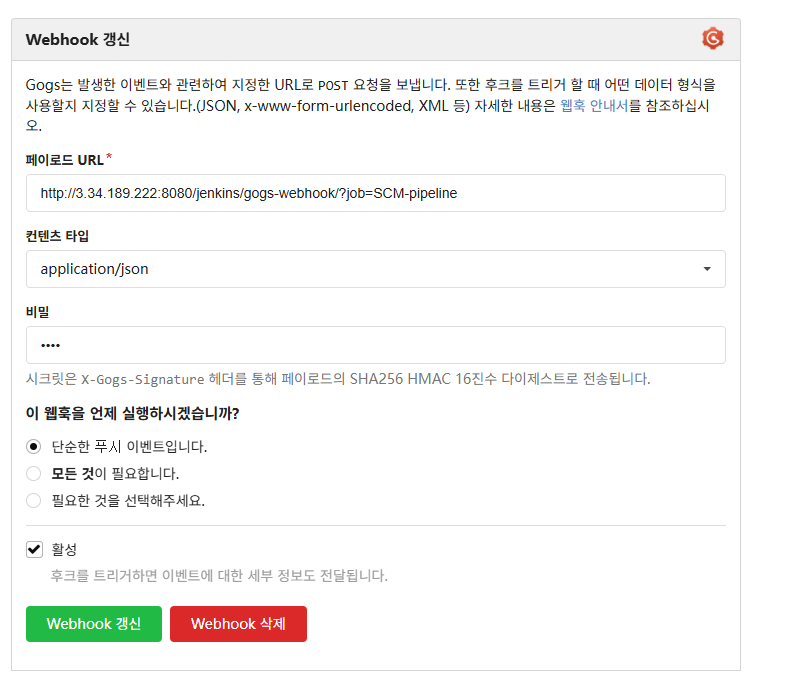

Modifying the Gogs configuration (app.ini)

62498d577dd1:/data/gogs/conf# cat app.ini

BRAND_NAME = Gogs

RUN_USER = git

RUN_MODE = prod

[database]

TYPE = sqlite3

HOST = 127.0.0.1:5432

NAME = gogs

SCHEMA = public

USER = gogs

PASSWORD =

SSL_MODE = disable

PATH = data/gogs.db

[repository]

ROOT = /data/git/gogs-repositories

DEFAULT_BRANCH = master

[server]

DOMAIN = localhost

HTTP_PORT = 3000

EXTERNAL_URL = http://3.34.189.222:3000/

DISABLE_SSH = false

SSH_PORT = 22

START_SSH_SERVER = false

OFFLINE_MODE = false

[email]

ENABLED = false

[auth]

REQUIRE_EMAIL_CONFIRMATION = false

DISABLE_REGISTRATION = false

ENABLE_REGISTRATION_CAPTCHA = true

REQUIRE_SIGNIN_VIEW = false

[user]

ENABLE_EMAIL_NOTIFICATION = false

[picture]

DISABLE_GRAVATAR = false

ENABLE_FEDERATED_AVATAR = false

[session]

PROVIDER = file

[log]

MODE = file

LEVEL = Info

ROOT_PATH = /app/gogs/log

[security]

INSTALL_LOCK = true

SECRET_KEY = gbYjnDOkXezn7BL

LOCAL_NETWORK_ALLOWLIST = 3.34.189.222
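When checking settings such as `EXTERNAL_URL` or `LOCAL_NETWORK_ALLOWLIST` (which, as far as we understand, lists local-network hosts that Gogs may contact, for example for webhooks to Jenkins), the file can be read with Python's `configparser`. app.ini has keys before the first section, which `configparser` rejects, so this sketch prepends a synthetic `[root]` section; `read_gogs_ini` is a hypothetical helper.

```python
import configparser


def read_gogs_ini(text: str) -> configparser.ConfigParser:
    """Parse a Gogs app.ini; top-level keys land in a synthetic [root] section."""
    parser = configparser.ConfigParser(interpolation=None, delimiters=("=",))
    parser.optionxform = str  # keep key case (Gogs keys are upper-case)
    parser.read_string("[root]\n" + text)
    return parser
```

Only `=` is accepted as a delimiter, so URL values containing `:` are read intact.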

Add the Jenkins URL.

Building a Jenkins pipeline with Jib (GitOps + SpringBoot + Gradle)

Spring Boot

https://github.com/spring-guides/gs-spring-boot

Clone the repository

Build

build.gradle

plugins {
    id 'org.springframework.boot' version '3.3.0'
    id 'java'
}

apply plugin: 'io.spring.dependency-management'

group = 'com.example'
version = '0.0.1-SNAPSHOT'
sourceCompatibility = '17'

repositories {
    mavenCentral()
}

dependencies {
    implementation 'org.springframework.boot:spring-boot-starter-web'
    testImplementation 'org.springframework.boot:spring-boot-starter-test'
}

test {
    useJUnitPlatform()
}

Adding the Jib plugin

plugins {
    id 'org.springframework.boot' version '3.3.0'
    id 'java'
    id 'com.google.cloud.tools.jib' version '3.4.0' // add the Jib plugin
}

apply plugin: 'io.spring.dependency-management'

group = 'com.example'
version = '0.0.1-SNAPSHOT'
sourceCompatibility = '17'

repositories {
    mavenCentral()
}

dependencies {
    implementation 'org.springframework.boot:spring-boot-starter-web'
    testImplementation 'org.springframework.boot:spring-boot-starter-test'
}

test {
    useJUnitPlatform()
}

Modified build.gradle

root@ip-172-31-12-75:~/gs-spring-boot/initial# cat build.gradle

plugins {
    id 'org.springframework.boot' version '3.3.0'
    id 'java'
    id 'com.google.cloud.tools.jib' version '3.4.0' // add the Jib plugin
}

apply plugin: 'io.spring.dependency-management'

group = 'com.example'
version = '0.0.1-SNAPSHOT'
sourceCompatibility = '17'

repositories {
    mavenCentral()
}

dependencies {
    implementation 'org.springframework.boot:spring-boot-starter-web'
    testImplementation 'org.springframework.boot:spring-boot-starter-test'
}

jib {
    to {
        image = 'hp980724/jib-springboot:latest' // name of the image pushed to Docker Hub
    }
}

test {
    useJUnitPlatform()
}

docker login

root@ip-172-31-12-75:~/gs-spring-boot/initial# docker login

USING WEB-BASED LOGIN

i Info → To sign in with credentials on the command line, use 'docker login -u <username>'

Your one-time device confirmation code is: VFKK-KRFG

Press ENTER to open your browser or submit your device code here: https://login.docker.com/activate

Waiting for authentication in the browser…

^Clogin canceled

root@ip-172-31-12-75:~/gs-spring-boot/initial# docker login

USING WEB-BASED LOGIN

i Info → To sign in with credentials on the command line, use 'docker login -u <username>'

Your one-time device confirmation code is: KLJG-BLWZ

Press ENTER to open your browser or submit your device code here: https://login.docker.com/activate

Waiting for authentication in the browser…

WARNING! Your credentials are stored unencrypted in '/root/.docker/config.json'.

Configure a credential helper to remove this warning. See

https://docs.docker.com/go/credential-store/

Login Succeeded

root@ip-172-31-12-75:~/gs-spring-boot/initial# ./gradlew jib --image=hp980724/jib-springboot:latest

> Task :jib

Containerizing application to hp980724/jib-springboot...

Base image 'eclipse-temurin:17-jre' does not use a specific image digest - build may not be reproducible

Using credentials from Docker config (/root/.docker/config.json) for hp980724/jib-springboot

The base image requires auth. Trying again for eclipse-temurin:17-jre...

Using credentials from Docker config (/root/.docker/config.json) for eclipse-temurin:17-jre

Using base image with digest: sha256:db1c787e2cd41943a90b025191e4e66cbecfc0695e4ff619353eef92e460378f

Container entrypoint set to [java, -cp, @/app/jib-classpath-file, com.example.springboot.Application]

Built and pushed image as hp980724/jib-springboot

Executing tasks:

[==============================] 100.0% complete

Deprecated Gradle features were used in this build, making it incompatible with Gradle 9.0.

You can use '--warning-mode all' to show the individual deprecation warnings and determine if they come from your own scripts or plugins.

For more on this, please refer to https://docs.gradle.org/8.3/userguide/command_line_interface.html#sec:command_line_warnings in the Gradle documentation.

BUILD SUCCESSFUL in 15s

2 actionable tasks: 1 executed, 1 up-to-date

Trying the build

From the Spring Boot folder prepared on the host, add the Gogs repository as the git remote.

git remote add origin http://3.34.189.222:3000/hp980724/jib-spring.git

Creating the Jenkinsfile

pipeline {
    agent any

    environment {
        REGISTRY_URL = "hp980724/jib-springboot"
        REGISTRY_CREDENTIAL_ID = "docker-hub"
        GIT_REPOSITORY_URL = "http://43.201.112.199:3000/hp980724/jib-spring.git"
        GIT_CREDENTIAL_ID = "gogs"
        GIT_BRANCH = "main"
        GIT_COMMIT_ID = ""
    }

    stages {
        stage("1. INIT") {
            steps {
                script {
                    currentBuild.displayName = "#${BUILD_NUMBER} - jib-spring Build"
                    currentBuild.description = "Executed by Jenkins"
                }
            }
        }

        stage("2. BUILD") {
            steps {
                script {
                    echo "[INFO] Starting build process"

                    // Clone the Git repository
                    git branch: GIT_BRANCH,
                        credentialsId: GIT_CREDENTIAL_ID,
                        url: GIT_REPOSITORY_URL

                    def profile = "-Djib.container.environment=SPRING_PROFILES_ACTIVE=dev"

                    // Get the current commit ID
                    GIT_COMMIT_ID = sh(script: "git rev-parse HEAD", returnStdout: true).trim()
                    echo "[INFO] commit_id : ${GIT_COMMIT_ID}"

                    // Build & push the image with Jib
                    withCredentials([usernamePassword(credentialsId: REGISTRY_CREDENTIAL_ID, usernameVariable: 'REGISTRY_USERNAME', passwordVariable: 'REGISTRY_PASSWORD')]) {
                        // Adjust the ./gradlew path (run from the initial directory)
                        // The credential variables are escaped so the shell, not Groovy, expands them
                        sh """
                            chmod +x ./gradlew  # grant execute permission
                            ./gradlew :jib ${profile} \
                                -Djib.from.image='openjdk:17-jdk-slim' \
                                -Djib.to.image=${REGISTRY_URL}:${GIT_COMMIT_ID} \
                                -Djib.to.auth.username=\$REGISTRY_USERNAME \
                                -Djib.to.auth.password=\$REGISTRY_PASSWORD \
                                -Djib.allowInsecureRegistries=true \
                                -DsendCredentialsOverHttp=true \
                                -x test
                        """
                    }
                }
            }
        }
    }

    post {
        always {
            cleanWs()
        }
    }
}
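The pipeline tags each image with the full commit ID from `git rev-parse HEAD`, so every image in the registry can be traced back to its exact source revision, which is convenient when the Ops-repo manifest is later updated to that tag. A small sketch of the same tagging rule with a sanity check; `commit_image_ref` is hypothetical and assumes SHA-1 (40 hex character) commit IDs.

```python
import re

SHA1_HEX = re.compile(r"[0-9a-f]{40}")


def commit_image_ref(registry_url: str, commit_id: str) -> str:
    """Build `<repository>:<commit>` like the pipeline's -Djib.to.image value."""
    commit_id = commit_id.strip()  # git rev-parse output ends with a newline
    if not SHA1_HEX.fullmatch(commit_id):
        raise ValueError(f"not a full git commit id: {commit_id!r}")
    return f"{registry_url}:{commit_id}"
```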

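Fixing Jenkins slowness

https://jiholine10.tistory.com/414

Buildah

| Concept | Description |
|---|---|
| Purpose | A tool for building and managing container images |
| Developer | Red Hat (same family as Podman) |
| Target environment | Works even in rootless (non-root) environments |
| Relationship | Podman runs containers; Buildah builds images |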

1) Building from an existing image

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha# buildah login -u hp980724 docker.io

Password:

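Login Succeeded!

When building a working container from an existing image, Buildah by default names the container by appending '-working-container' to the image name. The Buildah CLI conveniently returns the name of the new container, so you can capture it in a shell variable with standard command substitution.

Walking through Buildah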

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha# # container=$(buildah from fedora)

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha# container=$(buildah from fedora)

Resolved "fedora" as an alias (/etc/containers/registries.conf.d/shortnames.conf)

Trying to pull registry.fedoraproject.org/fedora:latest...

Getting image source signatures

Copying blob 309bed875238 done |

Copying config 11b37a6cbb done |

Writing manifest to image destination

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha# echo $container

fedora-working-container

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha# buildah run $container bash

WARN[0000] can't raise ambient capability CAP_CHOWN: operation not permitted

WARN[0000] can't raise ambient capability CAP_DAC_OVERRIDE: operation not permitted

WARN[0000] can't raise ambient capability CAP_FOWNER: operation not permitted

WARN[0000] can't raise ambient capability CAP_FSETID: operation not permitted

WARN[0000] can't raise ambient capability CAP_KILL: operation not permitted

WARN[0000] can't raise ambient capability CAP_NET_BIND_SERVICE: operation not permitted

WARN[0000] can't raise ambient capability CAP_SETFCAP: operation not permitted

WARN[0000] can't raise ambient capability CAP_SETGID: operation not permitted

WARN[0000] can't raise ambient capability CAP_SETPCAP: operation not permitted

WARN[0000] can't raise ambient capability CAP_SETUID: operation not permitted

WARN[0000] can't raise ambient capability CAP_SYS_CHROOT: operation not permitted

[root@a96fb6fb1a42 /]# exit

exit

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha# buildah run $container java

WARN[0000] can't raise ambient capability CAP_CHOWN: operation not permitted

WARN[0000] can't raise ambient capability CAP_DAC_OVERRIDE: operation not permitted

WARN[0000] can't raise ambient capability CAP_FOWNER: operation not permitted

WARN[0000] can't raise ambient capability CAP_FSETID: operation not permitted

WARN[0000] can't raise ambient capability CAP_KILL: operation not permitted

WARN[0000] can't raise ambient capability CAP_NET_BIND_SERVICE: operation not permitted

WARN[0000] can't raise ambient capability CAP_SETFCAP: operation not permitted

WARN[0000] can't raise ambient capability CAP_SETGID: operation not permitted

WARN[0000] can't raise ambient capability CAP_SETPCAP: operation not permitted

WARN[0000] can't raise ambient capability CAP_SETUID: operation not permitted

WARN[0000] can't raise ambient capability CAP_SYS_CHROOT: operation not permitted

ERRO[0000] runc create failed: unable to start container process: error during container init: exec: "java": executable file not found in $PATH

error running container: from /usr/bin/runc creating container for [java]: : exit status 1

ERRO[0000] did not get container create message from subprocess: EOF

Error: while running runtime: exit status 1

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha# buildah run $container -- dnf -y install java

Java is now installed; check it with java -version.

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha# buildah run $container java -version

WARN[0000] can't raise ambient capability CAP_CHOWN: operation not permitted

WARN[0000] can't raise ambient capability CAP_DAC_OVERRIDE: operation not permitted

WARN[0000] can't raise ambient capability CAP_FOWNER: operation not permitted

WARN[0000] can't raise ambient capability CAP_FSETID: operation not permitted

WARN[0000] can't raise ambient capability CAP_KILL: operation not permitted

WARN[0000] can't raise ambient capability CAP_NET_BIND_SERVICE: operation not permitted

WARN[0000] can't raise ambient capability CAP_SETFCAP: operation not permitted

WARN[0000] can't raise ambient capability CAP_SETGID: operation not permitted

WARN[0000] can't raise ambient capability CAP_SETPCAP: operation not permitted

WARN[0000] can't raise ambient capability CAP_SETUID: operation not permitted

WARN[0000] can't raise ambient capability CAP_SYS_CHROOT: operation not permitted

openjdk version "21.0.8" 2025-07-15

OpenJDK Runtime Environment (Red_Hat-21.0.8.0.9-1) (build 21.0.8+9)

OpenJDK 64-Bit Server VM (Red_Hat-21.0.8.0.9-1) (build 21.0.8+9, mixed mode, sharing)

Building a container from scratch

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha# newcontainer=$(buildah from scratch)

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha# buildah containers

CONTAINER ID BUILDER IMAGE ID IMAGE NAME CONTAINER NAME

d9786ab761a0 * scratch working-container

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha# buildah images

REPOSITORY TAG IMAGE ID CREATED SIZE

- A container based on "scratch" starts from nothing: it is an empty image with no base filesystem.

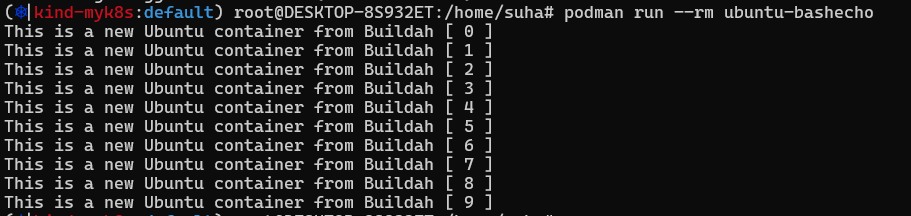

Creating a Buildah scratch container on Ubuntu

1. Install debootstrap

apt update && apt install -y debootstrap

2. Create the scratch container

newcontainer=$(buildah from scratch)

3. Mount the container

scratchmnt=$(buildah mount $newcontainer)

echo $scratchmnt

4. Install the Ubuntu root filesystem

Here jammy is the codename of Ubuntu 22.04 LTS. For a different release, substitute its codename, e.g. noble (24.04) or focal (20.04).

debootstrap --variant=minbase jammy $scratchmnt http://mirror.kakao.com/ubuntu/

This command installs a minimal Ubuntu root filesystem under $scratchmnt.

Packages are fetched from the nearby Kakao mirror.
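To reuse step 4 for other releases, the codename lookup can be wrapped in a small helper. This is a hypothetical sketch: ubuntu_codename and debootstrap_cmd are illustrative names, only the three releases mentioned above are known, and the default mirror is simply the Kakao mirror used here. debootstrap_cmd only prints the command, so it can be checked before running it as root.

```shell
# Hypothetical helper: map an Ubuntu release number to its debootstrap codename.
# Only the releases mentioned in this note are covered.
ubuntu_codename() {
  case "$1" in
    20.04) echo focal ;;
    22.04) echo jammy ;;
    24.04) echo noble ;;
    *) echo "unsupported Ubuntu release: $1" >&2; return 1 ;;
  esac
}

# Print (not run) the step 4 command for a release and a target directory.
# The third argument overrides the mirror; it defaults to the Kakao mirror.
debootstrap_cmd() {
  release="$1"
  target="$2"
  mirror="${3:-http://mirror.kakao.com/ubuntu/}"
  codename=$(ubuntu_codename "$release") || return 1
  echo "debootstrap --variant=minbase $codename $target $mirror"
}
```

For example, debootstrap_cmd 22.04 "$scratchmnt" prints the same command as step 4.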

5. Install additional packages such as bash and coreutils

chroot $scratchmnt apt update

chroot $scratchmnt apt install -y bash coreutils

6. Create the run script and copy it into the container

cat <<'EOF' > runecho.sh

#!/usr/bin/env bash

for i in $(seq 0 9); do

echo "This is a new Ubuntu container from Buildah [ $i ]"

done

EOF

chmod +x runecho.sh

buildah copy $newcontainer ./runecho.sh /usr/bin/

7. Set the container CMD and commit the image

buildah config --cmd /usr/bin/runecho.sh $newcontainer

buildah commit $newcontainer ubuntu-bashecho

8. Test run

podman run --rm ubuntu-bashecho
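The eight steps above can be collected into a single function. This is a sketch under the same assumptions as the walkthrough: it runs as root (a rootless buildah mount would have to run inside buildah unshare), buildah and debootstrap are installed, and ./runecho.sh exists in the current directory. The function name and its parameters are hypothetical. Unlike the manual steps, it also unmounts and removes the working container after committing.

```shell
# Sketch: steps 2-7 above as one function (run as root).
# Arguments (all optional): Ubuntu codename, image name, mirror URL.
build_ubuntu_scratch() {
  suite="${1:-jammy}"
  image="${2:-ubuntu-bashecho}"
  mirror="${3:-http://mirror.kakao.com/ubuntu/}"

  ctr=$(buildah from scratch) || return 1
  mnt=$(buildah mount "$ctr") || return 1

  # Minimal Ubuntu root filesystem inside the mounted scratch container
  debootstrap --variant=minbase "$suite" "$mnt" "$mirror" || return 1
  chroot "$mnt" apt-get update || return 1
  chroot "$mnt" apt-get install -y bash coreutils || return 1

  # Add the script and make it the default command
  buildah copy "$ctr" ./runecho.sh /usr/bin/ || return 1
  buildah config --cmd /usr/bin/runecho.sh "$ctr" || return 1

  # Clean up the mount, commit, then drop the working container
  buildah unmount "$ctr" || return 1
  buildah commit "$ctr" "$image" || return 1
  buildah rm "$ctr"
}
```

Usage: build_ubuntu_scratch jammy ubuntu-bashecho && podman run --rm ubuntu-bashecho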

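| Aspect | Buildah | Podman | Docker |
|---|---|---|---|
| Role | Image building | Running containers | Integrated build + run |
| Daemon required | ❌ No (pure client) | ❌ No | ✅ dockerd daemon required |
| Root required | ❌ Rootless possible | ❌ Rootless possible | ⚠️ Usually requires root |
| Dockerfile support | Yes (buildah build) | Yes (podman build, which uses Buildah internally) | Yes (docker build) |
| OCI compatible | ✅ Fully compatible | ✅ Fully compatible | ✅ (some implementation differences) |
| Storage sharing | ✅ Shared between Buildah ↔ Podman | ✅ | Separate (manages its own storage) |
| Goal | CI/CD build automation | Container runtime | General-purpose "all-in-one" tool |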

Podman

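1. What is Podman?

- Podman is a utility for creating, running, and managing containers.

- Key features:

  - Runs without a daemon and supports "rootless" mode, so containers can run without root privileges.

  - Images and containers follow the OCI (Open Container Initiative) standard.

  - Its command set is similar to the Docker CLI.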

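2. Key features and workflow

Searching, pulling, and managing images

- podman search <image-name>: search a remote registry for images.

- podman pull <image-address>: download an image, e.g. docker.io/library/httpd

- podman images: list the images stored locally.

Running and managing containers

- podman run [options] <image> [command]: create and start a container, e.g. podman run -dt -p 8080:80/tcp docker.io/library/httpd

- podman ps: list running containers; with the -a option it also shows containers that were created but have exited.

- podman logs, podman top, podman inspect: show logs, show process information, and query internal metadata, respectively.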

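Rootless mode and networking

- Containers can be run by an ordinary user without root privileges, which is a major security advantage.

- In a rootless environment, a container may not be assigned its own IP address.

3. Differences from Docker (comparison)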

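| Item | Docker | Podman |
|---|---|---|
| Daemon | The dockerd daemon must always be running | No daemon needed (the client runs on its own) |
| Root privileges | Generally requires root or sudo | In rootless mode, runs with ordinary user privileges |
| Image build & run | docker build + docker run | Runs containers on its own; images are usually built together with Buildah |
| Structural simplicity | Integrated CLI and daemon | Build and run can be separated; lightweight structure |
| Security/isolation | The daemon can be an attack surface | Uses user namespaces; removing the daemon reduces the attack surface |
| Image compatibility | Uses its own (Docker) image format | Follows the OCI standard and can use Docker images |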

4. Tutorial

suha@DESKTOP-8S932ET:~$ podman run --name basic_httpd -dt -p 8080:80/tcp docker.io/nginx

WARN[0000] "/" is not a shared mount, this could cause issues or missing mounts with rootless containers

Trying to pull docker.io/library/nginx:latest...

Getting image source signatures

Copying blob 8da8ed3552af done |

Copying blob 8c7716127147 done |

Copying blob 5d8ea9f4c626 done |

Copying blob 250b90fb2b9a done |

Copying blob b459da543435 done |

Copying blob 58d144c4badd done |

Copying blob 54e822d8ee0c done |

Copying config 07ccdb7838 done |

Writing manifest to image destination

051bfcedc53d6d3285c3a0cb0bac420517a5c4bcf77664e56d32d95073c6843c

suha@DESKTOP-8S932ET:~$ podman ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

051bfcedc53d docker.io/library/nginx:latest nginx -g daemon o... 5 seconds ago Up 5 seconds 0.0.0.0:8080->80/tcp basic_httpd

suha@DESKTOP-8S932ET:~$ podman inspect basic_httpd | grep IPAddress

"IPAddress": "",

suha@DESKTOP-8S932ET:~$ curl http://localhost:8080

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

suha@DESKTOP-8S932ET:~$ podman logs 051bfcedc53d

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Sourcing /docker-entrypoint.d/15-local-resolvers.envsh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2025/10/18 15:54:46 [notice] 1#1: using the "epoll" event method

2025/10/18 15:54:46 [notice] 1#1: nginx/1.29.2

2025/10/18 15:54:46 [notice] 1#1: built by gcc 14.2.0 (Debian 14.2.0-19)

2025/10/18 15:54:46 [notice] 1#1: OS: Linux 6.6.87.2-microsoft-standard-WSL2

2025/10/18 15:54:46 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576

2025/10/18 15:54:46 [notice] 1#1: start worker processes

2025/10/18 15:54:46 [notice] 1#1: start worker process 29

2025/10/18 15:54:46 [notice] 1#1: start worker process 30

2025/10/18 15:54:46 [notice] 1#1: start worker process 31

2025/10/18 15:54:46 [notice] 1#1: start worker process 32

2025/10/18 15:54:46 [notice] 1#1: start worker process 33

2025/10/18 15:54:46 [notice] 1#1: start worker process 34

2025/10/18 15:54:46 [notice] 1#1: start worker process 35

2025/10/18 15:54:46 [notice] 1#1: start worker process 36

2025/10/18 15:54:46 [notice] 1#1: start worker process 37

2025/10/18 15:54:46 [notice] 1#1: start worker process 38

2025/10/18 15:54:46 [notice] 1#1: start worker process 39

2025/10/18 15:54:46 [notice] 1#1: start worker process 40

2025/10/18 15:54:46 [notice] 1#1: start worker process 41

2025/10/18 15:54:46 [notice] 1#1: start worker process 42

2025/10/18 15:54:46 [notice] 1#1: start worker process 43

2025/10/18 15:54:46 [notice] 1#1: start worker process 44

2025/10/18 15:54:46 [notice] 1#1: start worker process 45

2025/10/18 15:54:46 [notice] 1#1: start worker process 46

The checkpoint feature could not be tried: installing CRIU failed with E: Package 'criu' has no installation candidate.

Buildpacks

About Cloud Native Buildpacks (CNB)

A buildpack is a module that analyzes the application source code (Detect), installs the runtime and dependencies it needs (Build), and automatically sets up the final runtime environment (Run).
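The Detect step is implemented by an executable bin/detect inside the buildpack. Below is a minimal sketch of that logic, similar to the check used in the buildpacks.io Node.js tutorial (look for package.json). It is written as a function so it can be tried without pack; the function name is hypothetical, and in a real bin/detect you would run the same check in the working directory (the app source) and exit with the result. As I understand the buildpack spec, exit 0 means the buildpack applies, 100 means it does not apply, and any other code counts as an error.

```shell
# Hypothetical sketch of bin/detect logic for a Node.js buildpack.
# Return codes follow the Cloud Native Buildpacks convention:
#   0   -> pass (this buildpack should run)
#   100 -> fail (this buildpack does not apply; not an error)
#   any other code -> error
detect_node_app() {
  app_dir="${1:-.}"
  if [ -f "$app_dir/package.json" ]; then
    return 0
  fi
  return 100
}
```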

Tutorial

Installation

sudo add-apt-repository ppa:cncf-buildpacks/pack-cli

sudo apt-get update

sudo apt-get install pack-cli

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha# mkdir node-js-sample-app

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha# ls

chapters docker-compose.yaml get-docker.sh hello.sh kube-ps1 node-js-sample-app runecho.sh

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha# cd node-js-sample-app/

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# ls

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app#

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# ls

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# vi app.js

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# vi package.json

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# docker version

Client: Docker Engine - Community

Version: 28.5.1

API version: 1.51

Go version: go1.24.8

Git commit: e180ab8

Built: Wed Oct 8 12:17:26 2025

OS/Arch: linux/amd64

Context: default

Server: Docker Engine - Community

Engine:

Version: 28.5.1

API version: 1.51 (minimum version 1.24)

Go version: go1.24.8

Git commit: f8215cc

Built: Wed Oct 8 12:17:26 2025

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: v1.7.28

GitCommit: b98a3aace656320842a23f4a392a33f46af97866

runc:

Version: 1.3.0

GitCommit: v1.3.0-0-g4ca628d1

docker-init:

Version: 0.19.0

GitCommit: de40ad0

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# ls

app.js package.json

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# pack buildpack new examples/node-js \

--api 0.10 \

--path node-js-buildpack \

--version 0.0.1 \

--targets "linux/amd64"

create buildpack.toml

create bin/build

create bin/detect

Successfully created examples/node-js

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# ls

app.js node-js-buildpack package.json

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# cd node-js-buildpack/

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# ls

bin buildpack.toml

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# cat buildpack.toml

api = "0.10"

WithWindowsBuild = false

WithLinuxBuild = false

[buildpack]

id = "examples/node-js"

version = "0.0.1"

[[targets]]

os = "linux"

arch = "amd64"

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# cd bin/

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# ls

build detect

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# cd de

-bash: cd: de: No such file or directory

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# vi detect

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# ls

build detect

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# vi build

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# pack config default-builder cnbs/sample-builder:noble

Builder cnbs/sample-builder:noble is now the default builder

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# ls

build detect

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# cd ..

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# pack config trusted-builders add cnbs/sample-builder:noble

Builder cnbs/sample-builder:noble is now trusted

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# pack build test-node-js-app --path ./node-js-sample-app --buildpack ./node-js-buildpack --no-color

ERROR: failed to build: invalid app path './node-js-sample-app': evaluate symlink: lstat node-js-sample-app: no such file or directory

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# pwd

/home/suha/node-js-sample-app/node-js-buildpack

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# cd ..

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# ls

app.js node-js-buildpack package.json

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# pack build test-node-js-app --path ./node-js-sample-app --buildpack ./node-js-buildpack --no-color

ERROR: failed to build: invalid app path './node-js-sample-app': evaluate symlink: lstat node-js-sample-app: no such file or directory

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# pwd

/home/suha/node-js-sample-app

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# pack build test-node-js-app --path . --buildpack ./node-js-buildpack --no-color

noble: Pulling from cnbs/sample-builder

d9d352c11bbd: Pull complete

a89a6d4ce7ab: Pull complete

40f88e0b9e2e: Pull complete

7cd438807671: Pull complete

5c9ae9786b5c: Pull complete

96cd9b06980f: Pull complete

6c6c63e26408: Pull complete

963d0cc368d6: Pull complete

295156d243be: Pull complete

1763d0c6967f: Pull complete

8ca48f841f8c: Pull complete

2dfaa2cde8a8: Pull complete

1e96ded835bc: Pull complete

738cf76368f9: Pull complete

fd112c64004f: Pull complete

3882072c5ab9: Pull complete

de305c158623: Pull complete

4f4fb700ef54: Pull complete

Digest: sha256:7362804fe5d76e41bdd49895b941142faef859d1a8c28f70da07505a8fe29807

Status: Downloaded newer image for cnbs/sample-builder:noble

noble: Pulling from cnbs/sample-base-run

d9d352c11bbd: Already exists

a89a6d4ce7ab: Already exists

40f88e0b9e2e: Already exists

971f5e2fccdc: Pull complete

Digest: sha256:5c9ee593643a015b66bb3769da88a7d633d4faaac48387d5e24326d735cb5681

Status: Downloaded newer image for cnbs/sample-base-run:noble

Warning: Builder is trusted but additional modules were added; using the untrusted (5 phases) build flow

0.20.11: Pulling from buildpacksio/lifecycle

35d697fe2738: Pull complete

bfb59b82a9b6: Pull complete

4eff9a62d888: Pull complete

a62778643d56: Pull complete

7c12895b777b: Pull complete

3214acf345c0: Pull complete

5664b15f108b: Pull complete

0bab15eea81d: Pull complete

4aa0ea1413d3: Pull complete

da7816fa955e: Pull complete

ddf74a63f7d8: Pull complete

3060f567f090: Pull complete

Digest: sha256:8ed1a37b3071951cc282ac0ae775c9b43e2957f6b30d7457b818c9be84ec0f08

Status: Downloaded newer image for buildpacksio/lifecycle:0.20.11

Warning: PACK_VOLUME_KEY is unset; set this environment variable to a secret value to avoid creating a new volume cache on every build

===> ANALYZING

[analyzer] Image with name "test-node-js-app" not found

===> DETECTING

[detector] ======== Results ========

[detector] err: examples/node-js@0.0.1 (1)

[detector] ERROR: No buildpack groups passed detection.

[detector] ERROR: failed to detect: buildpack(s) failed with err

ERROR: failed to build: executing lifecycle: failed with status code: 21

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# ls

app.js node-js-buildpack package.json

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# ls

app.js node-js-buildpack package.json

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# vi package.json

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# ls

app.js node-js-buildpack package.json

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# cd node-js-buildpack/

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# ls

bin buildpack.toml

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# cd bin/

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# ls

build detect

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# vi detect

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# cd ..

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# cd ..

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# pack build test-node-js-app --path . --buildpack ./node-js-buildpack --no-color

noble: Pulling from cnbs/sample-builder

Digest: sha256:7362804fe5d76e41bdd49895b941142faef859d1a8c28f70da07505a8fe29807

Status: Image is up to date for cnbs/sample-builder:noble

noble: Pulling from cnbs/sample-base-run

Digest: sha256:5c9ee593643a015b66bb3769da88a7d633d4faaac48387d5e24326d735cb5681

Status: Image is up to date for cnbs/sample-base-run:noble

Warning: Builder is trusted but additional modules were added; using the untrusted (5 phases) build flow

0.20.11: Pulling from buildpacksio/lifecycle

Digest: sha256:8ed1a37b3071951cc282ac0ae775c9b43e2957f6b30d7457b818c9be84ec0f08

Status: Image is up to date for buildpacksio/lifecycle:0.20.11

===> ANALYZING

[analyzer] Image with name "test-node-js-app" not found

===> DETECTING

[detector] examples/node-js 0.0.1

===> RESTORING

===> BUILDING

[builder] ---> NodeJS Buildpack

[builder] ERROR: failed to build: exit status 1

ERROR: failed to build: executing lifecycle: failed with status code: 51
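The first attempt stopped at DETECTING with status 21 (the detector reported err for the buildpack, which exited with 1), and this one stopped at BUILDING with status 51 after bin/build exited with status 1. A small lookup can make such codes easier to read in scripts. The mapping below is a sketch based on my reading of the Cloud Native Buildpacks platform specification and covers only a few codes; the function name is hypothetical.

```shell
# Hypothetical helper: describe a few lifecycle exit codes seen with `pack build`.
# Based on the CNB platform spec; only a small subset is covered.
describe_lifecycle_code() {
  case "$1" in
    0)  echo "success" ;;
    20) echo "detect: no buildpack group passed (no buildpack errored)" ;;
    21) echo "detect: no buildpack group passed and at least one buildpack errored" ;;
    51) echo "build: a buildpack's bin/build failed" ;;
    *)  echo "unknown code $1 (see the CNB platform spec)" ;;
  esac
}
```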

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# ls

app.js node-js-buildpack package.json

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# ls

app.js node-js-buildpack package.json

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# cd node-js-buildpack/

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# ls

bin buildpack.toml

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# cd bin/

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# ls

build detect

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# vi build

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# vi build

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# cd ..

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# cd ..

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# pack build test-node-js-app --path . --buildpack ./node-js-buildpack

noble: Pulling from cnbs/sample-builder

Digest: sha256:7362804fe5d76e41bdd49895b941142faef859d1a8c28f70da07505a8fe29807

Status: Image is up to date for cnbs/sample-builder:noble

noble: Pulling from cnbs/sample-base-run

Digest: sha256:5c9ee593643a015b66bb3769da88a7d633d4faaac48387d5e24326d735cb5681

Status: Image is up to date for cnbs/sample-base-run:noble

Warning: Builder is trusted but additional modules were added; using the untrusted (5 phases) build flow

0.20.11: Pulling from buildpacksio/lifecycle

Digest: sha256:8ed1a37b3071951cc282ac0ae775c9b43e2957f6b30d7457b818c9be84ec0f08

Status: Image is up to date for buildpacksio/lifecycle:0.20.11

===> ANALYZING

[analyzer] Image with name "test-node-js-app" not found

===> DETECTING

[detector] examples/node-js 0.0.1

===> RESTORING

===> BUILDING

[builder] ---> NodeJS Buildpack

[builder] ---> Downloading and extracting NodeJS

===> EXPORTING

[exporter] Adding layer 'examples/node-js:node-js'

[exporter] Adding layer 'buildpacksio/lifecycle:launch.sbom'

[exporter] Added 1/1 app layer(s)

[exporter] Adding layer 'buildpacksio/lifecycle:launcher'

[exporter] Adding layer 'buildpacksio/lifecycle:config'

[exporter] Adding label 'io.buildpacks.lifecycle.metadata'

[exporter] Adding label 'io.buildpacks.build.metadata'

[exporter] Adding label 'io.buildpacks.project.metadata'

[exporter] no default process type

[exporter] Saving test-node-js-app...

[exporter] *** Images (311ac036bb13):

[exporter] test-node-js-app

Successfully built image test-node-js-app
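This build succeeds and the exporter adds the layer examples/node-js:node-js, but it also warns "no default process type". In the buildpacks.io Node.js tutorial, the layer part roughly corresponds to bin/build creating a layer directory under the layers directory and writing a <layer>.toml file whose [types] table says whether the layer is available at launch, during build, and whether it is cached. The sketch below covers only that metadata step; the function name and argument order are hypothetical, and inside a real bin/build the layers directory would come from the lifecycle (CNB_LAYERS_DIR in newer buildpack APIs).

```shell
# Hypothetical sketch of the layer-metadata part of a bin/build script.
# Creates <layers_dir>/<layer>/ and writes <layers_dir>/<layer>.toml with the
# [types] table (launch/build/cache flags) used by buildpack API 0.6 and later.
make_layer() {
  layers_dir="$1"        # e.g. "$CNB_LAYERS_DIR" inside a real bin/build
  layer="$2"             # layer name, e.g. node-js
  launch="${3:-true}"    # keep the layer in the final app image
  cache="${4:-false}"    # keep the layer in the build cache between builds

  mkdir -p "$layers_dir/$layer" || return 1
  printf '[types]\nlaunch = %s\nbuild = false\ncache = %s\n' \
    "$launch" "$cache" > "$layers_dir/$layer.toml"
  echo "$layers_dir/$layer"   # print the layer path for the caller
}
```

In a build script this could be used as node_js_layer=$(make_layer "$CNB_LAYERS_DIR" node-js), after which Node.js is extracted into "$node_js_layer".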

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# ls

app.js node-js-buildpack package.json

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# cd node-js-buildpack/

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# ls

bin buildpack.toml

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# cd bin/

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# ls

build detect

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# vi build

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# ls

build detect

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# cd ..

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# ls

bin buildpack.toml

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# cd ..

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# ls

app.js node-js-buildpack package.json

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# vi app.js

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# ls

app.js node-js-buildpack package.json

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# cd node-js-buildpack/

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# cd bin/

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# vi build

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# cd ..

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# cd ..

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# ls

app.js node-js-buildpack package.json

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# pack build test-node-js-app --path . --buildpack ./node-js-buildpack

noble: Pulling from cnbs/sample-builder

Digest: sha256:7362804fe5d76e41bdd49895b941142faef859d1a8c28f70da07505a8fe29807

Status: Image is up to date for cnbs/sample-builder:noble

noble: Pulling from cnbs/sample-base-run

Digest: sha256:5c9ee593643a015b66bb3769da88a7d633d4faaac48387d5e24326d735cb5681

Status: Image is up to date for cnbs/sample-base-run:noble

Warning: Builder is trusted but additional modules were added; using the untrusted (5 phases) build flow

0.20.11: Pulling from buildpacksio/lifecycle

Digest: sha256:8ed1a37b3071951cc282ac0ae775c9b43e2957f6b30d7457b818c9be84ec0f08

Status: Image is up to date for buildpacksio/lifecycle:0.20.11

===> ANALYZING

[analyzer] Restoring data for SBOM from previous image

===> DETECTING

[detector] examples/node-js 0.0.1

===> RESTORING

[restorer] Restoring metadata for "examples/node-js:node-js" from app image

===> BUILDING

[builder] ---> NodeJS Buildpack

[builder] ---> Downloading and extracting NodeJS

===> EXPORTING

[exporter] Reusing layer 'examples/node-js:node-js'

[exporter] Reusing layer 'buildpacksio/lifecycle:launch.sbom'

[exporter] Added 1/1 app layer(s)

[exporter] Reusing layer 'buildpacksio/lifecycle:launcher'

[exporter] Adding layer 'buildpacksio/lifecycle:config'

[exporter] Adding layer 'buildpacksio/lifecycle:process-types'

[exporter] Adding label 'io.buildpacks.lifecycle.metadata'

[exporter] Adding label 'io.buildpacks.build.metadata'

[exporter] Adding label 'io.buildpacks.project.metadata'

[exporter] Setting default process type 'web'

[exporter] Saving test-node-js-app...

[exporter] *** Images (bfeeb409b69f):

[exporter] test-node-js-app

Successfully built image test-node-js-app
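Compared with the previous build, the exporter now adds a process-types layer and reports "Setting default process type 'web'", which is what lets docker run test-node-js-app start the app without an explicit command. In the buildpacks.io tutorial this comes from bin/build writing a launch.toml file into the layers directory. A minimal sketch, assuming buildpack API 0.9 or later (where command is an array) and app.js as the entry point as in this sample; the function name is hypothetical.

```shell
# Hypothetical sketch: write <layers_dir>/launch.toml declaring a default
# "web" process that starts the sample app with `node <entry>`.
write_launch_toml() {
  layers_dir="$1"
  entry="${2:-app.js}"
  cat > "$layers_dir/launch.toml" <<EOF
[[processes]]
type = "web"
command = ["node", "$entry"]
default = true
EOF
}
```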

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# docker run --rm -p 8080:8080 test-node-js-app

docker: Error response from daemon: failed to set up container networking: driver failed programming external connectivity on endpoint vigilant_haibt (10dadff7c776dfc3c5eee0b361144d406b17e2d582b082009c47437db76bf0d6): failed to bind host port for 0.0.0.0:8080:172.17.0.2:8080/tcp: address already in use

Run 'docker run --help' for more information

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

eab624284ce3 kindest/node:v1.32.8 "/usr/local/bin/entr…" 3 days ago Up About an hour 0.0.0.0:30000-30001->30000-30001/tcp, 127.0.0.1:39183->6443/tcp myk8s-control-plane

b9fc82f7d8a9 kindest/node:v1.32.8 "/usr/local/bin/entr…" 3 days ago Up About an hour myk8s-worker

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# podman ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

cb831a5f7b8a docker.io/library/nginx:latest nginx -g daemon o... 32 minutes ago Up 32 minutes 0.0.0.0:8080->80/tcp basic_httpd

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# podman stop cb831a5f7b8a

cb831a5f7b8a
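The bind error occurred because the rootless Podman nginx container from the Podman tutorial was still publishing host port 8080, and docker ps does not list Podman containers. A small helper that checks the PORTS column printed by both docker ps and podman ps (e.g. 0.0.0.0:8080->80/tcp) can make such conflicts quicker to spot. This is a hypothetical sketch: the function names are illustrative, and only single published ports are matched, not ranges such as 30000-30001.

```shell
# Hypothetical helper: does a `ps` PORTS string publish the given host port?
# Matches entries like "0.0.0.0:8080->80/tcp"; port ranges are not handled.
publishes_port() {
  printf '%s\n' "$1" | grep -Eq ":$2->"
}

# Check both engines, since `docker ps` does not list Podman containers.
find_port_users() {
  for engine in docker podman; do
    command -v "$engine" >/dev/null 2>&1 || continue
    "$engine" ps --format '{{.Names}} {{.Ports}}' 2>/dev/null |
      while read -r name ports; do
        if publishes_port "$ports" "$1"; then echo "$engine: $name"; fi
      done
  done
}
```

Running find_port_users 8080 before docker run would have reported podman: basic_httpd here.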

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# docker run --rm -p 8080:8080 test-node-js-app

Server running at http://0.0.0.0:8080/

^C^C^C

got 3 SIGTERM/SIGINTs, forcefully exiting

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# ls

app.js node-js-buildpack package.json

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# cd node-js-buildpack/

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# ls

bin buildpack.toml

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# cd bin/

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# ls

build detect

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# vi build

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# cd ..

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# cd ..

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# pack build test-node-js-app --path . --buildpack ./node-js-buildpack

noble: Pulling from cnbs/sample-builder

Digest: sha256:7362804fe5d76e41bdd49895b941142faef859d1a8c28f70da07505a8fe29807

Status: Image is up to date for cnbs/sample-builder:noble

noble: Pulling from cnbs/sample-base-run

Digest: sha256:5c9ee593643a015b66bb3769da88a7d633d4faaac48387d5e24326d735cb5681

Status: Image is up to date for cnbs/sample-base-run:noble

Warning: Builder is trusted but additional modules were added; using the untrusted (5 phases) build flow

0.20.11: Pulling from buildpacksio/lifecycle

Digest: sha256:8ed1a37b3071951cc282ac0ae775c9b43e2957f6b30d7457b818c9be84ec0f08

Status: Image is up to date for buildpacksio/lifecycle:0.20.11

===> ANALYZING

[analyzer] Restoring data for SBOM from previous image

===> DETECTING

[detector] examples/node-js 0.0.1

===> RESTORING

[restorer] Restoring metadata for "examples/node-js:node-js" from app image

===> BUILDING

[builder] ---> NodeJS Buildpack

[builder] ---> Downloading and extracting NodeJS

===> EXPORTING

[exporter] Reusing layer 'examples/node-js:node-js'

[exporter] Reusing layer 'buildpacksio/lifecycle:launch.sbom'

[exporter] Added 1/1 app layer(s)

[exporter] Reusing layer 'buildpacksio/lifecycle:launcher'

[exporter] Reusing layer 'buildpacksio/lifecycle:config'

[exporter] Reusing layer 'buildpacksio/lifecycle:process-types'

[exporter] Adding label 'io.buildpacks.lifecycle.metadata'

[exporter] Adding label 'io.buildpacks.build.metadata'

[exporter] Adding label 'io.buildpacks.project.metadata'

[exporter] Setting default process type 'web'

[exporter] Saving test-node-js-app...

[exporter] *** Images (017e3da8804b):

[exporter] test-node-js-app

[exporter] Adding cache layer 'examples/node-js:node-js'

Successfully built image test-node-js-app
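In the next round of edits bin/build is changed to download a specific NodeJS version, and that build fails at "Downloading and extracting NodeJS 3.1.3" with "xz: (stdin): File format not recognized". Node.js never had a 3.x release (after 0.12 the numbering jumped to 4.0; io.js had used 1.x to 3.x), so there is no such archive to download, which is the most likely reason extraction failed. A guard called before the download would turn this into a clear error. The sketch below assumes the official nodejs.org/dist layout for linux-x64 .tar.xz archives; the function name is hypothetical, and it checks only the version format and the missing 1.x to 3.x range, not whether a given release really exists.

```shell
# Hypothetical guard for a bin/build script: validate a NodeJS version string
# and print the linux-x64 download URL on the nodejs.org/dist layout.
node_download_url() {
  input="${1:-}"
  version="${input#v}"                  # accept "18.18.1" or "v18.18.1"
  if ! printf '%s\n' "$version" | grep -Eq '^[0-9]+\.[0-9]+\.[0-9]+$'; then
    echo "invalid NodeJS version: '$input'" >&2
    return 1
  fi
  major="${version%%.*}"
  # Node.js itself never shipped 1.x-3.x (io.js used those numbers).
  if [ "$major" -ge 1 ] && [ "$major" -le 3 ]; then
    echo "no NodeJS $version release exists" >&2
    return 1
  fi
  echo "https://nodejs.org/dist/v${version}/node-v${version}-linux-x64.tar.xz"
}
```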

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# ls

app.js node-js-buildpack package.json

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# cd node-js-buildpack/

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# ls

bin buildpack.toml

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# cd bin/

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# vi detect

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# vi build

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack/bin# cd ..

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app/node-js-buildpack# cd ..

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# pack build test-node-js-app --path . --buildpack ./node-js-buildpack

noble: Pulling from cnbs/sample-builder

Digest: sha256:7362804fe5d76e41bdd49895b941142faef859d1a8c28f70da07505a8fe29807

Status: Image is up to date for cnbs/sample-builder:noble

noble: Pulling from cnbs/sample-base-run

Digest: sha256:5c9ee593643a015b66bb3769da88a7d633d4faaac48387d5e24326d735cb5681

Status: Image is up to date for cnbs/sample-base-run:noble

Warning: Builder is trusted but additional modules were added; using the untrusted (5 phases) build flow

0.20.11: Pulling from buildpacksio/lifecycle

Digest: sha256:8ed1a37b3071951cc282ac0ae775c9b43e2957f6b30d7457b818c9be84ec0f08

Status: Image is up to date for buildpacksio/lifecycle:0.20.11

===> ANALYZING

[analyzer] Restoring data for SBOM from previous image

===> DETECTING

[detector] examples/node-js 0.0.1

===> RESTORING

[restorer] Restoring metadata for "examples/node-js:node-js" from app image

[restorer] Restoring data for "examples/node-js:node-js" from cache

===> BUILDING

[builder] ---> NodeJS Buildpack

[builder] -----> Downloading and extracting NodeJS 3.1.3

[builder] xz: (stdin): File format not recognized

[builder] tar: Child returned status 1

[builder] tar: Error is not recoverable: exiting now

[builder] ERROR: failed to build: exit status 2

ERROR: failed to build: executing lifecycle: failed with status code: 51

Shipwright

What is Shipwright?

- Shipwright is a framework that lets you build container images directly inside a Kubernetes cluster.

- Instead of building an image outside the cluster (locally or in CI, e.g. with docker build), pushing it, and then deploying it, Shipwright brings the whole "source → build → push to registry" flow into Kubernetes.

- As of 2024 it has been accepted as a Cloud Native Computing Foundation (CNCF) Sandbox project, which strengthens its community base.

Main components

Shipwright defines a few core resources (CRDs) for describing and running image builds.

| Resource | Description |
|---|---|
| BuildStrategy / ClusterBuildStrategy | Defines how (with which build tool) an image is built, e.g. Kaniko, Buildah, or Buildpacks. |
| Build | Defines what to build (the source code) and where to push the result (the image address). |
| BuildRun | Triggers an actual run of a Build. |

1) Install Tekton Pipelines (Shipwright runs each build as a Tekton TaskRun, so Tekton is a prerequisite)

kubectl apply -f https://storage.googleapis.com/tekton-releases/pipeline/latest/release.yaml

2) Install Shipwright (controller + CRDs)

kubectl apply -f https://github.com/shipwright-io/build/releases/latest/download/release.yaml --server-side

3) Install the sample build strategies (Buildpacks, Buildah, Kaniko, etc.)

kubectl apply -f https://github.com/shipwright-io/build/releases/latest/download/sample-strategies.yaml --server-side

4) Verify the installation and wait until the controllers are available

kubectl -n tekton-pipelines wait --for=condition=Available deployment --all --timeout=300s && kubectl -n shipwright-build wait --for=condition=Available deployment --all --timeout=300s

5) Create a registry Secret for pushing images (Docker Hub example)

REGISTRY_SERVER=https://index.docker.io/v1/ REGISTRY_USER=<docker-id> REGISTRY_PASSWORD=<docker-password> REGISTRY_EMAIL=<you@example.com>; kubectl create secret docker-registry push-secret --docker-server="$REGISTRY_SERVER" --docker-username="$REGISTRY_USER" --docker-password="$REGISTRY_PASSWORD" --docker-email="$REGISTRY_EMAIL"

6) Create a sample Node.js Build resource (using the Buildpacks strategy)

REGISTRY_ORG=<docker-id>; cat <<EOF | kubectl apply -f -
apiVersion: shipwright.io/v1beta1
kind: Build
metadata:
  name: buildpack-nodejs-build
spec:
  source:
    type: Git
    git:
      url: https://github.com/shipwright-io/sample-nodejs
    contextDir: source-build
  strategy:
    name: buildpacks-v3
    kind: ClusterBuildStrategy
  paramValues: []
  output:
    image: docker.io/${REGISTRY_ORG}/sample-nodejs:latest
    pushSecret: push-secret
EOF

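7) Trigger the build with a BuildRun (kubectl create is required here, because kubectl apply cannot be used with metadata.generateName)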

cat <<EOF | kubectl create -f -
apiVersion: shipwright.io/v1beta1
kind: BuildRun
metadata:
  generateName: buildpack-nodejs-buildrun-
spec:
  build:
    name: buildpack-nodejs-build
EOF

8) Wait for the build to finish and check the logs (the build Pod runs in the BuildRun's own namespace, here default)

BR=$(kubectl get buildrun --sort-by=.metadata.creationTimestamp -o jsonpath='{.items[-1:].metadata.name}') && kubectl wait buildrun/"$BR" --for=condition=Succeeded --timeout=600s && kubectl logs -l buildrun.shipwright.io/name="$BR" --all-containers=true --prefix

If the build succeeds, the image is pushed to docker.io/<docker-id>/sample-nodejs:latest.

Kustomize

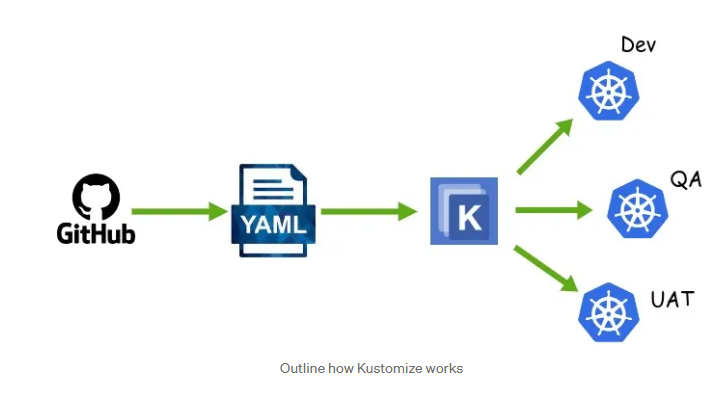

What is Kustomize?

- Kustomize is a tool that keeps Kubernetes resource YAML files as plain YAML, with no templating (such as Go templates), while still letting you customize them per environment or per version.

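- In other words, instead of copying the YAML resources for each environment (dev/prod) and editing every copy, you leave the "base" configuration untouched and declare only the differences in an "overlay", which makes the manifests much more reusable.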

Key concepts and features

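| Concept | Description |
|---|---|
| Base | Directory holding the shared, common resources, e.g. deployment.yaml, service.yaml. |
| Overlay | Directory for a specific environment (dev, prod, etc.) containing the resources added or changed on top of the Base. It references the Base and modifies it through namePrefix, patches, images, and so on. |
| kustomization.yaml | Metadata file placed in every directory (Base or Overlay) that declares which resources to include and which patches/modifications (transformers/generators) to apply. |
| Generators | Generate ConfigMaps or Secrets automatically from external files or literals, e.g. configMapGenerator, secretGenerator. |
| Transformers / Patches | Ways to modify resources, e.g. commonLabels, nameSuffix, images overrides, patchesStrategicMerge, patchesJson6902. Since Kustomize v5, patchesStrategicMerge and patchesJson6902 are deprecated in favor of the unified patches field. |
| Composition | A Base, several Overlays, and nested subdirectories can be combined, making it easy to generate YAML tailored to different environments and versions. |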

Source: https://medium.com/@altairthinesh2/kustomize-kubernetes-configuration-management-23630d4f9c96
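To make the table concrete, here is a minimal sketch of an overlay that uses a Generator, several Transformers and a Patch at once. The directory name example-overlay, the ConfigMap name app-config, the LOG_LEVEL literal and the nginx:1.27-alpine tag are hypothetical choices for illustration; the overlay assumes the base/ directory (deployment.yaml, service.yaml, kustomization.yaml) that the commands later in this section create.

```shell
# Hypothetical overlay exercising the concepts from the table above.
# It reuses the base/ directory created in this section's walkthrough.
mkdir -p example-overlay

cat <<'EOF' > example-overlay/kustomization.yaml
resources:
- ../base                # Base: reuse the shared manifests unchanged
images:                  # Transformer: swap the tag (nginx:alpine -> nginx:1.27-alpine)
- name: nginx
  newTag: 1.27-alpine
replicas:                # Transformer: override the Deployment's replica count
- name: my-nginx
  count: 3
configMapGenerator:      # Generator: ConfigMap from literals (name gets a hash suffix)
- name: app-config
  literals:
  - LOG_LEVEL=debug
# Patch: inline JSON6902 patch applied to every Deployment. Kustomize rewrites
# the app-config reference to the hashed name of the generated ConfigMap.
patches:
- target:
    kind: Deployment
  patch: |-
    - op: add
      path: /spec/template/spec/containers/0/envFrom
      value:
      - configMapRef:
          name: app-config
EOF
```

Once base/ exists, kubectl kustomize example-overlay/ prints the rendered manifests without touching the cluster, which is a quick way to check what each field changed.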

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# mkdir base dev prod

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# cat << EOF > base/deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx
spec:
  selector:
    matchLabels:
      run: my-nginx
  replicas: 2
  template:
    metadata:
      labels:
        run: my-nginx
    spec:
      containers:
      - name: my-nginx
        image: nginx:alpine
EOF

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# cat << EOF > base/service.yaml
apiVersion: v1
kind: Service
metadata:
  name: my-nginx
  labels:
    run: my-nginx
spec:
  ports:
  - port: 80
    protocol: TCP
  selector:
    run: my-nginx
EOF

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# cat << EOF > base/kustomization.yaml
resources:
- deployment.yaml
- service.yaml
EOF

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# cat <<EOF > dev/kustomization.yaml
resources:
- ../base
namePrefix: dev-
EOF

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# cat <<EOF > prod/kustomization.yaml
resources:
- ../base
namePrefix: prod-
EOF

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# tree base dev prod
base
├── deployment.yaml
├── kustomization.yaml
└── service.yaml
dev
└── kustomization.yaml
prod
└── kustomization.yaml

3 directories, 5 files

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# kubectl create -k dev/ --dry-run=client -o yaml --save-config=false
apiVersion: v1
kind: Service
metadata:
  labels:
    run: my-nginx
  name: dev-my-nginx
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
  selector:
    run: my-nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dev-my-nginx
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      run: my-nginx
  template:
    metadata:
      labels:
        run: my-nginx
    spec:
      containers:
      - image: nginx:alpine
        name: my-nginx

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# kubectl apply -k dev/

service/dev-my-nginx created

deployment.apps/dev-my-nginx created

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# kubectl get -k dev/

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/dev-my-nginx ClusterIP 10.96.22.86 <none> 80/TCP 4s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/dev-my-nginx 0/2 2 0 4s

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# kubectl get pod,svc,ep -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/dev-my-nginx-646754b7dd-bwfjm 0/1 ContainerCreating 0 7s <none> myk8s-worker <none> <none>

pod/dev-my-nginx-646754b7dd-v66sw 0/1 ContainerCreating 0 7s <none> myk8s-worker <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/dev-my-nginx ClusterIP 10.96.22.86 <none> 80/TCP 7s run=my-nginx

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d5h <none>

NAME ENDPOINTS AGE

endpoints/dev-my-nginx <none> 7s

endpoints/kubernetes 172.18.0.2:6443 3d5h

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# kubectl create -k prod/ --dry-run=client -o yaml --save-config=false
apiVersion: v1
kind: Service
metadata:
  labels:
    run: my-nginx
  name: prod-my-nginx
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
  selector:
    run: my-nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prod-my-nginx
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      run: my-nginx
  template:
    metadata:
      labels:
        run: my-nginx
    spec:
      containers:
      - image: nginx:alpine
        name: my-nginx

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# kubectl apply -k prod/

service/prod-my-nginx created

deployment.apps/prod-my-nginx created

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# kubectl get -k prod/

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/prod-my-nginx ClusterIP 10.96.200.204 <none> 80/TCP 3s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/prod-my-nginx 2/2 2 2 3s

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# kubectl get pod,svc,ep -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/dev-my-nginx-646754b7dd-bwfjm 1/1 Running 0 20s 10.244.1.7 myk8s-worker <none> <none>

pod/dev-my-nginx-646754b7dd-v66sw 1/1 Running 0 20s 10.244.1.8 myk8s-worker <none> <none>

pod/prod-my-nginx-646754b7dd-czzxw 1/1 Running 0 6s 10.244.1.9 myk8s-worker <none> <none>

pod/prod-my-nginx-646754b7dd-l5s75 1/1 Running 0 6s 10.244.1.10 myk8s-worker <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/dev-my-nginx ClusterIP 10.96.22.86 <none> 80/TCP 20s run=my-nginx

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d5h <none>

service/prod-my-nginx ClusterIP 10.96.200.204 <none> 80/TCP 6s run=my-nginx

NAME ENDPOINTS AGE

endpoints/dev-my-nginx 10.244.1.10:80,10.244.1.7:80,10.244.1.8:80 + 1 more... 20s

endpoints/kubernetes 172.18.0.2:6443 3d5h

endpoints/prod-my-nginx 10.244.1.10:80,10.244.1.7:80,10.244.1.8:80 + 1 more... 6s
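In the output above, endpoints/dev-my-nginx and endpoints/prod-my-nginx each list all four Pod IPs (10.244.1.7 through 10.244.1.10). namePrefix only renames resources, so both Services keep the same selector run: my-nginx and select the dev and prod Pods alike. A minimal sketch of one way to separate them, assuming a hypothetical env label: add a per-overlay label with Kustomize's labels field and includeSelectors: true, so the label is also written into the Service selector and the Deployment selector and Pod template.

```shell
# Sketch: give each overlay its own (hypothetical) env label and propagate it
# into selectors, so each Service only selects the Pods of its own overlay.
mkdir -p dev prod

cat <<'EOF' > dev/kustomization.yaml
resources:
- ../base
namePrefix: dev-
labels:
- pairs:
    env: dev
  includeSelectors: true   # also adds env: dev to selectors and Pod template labels
EOF

cat <<'EOF' > prod/kustomization.yaml
resources:
- ../base
namePrefix: prod-
labels:
- pairs:
    env: prod
  includeSelectors: true   # also adds env: prod to selectors and Pod template labels
EOF
```

A Deployment's spec.selector is immutable, so the overlays already deployed have to be removed (kubectl delete -k dev/ and kubectl delete -k prod/) before re-applying them with kubectl apply -k. Afterwards each Endpoints object should list only the two Pods of its own environment.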

(⎈|kind-myk8s:default) root@DESKTOP-8S932ET:/home/suha/node-js-sample-app# kubectl delete -k dev/