Deploying ingress-nginx

```
(⎈|N/A:N/A) root@DESKTOP-8S932ET:~# cat <<EOT > kind-ingress.yaml
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  kubeadmConfigPatches:
  - |
    kind: InitConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-labels: "ingress-ready=true"
  extraPortMappings:
  - containerPort: 80
    hostPort: 80
    protocol: TCP
  - containerPort: 443
    hostPort: 443
    protocol: TCP
  - containerPort: 30000
    hostPort: 30000
EOT
(⎈|N/A:N/A) root@DESKTOP-8S932ET:~# ls
app-project.yaml argocd-values.yaml cicd-labs kind-ingress.yaml monitor-values.yaml my-sample-app yes yes.pub
(⎈|N/A:N/A) root@DESKTOP-8S932ET:~# kind create cluster --config kind-ingress.yaml --name myk8s
Creating cluster "myk8s" ...
 ✓ Ensuring node image (kindest/node:v1.34.0) 🖼
 ✓ Preparing nodes 📦
 ✓ Writing configuration 📜
 ✓ Starting control-plane 🕹️
 ✓ Installing CNI 🔌
 ✓ Installing StorageClass 💾
Set kubectl context to "kind-myk8s"
You can now use your cluster with:

kubectl cluster-info --context kind-myk8s

Thanks for using kind! 😊
```
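The kind documentation explains the two settings in kind-ingress.yaml this way: extraPortMappings let requests from the local host reach the Ingress controller on ports 80/443, and the ingress-ready=true node label lets the controller run only on nodes matching its label selector. The sketch below illustrates the second part, how a Pod's nodeSelector is matched against a node's labels (every selector key must be present with the same value). The labels are the ones the node reports in the jsonpath check that follows; the selector value is an assumption about the kind-specific ingress-nginx manifest.

```python
# Sketch: nodeSelector matching. A node qualifies only if it has every
# selector key with exactly the same value (extra node labels are ignored).


def matches_node_selector(node_labels: dict, node_selector: dict) -> bool:
    """Return True if every nodeSelector entry is present in node_labels."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


# Labels reported for myk8s-control-plane by the jsonpath query in this section.
control_plane_labels = {
    "beta.kubernetes.io/arch": "amd64",
    "beta.kubernetes.io/os": "linux",
    "ingress-ready": "true",
    "kubernetes.io/arch": "amd64",
    "kubernetes.io/hostname": "myk8s-control-plane",
    "kubernetes.io/os": "linux",
    "node-role.kubernetes.io/control-plane": "",
}

# Assumed nodeSelector of the kind-specific ingress-nginx controller Deployment.
ingress_selector = {"ingress-ready": "true", "kubernetes.io/os": "linux"}

if __name__ == "__main__":
    print(matches_node_selector(control_plane_labels, ingress_selector))  # True
    # A node created without the kubeadm node-labels patch would not qualify.
    unlabeled = {k: v for k, v in control_plane_labels.items() if k != "ingress-ready"}
    print(matches_node_selector(unlabeled, ingress_selector))  # False
```

Without the label, the controller Pod would stay Pending because no node satisfies its selector.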

```
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
28461f81d3f3 kindest/node:v1.34.0 "/usr/local/bin/entr…" 36 seconds ago Up 34 seconds 0.0.0.0:80->80/tcp, 0.0.0.0:443->443/tcp, 0.0.0.0:30000->30000/tcp, 127.0.0.1:43457->6443/tcp myk8s-control-plane
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# docker port myk8s-control-plane
80/tcp -> 0.0.0.0:80
443/tcp -> 0.0.0.0:443
6443/tcp -> 127.0.0.1:43457
30000/tcp -> 0.0.0.0:30000
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kubectl get node
NAME STATUS ROLES AGE VERSION
myk8s-control-plane Ready control-plane 29s v1.34.0
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kubectl get nodes myk8s-control-plane -o jsonpath={.metadata.labels} | jq
{
  "beta.kubernetes.io/arch": "amd64",
  "beta.kubernetes.io/os": "linux",
  "ingress-ready": "true",
  "kubernetes.io/arch": "amd64",
  "kubernetes.io/hostname": "myk8s-control-plane",
  "kubernetes.io/os": "linux",
  "node-role.kubernetes.io/control-plane": ""
}
```

```
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kubectl logs -n ingress-nginx deploy/ingress-nginx-controller -f
-------------------------------------------------------------------------------
NGINX Ingress controller
  Release: v1.14.0
  Build: 52c0a83ac9bc72e9ce1b9fe4f2d6dcc8854516a8
  Repository: https://github.com/kubernetes/ingress-nginx
  nginx version: nginx/1.27.1
-------------------------------------------------------------------------------
W1110 13:26:06.668523 11 client_config.go:667] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.
I1110 13:26:06.668914 11 main.go:205] "Creating API client" host="https://10.96.0.1:443"
I1110 13:26:06.679761 11 main.go:248] "Running in Kubernetes cluster" major="1" minor="34" git="v1.34.0" state="clean" commit="f28b4c9efbca5c5c0af716d9f2d5702667ee8a45" platform="linux/amd64"
I1110 13:26:06.771116 11 main.go:101] "SSL fake certificate created" file="/etc/ingress-controller/ssl/default-fake-certificate.pem"
I1110 13:26:06.791298 11 ssl.go:535] "loading tls certificate" path="/usr/local/certificates/cert" key="/usr/local/certificates/key"
I1110 13:26:06.806124 11 nginx.go:273] "Starting NGINX Ingress controller"
I1110 13:26:06.816956 11 event.go:377] Event(v1.ObjectReference{Kind:"ConfigMap", Namespace:"ingress-nginx", Name:"ingress-nginx-controller", UID:"2f51abea-83fa-42f0-be6b-b3b0bda89497", APIVersion:"v1", ResourceVersion:"580", FieldPath:""}): type: 'Normal' reason: 'CREATE' ConfigMap ingress-nginx/ingress-nginx-controller
I1110 13:26:08.008319 11 nginx.go:319] "Starting NGINX process"
I1110 13:26:08.008460 11 leaderelection.go:257] attempting to acquire leader lease ingress-nginx/ingress-nginx-leader...
I1110 13:26:08.009448 11 nginx.go:339] "Starting validation webhook" address=":8443" certPath="/usr/local/certificates/cert" keyPath="/usr/local/certificates/key"
I1110 13:26:08.010248 11 controller.go:214] "Configuration changes detected, backend reload required"
I1110 13:26:08.037278 11 leaderelection.go:271] successfully acquired lease ingress-nginx/ingress-nginx-leader
I1110 13:26:08.037799 11 status.go:85] "New leader elected" identity="ingress-nginx-controller-76f7f87994-86zs6"
I1110 13:26:08.095576 11 controller.go:228] "Backend successfully reloaded"
I1110 13:26:08.096257 11 controller.go:240] "Initial sync, sleeping for 1 second"
I1110 13:26:08.096632 11 event.go:377] Event(v1.ObjectReference{Kind:"Pod", Namespace:"ingress-nginx", Name:"ingress-nginx-controller-76f7f87994-86zs6", UID:"de86a426-9561-4eee-802b-8d07f60a3bad", APIVersion:"v1", ResourceVersion:"614", FieldPath:""}): type: 'Normal' reason: 'RELOAD' NGINX reload triggered due to a change in configuration
W1110 13:33:41.408929 11 controller.go:1232] Service "default/foo-service" does not have any active Endpoint.
W1110 13:33:41.408991 11 endpointslices.go:86] Error obtaining Endpoints for Service "default/bar-service": no object matching key "default/bar-service" in local store
W1110 13:33:41.409020 11 controller.go:1232] Service "default/bar-service" does not have any active Endpoint.
I1110 13:33:41.410437 11 main.go:107] "successfully validated configuration, accepting" ingress="default/example-ingress"
I1110 13:33:41.420947 11 store.go:443] "Found valid IngressClass" ingress="default/example-ingress" ingressclass="_"
I1110 13:33:41.421268 11 event.go:377] Event(v1.ObjectReference{Kind:"Ingress", Namespace:"default", Name:"example-ingress", UID:"1770e321-2efa-4387-a0cd-077d24a139c3", APIVersion:"networking.k8s.io/v1", ResourceVersion:"1553", FieldPath:""}): type: 'Normal' reason: 'Sync' Scheduled for sync
W1110 13:33:44.693787 11 controller.go:1232] Service "default/foo-service" does not have any active Endpoint.
W1110 13:33:44.693866 11 controller.go:1232] Service "default/bar-service" does not have any active Endpoint.
I1110 13:33:44.696050 11 controller.go:214] "Configuration changes detected, backend reload required"
I1110 13:33:44.750686 11 controller.go:228] "Backend successfully reloaded"
I1110 13:33:44.751997 11 event.go:377] Event(v1.ObjectReference{Kind:"Pod", Namespace:"ingress-nginx", Name:"ingress-nginx-controller-76f7f87994-86zs6", UID:"de86a426-9561-4eee-802b-8d07f60a3bad", APIVersion:"v1", ResourceVersion:"614", FieldPath:""}): type: 'Normal' reason: 'RELOAD' NGINX reload triggered due to a change in configuration
W1110 13:33:48.027234 11 controller.go:1232] Service "default/foo-service" does not have any active Endpoint.
W1110 13:33:48.027322 11 controller.go:1232] Service "default/bar-service" does not have any active Endpoint.
W1110 13:33:53.313949 11 controller.go:1232] Service "default/bar-service" does not have any active Endpoint.
^C(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# curl localhost/foo/hostname
foo-app
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# curl localhost/bar/hostname
bar-app
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kubectl exec -it foo-app -- curl localhost:8080/foo/hostname
foo-app
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kubectl exec -it foo-app -- curl localhost:8080/hostname
foo-app
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~#
```
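The requests above are routed by the default/example-ingress Ingress, whose manifest isn't shown in these notes. Assuming it uses pathType: Prefix rules for /foo and /bar, Kubernetes matches Prefix paths element by element on /-separated segments and the longest matching rule wins, so /foo/hostname matches /foo while /foobar does not. The sketch reimplements that matching rule with hypothetical rules; if no rewrite annotation is set, the backend receives the full original path (/foo/hostname).

```python
# Sketch of Kubernetes Ingress pathType: Prefix matching (rules are hypothetical).
from typing import Optional


def split_elements(path: str) -> list:
    """Split a URL path into its non-empty '/'-separated elements."""
    return [part for part in path.split("/") if part]


def prefix_matches(rule_path: str, request_path: str) -> bool:
    """True if rule_path is an element-wise prefix of request_path."""
    rule, request = split_elements(rule_path), split_elements(request_path)
    return request[: len(rule)] == rule


def route(rules: dict, request_path: str) -> Optional[str]:
    """Backend of the longest matching Prefix rule, or None (default backend, 404)."""
    candidates = [p for p in rules if prefix_matches(p, request_path)]
    if not candidates:
        return None
    longest = max(candidates, key=lambda p: len(split_elements(p)))
    return rules[longest]


# Hypothetical rules mirroring example-ingress.
rules = {"/foo": "foo-service:8080", "/bar": "bar-service:8080"}

if __name__ == "__main__":
    for path in ["/foo/hostname", "/bar/hostname", "/foobar", "/"]:
        print(path, "->", route(rules, path))
```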

```
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kubectl get ingress myweb
NAME CLASS HOSTS ADDRESS PORTS AGE
myweb <none> webv1.myweb.com,webv2.myweb.com localhost 80 5s
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kubectl describe ingress myweb
Name: myweb
Labels: <none>
Namespace: default
Address: localhost
Ingress Class: <none>
Default backend: <default>
Rules:
  Host Path Backends
  ---- ---- --------
  webv1.myweb.com
    / webv1:8080 (10.244.0.12:8080,10.244.0.13:8080)
  webv2.myweb.com
    / webv2:8080 (10.244.0.15:8080,10.244.0.14:8080)
Annotations: <none>
Events:
  Type Reason Age From Message
  ---- ------ ---- ---- -------
  Normal Sync 10s (x2 over 12s) nginx-ingress-controller Scheduled for sync
```
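Unlike example-ingress, myweb routes by host name: the controller picks the rule whose host equals the request's Host header, and a request matching no rule falls through to the default backend (404 in ingress-nginx). The sketch models that lookup with the hosts and endpoints from the describe output above; the function names are hypothetical. It also shows why curl http://webv1.myweb.com only reaches the controller when that name resolves to it (for example through an /etc/hosts entry); curl -H 'Host: webv1.myweb.com' http://localhost/ sends the same Host header without any DNS setup.

```python
# Sketch of name-based virtual hosting as done by an Ingress controller:
# the Host header selects the rule; no match -> default backend (404).
from typing import Optional

# Hosts and endpoints copied from `kubectl describe ingress myweb`.
HOST_RULES = {
    "webv1.myweb.com": ["10.244.0.12:8080", "10.244.0.13:8080"],
    "webv2.myweb.com": ["10.244.0.15:8080", "10.244.0.14:8080"],
}


def select_endpoints(host_header: str) -> Optional[list]:
    """Endpoints for a Host header, ignoring case and an optional :port suffix."""
    host = host_header.split(":", 1)[0].lower()
    return HOST_RULES.get(host)


def handle(host_header: str) -> str:
    endpoints = select_endpoints(host_header)
    if endpoints is None:
        return "404 Not Found (default backend)"
    return f"proxy to one of {endpoints}"


if __name__ == "__main__":
    print(handle("webv1.myweb.com"))
    print(handle("WEBV2.myweb.com:80"))
    print(handle("localhost"))
```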

```
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kubectl logs -n ingress-nginx -l app.kubernetes.io/instance=ingress-nginx -f
I1110 13:34:48.960383 11 controller.go:228] "Backend successfully reloaded"
I1110 13:34:48.963384 11 event.go:377] Event(v1.ObjectReference{Kind:"Pod", Namespace:"ingress-nginx", Name:"ingress-nginx-controller-76f7f87994-86zs6", UID:"de86a426-9561-4eee-802b-8d07f60a3bad", APIVersion:"v1", ResourceVersion:"614", FieldPath:""}): type: 'Normal' reason: 'RELOAD' NGINX reload triggered due to a change in configuration
I1110 13:47:02.478294 11 main.go:107] "successfully validated configuration, accepting" ingress="default/myweb"
I1110 13:47:02.483068 11 store.go:443] "Found valid IngressClass" ingress="default/myweb" ingressclass="_"
I1110 13:47:02.484316 11 event.go:377] Event(v1.ObjectReference{Kind:"Ingress", Namespace:"default", Name:"myweb", UID:"78be8e16-08de-419d-aa24-2c432c3f54c1", APIVersion:"networking.k8s.io/v1", ResourceVersion:"2990", FieldPath:""}): type: 'Normal' reason: 'Sync' Scheduled for sync
I1110 13:47:02.485438 11 controller.go:214] "Configuration changes detected, backend reload required"
I1110 13:47:02.533861 11 controller.go:228] "Backend successfully reloaded"
I1110 13:47:02.534559 11 event.go:377] Event(v1.ObjectReference{Kind:"Pod", Namespace:"ingress-nginx", Name:"ingress-nginx-controller-76f7f87994-86zs6", UID:"de86a426-9561-4eee-802b-8d07f60a3bad", APIVersion:"v1", ResourceVersion:"614", FieldPath:""}): type: 'Normal' reason: 'RELOAD' NGINX reload triggered due to a change in configuration
I1110 13:47:04.281173 11 status.go:311] "updating Ingress status" namespace="default" ingress="myweb" currentValue=null newValue=[{"hostname":"localhost"}]
I1110 13:47:04.296106 11 event.go:377] Event(v1.ObjectReference{Kind:"Ingress", Namespace:"default", Name:"myweb", UID:"78be8e16-08de-419d-aa24-2c432c3f54c1", APIVersion:"networking.k8s.io/v1", ResourceVersion:"2995", FieldPath:""}): type: 'Normal' reason: 'Sync' Scheduled for sync
curl http://webv1.myweb.com
^C(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# curl http://webv1.myweb.com
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# curl http://webv2.myweb.com
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kubectl logs -n ingress-nginx -l app.kubernetes.io/instance=ingress-nginx -f
I1110 13:34:48.960383 11 controller.go:228] "Backend successfully reloaded"
I1110 13:34:48.963384 11 event.go:377] Event(v1.ObjectReference{Kind:"Pod", Namespace:"ingress-nginx", Name:"ingress-nginx-controller-76f7f87994-86zs6", UID:"de86a426-9561-4eee-802b-8d07f60a3bad", APIVersion:"v1", ResourceVersion:"614", FieldPath:""}): type: 'Normal' reason: 'RELOAD' NGINX reload triggered due to a change in configuration
I1110 13:47:02.478294 11 main.go:107] "successfully validated configuration, accepting" ingress="default/myweb"
I1110 13:47:02.483068 11 store.go:443] "Found valid IngressClass" ingress="default/myweb" ingressclass="_"
I1110 13:47:02.484316 11 event.go:377] Event(v1.ObjectReference{Kind:"Ingress", Namespace:"default", Name:"myweb", UID:"78be8e16-08de-419d-aa24-2c432c3f54c1", APIVersion:"networking.k8s.io/v1", ResourceVersion:"2990", FieldPath:""}): type: 'Normal' reason: 'Sync' Scheduled for sync
I1110 13:47:02.485438 11 controller.go:214] "Configuration changes detected, backend reload required"
I1110 13:47:02.533861 11 controller.go:228] "Backend successfully reloaded"
I1110 13:47:02.534559 11 event.go:377] Event(v1.ObjectReference{Kind:"Pod", Namespace:"ingress-nginx", Name:"ingress-nginx-controller-76f7f87994-86zs6", UID:"de86a426-9561-4eee-802b-8d07f60a3bad", APIVersion:"v1", ResourceVersion:"614", FieldPath:""}): type: 'Normal' reason: 'RELOAD' NGINX reload triggered due to a change in configuration
I1110 13:47:04.281173 11 status.go:311] "updating Ingress status" namespace="default" ingress="myweb" currentValue=null newValue=[{"hostname":"localhost"}]
I1110 13:47:04.296106 11 event.go:377] Event(v1.ObjectReference{Kind:"Ingress", Namespace:"default", Name:"myweb", UID:"78be8e16-08de-419d-aa24-2c432c3f54c1", APIVersion:"networking.k8s.io/v1", ResourceVersion:"2995", FieldPath:""}): type: 'Normal' reason: 'Sync' Scheduled for sync
^C(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kind delete cluster --name myk8s
Deleting cluster "myk8s" ...
Deleted nodes: ["myk8s-control-plane"]
(⎈|N/A:N/A) root@DESKTOP-8S932ET:~#
```

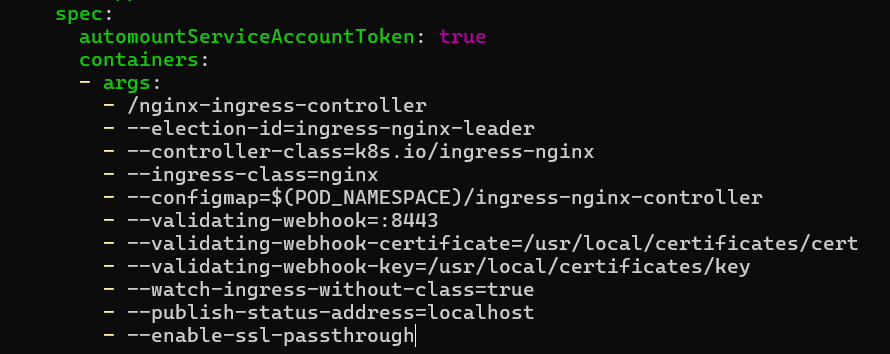

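SSL Passthrough reference: https://kubernetes.github.io/ingress-nginx/user-guide/tls/#ssl-passthrough (the feature is disabled by default; the controller must be started with --enable-ssl-passthrough for the nginx.ingress.kubernetes.io/ssl-passthrough annotation to take effect)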

How TLS works

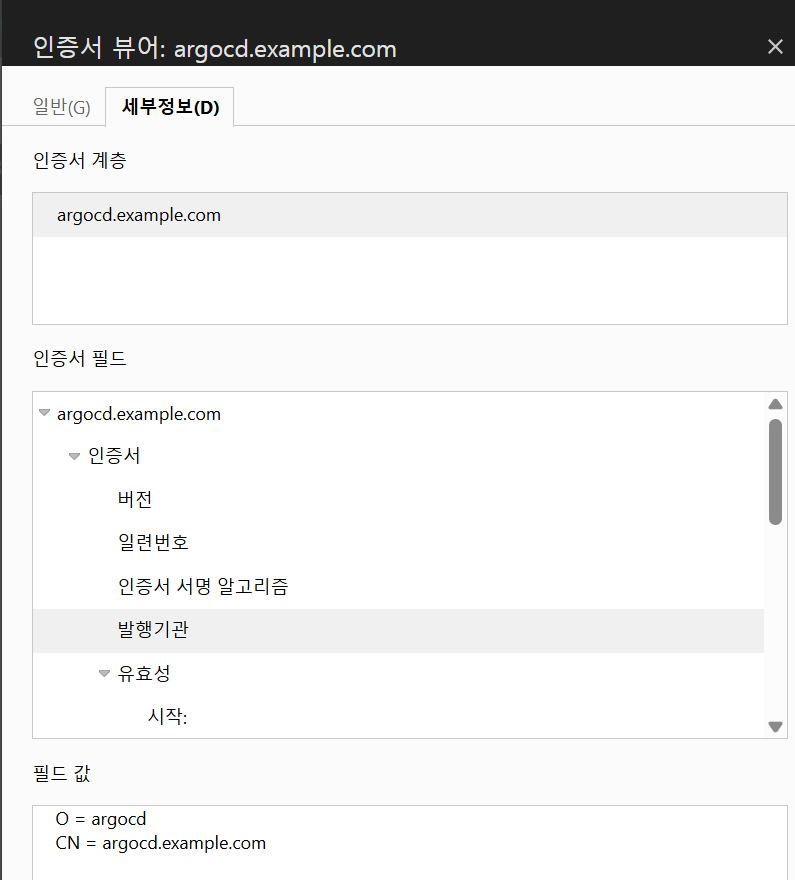

```bash
openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
  -keyout argocd.example.com.key \
  -out argocd.example.com.crt \
  -subj "/CN=argocd.example.com/O=argocd"

ls -l argocd.example.com.*

openssl x509 -noout -text -in argocd.example.com.crt

kubectl create ns argocd

# Create the TLS secret (the key/crt files must exist locally)
kubectl -n argocd create secret tls argocd-server-tls \
  --cert=argocd.example.com.crt \
  --key=argocd.example.com.key

kubectl get secret -n argocd
NAME                TYPE                DATA   AGE
argocd-server-tls   kubernetes.io/tls   2      7s
```
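DATA 2 reflects that a kubernetes.io/tls Secret holds exactly two keys, tls.crt and tls.key, each base64-encoded under data. The sketch builds roughly the manifest that kubectl create secret tls produces from PEM text; the PEM bodies are placeholders, not real key material, and the helper names are hypothetical.

```python
# Sketch: the shape of a kubernetes.io/tls Secret, built from PEM strings.
import base64
import json


def _b64(text: str) -> str:
    """Base64-encode UTF-8 text the way Secret .data values are stored."""
    return base64.b64encode(text.encode()).decode()


def tls_secret(name: str, namespace: str, cert_pem: str, key_pem: str) -> dict:
    """Return a Secret manifest with base64-encoded tls.crt / tls.key entries."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/tls",
        "data": {"tls.crt": _b64(cert_pem), "tls.key": _b64(key_pem)},
    }


# Placeholder PEM bodies, NOT a real certificate or key.
CERT = "-----BEGIN CERTIFICATE-----\nPLACEHOLDER\n-----END CERTIFICATE-----\n"
KEY = "-----BEGIN PRIVATE KEY-----\nPLACEHOLDER\n-----END PRIVATE KEY-----\n"

if __name__ == "__main__":
    secret = tls_secret("argocd-server-tls", "argocd", CERT, KEY)
    print(json.dumps(secret, indent=2))
    print("DATA:", len(secret["data"]))  # 2
```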

```bash
cat <<EOF > argocd-values.yaml
global:
  domain: argocd.example.com

server:
  ingress:
    enabled: true
    ingressClassName: nginx
    annotations:
      nginx.ingress.kubernetes.io/force-ssl-redirect: "true"
      nginx.ingress.kubernetes.io/ssl-passthrough: "true"
    tls: true
EOF

helm repo add argo https://argoproj.github.io/argo-helm
helm install argocd argo/argo-cd --version 9.0.5 -f argocd-values.yaml --namespace argocd

kubectl get ingress -n argocd argocd-server
NAME            CLASS   HOSTS                ADDRESS     PORTS     AGE
argocd-server   nginx   argocd.example.com   localhost   80, 443   22h
```

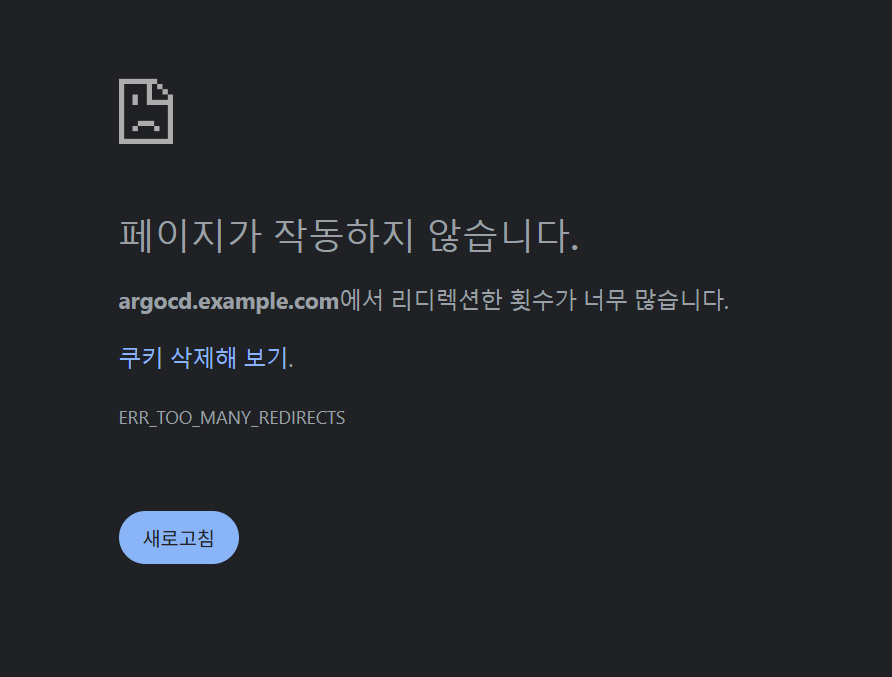

```
## Open C:\Windows\System32\drivers\etc\hosts in Notepad (run as Administrator) and add:
127.0.0.1 argocd.example.com
```

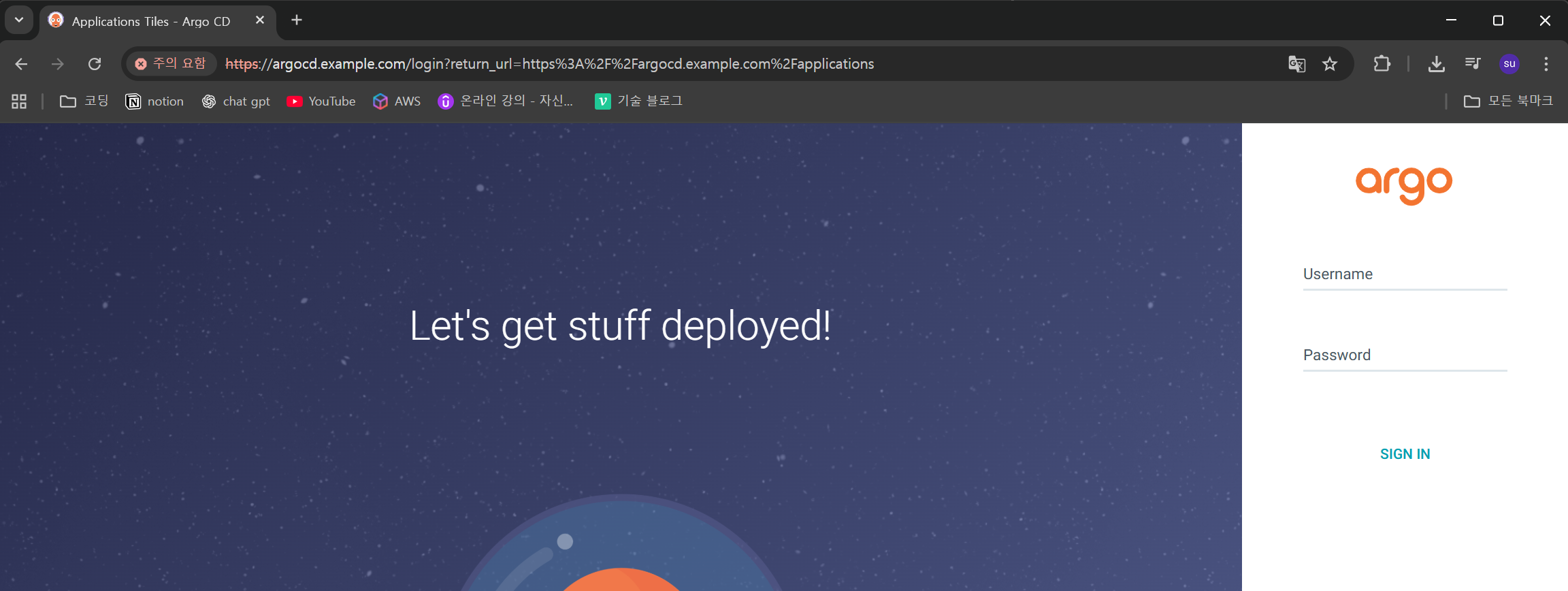

```bash
# Check the initial admin password
kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d ;echo
38L2ZmXne7jIaRSZ
```
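Secret values are stored base64-encoded under .data, which is why the command pipes the jsonpath result through base64 -d. The same decoding in Python, using a made-up Secret JSON whose encoded value is an illustrative placeholder (not the real password); read_secret_value is a hypothetical helper.

```python
# Sketch: what `-o jsonpath="{.data.password}" | base64 -d` does.
import base64
import json

# Hypothetical `kubectl get secret ... -o json` output (placeholder value).
secret_json = """
{
  "apiVersion": "v1",
  "kind": "Secret",
  "metadata": {"name": "argocd-initial-admin-secret", "namespace": "argocd"},
  "type": "Opaque",
  "data": {"password": "ZXhhbXBsZS1wYXNzd29yZA=="}
}
"""


def read_secret_value(raw: str, key: str) -> str:
    """Pick .data[key] from a Secret JSON document and base64-decode it."""
    secret = json.loads(raw)
    return base64.b64decode(secret["data"][key]).decode("utf-8")


if __name__ == "__main__":
    print(read_secret_value(secret_json, "password"))  # example-password
```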

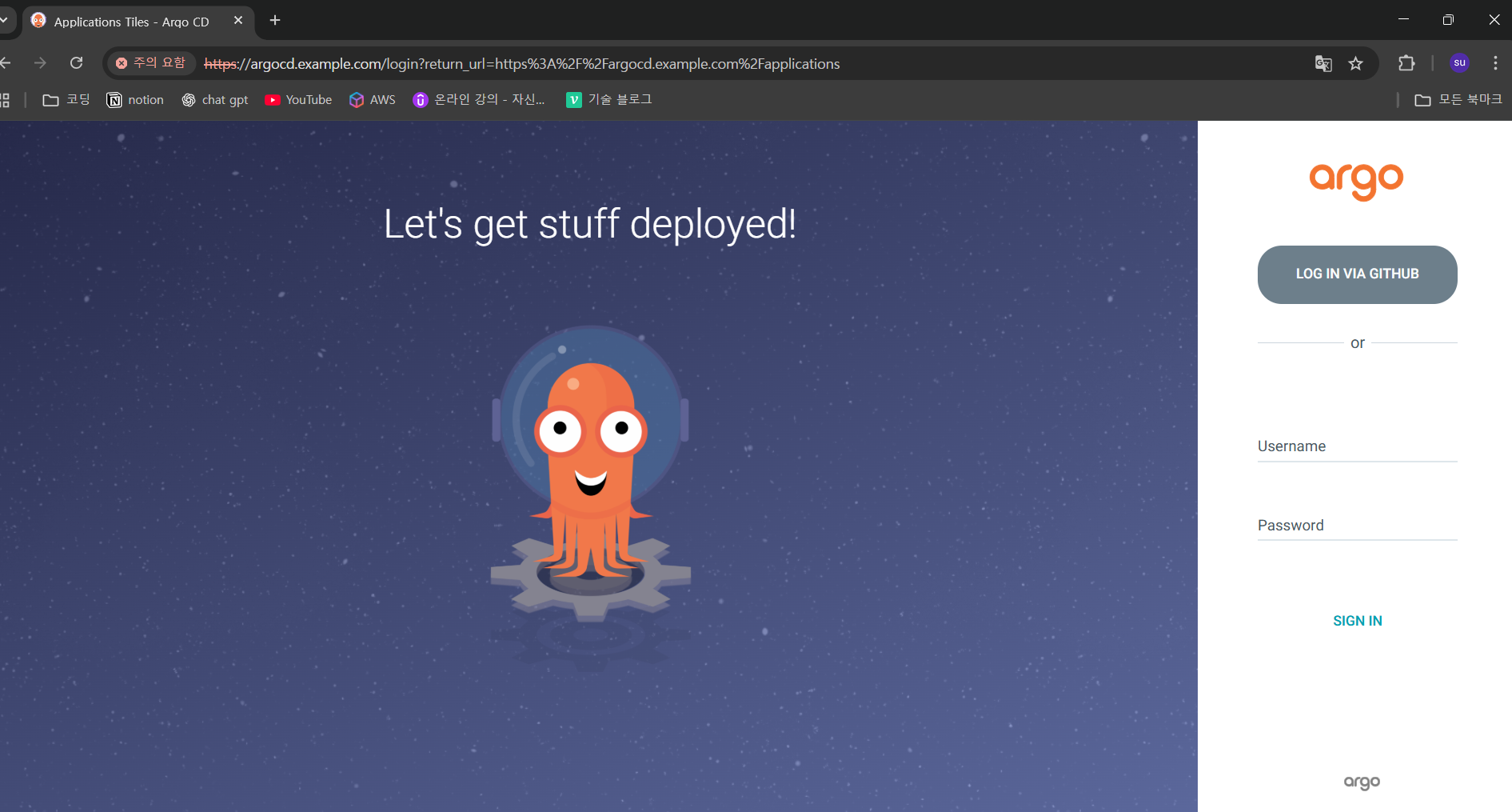

```bash
# Open the Argo CD web UI and log in as admin with the initial password
open "http://argocd.example.com"
open "https://argocd.example.com"
```

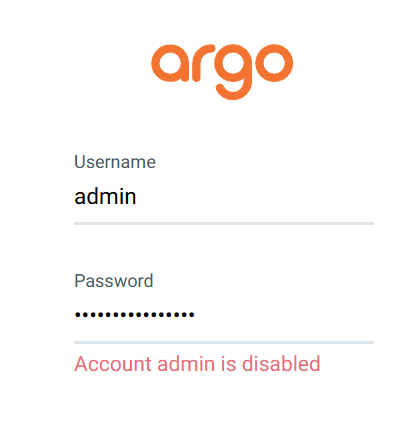

Access control

Installing Argo CD creates one default user (admin) with administrator privileges.

- **kubectl get secret -n argocd argocd-initial-admin-secret --context kind-myk8s -o jsonpath='{.data.password}' | base64 -d**: shows the initial password.
- **argocd account update-password**: changes the password.

RBAC

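RBAC (Role-Based Access Control) controls access to resources based on roles: what a user may do is determined by the roles (Role) assigned to them and the permissions (Permission) those roles carry.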

- Role: a set of permissions for performing specific tasks, for example admin, editor, or viewer.
- User: a subject that accesses the system; each user is assigned one or more roles.
- Permission: defines which actions a user may perform on a specific resource, for example read, write, or delete.
- Resource: a system object that users access and manipulate, for example a database table, a server, or a specific feature of an application.
- After configuring SSO or local users, define additional RBAC roles and map SSO groups or local users to those roles.
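Argo CD expresses these mappings as CSV policy lines in the argocd-rbac-cm ConfigMap: p, subject, resource, action, object, effect for permissions, and g, user-or-group, role for role assignment. The sketch is a simplified, hypothetical evaluator for that format: it expands (possibly nested) role membership, matches * globs with fnmatchcase, and lets an explicit deny override allow. Argo CD itself evaluates policies with Casbin, so treat this as an illustration of the model, not its implementation; the subjects suha and my-org:devops and the roles role:viewer and role:editor are hypothetical.

```python
# Simplified RBAC evaluator for Argo CD-style policy CSV (illustration only).
from fnmatch import fnmatchcase

POLICY_CSV = """
p, role:viewer, applications, get, */*, allow
p, role:editor, applications, sync, default/*, allow
p, role:editor, applications, sync, default/prod-*, deny
g, role:editor, role:viewer
g, suha, role:editor
g, my-org:devops, role:viewer
"""


def parse_policy(csv_text: str):
    """Split policy lines into permission rules (p) and role grants (g)."""
    rules, grants = [], {}
    for line in csv_text.strip().splitlines():
        fields = [f.strip() for f in line.split(",")]
        if fields[0] == "p":
            rules.append(tuple(fields[1:6]))  # (subject, resource, action, object, effect)
        elif fields[0] == "g":
            grants.setdefault(fields[1], set()).add(fields[2])
    return rules, grants


def subjects_for(user: str, grants: dict) -> set:
    """The user plus every role reachable through g lines."""
    seen, stack = {user}, [user]
    while stack:
        for role in grants.get(stack.pop(), ()):
            if role not in seen:
                seen.add(role)
                stack.append(role)
    return seen


def enforce(user, resource, action, obj, rules, grants) -> bool:
    """Allowed if some matching rule allows it and no matching rule denies it."""
    subjects = subjects_for(user, grants)
    effects = {
        effect
        for subj, res, act, pattern, effect in rules
        if subj in subjects
        and fnmatchcase(resource, res)
        and fnmatchcase(action, act)
        and fnmatchcase(obj, pattern)
    }
    return "allow" in effects and "deny" not in effects


if __name__ == "__main__":
    rules, grants = parse_policy(POLICY_CSV)
    print(enforce("suha", "applications", "sync", "default/web", rules, grants))       # True
    print(enforce("suha", "applications", "sync", "default/prod-api", rules, grants))  # False
    print(enforce("my-org:devops", "applications", "get", "default/web", rules, grants))  # True
```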

Argo CD Vault Plugin

[ArgoCD] Applying the Vault Plugin as a sidecar in Argo CD v2.8 and later

```bash
helm repo add hashicorp https://helm.releases.hashicorp.com

vi vault-server-values.yaml
```

```yaml
---
server:
  dev:
    enabled: true
    devRootToken: "root"
  logLevel: debug

injector:
  enabled: "false"
```

```bash
helm install vault hashicorp/vault -n vault --create-namespace --values vault-server-values.yaml
```

Value configuration

```bash
# Open a shell in the Vault pod
kubectl exec -n vault vault-0 -it -- sh

# enable kv-v2 engine in Vault
vault secrets enable kv-v2

# create kv-v2 secret with two keys
vault kv put kv-v2/demo user="secret_user" password="secret_password"

# create policy to enable reading above secret
vault policy write demo - <<EOF
path "kv-v2/data/demo" {
  capabilities = ["read"]
}
EOF
```
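The policy grants kv-v2/data/demo rather than kv-v2/demo because the KV version 2 engine serves secret data under a data/ prefix in its HTTP API (and version metadata under metadata/), while vault kv commands add that prefix for you. The sketch maps a CLI path to the API path and checks capabilities against a simplified policy table; the helper names are hypothetical, the mount is assumed to be the first path segment, and real Vault policy globs (* and +) are not modeled.

```python
# Sketch: KV v2 CLI path -> API path, then an exact-path policy check (simplified).


def kv2_api_path(cli_path: str, kind: str = "data") -> str:
    """Map e.g. 'kv-v2/demo' to 'kv-v2/data/demo' (mount assumed to be one segment)."""
    mount, _, secret_path = cli_path.strip("/").partition("/")
    return f"{mount}/{kind}/{secret_path}"


# Policy "demo" from above, reduced to path -> capabilities.
DEMO_POLICY = {"kv-v2/data/demo": {"read"}}


def allowed(policy: dict, api_path: str, capability: str) -> bool:
    """Exact-path lookup only; real Vault policies also support '*' and '+' globs."""
    return capability in policy.get(api_path, set())


if __name__ == "__main__":
    path = kv2_api_path("kv-v2/demo")
    print(path)                                  # kv-v2/data/demo
    print(allowed(DEMO_POLICY, path, "read"))    # True
    print(allowed(DEMO_POLICY, path, "update"))  # False
    print(allowed(DEMO_POLICY, kv2_api_path("kv-v2/demo", "metadata"), "read"))  # False
```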

```
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# cat argocd-valut-plugin-credentials.yaml
kind: Secret
apiVersion: v1
metadata:
  name: argocd-vault-plugin-credentials
  namespace: argocd
type: Opaque
stringData:
  AVP_AUTH_TYPE: "k8s"
  AVP_K8S_ROLE: "argocd"
  AVP_TYPE: "vault"
  VAULT_ADDR: "http://vault.vault:8200"
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kubectl apply -f argocd-valut-plugin-credentials.yaml
secret/argocd-vault-plugin-credentials created
```
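With AVP_AUTH_TYPE set to k8s, the plugin is expected to authenticate through Vault's Kubernetes auth method: it posts its pod's service-account JWT together with the role name from AVP_K8S_ROLE to the login endpoint (auth/kubernetes/login under the default mount) and receives a Vault token in return. This assumes Vault's Kubernetes auth method is enabled and has a role named argocd bound to the service account the plugin runs under, a setup step these notes don't show. The sketch only builds the request and sends nothing; build_k8s_login is a hypothetical helper, and the token path is the standard in-pod location.

```python
# Sketch of the Vault Kubernetes-auth login request (built, not sent).
import json
from pathlib import Path

SA_TOKEN_PATH = Path("/var/run/secrets/kubernetes.io/serviceaccount/token")


def build_k8s_login(vault_addr: str, role: str, jwt: str, mount: str = "auth/kubernetes"):
    """Return (url, json_body) for Vault's Kubernetes auth login endpoint."""
    url = f"{vault_addr.rstrip('/')}/v1/{mount.strip('/')}/login"
    body = json.dumps({"role": role, "jwt": jwt})
    return url, body


if __name__ == "__main__":
    # Inside a pod the JWT comes from SA_TOKEN_PATH; use a placeholder elsewhere.
    jwt = SA_TOKEN_PATH.read_text().strip() if SA_TOKEN_PATH.exists() else "<service-account-jwt>"
    url, body = build_k8s_login("http://vault.vault:8200", "argocd", jwt)
    print("POST", url)  # POST http://vault.vault:8200/v1/auth/kubernetes/login
    print(body)
```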

```
(⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kubectl get secrets -n argocd
NAME TYPE DATA AGE
argocd-initial-admin-secret Opaque 1 24h
argocd-notifications-secret Opaque 0 24h
argocd-redis Opaque 1 24h
argocd-secret Opaque 3 24h
argocd-server-tls kubernetes.io/tls 2 24h
argocd-vault-plugin-credentials Opaque 4 9s
sh.helm.release.v1.argocd.v1 helm.sh/release.v1 1 24h
```

Installing the Vault Plugin

Installation via a sidecar container

SSO

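SSO (Single Sign-On) lets a user log in once and then be authenticated automatically to multiple services without logging in again.

It removes the need to type an ID and password every time a user moves between systems, and it greatly improves corporate security and the efficiency of account management.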

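| Component | Role |
|---|---|
| Identity Provider (IdP) | Authenticates the user. E.g., Google Workspace, Azure AD, Keycloak, Okta |
| Service Provider (SP) | The application that requires authentication. E.g., GitLab, AWS, Zadara, OpenSearch |
| Token | Proof of authentication issued by the IdP: SAML assertion, OIDC ID token (JWT), OAuth2 token, etc. |
| SSO Session | Login session kept by the IdP; while it exists, the user is authenticated without logging in again |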
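A toy model of the table above: the IdP keeps one SSO session per browser, so only the first Service Provider triggers a credential prompt and later ones get a token straight from the existing session. All class and method names are hypothetical, and the browser dict stands in for the IdP's session cookie; real IdPs exchange signed SAML assertions or OIDC tokens over browser redirects.

```python
# Toy model of SSO: one IdP session, many Service Providers (names hypothetical).
import secrets


class IdentityProvider:
    def __init__(self, users: dict):
        self.users = users           # username -> password
        self.sessions = {}           # session id -> username
        self.prompts = 0             # how many times credentials were requested

    def authenticate(self, session_id, username=None, password=None):
        """Return (session_id, token); reuse an existing SSO session if there is one."""
        if session_id in self.sessions:
            user = self.sessions[session_id]
        else:
            self.prompts += 1
            if self.users.get(username) != password:
                raise PermissionError("invalid credentials")
            user = username
            session_id = secrets.token_hex(8)
            self.sessions[session_id] = user
        return session_id, f"token-for-{user}"


class ServiceProvider:
    def __init__(self, name: str, idp: IdentityProvider):
        self.name, self.idp = name, idp

    def login(self, browser: dict, username=None, password=None) -> str:
        """Delegate authentication to the IdP and remember its session in the browser."""
        session_id, token = self.idp.authenticate(browser.get("idp_session"), username, password)
        browser["idp_session"] = session_id
        return f"{self.name}: logged in with {token}"


if __name__ == "__main__":
    idp = IdentityProvider({"alice": "pw"})
    browser = {}
    print(ServiceProvider("GitLab", idp).login(browser, "alice", "pw"))
    print(ServiceProvider("OpenSearch", idp).login(browser))  # no credentials needed
    print("credential prompts:", idp.prompts)                 # 1
```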

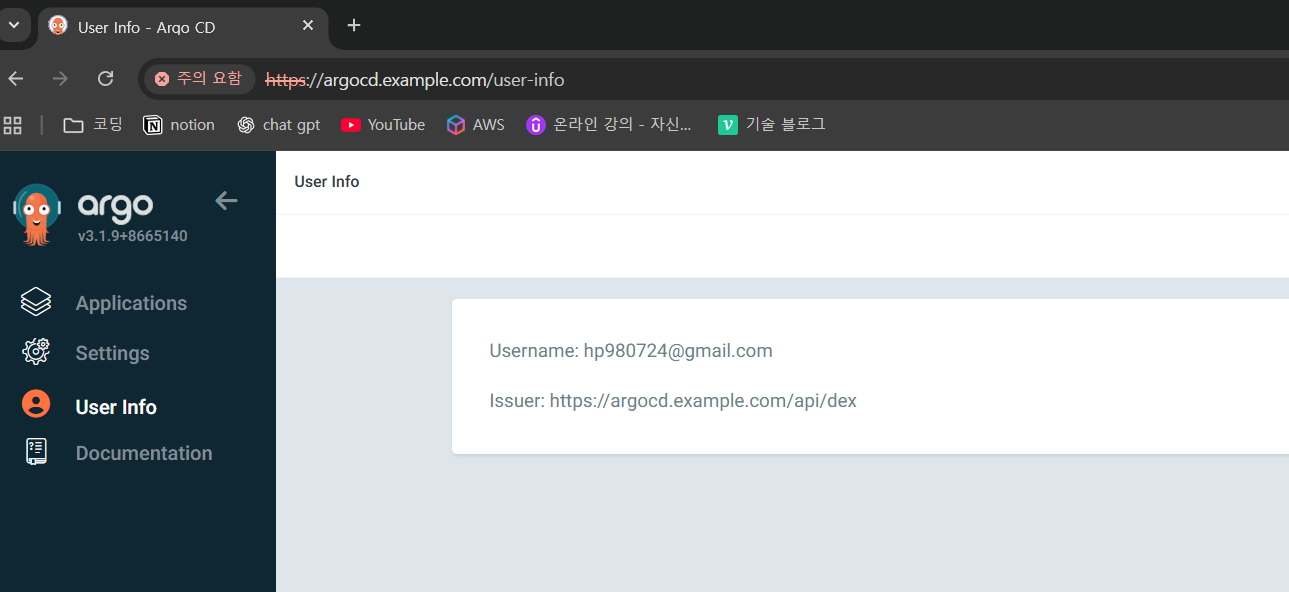

Hands-on: authentication with Argo CD Dex (GitHub, LDAP, etc.)

Overview - Argo CD - Declarative GitOps CD for Kubernetes

Dex: an open-source identity service that provides OIDC (OpenID Connect) authentication

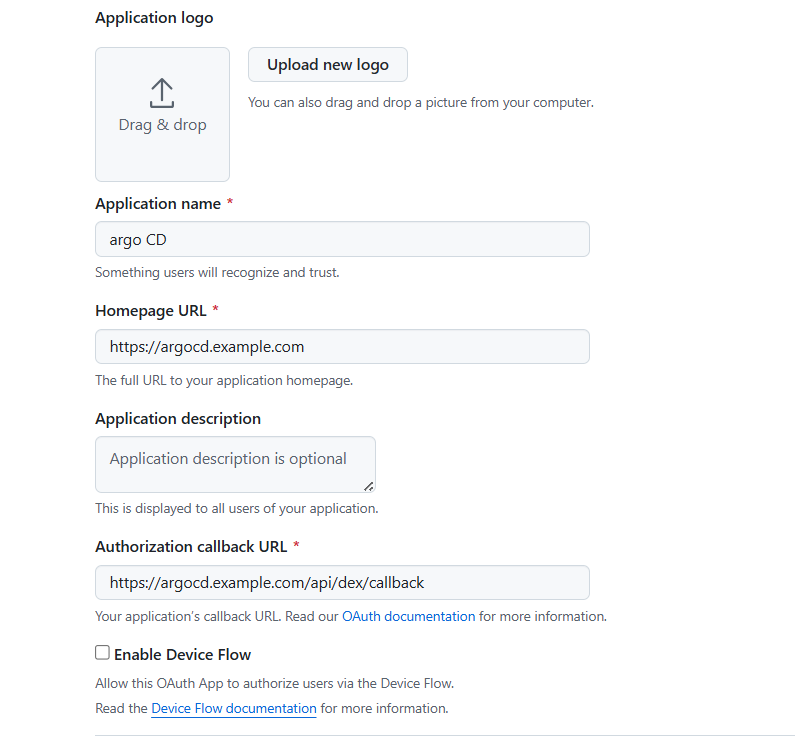

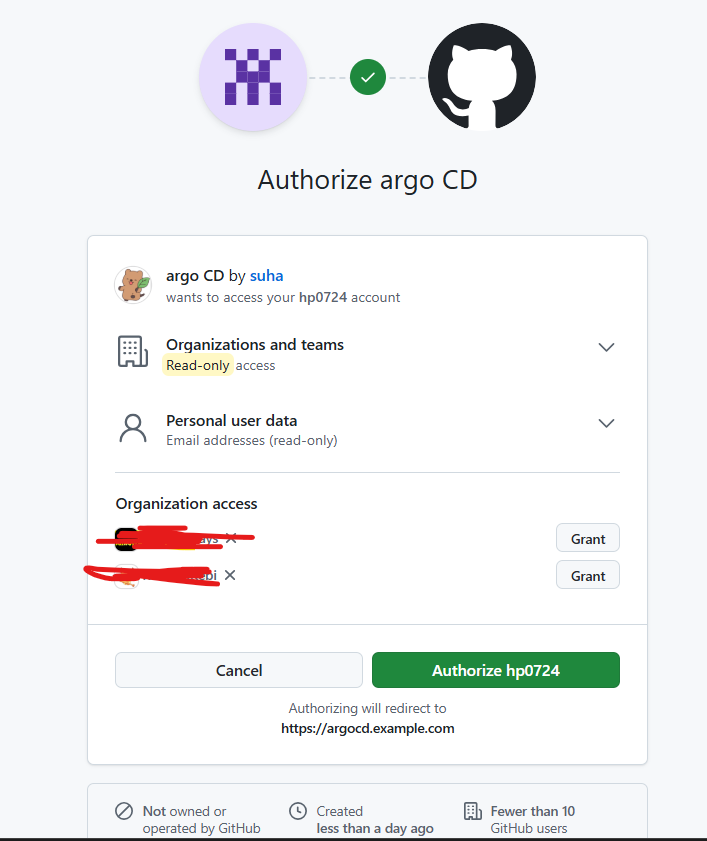

Registering a GitHub OAuth App

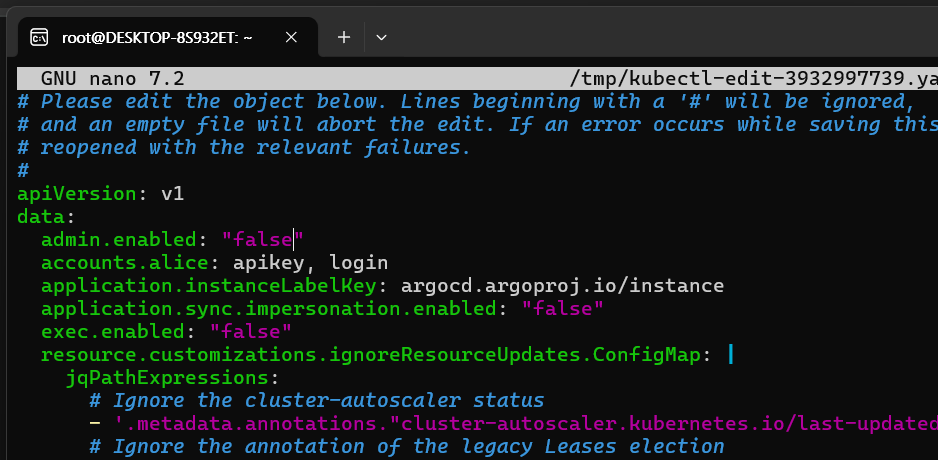
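When registering the OAuth App, Argo CD's documentation uses https://argocd.example.com/api/dex/callback as the Authorization callback URL. At login time, Dex redirects the browser to GitHub's authorize endpoint with that callback; the sketch builds such an authorization-code request URL to show which parameters are involved. The client ID is the one in the argocd-cm dex.config; the scope and state values are illustrative assumptions, not necessarily what Dex sends, and authorize_url is a hypothetical helper.

```python
# Sketch: an OAuth 2.0 authorization-code request to GitHub (illustrative values).
import secrets
from urllib.parse import urlencode, urlparse, parse_qs

GITHUB_AUTHORIZE = "https://github.com/login/oauth/authorize"


def authorize_url(client_id: str, redirect_uri: str, scope: str, state: str) -> str:
    """Build the URL the browser is redirected to for user consent."""
    query = urlencode({
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": scope,
        "state": state,            # anti-CSRF value that must come back unchanged
        "response_type": "code",   # authorization-code flow
    })
    return f"{GITHUB_AUTHORIZE}?{query}"


if __name__ == "__main__":
    url = authorize_url(
        client_id="Ov23ligYJX5qbBTvibvg",
        redirect_uri="https://argocd.example.com/api/dex/callback",
        scope="user:email read:org",   # assumed scopes
        state=secrets.token_urlsafe(16),
    )
    print(url)
    print(parse_qs(urlparse(url).query)["redirect_uri"])
```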

argocd-cm configmap

```yaml
apiVersion: v1
data:
  accounts.suha: apikey, login
  accounts.suha.enabled: "false"
  admin.enabled: "true"
  application.instanceLabelKey: argocd.argoproj.io/instance
  application.sync.impersonation.enabled: "false"
  dex.config: |
    connectors:
      - type: github
        id: github
        name: GitHub
        config:
          clientID: "Ov23ligYJX5qbBTvibvg"
          clientSecret: "clientSecret"
  exec.enabled: "false"
  resource.customizations.ignoreResourceUpdates.ConfigMap: |
    jqPathExpressions:
      # Ignore the cluster-autoscaler status
      - '.metadata.annotations."cluster-autoscaler.kubernetes.io/last-updated"'
      # Ignore the annotation of the legacy Leases election
      - '.metadata.annotations."control-plane.alpha.kubernetes.io/leader"'
  resource.customizations.ignoreResourceUpdates.Endpoints: |
    jsonPointers:
      - /metadata
      - /subsets
  resource.customizations.ignoreResourceUpdates.all: |
    jsonPointers:
      - /status
  resource.customizations.ignoreResourceUpdates.apps_ReplicaSet: |
    jqPathExpressions:
      - '.metadata.annotations."deployment.kubernetes.io/desired-replicas"'
      - '.metadata.annotations."deployment.kubernetes.io/max-replicas"'
      - '.metadata.annotations."rollout.argoproj.io/desired-replicas"'
  resource.customizations.ignoreResourceUpdates.argoproj.io_Application: |
    jqPathExpressions:
      - '.metadata.annotations."notified.notifications.argoproj.io"'
      - '.metadata.annotations."argocd.argoproj.io/refresh"'
      - '.metadata.annotations."argocd.argoproj.io/hydrate"'
      - '.operation'
  resource.customizations.ignoreResourceUpdates.argoproj.io_Rollout: |
    jqPathExpressions:
      - '.metadata.annotations."notified.notifications.argoproj.io"'
  resource.customizations.ignoreResourceUpdates.autoscaling_HorizontalPodAutoscaler: |
    jqPathExpressions:
      - '.metadata.annotations."autoscaling.alpha.kubernetes.io/behavior"'
      - '.metadata.annotations."autoscaling.alpha.kubernetes.io/conditions"'
      - '.metadata.annotations."autoscaling.alpha.kubernetes.io/metrics"'
      - '.metadata.annotations."autoscaling.alpha.kubernetes.io/current-metrics"'
  resource.customizations.ignoreResourceUpdates.discovery.k8s.io_EndpointSlice: |
    jsonPointers:
      - /metadata
      - /endpoints
      - /ports
  resource.exclusions: |
    ### Network resources created by the Kubernetes control plane and excluded to reduce the number of watched events and UI clutter
    - apiGroups:
      - ''
      - discovery.k8s.io
      kinds:
      - Endpoints
      - EndpointSlice
    ### Internal Kubernetes resources excluded reduce the number of watched events
    - apiGroups:
      - coordination.k8s.io
      kinds:
      - Lease
    ### Internal Kubernetes Authz/Authn resources excluded reduce the number of watched events
    - apiGroups:
      - authentication.k8s.io
      - authorization.k8s.io
      kinds:
      - SelfSubjectReview
      - TokenReview
      - LocalSubjectAccessReview
      - SelfSubjectAccessReview
      - SelfSubjectRulesReview
      - SubjectAccessReview
    ### Intermediate Certificate Request excluded reduce the number of watched events
    - apiGroups:
      - certificates.k8s.io
      kinds:
      - CertificateSigningRequest
    - apiGroups:
      - cert-manager.io
      kinds:
      - CertificateRequest
    ### Cilium internal resources excluded reduce the number of watched events and UI Clutter
    - apiGroups:
      - cilium.io
      kinds:
      - CiliumIdentity
      - CiliumEndpoint
      - CiliumEndpointSlice
    ### Kyverno intermediate and reporting resources excluded reduce the number of watched events and improve performance
    - apiGroups:
      - kyverno.io
      - reports.kyverno.io
      - wgpolicyk8s.io
      kinds:
      - PolicyReport
      - ClusterPolicyReport
      - EphemeralReport
      - ClusterEphemeralReport
      - AdmissionReport
      - ClusterAdmissionReport
      - BackgroundScanReport
      - ClusterBackgroundScanReport
      - UpdateRequest
  server.rbac.log.enforce.enable: "false"
  statusbadge.enabled: "false"
  timeout.hard.reconciliation: 0s
  timeout.reconciliation: 180s
  url: https://argocd.example.com
kind: ConfigMap
metadata:
  annotations:
    meta.helm.sh/release-name: argocd
    meta.helm.sh/release-namespace: argocd
  creationTimestamp: "2025-11-10T14:18:37Z"
  labels:
    app.kubernetes.io/component: server
    app.kubernetes.io/instance: argocd
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/name: argocd-cm
    app.kubernetes.io/part-of: argocd
    app.kubernetes.io/version: v3.1.9
    helm.sh/chart: argo-cd-9.0.5
  name: argocd-cm
  namespace: argocd
  resourceVersion: "27802"
  uid: 781ae10b-d06e-49c0-be84-dc0664391caf
```

SSO

When adopting SSO, authentication and authorization have to be treated as clearly separate concerns.

Our company currently runs email on Google, so user authentication can be handled through Google SSO.

Authorization (what a logged-in user is allowed to do), however, varies by department, job role, whether the user belongs to a partner company, and so on.

Three main SSO protocols

SAML

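SAML is an XML-based standard for exchanging authentication and authorization data between an identity provider and a service provider.

SAML produces an XML document called an Assertion, which the identity provider digitally signs (and can optionally encrypt). The assertion includes the identity provider's name (Issuer), the time it was issued, and its validity period (expiry).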
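A minimal sketch of the fields a service provider reads from an assertion: the Issuer, the subject's NameID, the IssueInstant, and the Conditions validity window (NotBefore / NotOnOrAfter). The XML below is a hand-written, unsigned example with made-up values; a real SP must verify the XML signature before trusting anything in it, which this sketch deliberately skips.

```python
# Sketch: read Issuer and validity window from a hand-written, unsigned SAML 2.0 assertion.
import xml.etree.ElementTree as ET
from datetime import datetime, timezone

NS = {"saml": "urn:oasis:names:tc:SAML:2.0:assertion"}

ASSERTION = """
<saml:Assertion xmlns:saml="urn:oasis:names:tc:SAML:2.0:assertion"
                ID="_example" Version="2.0" IssueInstant="2025-11-10T14:00:00Z">
  <saml:Issuer>https://idp.example.com</saml:Issuer>
  <saml:Subject><saml:NameID>alice@example.com</saml:NameID></saml:Subject>
  <saml:Conditions NotBefore="2025-11-10T14:00:00Z" NotOnOrAfter="2025-11-10T14:05:00Z"/>
</saml:Assertion>
"""


def parse_time(value: str) -> datetime:
    """Parse the UTC 'Z' timestamps used in this example."""
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def check_assertion(xml_text: str, now: datetime) -> dict:
    """Extract key fields and report whether `now` falls inside the Conditions window."""
    root = ET.fromstring(xml_text.strip())
    cond = root.find("saml:Conditions", NS)
    not_before = parse_time(cond.get("NotBefore"))
    not_on_or_after = parse_time(cond.get("NotOnOrAfter"))
    return {
        "issuer": root.findtext("saml:Issuer", namespaces=NS),
        "subject": root.findtext("saml:Subject/saml:NameID", namespaces=NS),
        "issued_at": parse_time(root.get("IssueInstant")),
        "valid": not_before <= now < not_on_or_after,
    }


if __name__ == "__main__":
    now = datetime(2025, 11, 10, 14, 2, tzinfo=timezone.utc)
    print(check_assertion(ASSERTION, now))
```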

OAuth 2.0

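An open standard protocol for authorization.

With the user's consent, it lets a third-party app access the user's resources without the user handing sensitive information, such as a password, to that app.

For example, a mobile game can be allowed to read your Facebook friends list so you can add those friends in the game.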

OIDC (OpenID Connect)

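An authentication layer built on top of OAuth 2.0, which is itself an authorization protocol.

The access token issued by OAuth only grants permission to access resources; it is not meant to tell the client who the user is.

For authentication, OIDC adds an ID Token: a JWT carrying claims about the authenticated user.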
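An ID token is a JWT: three base64url segments (header, payload, signature) joined by dots, where the payload carries claims such as iss, sub, aud, and exp. The sketch builds an unsigned sample token from made-up claims and decodes its payload. It performs no signature validation, which a relying party must always do against the IdP's published keys before trusting any claim; the helper names are hypothetical.

```python
# Sketch: build and decode an (unsigned, made-up) OIDC ID token to inspect its claims.
import base64
import json


def b64url_encode(data: bytes) -> str:
    """base64url without '=' padding, as used in JWT segments."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def b64url_decode(segment: str) -> bytes:
    """Restore the stripped padding, then decode."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def decode_claims(jwt: str) -> dict:
    """Return the payload claims. NOTE: no signature verification is done here."""
    _header_b64, payload_b64, _signature = jwt.split(".")
    return json.loads(b64url_decode(payload_b64))


# Hypothetical claims an IdP might put in an ID token.
claims = {
    "iss": "https://idp.example.com",
    "sub": "alice",
    "aud": "argo-cd",
    "email": "alice@example.com",
    "groups": ["my-org:devops"],
    "iat": 1762783200,
    "exp": 1762786800,
}
sample = ".".join([
    b64url_encode(json.dumps({"alg": "none", "typ": "JWT"}).encode()),
    b64url_encode(json.dumps(claims).encode()),
    "",  # empty signature: unsigned sample only
])

if __name__ == "__main__":
    print(decode_claims(sample))
```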

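- User authentication is handled through Google Workspace SSO.
- For systems that support SAML or OIDC, Okta or Keycloak can sit in between as a brokering authentication server.
- For systems that support only LDAP, set up a separate LDAP directory; after the user is authenticated, query LDAP to look up their permission information.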

Central directory or permission-management platform

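| Option | Characteristics | Cost | Pros | Cons |
|---|---|---|---|---|
| Google Cloud Directory | Stores user/group information; best suited to Google Workspace integration | Requires a Google Workspace Enterprise plan | Supports Google user integration | Low extensibility, limited customization |
| OpenLDAP | LDAP server that stores users/groups directly | Free | High compatibility with LDAP-based systems | Operational complexity (security, backups, etc.); no SCIM/SSO support |
| Keycloak | Applies permission policies based on user information after authentication | Free, open source | Freely customizable; can integrate with Google SSO | Must be installed and operated in-house; steep initial learning curve |
| Okta | SSO support for a wide range of SaaS apps | Paid; the free plan is functionally limited | Provides security features | Advanced features cost extra |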

Considerations

Introduce MFA for login authentication

- Make MFA mandatory for administrators and critical users

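LDAP structure (when adopting LDAP)

- LDAP is a hierarchical tree, so how users are separated by organization matters.
- Hierarchy-based structure (permissions separated per OU)
- Attribute-based search (department, job role, region, etc.)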
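With an OU-per-organization tree, each user's distinguished name (DN) encodes the hierarchy, and attribute-based lookups are expressed as LDAP search filters. The sketch builds a DN and an AND filter over attributes, escaping filter values as RFC 4515 requires. The base DN dc=example,dc=com, the OU names, and the attribute choices are hypothetical, and DN-value escaping (RFC 4514) is omitted for brevity.

```python
# Sketch: DN construction and RFC 4515 filter escaping for attribute-based LDAP searches.
# Base DN, OUs, and attribute names are hypothetical.

BASE_DN = "dc=example,dc=com"


def user_dn(uid: str, ous: list) -> str:
    """Most specific OU first. RFC 4514 escaping of DN values is omitted (plain values assumed)."""
    return ",".join([f"uid={uid}"] + [f"ou={ou}" for ou in ous] + [BASE_DN])


def escape_filter_value(value: str) -> str:
    """Escape the characters RFC 4515 requires in assertion values: backslash, *, (, ), NUL."""
    out = []
    for ch in value:
        if ch in "\\*()\x00":
            out.append(f"\\{ord(ch):02x}")
        else:
            out.append(ch)
    return "".join(out)


def and_filter(**attrs: str) -> str:
    """Build (&(attr1=value1)(attr2=value2)...) with escaped values."""
    return "(&" + "".join(f"({k}={escape_filter_value(v)})" for k, v in attrs.items()) + ")"


if __name__ == "__main__":
    print(user_dn("alice", ["platform", "engineering"]))
    print(and_filter(departmentNumber="devops", title="SRE (contract)"))
```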

Logging considerations

- Google Workspace login logs
- LDAP authentication and search logs
- Keycloak / Okta token issuance and authorization logs

SSO login policies

- A policy for handling failed logins is needed

Argo Rollouts

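Key features

1. Canary deployment
   - Releases the new version to only a fraction of traffic first, then increases the share after confirming it is stable
   - Analysis can be automated
   - Fine-grained traffic splitting, depending on the service mesh or ingress in use
   - Integrates with Istio, Linkerd, NGINX, AWS ALB, and others
2. Blue-Green deployment
   - Runs the existing version (Blue) and the new version (Green) side by side
   - Switches over automatically or manually
   - Rolls back immediately if the switch fails
3. Automated analysis (AnalysisRuns)
   - Metric-based automatic decisions
   - e.g., Prometheus, Datadog, Kayenta
   - e.g., rising error rate → automatic abort and rollback
4. Progressive Delivery
   - Gradual rollout plus automated quality checks
   - Minimizes risk and maximizes visibility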
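The canary flow with automated analysis can be pictured as a control loop: shift traffic to the next weight step, run an analysis, and abort back to the stable version as soon as a metric breaches its threshold. The sketch simulates that loop; the step weights, the 5% error-rate threshold, the metric function, and the Healthy/Aborted labels are assumptions. In Argo Rollouts the steps are declared under the Rollout's spec.strategy.canary.steps and the checks come from an AnalysisTemplate, so this only illustrates the decision logic.

```python
# Simulation of a canary rollout with per-step analysis (not Argo Rollouts' code).
from typing import Callable

STEPS = [20, 40, 60, 80, 100]   # assumed setWeight values, in percent
ERROR_RATE_LIMIT = 0.05         # assumed analysis threshold


def run_canary(error_rate_at: Callable[[int], float]) -> tuple:
    """Walk the weight steps; abort (canary weight back to 0) on the first failed analysis."""
    history = []
    for weight in STEPS:
        history.append(weight)
        if error_rate_at(weight) > ERROR_RATE_LIMIT:
            history.append(0)   # all traffic back to the stable version
            return "Aborted", history
    return "Healthy", history


if __name__ == "__main__":
    print(run_canary(lambda w: 0.01))                      # ('Healthy', [20, 40, 60, 80, 100])
    print(run_canary(lambda w: 0.02 if w < 60 else 0.08))  # ('Aborted', [20, 40, 60, 0])
```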

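| Component | Description |
|---|---|
| Rollout | Resource that replaces Deployment |
| AnalysisTemplate | Defines metric-based checks |
| AnalysisRun | A running analysis, instantiated from an AnalysisTemplate |
| Experiment | Experimental deployment that compares multiple versions at the same time |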