Basic lab environment setup

Deploying Kubernetes with kind

    kind create cluster --name myk8s --image kindest/node:v1.32.8 --config - <<EOF
    kind: Cluster
    apiVersion: kind.x-k8s.io/v1alpha4
    nodes:
    - role: control-plane
      labels:
        ingress-ready: true
      extraPortMappings:
      - containerPort: 80
        hostPort: 80
        protocol: TCP
      - containerPort: 443
        hostPort: 443
        protocol: TCP
      - containerPort: 30000
        hostPort: 30000
    EOF

Deploying Ingress-Nginx

Deploy the NGINX ingress controller

    kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/main/deploy/static/provider/kind/deploy.yaml

Enabling the SSL passthrough flag
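One way to add the flag without editing the manifest by hand is a JSON Patch against the controller Deployment. This is a minimal sketch, assuming the kind manifest installed the controller as Deployment `ingress-nginx-controller` in namespace `ingress-nginx` with the controller as the first container; the function name `enable_ssl_passthrough` is made up for illustration.

```shell
# JSON Patch that appends one argument to the first container's args list.
PATCH='[{"op":"add","path":"/spec/template/spec/containers/0/args/-","value":"--enable-ssl-passthrough"}]'

# Assumption: Deployment "ingress-nginx-controller" in namespace "ingress-nginx".
enable_ssl_passthrough() {
  kubectl -n ingress-nginx patch deployment ingress-nginx-controller \
    --type=json -p "$PATCH"
  # Wait until the patched controller Pod has rolled out.
  kubectl -n ingress-nginx rollout status deployment ingress-nginx-controller
}
```

Run `enable_ssl_passthrough` once; running it a second time would append the same argument again.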

After the change, the controller's container args should include --enable-ssl-passthrough as the last entry:

    spec:
      automountServiceAccountToken: true
      containers:
      - args:
        - /nginx-ingress-controller
        - --election-id=ingress-nginx-leader
        - --controller-class=k8s.io/ingress-nginx
        - --ingress-class=nginx
        - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
        - --validating-webhook=:8443
        - --validating-webhook-certificate=/usr/local/certificates/cert
        - --validating-webhook-key=/usr/local/certificates/key
        - --watch-ingress-without-class=true
        - --publish-status-address=localhost
        - --enable-ssl-passthrough

Deploying Jenkins

    kubectl create ns jenkins

    cat <<EOF | kubectl apply -f -
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: jenkins-pvc
      namespace: jenkins
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: jenkins
      namespace: jenkins
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: jenkins
      template:
        metadata:
          labels:
            app: jenkins
        spec:
          securityContext:
            fsGroup: 1000
          containers:
          - name: jenkins
            image: jenkins/jenkins:lts
            ports:
            - name: http
              containerPort: 8080
            - name: agent
              containerPort: 50000
            livenessProbe:
              httpGet:
                path: "/login"
                port: 8080
              initialDelaySeconds: 90
              periodSeconds: 10
              timeoutSeconds: 5
              failureThreshold: 5
            readinessProbe:
              httpGet:
                path: "/login"
                port: 8080
              initialDelaySeconds: 60
              periodSeconds: 10
              timeoutSeconds: 5
              failureThreshold: 3
            volumeMounts:
            - name: jenkins-home
              mountPath: /var/jenkins_home
          volumes:
          - name: jenkins-home
            persistentVolumeClaim:
              claimName: jenkins-pvc
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: jenkins-svc
      namespace: jenkins
    spec:
      type: ClusterIP
      selector:
        app: jenkins
      ports:
      - port: 8080
        targetPort: http
        protocol: TCP
        name: http
      - port: 50000
        targetPort: agent
        protocol: TCP
        name: agent
    ---
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: jenkins-ingress
      namespace: jenkins
      annotations:
        nginx.ingress.kubernetes.io/proxy-body-size: "0"
        nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
    spec:
      ingressClassName: nginx
      rules:
      - host: jenkins.example.com
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: jenkins-svc
                port:
                  number: 8080
    EOF

Domain setup

Open C:\Windows\System32\drivers\etc\hosts in Notepad as administrator and add the following entry.

    127.0.0.1 jenkins.example.com

Checking the initial admin password

    kubectl exec -it -n jenkins deploy/jenkins -- cat /var/jenkins_home/secrets/initialAdminPassword

Deploying Argo CD

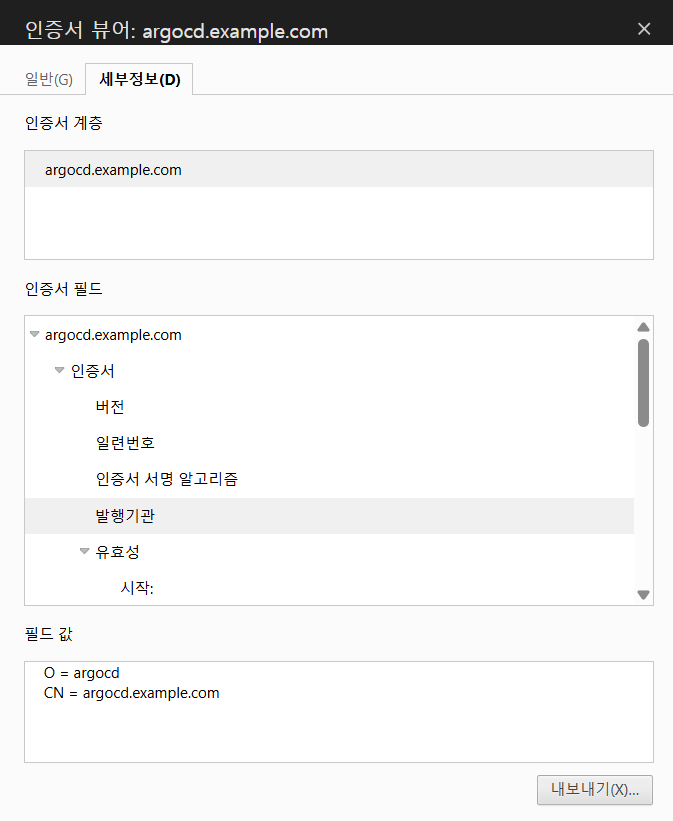

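Argo CD TLS behavior summary

- Generate a self-signed certificate with OpenSSL and store it in a Secret.

- The Argo CD server loads its TLS certificate directly from the argocd-server-tls Secret.

- With the two settings below, the ingress does not terminate TLS and passes the connection through to the Pod.

server.ingress.tls=true

nginx.ingress.kubernetes.io/ssl-passthrough=true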

Generating the TLS key and certificate

    openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
      -keyout argocd.example.com.key \
      -out argocd.example.com.crt \
      -subj "/CN=argocd.example.com/O=argocd"

- Common name (CN): argocd.example.com
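The certificate above carries the host name only in the CN. Many TLS clients match the host name against the subjectAltName extension instead, so a variant with a SAN can be useful when `--insecure` is not an option. The sketch below is an optional variation, not part of the original steps: it writes to a scratch directory (the `workdir` variable is illustrative) and assumes OpenSSL 1.1.1 or later for `-addext` and `-ext`.

```shell
# Work in a scratch directory so the tutorial's own files are not overwritten.
workdir=$(mktemp -d)

# Same subject as the command above, plus a subjectAltName entry.
openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
  -keyout "$workdir/argocd.example.com.key" \
  -out "$workdir/argocd.example.com.crt" \
  -subj "/CN=argocd.example.com/O=argocd" \
  -addext "subjectAltName=DNS:argocd.example.com"

# Print the subject, the validity window, and the SAN extension.
openssl x509 -in "$workdir/argocd.example.com.crt" -noout \
  -subject -dates -ext subjectAltName
```

The same `openssl x509 -noout -subject -dates` call works on the certificate generated by the original command.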

Creating the Secret so that Argo CD serves TLS

    kubectl create ns argocd

    kubectl -n argocd create secret tls argocd-server-tls \
      --cert=argocd.example.com.crt \
      --key=argocd.example.com.key

argocd-values.yaml

    cat <<EOF > argocd-values.yaml
    global:
      domain: argocd.example.com

    server:
      ingress:
        enabled: true
        ingressClassName: nginx
        annotations:
          nginx.ingress.kubernetes.io/force-ssl-redirect: "true"
          nginx.ingress.kubernetes.io/ssl-passthrough: "true"
        tls: true
    EOF

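Installing Argo CD

    helm repo add argo https://argoproj.github.io/argo-helm
    helm install argocd argo/argo-cd --version 9.1.0 -f argocd-values.yaml --namespace argocd

Checking the initial password

    kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d ; echo
    8WTg7uivZ82KA1zU

    # Keep the password in a variable for the commands below
    ARGOPW=$(kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d)

Logging in to the Argo CD server with the CLI

    argocd login argocd.example.com --insecure --username admin --password $ARGOPW

Changing the Argo CD server password

    argocd account update-password --current-password $ARGOPW --new-password "password"

- The certificate details can be inspected at this point.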

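Introduction to Keycloak

Keycloak is an open-source IAM (Identity and Access Management) solution. It is an integrated authentication platform that provides SSO (single sign-on), OAuth2/OIDC authentication, user management, permission management, and identity federation in one place.

Keycloak = an authentication server that provides ID/password management + OAuth2/OIDC + SSO + RBAC + social login in a single product.

Why use it

- No need to rebuild login for every service
  → Keycloak provides a unified login page

- Built-in support for the OAuth2 / OIDC / SAML protocols
  → no need to implement the authentication standards yourself

- SSO (Single Sign-On)
  → log in once and you are signed in to multiple services automatically

- Easy social login integration (Google, GitHub, Naver, etc.)
  → a few clicks in the UI

- RBAC (Role-Based Access Control)
  → role-based permission management is built in

- Integrates with enterprise environments
  → can federate with LDAP, Active Directory, and OpenLDAP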

Keycloak 배포 + Ingress

kubectl create ns keycloakcat <<EOF | kubectl apply -f -

apiVersion: apps/v1

kind: Deployment

metadata:

name: keycloak

namespace: keycloak

labels:

app: keycloak

spec:

replicas: 1

selector:

matchLabels:

app: keycloak

template:

metadata:

labels:

app: keycloak

spec:

containers:

- name: keycloak

image: quay.io/keycloak/keycloak:26.4.0

args: ["**start-dev**"] # dev mode 실행

env:

- name: KEYCLOAK_ADMIN

value: **admin**

- name: KEYCLOAK_ADMIN_PASSWORD

value: **admin**

ports:

- containerPort: 8080

---

apiVersion: v1

kind: Service

metadata:

name: keycloak

namespace: keycloak

spec:

selector:

app: keycloak

ports:

- name: http

**port: 80**

targetPort: 8080

---

apiVersion: networking.k8s.io/v1

kind: **Ingress**

metadata:

name: keycloak

namespace: keycloak

annotations:

nginx.ingress.kubernetes.io/rewrite-target: /

nginx.ingress.kubernetes.io/ssl-redirect: "false"

spec:

ingressClassName: nginx

rules:

- host: **keycloak.example.com**

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: keycloak

port:

number: 8080

EOF/etc/hosts 추가

127.0.0.1 **keycloak.example.com**접속 /admin 붙여줘야함

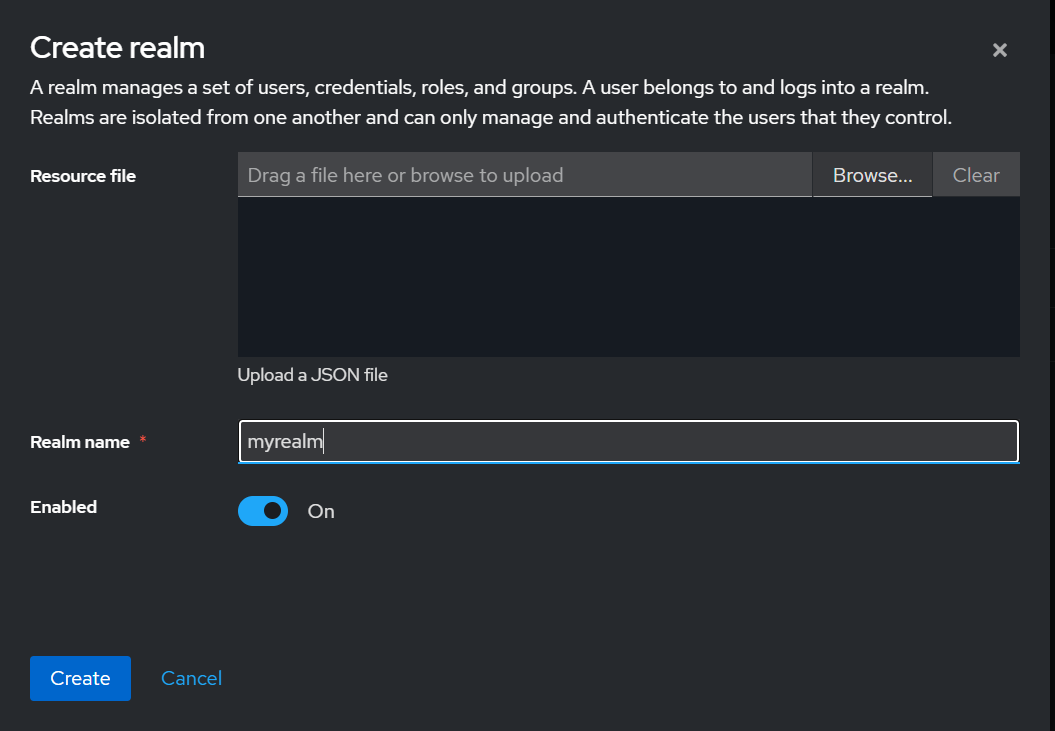

open "http://keycloak.example.com/admin"Realm

A realm is an independent authentication/authorization domain.

One realm is one "login world".

A realm contains the following:

| User | Client | Role |
|---|---|---|
| Group | Authentication settings (Flows) | Token policy (Token) |
| Social login settings (IdP) | | |

Each realm is a completely separate authentication space.

Users of one realm cannot access another realm by default.

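Key characteristics of a realm

| Feature | Description |
|---|---|
| Independent users | Users in realm A are unrelated to users in realm B |
| Independent clients | Apps and services are also separated per realm |
| Independent login screens | Each realm can run its own UI and policies |
| Independent settings | Token lifetimes, authentication flows, and so on are kept separate |

Master realm

The master realm is the top-level administrative realm created by default when Keycloak is installed.

Think of it as the "administrator area" that manages the whole Keycloak system.

Functions available only in the master realm:

- Creating new realms

- Managing Keycloak's global administrators (admin users)

- Managing server-wide settings

- Managing global clients (for example admin-cli)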

Adding a realm

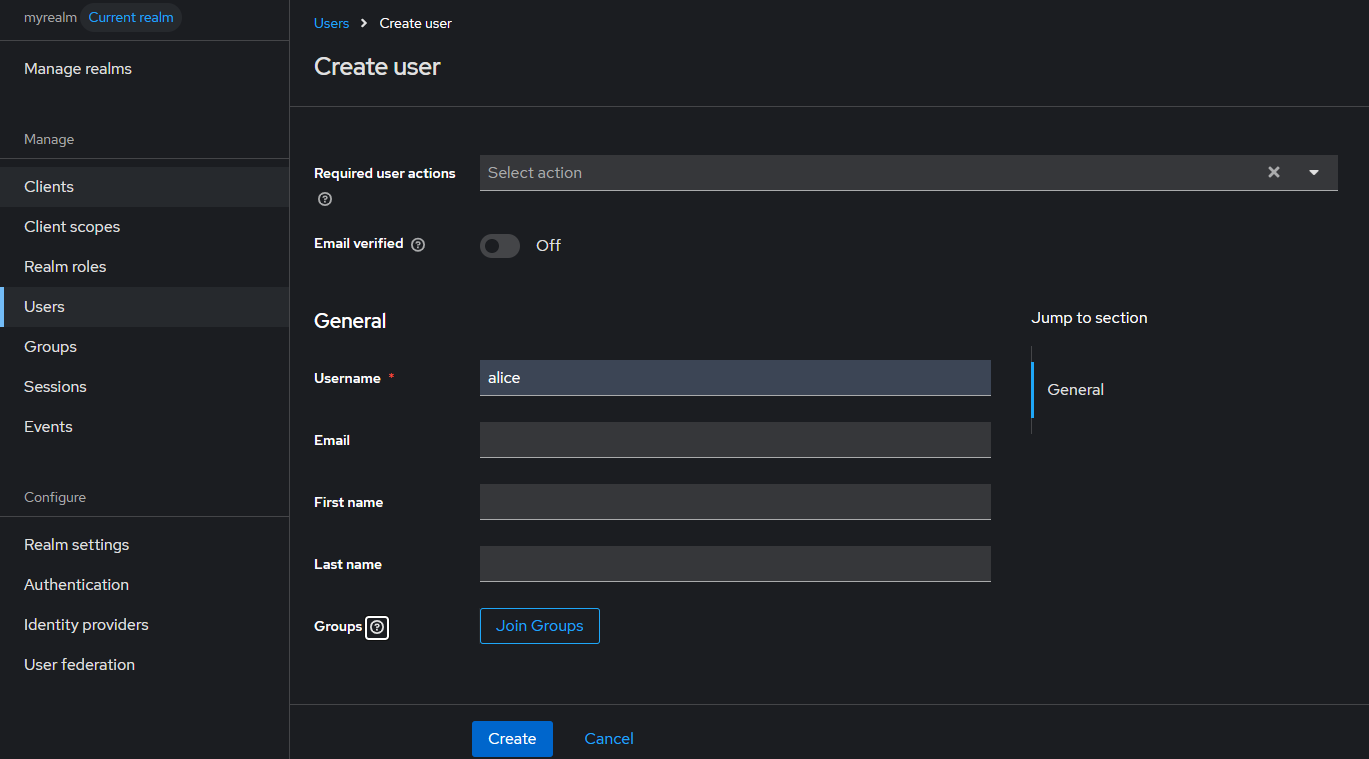

Creating a user and password

- Create the user alice with the password alice123

Creating a lightweight curl test Pod

    cat <<EOF | kubectl apply -f -
    apiVersion: v1
    kind: Pod
    metadata:
      name: curl
      namespace: default
    spec:
      containers:
      - name: curl
        image: curlimages/curl:latest
        command: ["sleep", "infinity"]
    EOF

Resolving the lab domains from inside the cluster

    (⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kubectl get svc -n jenkins
    NAME          TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)              AGE
    jenkins-svc   ClusterIP   10.96.29.42   <none>        8080/TCP,50000/TCP   13m
    (⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kubectl get svc -n argocd argocd-server
    NAME            TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
    argocd-server   ClusterIP   10.96.156.153   <none>        80/TCP,443/TCP   5m27s
    (⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kubectl get svc -n keycloak
    NAME       TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
    keycloak   ClusterIP   10.96.235.87   <none>        80/TCP    3m30s

Editing CoreDNS
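ClusterIPs differ from cluster to cluster, so the addresses written into the CoreDNS hosts block have to come from your own environment. The helper below is a small sketch that prints one hosts line per Service; `hosts_line` is a hypothetical name, and it assumes the entries should point at each Service's ClusterIP (pointing them at the ingress controller's ClusterIP would be another option).

```shell
# Hypothetical helper: print "<ClusterIP> <hostname>" for one Service.
# usage: hosts_line <namespace> <service> <hostname>
hosts_line() {
  printf '%s %s\n' \
    "$(kubectl get svc -n "$1" "$2" -o jsonpath='{.spec.clusterIP}')" \
    "$3"
}
```

Calling `hosts_line argocd argocd-server argocd.example.com` and `hosts_line keycloak keycloak keycloak.example.com` prints the two lines to paste into the hosts block.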

    KUBE_EDITOR="vi" kubectl edit cm -n kube-system coredns

    ### Add the hosts block (use the ClusterIPs from your own cluster)
    apiVersion: v1
    data:
      Corefile: |
        .:53 {
            errors
            health {
               lameduck 5s
            }
            ready
            kubernetes cluster.local in-addr.arpa ip6.arpa {
               pods insecure
               fallthrough in-addr.arpa ip6.arpa
               ttl 30
            }
            hosts {
               10.96.74.179 argocd.example.com
               10.96.159.13 keycloak.example.com
               fallthrough
            }
            prometheus :9153
            forward . /etc/resolv.conf {
               max_concurrent 1000
            }
            cache 30 {
               disable success cluster.local
               disable denial cluster.local
            }
            loop
            reload
            loadbalance
        }

    (⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kubectl exec -it curl -- nslookup keycloak.example.com
    Server:    10.96.0.10
    Address:   10.96.0.10:53

    Name:      keycloak.example.com
    Address:   10.96.254.195

    (⎈|kind-myk8s:N/A) root@DESKTOP-8S932ET:~# kubectl exec -it curl -- nslookup argocd.example.com
    Server:    10.96.0.10
    Address:   10.96.0.10:53

    Name:      argocd.example.com
    Address:   10.96.74.179

SSO setup

Jenkins setup

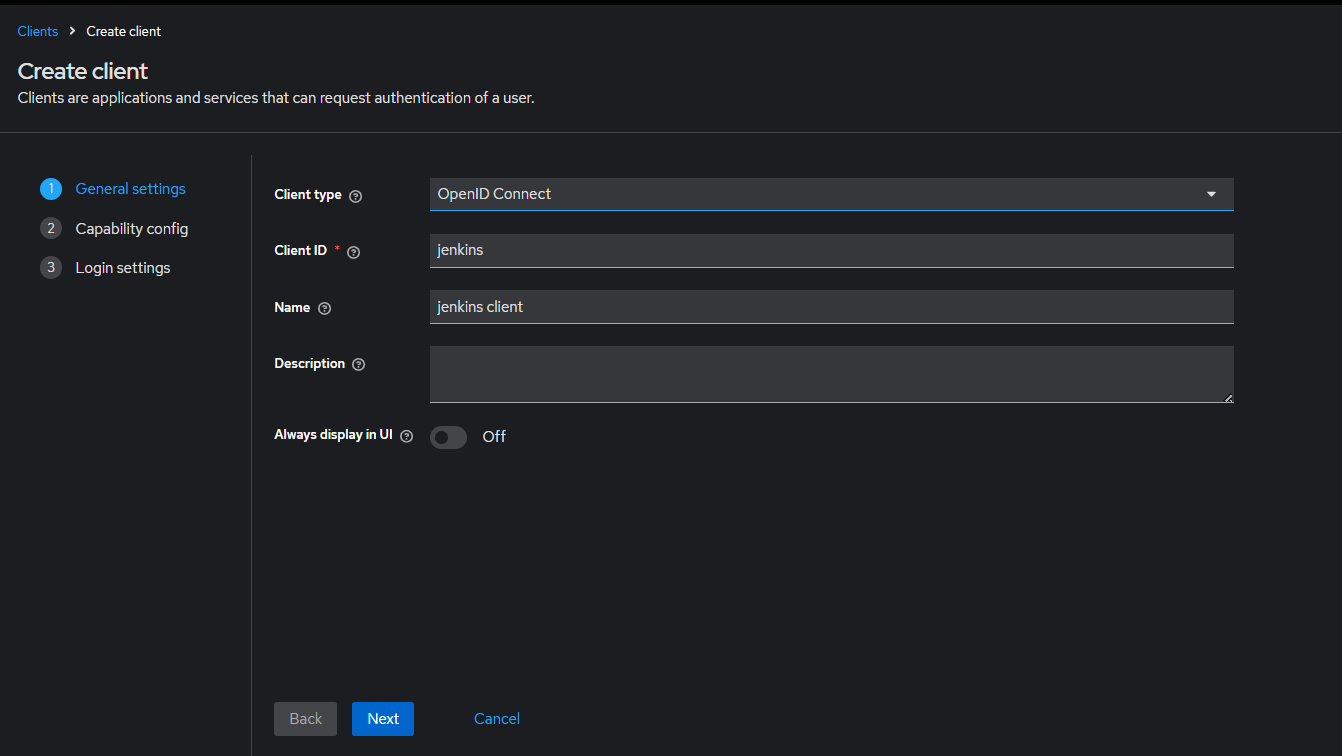

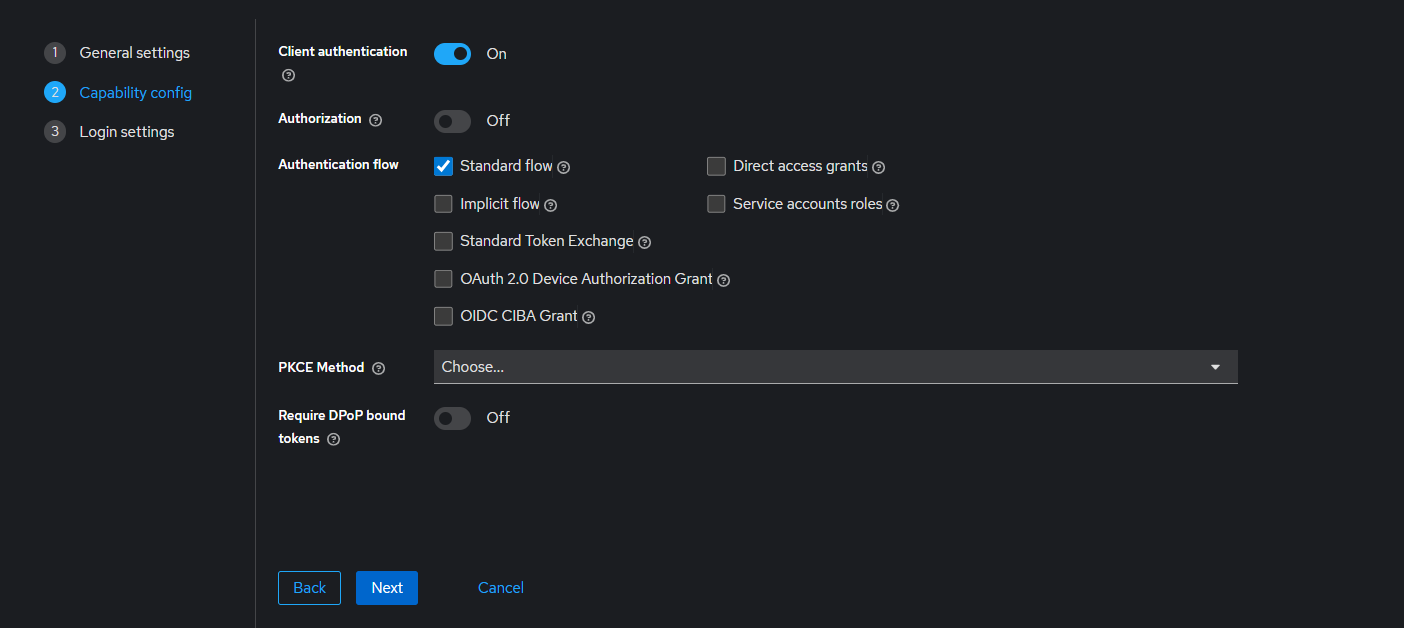

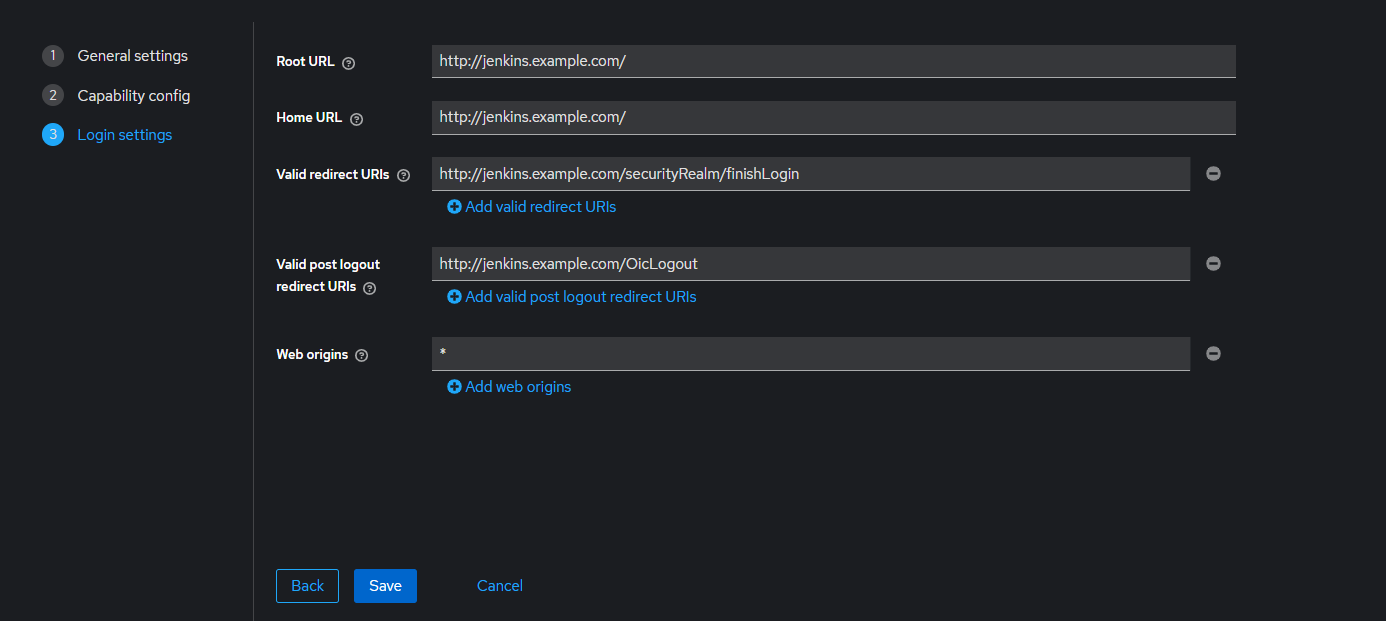

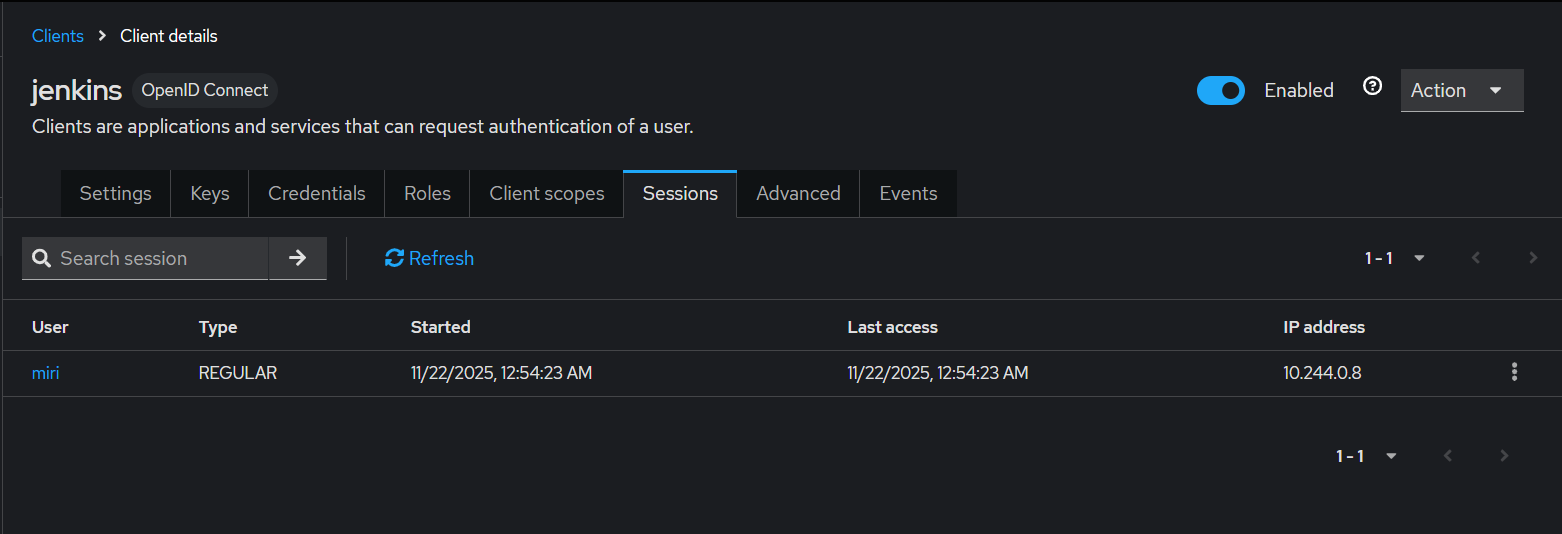

Keycloak client settings

- client id : jenkins

- name: jenkins client

- Client authentication : Check

- Authentication flow : Standard flow

- Root URL : http://jenkins.example.com/

- Home URL : http://jenkins.example.com/

- Valid redirect URIs : http://jenkins.example.com/securityRealm/finishLogin

- Valid post logout redirect URIs : http://jenkins.example.com/OicLogout

- Web origins : +

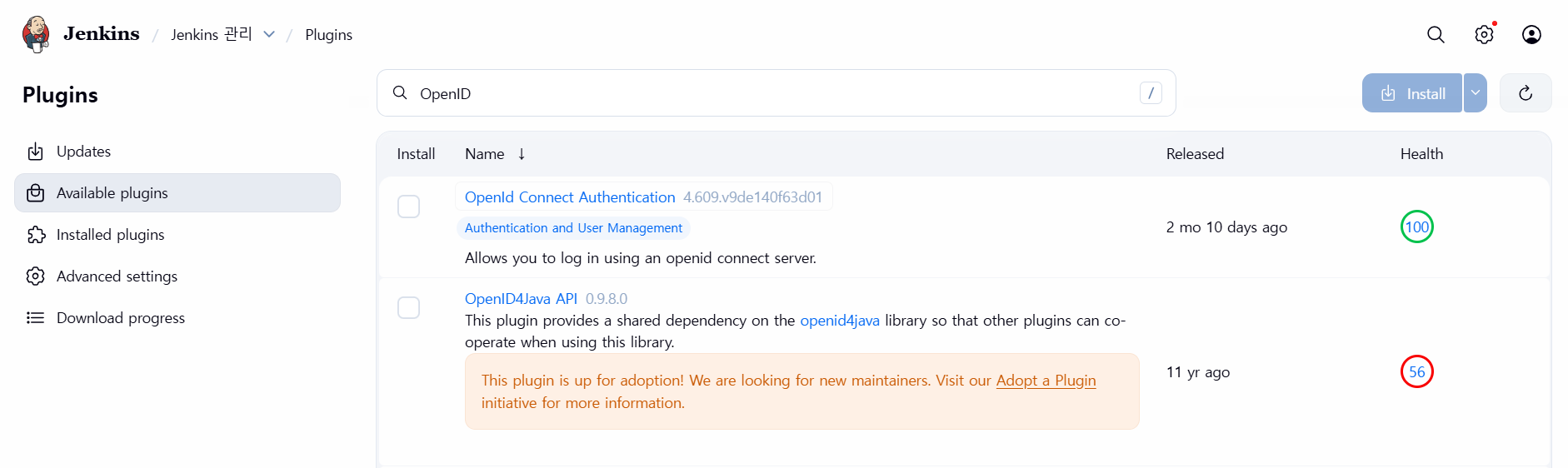

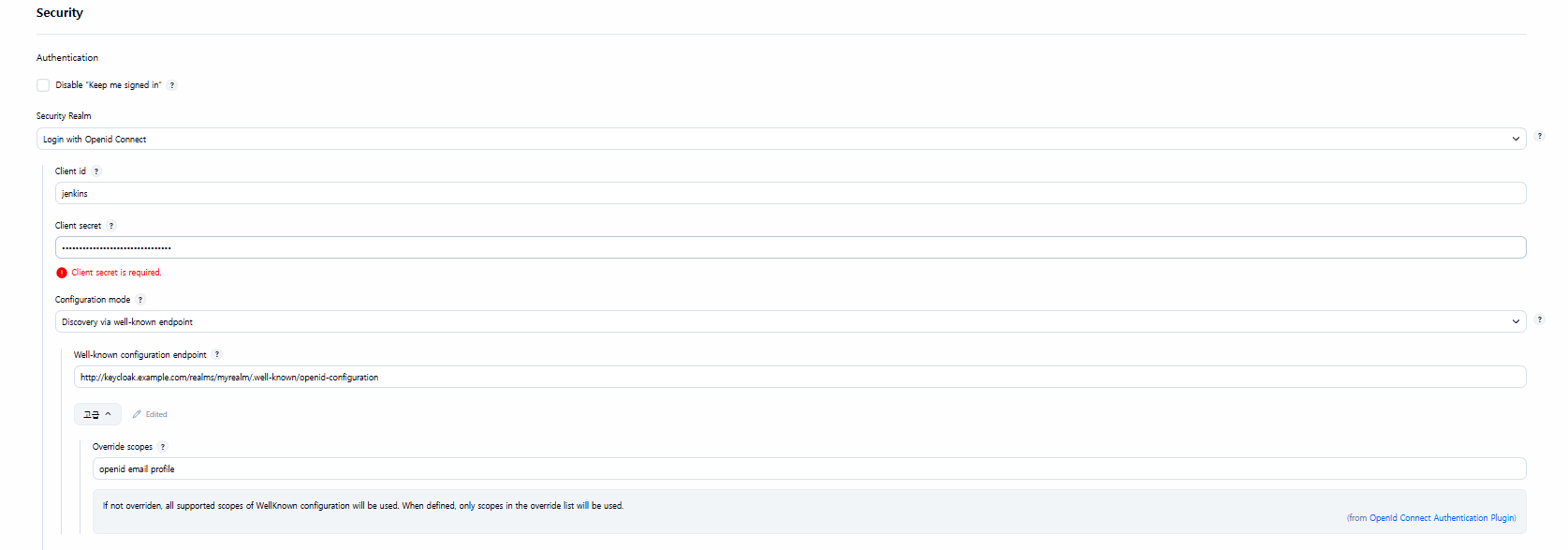

Jenkins OIDC settings

- Install the OpenID Connect Authentication plugin

- client id : jenkins

- client secret : the client secret of the jenkins client in Keycloak

- Well-known configuration endpoint : http://keycloak.example.com/realms/myrealm/.well-known/openid-configuration

- Click [Advanced] → Override scopes : openid email profile

- Advanced configuration : click [User fields] → User name field name : preferred_username

- Click Security configuration

- Disable ssl verification : Check
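The well-known endpoint above follows a fixed pattern: the base URL, then `/realms/<realm>`, then the discovery path. A small sketch of that pattern, useful for checking the URL before pasting it into Jenkins; `discovery_url` is a hypothetical helper name.

```shell
# Hypothetical helper: build the OIDC discovery URL for a Keycloak realm.
# usage: discovery_url <base-url> <realm>
discovery_url() {
  # ${1%/} drops a trailing slash from the base URL, if any.
  printf '%s/realms/%s/.well-known/openid-configuration\n' "${1%/}" "$2"
}

discovery_url http://keycloak.example.com myrealm
# prints http://keycloak.example.com/realms/myrealm/.well-known/openid-configuration
```

From inside the cluster, `kubectl exec curl -- curl -s "$(discovery_url http://keycloak.example.com myrealm)"` should return a JSON document describing the realm's endpoints, provided the CoreDNS hosts entries are in place.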

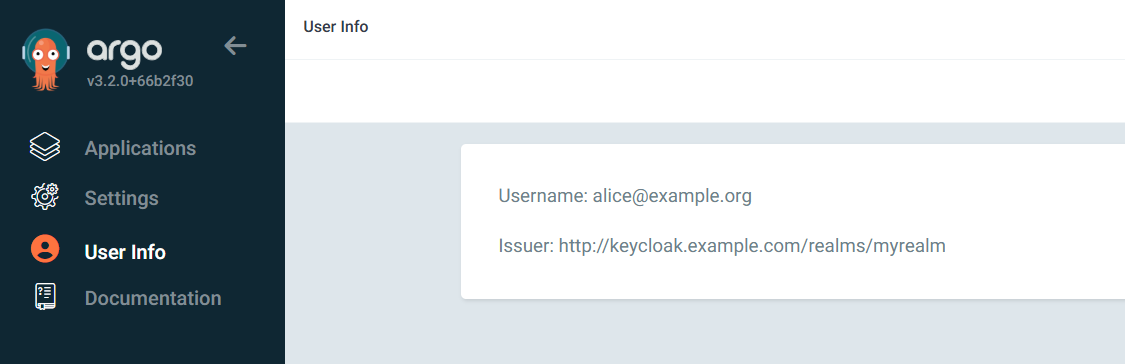

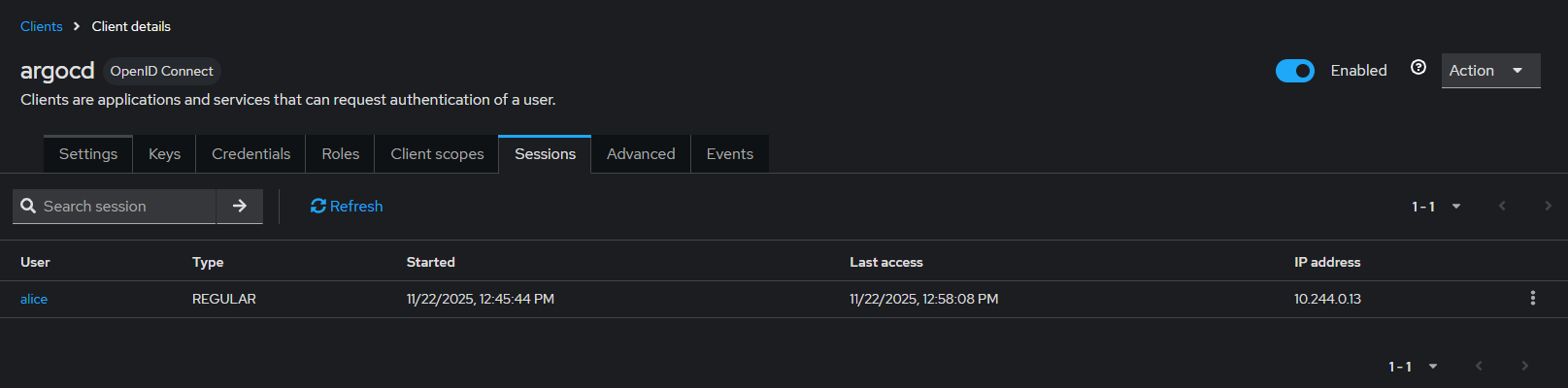

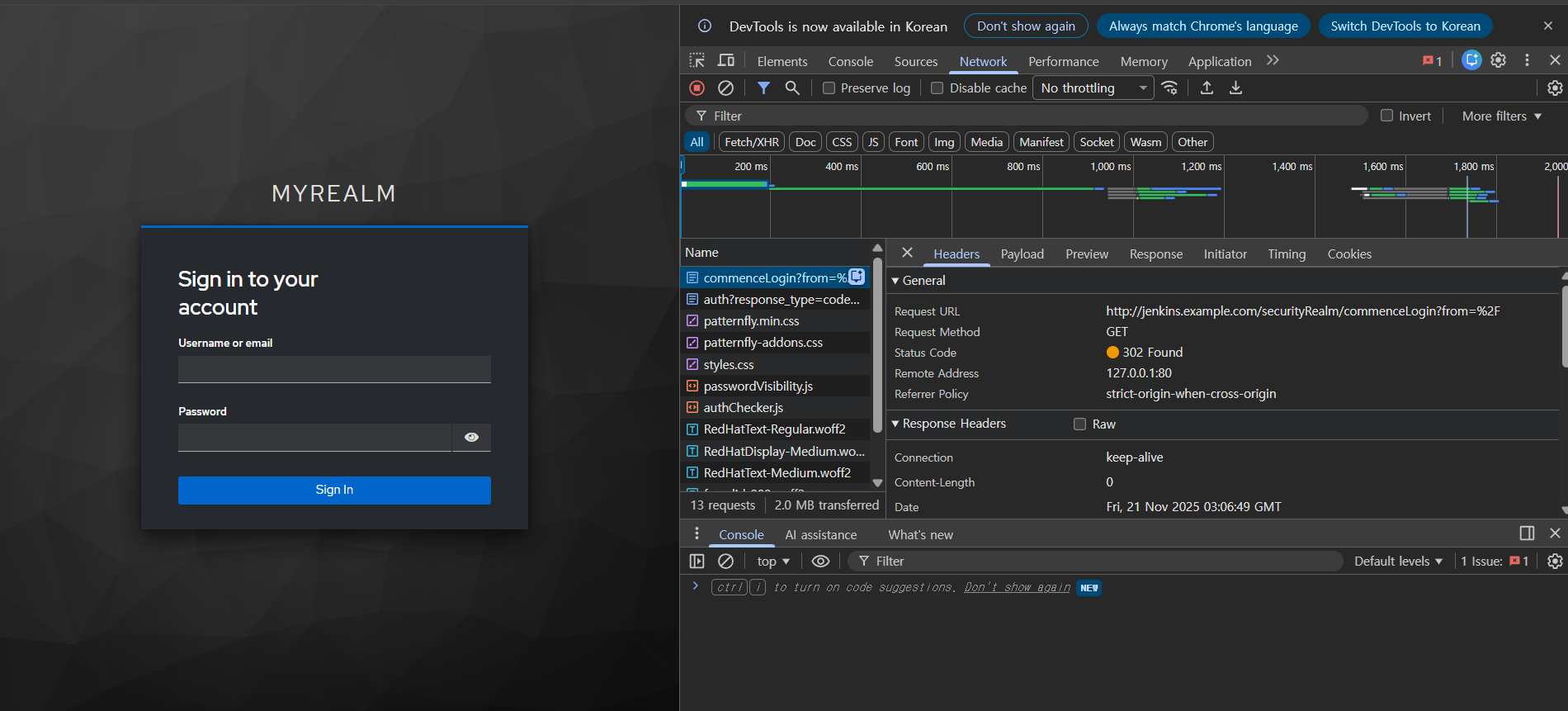

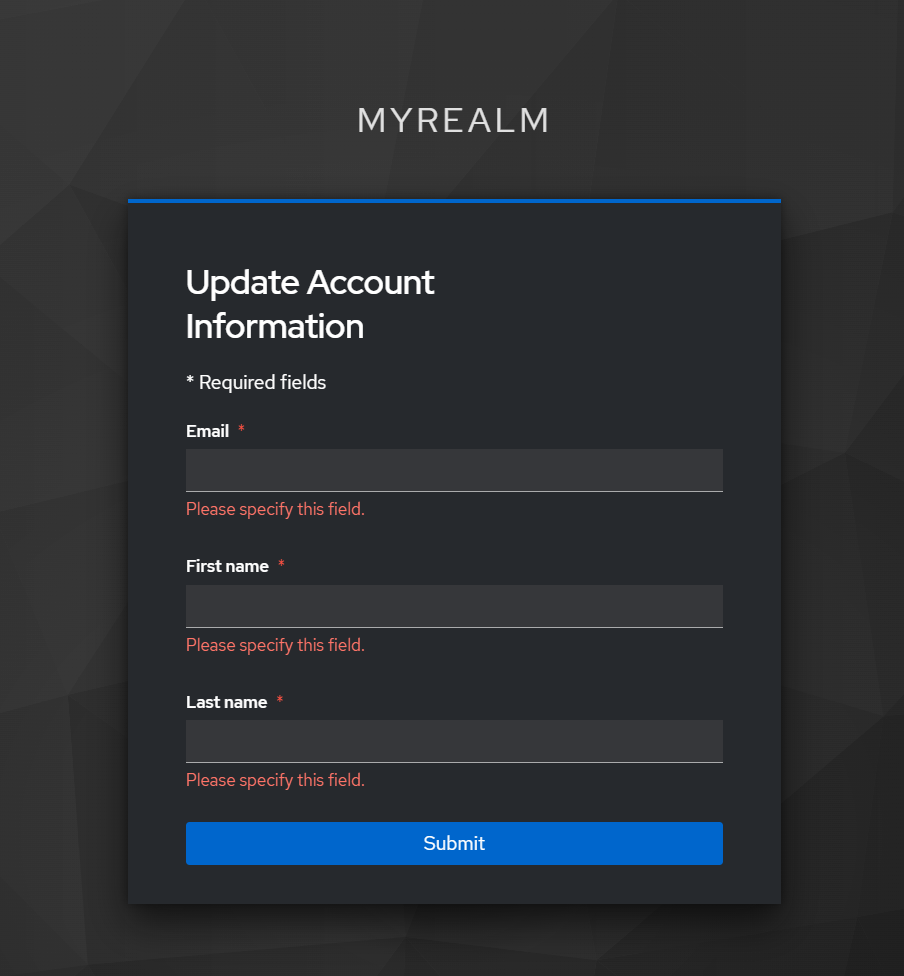

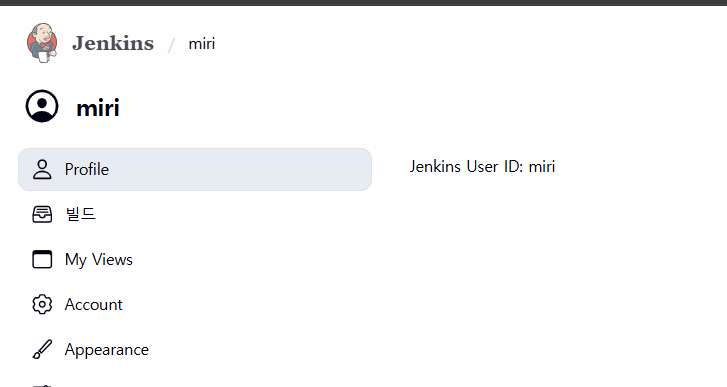

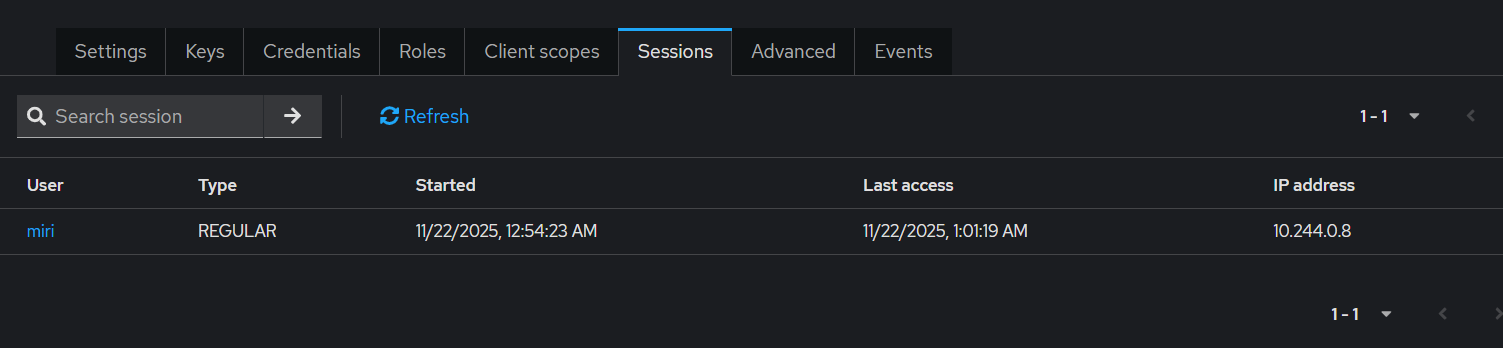

Jenkins with SSO

- Reset the name

- Login succeeds

- Check the client session

Argo CD setup

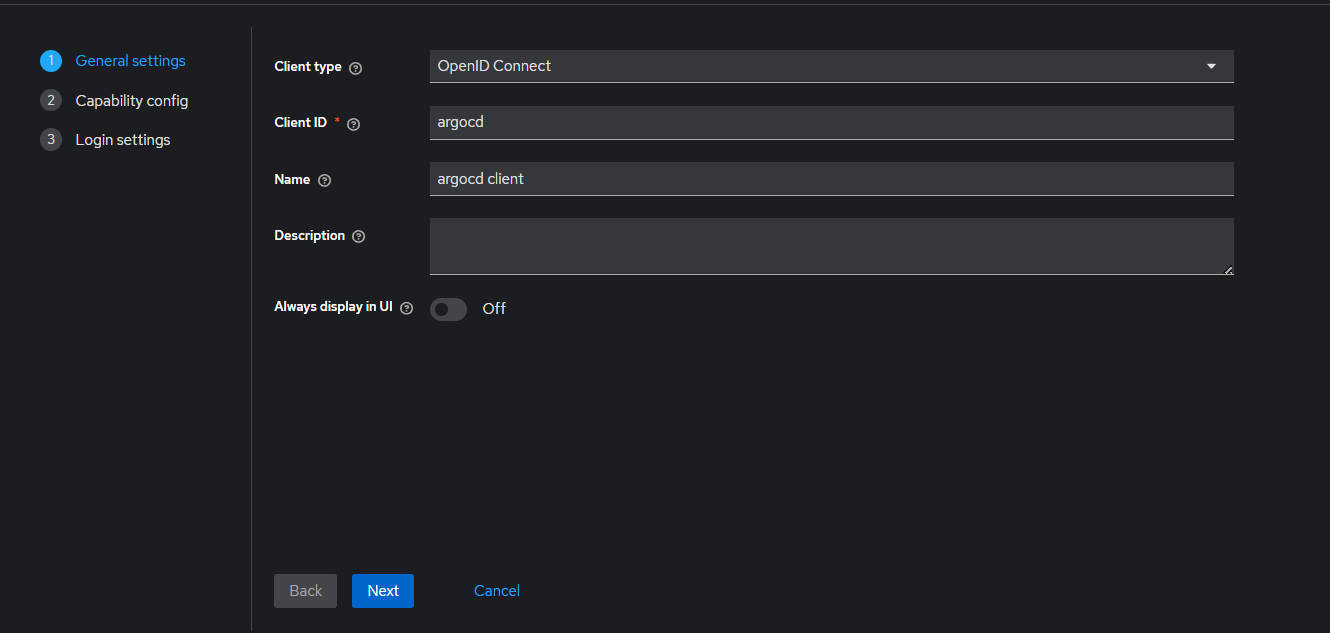

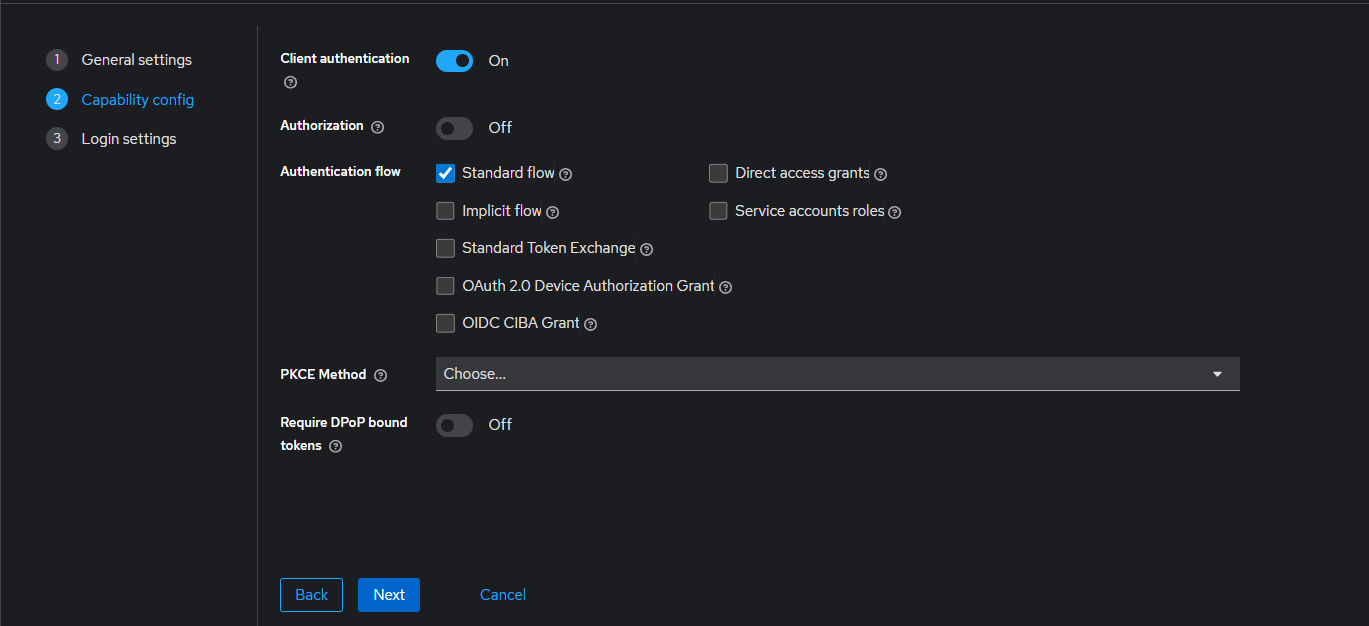

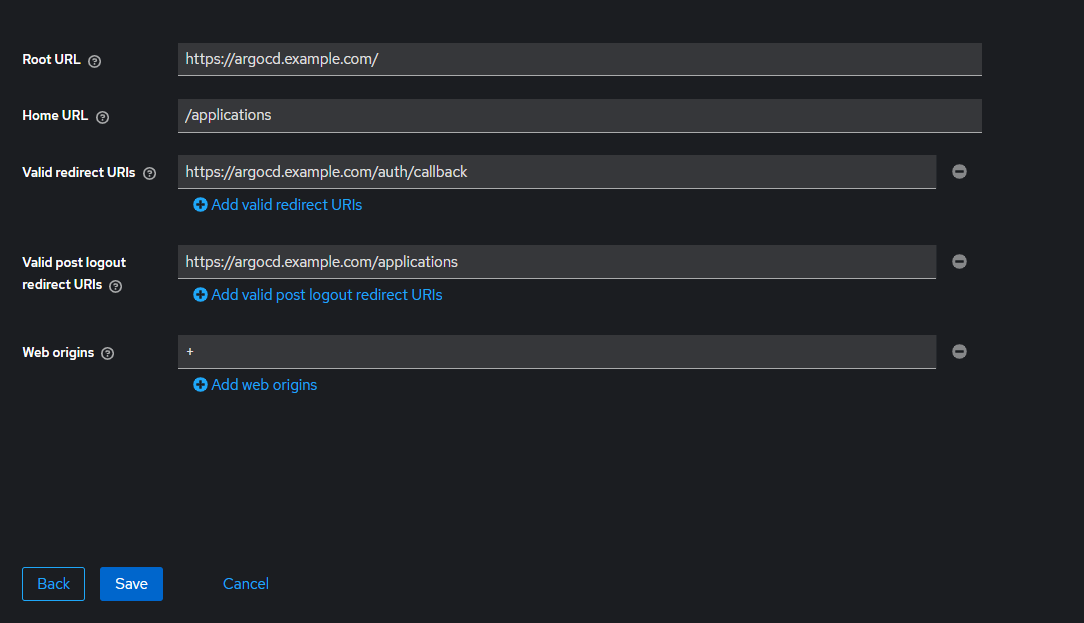

Keycloak client settings for Argo CD

- client id : argocd

- name : argocd client

- Client authentication : Check

- Authentication flow : Standard flow

- Root URL : https://argocd.example.com/

- Home URL : /applications

- Valid redirect URIs : https://argocd.example.com/auth/callback

- http://localhost:8085/auth/callback (needed for argo-cd cli, depends on value from --sso-port)

- Valid post logout redirect URIs : https://argocd.example.com/applications

- Web origins : +

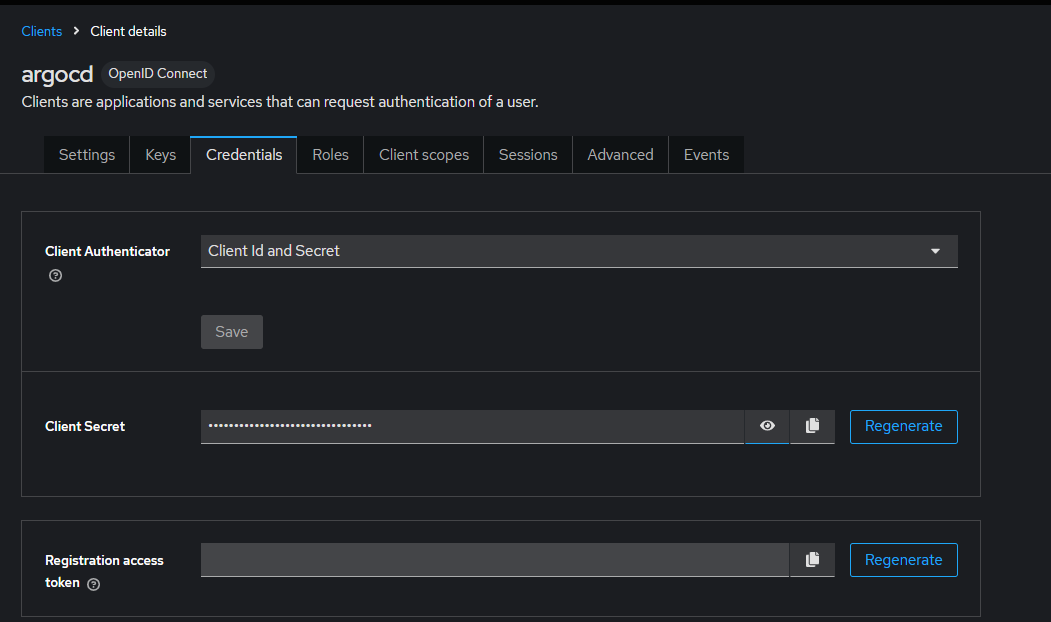

Note down the client secret shown on the client's Credentials tab.

Argo CD OIDC settings

    kubectl -n argocd patch secret argocd-secret --patch='{"stringData": { "oidc.keycloak.clientSecret": "oYZglJalH94UMRq5p5dTGT7qATltDkbr" }}'

    kubectl get secret -n argocd argocd-secret -o jsonpath='{.data}' | jq
    {
      "admin.password": "JDJhJDEwJEVPSUEwNHRRbVdJZGlLN0ZnbUYvTWVKaUJocmZuWDcwRUpOL2htUVE1Llltcm9WcnBrb3dD",
      "admin.passwordMtime": "MjAyNS0xMS0yMVQwMToyMzozOFo=",
      "oidc.keycloak.clientSecret": "b1laZ2xKYWxIOTRVTVJxNXA1ZFRHVDdxQVRsdERrYnI=",
      "server.secretkey": "Mk1zTENsMDdXeDlMcDJKQnducFViRnpETkZsR0NsQzVVWVpiQXNlMGorTT0="
    }

Adding the OIDC configuration

The client secret is referenced by its key in argocd-secret rather than repeated in the ConfigMap.

    kubectl patch cm argocd-cm -n argocd --type merge -p '
    data:
      oidc.config: |
        name: Keycloak
        issuer: http://keycloak.example.com/realms/myrealm
        clientID: argocd
        clientSecret: $oidc.keycloak.clientSecret
        requestedScopes: ["openid", "profile", "email"]
    '

Restarting the argocd-server Pod

    kubectl rollout restart deploy argocd-server -n argocd
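After the restart, two quick checks can confirm the setup: reading back the OIDC block from the ConfigMap, and logging in through Keycloak from the CLI. The sketch below wraps both in functions whose names (`show_oidc_config`, `sso_login`) are made up for illustration; port 8085 is the value assumed in the client's `http://localhost:8085/auth/callback` redirect URI.

```shell
# Print the oidc.config block that argocd-server reads from argocd-cm.
show_oidc_config() {
  kubectl -n argocd get cm argocd-cm -o jsonpath='{.data.oidc\.config}'
}

# CLI login through Keycloak. The CLI listens on localhost:<sso-port>
# for the OIDC callback, which is why that redirect URI was registered.
sso_login() {
  argocd login argocd.example.com --sso --sso-port 8085 --insecure
}
```

`show_oidc_config` should print the issuer and client ID set above; `sso_login` opens a browser window for the Keycloak login.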

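OpenLDAP setup

What is LDAP

LDAP (Lightweight Directory Access Protocol) is a protocol for accessing a directory that stores user, group, and permission information hierarchically, much like an org chart.

LDAP components

1. Directory Information Tree (DIT)

- The structure of the directory (a hierarchical tree)

    dc=example,dc=com
    └── ou=People
        ├── uid=user1
        ├── uid=user2

2. Entry

- The actual object stored in the directory (a person, group, or device)

- Each entry is uniquely identified by its DN (Distinguished Name)

3. Attribute

- The values an entry holds, for example uid, cn, sn, mail, userPassword

4. Schema

- Defines which attributes and object classes may be used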
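The relationship between the tree and a DN can be seen by taking a DN apart: the leftmost component is the entry's own RDN, and everything after the first comma is the DN of its parent. A minimal sketch using the example tree above; it splits on every comma and so assumes the DN contains no escaped commas.

```shell
dn="uid=user1,ou=People,dc=example,dc=com"

# The leftmost component is the entry's RDN; the rest is the parent's DN.
rdn=${dn%%,*}
parent=${dn#*,}
echo "RDN:    $rdn"       # RDN:    uid=user1
echo "Parent: $parent"    # Parent: ou=People,dc=example,dc=com

# Walk from the entry up to the root, one component per line.
echo "$dn" | tr ',' '\n'
```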

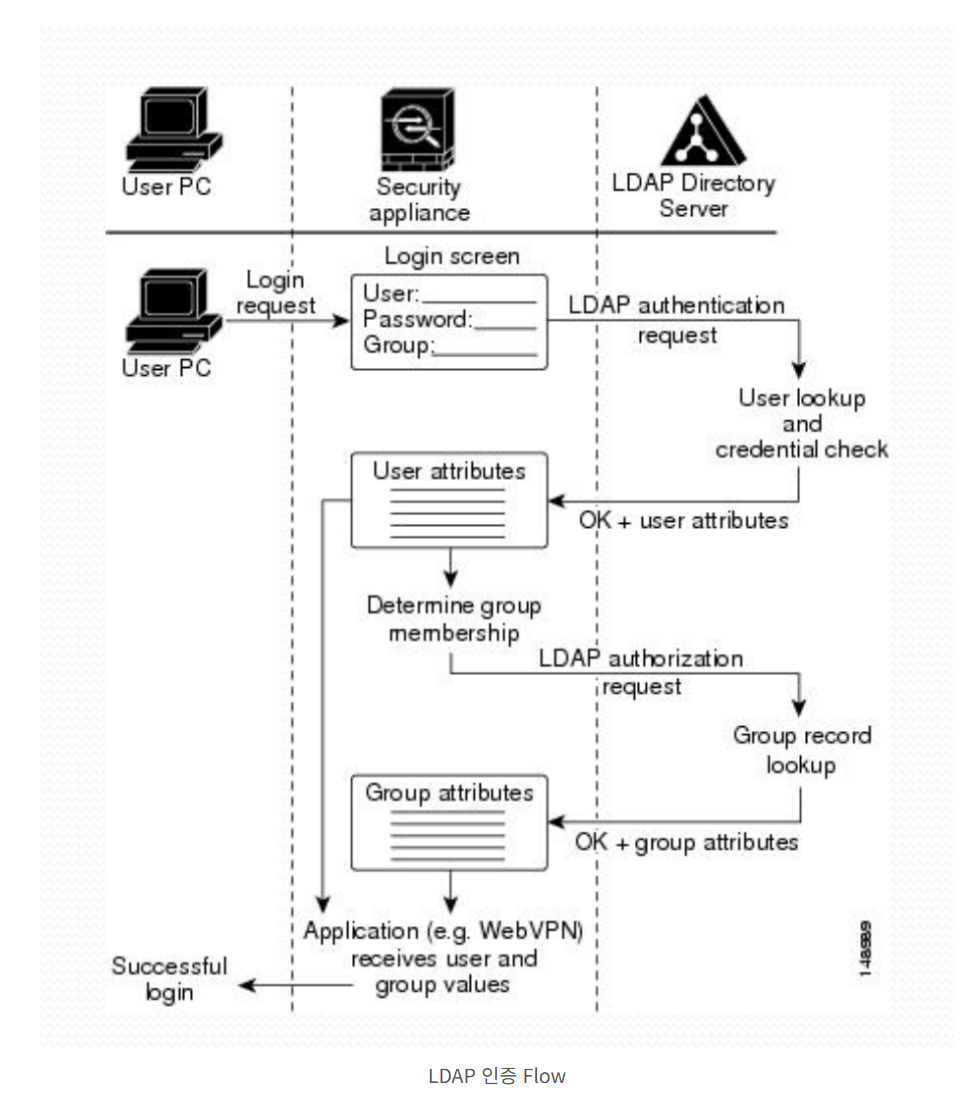

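LDAP authentication flow

| Step | Description |
|---|---|
| 1 | The user sends a login request to the security appliance |
| 2 | Security appliance → LDAP server: authentication request (Bind) |
| 3 | LDAP: looks up the user account and verifies the password |
| 4 | LDAP → security appliance: returns the user's attributes |
| 5 | The security appliance determines the user's groups (memberOf, etc.) |
| 6 | Security appliance → LDAP server: additional authorization lookup |
| 7 | LDAP: looks up the group information and returns it |
| 8 | The security appliance decides the final user and group values |
| 9 | Login succeeds and the service is provided |

Reference: https://yongho1037.tistory.com/796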

Deploying the OpenLDAP server

    cat <<EOF | kubectl apply -f -
    apiVersion: v1
    kind: Namespace
    metadata:
      name: openldap
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: openldap
      namespace: openldap
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: openldap
      template:
        metadata:
          labels:
            app: openldap
        spec:
          containers:
          - name: openldap
            image: osixia/openldap:1.5.0
            ports:
            - containerPort: 389
              name: ldap
            - containerPort: 636
              name: ldaps
            env:
            - name: LDAP_ORGANISATION    # organization name, used when the base LDAP data is created
              value: "Example Org"
            - name: LDAP_DOMAIN          # the base DN is generated from this domain
              value: "example.org"
            - name: LDAP_ADMIN_PASSWORD  # LDAP administrator password
              value: "admin"
            - name: LDAP_CONFIG_PASSWORD
              value: "admin"
          - name: phpldapadmin
            image: osixia/phpldapadmin:0.9.0
            ports:
            - containerPort: 80
              name: phpldapadmin
            env:
            - name: PHPLDAPADMIN_HTTPS
              value: "false"
            - name: PHPLDAPADMIN_LDAP_HOSTS
              value: "openldap"          # LDAP hostname inside cluster
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: openldap
      namespace: openldap
    spec:
      selector:
        app: openldap
      ports:
      - name: phpldapadmin
        port: 80
        targetPort: 80
        nodePort: 30000
      - name: ldap
        port: 389
        targetPort: 389
      - name: ldaps
        port: 636
        targetPort: 636
      type: NodePort
    EOF
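The base DN that results from LDAP_DOMAIN is the domain with each dot-separated label turned into a `dc=` component, so example.org becomes dc=example,dc=org (the base used in the searches that follow). The conversion can be sketched as below; `domain_to_base_dn` is a hypothetical helper for illustration, not something shipped with the image.

```shell
# Hypothetical helper: turn a DNS-style domain into an LDAP base DN.
# usage: domain_to_base_dn <domain>
domain_to_base_dn() {
  # Replace every dot with ",dc=" and prefix the first label with "dc=".
  printf 'dc=%s\n' "$(printf '%s' "$1" | sed 's/\./,dc=/g')"
}

domain_to_base_dn example.org   # prints dc=example,dc=org
```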

Building the OpenLDAP directory: users (alice, bob) and groups (devs, admins)

    kubectl -n openldap exec -it deploy/openldap -c openldap -- bash

Administrator bind test

    ldapsearch -x -H ldap://localhost:389 -b dc=example,dc=org -D "cn=admin,dc=example,dc=org" -w admin
    # extended LDIF
    #
    # LDAPv3
    # base <dc=example,dc=org> with scope subtree
    # filter: (objectclass=*)
    # requesting: ALL
    #

    # example.org
    dn: dc=example,dc=org
    objectClass: top
    objectClass: dcObject
    objectClass: organization
    o: Example Org
    dc: example

    # search result
    search: 2
    result: 0 Success

    # numResponses: 2
    # numEntries: 1

Adding OUs (organizationalUnit) with ldapadd

    cat <<EOF | ldapadd -x -D "cn=admin,dc=example,dc=org" -w admin
    dn: ou=people,dc=example,dc=org
    objectClass: organizationalUnit
    ou: people

    dn: ou=groups,dc=example,dc=org
    objectClass: organizationalUnit
    ou: groups
    EOF

- Adds the organizational units (people, groups)

Adding users with ldapadd

    cat <<EOF | ldapadd -x -D "cn=admin,dc=example,dc=org" -w admin
    dn: uid=alice,ou=people,dc=example,dc=org
    objectClass: inetOrgPerson
    cn: Alice
    sn: Kim
    uid: alice
    mail: alice@example.org
    userPassword: alice123

    dn: uid=bob,ou=people,dc=example,dc=org
    objectClass: inetOrgPerson
    cn: Bob
    sn: Lee
    uid: bob
    mail: bob@example.org
    userPassword: bob123
    EOF

- Adds the users (alice, bob)

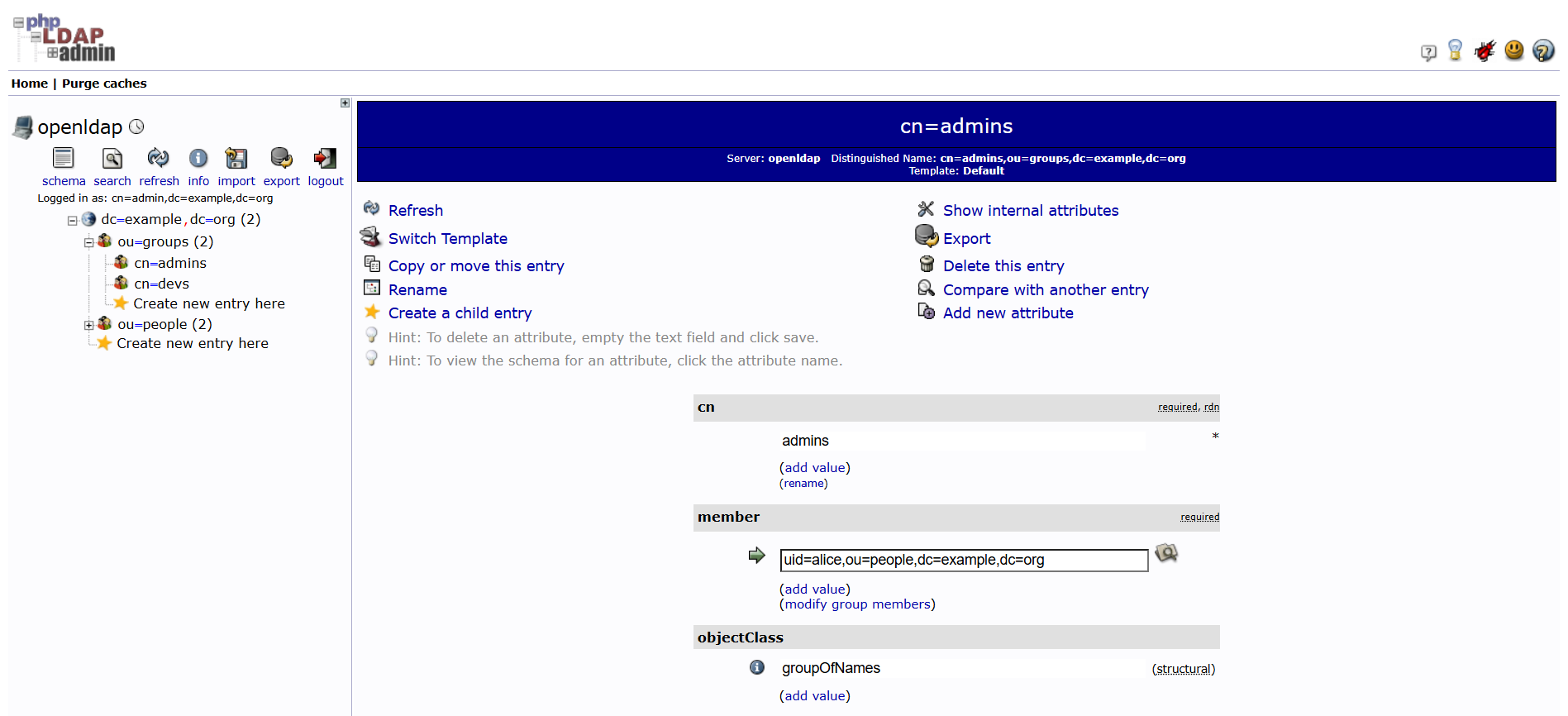

Adding groups with ldapadd

    cat <<EOF | ldapadd -x -D "cn=admin,dc=example,dc=org" -w admin
    dn: cn=devs,ou=groups,dc=example,dc=org
    objectClass: groupOfNames
    cn: devs
    member: uid=bob,ou=people,dc=example,dc=org

    dn: cn=admins,ou=groups,dc=example,dc=org
    objectClass: groupOfNames
    cn: admins
    member: uid=alice,ou=people,dc=example,dc=org
    EOF

- Adds the groups (devs, admins)
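The group lookup step of the authentication flow comes down to a search for groupOfNames entries whose member attribute holds the user's DN. The sketch below builds that filter for a given uid; `groups_filter` is a hypothetical helper, and it assumes users live under ou=people,dc=example,dc=org as created above.

```shell
# Hypothetical helper: LDAP filter matching groups that list the user as a member.
# usage: groups_filter <uid>
groups_filter() {
  printf '(&(objectClass=groupOfNames)(member=uid=%s,ou=people,dc=example,dc=org))\n' "$1"
}

groups_filter alice
# prints (&(objectClass=groupOfNames)(member=uid=alice,ou=people,dc=example,dc=org))
```

Inside the openldap container, `ldapsearch -x -D "cn=admin,dc=example,dc=org" -w admin -b "ou=groups,dc=example,dc=org" "$(groups_filter alice)" cn` should return the admins group.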

Searching organizational units

    ldapsearch -x -D "cn=admin,dc=example,dc=org" -w admin \
      -b "dc=example,dc=org" "(objectClass=organizationalUnit)" ou

Searching users

    ldapsearch -x -D "cn=admin,dc=example,dc=org" -w admin \
      -b "ou=people,dc=example,dc=org" "(uid=*)" uid cn mail

Searching groups

    ldapsearch -x -D "cn=admin,dc=example,dc=org" -w admin \
      -b "ou=groups,dc=example,dc=org" "(objectClass=groupOfNames)" cn member

Printing all entries

    ldapsearch -x -H ldap://localhost:389 -b dc=example,dc=org -D "cn=admin,dc=example,dc=org" -w admin
    # extended LDIF
    #
    # LDAPv3
    # base <dc=example,dc=org> with scope subtree
    # filter: (objectclass=*)
    # requesting: ALL
    #

    # example.org
    dn: dc=example,dc=org
    objectClass: top
    objectClass: dcObject
    objectClass: organization
    o: Example Org
    dc: example

    # people, example.org
    dn: ou=people,dc=example,dc=org
    objectClass: organizationalUnit
    ou: people

    # groups, example.org
    dn: ou=groups,dc=example,dc=org
    objectClass: organizationalUnit
    ou: groups

    # alice, people, example.org
    dn: uid=alice,ou=people,dc=example,dc=org
    objectClass: inetOrgPerson
    cn: Alice
    sn: Kim
    uid: alice
    mail: alice@example.org
    userPassword:: YWxpY2UxMjM=

    # bob, people, example.org
    dn: uid=bob,ou=people,dc=example,dc=org
    objectClass: inetOrgPerson
    cn: Bob
    sn: Lee
    uid: bob
    mail: bob@example.org
    userPassword:: Ym9iMTIz

    # devs, groups, example.org
    dn: cn=devs,ou=groups,dc=example,dc=org
    objectClass: groupOfNames
    cn: devs
    member: uid=bob,ou=people,dc=example,dc=org

    # admins, groups, example.org
    dn: cn=admins,ou=groups,dc=example,dc=org
    objectClass: groupOfNames
    cn: admins
    member: uid=alice,ou=people,dc=example,dc=org

    # search result
    search: 2
    result: 0 Success

    # numResponses: 8
    # numEntries: 7
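In LDIF output a double colon after the attribute name (`userPassword::`) means the value is base64-encoded, not hashed. Decoding the two values from the listing above shows that the passwords are stored exactly as they were passed to ldapadd:

```shell
# Values copied from the ldapsearch output above.
printf '%s' 'YWxpY2UxMjM=' | base64 -d; echo   # alice123
printf '%s' 'Ym9iMTIz' | base64 -d; echo       # bob123
```

That is acceptable for a lab. For anything longer-lived, a hashed value (for example one produced with `slappasswd`) would be the usual choice for userPassword.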

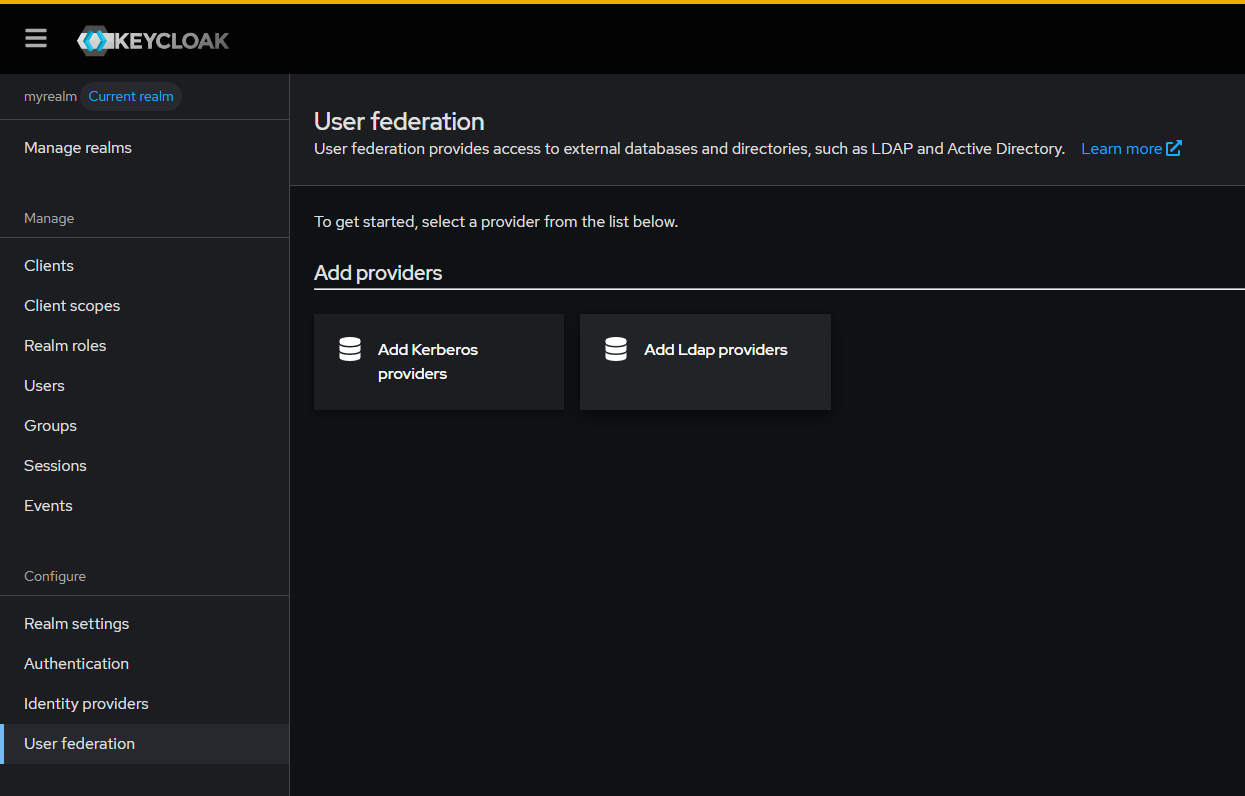

keycloak LDAP 연동

필드값 최소 설정

- General

UI display name: ldapVendor: Other

- Connection and authentication

Connection URL:ldap://openldap.openldap.svc:389⇒ Test connectionBind DN: (= Login DN)cn=admin,dc=example,dc=orgBind Credential: admin ⇒ Test authentication

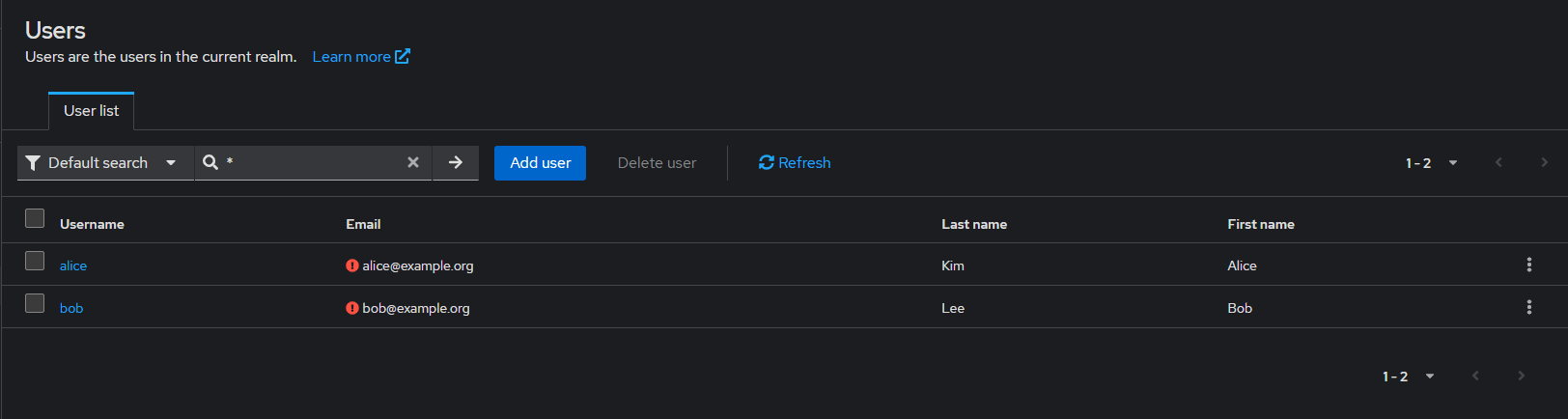

- LDAP searching and updating : 참고로 LDAP에 uid 로 alice, bob 존재

Edit mode: READ_ONLYUsers DN:ou=people,dc=example,dc=orgUsername LDAP attribute: uidRDN LDAP attribute: uidUser Object Classes: inetOrgPersonSearch scope: Subtree (OU 하위 모두 탐색)

- Synchronization settings

Import Users: On (LDAP → KeyCloak : Sync OK)Sync Registrations: Off (KeyCloak → LDAP : Sync OK)Periodic full sync: OnPeriodic changed users sync: On

Save

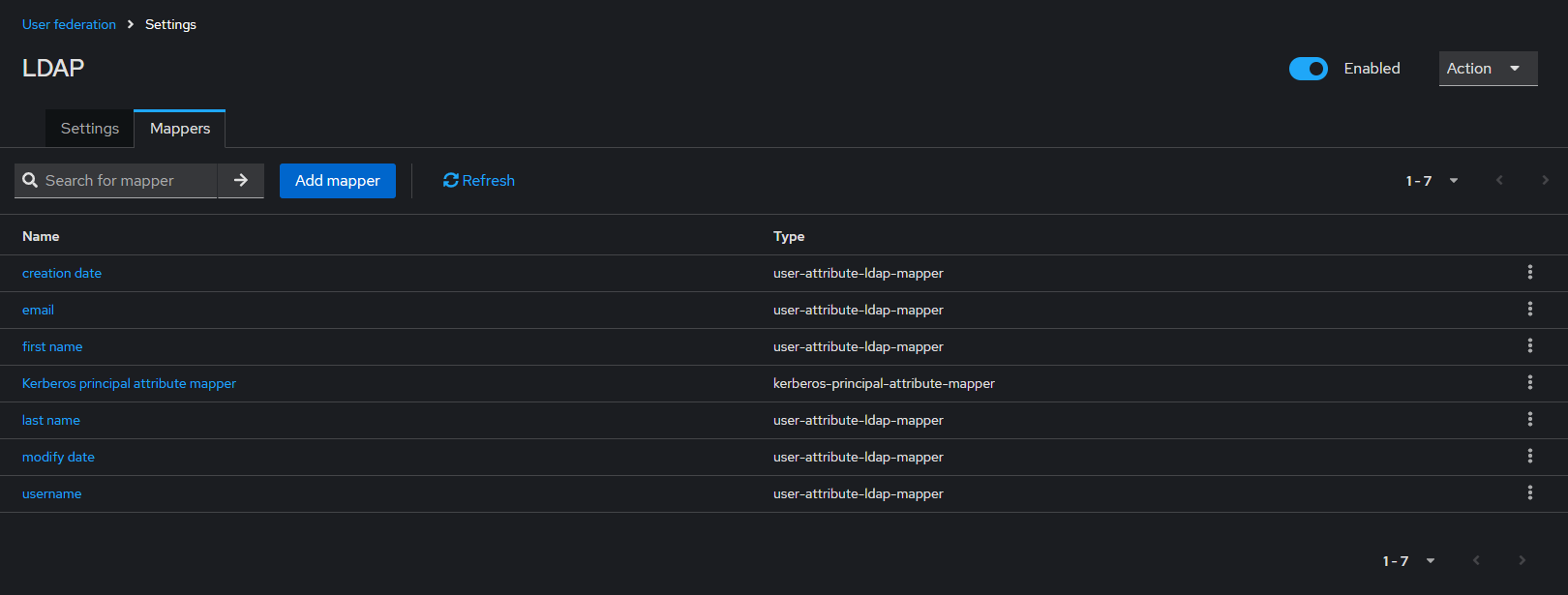

mapper 확인

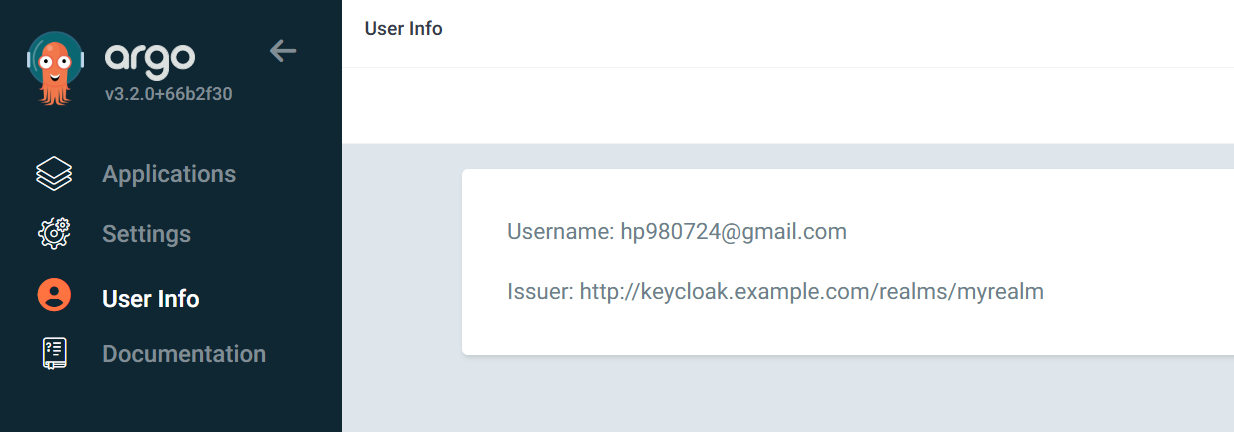

argocd 로그인