This post summarizes Professor Jim Kurose's Computer Networks lectures.

Overview

3-1 Transport Layer

- definitions

- transport layer: logical communication between processes

- network layer: logical communication between hosts (each host can run many different processes)

- process

- application layer passes a message through socket

- transport layer determines the values of the segment header fields and creates the segment

- transport layer passes down the segment to the network layer, to the IP protocol

- network layer sends the segment to the receiving host

- transport layer on the receiving host checks the header values, extracts the application-layer message and demultiplexes it up to the application via its socket

- two principal internet transport protocols

- TCP

- UDP(connectionless)

Transport Layer Multiplexing and Demultiplexing

3-2 Transport Layer Multiplexing and Demultiplexing

- demultiplexing

- process by which the payload of each received segment (carried inside a datagram from the sending host) is directed to the designated application

- host uses the IP addresses (from the IP datagram header) and the port numbers (from the transport-layer segment header) to direct the segment to the appropriate socket

- all the UDP datagrams with same destination port # will be directed to the same socket

- A TCP socket is identified by a 4-tuple (source IP address, source port #, destination IP address, destination port #), whereas a UDP socket is identified only by the destination IP address and port #. This means each TCP socket on a server is associated with a different connecting client, since each connection differs in its source IP address or source port #

- multiplexing

- many applications send messages down through their respective sockets, and it is the transport layer's job (TCP or UDP) to add a transport header to each message and funnel the resulting segments down to the IP protocol
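The difference between UDP and TCP demultiplexing can be sketched as two lookup tables. This is only an illustration: the table names, the helper functions, and the string socket labels are hypothetical, and real operating systems use more elaborate structures. The addresses come from the documentation-only IP ranges.

```python
from typing import Dict, Optional, Tuple

# Hypothetical in-memory socket tables; sockets are represented by plain labels.
udp_sockets: Dict[Tuple[str, int], str] = {}
tcp_sockets: Dict[Tuple[str, int, str, int], str] = {}


def demux_udp(dst_ip: str, dst_port: int) -> Optional[str]:
    """UDP: only the destination IP address and port # select the socket."""
    return udp_sockets.get((dst_ip, dst_port))


def demux_tcp(src_ip: str, src_port: int, dst_ip: str, dst_port: int) -> Optional[str]:
    """TCP: the full 4-tuple selects the socket."""
    return tcp_sockets.get((src_ip, src_port, dst_ip, dst_port))


# One UDP socket serves every sender that targets port 53.
udp_sockets[("192.0.2.10", 53)] = "dns_socket"

# Two clients connected to the same server port get two different TCP sockets.
tcp_sockets[("198.51.100.1", 40000, "192.0.2.10", 80)] = "conn_A"
tcp_sockets[("198.51.100.2", 40000, "192.0.2.10", 80)] = "conn_B"
```

With this setup, any datagram addressed to `192.0.2.10:53` maps to `dns_socket` regardless of its source, while the two TCP segments map to `conn_A` and `conn_B` even though they share the destination port.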

Connectionless Transport - UDP

3-3 Connectionless Transport UDP

- features

- User Datagram Protocol, or UDP for short, provides “best effort” service, thus segments can be lost or delivered out of order

- UDP is connectionless, meaning the sender and receiver do not perform a handshake or share any state. Since there is no connection, there is no connection-establishment delay

- As UDP provides relatively simple service, the header size is also relatively small

- UDP provides no congestion control, so it can keep sending as fast as the application wants even on a congested network, whereas TCP would throttle its sending rate

- All of the characteristics above make UDP suitable for applications such as streaming services, DNS, SNMP and so on

- UDP segment header

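- Header includes source port #, destination port #, length of the UDP segment in bytes (header plus application data) and checksum field

- A pseudo header containing the source and destination IP addresses is conceptually prefixed to the UDP header when the checksum is computed; it is not transmitted as part of the segment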

- checksum

- Its goal is to detect errors in transmitted segment

- Sender treats the contents of the UDP segment (including header fields and IP addresses) as a sequence of 16-bit integers. It adds all the integers, wrapping any carry out of the top bit back into the sum, and the one's complement of that sum is the checksum value

- Receiver computes the checksum of the received segment to see if it matches the checksum field

- Checksum is not foolproof however, as the two values turning out to be equal does not necessarily mean the received segment has not been corrupted

- HTTP/3 runs on top of UDP and adds the services UDP lacks, such as reliability, congestion control and security, in the application layer
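The checksum computation described above can be sketched in a few lines. This is a minimal illustration, not a full UDP implementation: it checksums whatever bytes it is given and leaves out building the pseudo header; the function names are made up for this example.

```python
def ones_complement_sum16(data: bytes) -> int:
    """Add the data as 16-bit big-endian integers, wrapping carries around."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length data with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]
        total = (total & 0xFFFF) + (total >> 16)  # fold the carry back in
    return total


def internet_checksum(data: bytes) -> int:
    """One's complement of the one's-complement sum."""
    return ~ones_complement_sum16(data) & 0xFFFF


# Two 16-bit words: 0xE666 + 0xD555 wraps to 0xBBBC, so the checksum is 0x4443.
example = bytes.fromhex("e666d555")
checksum = internet_checksum(example)

# Swapping the two words changes the data but not the checksum,
# which is one way an error can go undetected.
swapped = bytes.fromhex("d555e666")
undetected = internet_checksum(swapped) == checksum
```

The last two lines show why the check is not foolproof: addition is order-independent, so reordered 16-bit words produce the same checksum.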

Principles of Reliable Data Transfer

3-4 Principles of Reliable Data Transfer Part1

- abstraction and implementation

- While the process of sending data from one point to another is abstracted as a uni-directional, reliable service, it is really implemented as a bi-directional message exchange (NOT just one-way data transfer) between the two sides of a reliable data transfer protocol, running over an unreliable channel provided by the network layer

- Complexity of the reliable data transfer protocol depends on the characteristics of the unreliable channel (whether it can lose, corrupt or reorder data)

- Sender and receiver do not know the state of each other or what’s happening at the channel unless they are communicating via messages

- Reliable Data Transfer Protocol

- Finite State Machines are used to specify the state of sender and receiver

- If the underlying channel is perfectly reliable, the FSMs on each end only need to send data into and read data from the channel. For example, on the sending side, the layer above hands data to rdt. The sender's only actions then are to turn the data into a packet and send it through the underlying channel

- If the underlying channel can flip bits in a packet, rdt 2.0 is used to recover from errors. Rdt 2.0 uses acknowledgements (ACKs) and negative acknowledgements (NAKs) whereby the receiver explicitly tells the sender whether a packet was received okay or not. Sender retransmits the packet on receipt of a NAK. It is a stop-and-wait protocol: the sender sends one packet and then waits for the receiver's response before sending the next

- Rdt 2.0

- Sender goes from one state to another: waiting for call from above for another rdt send, to waiting for ACK/NAK. If it gets an ACK, it falls back to the initial state whereas a NAK response will call for a retransmit of the packet till it gets an ACK back

- Receiver will be waiting for the arrival of the packet and send out an ACK or NAK accordingly. If the data is found out to be not corrupt, it gets extracted and delivered up to the application layer and then the receiver sends an ACK back to the sender

- ACK/NAK can be corrupted during communication, and rdt 2.0 cannot handle this. The fix is for the sender to retransmit the current packet whenever the ACK/NAK is corrupted, and to add a sequence # to each packet so that the receiver can detect and discard duplicate packets

- Rdt 2.1

- Sender is in one of four possible states: two of them are the same as those of rdt 2.0 but with sequence # 0, and the remaining two are the same but with sequence # 1. The initial state is waiting for call 0 from above. After sending packet 0 it waits for ACK or NAK 0, and on receiving an uncorrupted ACK it transitions to waiting for call 1 from above. After sending packet 1 and receiving its ACK, it goes back to the initial state and the cycle repeats

- Receiver has two possible states: waiting for 0 from below and waiting for 1 from below. When it receives packet 0 while waiting for 1, the packet is a duplicate, which means the sender did not correctly receive the earlier ACK. The receiver sends the ACK again and discards the duplicate without delivering it

- TCP is NAK-free: it uses only ACKs along with a checksum
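The rdt 2.1 receiver behavior described above can be sketched as a small class. This is an illustrative model only: the class name, the `corrupt` flag (standing in for a failed checksum) and the string return values are assumptions made for the example.

```python
class Rdt21Receiver:
    """Toy rdt 2.1 receiver with alternating sequence #s 0 and 1."""

    def __init__(self) -> None:
        self.expected_seq = 0   # state: waiting for 0 (or 1) from below
        self.delivered = []     # data passed up to the application

    def receive(self, seq: int, data: str, corrupt: bool = False) -> str:
        if corrupt:
            return "NAK"        # checksum failed: ask for a retransmission
        if seq == self.expected_seq:
            self.delivered.append(data)
            self.expected_seq ^= 1  # flip between 0 and 1
        # A packet with the other sequence # is a duplicate: it is ACKed
        # again but not delivered a second time.
        return "ACK"


receiver = Rdt21Receiver()
replies = [
    receiver.receive(0, "a"),                # new packet 0
    receiver.receive(0, "a"),                # duplicate of packet 0
    receiver.receive(1, "b", corrupt=True),  # corrupted packet 1
    receiver.receive(1, "b"),                # retransmitted packet 1
]
```

After these four calls only `"a"` and `"b"` have been delivered, once each, even though packet 0 arrived twice.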

3-4 Principles of Reliable Data Transfer Part2

- Rdt 3.0

- The underlying channel can both corrupt and lose packets (data or ACKs)

- Sender starts a timer when it sends a packet and waits a reasonable amount of time for the ACK. If no ACK arrives before the timer expires (timeout), it retransmits the packet. When it receives the expected ACK, the timer stops. Corrupt packets are treated the same as lost packets: the sender does nothing and a later timeout retransmit recovers from them. An ACK with the wrong sequence number, or any ACK received while waiting for a call from above, is ignored. When an ACK is lost or delayed, the sender resends the packet at timeout and the receiver uses the sequence # to discard the duplicate
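The timeout-driven retransmission of rdt 3.0 can be sketched as a deterministic simulation. This is a simplification under stated assumptions: there is no real timer or network, the hypothetical `outcomes` argument scripts what happens to each transmission attempt, and anything other than `"ok"` is treated as "no valid ACK arrived before the timeout".

```python
from typing import Iterable, List, Tuple


def rdt30_send(packets: List[str], outcomes: Iterable[str]) -> List[Tuple[int, str]]:
    """Return the log of (sequence #, data) transmissions made by the sender.

    outcomes yields "ok", "lost" or "corrupt" for each transmission attempt;
    once it is exhausted, every remaining attempt is assumed to succeed.
    """
    outcome_iter = iter(outcomes)
    log: List[Tuple[int, str]] = []
    seq = 0
    for data in packets:
        while True:
            log.append((seq, data))           # (re)transmit and start the timer
            result = next(outcome_iter, "ok")
            if result == "ok":                # correct ACK arrived: stop the timer
                break
            # "lost" or "corrupt": nothing usable arrives, the timer expires,
            # and the loop retransmits the same packet.
        seq ^= 1                              # alternate between 0 and 1
    return log


log = rdt30_send(["a", "b"], ["lost", "ok", "corrupt", "ok"])
```

Here packet `"a"` is sent twice because its first attempt is lost, and packet `"b"` is sent twice because its first attempt (or its ACK) is corrupted.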

- Pipelined Protocols

- Stop-and-wait behavior of the protocol limits how much of the underlying channel's capacity can be used. A more efficient alternative is a pipelined protocol, in which the sender allows multiple in-flight, yet-to-be-acknowledged packets. The range of sequence numbers must be increased in this case, and packets must be buffered at the sender and/or receiver

- In the Go-Back-N protocol, the sender can transmit up to N unacknowledged packets in a row. The sender keeps a window of size up to N over the sequence #s that tracks the state of each packet (whether it has been sent or ACKed). When a timeout occurs for the oldest unACKed packet, the sender retransmits that packet and all the packets with higher sequence #s in the window. Upon receiving a packet, the receiver sends a cumulative ACK for the highest in-order sequence # it has correctly received so far

- In the Selective Repeat protocol, the receiver individually acknowledges every correctly received packet, and it can buffer an out-of-order packet and deliver it to the upper layer later, once the missing packets arrive. The sender maintains a timer for each unACKed packet and retransmits only that packet when its timer expires
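The Go-Back-N sender's window bookkeeping can be sketched as follows. This is a minimal model under simplifying assumptions: sequence #s grow without wrapping, there is no real timer (the caller invokes `on_timeout`), and the class and attribute names are chosen for this example.

```python
class GoBackNSender:
    """Toy Go-Back-N sender that only tracks sequence #s."""

    def __init__(self, window_size: int) -> None:
        self.window_size = window_size
        self.base = 0        # oldest unACKed sequence #
        self.next_seq = 0    # next sequence # to use
        self.sent = []       # log of every transmitted sequence #

    def send(self) -> bool:
        """Send one new packet if the window is not full."""
        if self.next_seq < self.base + self.window_size:
            self.sent.append(self.next_seq)
            self.next_seq += 1
            return True
        return False         # window full: the caller must wait

    def on_ack(self, ack_num: int) -> None:
        """Cumulative ACK: everything up to and including ack_num is received."""
        if ack_num >= self.base:
            self.base = ack_num + 1

    def on_timeout(self) -> None:
        """Resend the oldest unACKed packet and everything sent after it."""
        for seq in range(self.base, self.next_seq):
            self.sent.append(seq)


sender = GoBackNSender(window_size=4)
results = [sender.send() for _ in range(5)]  # the fifth send is refused
sender.on_ack(1)                             # packets 0 and 1 are confirmed
sender.on_timeout()                          # packets 2 and 3 go out again
```

A Selective Repeat sender would differ in `on_timeout`: it would resend only the single packet whose timer expired rather than the whole outstanding range.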

TCP Reliability, Flow Control and Connection Management

3-5 TCP Reliability, Flow Control and Connection Management part1

- TCP segment structure

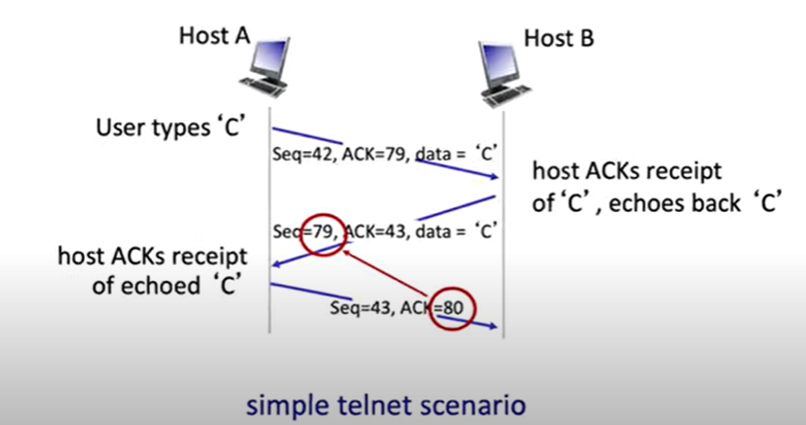

- The sequence # in the header is the byte-stream # of the first byte in the segment's data

- An ACK carries the sequence # of the next byte expected from the sender. It is cumulative in that the number indicates all bytes of the stream before that # have been received. This means that if a segment arrives while the ACK for an earlier segment is still pending, the receiver can send just one cumulative ACK that covers both

- TCP round trip time, timeout

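- Timeout interval is the estimated RTT plus a safety margin. The estimated RTT is an exponentially weighted moving average of recently measured times from segment transmission until ACK receipt, and the safety margin grows with how much those measurements vary

- TCP is usually implemented with a single retransmission timer, which tracks the oldest unACKed segment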
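The timeout computation can be sketched with the commonly recommended constants. The weights 0.125 and 0.25, the factor of 4 on the deviation, the initialization from the first sample and the update order follow the standard TCP retransmission timer specification (RFC 6298) as an assumption; the class and method names are made up for this example, and RFC details such as the minimum timeout and clock granularity are left out.

```python
class RttEstimator:
    """Toy estimator for the TCP timeout interval (times in milliseconds)."""

    ALPHA = 0.125  # weight of the newest sample in the RTT average
    BETA = 0.25    # weight of the newest sample in the deviation

    def __init__(self, first_sample: float) -> None:
        self.estimated_rtt = first_sample
        self.dev_rtt = first_sample / 2

    def update(self, sample_rtt: float) -> None:
        # The deviation is updated first, using the previous RTT estimate.
        self.dev_rtt = ((1 - self.BETA) * self.dev_rtt
                        + self.BETA * abs(sample_rtt - self.estimated_rtt))
        self.estimated_rtt = ((1 - self.ALPHA) * self.estimated_rtt
                              + self.ALPHA * sample_rtt)

    def timeout_interval(self) -> float:
        # Estimated RTT plus a safety margin of four deviations.
        return self.estimated_rtt + 4 * self.dev_rtt


estimator = RttEstimator(first_sample=100.0)
estimator.update(100.0)  # a steady sample shrinks the safety margin
estimator.update(200.0)  # a slow sample raises both the estimate and the margin
```

Because old samples decay exponentially, a single slow measurement nudges the estimate rather than replacing it, while the deviation term widens the margin when measurements fluctuate.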

- TCP fast retransmit

- If the sender receives 3 additional (duplicate) ACKs for the same data, it resends the unACKed segment with the smallest sequence # right away instead of waiting for the timeout, since that segment has most likely been lost
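The duplicate-ACK counting behind fast retransmit can be sketched as a small counter. This is an illustration only: the class name and the convention of returning the sequence # to resend (or `None`) are assumptions for the example.

```python
from typing import Optional


class FastRetransmitDetector:
    """Counts duplicate ACKs and signals when to retransmit early."""

    def __init__(self) -> None:
        self.last_ack: Optional[int] = None
        self.dup_count = 0

    def on_ack(self, ack_num: int) -> Optional[int]:
        """Return the sequence # to retransmit, or None if nothing is needed."""
        if ack_num == self.last_ack:
            self.dup_count += 1
            if self.dup_count == 3:
                # The ACK # is the next byte the receiver expects, so the
                # segment starting at that byte is presumed lost.
                return ack_num
        else:
            self.last_ack = ack_num
            self.dup_count = 0
        return None


detector = FastRetransmitDetector()
decisions = [detector.on_ack(n) for n in [100, 100, 100, 100]]
```

The first ACK for byte 100 is the original and the next three are duplicates, so the retransmission is triggered on the fourth arrival.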

3-5 TCP Reliability, Flow Control and Connection Management part2

-

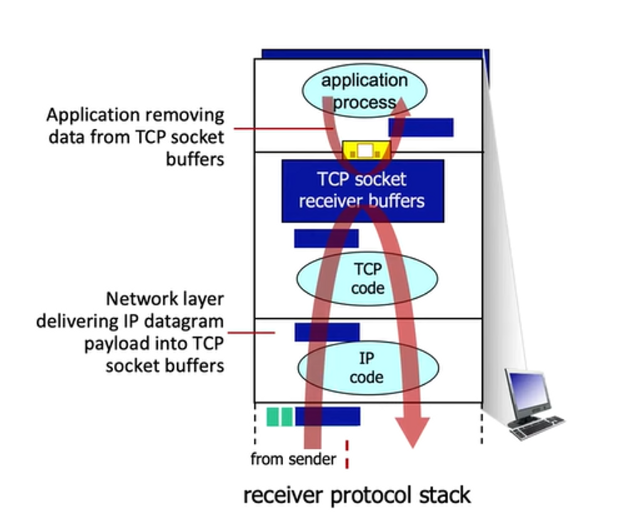

TCP flow control

- When a segment is brought up to the transport layer, its payload is removed from the segment and written into the socket buffers. Later, the application program performs a socket read, and that is when the data is removed from the socket buffers. Because the application may read more slowly than data arrives, flow control lets the receiver control the sender so that the sender won't overflow the receiver's buffer by transmitting too much, too fast

- Receiver notifies the sender how much free space there is in the buffers so that the sender knows the limit on the amount of unACKed data it can have in flight. This value is carried in the rwnd field in the TCP header
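The bookkeeping behind the advertised window can be sketched with two small functions. The variable names follow the usual textbook convention (`last_byte_rcvd`, `last_byte_read`, and so on) and are assumptions here, as are the buffer sizes in the example.

```python
def receive_window(rcv_buffer: int, last_byte_rcvd: int, last_byte_read: int) -> int:
    """Free buffer space the receiver advertises as rwnd."""
    buffered = last_byte_rcvd - last_byte_read  # received but not yet read
    return rcv_buffer - buffered


def sendable_bytes(rwnd: int, last_byte_sent: int, last_byte_acked: int) -> int:
    """How many more bytes the sender may put in flight without overflowing."""
    in_flight = last_byte_sent - last_byte_acked
    return max(0, rwnd - in_flight)


# A 4096-byte buffer holding 2000 unread bytes leaves 2096 bytes free.
rwnd = receive_window(rcv_buffer=4096, last_byte_rcvd=3000, last_byte_read=1000)

# With 1000 bytes already in flight, the sender may send 1096 more.
allowed = sendable_bytes(rwnd, last_byte_sent=5000, last_byte_acked=4000)
```

When the application reads from the socket, `last_byte_read` advances, the advertised window grows, and the sender is allowed to send more.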

- TCP Connection Management

- TCP is connection-oriented in that the sender and the receiver share a lot of state (such as initial sequence numbers and buffer sizes), and they need to agree on it through a handshake before exchanging data

- A simple handshake is done by the client reaching out to the server (request) and the server answering back (accept) to establish a connection. This 2-way handshake doesn't always work in network settings because of variable delays, retransmissions caused by message loss, message reordering and other issues

- TCP uses a 3-way handshake. Both the client and the server create a TCP socket, and the server enters the LISTEN state. Then, the client connects to the server by sending a SYN segment carrying its initial sequence number and enters the SYN SENT state. Upon receiving it, the server enters the SYN RECEIVED state (not yet established) and sends a SYNACK segment back, which carries the server's own initial sequence number and acknowledges the client's SYN. When the client receives the SYNACK, it enters the ESTABLISHED state and sends an ACK to the server. When the server receives that ACK, it also enters the ESTABLISHED state, and that's when the connection is established on both sides

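- To close a connection, one side sends a segment with the FIN bit set and the other side responds with an ACK, which can be combined with its own FIN. After acknowledging that FIN, the side that closed first waits for a timeout period and then closes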
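The segments exchanged in the 3-way handshake, and the state each side ends up in, can be sketched as a scripted trace. This is a toy model with no networking, loss or timers: the dictionary layout and state labels are chosen for this example, and the initial sequence numbers are arbitrary inputs.

```python
from typing import Dict, List, Tuple


def three_way_handshake(client_isn: int, server_isn: int) -> Tuple[List[dict], Dict[str, str]]:
    """Return the three handshake segments and the final state of each side."""
    state = {"client": "CLOSED", "server": "LISTEN"}
    segments: List[dict] = []

    # Step 1: the client sends a SYN carrying its initial sequence #.
    segments.append({"from": "client", "flags": "SYN", "seq": client_isn})
    state["client"] = "SYN_SENT"

    # Step 2: the server replies with a SYNACK that carries its own initial
    # sequence # and acknowledges the client's SYN (ack = client_isn + 1).
    state["server"] = "SYN_RCVD"
    segments.append({"from": "server", "flags": "SYN+ACK",
                     "seq": server_isn, "ack": client_isn + 1})

    # Step 3: the client acknowledges the server's SYN and is established.
    state["client"] = "ESTABLISHED"
    segments.append({"from": "client", "flags": "ACK",
                     "seq": client_isn + 1, "ack": server_isn + 1})

    # The server is established once that final ACK arrives.
    state["server"] = "ESTABLISHED"
    return segments, state


segments, final_state = three_way_handshake(client_isn=1000, server_isn=5000)
```

Each SYN consumes one sequence number, which is why both acknowledgement numbers are the peer's initial sequence number plus one.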

Principles of Congestion Control

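- Congestion is when too many sources send too much data too fast for the network to handle. Long delays (queueing in router buffers) and packet loss (buffer overflow at routers) can happen during congestion. It differs from flow control, which is about one sender sending data too fast for one receiver

- There are two broad approaches to congestion control. TCP mainly uses end-end congestion control, in which congestion is inferred from observed loss and delay, and it can also use network-assisted congestion control, in which routers give explicit feedback to the hosts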