I took a data structure course in the fall of 2021 and it's been a year since then. Recently, I was told by a co-worker at my internship company, who has been a software developer for about more than 10 years, that it is always the best to make the basics as clear and strong as possible. So I decided to write some posts about the basics - data structure, operating system and computer architecture etc. Each post won't be so long so that I can stay focused to finish posting. Let's start on my first BackToBasics post.

Inductive proof

The very first thing that I ran into was the induction. It is widely explained as dominos.

For a predicate (e.g.),

1) Set a base case and prove the base case.

2) Show that the predicate holds for n+1 when the predicate holds for n (induction step).

What is data structures?

An efficient way of organizing data for computer.

Why do we need data structures?

With appropriate algorithms, we can have better performances when it comes to solving problems.

Performance, here by, means how fast and small your programs are (and, of course, always must be correct).

How to measure performance?

Usually by theoretical analysis because experimental measurement can differ by many factors, for example, hardware, language, compiler and OS etc.

Big-O notation

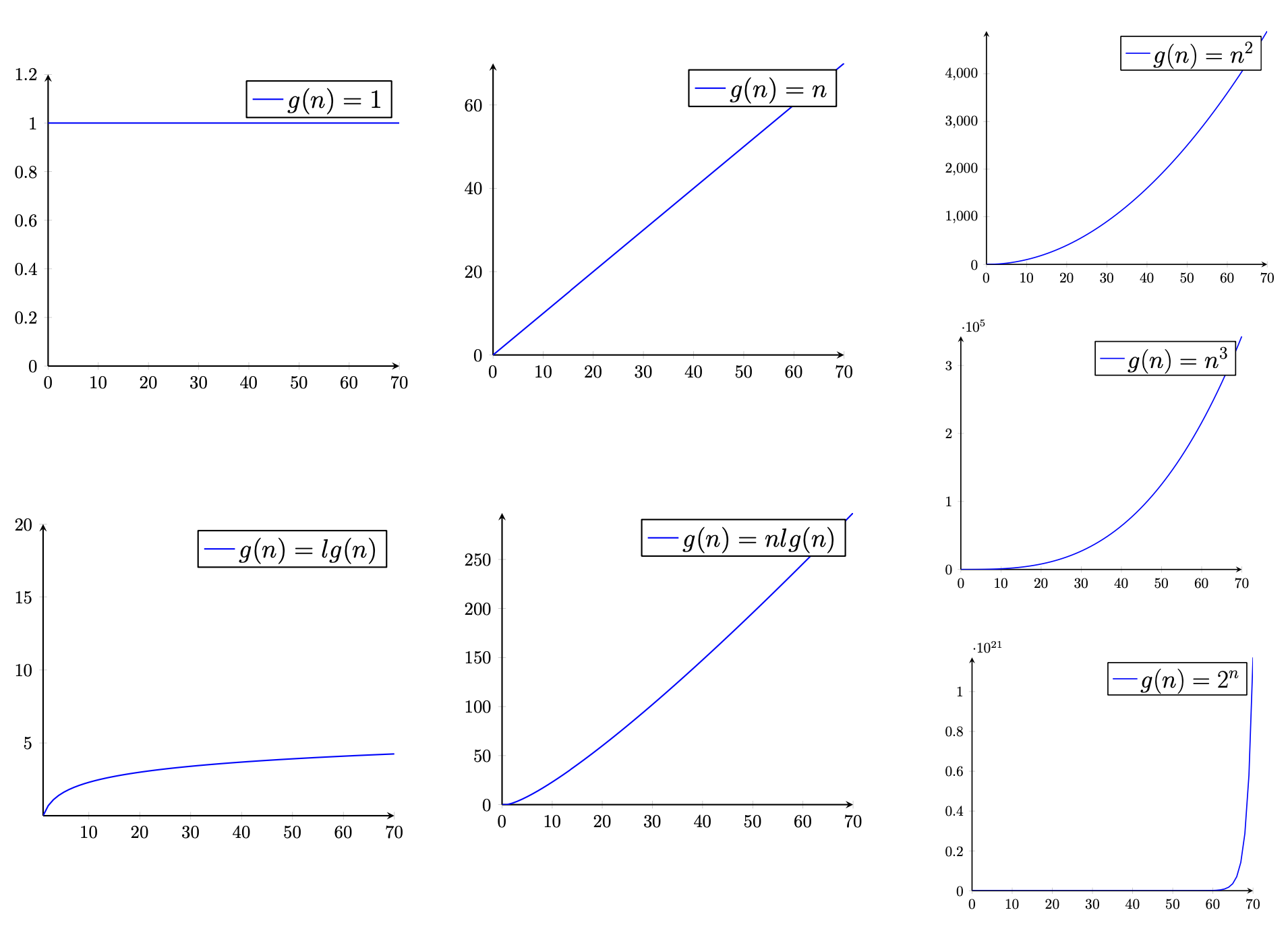

One of the most popular notation when it comes to measuring performances and denoting time complexity. n means the number of inputs from now on.

1) : The fastest time complexity. a.k.a constant time complexity, which means the run time is irrelevant to the number of inputs. It does not mean some operation exactly has to be 1 computation.

2) : Very efficient. The base of logarithm is always 2 without specific mentions.

3) : a.k.a linear time complexity.

4) : The fastest time complexity of sorting algorithms without any additional information.

5) : Quadratic time complexity.

6) : Cubic time complexity.

7) : Exponential time complexity.

Mathematical definition of Big-O notation

Given functions and ,

we say that is if there are positive constants and such that

for

It simply means that is bounded by .

Big-Omega

is if

for

Big-Theta

is if

for and