Introduction

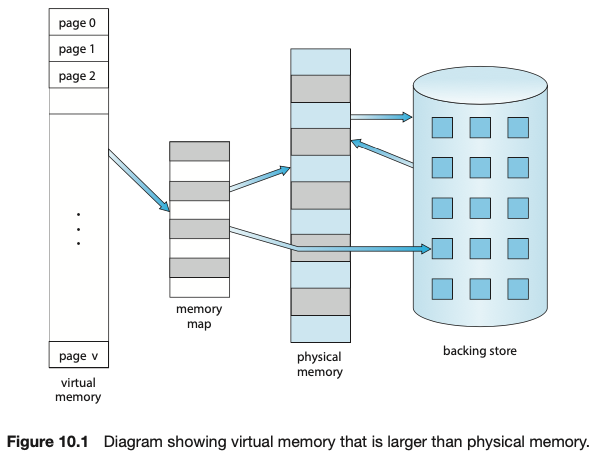

💡 Virtual Memory

- Programmer's view of memory

- Each process has its own virtual memory

- A linear array of bytes addressed by the virtual address

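✔️ Why use virtual memory?

- Programming is easier: the OS takes responsibility for memory management, so programmers no longer need to rework overlays to fit the partition size.

- The entire process does not have to be loaded; only part of the process image is brought into memory and executed.

- The virtual address space is made large enough that programmers can treat memory as (almost) infinite.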

💡 Physical Memory

- Machine's physical memory (DRAM)

- Caches are part of the physical memory hierarchy (they hold copies of physical memory contents)

- Also called main memory

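✔️ Virtual address : the address a program uses, i.e., the address of an instruction or data item

✔️ Physical address : an address in DRAM

✔️ Page table : a data structure managed by the OS

✔️ TLB (MMU) : Converting a virtual page number into a physical page number is called translation. The TLB is the hardware that caches page table entries. Apart from special (e.g., global) entries, it generally must be flushed on every context switch. Without it, the CPU would raise an exception on every translation so that the OS could look up the mapping, which would be far too slow!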

Virtual Memory

Functions

- Large address space

- Easy to program

- Provide the illusion of infinite amount of memory

- Program (including both code and data) can exceed the main memory capacity

- Processes partially resident in memory

- Protection

- Access rights: read/modify/execute permission

- Each segment/page has its own access rights

- Privilege level

- User-level pages versus system-level pages

- Sharing

- Portability

- Increased CPU utilization

- More programs can run at the same time

Virtual memory requires the following functions

- Memory allocation (Placement)

- Any virtual page can be placed in any page frame

- Memory deallocation (Replacement)

- e.g., the LRU and Clock algorithms

- Memory mapping (Translation)

- Virtual address must be translated to physical address to access main memory and caches

Memory management

- Automatic movement of data between main memory and secondary storage

- Done by OS with the help of processor HW (TLB)

- Main memory contains only the most frequently used portions of a process's address space

- Illusion of infinite memory (the size of secondary storage) with an access time close to that of main memory

- Usually uses demand paging (whereas caches proactively prefetch)

- A page is brought in only on demand

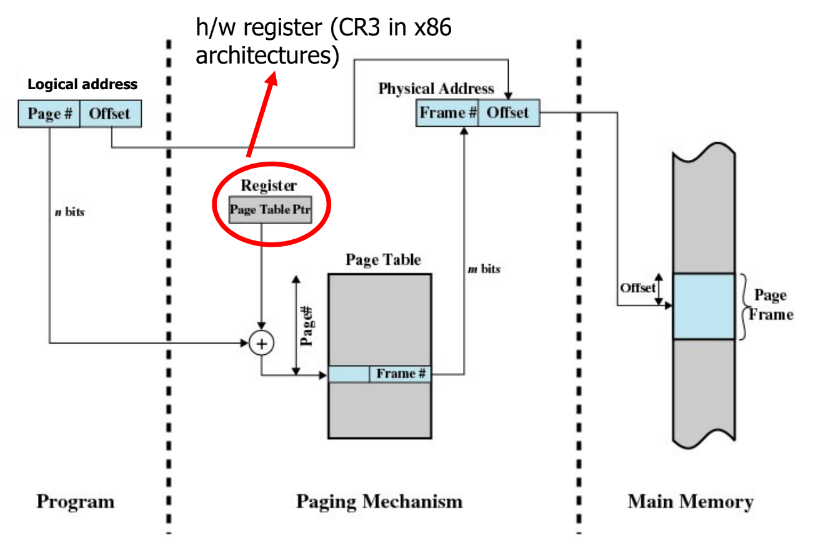

Paging

- Divide the address space into fixed-size pages

- VA consists of VPN, offset

- PA consists of PPN, offset

- Map a virtual page to a physical page frame at runtime

- Each process has its own page table

- The page table contains the mapping between VPN and PPN

- VPN is used as an index into the page table

- Page Table Entry (PTE) contains

- PPN (Physical Page Number or Frame Number)

- Presence bit : 1 if this page is in main memory

- Modified bit : 1 if this page has been modified

- Reference bits : reference statistics used for page replacement

- Access control : read/write/execute permissions

- Privilege level : user-level page versus system-level page

- Disk address : location of the page in secondary storage
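A minimal sketch of one-level (linear) translation, assuming 4 KiB pages and a Python dict standing in for the page table. The `PTE` fields mirror the list above (access control is simplified to a single writable flag); the names `PTE`, `translate`, `PageFault`, and `ProtectionFault` are hypothetical. It shows the VPN/offset split, the VPN used as an index, and the PA formed from PPN and offset.

```python
from dataclasses import dataclass

PAGE_SIZE = 4096   # assumption: 4 KiB pages
OFFSET_BITS = 12   # log2(PAGE_SIZE)

@dataclass
class PTE:  # hypothetical page table entry
    ppn: int = 0              # physical page (frame) number
    present: bool = False     # presence bit: page is in main memory
    modified: bool = False    # modified (dirty) bit
    referenced: bool = False  # reference bit, used by page replacement
    writable: bool = False    # simplified access control
    user: bool = True         # privilege level: user-level vs system-level page

class PageFault(Exception):
    pass

class ProtectionFault(Exception):
    pass

def translate(page_table, va, write=False, user_mode=True):
    vpn = va >> OFFSET_BITS           # upper bits: virtual page number
    offset = va & (PAGE_SIZE - 1)     # lower bits: offset within the page
    pte = page_table.get(vpn)         # VPN indexes the page table
    if pte is None or not pte.present:
        raise PageFault(vpn)          # OS must bring the page in
    if write and not pte.writable:
        raise ProtectionFault(vpn)    # access-rights violation
    if user_mode and not pte.user:
        raise ProtectionFault(vpn)    # privilege-level violation
    pte.referenced = True
    if write:
        pte.modified = True
    return (pte.ppn << OFFSET_BITS) | offset  # PA = PPN followed by the same offset

page_table = {0x2: PTE(ppn=0x7, present=True, writable=True)}
print(hex(translate(page_table, 0x2ABC)))  # 0x7abc
```

The offset passes through unchanged; only the page number is translated.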

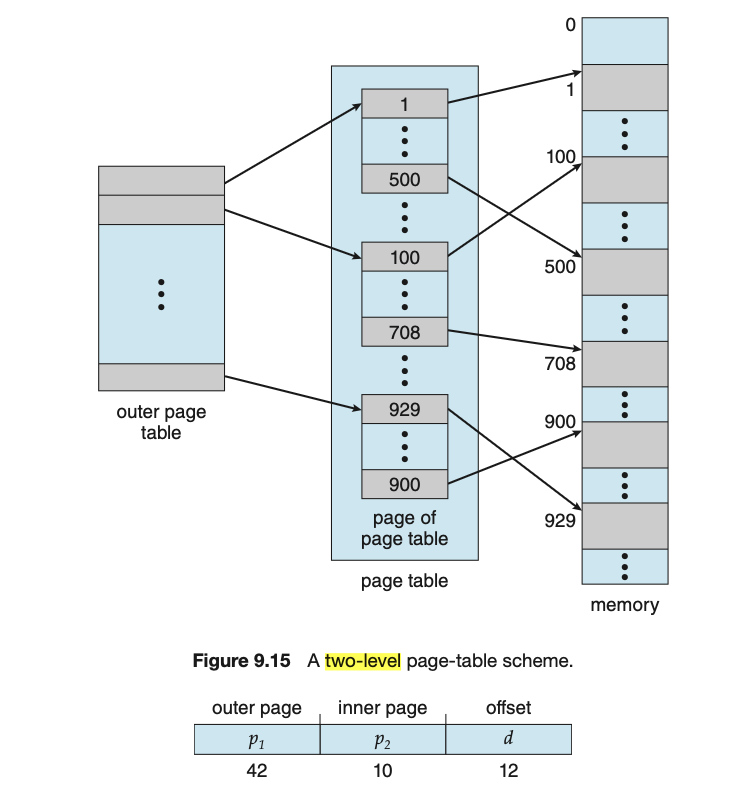

Page table organization

- Linear : one PTE per virtual page

- Hierarchical : tree-structured page table

- Page Directory 👉 Page Table 👉 Page frame (physical memory)

- Inverted : PTEs only for pages in main memory. Regardless of the number of processes, only the pages currently resident in memory are tracked, i.e., the table is organized by frame.

- Hashing pairs each page with its frame. On a hash collision, the next entry is linked into a chain.

- Page table entries are dynamically allocated as needed
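The inverted organization can be sketched as follows, assuming one table entry per physical frame, a hash of `(pid, vpn)` selecting a bucket, and collisions resolved by chaining frames together. The class and method names are hypothetical, and eviction of an old mapping from a reused frame is omitted for brevity.

```python
class InvertedPageTable:
    """Hypothetical inverted page table: one entry per physical frame."""

    def __init__(self, num_frames):
        self.num_frames = num_frames
        self.owner = [None] * num_frames          # frame -> (pid, vpn) currently held
        self.next_in_chain = [None] * num_frames  # collision chain: frame -> next frame
        self.bucket_head = [None] * num_frames    # hash bucket -> first frame in chain

    def _hash(self, pid, vpn):
        return hash((pid, vpn)) % self.num_frames

    def insert(self, pid, vpn, frame):
        bucket = self._hash(pid, vpn)
        self.owner[frame] = (pid, vpn)
        # Push the frame at the head of the bucket's chain.
        self.next_in_chain[frame] = self.bucket_head[bucket]
        self.bucket_head[bucket] = frame

    def lookup(self, pid, vpn):
        frame = self.bucket_head[self._hash(pid, vpn)]
        while frame is not None:                  # walk the collision chain
            if self.owner[frame] == (pid, vpn):
                return frame
            frame = self.next_in_chain[frame]
        return None                               # not resident: page fault

ipt = InvertedPageTable(num_frames=8)
ipt.insert(pid=1, vpn=0x10, frame=3)
ipt.insert(pid=2, vpn=0x10, frame=5)  # same VPN, different process
print(ipt.lookup(1, 0x10), ipt.lookup(2, 0x10), ipt.lookup(1, 0x11))  # 3 5 None
```

The table's size depends only on the number of frames, not on how many processes exist, which is the point of the inverted design.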

TLB (MMU; Memory Management Unit)

Since the TLB is also a cache, there are TLB hits and TLB misses.

Virtual memory can be supported (efficiently) only if the TLB is inside the CPU.

✔️ Translation Lookaside Buffer

- Cache of page table entries (PTEs)

- On TLB hit, can do virtual to physical translation without accessing the page table

- On a TLB miss (exception), the page table must be searched for the missing entry
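A small software model of the hit/miss behavior, assuming a fully associative TLB with LRU replacement and a dict as the page table. The `TLB` class is hypothetical; real TLBs differ in size, associativity, and replacement policy.

```python
from collections import OrderedDict

class TLB:
    """Hypothetical fully associative TLB caching VPN -> PPN mappings."""

    def __init__(self, capacity=4):
        self.capacity = capacity
        self.entries = OrderedDict()  # vpn -> ppn, least recently used first
        self.hits = 0
        self.misses = 0

    def lookup(self, vpn, page_table):
        if vpn in self.entries:               # TLB hit: no page table access
            self.hits += 1
            self.entries.move_to_end(vpn)     # mark as most recently used
            return self.entries[vpn]
        self.misses += 1                      # TLB miss: consult the page table
        ppn = page_table[vpn]                 # KeyError stands in for a page fault
        if len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        self.entries[vpn] = ppn
        return ppn

    def flush(self):
        self.entries.clear()                  # e.g., on a context switch

page_table = {0: 5, 1: 9, 2: 4}
tlb = TLB(capacity=2)
for vpn in [0, 1, 0, 2, 1]:
    tlb.lookup(vpn, page_table)
print(tlb.hits, tlb.misses)  # 1 4
```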

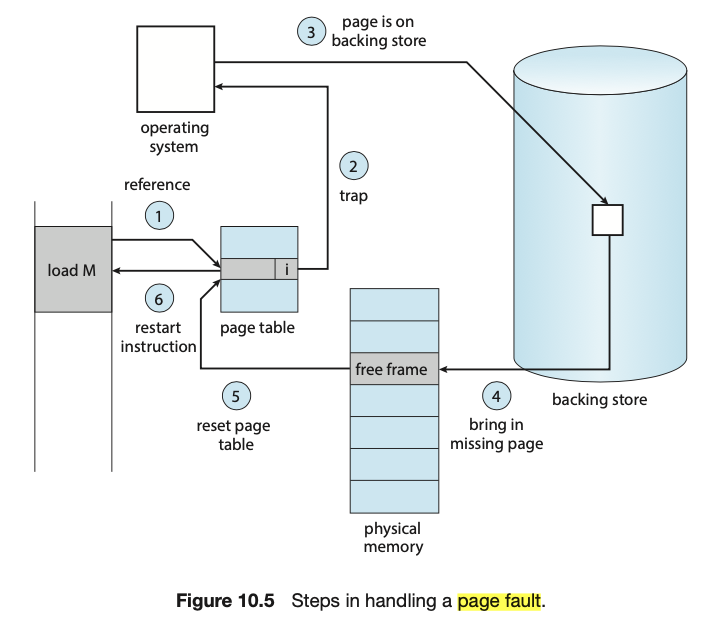

Page fault

💡 But what happens if the process tries to access a page that was not brought into memory? Access to a page marked invalid causes a page fault.

The paging hardware, in translating the address through the page table, will notice that the invalid bit is set, causing a trap to the operating system. This trap is the result of the operating system’s failure to bring the desired page into memory. The procedure for handling this page fault is straightforward (Figure 10.5):

- We check an internal table (usually kept with the process control block) for this process to determine whether the reference was a valid or an invalid memory access.

- If the reference was invalid, we terminate the process. If it was valid but we have not yet brought in that page, we now page it in.

- We find a free frame (by taking one from the free-frame list, for example).

- We schedule a secondary storage operation to read the desired page into the newly allocated frame.

- When the storage read is complete, we modify the internal table kept with the process and the page table to indicate that the page is now in memory.

- We restart the instruction that was interrupted by the trap. The process can now access the page as though it had always been in memory.
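The procedure above can be sketched as a toy simulation, assuming the disk read completes synchronously, a free frame is always available (no replacement), and dicts stand in for memory and secondary storage. `Process`, `handle_page_fault`, `access`, and `InvalidAccess` are hypothetical names.

```python
class InvalidAccess(Exception):
    """The reference lies outside the process's legal address space."""

class Process:
    def __init__(self, valid_pages):
        self.valid_pages = set(valid_pages)  # internal table: legal VPNs (kept with the PCB)
        self.page_table = {}                 # vpn -> frame, resident pages only

def handle_page_fault(proc, vpn, free_frames, disk, memory):
    # Check the internal table: was this a valid memory access?
    if vpn not in proc.valid_pages:
        raise InvalidAccess(vpn)    # invalid reference: the process is terminated
    frame = free_frames.pop()       # take a frame from the free-frame list
    memory[frame] = disk[vpn]       # read the page from secondary storage
    proc.page_table[vpn] = frame    # record that the page is now in memory

def access(proc, vpn, free_frames, disk, memory):
    while True:
        frame = proc.page_table.get(vpn)
        if frame is not None:       # page is resident: the access succeeds
            return memory[frame]
        handle_page_fault(proc, vpn, free_frames, disk, memory)
        # Looping around restarts the access that caused the fault.

proc = Process(valid_pages={0, 1})
disk = {0: "code page", 1: "data page"}
memory, free_frames = {}, [2, 3]
print(access(proc, 1, free_frames, disk, memory))  # data page
print(proc.page_table)                              # {1: 3}
```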

Page Size

Page sizes are trending larger, to reduce the overhead of bringing pages in.

- The smaller the page size, the smaller the amount of internal fragmentation

- However, more pages are required per process

- More pages per process means larger page tables

- For large programs, a portion of the page tables of active processes must be in secondary storage instead of main memory
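A quick calculation makes the trade-off concrete. It assumes a 32-bit virtual address space, a linear page table with 4-byte PTEs, and the common rule of thumb that internal fragmentation averages half a page per segment; all three are illustrative assumptions, not figures from the lecture.

```python
def page_table_bytes(va_bits, page_size, pte_bytes=4):
    """Size of a linear page table: one PTE per virtual page (assumed 4-byte PTEs)."""
    num_pages = 2**va_bits // page_size
    return num_pages * pte_bytes

def avg_internal_fragmentation(page_size):
    """Rule of thumb: the last page of a segment is, on average, half empty."""
    return page_size // 2

for size in (4 * 1024, 64 * 1024):
    print(f"{size // 1024} KiB pages: table = {page_table_bytes(32, size) // 1024} KiB, "
          f"avg waste = {avg_internal_fragmentation(size)} bytes")
# 4 KiB pages: table = 4096 KiB, avg waste = 2048 bytes
# 64 KiB pages: table = 256 KiB, avg waste = 32768 bytes
```

Growing the page 16 times shrinks the per-process table 16 times but multiplies the expected waste per segment by the same factor.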

Segmentation

✔️ Segmentation allows a programmer to view virtual memory as a collection of segments

✔️ Advantages

- Simplify the handling of growing data structures

- Facilitate sharing among processes

✔️ Segment Table Entry Contains

- Base address of the corresponding segment in main memory (its starting address in DRAM)

- Length of the segment (its range)

- Presence bit - 1 if this segment is in main memory

- Modified bit - 1 if this segment has been modified
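A sketch of how a segment table entry is used during translation, assuming addresses are given as (segment number, offset) pairs. `SegmentEntry`, `translate_segment`, and `SegmentFault` are hypothetical names; the fields follow the list above.

```python
from dataclasses import dataclass

@dataclass
class SegmentEntry:  # hypothetical segment table entry
    base: int               # starting address of the segment in DRAM
    length: int             # segment length in bytes
    present: bool = True    # presence bit
    modified: bool = False  # modified bit

class SegmentFault(Exception):
    pass

def translate_segment(seg_table, seg_no, offset, write=False):
    entry = seg_table[seg_no]
    if not entry.present:
        raise SegmentFault(f"segment {seg_no} is not in main memory")
    if not 0 <= offset < entry.length:  # the length bounds the legal offsets
        raise SegmentFault(f"offset {offset:#x} is outside segment {seg_no}")
    if write:
        entry.modified = True
    return entry.base + offset          # PA = base + offset

seg_table = {0: SegmentEntry(base=0x4000, length=0x1000)}
print(hex(translate_segment(seg_table, 0, 0x20)))  # 0x4020
```

Unlike paging, the physical address is formed by addition, and because segments vary in size the length check is required on every access.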

Virtual Memory Policies

💡 Key issues: Performance

- Minimize page faults

Fetch Policy

✔️ Demand paging

- Bring a page into main memory only on a page miss

- Generates many page faults when a process is first started

- The principle of locality suggests that as more and more pages are brought in, most future references will be to pages that have recently been brought in, and page faults should drop to a very low level

✔️ Prepaging

- Pages other than the one demanded by a page fault are brought in

- If the pages of a process are stored contiguously in secondary memory, it is more efficient to bring in a number of pages at one time

- Ineffective if extra pages are not referenced

Frame Locking


- When a frame is locked, the page currently stored in that frame should not be replaced

- OS kernel and key control structures are locked

- I/O buffers and time-critical areas may be locked

- Locking is achieved by associating a lock bit with each frame

Replacement Algorithms

✔️ Optimal

- Select the page for which the time to the next reference is the longest

✔️ LRU

- Select the page that has not been referenced for the longest time

✔️ FIFO

- Page that has been in memory the longest is replaced

✔️ Clock

- Associate a use bit with each frame

- When a page is first loaded or referenced, the use bit is set to 1

- Any frame with a use bit of 1 is passed over by the algorithm, and its use bit is reset to 0

- Page frames visualized as laid out in a circle
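The four policies can be compared by counting page faults on a sample reference string with 3 frames. This is a minimal sketch: the function names are hypothetical, fault counts include the initial faults that fill empty frames, and OPT is only computable here because the whole reference string is known in advance.

```python
from collections import deque

def opt(refs, n):
    frames, faults = [], 0
    for i, page in enumerate(refs):
        if page in frames:
            continue
        faults += 1
        if len(frames) < n:
            frames.append(page)
            continue
        future = refs[i + 1:]
        # Evict the page whose next reference is farthest away (or never comes).
        victim = max(frames, key=lambda p: future.index(p) if p in future else len(future))
        frames[frames.index(victim)] = page
    return faults

def lru(refs, n):
    frames, faults = [], 0  # ordered from least to most recently used
    for page in refs:
        if page in frames:
            frames.remove(page)
        else:
            faults += 1
            if len(frames) == n:
                frames.pop(0)  # evict the least recently used page
        frames.append(page)
    return faults

def fifo(refs, n):
    frames, faults = deque(), 0
    for page in refs:
        if page not in frames:
            faults += 1
            if len(frames) == n:
                frames.popleft()  # evict the page resident the longest
            frames.append(page)
    return faults

def clock(refs, n):
    frames, use = [None] * n, [0] * n  # frames laid out in a circle
    hand, faults = 0, 0
    for page in refs:
        if page in frames:
            use[frames.index(page)] = 1  # referenced: set the use bit
            continue
        faults += 1
        while use[hand] == 1:            # pass over frames with use bit 1, clearing it
            use[hand] = 0
            hand = (hand + 1) % n
        frames[hand] = page              # replace the first frame with use bit 0
        use[hand] = 1                    # newly loaded page: use bit set to 1
        hand = (hand + 1) % n
    return faults

refs = [2, 3, 2, 1, 5, 2, 4, 5, 3, 2, 5, 2]
for name, algo in [("OPT", opt), ("LRU", lru), ("FIFO", fifo), ("Clock", clock)]:
    print(name, algo(refs, 3))
# OPT 6, LRU 7, FIFO 9, Clock 8
```

On this string Clock lands between LRU and FIFO: it approximates LRU using only one bit per frame.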

Page Buffering

✔️ When a page is replaced, the page is not physically moved

- Instead, the PTE for this page is removed and placed in either the free or modified page list

- Free page list is a list of page frames available for reading in pages

- When a page is to be replaced, it remains in memory and its page frame is added to the tail of the free page list

- Thus, if the process references the page, it is returned to the resident set of that process at little cost

- When a page is to be read in, the page frame at the head of the list is used, destroying the page that was there

- Modified page list is a list of page frames that have been modified

- When a modified page is replaced, its page frame is added to the tail of the modified page list

- Modified pages are written out in clusters rather than one at a time, significantly reducing the number of I/O operations
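A sketch of the two lists, assuming each list entry is a hypothetical `(frame, page)` pair and that a write-out cluster holds up to 4 pages (an arbitrary choice). `PageBuffer` and its methods are illustrative names, not an OS interface.

```python
from collections import deque

class PageBuffer:
    """Hypothetical page-buffering bookkeeping; entries are (frame, page) pairs."""

    def __init__(self, frames):
        self.free = deque((f, None) for f in frames)  # head is reused first
        self.modified = deque()                        # dirty pages awaiting write-out
        self.write_ops = 0                             # clustered I/O operations issued

    def evict(self, page, frame, dirty):
        # The page is not moved: its frame just joins the tail of a list.
        target = self.modified if dirty else self.free
        target.append((frame, page))

    def reclaim(self, page):
        # If the evicted page is referenced again while still buffered,
        # hand its frame back without any disk read.
        for lst in (self.free, self.modified):
            for entry in lst:
                if entry[1] == page:
                    lst.remove(entry)
                    return entry[0]
        return None

    def allocate(self):
        # Reading in a new page uses the frame at the head of the free list,
        # destroying whatever page was buffered there.
        frame, _old_page = self.free.popleft()
        return frame

    def flush_modified(self, cluster_size=4):
        # Write modified pages out in clusters, then make their frames free.
        while self.modified:
            n = min(cluster_size, len(self.modified))
            cluster = [self.modified.popleft() for _ in range(n)]
            self.write_ops += 1  # one I/O operation for the whole cluster
            self.free.extend(cluster)

pb = PageBuffer(frames=[0, 1])
pb.evict("A", frame=5, dirty=False)
pb.evict("B", frame=6, dirty=True)
pb.evict("C", frame=7, dirty=True)
print(pb.reclaim("A"))  # 5: page A is recovered at little cost
pb.flush_modified()
print(pb.write_ops)     # 1: B and C are written in a single cluster
```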

Page Fault Frequency (PFF)

If page faults occur too often, the working set is enlarged; if they occur too rarely, the working set is shrunk.

✔️ Requires a use bit to be associated with each page in memory

- Bit is set to 1 when that page is accessed

- When a page fault occurs, the OS notes the virtual time since the last page fault for that process

- If the amount of time since the last page fault is less than a threshold, then a page is added to the working set of the process

- Otherwise, discard all pages with a use bit of 0 and shrink the working set accordingly. At the same time, reset the use bit on the remaining pages

- The strategy can be refined by using 2 thresholds: an upper threshold is used to trigger a growth in the working set size, while a lower threshold is used to trigger a shrink in the working set size

✔️ Does not perform well during the transient periods when there is a shift to a new locality
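The single-threshold rule can be sketched for one process, assuming virtual time is supplied by the caller and the resident set starts empty. `PFFResidentSet` and its threshold parameter are hypothetical; the dict holds the set these notes call the working set.

```python
class PFFResidentSet:
    """Hypothetical PFF bookkeeping for one process; times are virtual times."""

    def __init__(self, threshold):
        self.threshold = threshold  # minimum gap between faults before shrinking
        self.use_bits = {}          # resident page -> use bit
        self.last_fault = 0

    def reference(self, page, vtime):
        if page in self.use_bits:
            self.use_bits[page] = 1  # set the use bit on every access
            return False             # no fault
        if vtime - self.last_fault >= self.threshold:
            # Faults are rare: discard pages whose use bit is 0 and
            # reset the use bit of the pages that remain.
            self.use_bits = {p: 0 for p, bit in self.use_bits.items() if bit == 1}
        # In both cases the faulting page is added to the resident set.
        self.use_bits[page] = 1
        self.last_fault = vtime
        return True                  # page fault

rs = PFFResidentSet(threshold=3)
for t, page in [(0, 1), (1, 2), (2, 3), (3, 1), (7, 4), (8, 1), (12, 5)]:
    rs.reference(page, t)
print(sorted(rs.use_bits))  # [1, 4, 5]
```

Pages 2 and 3 survive the first shrink because their use bits were still set from loading, and are discarded only at the next widely spaced fault; this lag illustrates why PFF reacts slowly when the process shifts to a new locality.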

Multiprogramming

Having too many or too few processes is a problem!

If there are too many processes, each process's working set shrinks 👉 page faults recur within a process 👉 thrashing

✔️ Load Control

- Determine the number of processes that will be resident in main memory

- This number is called multiprogramming level

✔️ Too few processes can lead to frequent swapping

- Since all the resident processes may be blocked at the same time

✔️ Too many processes lead to insufficient working set sizes, resulting in thrashing (frequent faults)

Process Suspension

✔️ If the degree of multiprogramming is to be reduced,

- One or more of the currently resident processes must be swapped out

✔️ Six Possibilities

- Lowest-priority process

- Faulting process

- Last process activated

- Process with the smallest working set

- Largest process

- Process with the largest remaining execution window

🔗 Reference

[KUOCW] These are my notes from Professor 최린's Operating Systems lecture. If you find any mistakes, please let me know in the comments 😊