Cross Validation

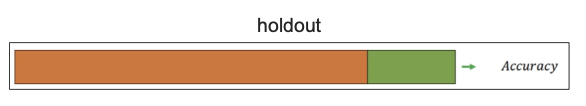

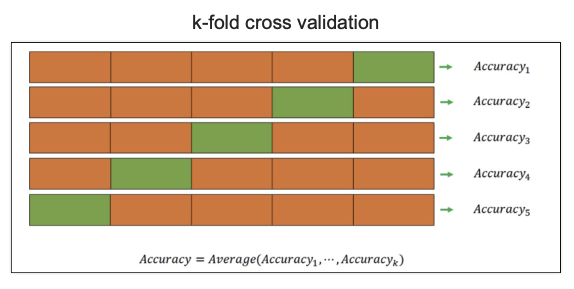

Cross Validation: instead of splitting the data into training and validation sets only once during model training, split it K times and compare the performance of the model trained on each split.

- Helps detect overfitting.

- Also useful for estimating more reliably how well the model actually performs on the data.

- Hold-out: split the data once into a train_set and a test_set.

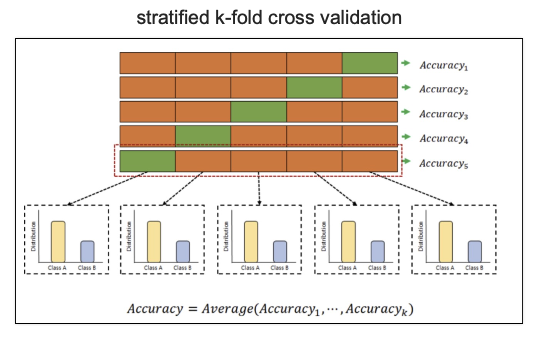

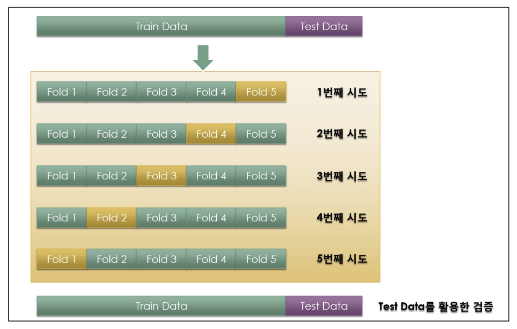

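- k-fold cross validation: split the train_set into k folds; each fold takes a turn as the validation set while the other k-1 folds are used for training, and the average of the k scores is used.

- Stratified k-fold cross validation: when the class distribution is imbalanced, split so that every fold keeps the same class proportions as the full data.

- After validation is finished, do the final evaluation on the test data.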
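To make the difference between k-fold and stratified k-fold concrete, here is a small sketch on hypothetical, deliberately imbalanced labels stored in sorted order (the data is an assumption, not from the notes). It prints the share of class 1 in each validation fold: plain KFold without shuffling puts all class-1 samples into the last fold, while StratifiedKFold keeps the overall 20% share in every fold.

```python
import numpy as np
from sklearn.model_selection import KFold, StratifiedKFold

# Hypothetical imbalanced labels: 80 samples of class 0 followed by 20 of class 1,
# stored in sorted order (like data concatenated from two sources).
y = np.array([0] * 80 + [1] * 20)
X = np.zeros((len(y), 1))  # feature values do not matter for the split itself


def fold_class1_ratios(splitter):
    """Fraction of class-1 samples in each validation fold."""
    return [float(y[val_idx].mean()) for _, val_idx in splitter.split(X, y)]


kfold_ratios = fold_class1_ratios(KFold(n_splits=5))
skfold_ratios = fold_class1_ratios(StratifiedKFold(n_splits=5))

print('KFold          :', kfold_ratios)
print('StratifiedKFold:', skfold_ratios)
```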

Implementing cross validation

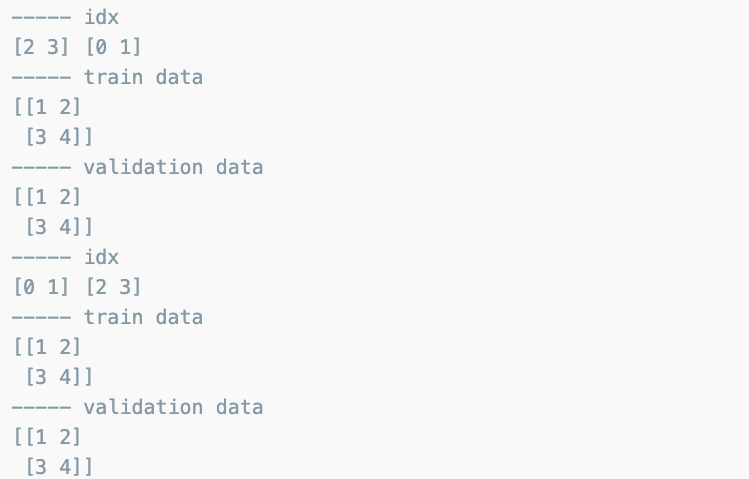

```python
# simple test
import numpy as np
from sklearn.model_selection import KFold

X = np.array([[1, 2], [3, 4], [1, 2], [3, 4]])
y = np.array([1, 2, 3, 4])

# To shuffle the samples before splitting, pass shuffle=True with a seed:
# kf = KFold(n_splits=2, shuffle=True, random_state=13)  # random_state: any integer seed
kf = KFold(n_splits=2)

print(kf.get_n_splits())  # number of folds (call the method; without () it prints the method object)
print(kf)

for train_idx, test_idx in kf.split(X):
    print('----- idx')
    print(train_idx, test_idx)
    print('----- train data')
    print(X[train_idx])
    print('----- val data')
    print(X[test_idx])
```

The wine data used earlier for the taste classifier

```python
import pandas as pd

red_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-red.csv'
white_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-white.csv'

red_wine = pd.read_csv(red_url, sep=';')
white_wine = pd.read_csv(white_url, sep=';')

# color column: 1 = red, 0 = white
red_wine['color'] = 1.
white_wine['color'] = 0.

wine = pd.concat([red_wine, white_wine])
```

Preparing the data for the wine taste classifier

```python
# taste = 1 if quality > 5, otherwise 0
wine['taste'] = [1. if grade > 5 else 0. for grade in wine['quality']]

X = wine.drop(['taste', 'quality'], axis=1)
y = wine['taste']
```

Decision tree model

```python
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier
from sklearn.metrics import accuracy_score

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)
wine_tree.fit(X_train, y_train)

y_pred_tr = wine_tree.predict(X_train)
y_pred_test = wine_tree.predict(X_test)
```

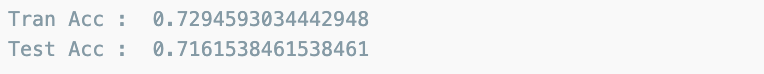

```python
print('Train Acc : ', accuracy_score(y_train, y_pred_tr))
print('Test Acc : ', accuracy_score(y_test, y_pred_test))
```

Can this accuracy be trusted? Is the split above really the best one?
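To see why a single hold-out split is hard to trust, here is a minimal sketch that keeps the model fixed and changes only the random_state of train_test_split. It assumes synthetic data from make_classification instead of the wine data, so the numbers are illustrative only; the point is that the test accuracy depends on which rows happen to land in the test set.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the wine data (assumption: 1000 rows, 12 features)
X, y = make_classification(n_samples=1000, n_features=12, random_state=0)

test_accs = []
for seed in range(5):
    # Only the split changes; the model settings stay fixed
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=seed)
    tree = DecisionTreeClassifier(max_depth=2, random_state=13)
    tree.fit(X_train, y_train)
    test_accs.append(accuracy_score(y_test, tree.predict(X_test)))

print('Test Acc per split:', [round(a, 3) for a in test_accs])
print('min / max:', min(test_accs), max(test_accs))
```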

KFold

```python
from sklearn.model_selection import KFold

kfold = KFold(n_splits=5)
wine_tree_cv = DecisionTreeClassifier(max_depth=2, random_state=13)
```

KFold returns indices, not the data itself.

```python
for train_idx, test_idx in kfold.split(X):
    print(len(train_idx), len(test_idx))
```

Accuracy after training on each fold

```python
cv_accuracy = []

for train_idx, test_idx in kfold.split(X):
    # KFold gives positional indices, so select rows with iloc
    X_train = X.iloc[train_idx]
    X_test = X.iloc[test_idx]
    y_train = y.iloc[train_idx]
    y_test = y.iloc[test_idx]

    wine_tree_cv.fit(X_train, y_train)
    pred = wine_tree_cv.predict(X_test)
    cv_accuracy.append(accuracy_score(y_test, pred))

cv_accuracy
```

If the fold accuracies do not vary much, their mean is used as the representative value.

```python
np.mean(cv_accuracy)
```
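Whether the fold accuracies "vary much" can be checked by reporting the standard deviation next to the mean. A minimal sketch of the same KFold loop, assuming synthetic data from make_classification in place of the wine data:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import KFold
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the wine data (assumption)
X, y = make_classification(n_samples=500, n_features=8, random_state=1)

cv_accuracy = []
for train_idx, test_idx in KFold(n_splits=5).split(X):
    model = DecisionTreeClassifier(max_depth=2, random_state=13)
    model.fit(X[train_idx], y[train_idx])
    cv_accuracy.append(accuracy_score(y[test_idx], model.predict(X[test_idx])))

mean_acc = np.mean(cv_accuracy)  # representative value
std_acc = np.std(cv_accuracy)    # spread across folds
print(f'mean = {mean_acc:.3f}, std = {std_acc:.3f}')
```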

StratifiedKFold

```python
from sklearn.model_selection import StratifiedKFold

skfold = StratifiedKFold(n_splits=5)
wine_tree_cv = DecisionTreeClassifier(max_depth=2, random_state=13)

cv_accuracy = []

# StratifiedKFold needs y so that each fold keeps the class ratio
for train_idx, test_idx in skfold.split(X, y):
    X_train = X.iloc[train_idx]
    X_test = X.iloc[test_idx]
    y_train = y.iloc[train_idx]
    y_test = y.iloc[test_idx]

    wine_tree_cv.fit(X_train, y_train)
    pred = wine_tree_cv.predict(X_test)
    cv_accuracy.append(accuracy_score(y_test, pred))

cv_accuracy
```

The mean accuracy is lower than with plain KFold.

```python
np.mean(cv_accuracy)
```

Cross validation can be run more simply with cross_val_score.

```python
from sklearn.model_selection import cross_val_score

skfold = StratifiedKFold(n_splits=5)
wine_tree_cv = DecisionTreeClassifier(max_depth=5, random_state=13)

cross_val_score(wine_tree_cv, X, y, cv=skfold)
```
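cross_val_score wraps the manual loop used above. A sketch that checks this on synthetic data (an assumption, not the wine set): the manual StratifiedKFold loop and cross_val_score should give the same per-fold accuracies, because for a classifier the default scoring is the estimator's own score method, which is accuracy.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the wine data (assumption)
X, y = make_classification(n_samples=600, n_features=10, random_state=2)
skfold = StratifiedKFold(n_splits=5)
tree = DecisionTreeClassifier(max_depth=5, random_state=13)

# Manual loop: a fresh copy of the model is fitted on each fold
manual = []
for train_idx, test_idx in skfold.split(X, y):
    model = clone(tree).fit(X[train_idx], y[train_idx])
    manual.append(accuracy_score(y[test_idx], model.predict(X[test_idx])))

# One-liner: default scoring for a classifier is accuracy
auto = cross_val_score(tree, X, y, cv=skfold)

print(np.round(manual, 4))
print(np.round(auto, 4))
```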

A deeper tree does not always give better accuracy.

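```python
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.tree import DecisionTreeClassifier


def skfold_df(depth):
    # Print the stratified 5-fold CV accuracies of a tree with the given max_depth
    skfold = StratifiedKFold(n_splits=5)
    wine_tree_cv = DecisionTreeClassifier(max_depth=depth, random_state=13)
    print(cross_val_score(wine_tree_cv, X, y, cv=skfold))


skfold_df(3)
```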

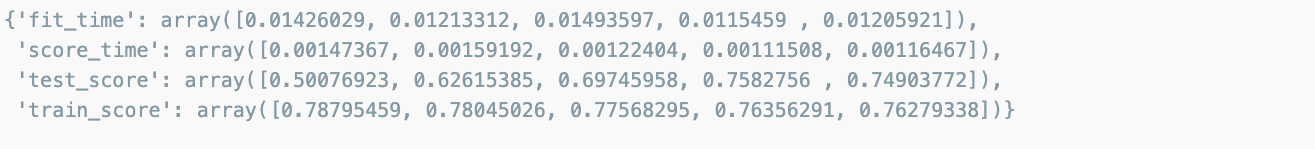

To see the train scores as well

```python
from sklearn.model_selection import cross_validate

cross_validate(wine_tree_cv, X, y, cv=skfold, return_train_score=True)
```
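With return_train_score=True, the gap between the mean train and test scores hints at overfitting. A sketch on synthetic, slightly noisy data (flip_y=0.1 is an assumption chosen to add label noise) comparing a few depths; an unrestricted tree (max_depth=None) fits the training folds almost perfectly, while its test score does not follow.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the wine data, with some label noise (assumption)
X, y = make_classification(n_samples=600, n_features=10, flip_y=0.1, random_state=3)
skfold = StratifiedKFold(n_splits=5)

results = {}
for depth in [2, 5, None]:  # None lets the tree grow until its leaves are pure
    tree = DecisionTreeClassifier(max_depth=depth, random_state=13)
    res = cross_validate(tree, X, y, cv=skfold, return_train_score=True)
    results[depth] = res
    train_mean = res['train_score'].mean()
    test_mean = res['test_score'].mean()
    print(f'max_depth={depth}: train={train_mean:.3f} '
          f'test={test_mean:.3f} gap={train_mean - test_mean:.3f}')
```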

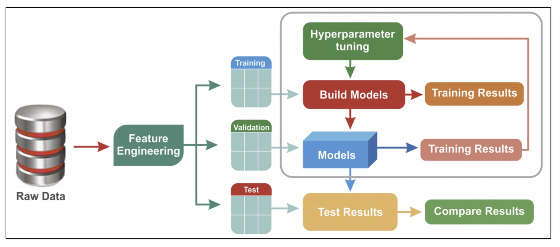

Hyperparameter tuning

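- Hyperparameters: setting values that are adjusted to secure good model performance.

- Feature Engineering: examining the features and transforming them, or finding new ones, so that the machine learning model can learn better.

- Split the data into Training, Validation, and Test sets.

- Build a model on the Training data -> train it -> check its performance on the Validation data.

- Based on the results, adjust the Hyperparameters: values that have to be set by hand (manually).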

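Hyperparameter examples (typical for neural networks)

- Learning rate

- Learning rate scheduling method

- Activation function

- Loss function

- Number of training iterations (epochs)

- Weight initialization method

- Regularization method

- Number of layers to stack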

What to tune

- For the decision tree, the hyperparameter still worth tuning is max_depth.

- We could simply test different max_depth values with a loop.

- But looking ahead, let's find a more convenient and reusable approach.
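The simple loop mentioned above could look like the sketch below: it scores each candidate max_depth with stratified 5-fold cross validation and keeps the depth with the best mean accuracy. Synthetic data from make_classification is assumed in place of the wine data.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the wine data (assumption)
X, y = make_classification(n_samples=800, n_features=12, random_state=4)
skfold = StratifiedKFold(n_splits=5)

mean_scores = {}
for depth in [2, 4, 7, 10]:
    tree = DecisionTreeClassifier(max_depth=depth, random_state=13)
    mean_scores[depth] = cross_val_score(tree, X, y, cv=skfold).mean()
    print(f'max_depth={depth}: mean acc={mean_scores[depth]:.4f}')

# Pick the depth with the highest mean CV accuracy
best_depth = max(mean_scores, key=mean_scores.get)
print('best max_depth:', best_depth)
```

This works, but every new hyperparameter adds another nested loop, which is the bookkeeping GridSearchCV automates.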

```python
import pandas as pd

red_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-red.csv'
white_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-white.csv'

red_wine = pd.read_csv(red_url, sep=';')
white_wine = pd.read_csv(white_url, sep=';')

red_wine['color'] = 1.
white_wine['color'] = 0.

wine = pd.concat([red_wine, white_wine])
wine['taste'] = [1. if grade > 5 else 0. for grade in wine['quality']]

X = wine.drop(['taste', 'quality'], axis=1)
y = wine['taste']
```

GridSearchCV

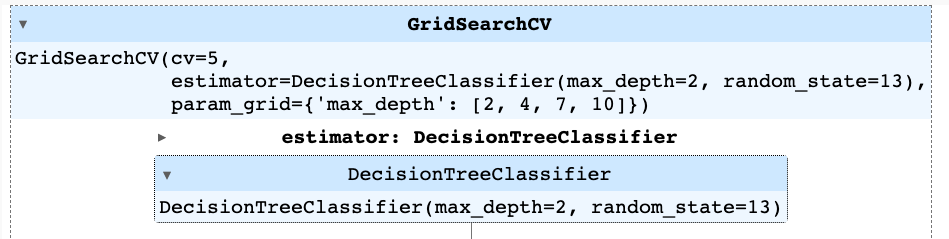

GridSearchCV: optimizes several hyperparameters over a grid; it is a class that acts as a wrapper around a model.

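- Calling fit on the object runs a grid search: it automatically builds one internal model per parameter combination, runs them all, and finds the best parameters.

- `grid_scores_`: the performance for every parameter combination in param_grid. Each element is a tuple made of the items below. This attribute was removed in scikit-learn 0.20; current versions provide the same information through `cv_results_`.

    - parameters: the parameters used

    - mean_validation_score: the mean of the cross-validation results

    - cv_validation_scores: all cross-validation results

- `best_score_`: the best score

- `best_params_`: the parameters that produced the best score

- `best_estimator_`: the model fitted with the parameters that produced the best score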
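A minimal sketch of reading these attributes after fitting, on synthetic data (an assumption). In current scikit-learn, the per-combination results come from cv_results_, whose params, mean_test_score and std_test_score keys play the role of the old grid_scores_ tuple fields.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the wine data (assumption)
X, y = make_classification(n_samples=800, n_features=12, random_state=4)

params = {'max_depth': [2, 4, 7, 10]}
gridsearch = GridSearchCV(DecisionTreeClassifier(random_state=13),
                          param_grid=params, cv=5)
gridsearch.fit(X, y)

# One entry per parameter combination
results = gridsearch.cv_results_
for p, mean, std in zip(results['params'],
                        results['mean_test_score'],
                        results['std_test_score']):
    print(p, round(mean, 4), round(std, 4))

print('best_params_   :', gridsearch.best_params_)
print('best_score_    :', gridsearch.best_score_)
print('best_estimator_:', gridsearch.best_estimator_)
```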

```python
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

params = {'max_depth': [2, 4, 7, 10]}

# max_depth=2 here is only a starting value; the grid overrides it
wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

gridsearch = GridSearchCV(estimator=wine_tree, param_grid=params, cv=5)
gridsearch.fit(X, y)
```

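Raising the n_jobs option here lets the search run on several CPU cores in parallel.

With more cores, a higher n_jobs makes the search faster (n_jobs=-1 uses all available cores).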
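n_jobs only changes how the fits are scheduled, not what is computed. A sketch, assuming synthetic data, that runs the same search with n_jobs=1 and n_jobs=-1 and checks that the results match; any speed-up appears only when the fits are expensive enough to outweigh the overhead of starting worker processes.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the wine data (assumption)
X, y = make_classification(n_samples=800, n_features=12, random_state=4)
params = {'max_depth': [2, 4, 7, 10]}

# n_jobs=1: a single core; n_jobs=-1: all available cores
serial = GridSearchCV(DecisionTreeClassifier(random_state=13), params,
                      cv=5, n_jobs=1).fit(X, y)
parallel = GridSearchCV(DecisionTreeClassifier(random_state=13), params,
                        cv=5, n_jobs=-1).fit(X, y)

print(serial.best_params_, parallel.best_params_)
```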

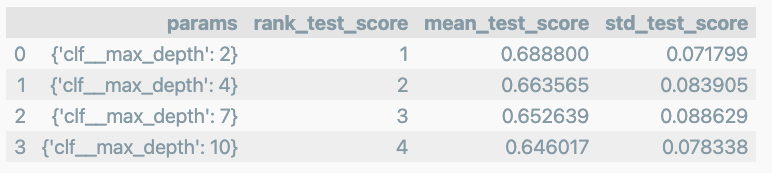

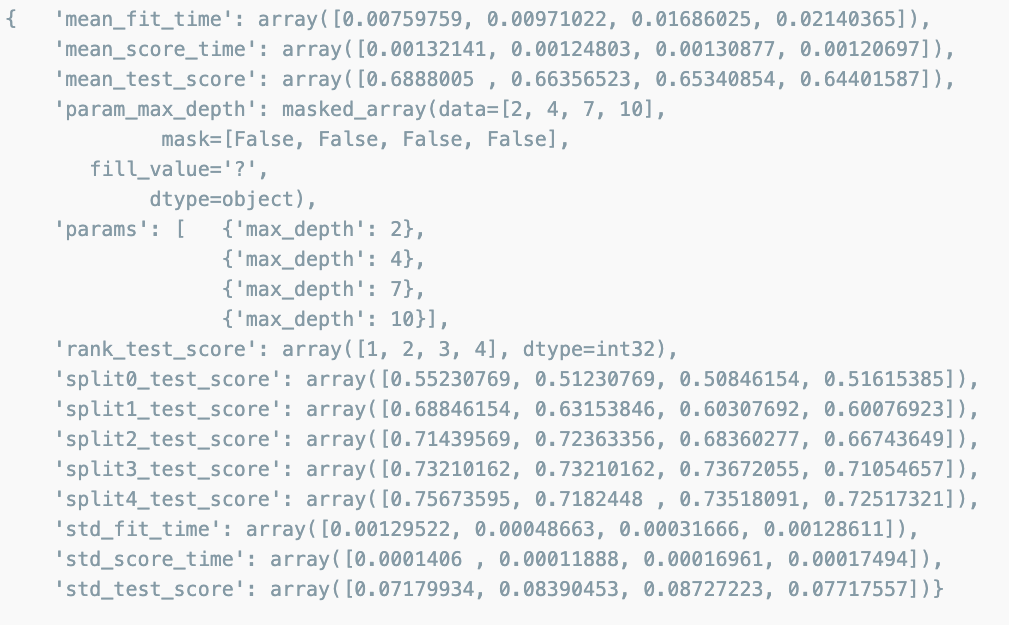

Results of GridSearchCV

```python
import pprint

pp = pprint.PrettyPrinter(indent=4)
pp.pprint(gridsearch.cv_results_)
```

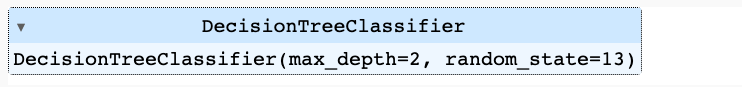

Which model performed best?

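```python
# In a notebook only the last bare expression is displayed, so print each one
print(gridsearch.best_estimator_)
print(gridsearch.best_score_)
print(gridsearch.best_params_)
```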

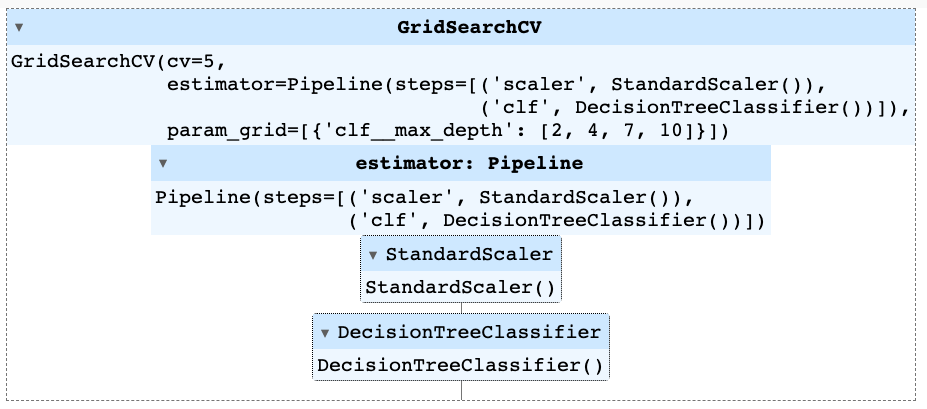

To apply grid search to a model built with a Pipeline

```python
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

estimators = [
    ('scaler', StandardScaler()),
    ('clf', DecisionTreeClassifier(random_state=13)),
]
pipe = Pipeline(estimators)

# Parameters of a step are addressed as '<step name>__<parameter>'
param_grid = [{'clf__max_depth': [2, 4, 7, 10]}]

GridSearch = GridSearchCV(estimator=pipe, param_grid=param_grid, cv=5)
GridSearch.fit(X, y)
```
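The clf__max_depth key in param_grid follows Pipeline's "step name, double underscore, parameter" convention. This sketch lists the parameter names a pipeline exposes for the clf step and sets one with the same syntax (set_params is the mechanism the grid search relies on to try each value).

```python
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

pipe = Pipeline([('scaler', StandardScaler()),
                 ('clf', DecisionTreeClassifier())])

# Every step parameter is exposed as '<step name>__<parameter>'
step_params = [k for k in pipe.get_params() if k.startswith('clf__')]
print(step_params)

# The same naming works with set_params
pipe.set_params(clf__max_depth=4)
print(pipe.named_steps['clf'].max_depth)
```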

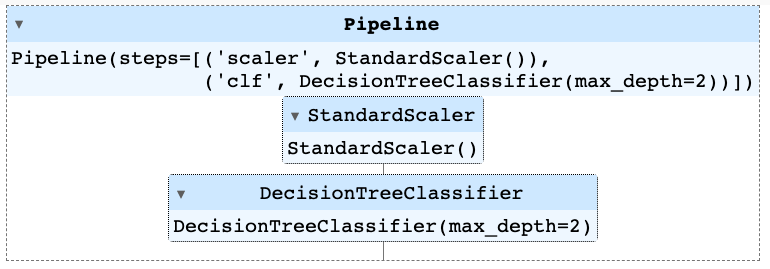

Best model

```python
GridSearch.best_estimator_
```

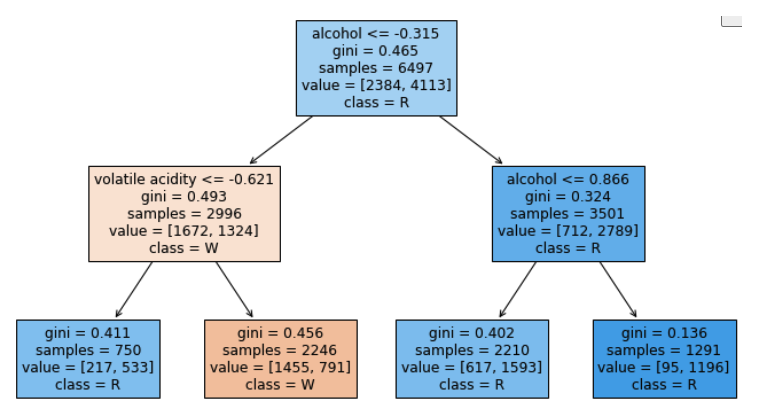

Plotting the tree of the best model

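```python
import matplotlib.pyplot as plt
from sklearn.tree import plot_tree

plt.figure(figsize=(12, 7))

# y is 'taste' (0: quality <= 5, 1: quality > 5), so the class names describe taste, not wine color
plot_tree(GridSearch.best_estimator_['clf'],
          feature_names=list(X.columns),
          class_names=['taste 0 (quality<=5)', 'taste 1 (quality>5)'],
          filled=True)

plt.show()
```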

Summarizing the results in a table

- Check the mean and standard deviation of the accuracy.
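One way to build such a table is to load cv_results_ into a pandas DataFrame and keep the mean and standard deviation columns. The sketch below assumes synthetic data and a plain decision tree; with the pipeline search above, the parameter column would be named param_clf__max_depth instead of param_max_depth.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the wine data (assumption)
X, y = make_classification(n_samples=800, n_features=12, random_state=4)
gridsearch = GridSearchCV(DecisionTreeClassifier(random_state=13),
                          {'max_depth': [2, 4, 7, 10]}, cv=5).fit(X, y)

score_df = pd.DataFrame(gridsearch.cv_results_)
table = score_df[['param_max_depth', 'mean_test_score',
                  'std_test_score', 'rank_test_score']]
print(table.sort_values('rank_test_score'))
```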