Istio Week 2

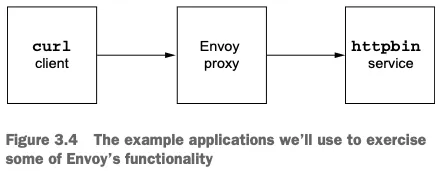

🚀 Envoy in action

- https://github.com/AcornPublishing/istio-in-action

- https://www.envoyproxy.io/docs/envoy/latest/intro/arch_overview/upstream/load_balancing/load_balancers

1. Pull the Docker images

```bash
docker pull envoyproxy/envoy:v1.19.0
docker pull curlimages/curl
docker pull mccutchen/go-httpbin
```

2. Check the Docker images

```bash
docker images
```

✅ Output

```text
REPOSITORY             TAG       IMAGE ID       CREATED        SIZE
curlimages/curl        latest    e507f3e43db3   13 days ago    21.9MB
mccutchen/go-httpbin   latest    18fc7a0469d6   2 weeks ago    38.1MB
envoyproxy/envoy       v1.19.0   f48f130ac643   3 years ago    134MB
```

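3. Run the httpbin service

```bash
docker run -d -e PORT=8000 --name httpbin mccutchen/go-httpbin

# Result
8db4f5f5a1971082e620c4ec023b4799f4b6d1d73800c273df5ce683d49c78d9
```

```bash
docker ps
```

✅ Output

```text
CONTAINER ID   IMAGE                  COMMAND             CREATED              STATUS              PORTS      NAMES
8db4f5f5a197   mccutchen/go-httpbin   "/bin/go-httpbin"   About a minute ago   Up About a minute   8080/tcp   httpbin
```

The PORTS column shows `8080/tcp`, the port the image exposes, but `PORT=8000` makes go-httpbin listen on 8000, which is why the requests below target port 8000.

4. Call httpbin from a curl container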

```bash
docker run -it --rm --link httpbin curlimages/curl curl -X GET http://httpbin:8000/headers
```

✅ Output

```json
{
  "headers": {
    "Accept": [
      "*/*"
    ],
    "Host": [
      "httpbin:8000"
    ],
    "User-Agent": [
      "curl/8.13.0"
    ]
  }
}
```

Calling the `/headers` endpoint returns the headers that were sent with the request.
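go-httpbin returns each header as a list of values, because HTTP allows the same header to appear more than once. The small Python sketch below is not part of the original walkthrough: it parses the response captured above with the standard `json` module and flattens it to one string per header. `header_values` is a hypothetical helper, and joining repeated values with `", "` is an assumption borrowed from the usual HTTP convention for combining repeated fields.

```python
import json

# The /headers response captured above (go-httpbin returns lists of values).
SAMPLE_RESPONSE = """
{
  "headers": {
    "Accept": ["*/*"],
    "Host": ["httpbin:8000"],
    "User-Agent": ["curl/8.13.0"]
  }
}
"""


def header_values(body: str) -> dict[str, str]:
    """Hypothetical helper: flatten {"headers": {name: [values]}} to {name: value}.

    Repeated values are joined with ", " (an assumed convention for illustration).
    """
    headers = json.loads(body)["headers"]
    return {name: ", ".join(values) for name, values in headers.items()}


if __name__ == "__main__":
    for name, value in header_values(SAMPLE_RESPONSE).items():
        print(f"{name}: {value}")
```

Running it prints one `Name: value` line per header, for example `Host: httpbin:8000`.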

5. Run the Envoy proxy and check the help output

```bash
docker run -it --rm envoyproxy/envoy:v1.19.0 envoy --help
```

✅ Output

USAGE:

envoy [--enable-core-dump] [--socket-mode <string>] [--socket-path

<string>] [--disable-extensions <string>] [--cpuset-threads]

[--enable-mutex-tracing] [--disable-hot-restart] [--mode

<string>] [--parent-shutdown-time-s <uint32_t>] [--drain-strategy

<string>] [--drain-time-s <uint32_t>] [--file-flush-interval-msec

<uint32_t>] [--service-zone <string>] [--service-node <string>]

[--service-cluster <string>] [--hot-restart-version]

[--restart-epoch <uint32_t>] [--log-path <string>]

[--enable-fine-grain-logging] [--log-format-escaped]

[--log-format <string>] [--component-log-level <string>] [-l

<string>] [--local-address-ip-version <string>]

[--admin-address-path <string>] [--ignore-unknown-dynamic-fields]

[--reject-unknown-dynamic-fields] [--allow-unknown-static-fields]

[--allow-unknown-fields] [--bootstrap-version <string>]

[--config-yaml <string>] [-c <string>] [--concurrency <uint32_t>]

[--base-id-path <string>] [--use-dynamic-base-id] [--base-id

<uint32_t>] [--] [--version] [-h]

Where:

--enable-core-dump

Enable core dumps

--socket-mode <string>

Socket file permission

--socket-path <string>

Path to hot restart socket file

--disable-extensions <string>

Comma-separated list of extensions to disable

--cpuset-threads

Get the default # of worker threads from cpuset size

--enable-mutex-tracing

Enable mutex contention tracing functionality

--disable-hot-restart

Disable hot restart functionality

--mode <string>

One of 'serve' (default; validate configs and then serve traffic

normally) or 'validate' (validate configs and exit).

--parent-shutdown-time-s <uint32_t>

Hot restart parent shutdown time in seconds

--drain-strategy <string>

Hot restart drain sequence behaviour, one of 'gradual' (default) or

'immediate'.

--drain-time-s <uint32_t>

Hot restart and LDS removal drain time in seconds

--file-flush-interval-msec <uint32_t>

Interval for log flushing in msec

--service-zone <string> # sets the availability zone where the proxy is deployed

Zone name

--service-node <string> # gives the proxy a unique name

Node name

--service-cluster <string>

Cluster name

--hot-restart-version

hot restart compatibility version

--restart-epoch <uint32_t>

hot restart epoch #

--log-path <string>

Path to logfile

--enable-fine-grain-logging

Logger mode: enable file level log control(Fancy Logger)or not

--log-format-escaped

Escape c-style escape sequences in the application logs

--log-format <string>

Log message format in spdlog syntax (see

https://github.com/gabime/spdlog/wiki/3.-Custom-formatting)

Default is "[%Y-%m-%d %T.%e][%t][%l][%n] [%g:%#] %v"

--component-log-level <string>

Comma separated list of component log levels. For example

upstream:debug,config:trace

-l <string>, --log-level <string>

Log levels: [trace][debug][info][warning

|warn][error][critical][off]

Default is [info]

--local-address-ip-version <string>

The local IP address version (v4 or v6).

--admin-address-path <string>

Admin address path

--ignore-unknown-dynamic-fields

ignore unknown fields in dynamic configuration

--reject-unknown-dynamic-fields

reject unknown fields in dynamic configuration

--allow-unknown-static-fields

allow unknown fields in static configuration

--allow-unknown-fields

allow unknown fields in static configuration (DEPRECATED)

--bootstrap-version <string>

API version to parse the bootstrap config as (e.g. 3). If unset, all

known versions will be attempted

--config-yaml <string>

Inline YAML configuration, merges with the contents of --config-path

-c <string>, --config-path <string> # passes the configuration file

Path to configuration file

--concurrency <uint32_t>

# of worker threads to run

--base-id-path <string>

path to which the base ID is written

--use-dynamic-base-id

the server chooses a base ID dynamically. Supersedes a static base ID.

May not be used when the restart epoch is non-zero.

--base-id <uint32_t>

base ID so that multiple envoys can run on the same host if needed

--, --ignore_rest

Ignores the rest of the labeled arguments following this flag.

--version

Displays version information and exits.

-h, --help

Displays usage information and exits.

envoy

6. Check the Envoy startup error

Run Envoy without passing a configuration file and check the resulting error.

```bash
docker run -it --rm envoyproxy/envoy:v1.19.0 envoy
```

✅ Output

[2025-04-19 11:09:34.147][1][info][main] [source/server/server.cc:338] initializing epoch 0 (base id=0, hot restart version=11.104)

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:340] statically linked extensions:

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.filters.listener: envoy.filters.listener.http_inspector, envoy.filters.listener.original_dst, envoy.filters.listener.original_src, envoy.filters.listener.proxy_protocol, envoy.filters.listener.tls_inspector, envoy.listener.http_inspector, envoy.listener.original_dst, envoy.listener.original_src, envoy.listener.proxy_protocol, envoy.listener.tls_inspector

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.filters.udp_listener: envoy.filters.udp.dns_filter, envoy.filters.udp_listener.udp_proxy

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.clusters: envoy.cluster.eds, envoy.cluster.logical_dns, envoy.cluster.original_dst, envoy.cluster.static, envoy.cluster.strict_dns, envoy.clusters.aggregate, envoy.clusters.dynamic_forward_proxy, envoy.clusters.redis

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.filters.http: envoy.bandwidth_limit, envoy.buffer, envoy.cors, envoy.csrf, envoy.ext_authz, envoy.ext_proc, envoy.fault, envoy.filters.http.adaptive_concurrency, envoy.filters.http.admission_control, envoy.filters.http.alternate_protocols_cache, envoy.filters.http.aws_lambda, envoy.filters.http.aws_request_signing, envoy.filters.http.bandwidth_limit, envoy.filters.http.buffer, envoy.filters.http.cache, envoy.filters.http.cdn_loop, envoy.filters.http.composite, envoy.filters.http.compressor, envoy.filters.http.cors, envoy.filters.http.csrf, envoy.filters.http.decompressor, envoy.filters.http.dynamic_forward_proxy, envoy.filters.http.dynamo, envoy.filters.http.ext_authz, envoy.filters.http.ext_proc, envoy.filters.http.fault, envoy.filters.http.grpc_http1_bridge, envoy.filters.http.grpc_http1_reverse_bridge, envoy.filters.http.grpc_json_transcoder, envoy.filters.http.grpc_stats, envoy.filters.http.grpc_web, envoy.filters.http.header_to_metadata, envoy.filters.http.health_check, envoy.filters.http.ip_tagging, envoy.filters.http.jwt_authn, envoy.filters.http.local_ratelimit, envoy.filters.http.lua, envoy.filters.http.oauth2, envoy.filters.http.on_demand, envoy.filters.http.original_src, envoy.filters.http.ratelimit, envoy.filters.http.rbac, envoy.filters.http.router, envoy.filters.http.set_metadata, envoy.filters.http.squash, envoy.filters.http.tap, envoy.filters.http.wasm, envoy.grpc_http1_bridge, envoy.grpc_json_transcoder, envoy.grpc_web, envoy.health_check, envoy.http_dynamo_filter, envoy.ip_tagging, envoy.local_rate_limit, envoy.lua, envoy.rate_limit, envoy.router, envoy.squash, match-wrapper

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.tracers: envoy.dynamic.ot, envoy.lightstep, envoy.tracers.datadog, envoy.tracers.dynamic_ot, envoy.tracers.lightstep, envoy.tracers.opencensus, envoy.tracers.skywalking, envoy.tracers.xray, envoy.tracers.zipkin, envoy.zipkin

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.dubbo_proxy.route_matchers: default

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.retry_priorities: envoy.retry_priorities.previous_priorities

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.http.cache: envoy.extensions.http.cache.simple

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.matching.action: composite-action, skip

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.internal_redirect_predicates: envoy.internal_redirect_predicates.allow_listed_routes, envoy.internal_redirect_predicates.previous_routes, envoy.internal_redirect_predicates.safe_cross_scheme

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.guarddog_actions: envoy.watchdog.abort_action, envoy.watchdog.profile_action

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.formatter: envoy.formatter.req_without_query

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.stats_sinks: envoy.dog_statsd, envoy.graphite_statsd, envoy.metrics_service, envoy.stat_sinks.dog_statsd, envoy.stat_sinks.graphite_statsd, envoy.stat_sinks.hystrix, envoy.stat_sinks.metrics_service, envoy.stat_sinks.statsd, envoy.stat_sinks.wasm, envoy.statsd

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.thrift_proxy.filters: envoy.filters.thrift.rate_limit, envoy.filters.thrift.router

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.matching.http.input: request-headers, request-trailers, response-headers, response-trailers

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.health_checkers: envoy.health_checkers.redis

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.resolvers: envoy.ip

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.dubbo_proxy.protocols: dubbo

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.bootstrap: envoy.bootstrap.wasm, envoy.extensions.network.socket_interface.default_socket_interface

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.retry_host_predicates: envoy.retry_host_predicates.omit_canary_hosts, envoy.retry_host_predicates.omit_host_metadata, envoy.retry_host_predicates.previous_hosts

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.thrift_proxy.transports: auto, framed, header, unframed

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.transport_sockets.downstream: envoy.transport_sockets.alts, envoy.transport_sockets.quic, envoy.transport_sockets.raw_buffer, envoy.transport_sockets.starttls, envoy.transport_sockets.tap, envoy.transport_sockets.tls, raw_buffer, starttls, tls

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.quic.server.crypto_stream: envoy.quic.crypto_stream.server.quiche

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.wasm.runtime: envoy.wasm.runtime.null, envoy.wasm.runtime.v8

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.rate_limit_descriptors: envoy.rate_limit_descriptors.expr

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.access_loggers: envoy.access_loggers.file, envoy.access_loggers.http_grpc, envoy.access_loggers.open_telemetry, envoy.access_loggers.stderr, envoy.access_loggers.stdout, envoy.access_loggers.tcp_grpc, envoy.access_loggers.wasm, envoy.file_access_log, envoy.http_grpc_access_log, envoy.open_telemetry_access_log, envoy.stderr_access_log, envoy.stdout_access_log, envoy.tcp_grpc_access_log, envoy.wasm_access_log

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.compression.compressor: envoy.compression.brotli.compressor, envoy.compression.gzip.compressor

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.request_id: envoy.request_id.uuid

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.dubbo_proxy.filters: envoy.filters.dubbo.router

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.quic.proof_source: envoy.quic.proof_source.filter_chain

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.http.original_ip_detection: envoy.http.original_ip_detection.custom_header, envoy.http.original_ip_detection.xff

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.matching.common_inputs: envoy.matching.common_inputs.environment_variable

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.http.stateful_header_formatters: preserve_case

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.compression.decompressor: envoy.compression.brotli.decompressor, envoy.compression.gzip.decompressor

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.matching.input_matchers: envoy.matching.matchers.consistent_hashing, envoy.matching.matchers.ip

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.resource_monitors: envoy.resource_monitors.fixed_heap, envoy.resource_monitors.injected_resource

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.dubbo_proxy.serializers: dubbo.hessian2

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.upstream_options: envoy.extensions.upstreams.http.v3.HttpProtocolOptions, envoy.upstreams.http.http_protocol_options

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.tls.cert_validator: envoy.tls.cert_validator.default, envoy.tls.cert_validator.spiffe

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.grpc_credentials: envoy.grpc_credentials.aws_iam, envoy.grpc_credentials.default, envoy.grpc_credentials.file_based_metadata

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.filters.network: envoy.client_ssl_auth, envoy.echo, envoy.ext_authz, envoy.filters.network.client_ssl_auth, envoy.filters.network.connection_limit, envoy.filters.network.direct_response, envoy.filters.network.dubbo_proxy, envoy.filters.network.echo, envoy.filters.network.ext_authz, envoy.filters.network.http_connection_manager, envoy.filters.network.kafka_broker, envoy.filters.network.local_ratelimit, envoy.filters.network.mongo_proxy, envoy.filters.network.mysql_proxy, envoy.filters.network.postgres_proxy, envoy.filters.network.ratelimit, envoy.filters.network.rbac, envoy.filters.network.redis_proxy, envoy.filters.network.rocketmq_proxy, envoy.filters.network.sni_cluster, envoy.filters.network.sni_dynamic_forward_proxy, envoy.filters.network.tcp_proxy, envoy.filters.network.thrift_proxy, envoy.filters.network.wasm, envoy.filters.network.zookeeper_proxy, envoy.http_connection_manager, envoy.mongo_proxy, envoy.ratelimit, envoy.redis_proxy, envoy.tcp_proxy

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.transport_sockets.upstream: envoy.transport_sockets.alts, envoy.transport_sockets.quic, envoy.transport_sockets.raw_buffer, envoy.transport_sockets.starttls, envoy.transport_sockets.tap, envoy.transport_sockets.tls, envoy.transport_sockets.upstream_proxy_protocol, raw_buffer, starttls, tls

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.upstreams: envoy.filters.connection_pools.tcp.generic

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:342] envoy.thrift_proxy.protocols: auto, binary, binary/non-strict, compact, twitter

[2025-04-19 11:09:34.148][1][critical][main] [source/server/server.cc:112] error initializing configuration '': At least one of --config-path or --config-yaml or Options::configProto() should be non-empty

[2025-04-19 11:09:34.148][1][info][main] [source/server/server.cc:855] exiting
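Almost all of this output lists statically linked extensions; the line that matters is the `critical` one near the end. As an optional aid that is not part of the original walkthrough, the Python sketch below parses Envoy's default log format, `[%Y-%m-%d %T.%e][%t][%l][%n] [%g:%#] %v` as listed in the help output, and keeps only warning, error, and critical lines. The regex, the `envoy_log.py` file name, and the helper names are hypothetical, and the sketch assumes the format has not been changed with `--log-format`.

```python
import re
import sys
from typing import Iterable, Iterator, NamedTuple

# Envoy's default log format, as printed by `envoy --help`:
#   [%Y-%m-%d %T.%e][%t][%l][%n] [%g:%#] %v
#   timestamp, thread id, level, logger name, source file:line, message
LOG_LINE = re.compile(
    r"^\[(?P<ts>[^\]]+)\]"
    r"\[(?P<thread>\d+)\]"
    r"\[(?P<level>\w+)\]"
    r"\[(?P<logger>\w+)\] "
    r"\[(?P<source>[^\]]+)\] "
    r"(?P<message>.*)$"
)

# Levels worth surfacing when Envoy misbehaves at startup.
SEVERE = {"warning", "error", "critical"}


class LogRecord(NamedTuple):
    ts: str
    thread: int
    level: str
    logger: str
    source: str
    message: str


def parse(lines: Iterable[str]) -> Iterator[LogRecord]:
    """Yield one record per line in the default format; skip anything else."""
    for line in lines:
        match = LOG_LINE.match(line.strip())
        if match:
            fields = match.groupdict()
            yield LogRecord(
                ts=fields["ts"],
                thread=int(fields["thread"]),
                level=fields["level"],
                logger=fields["logger"],
                source=fields["source"],
                message=fields["message"],
            )


def problems(lines: Iterable[str]) -> list[LogRecord]:
    """Keep only warning/error/critical records."""
    return [rec for rec in parse(lines) if rec.level in SEVERE]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage:
    #   docker run --rm envoyproxy/envoy:v1.19.0 envoy > startup.log 2>&1
    #   python3 envoy_log.py startup.log
    with open(sys.argv[1], encoding="utf-8") as log_file:
        for rec in problems(log_file):
            print(f"{rec.level:<8} {rec.source}  {rec.message}")
```

Applied to the output above, only the `critical` line about the missing configuration remains.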

Key error: `At least one of --config-path or --config-yaml or Options::configProto() should be non-empty`. Envoy needs a bootstrap configuration, passed either as a file (`-c`/`--config-path`) or inline (`--config-yaml`), and exits when none is given.

7. Check the simple.yaml file

```bash
cat ch3/simple.yaml
```

✅ Output

```yaml
admin:
  address:
    socket_address: { address: 0.0.0.0, port_value: 15000 }

static_resources:
  listeners:
  - name: httpbin-demo
    address:
      socket_address: { address: 0.0.0.0, port_value: 15001 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          http_filters:
          - name: envoy.filters.http.router
          route_config:
            name: httpbin_local_route
            virtual_hosts:
            - name: httpbin_local_service
              domains: ["*"]
              routes:
              - match: { prefix: "/" }
                route:
                  auto_host_rewrite: true
                  cluster: httpbin_service
  clusters:
    - name: httpbin_service
      connect_timeout: 5s
      type: LOGICAL_DNS
      dns_lookup_family: V4_ONLY
      lb_policy: ROUND_ROBIN
      load_assignment:
        cluster_name: httpbin
        endpoints:
        - lb_endpoints:
          - endpoint:
              address:
                socket_address:
                  address: httpbin
                  port_value: 8000
```

- Sets up a single listener on port 15001 that routes all traffic (any domain, path prefix `/`) to the `httpbin_service` cluster, whose only endpoint is `httpbin:8000` resolved via DNS (`type: LOGICAL_DNS`). The admin interface listens on port 15000.
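The listener, route, and cluster sections work together as a lookup: choose a virtual host by the Host header, take the first route whose prefix matches the path, then pick an endpoint from that route's cluster. The Python sketch below models only that lookup for this one file. The class and function names are hypothetical, it ignores the other match types Envoy supports (wildcard suffix/prefix domains, headers, regex), and its balancer is plain unweighted round robin standing in for `lb_policy: ROUND_ROBIN`, not Envoy's own implementation.

```python
from dataclasses import dataclass, field
from itertools import cycle
from typing import Optional

# Simplified, hypothetical model of the routing in simple.yaml.


@dataclass
class Route:
    prefix: str   # match: { prefix: "/" }
    cluster: str  # route: { cluster: httpbin_service }


@dataclass
class VirtualHost:
    name: str
    domains: list[str]
    routes: list[Route] = field(default_factory=list)


class RoundRobin:
    """Cycle through endpoints in order; unlike Envoy, ignores endpoint weights."""

    def __init__(self, endpoints: list[tuple[str, int]]):
        self._next = cycle(endpoints)

    def pick(self) -> tuple[str, int]:
        return next(self._next)


def find_vhost(vhosts: list[VirtualHost], host: str) -> Optional[VirtualHost]:
    # An exact domain beats the "*" catch-all, as in Envoy.
    for vhost in vhosts:
        if host in vhost.domains:
            return vhost
    for vhost in vhosts:
        if "*" in vhost.domains:
            return vhost
    return None


def select_cluster(vhosts: list[VirtualHost], host: str, path: str) -> Optional[str]:
    """Return the cluster for a request, or None (Envoy would answer 404)."""
    vhost = find_vhost(vhosts, host)
    if vhost is None:
        return None
    for route in vhost.routes:
        if path.startswith(route.prefix):
            return route.cluster  # routes are tried in order; first match wins
    return None


# The values from simple.yaml.
VHOSTS = [VirtualHost("httpbin_local_service", ["*"], [Route("/", "httpbin_service")])]
CLUSTERS = {"httpbin_service": RoundRobin([("httpbin", 8000)])}


def upstream_for(host: str, path: str) -> Optional[tuple[str, tuple[str, int]]]:
    cluster = select_cluster(VHOSTS, host, path)
    if cluster is None:
        return None
    return cluster, CLUSTERS[cluster].pick()


if __name__ == "__main__":
    print(upstream_for("localhost:15001", "/headers"))
```

With this config every request lands on `('httpbin_service', ('httpbin', 8000))`: the `"*"` domain accepts any Host header and the `/` prefix matches any path, so round robin has only one endpoint to choose from.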

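8. Start the Envoy proxy container

```bash
docker run --name proxy --link httpbin envoyproxy/envoy:v1.19.0 --config-yaml "$(cat ch3/simple.yaml)"
```

The container runs in the foreground (no `-d`), so run the next step from a second terminal.

✅ Output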

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:338] initializing epoch 0 (base id=0, hot restart version=11.104)

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:340] statically linked extensions:

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.thrift_proxy.transports: auto, framed, header, unframed

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.http.stateful_header_formatters: preserve_case

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.thrift_proxy.filters: envoy.filters.thrift.rate_limit, envoy.filters.thrift.router

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.filters.network: envoy.client_ssl_auth, envoy.echo, envoy.ext_authz, envoy.filters.network.client_ssl_auth, envoy.filters.network.connection_limit, envoy.filters.network.direct_response, envoy.filters.network.dubbo_proxy, envoy.filters.network.echo, envoy.filters.network.ext_authz, envoy.filters.network.http_connection_manager, envoy.filters.network.kafka_broker, envoy.filters.network.local_ratelimit, envoy.filters.network.mongo_proxy, envoy.filters.network.mysql_proxy, envoy.filters.network.postgres_proxy, envoy.filters.network.ratelimit, envoy.filters.network.rbac, envoy.filters.network.redis_proxy, envoy.filters.network.rocketmq_proxy, envoy.filters.network.sni_cluster, envoy.filters.network.sni_dynamic_forward_proxy, envoy.filters.network.tcp_proxy, envoy.filters.network.thrift_proxy, envoy.filters.network.wasm, envoy.filters.network.zookeeper_proxy, envoy.http_connection_manager, envoy.mongo_proxy, envoy.ratelimit, envoy.redis_proxy, envoy.tcp_proxy

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.health_checkers: envoy.health_checkers.redis

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.transport_sockets.upstream: envoy.transport_sockets.alts, envoy.transport_sockets.quic, envoy.transport_sockets.raw_buffer, envoy.transport_sockets.starttls, envoy.transport_sockets.tap, envoy.transport_sockets.tls, envoy.transport_sockets.upstream_proxy_protocol, raw_buffer, starttls, tls

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.filters.http: envoy.bandwidth_limit, envoy.buffer, envoy.cors, envoy.csrf, envoy.ext_authz, envoy.ext_proc, envoy.fault, envoy.filters.http.adaptive_concurrency, envoy.filters.http.admission_control, envoy.filters.http.alternate_protocols_cache, envoy.filters.http.aws_lambda, envoy.filters.http.aws_request_signing, envoy.filters.http.bandwidth_limit, envoy.filters.http.buffer, envoy.filters.http.cache, envoy.filters.http.cdn_loop, envoy.filters.http.composite, envoy.filters.http.compressor, envoy.filters.http.cors, envoy.filters.http.csrf, envoy.filters.http.decompressor, envoy.filters.http.dynamic_forward_proxy, envoy.filters.http.dynamo, envoy.filters.http.ext_authz, envoy.filters.http.ext_proc, envoy.filters.http.fault, envoy.filters.http.grpc_http1_bridge, envoy.filters.http.grpc_http1_reverse_bridge, envoy.filters.http.grpc_json_transcoder, envoy.filters.http.grpc_stats, envoy.filters.http.grpc_web, envoy.filters.http.header_to_metadata, envoy.filters.http.health_check, envoy.filters.http.ip_tagging, envoy.filters.http.jwt_authn, envoy.filters.http.local_ratelimit, envoy.filters.http.lua, envoy.filters.http.oauth2, envoy.filters.http.on_demand, envoy.filters.http.original_src, envoy.filters.http.ratelimit, envoy.filters.http.rbac, envoy.filters.http.router, envoy.filters.http.set_metadata, envoy.filters.http.squash, envoy.filters.http.tap, envoy.filters.http.wasm, envoy.grpc_http1_bridge, envoy.grpc_json_transcoder, envoy.grpc_web, envoy.health_check, envoy.http_dynamo_filter, envoy.ip_tagging, envoy.local_rate_limit, envoy.lua, envoy.rate_limit, envoy.router, envoy.squash, match-wrapper

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.matching.http.input: request-headers, request-trailers, response-headers, response-trailers

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.thrift_proxy.protocols: auto, binary, binary/non-strict, compact, twitter

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.quic.server.crypto_stream: envoy.quic.crypto_stream.server.quiche

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.upstream_options: envoy.extensions.upstreams.http.v3.HttpProtocolOptions, envoy.upstreams.http.http_protocol_options

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.request_id: envoy.request_id.uuid

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.retry_host_predicates: envoy.retry_host_predicates.omit_canary_hosts, envoy.retry_host_predicates.omit_host_metadata, envoy.retry_host_predicates.previous_hosts

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.compression.compressor: envoy.compression.brotli.compressor, envoy.compression.gzip.compressor

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.resource_monitors: envoy.resource_monitors.fixed_heap, envoy.resource_monitors.injected_resource

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.dubbo_proxy.route_matchers: default

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.rate_limit_descriptors: envoy.rate_limit_descriptors.expr

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.filters.listener: envoy.filters.listener.http_inspector, envoy.filters.listener.original_dst, envoy.filters.listener.original_src, envoy.filters.listener.proxy_protocol, envoy.filters.listener.tls_inspector, envoy.listener.http_inspector, envoy.listener.original_dst, envoy.listener.original_src, envoy.listener.proxy_protocol, envoy.listener.tls_inspector

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.filters.udp_listener: envoy.filters.udp.dns_filter, envoy.filters.udp_listener.udp_proxy

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.tls.cert_validator: envoy.tls.cert_validator.default, envoy.tls.cert_validator.spiffe

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.http.original_ip_detection: envoy.http.original_ip_detection.custom_header, envoy.http.original_ip_detection.xff

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.dubbo_proxy.filters: envoy.filters.dubbo.router

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.matching.action: composite-action, skip

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.stats_sinks: envoy.dog_statsd, envoy.graphite_statsd, envoy.metrics_service, envoy.stat_sinks.dog_statsd, envoy.stat_sinks.graphite_statsd, envoy.stat_sinks.hystrix, envoy.stat_sinks.metrics_service, envoy.stat_sinks.statsd, envoy.stat_sinks.wasm, envoy.statsd

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.formatter: envoy.formatter.req_without_query

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.upstreams: envoy.filters.connection_pools.tcp.generic

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.dubbo_proxy.serializers: dubbo.hessian2

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.transport_sockets.downstream: envoy.transport_sockets.alts, envoy.transport_sockets.quic, envoy.transport_sockets.raw_buffer, envoy.transport_sockets.starttls, envoy.transport_sockets.tap, envoy.transport_sockets.tls, raw_buffer, starttls, tls

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.tracers: envoy.dynamic.ot, envoy.lightstep, envoy.tracers.datadog, envoy.tracers.dynamic_ot, envoy.tracers.lightstep, envoy.tracers.opencensus, envoy.tracers.skywalking, envoy.tracers.xray, envoy.tracers.zipkin, envoy.zipkin

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.guarddog_actions: envoy.watchdog.abort_action, envoy.watchdog.profile_action

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.resolvers: envoy.ip

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.bootstrap: envoy.bootstrap.wasm, envoy.extensions.network.socket_interface.default_socket_interface

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.access_loggers: envoy.access_loggers.file, envoy.access_loggers.http_grpc, envoy.access_loggers.open_telemetry, envoy.access_loggers.stderr, envoy.access_loggers.stdout, envoy.access_loggers.tcp_grpc, envoy.access_loggers.wasm, envoy.file_access_log, envoy.http_grpc_access_log, envoy.open_telemetry_access_log, envoy.stderr_access_log, envoy.stdout_access_log, envoy.tcp_grpc_access_log, envoy.wasm_access_log

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.wasm.runtime: envoy.wasm.runtime.null, envoy.wasm.runtime.v8

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.clusters: envoy.cluster.eds, envoy.cluster.logical_dns, envoy.cluster.original_dst, envoy.cluster.static, envoy.cluster.strict_dns, envoy.clusters.aggregate, envoy.clusters.dynamic_forward_proxy, envoy.clusters.redis

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.dubbo_proxy.protocols: dubbo

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.internal_redirect_predicates: envoy.internal_redirect_predicates.allow_listed_routes, envoy.internal_redirect_predicates.previous_routes, envoy.internal_redirect_predicates.safe_cross_scheme

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.matching.input_matchers: envoy.matching.matchers.consistent_hashing, envoy.matching.matchers.ip

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.retry_priorities: envoy.retry_priorities.previous_priorities

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.http.cache: envoy.extensions.http.cache.simple

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.compression.decompressor: envoy.compression.brotli.decompressor, envoy.compression.gzip.decompressor

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.grpc_credentials: envoy.grpc_credentials.aws_iam, envoy.grpc_credentials.default, envoy.grpc_credentials.file_based_metadata

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.matching.common_inputs: envoy.matching.common_inputs.environment_variable

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.quic.proof_source: envoy.quic.proof_source.filter_chain

[2025-04-19 11:17:53.161][1][info][main] [source/server/server.cc:358] HTTP header map info:

[2025-04-19 11:17:53.162][1][info][main] [source/server/server.cc:361] request header map: 632 bytes: :authority,:method,:path,:protocol,:scheme,accept,accept-encoding,access-control-request-method,authentication,authorization,cache-control,cdn-loop,connection,content-encoding,content-length,content-type,expect,grpc-accept-encoding,grpc-timeout,if-match,if-modified-since,if-none-match,if-range,if-unmodified-since,keep-alive,origin,pragma,proxy-connection,referer,te,transfer-encoding,upgrade,user-agent,via,x-client-trace-id,x-envoy-attempt-count,x-envoy-decorator-operation,x-envoy-downstream-service-cluster,x-envoy-downstream-service-node,x-envoy-expected-rq-timeout-ms,x-envoy-external-address,x-envoy-force-trace,x-envoy-hedge-on-per-try-timeout,x-envoy-internal,x-envoy-ip-tags,x-envoy-max-retries,x-envoy-original-path,x-envoy-original-url,x-envoy-retriable-header-names,x-envoy-retriable-status-codes,x-envoy-retry-grpc-on,x-envoy-retry-on,x-envoy-upstream-alt-stat-name,x-envoy-upstream-rq-per-try-timeout-ms,x-envoy-upstream-rq-timeout-alt-response,x-envoy-upstream-rq-timeout-ms,x-forwarded-client-cert,x-forwarded-for,x-forwarded-proto,x-ot-span-context,x-request-id

[2025-04-19 11:17:53.162][1][info][main] [source/server/server.cc:361] request trailer map: 136 bytes:

[2025-04-19 11:17:53.162][1][info][main] [source/server/server.cc:361] response header map: 432 bytes: :status,access-control-allow-credentials,access-control-allow-headers,access-control-allow-methods,access-control-allow-origin,access-control-expose-headers,access-control-max-age,age,cache-control,connection,content-encoding,content-length,content-type,date,etag,expires,grpc-message,grpc-status,keep-alive,last-modified,location,proxy-connection,server,transfer-encoding,upgrade,vary,via,x-envoy-attempt-count,x-envoy-decorator-operation,x-envoy-degraded,x-envoy-immediate-health-check-fail,x-envoy-ratelimited,x-envoy-upstream-canary,x-envoy-upstream-healthchecked-cluster,x-envoy-upstream-service-time,x-request-id

[2025-04-19 11:17:53.162][1][info][main] [source/server/server.cc:361] response trailer map: 160 bytes: grpc-message,grpc-status

[2025-04-19 11:17:53.179][1][info][admin] [source/server/admin/admin.cc:132] admin address: 0.0.0.0:15000

[2025-04-19 11:17:53.179][1][info][main] [source/server/server.cc:707] runtime: {}

[2025-04-19 11:17:53.179][1][info][config] [source/server/configuration_impl.cc:127] loading tracing configuration

[2025-04-19 11:17:53.179][1][info][config] [source/server/configuration_impl.cc:87] loading 0 static secret(s)

[2025-04-19 11:17:53.179][1][info][config] [source/server/configuration_impl.cc:93] loading 1 cluster(s)

[2025-04-19 11:17:53.180][1][info][config] [source/server/configuration_impl.cc:97] loading 1 listener(s)

[2025-04-19 11:17:53.181][1][info][config] [source/server/configuration_impl.cc:109] loading stats configuration

[2025-04-19 11:17:53.181][1][info][runtime] [source/common/runtime/runtime_impl.cc:449] RTDS has finished initialization

[2025-04-19 11:17:53.181][1][info][upstream] [source/common/upstream/cluster_manager_impl.cc:206] cm init: all clusters initialized

[2025-04-19 11:17:53.181][1][warning][main] [source/server/server.cc:682] there is no configured limit to the number of allowed active connections. Set a limit via the runtime key overload.global_downstream_max_connections

[2025-04-19 11:17:53.182][1][info][main] [source/server/server.cc:785] all clusters initialized. initializing init manager

[2025-04-19 11:17:53.182][1][info][config] [source/server/listener_manager_impl.cc:834] all dependencies initialized. starting workers

[2025-04-19 11:17:53.183][1][info][main] [source/server/server.cc:804] starting main dispatch loop

9. Check the proxy logs

docker logs proxy

✅ Output

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:338] initializing epoch 0 (base id=0, hot restart version=11.104)

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:340] statically linked extensions:

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.thrift_proxy.transports: auto, framed, header, unframed

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.http.stateful_header_formatters: preserve_case

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.thrift_proxy.filters: envoy.filters.thrift.rate_limit, envoy.filters.thrift.router

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.filters.network: envoy.client_ssl_auth, envoy.echo, envoy.ext_authz, envoy.filters.network.client_ssl_auth, envoy.filters.network.connection_limit, envoy.filters.network.direct_response, envoy.filters.network.dubbo_proxy, envoy.filters.network.echo, envoy.filters.network.ext_authz, envoy.filters.network.http_connection_manager, envoy.filters.network.kafka_broker, envoy.filters.network.local_ratelimit, envoy.filters.network.mongo_proxy, envoy.filters.network.mysql_proxy, envoy.filters.network.postgres_proxy, envoy.filters.network.ratelimit, envoy.filters.network.rbac, envoy.filters.network.redis_proxy, envoy.filters.network.rocketmq_proxy, envoy.filters.network.sni_cluster, envoy.filters.network.sni_dynamic_forward_proxy, envoy.filters.network.tcp_proxy, envoy.filters.network.thrift_proxy, envoy.filters.network.wasm, envoy.filters.network.zookeeper_proxy, envoy.http_connection_manager, envoy.mongo_proxy, envoy.ratelimit, envoy.redis_proxy, envoy.tcp_proxy

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.health_checkers: envoy.health_checkers.redis

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.transport_sockets.upstream: envoy.transport_sockets.alts, envoy.transport_sockets.quic, envoy.transport_sockets.raw_buffer, envoy.transport_sockets.starttls, envoy.transport_sockets.tap, envoy.transport_sockets.tls, envoy.transport_sockets.upstream_proxy_protocol, raw_buffer, starttls, tls

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.filters.http: envoy.bandwidth_limit, envoy.buffer, envoy.cors, envoy.csrf, envoy.ext_authz, envoy.ext_proc, envoy.fault, envoy.filters.http.adaptive_concurrency, envoy.filters.http.admission_control, envoy.filters.http.alternate_protocols_cache, envoy.filters.http.aws_lambda, envoy.filters.http.aws_request_signing, envoy.filters.http.bandwidth_limit, envoy.filters.http.buffer, envoy.filters.http.cache, envoy.filters.http.cdn_loop, envoy.filters.http.composite, envoy.filters.http.compressor, envoy.filters.http.cors, envoy.filters.http.csrf, envoy.filters.http.decompressor, envoy.filters.http.dynamic_forward_proxy, envoy.filters.http.dynamo, envoy.filters.http.ext_authz, envoy.filters.http.ext_proc, envoy.filters.http.fault, envoy.filters.http.grpc_http1_bridge, envoy.filters.http.grpc_http1_reverse_bridge, envoy.filters.http.grpc_json_transcoder, envoy.filters.http.grpc_stats, envoy.filters.http.grpc_web, envoy.filters.http.header_to_metadata, envoy.filters.http.health_check, envoy.filters.http.ip_tagging, envoy.filters.http.jwt_authn, envoy.filters.http.local_ratelimit, envoy.filters.http.lua, envoy.filters.http.oauth2, envoy.filters.http.on_demand, envoy.filters.http.original_src, envoy.filters.http.ratelimit, envoy.filters.http.rbac, envoy.filters.http.router, envoy.filters.http.set_metadata, envoy.filters.http.squash, envoy.filters.http.tap, envoy.filters.http.wasm, envoy.grpc_http1_bridge, envoy.grpc_json_transcoder, envoy.grpc_web, envoy.health_check, envoy.http_dynamo_filter, envoy.ip_tagging, envoy.local_rate_limit, envoy.lua, envoy.rate_limit, envoy.router, envoy.squash, match-wrapper

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.matching.http.input: request-headers, request-trailers, response-headers, response-trailers

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.thrift_proxy.protocols: auto, binary, binary/non-strict, compact, twitter

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.quic.server.crypto_stream: envoy.quic.crypto_stream.server.quiche

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.upstream_options: envoy.extensions.upstreams.http.v3.HttpProtocolOptions, envoy.upstreams.http.http_protocol_options

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.request_id: envoy.request_id.uuid

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.retry_host_predicates: envoy.retry_host_predicates.omit_canary_hosts, envoy.retry_host_predicates.omit_host_metadata, envoy.retry_host_predicates.previous_hosts

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.compression.compressor: envoy.compression.brotli.compressor, envoy.compression.gzip.compressor

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.resource_monitors: envoy.resource_monitors.fixed_heap, envoy.resource_monitors.injected_resource

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.dubbo_proxy.route_matchers: default

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.rate_limit_descriptors: envoy.rate_limit_descriptors.expr

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.filters.listener: envoy.filters.listener.http_inspector, envoy.filters.listener.original_dst, envoy.filters.listener.original_src, envoy.filters.listener.proxy_protocol, envoy.filters.listener.tls_inspector, envoy.listener.http_inspector, envoy.listener.original_dst, envoy.listener.original_src, envoy.listener.proxy_protocol, envoy.listener.tls_inspector

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.filters.udp_listener: envoy.filters.udp.dns_filter, envoy.filters.udp_listener.udp_proxy

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.tls.cert_validator: envoy.tls.cert_validator.default, envoy.tls.cert_validator.spiffe

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.http.original_ip_detection: envoy.http.original_ip_detection.custom_header, envoy.http.original_ip_detection.xff

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.dubbo_proxy.filters: envoy.filters.dubbo.router

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.matching.action: composite-action, skip

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.stats_sinks: envoy.dog_statsd, envoy.graphite_statsd, envoy.metrics_service, envoy.stat_sinks.dog_statsd, envoy.stat_sinks.graphite_statsd, envoy.stat_sinks.hystrix, envoy.stat_sinks.metrics_service, envoy.stat_sinks.statsd, envoy.stat_sinks.wasm, envoy.statsd

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.formatter: envoy.formatter.req_without_query

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.upstreams: envoy.filters.connection_pools.tcp.generic

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.dubbo_proxy.serializers: dubbo.hessian2

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.transport_sockets.downstream: envoy.transport_sockets.alts, envoy.transport_sockets.quic, envoy.transport_sockets.raw_buffer, envoy.transport_sockets.starttls, envoy.transport_sockets.tap, envoy.transport_sockets.tls, raw_buffer, starttls, tls

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.tracers: envoy.dynamic.ot, envoy.lightstep, envoy.tracers.datadog, envoy.tracers.dynamic_ot, envoy.tracers.lightstep, envoy.tracers.opencensus, envoy.tracers.skywalking, envoy.tracers.xray, envoy.tracers.zipkin, envoy.zipkin

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.guarddog_actions: envoy.watchdog.abort_action, envoy.watchdog.profile_action

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.resolvers: envoy.ip

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.bootstrap: envoy.bootstrap.wasm, envoy.extensions.network.socket_interface.default_socket_interface

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.access_loggers: envoy.access_loggers.file, envoy.access_loggers.http_grpc, envoy.access_loggers.open_telemetry, envoy.access_loggers.stderr, envoy.access_loggers.stdout, envoy.access_loggers.tcp_grpc, envoy.access_loggers.wasm, envoy.file_access_log, envoy.http_grpc_access_log, envoy.open_telemetry_access_log, envoy.stderr_access_log, envoy.stdout_access_log, envoy.tcp_grpc_access_log, envoy.wasm_access_log

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.wasm.runtime: envoy.wasm.runtime.null, envoy.wasm.runtime.v8

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.clusters: envoy.cluster.eds, envoy.cluster.logical_dns, envoy.cluster.original_dst, envoy.cluster.static, envoy.cluster.strict_dns, envoy.clusters.aggregate, envoy.clusters.dynamic_forward_proxy, envoy.clusters.redis

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.dubbo_proxy.protocols: dubbo

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.internal_redirect_predicates: envoy.internal_redirect_predicates.allow_listed_routes, envoy.internal_redirect_predicates.previous_routes, envoy.internal_redirect_predicates.safe_cross_scheme

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.matching.input_matchers: envoy.matching.matchers.consistent_hashing, envoy.matching.matchers.ip

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.retry_priorities: envoy.retry_priorities.previous_priorities

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.http.cache: envoy.extensions.http.cache.simple

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.compression.decompressor: envoy.compression.brotli.decompressor, envoy.compression.gzip.decompressor

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.grpc_credentials: envoy.grpc_credentials.aws_iam, envoy.grpc_credentials.default, envoy.grpc_credentials.file_based_metadata

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.matching.common_inputs: envoy.matching.common_inputs.environment_variable

[2025-04-19 11:17:53.156][1][info][main] [source/server/server.cc:342] envoy.quic.proof_source: envoy.quic.proof_source.filter_chain

[2025-04-19 11:17:53.161][1][info][main] [source/server/server.cc:358] HTTP header map info:

[2025-04-19 11:17:53.162][1][info][main] [source/server/server.cc:361] request header map: 632 bytes: :authority,:method,:path,:protocol,:scheme,accept,accept-encoding,access-control-request-method,authentication,authorization,cache-control,cdn-loop,connection,content-encoding,content-length,content-type,expect,grpc-accept-encoding,grpc-timeout,if-match,if-modified-since,if-none-match,if-range,if-unmodified-since,keep-alive,origin,pragma,proxy-connection,referer,te,transfer-encoding,upgrade,user-agent,via,x-client-trace-id,x-envoy-attempt-count,x-envoy-decorator-operation,x-envoy-downstream-service-cluster,x-envoy-downstream-service-node,x-envoy-expected-rq-timeout-ms,x-envoy-external-address,x-envoy-force-trace,x-envoy-hedge-on-per-try-timeout,x-envoy-internal,x-envoy-ip-tags,x-envoy-max-retries,x-envoy-original-path,x-envoy-original-url,x-envoy-retriable-header-names,x-envoy-retriable-status-codes,x-envoy-retry-grpc-on,x-envoy-retry-on,x-envoy-upstream-alt-stat-name,x-envoy-upstream-rq-per-try-timeout-ms,x-envoy-upstream-rq-timeout-alt-response,x-envoy-upstream-rq-timeout-ms,x-forwarded-client-cert,x-forwarded-for,x-forwarded-proto,x-ot-span-context,x-request-id

[2025-04-19 11:17:53.162][1][info][main] [source/server/server.cc:361] request trailer map: 136 bytes:

[2025-04-19 11:17:53.162][1][info][main] [source/server/server.cc:361] response header map: 432 bytes: :status,access-control-allow-credentials,access-control-allow-headers,access-control-allow-methods,access-control-allow-origin,access-control-expose-headers,access-control-max-age,age,cache-control,connection,content-encoding,content-length,content-type,date,etag,expires,grpc-message,grpc-status,keep-alive,last-modified,location,proxy-connection,server,transfer-encoding,upgrade,vary,via,x-envoy-attempt-count,x-envoy-decorator-operation,x-envoy-degraded,x-envoy-immediate-health-check-fail,x-envoy-ratelimited,x-envoy-upstream-canary,x-envoy-upstream-healthchecked-cluster,x-envoy-upstream-service-time,x-request-id

[2025-04-19 11:17:53.162][1][info][main] [source/server/server.cc:361] response trailer map: 160 bytes: grpc-message,grpc-status

[2025-04-19 11:17:53.179][1][info][admin] [source/server/admin/admin.cc:132] admin address: 0.0.0.0:15000

[2025-04-19 11:17:53.179][1][info][main] [source/server/server.cc:707] runtime: {}

[2025-04-19 11:17:53.179][1][info][config] [source/server/configuration_impl.cc:127] loading tracing configuration

[2025-04-19 11:17:53.179][1][info][config] [source/server/configuration_impl.cc:87] loading 0 static secret(s)

[2025-04-19 11:17:53.179][1][info][config] [source/server/configuration_impl.cc:93] loading 1 cluster(s)

[2025-04-19 11:17:53.180][1][info][config] [source/server/configuration_impl.cc:97] loading 1 listener(s)

[2025-04-19 11:17:53.181][1][info][config] [source/server/configuration_impl.cc:109] loading stats configuration

[2025-04-19 11:17:53.181][1][info][runtime] [source/common/runtime/runtime_impl.cc:449] RTDS has finished initialization

[2025-04-19 11:17:53.181][1][info][upstream] [source/common/upstream/cluster_manager_impl.cc:206] cm init: all clusters initialized

[2025-04-19 11:17:53.181][1][warning][main] [source/server/server.cc:682] there is no configured limit to the number of allowed active connections. Set a limit via the runtime key overload.global_downstream_max_connections

[2025-04-19 11:17:53.182][1][info][main] [source/server/server.cc:785] all clusters initialized. initializing init manager

[2025-04-19 11:17:53.182][1][info][config] [source/server/listener_manager_impl.cc:834] all dependencies initialized. starting workers

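[2025-04-19 11:17:53.183][1][info][main] [source/server/server.cc:804] starting main dispatch loop

- The proxy started successfully and is listening on port 15001. The admin interface is on port 15000, as the admin address line shows.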
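Most of this startup log is the list of statically linked extensions, so the single warning (about the missing connection limit) is easy to miss. The sketch below filters log lines by level. It assumes Envoy's default log format as seen above, where the third bracketed field is the level; envoy_log_level is a hypothetical helper name, not part of Envoy or Docker.

```shell
# envoy_log_level LEVEL
# Reads Envoy log lines on stdin and prints only those at LEVEL.
# Assumes the default log format seen above:
#   [timestamp][thread-id][level][logger] [source-file:line] message
envoy_log_level() {
  # -F matches a fixed string, so the brackets are taken literally; the
  # pattern "][LEVEL][" sits between the thread-id and logger fields.
  grep -F "][$1]["
}

# Example (requires the running "proxy" container). Envoy logs to stderr
# by default, so merge stderr into stdout before piping:
#   docker logs proxy 2>&1 | envoy_log_level warning
```

Matching on the bracket boundaries rather than the bare word keeps lines whose message merely contains "info" or "warning" from slipping through.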

10. Test request routing through the proxy

Use the curl container to send a request to Envoy on port 15001 and confirm that it is routed to the httpbin service.

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15001/headers

✅ Output

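{
  "headers": {
    "Accept": [
      "*/*"
    ],
    "Host": [
      "httpbin"
    ],
    "User-Agent": [
      "curl/8.13.0"
    ],
    "X-Envoy-Expected-Rq-Timeout-Ms": [
      "15000"
    ],
    "X-Forwarded-Proto": [
      "http"
    ],
    "X-Request-Id": [
      "9829b5f3-038c-40d0-ac6b-0f856581a5a5"
    ]
  }
}

- The request reached httpbin. Compared with the direct call, Envoy added the X-Envoy-Expected-Rq-Timeout-Ms, X-Forwarded-Proto, and X-Request-Id headers, and the Host header is now httpbin instead of httpbin:8000. The value 15000 corresponds to Envoy's default route timeout of 15 seconds.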
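To see exactly which headers Envoy adds, the direct response from the earlier httpbin call can be compared with the proxied one. This is a rough sketch: header_names and added_headers are hypothetical helpers, and the grep pattern assumes the "Name": [ ... ] layout that go-httpbin uses in these outputs; a JSON tool such as jq would be more robust.

```shell
# header_names: read an httpbin /headers JSON body on stdin and print its
# header names, one per line, sorted in the C locale. Assumes each header is
# written as "Name": [ ... ] (with or without a space before the bracket).
header_names() {
  grep -oE '"[A-Za-z0-9-]+": ?\[' | sed -E 's/^"([^"]+)".*/\1/' | LC_ALL=C sort
}

# added_headers DIRECT_BODY PROXIED_BODY
# Prints the header names that appear only in the proxied response.
added_headers() {
  direct_names=$(mktemp)
  proxied_names=$(mktemp)
  printf '%s\n' "$1" | header_names > "$direct_names"
  printf '%s\n' "$2" | header_names > "$proxied_names"
  # comm -13 hides lines unique to the first file and lines common to both.
  LC_ALL=C comm -13 "$direct_names" "$proxied_names"
  rm -f "$direct_names" "$proxied_names"
}

# Example (requires the httpbin and proxy containers from the steps above;
# -it is omitted so no carriage returns end up in the captured output):
#   direct=$(docker run --rm --link httpbin curlimages/curl curl -s http://httpbin:8000/headers)
#   proxied=$(docker run --rm --link proxy curlimages/curl curl -s http://proxy:15001/headers)
#   added_headers "$direct" "$proxied"
```

Host is not listed because it is present in both responses; only its value differs.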

11. Stop Envoy before the next exercise

docker rm -f proxy

# Result

proxy

12. Change the timeout setting

Add timeout: 1s to the route so that Envoy gives each request to httpbin at most one second to complete.
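Envoy's YAML uses the protobuf JSON form for durations (seconds with an s suffix, such as 1s or 0.5s), while the proxied /headers response reports the expected timeout in milliseconds through X-Envoy-Expected-Rq-Timeout-Ms. With only a route-level timeout set, the header should carry that value. A minimal, hypothetical helper to predict it from the config, assuming only the plain seconds form:

```shell
# duration_to_ms DURATION
# Converts a duration in the protobuf JSON form used by Envoy's YAML
# (whole or fractional seconds with an "s" suffix, e.g. 1s or 0.5s)
# into whole milliseconds, rounded to the nearest millisecond.
duration_to_ms() {
  if ! printf '%s\n' "$1" | grep -Eq '^[0-9]+(\.[0-9]+)?s$'; then
    echo "unsupported duration: $1 (expected e.g. 1s or 0.5s)" >&2
    return 1
  fi
  # Drop the trailing "s", scale seconds to milliseconds, and round.
  printf '%s\n' "${1%s}" | awk '{ printf "%d\n", $1 * 1000 + 0.5 }'
}

# With the route setting "timeout: 1s", the proxied /headers call should
# report X-Envoy-Expected-Rq-Timeout-Ms: 1000.
duration_to_ms 1s   # prints 1000
```

Values such as 500ms are rejected on purpose, since that form is not a valid protobuf JSON duration.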

cat ch3/simple_change_timeout.yaml

✅ Output

admin:
  address:
    socket_address: { address: 0.0.0.0, port_value: 15000 }

static_resources:
  listeners:
  - name: httpbin-demo
    address:
      socket_address: { address: 0.0.0.0, port_value: 15001 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          http_filters:
          - name: envoy.filters.http.router
          route_config:
            name: httpbin_local_route
            virtual_hosts:
            - name: httpbin_local_service
              domains: ["*"]
              routes:
              - match: { prefix: "/" }
                route:
                  auto_host_rewrite: true
                  cluster: httpbin_service
                  timeout: 1s  # 1-second timeout
  clusters:
    - name: httpbin_service
      connect_timeout: 5s
      type: LOGICAL_DNS
      dns_lookup_family: V4_ONLY
      lb_policy: ROUND_ROBIN
      load_assignment:
        cluster_name: httpbin
        endpoints:
        - lb_endpoints:
          - endpoint:
              address:
                socket_address:
                  address: httpbin
                  port_value: 8000

13. Start the Envoy proxy

docker run --name proxy --link httpbin envoyproxy/envoy:v1.19.0 --config-yaml "$(cat ch3/simple_change_timeout.yaml)"

✅ Output

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:338] initializing epoch 0 (base id=0, hot restart version=11.104)

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:340] statically linked extensions:

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.resolvers: envoy.ip

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.thrift_proxy.protocols: auto, binary, binary/non-strict, compact, twitter

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.upstreams: envoy.filters.connection_pools.tcp.generic

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.compression.decompressor: envoy.compression.brotli.decompressor, envoy.compression.gzip.decompressor

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.health_checkers: envoy.health_checkers.redis

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.matching.input_matchers: envoy.matching.matchers.consistent_hashing, envoy.matching.matchers.ip

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.filters.udp_listener: envoy.filters.udp.dns_filter, envoy.filters.udp_listener.udp_proxy

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.guarddog_actions: envoy.watchdog.abort_action, envoy.watchdog.profile_action

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.request_id: envoy.request_id.uuid

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.matching.common_inputs: envoy.matching.common_inputs.environment_variable

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.grpc_credentials: envoy.grpc_credentials.aws_iam, envoy.grpc_credentials.default, envoy.grpc_credentials.file_based_metadata

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.rate_limit_descriptors: envoy.rate_limit_descriptors.expr

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.upstream_options: envoy.extensions.upstreams.http.v3.HttpProtocolOptions, envoy.upstreams.http.http_protocol_options

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.matching.action: composite-action, skip

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.filters.network: envoy.client_ssl_auth, envoy.echo, envoy.ext_authz, envoy.filters.network.client_ssl_auth, envoy.filters.network.connection_limit, envoy.filters.network.direct_response, envoy.filters.network.dubbo_proxy, envoy.filters.network.echo, envoy.filters.network.ext_authz, envoy.filters.network.http_connection_manager, envoy.filters.network.kafka_broker, envoy.filters.network.local_ratelimit, envoy.filters.network.mongo_proxy, envoy.filters.network.mysql_proxy, envoy.filters.network.postgres_proxy, envoy.filters.network.ratelimit, envoy.filters.network.rbac, envoy.filters.network.redis_proxy, envoy.filters.network.rocketmq_proxy, envoy.filters.network.sni_cluster, envoy.filters.network.sni_dynamic_forward_proxy, envoy.filters.network.tcp_proxy, envoy.filters.network.thrift_proxy, envoy.filters.network.wasm, envoy.filters.network.zookeeper_proxy, envoy.http_connection_manager, envoy.mongo_proxy, envoy.ratelimit, envoy.redis_proxy, envoy.tcp_proxy

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.dubbo_proxy.protocols: dubbo

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.thrift_proxy.transports: auto, framed, header, unframed

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.tracers: envoy.dynamic.ot, envoy.lightstep, envoy.tracers.datadog, envoy.tracers.dynamic_ot, envoy.tracers.lightstep, envoy.tracers.opencensus, envoy.tracers.skywalking, envoy.tracers.xray, envoy.tracers.zipkin, envoy.zipkin

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.wasm.runtime: envoy.wasm.runtime.null, envoy.wasm.runtime.v8

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.http.original_ip_detection: envoy.http.original_ip_detection.custom_header, envoy.http.original_ip_detection.xff

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.resource_monitors: envoy.resource_monitors.fixed_heap, envoy.resource_monitors.injected_resource

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.thrift_proxy.filters: envoy.filters.thrift.rate_limit, envoy.filters.thrift.router

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.transport_sockets.upstream: envoy.transport_sockets.alts, envoy.transport_sockets.quic, envoy.transport_sockets.raw_buffer, envoy.transport_sockets.starttls, envoy.transport_sockets.tap, envoy.transport_sockets.tls, envoy.transport_sockets.upstream_proxy_protocol, raw_buffer, starttls, tls

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.stats_sinks: envoy.dog_statsd, envoy.graphite_statsd, envoy.metrics_service, envoy.stat_sinks.dog_statsd, envoy.stat_sinks.graphite_statsd, envoy.stat_sinks.hystrix, envoy.stat_sinks.metrics_service, envoy.stat_sinks.statsd, envoy.stat_sinks.wasm, envoy.statsd

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.clusters: envoy.cluster.eds, envoy.cluster.logical_dns, envoy.cluster.original_dst, envoy.cluster.static, envoy.cluster.strict_dns, envoy.clusters.aggregate, envoy.clusters.dynamic_forward_proxy, envoy.clusters.redis

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.retry_priorities: envoy.retry_priorities.previous_priorities

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.http.stateful_header_formatters: preserve_case

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.matching.http.input: request-headers, request-trailers, response-headers, response-trailers

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.quic.server.crypto_stream: envoy.quic.crypto_stream.server.quiche

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.bootstrap: envoy.bootstrap.wasm, envoy.extensions.network.socket_interface.default_socket_interface

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.filters.http: envoy.bandwidth_limit, envoy.buffer, envoy.cors, envoy.csrf, envoy.ext_authz, envoy.ext_proc, envoy.fault, envoy.filters.http.adaptive_concurrency, envoy.filters.http.admission_control, envoy.filters.http.alternate_protocols_cache, envoy.filters.http.aws_lambda, envoy.filters.http.aws_request_signing, envoy.filters.http.bandwidth_limit, envoy.filters.http.buffer, envoy.filters.http.cache, envoy.filters.http.cdn_loop, envoy.filters.http.composite, envoy.filters.http.compressor, envoy.filters.http.cors, envoy.filters.http.csrf, envoy.filters.http.decompressor, envoy.filters.http.dynamic_forward_proxy, envoy.filters.http.dynamo, envoy.filters.http.ext_authz, envoy.filters.http.ext_proc, envoy.filters.http.fault, envoy.filters.http.grpc_http1_bridge, envoy.filters.http.grpc_http1_reverse_bridge, envoy.filters.http.grpc_json_transcoder, envoy.filters.http.grpc_stats, envoy.filters.http.grpc_web, envoy.filters.http.header_to_metadata, envoy.filters.http.health_check, envoy.filters.http.ip_tagging, envoy.filters.http.jwt_authn, envoy.filters.http.local_ratelimit, envoy.filters.http.lua, envoy.filters.http.oauth2, envoy.filters.http.on_demand, envoy.filters.http.original_src, envoy.filters.http.ratelimit, envoy.filters.http.rbac, envoy.filters.http.router, envoy.filters.http.set_metadata, envoy.filters.http.squash, envoy.filters.http.tap, envoy.filters.http.wasm, envoy.grpc_http1_bridge, envoy.grpc_json_transcoder, envoy.grpc_web, envoy.health_check, envoy.http_dynamo_filter, envoy.ip_tagging, envoy.local_rate_limit, envoy.lua, envoy.rate_limit, envoy.router, envoy.squash, match-wrapper

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.compression.compressor: envoy.compression.brotli.compressor, envoy.compression.gzip.compressor

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.dubbo_proxy.filters: envoy.filters.dubbo.router

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.quic.proof_source: envoy.quic.proof_source.filter_chain

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.retry_host_predicates: envoy.retry_host_predicates.omit_canary_hosts, envoy.retry_host_predicates.omit_host_metadata, envoy.retry_host_predicates.previous_hosts

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.filters.listener: envoy.filters.listener.http_inspector, envoy.filters.listener.original_dst, envoy.filters.listener.original_src, envoy.filters.listener.proxy_protocol, envoy.filters.listener.tls_inspector, envoy.listener.http_inspector, envoy.listener.original_dst, envoy.listener.original_src, envoy.listener.proxy_protocol, envoy.listener.tls_inspector

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.internal_redirect_predicates: envoy.internal_redirect_predicates.allow_listed_routes, envoy.internal_redirect_predicates.previous_routes, envoy.internal_redirect_predicates.safe_cross_scheme

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.access_loggers: envoy.access_loggers.file, envoy.access_loggers.http_grpc, envoy.access_loggers.open_telemetry, envoy.access_loggers.stderr, envoy.access_loggers.stdout, envoy.access_loggers.tcp_grpc, envoy.access_loggers.wasm, envoy.file_access_log, envoy.http_grpc_access_log, envoy.open_telemetry_access_log, envoy.stderr_access_log, envoy.stdout_access_log, envoy.tcp_grpc_access_log, envoy.wasm_access_log

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.transport_sockets.downstream: envoy.transport_sockets.alts, envoy.transport_sockets.quic, envoy.transport_sockets.raw_buffer, envoy.transport_sockets.starttls, envoy.transport_sockets.tap, envoy.transport_sockets.tls, raw_buffer, starttls, tls

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.http.cache: envoy.extensions.http.cache.simple

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.dubbo_proxy.serializers: dubbo.hessian2

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.tls.cert_validator: envoy.tls.cert_validator.default, envoy.tls.cert_validator.spiffe

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.dubbo_proxy.route_matchers: default

[2025-04-19 11:37:55.506][1][info][main] [source/server/server.cc:342] envoy.formatter: envoy.formatter.req_without_query

[2025-04-19 11:37:55.510][1][info][main] [source/server/server.cc:358] HTTP header map info:

[2025-04-19 11:37:55.510][1][info][main] [source/server/server.cc:361] request header map: 632 bytes: :authority,:method,:path,:protocol,:scheme,accept,accept-encoding,access-control-request-method,authentication,authorization,cache-control,cdn-loop,connection,content-encoding,content-length,content-type,expect,grpc-accept-encoding,grpc-timeout,if-match,if-modified-since,if-none-match,if-range,if-unmodified-since,keep-alive,origin,pragma,proxy-connection,referer,te,transfer-encoding,upgrade,user-agent,via,x-client-trace-id,x-envoy-attempt-count,x-envoy-decorator-operation,x-envoy-downstream-service-cluster,x-envoy-downstream-service-node,x-envoy-expected-rq-timeout-ms,x-envoy-external-address,x-envoy-force-trace,x-envoy-hedge-on-per-try-timeout,x-envoy-internal,x-envoy-ip-tags,x-envoy-max-retries,x-envoy-original-path,x-envoy-original-url,x-envoy-retriable-header-names,x-envoy-retriable-status-codes,x-envoy-retry-grpc-on,x-envoy-retry-on,x-envoy-upstream-alt-stat-name,x-envoy-upstream-rq-per-try-timeout-ms,x-envoy-upstream-rq-timeout-alt-response,x-envoy-upstream-rq-timeout-ms,x-forwarded-client-cert,x-forwarded-for,x-forwarded-proto,x-ot-span-context,x-request-id

[2025-04-19 11:37:55.510][1][info][main] [source/server/server.cc:361] request trailer map: 136 bytes:

[2025-04-19 11:37:55.510][1][info][main] [source/server/server.cc:361] response header map: 432 bytes: :status,access-control-allow-credentials,access-control-allow-headers,access-control-allow-methods,access-control-allow-origin,access-control-expose-headers,access-control-max-age,age,cache-control,connection,content-encoding,content-length,content-type,date,etag,expires,grpc-message,grpc-status,keep-alive,last-modified,location,proxy-connection,server,transfer-encoding,upgrade,vary,via,x-envoy-attempt-count,x-envoy-decorator-operation,x-envoy-degraded,x-envoy-immediate-health-check-fail,x-envoy-ratelimited,x-envoy-upstream-canary,x-envoy-upstream-healthchecked-cluster,x-envoy-upstream-service-time,x-request-id

[2025-04-19 11:37:55.510][1][info][main] [source/server/server.cc:361] response trailer map: 160 bytes: grpc-message,grpc-status

[2025-04-19 11:37:55.527][1][info][admin] [source/server/admin/admin.cc:132] admin address: 0.0.0.0:15000

[2025-04-19 11:37:55.527][1][info][main] [source/server/server.cc:707] runtime: {}

[2025-04-19 11:37:55.527][1][info][config] [source/server/configuration_impl.cc:127] loading tracing configuration

[2025-04-19 11:37:55.527][1][info][config] [source/server/configuration_impl.cc:87] loading 0 static secret(s)

[2025-04-19 11:37:55.527][1][info][config] [source/server/configuration_impl.cc:93] loading 1 cluster(s)

[2025-04-19 11:37:55.528][1][info][config] [source/server/configuration_impl.cc:97] loading 1 listener(s)

[2025-04-19 11:37:55.529][1][info][config] [source/server/configuration_impl.cc:109] loading stats configuration

[2025-04-19 11:37:55.529][1][info][runtime] [source/common/runtime/runtime_impl.cc:449] RTDS has finished initialization

[2025-04-19 11:37:55.529][1][info][upstream] [source/common/upstream/cluster_manager_impl.cc:206] cm init: all clusters initialized

[2025-04-19 11:37:55.529][1][warning][main] [source/server/server.cc:682] there is no configured limit to the number of allowed active connections. Set a limit via the runtime key overload.global_downstream_max_connections

[2025-04-19 11:37:55.529][1][info][main] [source/server/server.cc:785] all clusters initialized. initializing init manager

[2025-04-19 11:37:55.529][1][info][config] [source/server/listener_manager_impl.cc:834] all dependencies initialized. starting workers

[2025-04-19 11:37:55.531][1][info][main] [source/server/server.cc:804] starting main dispatch loop

14. Verify the timeout setting

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15001/headers

✅ Output

{

"headers": {

"Accept": [

"*/*"

],

"Host": [

"httpbin"

],

"User-Agent": [

"curl/8.13.0"

],

"X-Envoy-Expected-Rq-Timeout-Ms": [

"1000" # 1000 ms = 1 second

],

"X-Forwarded-Proto": [

"http"

],

"X-Request-Id": [

"22e6eaf3-fb3e-42c0-81b1-41462c19c8c8"

]

}

}

15. Query and change logging levels with the Envoy Admin API

(1) Query the default logging levels

All loggers are reported at the info level.
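The `active loggers:` listing returned by the command below is plain text with one `name: level` pair per line, so checking which loggers have been moved off the default is easy to script. A minimal parsing sketch (`parse_loggers` is a hypothetical name, and the sample is a trimmed copy of the listing):

```python
def parse_loggers(text: str) -> dict[str, str]:
    """Parse the plain-text 'active loggers:' listing from POST /logging."""
    levels = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip the header line and the blank separator lines.
        if not line or line == "active loggers:":
            continue
        name, _, level = line.partition(":")
        levels[name.strip()] = level.strip()
    return levels


sample = """active loggers:
  admin: info
  http: debug
  wasm: info
"""
levels = parse_loggers(sample)
# Loggers that are no longer at the default info level.
print([name for name, level in levels.items() if level != "info"])  # ['http']
```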

docker run -it --rm --link proxy curlimages/curl curl -X POST http://proxy:15000/logging

✅ Output

active loggers:

admin: info

aws: info

assert: info

backtrace: info

cache_filter: info

client: info

config: info

connection: info

conn_handler: info

decompression: info

dubbo: info

envoy_bug: info

ext_authz: info

rocketmq: info

file: info

filter: info

forward_proxy: info

grpc: info

hc: info

health_checker: info

http: info

http2: info

hystrix: info

init: info

io: info

jwt: info

kafka: info

lua: info

main: info

matcher: info

misc: info

mongo: info

quic: info

quic_stream: info

pool: info

rbac: info

redis: info

router: info

runtime: info

stats: info

secret: info

tap: info

testing: info

thrift: info

tracing: info

upstream: info

udp: info

wasm: info

(2) Change only the http logger to debug
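The backslashes in `\?http\=debug` below only stop shells such as zsh from treating `?` as a glob character; the URL Envoy actually receives is `/logging?http=debug`, sent with POST. A sketch that builds the same request without sending it, assuming the admin address `http://proxy:15000` is reachable (it is only resolvable from a container started with `--link proxy`); `logging_request` is a hypothetical helper:

```python
from urllib.parse import urlencode
from urllib.request import Request


def logging_request(base: str, **levels: str) -> Request:
    """Build (but do not send) a POST /logging request for the Envoy admin API.

    Each keyword argument becomes one query parameter, e.g. http="debug".
    With no arguments the request just lists the active loggers.
    """
    query = urlencode(levels)
    url = f"{base}/logging" + (f"?{query}" if query else "")
    # urllib defaults to GET; the walkthrough sends these calls with POST.
    return Request(url, method="POST")


req = logging_request("http://proxy:15000", http="debug")
print(req.get_method(), req.full_url)  # POST http://proxy:15000/logging?http=debug
```

Passing the request to `urllib.request.urlopen(req)` from inside a linked container would send it.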

docker run -it --rm --link proxy curlimages/curl curl -X POST http://proxy:15000/logging\?http\=debug

✅ Output

active loggers:

admin: info

aws: info

assert: info

backtrace: info

cache_filter: info

client: info

config: info

connection: info

conn_handler: info

decompression: info

dubbo: info

envoy_bug: info

ext_authz: info

rocketmq: info

file: info

filter: info

forward_proxy: info

grpc: info

hc: info

health_checker: info

http: debug

http2: info

hystrix: info

init: info

io: info

jwt: info

kafka: info

lua: info

main: info

matcher: info

misc: info

mongo: info

quic: info

quic_stream: info

pool: info

rbac: info

redis: info

router: info

runtime: info

stats: info

secret: info

tap: info

testing: info

thrift: info

tracing: info

upstream: info

udp: info

wasm: info

✅ Envoy proxy log

[2025-04-19 11:41:51.447][1][debug][http] [source/common/http/conn_manager_impl.cc:1456] [C3][S11192091099210294253] encoding headers via codec (end_stream=false):

':status', '200'

'content-type', 'text/plain; charset=UTF-8'

'cache-control', 'no-cache, max-age=0'

'x-content-type-options', 'nosniff'

'date', 'Sat, 19 Apr 2025 11:41:51 GMT'

'server', 'envoy'

16. Delayed request tests
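Given the 1000 ms timeout Envoy advertised in step 14, the three requests below should split into one success and two timeouts. The toy model here captures that decision; the `expected_status` function and its threshold rule are assumptions of this sketch, not Envoy's implementation:

```python
def expected_status(delay_s: float, route_timeout_ms: int = 1000) -> int:
    """Toy model of the status a client sees from Envoy for httpbin /delay/{n}.

    Assumes the upstream replies after delay_s seconds. Network and processing
    overhead are ignored, so a delay equal to the timeout counts as a timeout.
    """
    if delay_s * 1000 >= route_timeout_ms:
        # Envoy stops waiting and sends its own 504 "upstream request timeout".
        return 504
    return 200


for delay in (0.5, 1, 2):
    print(delay, expected_status(delay))
```

The /delay/1 case is borderline: httpbin's reply cannot arrive before the 1 s deadline, so Envoy answers first with its local 504 reply.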

(1) Request with a 0.5-second delay

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15001/delay/0.5

✅ Output

{

"args": {},

"headers": {

"Accept": [

"*/*"

],

"Host": [

"httpbin"

],

"User-Agent": [

"curl/8.13.0"

],

"X-Envoy-Expected-Rq-Timeout-Ms": [

"1000"

],

"X-Forwarded-Proto": [

"http"

],

"X-Request-Id": [

"38a3bb6d-a4a3-48ff-893e-de6fe1272312"

]

},

"method": "GET",

"origin": "172.17.0.3:51818",

"url": "http://httpbin/delay/0.5",

"data": "",

"files": {},

"form": {},

"json": null

}

✅ Envoy proxy log

[2025-04-19 11:43:24.074][45][debug][http] [source/common/http/filter_manager.cc:808] [C4][S1849126035353602963] request end stream

[2025-04-19 11:43:24.576][45][debug][http] [source/common/http/conn_manager_impl.cc:1456] [C4][S1849126035353602963] encoding headers via codec (end_stream=false):

':status', '200'

'access-control-allow-credentials', 'true'

'access-control-allow-origin', '*'

'content-type', 'application/json; charset=utf-8'

'server-timing', 'initial_delay;dur=500.00;desc="initial delay"'

'date', 'Sat, 19 Apr 2025 11:43:24 GMT'

'content-length', '483'

'x-envoy-upstream-service-time', '501'

'server', 'envoy'

(2) Request with a 1-second delay (times out)
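The debug log for this request shows exactly when the timeout fires: the gap between the `request end stream` line and the `Sending local reply with details upstream_response_timeout` line should equal the route timeout. A small sketch that measures it from the two log lines in the output below (`log_time` is a hypothetical helper; it assumes the `[YYYY-MM-DD HH:MM:SS.mmm]` prefix format seen in these logs):

```python
from datetime import datetime


def log_time(line: str) -> datetime:
    """Parse the leading [YYYY-MM-DD HH:MM:SS.mmm] timestamp of an Envoy log line."""
    stamp = line[1:line.index("]")]
    return datetime.strptime(stamp, "%Y-%m-%d %H:%M:%S.%f")


start = log_time("[2025-04-19 11:44:22.733][48][debug][http] [source/common/http/filter_manager.cc:808] [C6][S16463014453874968413] request end stream")
reply = log_time("[2025-04-19 11:44:23.733][48][debug][http] [source/common/http/filter_manager.cc:909] [C6][S16463014453874968413] Sending local reply with details upstream_response_timeout")
print((reply - start).total_seconds())  # 1.0
```

The same 1.0 s gap appears in the 2-second test that follows, since the deadline depends on the route timeout, not on how long httpbin would have taken.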

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15001/delay/1

✅ Output

upstream request timeout

✅ Envoy proxy log

[2025-04-19 11:44:22.733][48][debug][http] [source/common/http/filter_manager.cc:808] [C6][S16463014453874968413] request end stream

[2025-04-19 11:44:23.733][48][debug][http] [source/common/http/filter_manager.cc:909] [C6][S16463014453874968413] Sending local reply with details upstream_response_timeout

[2025-04-19 11:44:23.733][48][debug][http] [source/common/http/conn_manager_impl.cc:1456] [C6][S16463014453874968413] encoding headers via codec (end_stream=false):

':status', '504'

'content-length', '24'

'content-type', 'text/plain'

'date', 'Sat, 19 Apr 2025 11:44:23 GMT'

'server', 'envoy'

(3) Request with a 2-second delay (times out)

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15001/delay/2

✅ Output

upstream request timeout

✅ Envoy proxy log

[2025-04-19 11:45:27.485][37][debug][http] [source/common/http/filter_manager.cc:808] [C8][S7626896203224584865] request end stream

[2025-04-19 11:45:28.485][37][debug][http] [source/common/http/filter_manager.cc:909] [C8][S7626896203224584865] Sending local reply with details upstream_response_timeout

[2025-04-19 11:45:28.485][37][debug][http] [source/common/http/conn_manager_impl.cc:1456] [C8][S7626896203224584865] encoding headers via codec (end_stream=false):

':status', '504'

'content-length', '24'

'content-type', 'text/plain'

'date', 'Sat, 19 Apr 2025 11:45:28 GMT'

'server', 'envoy'

⚙️ Envoy’s Admin API

Envoy's Admin API lets you inspect the proxy's behavior, metrics, and configuration in real time.

1. Check Envoy stats

The response contains statistics and metrics for listeners, clusters, and the server itself.
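The `/stats` response is plain text, one `name: value` per line, which makes it easy to cross-check counters. For example, in the output below the two 200s and two 504s add up to `upstream_rq_completed: 4`. A minimal parser sketch (`parse_stats` is a hypothetical name; it keeps only integer-valued lines and skips everything else, such as histogram summaries or the `...` elision):

```python
def parse_stats(text: str) -> dict[str, int]:
    """Parse plain-text GET /stats output into {stat_name: value}."""
    stats = {}
    for line in text.splitlines():
        # Split on the last ": " so dotted stat names stay intact.
        name, sep, value = line.strip().rpartition(": ")
        if sep and value.isdigit():
            stats[name] = int(value)
    return stats


# Trimmed copy of the /stats output in this step.
sample = """cluster.httpbin_service.external.upstream_rq_200: 2
cluster.httpbin_service.external.upstream_rq_504: 2
cluster.httpbin_service.external.upstream_rq_completed: 4
..."""
stats = parse_stats(sample)
prefix = "cluster.httpbin_service.external."
print(stats[prefix + "upstream_rq_200"] + stats[prefix + "upstream_rq_504"]
      == stats[prefix + "upstream_rq_completed"])  # True
```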

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15000/stats

✅ Output

cluster.httpbin_service.assignment_stale: 0

cluster.httpbin_service.assignment_timeout_received: 0

cluster.httpbin_service.bind_errors: 0

cluster.httpbin_service.circuit_breakers.default.cx_open: 0

cluster.httpbin_service.circuit_breakers.default.cx_pool_open: 0

cluster.httpbin_service.circuit_breakers.default.rq_open: 0

cluster.httpbin_service.circuit_breakers.default.rq_pending_open: 0

cluster.httpbin_service.circuit_breakers.default.rq_retry_open: 0

cluster.httpbin_service.circuit_breakers.high.cx_open: 0

cluster.httpbin_service.circuit_breakers.high.cx_pool_open: 0

cluster.httpbin_service.circuit_breakers.high.rq_open: 0

cluster.httpbin_service.circuit_breakers.high.rq_pending_open: 0

cluster.httpbin_service.circuit_breakers.high.rq_retry_open: 0

cluster.httpbin_service.default.total_match_count: 1

cluster.httpbin_service.external.upstream_rq_200: 2

cluster.httpbin_service.external.upstream_rq_2xx: 2

cluster.httpbin_service.external.upstream_rq_504: 2

cluster.httpbin_service.external.upstream_rq_5xx: 2

cluster.httpbin_service.external.upstream_rq_completed: 4

cluster.httpbin_service.http1.dropped_headers_with_underscores: 0

cluster.httpbin_service.http1.metadata_not_supported_error: 0

cluster.httpbin_service.http1.requests_rejected_with_underscores_in_headers: 0

cluster.httpbin_service.http1.response_flood: 0

cluster.httpbin_service.lb_healthy_panic: 0

...

2. Check retry-related stats
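Every retry counter in the output below is 0, meaning the timed-out `/delay/1` and `/delay/2` requests were not retried, presumably because no retry policy is configured on the route at this point. The `| grep retry` filter has a direct equivalent on a parsed stats map; `grep_stats` is a hypothetical helper and the dictionary holds a few counters copied from this walkthrough:

```python
def grep_stats(stats: dict[str, int], needle: str) -> dict[str, int]:
    """Keep only stats whose name contains needle, like `| grep retry`."""
    return {name: value for name, value in stats.items() if needle in name}


stats = {
    "cluster.httpbin_service.upstream_rq_retry": 0,
    "cluster.httpbin_service.upstream_rq_retry_success": 0,
    "cluster.httpbin_service.external.upstream_rq_504": 2,
    "vhost.httpbin_local_service.vcluster.other.upstream_rq_retry": 0,
}
retries = grep_stats(stats, "retry")
# A non-zero retry counter would mean Envoy actually retried a request.
print({name: value for name, value in retries.items() if value})  # {}
```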

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15000/stats | grep retry

✅ Output

cluster.httpbin_service.circuit_breakers.default.rq_retry_open: 0

cluster.httpbin_service.circuit_breakers.high.rq_retry_open: 0

cluster.httpbin_service.retry_or_shadow_abandoned: 0

cluster.httpbin_service.upstream_rq_retry: 0

cluster.httpbin_service.upstream_rq_retry_backoff_exponential: 0

cluster.httpbin_service.upstream_rq_retry_backoff_ratelimited: 0

cluster.httpbin_service.upstream_rq_retry_limit_exceeded: 0

cluster.httpbin_service.upstream_rq_retry_overflow: 0

cluster.httpbin_service.upstream_rq_retry_success: 0

vhost.httpbin_local_service.vcluster.other.upstream_rq_retry: 0

vhost.httpbin_local_service.vcluster.other.upstream_rq_retry_limit_exceeded: 0

vhost.httpbin_local_service.vcluster.other.upstream_rq_retry_overflow: 0

vhost.httpbin_local_service.vcluster.other.upstream_rq_retry_success: 0

3. Check a few other endpoints

(1) List certificates

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15000/certs

✅ Output

{

"certificates": []

}

(2) View cluster information
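`/clusters` prints one `::`-separated line per value. Per-host lines have the shape `cluster::ip:port::stat::value`, and in the output below they show `rq_total::4` split into `rq_success::2` and `rq_error::2`, with `rq_timeout::2` matching the two 504s. A sketch that groups the host lines (`parse_clusters` is hypothetical, and telling host lines apart from cluster-level lines by the `:` inside `ip:port` is a heuristic of this sketch):

```python
def parse_clusters(text: str) -> dict[str, dict[str, dict[str, str]]]:
    """Group GET /clusters host lines into {cluster: {host: {stat: value}}}.

    Cluster-level lines such as 'cluster::default_priority::max_retries::3'
    are skipped; only 'cluster::ip:port::stat::value' lines are kept.
    """
    result = {}
    for line in text.splitlines():
        parts = line.strip().split("::")
        if len(parts) == 4 and ":" in parts[1]:
            cluster, host, stat, value = parts
            result.setdefault(cluster, {}).setdefault(host, {})[stat] = value
    return result


sample = """httpbin_service::default_priority::max_retries::3
httpbin_service::172.17.0.2:8000::rq_error::2
httpbin_service::172.17.0.2:8000::rq_success::2
httpbin_service::172.17.0.2:8000::rq_timeout::2
httpbin_service::172.17.0.2:8000::rq_total::4
httpbin_service::172.17.0.2:8000::health_flags::healthy
httpbin_service::172.17.0.2:8000::region::"""
host = parse_clusters(sample)["httpbin_service"]["172.17.0.2:8000"]
print(int(host["rq_total"]) == int(host["rq_success"]) + int(host["rq_error"]))  # True
```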

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15000/clusters

✅ Output

httpbin_service::observability_name::httpbin_service

httpbin_service::default_priority::max_connections::1024

httpbin_service::default_priority::max_pending_requests::1024

httpbin_service::default_priority::max_requests::1024

httpbin_service::default_priority::max_retries::3

httpbin_service::high_priority::max_connections::1024

httpbin_service::high_priority::max_pending_requests::1024

httpbin_service::high_priority::max_requests::1024

httpbin_service::high_priority::max_retries::3

httpbin_service::added_via_api::false

httpbin_service::172.17.0.2:8000::cx_active::0

httpbin_service::172.17.0.2:8000::cx_connect_fail::0

httpbin_service::172.17.0.2:8000::cx_total::4

httpbin_service::172.17.0.2:8000::rq_active::0

httpbin_service::172.17.0.2:8000::rq_error::2

httpbin_service::172.17.0.2:8000::rq_success::2

httpbin_service::172.17.0.2:8000::rq_timeout::2

httpbin_service::172.17.0.2:8000::rq_total::4

httpbin_service::172.17.0.2:8000::hostname::httpbin

httpbin_service::172.17.0.2:8000::health_flags::healthy

httpbin_service::172.17.0.2:8000::weight::1

httpbin_service::172.17.0.2:8000::region::

httpbin_service::172.17.0.2:8000::zone::

httpbin_service::172.17.0.2:8000::sub_zone::

httpbin_service::172.17.0.2:8000::canary::false

httpbin_service::172.17.0.2:8000::priority::0

httpbin_service::172.17.0.2:8000::success_rate::-1.0

httpbin_service::172.17.0.2:8000::local_origin_success_rate::-1.0

(3) View listener information
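`/listeners` prints `name::address:port` for each listener. The port in the output below should match the `15001` that the earlier curl commands target (`http://proxy:15001`). A small sketch that splits those lines (`parse_listeners` is a hypothetical name; it assumes IPv4-style `host:port` addresses):

```python
def parse_listeners(text: str) -> dict[str, tuple[str, int]]:
    """Parse GET /listeners plain-text output into {name: (host, port)}."""
    listeners = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        name, _, address = line.partition("::")
        # rpartition keeps everything before the last ':' as the host.
        host, _, port = address.rpartition(":")
        listeners[name] = (host, int(port))
    return listeners


print(parse_listeners("httpbin-demo::0.0.0.0:15001"))  # {'httpbin-demo': ('0.0.0.0', 15001)}
```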

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15000/listeners

✅ Output

httpbin-demo::0.0.0.0:15001

(4) Query and change logging levels

Query the current levels (http is still at debug from step 15)

docker run -it --rm --link proxy curlimages/curl curl -X POST http://proxy:15000/logging

✅ Output

active loggers:

admin: info

aws: info

assert: info

backtrace: info

cache_filter: info

client: info

config: info

connection: info

conn_handler: info

decompression: info

dubbo: info

envoy_bug: info

ext_authz: info

rocketmq: info

file: info

filter: info

forward_proxy: info

grpc: info

hc: info

health_checker: info

http: debug

http2: info

hystrix: info

init: info

io: info

jwt: info

kafka: info

lua: info

main: info

matcher: info

misc: info

mongo: info

quic: info

quic_stream: info

pool: info

rbac: info

redis: info

router: info

runtime: info

stats: info

secret: info

tap: info

testing: info

thrift: info

tracing: info

upstream: info

udp: info

wasm: info

Change the http logger to debug

docker run -it --rm --link proxy curlimages/curl curl -X POST http://proxy:15000/logging\?http\=debug

✅ Output

active loggers:

admin: info

aws: info

assert: info

backtrace: info

cache_filter: info

client: info

config: info

connection: info

conn_handler: info

decompression: info

dubbo: info

envoy_bug: info

ext_authz: info

rocketmq: info

file: info

filter: info

forward_proxy: info

grpc: info

hc: info

health_checker: info

http: debug

http2: info

hystrix: info

init: info

io: info

jwt: info

kafka: info

lua: info

main: info

matcher: info

misc: info

mongo: info

quic: info

quic_stream: info

pool: info

rbac: info

redis: info

router: info

runtime: info

stats: info

secret: info

tap: info

testing: info

thrift: info

tracing: info

upstream: info

udp: info

wasm: info

(5) View stats in Prometheus format
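`/stats/prometheus` returns the same counters in the Prometheus text format: `# TYPE` comment lines, then samples of the form `name{label="value"} number`, with the cluster name moved into the `envoy_cluster_name` label. A sketch that parses that simplified subset (`parse_prometheus` is hypothetical; it does not handle timestamps, label-value escapes, or the full exposition grammar):

```python
import re

# metric name, optional {labels}, then a single value token
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
# label="value" pairs; escape sequences inside values are left as-is
LABEL_RE = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')


def parse_prometheus(text: str) -> list[tuple[str, dict[str, str], float]]:
    """Return (metric_name, labels, value) for each sample line."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and # TYPE / # HELP comments
        match = SAMPLE_RE.match(line)
        if not match:
            continue
        name, labels, value = match.groups()
        samples.append((name, dict(LABEL_RE.findall(labels or "")), float(value)))
    return samples


sample = """# TYPE envoy_cluster_default_total_match_count counter
envoy_cluster_default_total_match_count{envoy_cluster_name="httpbin_service"} 1"""
print(parse_prometheus(sample))
```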

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15000/stats/prometheus

✅ Output

# TYPE envoy_cluster_assignment_stale counter

envoy_cluster_assignment_stale{envoy_cluster_name="httpbin_service"} 0

# TYPE envoy_cluster_assignment_timeout_received counter

envoy_cluster_assignment_timeout_received{envoy_cluster_name="httpbin_service"} 0

# TYPE envoy_cluster_bind_errors counter

envoy_cluster_bind_errors{envoy_cluster_name="httpbin_service"} 0

# TYPE envoy_cluster_default_total_match_count counter

envoy_cluster_default_total_match_count{envoy_cluster_name="httpbin_service"} 1

# TYPE envoy_cluster_external_upstream_rq counter