🤡 BERT4Rec: Sequential Recommendation with Bidirectional Encoder Representations from Transformer

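Euron paper review

Notes after the paper review session...

- The model predicts the very last item in the sequence => no "spoiler" (it cannot peek at the answer)

ex) camera -> SD card -> computer -> _ _ _ _ _ (what comes next?)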

-

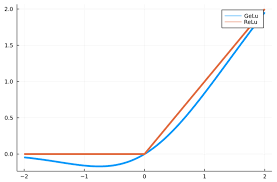

GELU function

-

ReLU와 다르게 아래로 볼록한 부분 존재
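A minimal pure-Python sketch comparing the two activations. It uses the exact form GELU(x) = x·Φ(x), where Φ is the standard normal CDF, rather than the tanh approximation some libraries use. The function names are only illustrative.

```python
import math


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def relu(x: float) -> float:
    return max(0.0, x)


# Compare the two activations at a few points; GELU is slightly negative near x = -0.75.
for x in (-3.0, -0.75, 0.0, 1.0, 3.0):
    print(f"x={x:5.2f}  relu={relu(x):.4f}  gelu={gelu(x):.4f}")
```

For large positive x, GELU approaches x (like ReLU). For large negative x it approaches 0. In between it is smooth rather than having ReLU's kink at 0.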

ABSTRACT

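Previous recommendation models encode a user's historical interactions sequentially in one direction (left -> right). However,

(a) unidirectional architectures restrict the power of the hidden representations in users' behavior sequences.

(b) they assume a rigidly ordered sequence, which is not always practical.

To address these limitations, the paper proposes BERT4Rec, which models user behavior sequences with deep bidirectional self-attention. It adopts the Cloze task: randomly masked items in the sequence are predicted by jointly conditioning on both their left and right context.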

1. INTRODUCTION

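Main limitation of unidirectional models: they restrict the power of the hidden representations of items in the historical sequence, because each item can only encode information from the items before it.

=> In real-world applications, user behavior may not follow a rigid order, because interactions are influenced by many unobservable external factors.

- It is therefore important to incorporate context from both directions of the user behavior sequence. Inspired by the success of BERT in understanding textual context, the authors apply a bidirectional self-attention model to sequential recommendation.

- Problem: conventional sequential models are trained to predict the next item from the preceding items. A bidirectional model conditions on both left and right context, so each item can (indirectly) see the very item it is supposed to predict. This information leakage can leave the model learning nothing useful.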
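To make the leakage problem concrete, here is a small sketch of attention-visibility masks. It assumes the usual convention that entry [i][j] = 1 means position i may attend to position j. The helper names are hypothetical. Under plain next-item training, position i must predict the item at i + 1. A left-to-right (causal) mask hides that item, but a fully bidirectional mask exposes it.

```python
def causal_mask(t: int):
    """Left-to-right mask: position i may attend only to positions j <= i."""
    return [[1 if j <= i else 0 for j in range(t)] for i in range(t)]


def bidirectional_mask(t: int):
    """Bidirectional mask: every position may attend to every position."""
    return [[1] * t for _ in range(t)]


def sees_next_item(mask, i: int) -> bool:
    """True if position i can attend to position i + 1, i.e. the item it would
    have to predict under plain next-item training."""
    return i + 1 < len(mask) and mask[i][i + 1] == 1


t = 4
print([sees_next_item(causal_mask(t), i) for i in range(t)])
# [False, False, False, False]
print([sees_next_item(bidirectional_mask(t), i) for i in range(t)])
# [True, True, True, False]
```

This is why a bidirectional model needs a different training objective than next-item prediction.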

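Adopting the Cloze task

- Instead of predicting the next item sequentially, the Cloze objective replaces the training objective of unidirectional models: part of the input sequence is randomly masked, and the model predicts the original IDs of the masked items from their surrounding context.

=> This avoids information leakage and produces more training samples, since many different masked versions can be drawn from a single sequence.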
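A minimal sketch of Cloze-style masking. It assumes a mask proportion `rho`, which the paper treats as a hyperparameter. The rule "always mask at least one item", the helper name `cloze_mask`, and the item names are assumptions made for illustration. Each call can yield a different masked copy of the same sequence. This is where the extra training samples come from.

```python
import math
import random

MASK = "[mask]"


def cloze_mask(seq, rho, rng):
    """Replace a proportion rho of the items in seq with [mask].

    Returns (masked_seq, targets), where targets maps each masked position
    to the original item the model must recover.
    """
    n_mask = max(1, round(rho * len(seq)))  # assumption: always mask at least one item
    positions = sorted(rng.sample(range(len(seq)), n_mask))
    masked = list(seq)
    targets = {}
    for p in positions:
        targets[p] = masked[p]
        masked[p] = MASK
    return masked, targets


seq = ["camera", "sd_card", "computer", "mouse", "keyboard"]
rng = random.Random(0)
for _ in range(3):
    print(cloze_mask(seq, 0.4, rng))  # possibly a different training sample each call

# Number of distinct samples obtainable by masking 2 of the 5 items
print(math.comb(len(seq), 2))  # 10
```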

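However, the Cloze task is inconsistent with the final task, sequential recommendation. => At test time, a special token [mask] is appended to the end of the input sequence to mark the item to predict, and the recommendation is made from the final hidden vector of that token.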
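The test-time input can be sketched as follows. The function name and the `max_len` truncation (keeping only the most recent items so the sequence fits the model's input length) are assumptions for illustration. The model would then read its prediction off the hidden vector at the final `[mask]` position.

```python
MASK = "[mask]"


def build_inference_input(history, max_len):
    """Append [mask] after the user's history and keep only the most recent
    max_len tokens (max_len is an assumed model input limit)."""
    seq = list(history) + [MASK]
    return seq[-max_len:]


print(build_inference_input(["camera", "sd_card", "computer"], max_len=3))
# ['sd_card', 'computer', '[mask]']
```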

3. BERT4REC

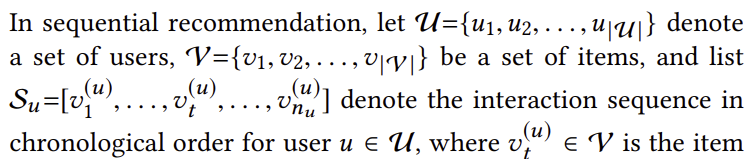

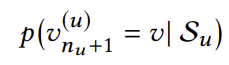

3.1 Problem Statement

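In sequential recommendation, given the interaction history S_u of user u, the goal is to model the probability that u interacts with item v at time step n_u+1 (n_u is the length of the history).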

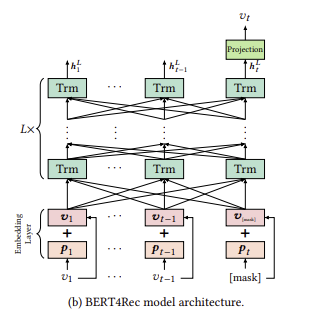
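Written out in the paper's notation, where S_u is u's chronologically ordered interaction sequence, n_u is its length, and 𝒱 is the item set (assuming the figure above shows the standard formulation):

$$
S_u = \left[v_1^{(u)}, \dots, v_t^{(u)}, \dots, v_{n_u}^{(u)}\right], \qquad v_t^{(u)} \in \mathcal{V}
$$

$$
p\left(v_{n_u+1}^{(u)} = v \mid S_u\right)
$$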

3.2 Model Architecture

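BERT4Rec: applies Bidirectional Encoder Representations from Transformers to sequential recommendation

- Consists of L stacked bidirectional Transformer layers. At each layer, the representation at every position is iteratively revised by exchanging information across all positions of the previous layer.

=> Self-attention mechanism: it can directly capture dependencies at any distance.

+) Compared with earlier models: CNN-based models have a limited receptive field, and RNN-based models are hard to parallelize, whereas self-attention has a global receptive field and is straightforward to parallelize.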

3.3 Transformer Layer

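Input sequence of length t => at each layer l, a hidden representation h_i^l is computed for every position i. Since the attention function is computed over all positions simultaneously, all h_i^l are stacked into a single matrix H^l ∈ R^{t×d}.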

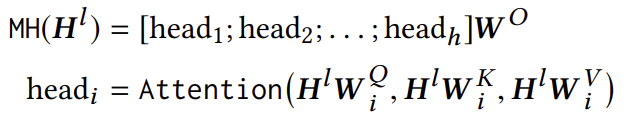

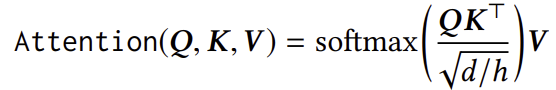

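Multi-Head Self-Attention: attention captures dependencies between pairs of representations regardless of their distance in the sequence, which is why it is used in many tasks.

=> Jointly attending to information from different representation subspaces at different positions has been shown to be beneficial, so multi-head attention is adopted.

H^l from above => linearly projected into h subspaces => h attention functions applied in parallel => outputs concatenated and projected once more

- W^Q, W^K, W^V, and W^O are all learnable parameters. The projected queries, keys, and values are then used to compute the attention values (scaled dot-product attention).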
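A toy pure-Python sketch of the multi-head step described above: project H into h subspaces, run scaled dot-product attention per head, concatenate, then project with W^O. The weights are random stand-ins for learned parameters, and the sizes are arbitrary. Dropout, residual connections, and layer normalization are deliberately left out. No attention mask is applied, so every position attends to every other (bidirectional).

```python
import math
import random


def matmul(a, b):
    """(n x k) @ (k x m) for matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(r) for r in zip(*m)]


def softmax(row):
    top = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention with no mask: every position attends to all others."""
    d_k = len(q[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


def multi_head(H, W_Q, W_K, W_V, W_O):
    """H: t x d. W_Q/W_K/W_V: one d x (d/h) matrix per head. W_O: d x d."""
    heads = [attention(matmul(H, wq), matmul(H, wk), matmul(H, wv))[0]
             for wq, wk, wv in zip(W_Q, W_K, W_V)]
    # Concatenate the h head outputs along the feature axis, then project with W_O.
    concat = [sum((head[i] for head in heads), []) for i in range(len(H))]
    return matmul(concat, W_O)


def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


t, d, h = 4, 8, 2  # toy sizes: sequence length, hidden size, number of heads
rng = random.Random(42)
H = rand_matrix(t, d, rng)
W_Q = [rand_matrix(d, d // h, rng) for _ in range(h)]
W_K = [rand_matrix(d, d // h, rng) for _ in range(h)]
W_V = [rand_matrix(d, d // h, rng) for _ in range(h)]
W_O = rand_matrix(d, d, rng)

out = multi_head(H, W_Q, W_K, W_V, W_O)
print(len(out), len(out[0]))  # 4 8
```

Scaling by the square root of the per-head dimension (here d/h) keeps the dot products from growing with the dimension. Without it, the softmax would saturate.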

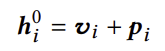

3.4 Embedding Layer

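The Transformer layer Trm is not aware of the order of the input sequence. Positional embeddings are therefore added to the input item embeddings to inject position information.

Instead of fixed sinusoid embeddings, learnable positional embeddings are used for better performance.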
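A minimal sketch of the input layer h_i^0 = v_i + p_i (item embedding plus positional embedding). The toy sizes are assumptions, as is the extra row reserved for `[mask]`. In a real model both tables are parameters trained by gradient descent. Here they are only randomly initialized lists.

```python
import random


def make_table(n_rows, dim, rng):
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(n_rows)]


num_items, max_len, d = 10, 5, 4              # toy sizes (assumed)
rng = random.Random(0)
item_emb = make_table(num_items + 1, d, rng)  # assumption: extra row reserved for [mask]
pos_emb = make_table(max_len, d, rng)         # learnable: one trained vector per position


def embed(item_ids):
    """h_i^0 = v_i + p_i: item embedding plus the embedding of its position."""
    if len(item_ids) > max_len:
        raise ValueError("sequence longer than the number of positional embeddings")
    return [[e + p for e, p in zip(item_emb[item], pos_emb[pos])]
            for pos, item in enumerate(item_ids)]


H0 = embed([3, 3, 7])
print(H0[0] == H0[1])  # False: the same item gets a different input vector at another position
```

One consequence of learnable positional embeddings is that the table has a fixed number of rows. Longer sequences must therefore be truncated (for example, to their most recent items).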

3.5 Output Layer

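After the L layers have hierarchically exchanged information across all positions of the previous layer, the final output H^L is obtained for every item of the input sequence.

- Suppose item v_t is masked at time step t. The model predicts v_t from h_t^L, using a two-layer feed-forward network with GELU activation in between to produce an output distribution over the target items.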
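A pure-Python sketch of this output layer, following the paper's form P(v) = softmax(GELU(h_t^L W^P + b^P) E^T + b^O). Here W^P, b^P, and b^O are learnable and E is the item embedding matrix shared with the input layer. The weights below are random toy values. The loss shown is the negative log-likelihood at one masked position. In training it would be averaged over all masked positions.

```python
import math
import random


def gelu(x):
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def softmax(xs):
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def output_distribution(h_t, W_P, b_P, E, b_O):
    """P(v) = softmax(GELU(h_t W^P + b^P) E^T + b^O) over all items."""
    # Layer 1: d x d projection followed by GELU.
    z = [gelu(sum(x * w for x, w in zip(h_t, col)) + b)
         for col, b in zip(zip(*W_P), b_P)]
    # Layer 2: score every item by a dot product with its (shared) embedding, plus a bias.
    logits = [sum(zi * ei for zi, ei in zip(z, e_v)) + b for e_v, b in zip(E, b_O)]
    return softmax(logits)


def masked_item_loss(probs, target):
    """Negative log-likelihood of the true item at one masked position."""
    return -math.log(probs[target])


d, num_items = 4, 6  # toy sizes
rng = random.Random(1)
h_t = [rng.gauss(0.0, 1.0) for _ in range(d)]  # stand-in for the final hidden vector h_t^L
W_P = [[rng.gauss(0.0, 0.5) for _ in range(d)] for _ in range(d)]
b_P = [0.0] * d
E = [[rng.gauss(0.0, 0.5) for _ in range(d)] for _ in range(num_items)]  # item embeddings
b_O = [0.0] * num_items

probs = output_distribution(h_t, W_P, b_P, E, b_O)
print(round(sum(probs), 6), masked_item_loss(probs, target=2))
```

Reusing E in the output layer means scoring an item is just a dot product with its input embedding. This also avoids a second item-sized weight matrix.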

3.7 Discussion

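Comparison with existing recommendation models

- SASRec: essentially a left-to-right unidirectional version of BERT4Rec, with single-head attention and a causal attention mask. It predicts the next item at each position, whereas BERT4Rec predicts the masked items with the Cloze objective.

- CBOW & SG: like BERT4Rec, they use both left and right context, but they can be seen as simplified cases with a single self-attention layer that assigns uniform weights to all items.

- BERT: BERT is a pre-training model for sentence representation, whereas BERT4Rec is an end-to-end model for sequential recommendation. Unlike BERT, BERT4Rec removes the next sentence loss and the segment embeddings, since a user's history is modeled as a single sequence.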

4. Experiments

- Datasets used

1) Amazon Beauty

2) Steam

3) MovieLens (ML-1m and ML-20m)

- Evaluation Metrics

1) Hit Ratio

2) Normalized Discounted Cumulative Gain

3) Mean Reciprocal Rank
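A small sketch of the three metrics for the case of one ground-truth item per user. This is an assumption matching leave-one-out evaluation. The candidate scores are hypothetical. In practice, each metric is averaged over all users.

```python
import math


def rank_of(target, scores):
    """1-based rank of target among candidate items sorted by descending score.
    Ties are resolved in the target's favor (an arbitrary choice for this sketch)."""
    return 1 + sum(1 for item, s in scores.items() if item != target and s > scores[target])


def hit_ratio_at_k(rank, k):
    return 1.0 if rank <= k else 0.0


def ndcg_at_k(rank, k):
    # With one relevant item the ideal DCG is 1, so NDCG@k = 1 / log2(rank + 1).
    return 1.0 / math.log2(rank + 1) if rank <= k else 0.0


def mrr(rank):
    return 1.0 / rank


scores = {"a": 0.9, "b": 0.5, "target": 0.7, "c": 0.1}  # hypothetical model scores
r = rank_of("target", scores)
print(r, hit_ratio_at_k(r, 1), hit_ratio_at_k(r, 5), round(ndcg_at_k(r, 5), 4), mrr(r))
# 2 0.0 1.0 0.6309 0.5
```

HR@k only checks whether the true item is in the top k. NDCG@k and MRR also reward placing it higher.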

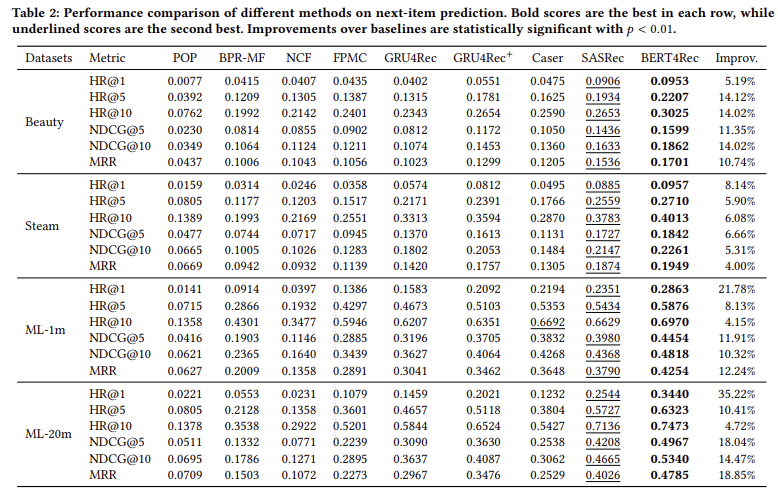

- Performance of the baseline models and BERT4Rec on the four datasets.

5. Conclusion and Future Work

Future work: incorporate rich item features, such as product category and price or a movie's cast, into BERT4Rec instead of modeling items by their IDs alone.