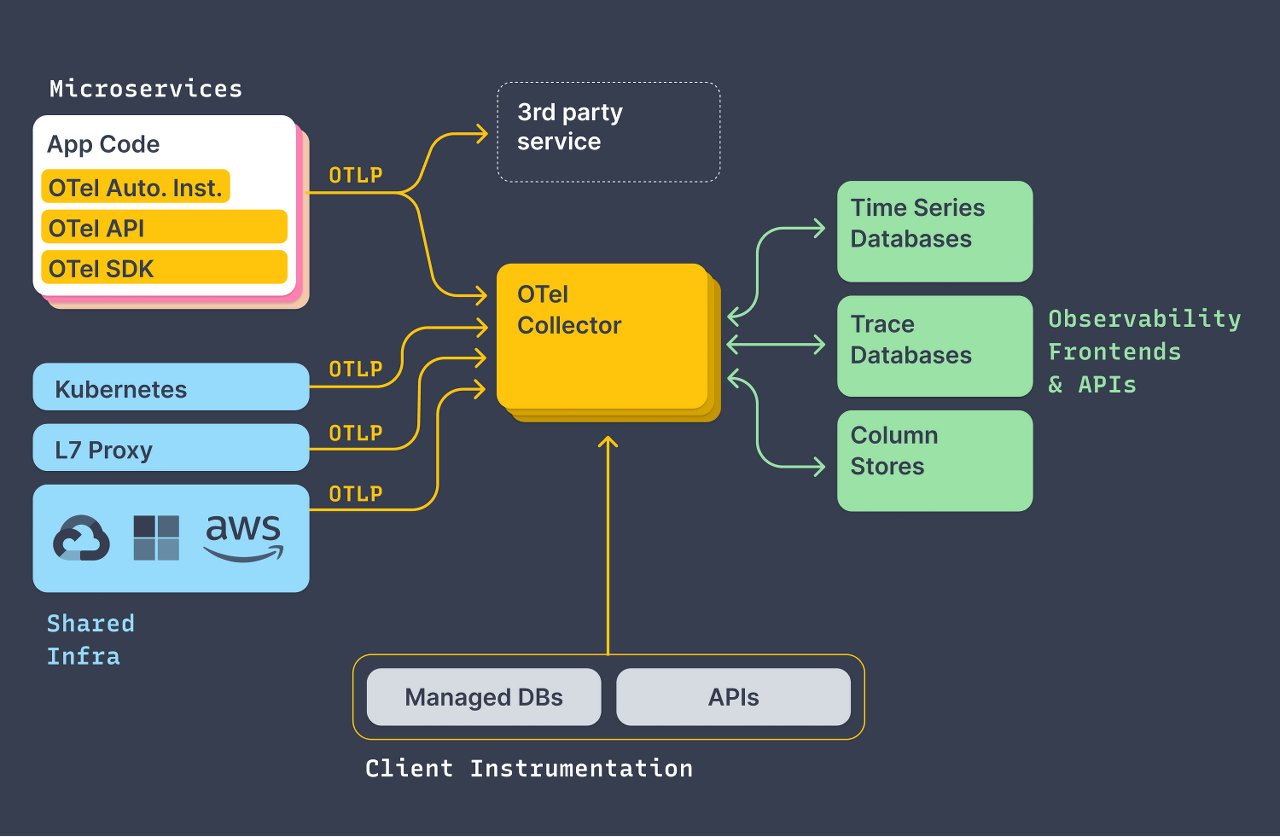

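OpenTelemetry aims to build a standard for data collection. Agents collect the data, apply light processing, and are responsible only for sending it to a destination that stores and presents it. Everything else, such as statistics and visualization, is left to vendors.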

This post summarizes what I looked into while building Kubernetes (k8s) observability on open-source components.

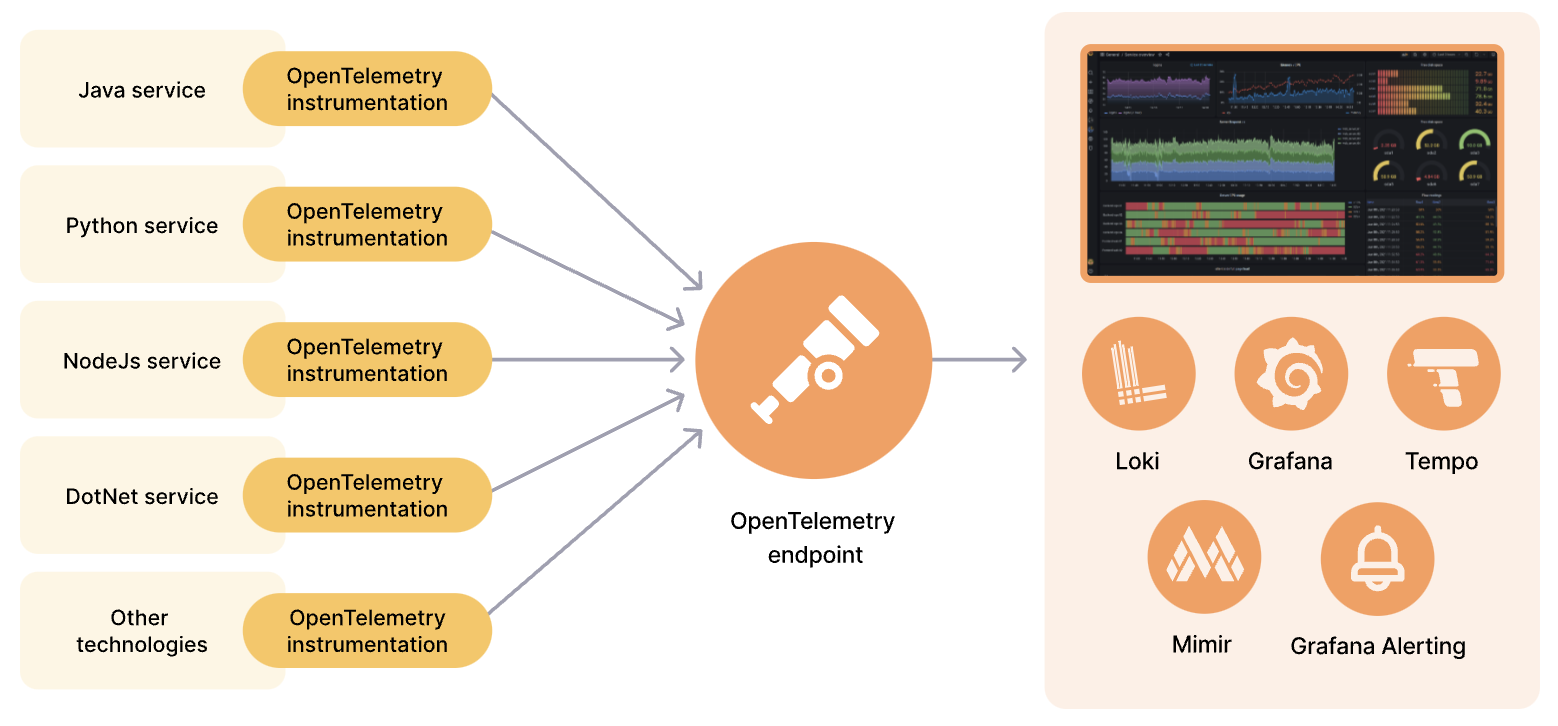

OpenTelemetry (OTel) defines a standard for receiving, processing, and exporting MTL (metrics, traces, logs). The backend systems can be built from either commercial or open-source products.
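As a minimal sketch of that receive, process, export split, a collector configuration wires each signal type through its own pipeline. The components chosen here (`otlp` receiver, `batch` processor, `debug` exporter) are illustrative assumptions; in a real setup the exporters would point at the backend systems.

```yaml
# Minimal collector config (illustrative): one OTLP receiver feeding
# separate metrics, traces, and logs pipelines.
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318
processors:
  batch: {}          # groups telemetry into batches before export
exporters:
  # debug prints telemetry to the collector's stdout; replace it with real
  # backend exporters (e.g. otlp, prometheusremotewrite) in practice.
  debug: {}
service:
  pipelines:
    metrics:
      receivers: [otlp]
      processors: [batch]
      exporters: [debug]
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [debug]
    logs:
      receivers: [otlp]
      processors: [batch]
      exporters: [debug]
```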

- Collector

- Target Allocator

- Auto-Instrumentation

- OpenTelemetry auto-instrumentation lets you collect tracing data from an application without modifying its source code.

- Image versions used

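There are three collector distributions. opentelemetry-collector (classic) contains only the core components, while opentelemetry-collector-contrib includes all of the components. opentelemetry-collector-k8s is built specifically for monitoring a k8s cluster and its components, using a selection of components from classic and contrib.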

```shell
docker pull otel/opentelemetry-operator:0.102.0
docker pull otel/opentelemetry-collector:0.102.1
docker pull otel/opentelemetry-collector-contrib:0.102.1
docker pull otel/opentelemetry-collector-k8s:0.102.1
```

This post uses opentelemetry-collector-contrib, but you can also build your own distribution tailored to each environment (https://opentelemetry.io/docs/collector/custom-collector).
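For reference, a custom distribution is built with the OpenTelemetry Collector Builder (`ocb`) from a manifest listing the components to compile in. This is a sketch under assumptions: the distribution name, output path, and component list are hypothetical, and every module version should match the builder release you use (v0.102.1 is assumed here only to line up with the images above).

```yaml
# builder-config.yaml (hypothetical): input manifest for the OpenTelemetry Collector Builder
dist:
  name: otelcol-custom                 # hypothetical binary name
  description: Custom OTel Collector distribution
  output_path: ./otelcol-custom        # where the generated sources and binary are written
receivers:
  - gomod: go.opentelemetry.io/collector/receiver/otlpreceiver v0.102.1
processors:
  - gomod: go.opentelemetry.io/collector/processor/batchprocessor v0.102.1
exporters:
  - gomod: go.opentelemetry.io/collector/exporter/debugexporter v0.102.1
  - gomod: go.opentelemetry.io/collector/exporter/otlpexporter v0.102.1
```

With the builder installed, `ocb --config builder-config.yaml` generates the sources and compiles the binary into `output_path`. Listing only the components you actually use keeps the binary and its attack surface smaller than contrib.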

Backend Systems

- Setting up with docker-compose

Reference: https://github.com/baiyongzhen/opentelemetry-collector-on-kubernetes

otel/operator helm chart override-values.yaml

```yaml
replicaCount: 1
nameOverride: ""
fullnameOverride: ""
imagePullSecrets: []
clusterDomain: cluster.local
additionalLabels: {}
pdb:
  create: false
  minAvailable: 1
  maxUnavailable: ""
manager:
  image:
    repository: 192.168.0.52/opentelemetry-operator/opentelemetry-operator
    tag: "0.102.0"
  collectorImage:
    repository: "192.168.0.52/opentelemetry-operator/opentelemetry-collector-contrib"
    tag: 0.102.1
  opampBridgeImage:
    repository: ""
    tag: ""
  targetAllocatorImage:
    repository: ""
    tag: ""
  autoInstrumentationImage:
    java:
      repository: ""
      tag: ""
    nodejs:
      repository: ""
      tag: ""
    python:
      repository: ""
      tag: ""
    dotnet:
      repository: ""
      tag: ""
    go:
      repository: ""
      tag: ""
  featureGates: ""
  ports:
    metricsPort: 8080
    webhookPort: 9443
    healthzPort: 8081
  resources:
    limits:
      cpu: 100m
      memory: 128Mi
      # ephemeral-storage: 50Mi
    requests:
      cpu: 100m
      memory: 64Mi
  env:
    ENABLE_WEBHOOKS: "true"
  serviceAccount:
    create: true
    annotations: {}
    name: ""
  serviceMonitor:
    enabled: false
    extraLabels: {}
    annotations: {}
    metricsEndpoints:
      - port: metrics
  deploymentAnnotations: {}
  serviceAnnotations: {}
  podAnnotations: {}
  podLabels: {}
  prometheusRule:
    enabled: false
    groups: []
    defaultRules:
      enabled: false
    extraLabels: {}
    annotations: {}
  createRbacPermissions: false
  extraArgs: []
  leaderElection:
    enabled: true
  verticalPodAutoscaler:
    enabled: false
    controlledResources: []
    maxAllowed: {}
    minAllowed: {}
    updatePolicy:
      updateMode: Auto
      minReplicas: 2
  rolling: false
  securityContext: {}
kubeRBACProxy:
  enabled: true
  image:
    repository: quay.io/brancz/kube-rbac-proxy
    tag: v0.15.0
  ports:
    proxyPort: 8443
  resources:
    limits:
      cpu: 500m
      memory: 128Mi
    requests:
      cpu: 5m
      memory: 64Mi
  extraArgs: []
  securityContext: {}
admissionWebhooks:
  create: true
  servicePort: 443
  failurePolicy: Fail
  secretName: ""
  pods:
    failurePolicy: Ignore
  namePrefix: ""
  timeoutSeconds: 10
  namespaceSelector: {}
  objectSelector: {}
  certManager:
    enabled: false
    issuerRef: {}
    certificateAnnotations: {}
    issuerAnnotations: {}
  autoGenerateCert:
    enabled: true
    recreate: true
  certFile: ""
  keyFile: ""
  caFile: ""
  serviceAnnotations: {}
  secretAnnotations: {}
  secretLabels: {}
crds:
  create: true
role:
  create: true
clusterRole:
  create: true
affinity: {}
tolerations: []
nodeSelector: {}
topologySpreadConstraints: []
hostNetwork: false
priorityClassName: ""
securityContext:
  runAsGroup: 65532
  runAsNonRoot: true
  runAsUser: 65532
  fsGroup: 65532
testFramework:
  image:
    repository: busybox
    tag: latest
```

collector workload

```yaml
apiVersion: opentelemetry.io/v1alpha1
kind: OpenTelemetryCollector
metadata:
  name: otel-collector
  namespace: opentelemetry
  labels:
    app: opentelemetry
    component: otel-collector
# https://github.com/open-telemetry/opentelemetry-operator/blob/main/docs/api.md#opentelemetrycollector
spec:
  # https://github.com/open-telemetry/opentelemetry-operator/blob/main/docs/api.md#opentelemetrycollectorspec
  # https://github.com/open-telemetry/opentelemetry-operator/blob/main/config/crd/bases/opentelemetry.io_opentelemetrycollectors.yaml
  # mode: deployment
  mode: daemonset
  hostNetwork: true
  config: |
    receivers:
      otlp:
        protocols:
          grpc:
          http:
      prometheus:
        config:
          scrape_configs:
            - job_name: apps
              # scrape_interval: 5s
              kubernetes_sd_configs:
                - role: pod
              relabel_configs:
                # scrape pods annotated with "prometheus.io/scrape: true"
                - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
                  regex: "true"
                  action: keep
                # scrape pods annotated with "prometheus.io/path: /metrics"
                - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
                  action: replace
                  target_label: __metrics_path__
                  regex: (.+)
                # read the port from "prometheus.io/port: <port>" annotation and update scraping address accordingly
                - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
                  action: replace
                  target_label: __address__
                  regex: ([^:]+)(?::\d+)?;(\d+)
                  # escaped $1:$2 (the collector expands $ as env vars, so a literal $ must be written $$)
                  replacement: $$1:$$2
    processors:
      batch:
    exporters:
      logging:
      # metrics
      prometheusremotewrite:
        endpoint: http://192.168.0.52:9090/api/v1/write
      # traces
      otlp/tempo:
        endpoint: http://192.168.0.52:4317
        tls:
          insecure: true
          insecure_skip_verify: true
      # opentelemetry-collector:0.83.0: error decoding 'exporters': unknown type: "loki" for id
      # logs
      #loki:
      #  endpoint: http://192.168.0.52:3100/loki/api/v1/push
    service:
      pipelines:
        metrics:
          receivers: [prometheus]
          processors: [batch]
          #exporters: [logging]
          exporters: [logging, prometheusremotewrite]
        traces:
          receivers: [otlp]
          processors: [batch]
          #exporters: [logging]
          exporters: [logging, otlp/tempo]
        logs:
          receivers: [otlp]
          processors: [batch]
          exporters: [logging]
  # https://github.com/open-telemetry/opentelemetry-operator/blob/main/docs/api.md#opentelemetrycollectorspecportsindex
  ports:
    - name: fluent-tcp
      port: 8006
      protocol: TCP
    - name: fluent-udp
      port: 8006
      protocol: UDP
---
# https://github.com/lightstep/otel-collector-charts/blob/main/charts/collector-k8s/templates/collector.yaml
#apiVersion: rbac.authorization.k8s.io/v1beta1
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: otel-collector
  namespace: opentelemetry
rules:
  - apiGroups: [""]
    resources:
      - nodes
      - nodes/proxy
      - nodes/metrics
      - services
      - endpoints
      - pods
    verbs: ["get", "list", "watch"]
  - apiGroups: ["monitoring.coreos.com"]
    resources:
      - servicemonitors
      - podmonitors
    verbs: ["get", "list", "watch"]
  - apiGroups:
      - extensions
    resources:
      - ingresses
    verbs: ["get", "list", "watch"]
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
    verbs: ["get", "list", "watch"]
  - nonResourceURLs: ["/metrics", "/metrics/cadvisor"]
    verbs: ["get"]
---
#apiVersion: rbac.authorization.k8s.io/v1beta1
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: otel-collector
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: otel-collector
subjects:
  - kind: ServiceAccount
    # quirk of the Operator
    name: otel-collector-collector
    namespace: opentelemetry
```

Port-forward the sample Go app and run the request script from the reference repository to generate traffic:

```shell
kubectl -n opentelemetry port-forward svc/otel-golang 8080:8080 &
opentelemetry-collector-on-kubernetes/opentelemetry/apps/request-script.sh
```
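The `apps` scrape job in the collector above only keeps pods that opt in through `prometheus.io/*` annotations. A sketch of a workload that would be picked up; the Deployment name, image, and port are hypothetical. With these values the last relabel rule joins `__address__` and the port annotation with `;` (for example `10.0.0.5:80;8080`), and the regex rewrites the target to `10.0.0.5:8080`.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-app                       # hypothetical name
  namespace: opentelemetry
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sample-app
  template:
    metadata:
      labels:
        app: sample-app
      annotations:
        prometheus.io/scrape: "true"     # matched by the "keep" rule
        prometheus.io/path: /metrics     # copied into __metrics_path__
        prometheus.io/port: "8080"       # replaces the port in __address__
    spec:
      containers:
        - name: sample-app
          image: sample-app:latest       # hypothetical image exposing /metrics on 8080
          ports:
            - containerPort: 8080
```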

- Loki

- Tempo

```shell
$ helm repo add grafana https://grafana.github.io/helm-charts
$ helm pull grafana/tempo-distributed
$ vi override-values.yaml
```

```yaml
ingester:
  persistence:
    enabled: true
    size: 10Gi
traces:
  otlp:
    http:
      enabled: true
      receiverConfig: {}
    grpc:
      enabled: true
      receiverConfig: {}
storage:
  trace:
    block:
      version: vParquet
    backend: local
  admin:
    backend: filesystem
```

```shell
$ helm -n opentelemetry upgrade -i --create-namespace -f override-values.yaml smap-tempo ./tempo-distributed-1.4.2.tgz
$ kubectl get svc tempo-distributor-discovery
```

In Grafana, go to Dashboard > Data sources > Add data source, enter `Tempo` as the name and `http://tempo-query-frontend-discovery.tempo:3100` as the HTTP URL, and save.
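The same data source can also be declared through Grafana's provisioning files instead of the UI. A minimal sketch, assuming Grafana loads data source files from its provisioning directory (`/etc/grafana/provisioning/datasources` in the official image); the file name is hypothetical and the URL is the one entered above.

```yaml
# /etc/grafana/provisioning/datasources/tempo.yaml (hypothetical file name)
apiVersion: 1
datasources:
  - name: Tempo
    type: tempo
    access: proxy          # Grafana's backend, not the browser, calls Tempo
    url: http://tempo-query-frontend-discovery.tempo:3100
```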

Installing the OpenTelemetry Operator

This operator manages OpenTelemetryCollector and Instrumentation workloads. It can be installed with plain YAML manifests or with a Helm chart.

- Install cert-manager (optional; the override values above set `admissionWebhooks.certManager.enabled: false` and rely on `autoGenerateCert` instead)

```shell
$ kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.15.0/cert-manager.yaml
```

- Install the operator with Helm (YAML or Helm chart)

As of June 7, otel/opentelemetry-operator:0.102.0 is the latest version.

```shell
$ helm repo add open-telemetry https://open-telemetry.github.io/opentelemetry-helm-charts
$ helm repo update
```

Edit values.yaml and deploy.

```shell
$ helm show values open-telemetry/opentelemetry-operator > values.yaml
$ cp values.yaml override-values.yaml
$ vi override-values.yaml
$ helm -n opentelemetry upgrade -i utc-otel-operator --create-namespace -f override-values.yaml open-telemetry/opentelemetry-operator
```

If problems occur while installing the operator, refer to the following:

- Helm chart - https://github.com/open-telemetry/opentelemetry-helm-charts

- Guide - https://opentelemetry.io/docs/kubernetes/helm/operator

- https://github.com/open-telemetry/opentelemetry-helm-charts/blob/main/charts/opentelemetry-operator/README.md

- Verify the installation

```shell
$ kubectl get crd | grep opentelemetry
instrumentations.opentelemetry.io 2024-06-06T05:30:17Z
opampbridges.opentelemetry.io 2024-06-06T05:30:17Z
opentelemetrycollectors.opentelemetry.io 2024-06-06T05:30:17Z
```

Creating an OpenTelemetry collector

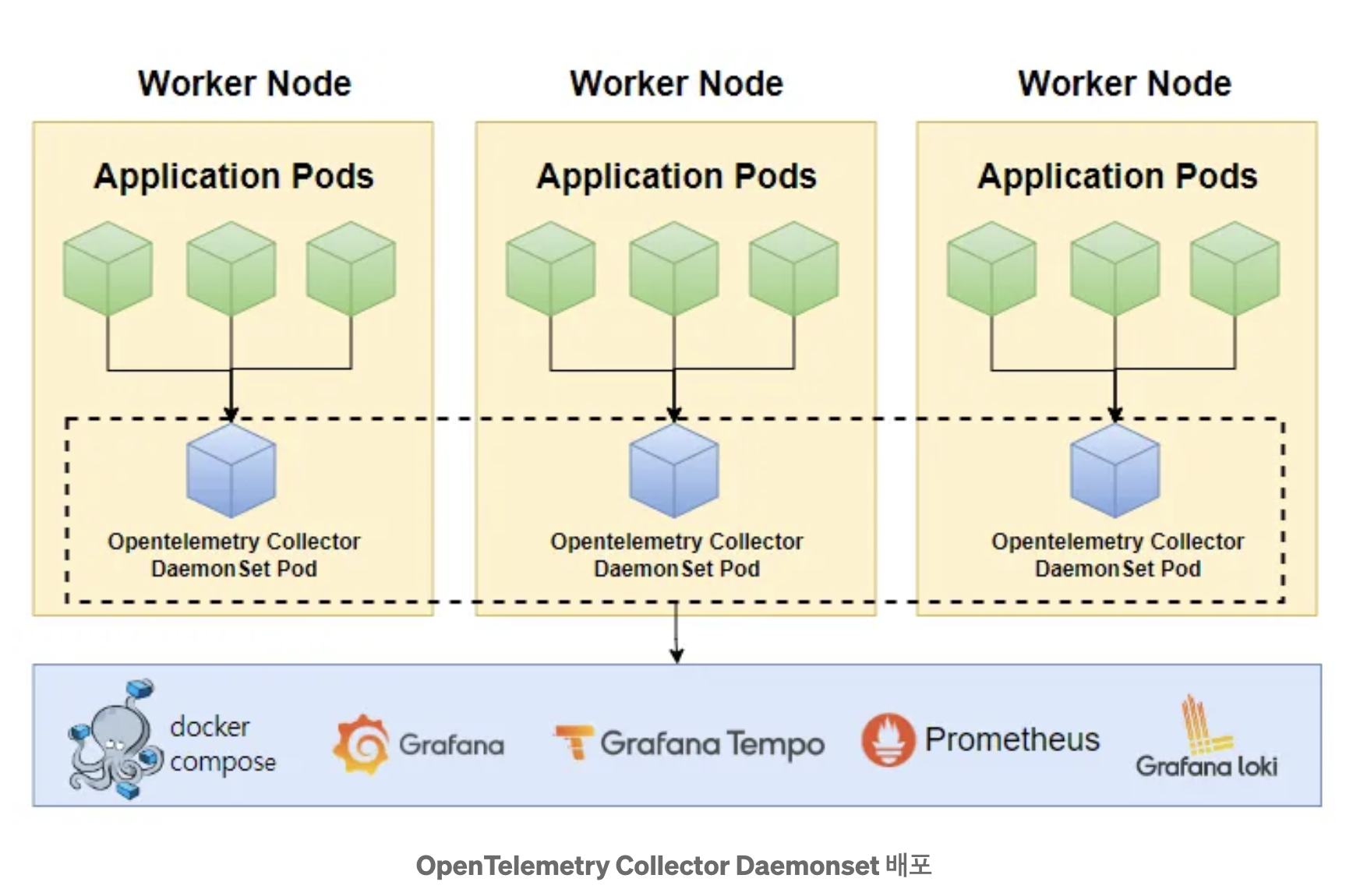

- The OpenTelemetry Collector can be deployed in four modes: Deployment (default), DaemonSet, StatefulSet, and Sidecar.
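In Sidecar mode the operator does not create a workload of its own; it injects the collector as an extra container into pods that carry the `sidecar.opentelemetry.io/inject` annotation. A sketch; the collector name, pod name, and image are hypothetical, and both objects are assumed to live in the same namespace.

```yaml
apiVersion: opentelemetry.io/v1alpha1
kind: OpenTelemetryCollector
metadata:
  name: sidecar-collector              # hypothetical name
spec:
  mode: sidecar
  config: |
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: 0.0.0.0:4317
          http:
            endpoint: 0.0.0.0:4318
    exporters:
      debug: {}
    service:
      pipelines:
        traces:
          receivers: [otlp]
          exporters: [debug]
---
apiVersion: v1
kind: Pod
metadata:
  name: sample-app                     # hypothetical pod in the same namespace as the CR
  annotations:
    # "true" selects the single sidecar-mode collector in this namespace;
    # a collector name can be given instead when there are several
    sidecar.opentelemetry.io/inject: "true"
spec:
  containers:
    - name: sample-app
      image: sample-app:latest         # hypothetical image exporting OTLP to localhost:4317
```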

```shell
$ kubectl apply -f - <<EOF
apiVersion: opentelemetry.io/v1alpha1
kind: OpenTelemetryCollector
metadata:
  name: simplest
spec:
  config: |
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: 0.0.0.0:4317
          http:
            endpoint: 0.0.0.0:4318
    processors:
    exporters:
      # NOTE: Prior to v0.86.0 use `logging` instead of `debug`.
      debug:
    service:
      pipelines:
        traces:
          receivers: [otlp]
          processors: []
          exporters: [debug]
EOF
```

Or apply the example manifest from the collector repository:

```shell
$ kubectl apply -f https://raw.githubusercontent.com/open-telemetry/opentelemetry-collector/v0.102.1/examples/k8s/otel-config.yaml
```

Or install the collector with its Helm chart:

```shell
$ helm repo add open-telemetry https://open-telemetry.github.io/opentelemetry-helm-charts
$ helm pull open-telemetry/opentelemetry-collector
$ tar xvfz opentelemetry-collector-0.59.1.tgz
$ rm -rf opentelemetry-collector-0.59.1.tgz
$ mv opentelemetry-collector opentelemetry-collector-0.59.1
$ cd opentelemetry-collector-0.59.1
$ vi override-values.yaml
```

```yaml
# Valid values are "daemonset", "deployment", and "statefulset".
mode: "deployment"
config:
  exporters:
    otlp:
      endpoint: tempo-distributor-discovery.tempo:4317
      tls:
        insecure: true
  extensions:
    # The health_check extension is mandatory for this chart.
    # Without the health_check extension the collector will fail the readiness and liveliness probes.
    # The health_check extension can be modified, but should never be removed.
    health_check: {}
    memory_ballast: {}
  processors:
    memory_limiter:
      check_interval: 1s
      limit_percentage: 75
      spike_limit_percentage: 15
    batch:
      send_batch_size: 10000
      timeout: 10s
  receivers:
    otlp:
      protocols:
        grpc:
          endpoint: ${env:MY_POD_IP}:4317
        http:
          endpoint: ${env:MY_POD_IP}:4318
  service:
    extensions:
      - health_check
      - memory_ballast
    pipelines:
      traces:
        exporters:
          - otlp
        processors:
          - memory_limiter
          - batch
        receivers:
          - otlp
```

```shell
# Install from the unpacked chart directory (the current directory)
$ helm -n opentelemetry upgrade -i --create-namespace -f override-values.yaml smap-otel-collector .
```

Collector components for k8s

kubeletstats Receiver

It can be configured to collect node, pod, and container metrics from the API served by the kubelet.
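The kubeletstats configuration that follows reads the node name from `K8S_NODE_NAME` and authenticates with the collector's service account, so both have to be provided. A sketch for an operator-managed DaemonSet; the resource names are hypothetical, and the service account name assumes the operator's `<name>-collector` naming noted in the ClusterRoleBinding earlier.

```yaml
apiVersion: opentelemetry.io/v1alpha1
kind: OpenTelemetryCollector
metadata:
  name: kubeletstats-agent             # hypothetical name
  namespace: opentelemetry
spec:
  mode: daemonset
  env:
    # Downward API: expose the node this pod runs on as K8S_NODE_NAME
    - name: K8S_NODE_NAME
      valueFrom:
        fieldRef:
          fieldPath: spec.nodeName
  config: |
    receivers:
      kubeletstats:
        collection_interval: 10s
        auth_type: serviceAccount
        endpoint: ${env:K8S_NODE_NAME}:10250
        insecure_skip_verify: true
    exporters:
      debug: {}
    service:
      pipelines:
        metrics:
          receivers: [kubeletstats]
          exporters: [debug]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: otel-kubeletstats              # hypothetical name
rules:
  # kubeletstats reads the kubelet stats API, which is authorized as nodes/stats
  - apiGroups: [""]
    resources: ["nodes/stats"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: otel-kubeletstats
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: otel-kubeletstats
subjects:
  - kind: ServiceAccount
    # assumed: the operator names the service account "<CR name>-collector"
    name: kubeletstats-agent-collector
    namespace: opentelemetry
```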

```yaml
receivers:
  kubeletstats:
    collection_interval: 10s
    auth_type: 'serviceAccount'
    endpoint: '${env:K8S_NODE_NAME}:10250'
    insecure_skip_verify: true
    metric_groups:
      - node
      - pod
      - container
```

Alternatively, when using the Helm chart, setting the `kubeletMetrics` preset to `true` automatically adds the related ClusterRole and the kubeletstats receiver to the metrics pipeline.

```yaml
mode: daemonset
presets:
  kubeletMetrics:
    enabled: true
```

Filelog Receiver
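The filelog configuration that follows reads container logs from `/var/log/pods` on each node, so the collector has to run as a DaemonSet with that host directory mounted. A sketch using the operator's `volumes`/`volumeMounts` fields; the collector name is hypothetical and the receiver is trimmed to the include path (the full operator chain is shown next). Depending on file permissions on the node, the container may also need to run as a user that can read these files.

```yaml
apiVersion: opentelemetry.io/v1alpha1
kind: OpenTelemetryCollector
metadata:
  name: logs-agent                     # hypothetical name
  namespace: opentelemetry
spec:
  mode: daemonset                      # one collector per node, reading that node's logs
  volumes:
    - name: varlogpods
      hostPath:
        path: /var/log/pods
  volumeMounts:
    - name: varlogpods
      mountPath: /var/log/pods         # same path inside the container as on the node
      readOnly: true
  config: |
    receivers:
      filelog:
        include:
          - /var/log/pods/*/*/*.log
        start_at: beginning
        include_file_path: true
    exporters:
      debug: {}
    service:
      pipelines:
        logs:
          receivers: [filelog]
          exporters: [debug]
```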

```yaml
filelog:
  include:
    - /var/log/pods/*/*/*.log
  exclude:
    # Exclude logs from all containers named otel-collector
    - /var/log/pods/*/otel-collector/*.log
  start_at: beginning
  include_file_path: true
  include_file_name: false
  operators:
    # Find out which format is used by kubernetes
    - type: router
      id: get-format
      routes:
        - output: parser-docker
          expr: 'body matches "^\\{"'
        - output: parser-crio
          expr: 'body matches "^[^ Z]+ "'
        - output: parser-containerd
          expr: 'body matches "^[^ Z]+Z"'
    # Parse CRI-O format
    - type: regex_parser
      id: parser-crio
      regex: '^(?P<time>[^ Z]+) (?P<stream>stdout|stderr) (?P<logtag>[^ ]*) ?(?P<log>.*)$'
      output: extract_metadata_from_filepath
      timestamp:
        parse_from: attributes.time
        layout_type: gotime
        layout: '2006-01-02T15:04:05.999999999Z07:00'
    # Parse CRI-Containerd format
    - type: regex_parser
      id: parser-containerd
      regex: '^(?P<time>[^ ^Z]+Z) (?P<stream>stdout|stderr) (?P<logtag>[^ ]*) ?(?P<log>.*)$'
      output: extract_metadata_from_filepath
      timestamp:
        parse_from: attributes.time
        layout: '%Y-%m-%dT%H:%M:%S.%LZ'
    # Parse Docker format
    - type: json_parser
      id: parser-docker
      output: extract_metadata_from_filepath
      timestamp:
        parse_from: attributes.time
        layout: '%Y-%m-%dT%H:%M:%S.%LZ'
    - type: move
      from: attributes.log
      to: body
    # Extract metadata from file path
    - type: regex_parser
      id: extract_metadata_from_filepath
      regex: '^.*\/(?P<namespace>[^_]+)_(?P<pod_name>[^_]+)_(?P<uid>[a-f0-9\-]{36})\/(?P<container_name>[^\._]+)\/(?P<restart_count>\d+)\.log$'
      parse_from: attributes["log.file.path"]
      cache:
        size: 128 # default maximum amount of Pods per Node is 110
    # Rename attributes
    - type: move
      from: attributes.stream
      to: attributes["log.iostream"]
    - type: move
      from: attributes.container_name
      to: resource["k8s.container.name"]
    - type: move
      from: attributes.namespace
      to: resource["k8s.namespace.name"]
    - type: move
      from: attributes.pod_name
      to: resource["k8s.pod.name"]
    - type: move
      from: attributes.restart_count
      to: resource["k8s.container.restart_count"]
    - type: move
      from: attributes.uid
      to: resource["k8s.pod.uid"]
```

Kubernetes Cluster Receiver
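The k8s_cluster receiver configured next watches the API server for the whole cluster, so it is normally run in a single-replica Deployment rather than in the DaemonSet; otherwise every node would report the same cluster-level metrics. A sketch; the collector name is hypothetical, and its service account still needs ClusterRole read access to the nodes, pods, namespaces, and workload objects the receiver watches.

```yaml
apiVersion: opentelemetry.io/v1alpha1
kind: OpenTelemetryCollector
metadata:
  name: cluster-collector              # hypothetical name
  namespace: opentelemetry
spec:
  mode: deployment
  replicas: 1                          # a single instance avoids duplicate cluster metrics
  config: |
    receivers:
      k8s_cluster:
        auth_type: serviceAccount
        node_conditions_to_report: [Ready, MemoryPressure]
        allocatable_types_to_report: [cpu, memory]
    exporters:
      debug: {}
    service:
      pipelines:
        metrics:
          receivers: [k8s_cluster]
          exporters: [debug]
```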

```yaml
k8s_cluster:
  auth_type: serviceAccount
  node_conditions_to_report:
    - Ready
    - MemoryPressure
  allocatable_types_to_report:
    - cpu
    - memory
```

Kubernetes Object Receiver

The Kubernetes Objects receiver collects (pulls or watches) objects from the Kubernetes API server.
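The k8sobjects receiver emits each pulled or watched object as a log record, so it belongs in a `logs` pipeline (`receivers: [k8sobjects]` under `service.pipelines.logs`), and its service account needs read access to every resource it lists. A sketch of a ClusterRole matching the configuration that follows; the name is hypothetical and the binding to the collector's service account is left out.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: otel-k8sobjects                # hypothetical name
rules:
  # "pods" in pull mode: the receiver lists pods on every interval
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
  # "events" from events.k8s.io in watch mode: list once, then watch for changes
  - apiGroups: ["events.k8s.io"]
    resources: ["events"]
    verbs: ["list", "watch"]
```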

```yaml
k8sobjects:
  auth_type: serviceAccount
  objects:
    - name: pods
      mode: pull
      label_selector: environment in (production),tier in (frontend)
      field_selector: status.phase=Running
      interval: 15m
    - name: events
      mode: watch
      group: events.k8s.io
      namespaces: [default]
```

Prometheus Receiver
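A minimal sketch of a standalone prometheus receiver with a static target: here the collector scrapes its own internal telemetry, which it serves on port 8888 by default. The job name and interval are assumptions. Because the collector treats `$` as environment-variable syntax, any literal `$` inside this block (for example in relabel replacements) must be written as `$$`.

```yaml
receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: otel-collector-self    # hypothetical job name
          scrape_interval: 30s
          static_configs:
            # The collector's own internal metrics endpoint
            - targets: ["localhost:8888"]
service:
  pipelines:
    metrics:
      receivers: [prometheus]
      # assumes a batch processor and a debug exporter are defined elsewhere in the config
      processors: [batch]
      exporters: [debug]
```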

Host Metrics Receiver

When it is used together with the Kubeletstats Receiver, it is better to disable the scrapers that would collect overlapping metrics.

The available scrapers are:

`cpu`, `disk`, `load`, `filesystem`, `memory`, `network`, `paging`, `processes`, `process`
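When the collector runs in a pod, these scrapers see the container's view of the system unless the node's root filesystem is mounted and `root_path` points at it. A sketch for an operator-managed DaemonSet; the collector name and the `/hostfs` mount point are assumptions, and the scraper selection mirrors the configuration that follows.

```yaml
apiVersion: opentelemetry.io/v1alpha1
kind: OpenTelemetryCollector
metadata:
  name: hostmetrics-agent              # hypothetical name
  namespace: opentelemetry
spec:
  mode: daemonset                      # one instance per node
  volumes:
    - name: hostfs
      hostPath:
        path: /
  volumeMounts:
    - name: hostfs
      mountPath: /hostfs               # node's / appears under /hostfs
      readOnly: true
      mountPropagation: HostToContainer
  config: |
    receivers:
      hostmetrics:
        root_path: /hostfs             # read /proc, /sys, and mounts from the node
        collection_interval: 30s
        scrapers:
          cpu:
          memory:
          filesystem:
    exporters:
      debug: {}
    service:
      pipelines:
        metrics:
          receivers: [hostmetrics]
          exporters: [debug]
```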

```yaml
receivers:
  hostmetrics:
    collection_interval: 30s
    scrapers:
      cpu:
      memory:
  hostmetrics/disk:
    collection_interval: 1m
    scrapers:
      disk:
      filesystem:
service:
  pipelines:
    metrics:
      receivers: [hostmetrics, hostmetrics/disk]
```

- Add the auto-instrumentation annotation

- .NET: `instrumentation.opentelemetry.io/inject-dotnet: "true"`
- Go: `instrumentation.opentelemetry.io/inject-go: "true"`
- Java: `instrumentation.opentelemetry.io/inject-java: "true"`
- Node.js: `instrumentation.opentelemetry.io/inject-nodejs: "true"`
- Python: `instrumentation.opentelemetry.io/inject-python: "true"`
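These annotations only take effect when an `Instrumentation` resource exists in the pod's namespace (or is referenced by name in the annotation value), and they must be placed on the pod template rather than on the Deployment itself. A minimal sketch; the resource names, image, and sampler settings are assumptions, and the endpoint assumes the operator-created service `otel-collector-collector` for the `otel-collector` CR shown earlier.

```yaml
apiVersion: opentelemetry.io/v1alpha1
kind: Instrumentation
metadata:
  name: my-instrumentation             # hypothetical name
  namespace: opentelemetry
spec:
  exporter:
    # assumed: service the operator creates for the "otel-collector" CR
    endpoint: http://otel-collector-collector.opentelemetry:4317
  propagators:
    - tracecontext
    - baggage
  sampler:
    type: parentbased_traceidratio
    argument: "1"                      # sample everything; lower this for production traffic
  python:
    env:
      # Python auto-instrumentation exports over OTLP/HTTP, so point it at 4318
      - name: OTEL_EXPORTER_OTLP_ENDPOINT
        value: http://otel-collector-collector.opentelemetry:4318
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: java-app                       # hypothetical name
  namespace: opentelemetry
spec:
  selector:
    matchLabels:
      app: java-app
  template:
    metadata:
      labels:
        app: java-app
      annotations:
        # on the pod template, so the operator's webhook sees it at pod creation
        instrumentation.opentelemetry.io/inject-java: "true"
    spec:
      containers:
        - name: java-app
          image: java-app:latest       # hypothetical image
```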