03. Web Data

1. BeautifulSoup for web data

BeautifulSoup Basic

- install

- conda install -c anaconda beautifulsoup4

- pip install beautifulsoup4

- data

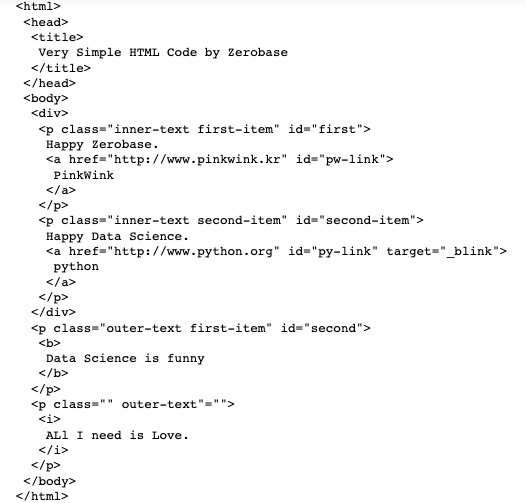

- 03.test_first.html

from bs4 import BeautifulSoup

page = open("../data/03. zerobase.html", "r").read()

soup = BeautifulSoup(page, "html.parser")

print(soup.prettify())

# prettify()하면 조금 들여써서 볼수있음.

# head 태그

soup.head

#출력 :

<head>

<title>Very Simple HTML Code by Zerobase</title>

</head>#body태그

soup.body

#출력 :

<body>

<div>

<p class="inner-text first-item" id="first">

Happy Zerobase.

<a href="http://www.pinkwink.kr" id="pw-link">PinkWink</a>

</p>

<p class="inner-text second-item" id="second-item">

Happy Data Science.

<a href="http://www.python.org" id="py-link" target="_blink">python</a>

</p>

</div>

<p class="outer-text first-item" id="second">

<b>Data Science is funny</b>

</p>

<p class="" outer-text"="">

<i>ALl I need is Love.</i>

</p>

</body>#p태그

# 처음 발견한 p태그만 출력

soup.p

#출력 :

<p class="inner-text first-item" id="first">

Happy Zerobase.

<a href="http://www.pinkwink.kr" id="pw-link">PinkWink</a>

</p>soup.find("p")

#출력 :

<p class="inner-text first-item" id="first">

Happy Zerobase.

<a href="http://www.pinkwink.kr" id="pw-link">PinkWink</a>

</p>soup.find("p", class_="inner-text second-item")

# 파이썬의 class와 겹치지 않기 위해 '_'사용

#출력 :

<p class="inner-text second-item" id="second-item">

Happy Data Science.

<a href="http://www.python.org" id="py-link" target="_blink">python</a>

</p>

# 위와 동일하지만 딕셔너리로도 찾을 수 있음. 텍스트만 출력하기 위해 text.strip() 적재

soup.find("p", {"class":"outer-text first-item"}).text.strip()

#출력 :

'Data Science is funny'# 다중 조건

soup.find("p", {"class": "inner-text first-item", "id":"first"})

#출력 :

<p class="inner-text first-item" id="first">

Happy Zerobase.

<a href="http://www.pinkwink.kr" id="pw-link">PinkWink</a>

</p>

#find_all() : 여러개의 태그를 반환

#list 형태로 반환

soup.find_all("p")

#출력 :

[<p class="inner-text first-item" id="first">

Happy Zerobase.

<a href="http://www.pinkwink.kr" id="pw-link">PinkWink</a>

</p>,

<p class="inner-text second-item" id="second-item">

Happy Data Science.

<a href="http://www.python.org" id="py-link" target="_blink">python</a>

</p>,

<p class="outer-text first-item" id="second">

<b>Data Science is funny</b>

</p>,

<p class="" outer-text"="">

<i>ALl I need is Love.</i>

</p>]

# 특정 태그

soup.find_all(id="pw-link")[0].text

#출력 :

'PinkWink'soup.find_all("p", class_="inner-text second-item")

#출력 :

[<p class="inner-text second-item" id="second-item">

Happy Data Science.

<a href="http://www.python.org" id="py-link" target="_blink">python</a>

</p>]#p 태그 리스트에서 텍스트 속성만 출력

for each_tag in soup.find_all("p"):

print("="*30)

print(each_tag.text)

#출력 :

==============================

Happy Zerobase.

PinkWink

==============================

Happy Data Science.

python

==============================

Data Science is funny

==============================

ALl I need is Love.# a태그에서 href 속성값에 있는 값 추출

links = soup.find_all("a")

links

#출력 :

[<a href="http://www.pinkwink.kr" id="pw-link">PinkWink</a>,

<a href="http://www.python.org" id="py-link" target="_blink">python</a>]for each in links:

href = each.get("href") #each["href"]

text = each.get_text()

print(text + "=>" + href)

#출력 :

PinkWink=>http://www.pinkwink.kr

python=>http://www.python.org2.시카고 맛집 데이터 분석 - 개요

- 최종목표 : 총 51개의 페이지에서 각 가게의 정보를 가져온다.

- 가게이름

- 대표메뉴

- 대표메뉴의 가격

- 가게주소

3.시카고 맛집 데이터 분석 - 메인페이지

from urllib.request import Request, urlopen

from fake_useragent import UserAgent

from bs4 import BeautifulSoup

url_base = "http://www.chicagomag.com/"

url_sub = "chicago-magazine/november-2012/best-sandwiches-chicago/"

url = url_base + url_sub

ua = UserAgent()

ua.ie

req = Request(url, headers={"user-agent":"ua.ai"})

html = urlopen(req)

# html.status

soup = BeautifulSoup(html, "html.parser")

print(soup.prettify())

#출력 :

<!DOCTYPE html>

<html lang="en-US">

<head>

<meta charset="utf-8"/>

<meta content="IE=edge" http-equiv="X-UA-Compatible">

<link href="https://gmpg.org/xfn/11" rel="profile"/>

<script src="https://cmp.osano.com/16A1AnRt2Fn8i1unj/f15ebf08-7008-40fe-9af3-db96dc3e8266/osano.js">

</script>

<title>

The 50 Best Sandwiches in Chicago – Chicago Magazine

</title>

<style type="text/css">

.heateor_sss_button_instagram span.heateor_sss_svg,a.heateor_sss_instagram span.heateor_sss_svg{background:radial-gradient(circle at 30% 107%,#fdf497 0,#fdf497 5%,#fd5949 45%,#d6249f 60%,#285aeb 90%)}

div.heateor_sss_horizontal_sharing a.heateor_sss_button_instagram span{background:#000!important;}div.heateor_sss_standard_follow_icons_container a.heateor_sss_button_instagram span{background:#000;}

.heateor_sss_horizontal_sharing .heateor_sss_svg,.heateor_sss_standard_follow_icons_container .heateor_sss_svg{

background-color: #000!important;

background: #000!important;

color: #fff; ...soup.find_all("div", class_="sammy")

len(soup.find_all("div", class_="sammy"))

#출력 : 50tmp_one = soup.find_all("div", "sammy")[0]

type(tmp_one), tmp_one

#출력 :

(bs4.element.Tag,

<div class="sammy" style="position: relative;">

<div class="sammyRank">1</div>

<div class="sammyListing"><a href="/Chicago-Magazine/November-2012/Best-Sandwiches-in-Chicago-Old-Oak-Tap-BLT/"><b>BLT</b><br/>

Old Oak Tap<br/>

<em>Read more</em> </a></div>

</div>)tmp_one.find(class_="sammyRank").get_text()

#출력 : '1'tmp_one.find("div", {"class":"sammyListing"}).get_text()

#출력 : 'BLT\nOld Oak Tap\nRead more '

tmp_one.find("a")["href"]

#출력 : '/Chicago-Magazine/November-2012/Best-Sandwiches-in-Chicago-Old-Oak-Tap-BLT/'import re

tmp_string = tmp_one.find(class_="sammyListing").get_text()

re.split(("\n|\r\n"), tmp_string)

#출력 : ['BLT', 'Old Oak Tap', 'Read more ']

print(re.split(("\n|\r\n"), tmp_string)[0])

print(re.split(("\n|\r\n"), tmp_string)[1])

#출력 :

BLT

Old Oak Tapfrom urllib.parse import urljoin

url_base = "http://www.chicagomag.com/"

#필요한 내용을 담을 리스트

#리스트로 하나씩 컬럼을 만들고, DataFrame으로 합칠 예정

rank = []

main_menu = []

cafe_name = []

url_add = []

list_soup = soup.find_all("div", "sammy")

for item in list_soup:

rank.append(item.find(class_="sammyRank").get_text())

tmp_string = item.find(class_="sammyListing").get_text()

main_menu.append(re.split(("\n|\r\n"), tmp_string)[0])

cafe_name.append(re.split(("\n|\r\n"), tmp_string)[1])

url_add.append(urljoin(url_base, item.find("a")["href"]))

데이터가 정보를 다 잘 갖고 왔나 확인하기.

len(rank), len(main_menu), len(cafe_name), len(url_add)

#출력 : (50, 50, 50, 50)rank[:5]

#출력 : ['1', '2', '3', '4', '5']

main_menu[:5]

#출력 : ['BLT', 'Fried Bologna', 'Woodland Mushroom', 'Roast Beef', 'PB&L']

cafe_name[:5]

#출력 : ['Old Oak Tap', 'Au Cheval', 'Xoco', 'Al’s Deli', 'Publican Quality Meats']

url_add[:5]

#출력 :

['http://www.chicagomag.com/Chicago-Magazine/November-2012/Best-Sandwiches-in-Chicago-Old-Oak-Tap-BLT/',

'http://www.chicagomag.com/Chicago-Magazine/November-2012/Best-Sandwiches-in-Chicago-Au-Cheval-Fried-Bologna/',

'http://www.chicagomag.com/Chicago-Magazine/November-2012/Best-Sandwiches-in-Chicago-Xoco-Woodland-Mushroom/',

'http://www.chicagomag.com/Chicago-Magazine/November-2012/Best-Sandwiches-in-Chicago-Als-Deli-Roast-Beef/',

'http://www.chicagomag.com/Chicago-Magazine/November-2012/Best-Sandwiches-in-Chicago-Publican-Quality-Meats-PB-L/']데이터가 잘 들어왔으니 데이터프레임으로 만들어보자.

import pandas as pd

data = {

"Rank" : rank,

"Menu" : main_menu,

"Cafe" : cafe_name,

"URL" : url_add,

}

df = pd.DataFrame(data)

df

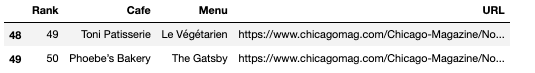

# 컬럼 순서 변경

df = pd.DataFrame(data, columns=["Rank", "Cafe", "Menu", "URL"])

df.tail(2)

# 데이터 저장

df.to_csv(

"../data/03. best_sandwiches_list.csv", sep=",", encoding="utf-8")

- 시카고 맛집 데이터 분석 - 하위 페이지

# requirements

import pandas as pd

from urllib.request import urlopen, Request

from fake_useragent import UserAgent

from bs4 import BeautifulSoup df["URL"][0]

#출력 :

'http://www.chicagomag.com/Chicago-Magazine/November-2012/Best-Sandwiches-in-Chicago-Old-Oak-Tap-BLT/'req = Request(df["URL"][0], headers={"user-agent":ua.ie})

html = urlopen(req).read()

soup_tmp = BeautifulSoup(html, "html.parser")

soup_tmp.find("p", "addy")

#출력 :

<p class="addy">

<em>$10. 2109 W. Chicago Ave., 773-772-0406, <a href="http://www.theoldoaktap.com/">theoldoaktap.com</a></em></p># regular expression

price_tmp = soup_tmp.find("p", "addy").text

price_tmp

#출력 :

'\n$10. 2109 W. Chicago Ave., 773-772-0406, theoldoaktap.com'#쪼개기

import re

re.split(".,", price_tmp)

#출력 :

['\n$10. 2109 W. Chicago Ave', ' 773-772-040', ' theoldoaktap.com']price_tmp = re.split(".,", price_tmp)[0]

price_tmp

#출력 :

'\n$10. 2109 W. Chicago Ave'tmp = re.search("\$\d+\.(\d+)?", price_tmp).group()

price_tmp[len(tmp)+2:]

#출력 :

'2109 W. Chicago Ave'from tqdm import tqdm

price = []

address = []

for idx, row in df.iterrows():

req = Request(row["URL"], headers={"user-agent":"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"})

html = urlopen(req).read()

soup_tmp = BeautifulSoup(html, "html.parser")

soup_tmp.find("p", "addy")

gettings = soup_tmp.find("p", "addy").get_text()

price_tmp = re.split(".,", gettings)[0]

tmp = re.search("\$\d+\.(\d+)?", price_tmp).group()

price.append(tmp)

address.append(price_tmp[len(tmp)+2:])

print(idx) #데이터 잘 불러오고 있는지 확인하기 위해

#출력 : 0 -49

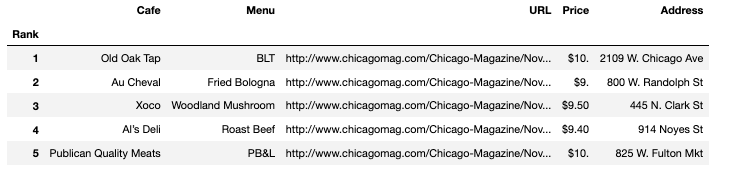

데이터가 잘 불러져 왔나 확인

len(price), len(address)

#출력 : (50, 50)price[:5]

#출력 : ['$10.', '$9.', '$9.50', '$9.40', '$10.']address[:5]

#출력 :

['2109 W. Chicago Ave',

'800 W. Randolph St',

' 445 N. Clark St',

' 914 Noyes St',

'825 W. Fulton Mkt']이제 df데이터에 합쳐보기.

df["Price"] = price

df["Address"] =address

df = df.loc[:, ["Rank", "Cafe", "Menu", "URL", "Price", "Address"]]

df.set_index("Rank", inplace=True)

df.head()

#데이터 저장

df.to_csv("../data/03. best_sandwiches_list_chicago2.csv", sep=",", encoding="utf-8")