📌 Notice

Database Operator In Kubernetes study (=DOIK)

This blog summarizes how to deploy and operate databases on Kubernetes using database operators.

The study is run by the CloudNetaStudy group.

Once again, many thanks to Gasida 🙇. Study notes on EKS and KOPS are available at the links below.

[AEWS] AWS EKS Study

[PKOS] Deploying Kubernetes on AWS with KOPS

📌 Review

The six-week study went by quickly.

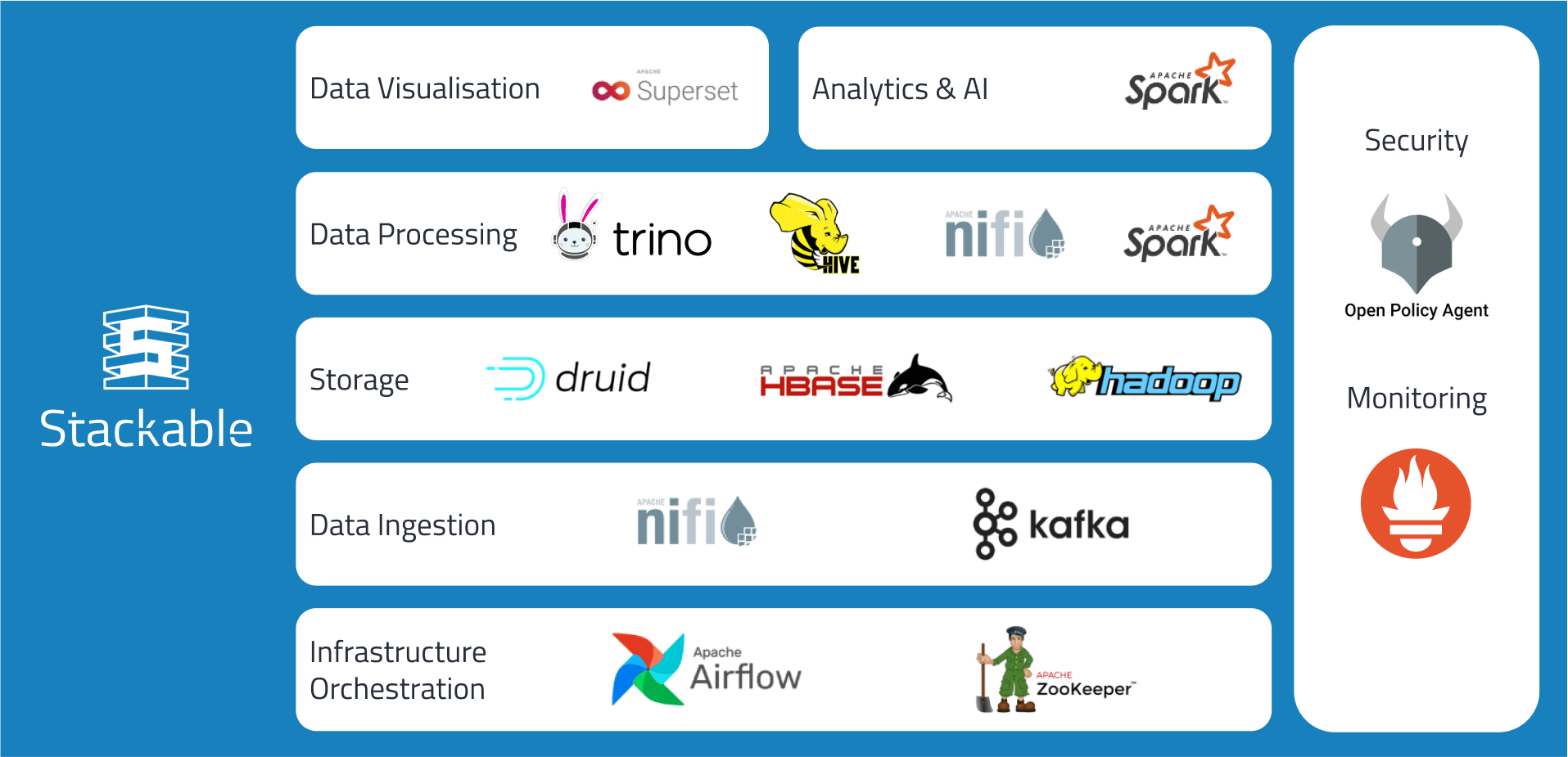

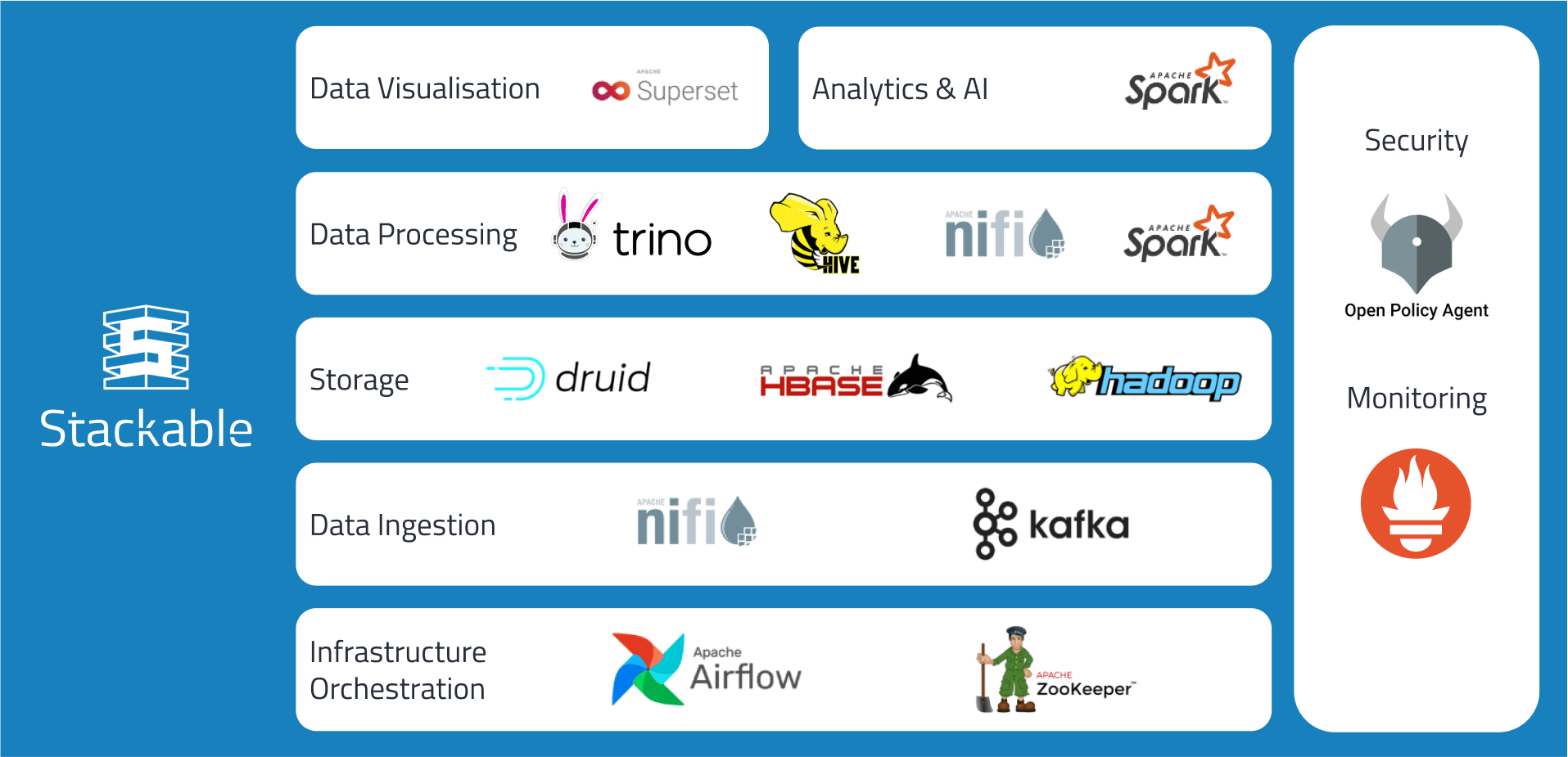

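We studied Stackable, which lets you adopt a data platform quickly and easily. My current role does not cover data pipelines, so a lot of this was new to me, but working through the labs one by one showed me how easy the deployment really is.

Through the DOIK study I learned a lot about running databases on Kubernetes.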

📌 Summary

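- Kubernetes Operators let you package, deploy, and manage applications.

- This post summarizes the Kubernetes knowledge you need to understand database operators.

- We explore Stackable, which makes it easy to build a data architecture, and practice deploying it on Kubernetes.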

📌 Study

👉 Step 01. SDP

Understanding the Stackable Data Platform (SDP): easily deploy and manage open-source data applications

Operators provided for data products - Link

- Airflow, Druid, HBase, Hadoop HDFS, Hive, Kafka, NiFi, Spark, Superset, Trino, ZooKeeper

✅ Installing Stackable

Install the stackablectl CLI, which is used to deploy Stackable onto the cluster.

    # Download
    #curl -L -o stackablectl https://github.com/stackabletech/stackable-cockpit/releases/download/stackablectl-1.0.0-rc2/stackablectl-x86_64-unknown-linux-gnu
    curl -L -o stackablectl https://github.com/stackabletech/stackable-cockpit/releases/download/stackablectl-1.0.0-rc3/stackablectl-x86_64-unknown-linux-gnu
    chmod +x stackablectl
    mv stackablectl /usr/local/bin

    # Verify
    stackablectl -h
    stackablectl -V    # stackablectl 1.0.0-rc3
    stackablectl release list
    ...

    # Bash completion
    wget https://raw.githubusercontent.com/stackabletech/stackable-cockpit/main/extra/completions/stackablectl.bash
    mv stackablectl.bash /etc/bash_completion.d/

    # Available operators
    stackablectl operator list

    # Available stacks
    stackablectl stack list

    # Available demos: install a Stackable release > set up the stack > load the data
    stackablectl demo list
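The two download lines above differ only in the release-candidate tag. A minimal sketch that keeps the version in one variable, assuming the release assets keep the `stackablectl-<version>/stackablectl-<target>` naming used above; `stackablectl_url` and `install_stackablectl` are hypothetical helpers, not part of stackablectl.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (source this file): pin the stackablectl version in one place.
# Assumption: release assets follow the URL naming used in the commands above.
STACKABLECTL_VERSION="${STACKABLECTL_VERSION:-1.0.0-rc3}"
STACKABLECTL_TARGET="${STACKABLECTL_TARGET:-x86_64-unknown-linux-gnu}"

# Print the GitHub release URL for a version and a target triple.
stackablectl_url() {
  local version="$1" target="$2"
  printf 'https://github.com/stackabletech/stackable-cockpit/releases/download/stackablectl-%s/stackablectl-%s\n' \
    "$version" "$target"
}

# Download the binary (-f makes curl fail on HTTP errors instead of saving an
# error page), make it executable, and move it to the destination directory.
install_stackablectl() {
  local dest="${1:-/usr/local/bin}"
  curl -fL -o stackablectl "$(stackablectl_url "$STACKABLECTL_VERSION" "$STACKABLECTL_TARGET")" || return 1
  chmod +x stackablectl
  mv stackablectl "$dest/"
}
```

After sourcing the file, `install_stackablectl` reproduces the rc3 install above; exporting `STACKABLECTL_VERSION` before sourcing switches to another release.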

👉 Step 02. Demo Trino-Taxi-Data

✅ Demo 설치

Stackable에서 제공하는 DEMO 서비스를 배포하며 실습을 진행합니다.

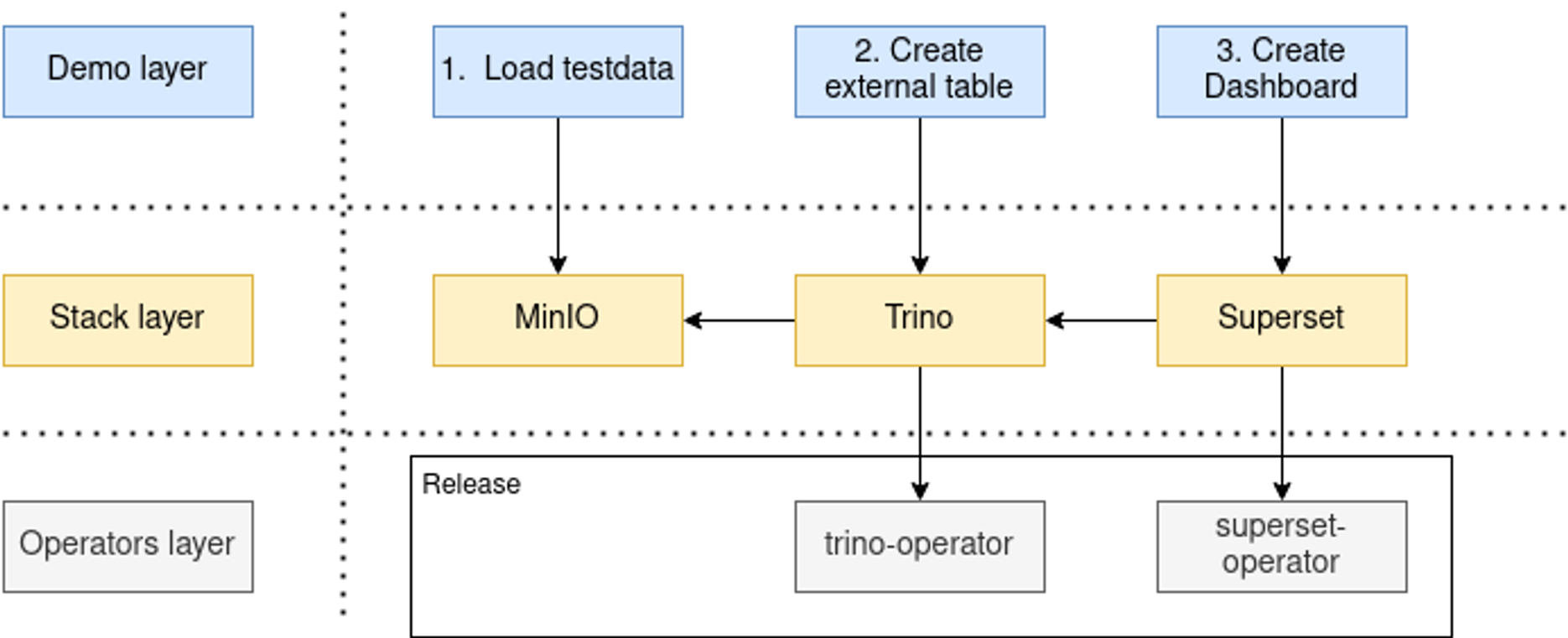

데이터는COVID-19시대의 택시 예제 데이터입니다.The data was put into the S3 storage → Trino enables you to query the data via SQL → Superset was used as a web-based frontend to execute SQL statements and build dashboards.

    # Check the demo information
    stackablectl demo list
    stackablectl demo list -o json | jq
    stackablectl demo describe trino-taxi-data
    Demo             trino-taxi-data
    Description      Demo loading 2.5 years of New York taxi data into S3 bucket, creating a Trino table and a Superset dashboard
    Documentation    https://docs.stackable.tech/stackablectl/stable/demos/trino-taxi-data.html
    Stackable stack  trino-superset-s3
    Labels           trino, superset, minio, s3, ny-taxi-data

- Spin up the following data products:

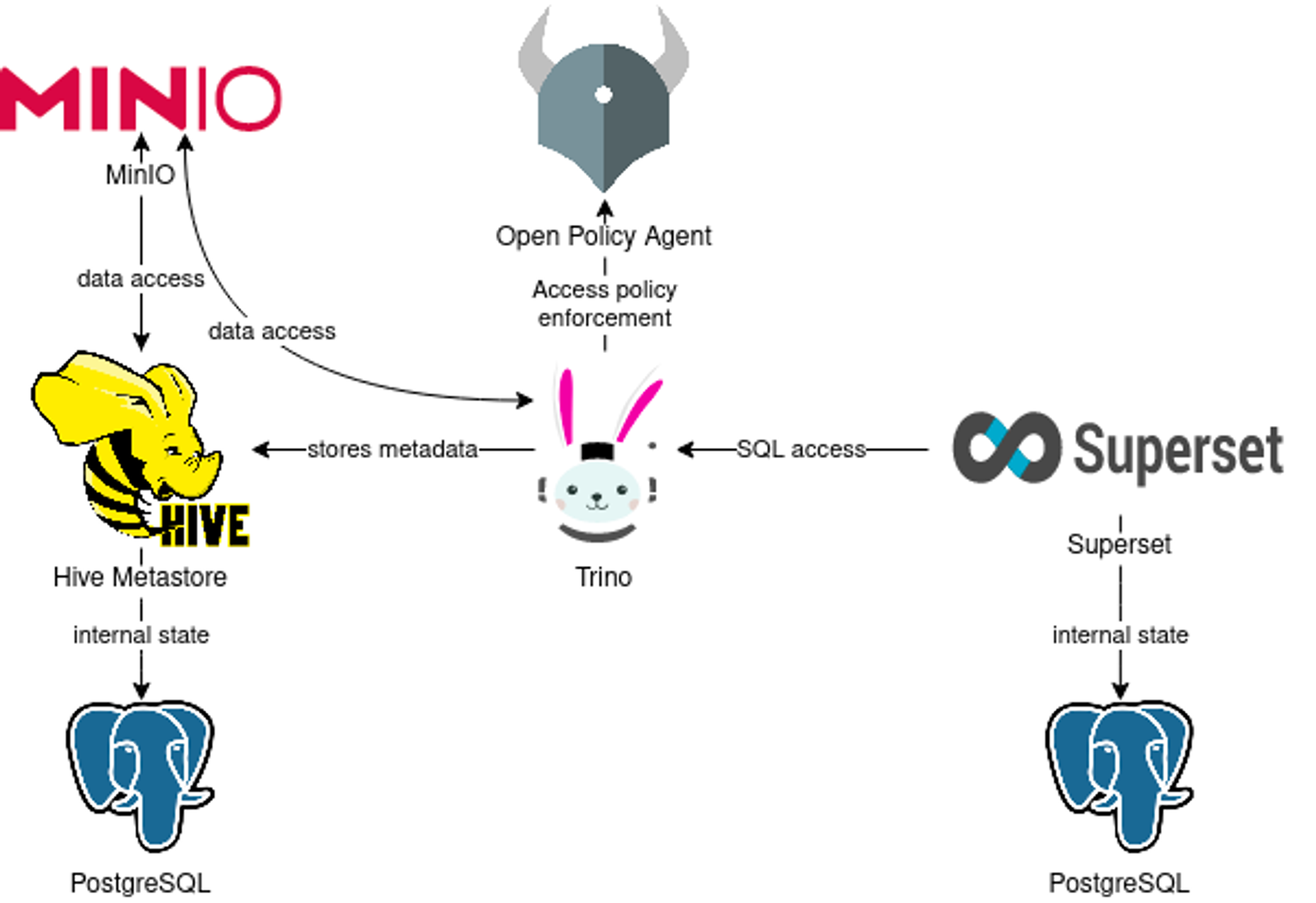

- MinIO: An S3-compatible object store. This demo uses it as persistent storage for all the data used.

- Hive metastore: A service that stores metadata related to Apache Hive and other services. This demo uses it as metadata storage for Trino - Link

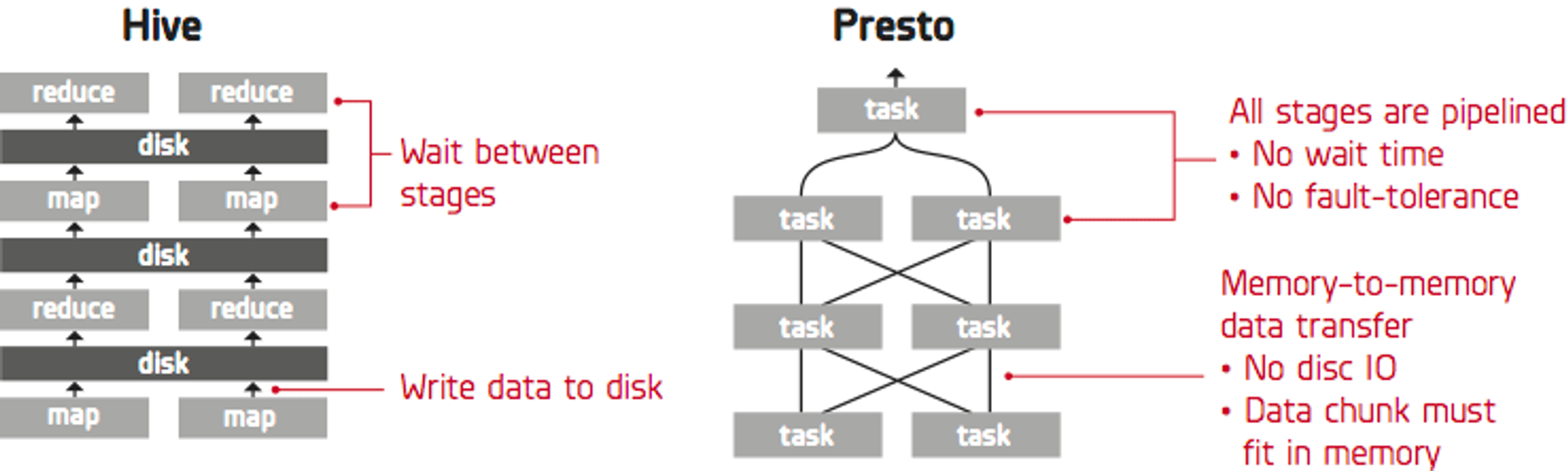

- Trino: A fast distributed SQL query engine for big data analytics that helps you explore your data universe. This demo uses it to enable SQL access to the data.

- Superset: A modern data exploration and visualization platform. This demo uses Superset to retrieve data from Trino via SQL queries and build dashboards on top of that data.

- Open Policy Agent (OPA): An open source, general-purpose policy engine that unifies policy enforcement across the stack. This demo uses it as the authorizer for Trino, deciding which user is able to query which data.

- Load test data into S3. It contains 2.5 years of New York City taxi trips.

- Make data accessible via SQL in Trino

- Create Superset dashboards for visualization of the data

✅ Demo 실습

배포된 Demo 관련 리소스를 확인합니다.

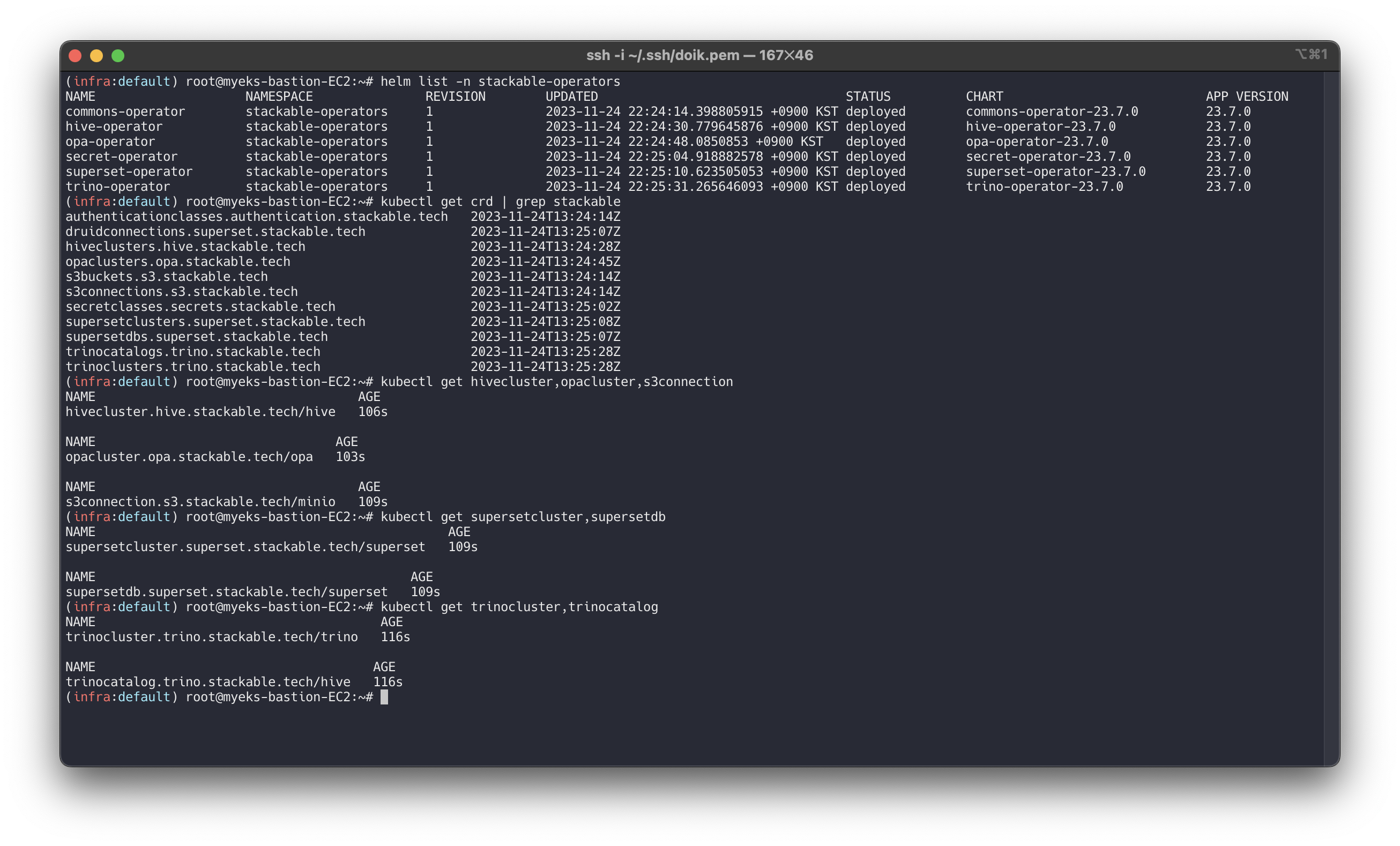

# [터미널] 모니터링 watch -d "kubectl get pod -n stackable-operators;echo;kubectl get pod,job,svc,pvc" # 데모 설치 : 데이터셋 다운로드 job 포함 8분 정도 소요 stackablectl demo install trino-taxi-data # 설치 확인 helm list -n stackable-operators helm list
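Instead of watching the terminal for the roughly 8 minutes the install takes, `kubectl wait` can block until the demo's setup Jobs report `Complete`. A sketch, assuming the Job names that appear in the checks that follow (`load-ny-taxi-data`, `create-ny-taxi-data-table-in-trino`, `setup-superset`); `wait_for_demo_jobs` is a hypothetical helper, and setting `KUBECTL=echo` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait for the trino-taxi-data demo's setup Jobs in order.
# KUBECTL can be overridden (e.g. KUBECTL=echo) to dry-run the commands.
KUBECTL="${KUBECTL:-kubectl}"
DEMO_JOBS=(load-ny-taxi-data create-ny-taxi-data-table-in-trino setup-superset)

wait_for_demo_jobs() {
  local timeout="${1:-15m}" job
  for job in "${DEMO_JOBS[@]}"; do
    echo "waiting for job/$job (timeout $timeout)"
    # Blocks until the Job's Complete condition is true, or fails on timeout.
    $KUBECTL wait --for=condition=complete "job/$job" --timeout="$timeout" || return 1
  done
}
```

`kubectl wait` fails immediately if a Job does not exist yet, so run `wait_for_demo_jobs` once `stackablectl demo install` has created the Jobs.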

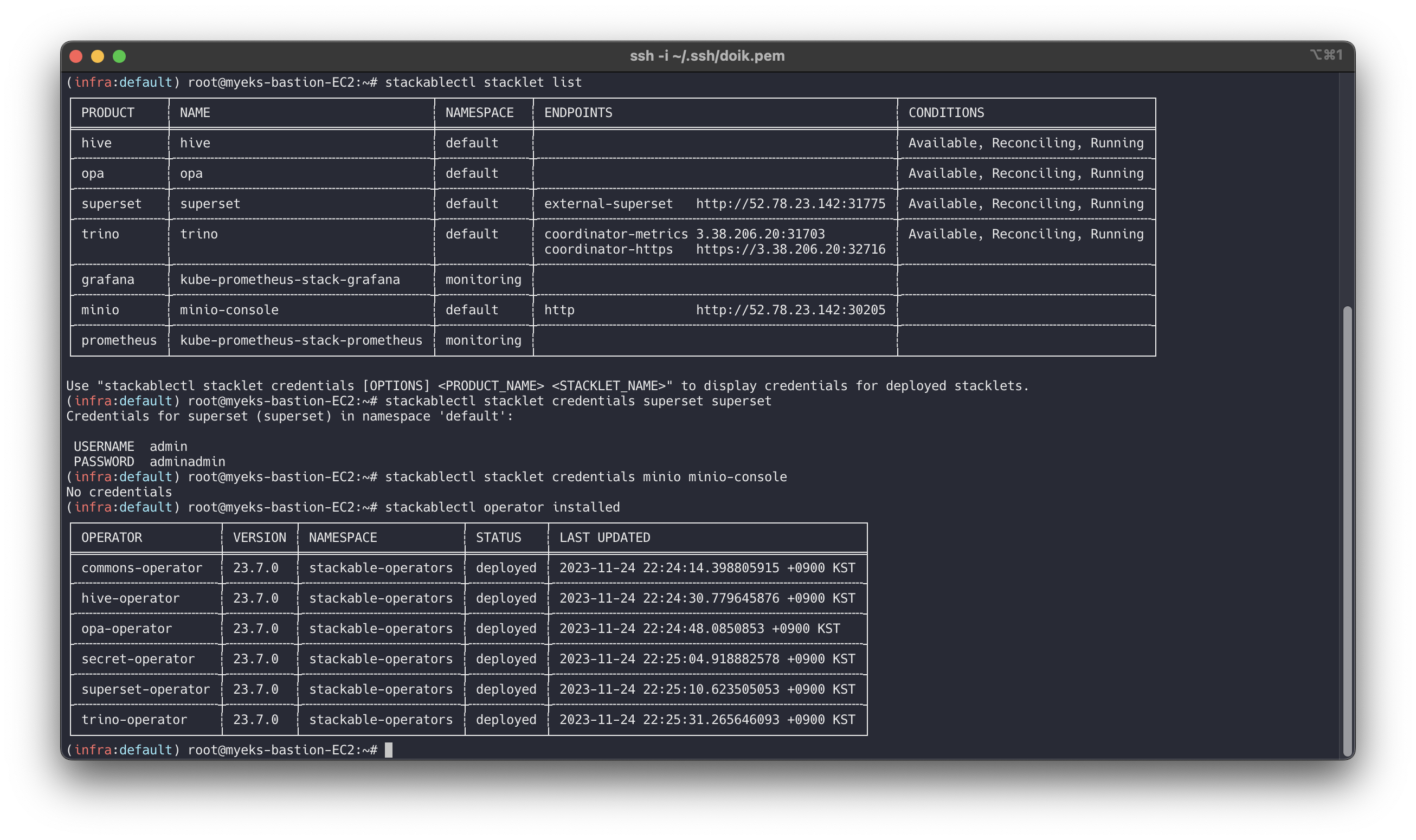

    kubectl top node
    kubectl top pod -A
    kubectl get-all -n default
    kubectl get deploy,sts,pod
    kubectl get job
    kubectl get job load-ny-taxi-data -o yaml | kubectl neat | cat -l yaml
    kubectl get job create-ny-taxi-data-table-in-trino -o yaml | kubectl neat | cat -l yaml
    kubectl get job setup-superset -o yaml | kubectl neat | cat -l yaml
    kubectl get job superset -o yaml | kubectl neat | cat -l yaml

    kubectl get sc,pvc,pv
    kubectl get pv | grep gp3
    kubectl get sc secrets.stackable.tech -o yaml | kubectl neat | cat -l yaml
    kubectl df-pv

    kubectl get svc,ep,endpointslices

    kubectl get cm,secret
    kubectl get cm minio -o yaml | kubectl neat | cat -l yaml
    kubectl describe cm minio
    kubectl get cm hive-metastore-default -o yaml | kubectl neat | cat -l yaml
    kubectl get cm hive -o yaml | kubectl neat | cat -l yaml
    kubectl get cm postgresql-hive-extended-configuration -o yaml | kubectl neat | cat -l yaml
    kubectl get cm trino-coordinator-default -o yaml | kubectl neat | cat -l yaml
    kubectl get cm trino-coordinator-default-catalog -o yaml | kubectl neat | cat -l yaml
    kubectl get cm trino-worker-default -o yaml | kubectl neat | cat -l yaml
    kubectl get cm trino-worker-default-catalog -o yaml | kubectl neat | cat -l yaml
    kubectl get cm create-ny-taxi-data-table-in-trino-script -o yaml | kubectl neat | cat -l yaml
    kubectl get cm superset-node-default -o yaml | kubectl neat | cat -l yaml
    kubectl get cm superset-init-db -o yaml | kubectl neat | cat -l yaml
    kubectl get cm setup-superset-script -o yaml | kubectl neat | cat -l yaml
    kubectl get secret minio -o yaml | kubectl neat | cat -l yaml
    kubectl get secret minio-s3-credentials -o yaml | kubectl neat | cat -l yaml
    kubectl get secret postgresql-hive -o yaml | kubectl neat | cat -l yaml
    kubectl get secret postgresql-superset -o yaml | kubectl neat | cat -l yaml
    kubectl get secret trino-users -o yaml | kubectl neat | cat -l yaml
    kubectl get secret trino-internal-secret -o yaml | kubectl neat | cat -l yaml
    kubectl get secret superset-credentials -o yaml | kubectl neat | cat -l yaml
    kubectl get secret superset-mapbox-api-key -o yaml | kubectl neat | cat -l yaml

    kubectl get crd | grep stackable
    kubectl explain trinoclusters
    kubectl describe trinoclusters.trino.stackable.tech

    kubectl get hivecluster,opacluster,s3connection
    kubectl get supersetcluster,supersetdb
    kubectl get trinocluster,trinocatalog
    kubectl get hivecluster -o yaml | kubectl neat | cat -l yaml
    kubectl get s3connection -o yaml | kubectl neat | cat -l yaml
    kubectl get supersetcluster -o yaml | kubectl neat | cat -l yaml
    kubectl get supersetdb -o yaml | kubectl neat | cat -l yaml
    kubectl get trinocluster -o yaml | kubectl neat | cat -l yaml
    kubectl get trinocatalog -o yaml | kubectl neat | cat -l yaml

    # Check the deployed stack: do not run this right away; run it after the installation
    # completes and check ENDPOINTS (access addresses) and CONDITIONS (status)
    stackablectl stacklet list
    PRODUCT     NAME                              NAMESPACE   ENDPOINTS                                          CONDITIONS
    hive        hive                              default                                                        Available, Reconciling, Running
    opa         opa                               default                                                        Available, Reconciling, Running
    superset    superset                          default     external-superset    http://43.202.112.25:31493    Available, Reconciling, Running
    trino       trino                             default     coordinator-metrics  15.164.129.120:30531          Available, Reconciling, Running
                                                              coordinator-https    https://15.164.129.120:31597
    grafana     kube-prometheus-stack-grafana     monitoring
    minio       minio-console                     default     http                 http://3.35.25.225:30697
    prometheus  kube-prometheus-stack-prometheus  monitoring

    # Check the login credentials of the products in the deployed stack: most use admin / adminadmin
    stackablectl stacklet credentials superset superset
    stackablectl stacklet credentials minio minio-console
    # The admin / adminadmin credentials are not printed... probably because stackablectl is still a release candidate

    # Check the installed operators
    stackablectl operator installed
    OPERATOR           VERSION  NAMESPACE            STATUS    LAST UPDATED
    commons-operator   23.7.0   stackable-operators  deployed  2023-11-19 10:37:56.08217875 +0900 KST
    hive-operator      23.7.0   stackable-operators  deployed  2023-11-19 10:38:13.358512684 +0900 KST
    opa-operator       23.7.0   stackable-operators  deployed  2023-11-19 10:38:32.724016087 +0900 KST
    secret-operator    23.7.0   stackable-operators  deployed  2023-11-19 10:38:51.410402351 +0900 KST
    superset-operator  23.7.0   stackable-operators  deployed  2023-11-19 10:38:56.963602496 +0900 KST
    trino-operator     23.7.0   stackable-operators  deployed  2023-11-19 10:39:15.346593878 +0900 KST
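The ENDPOINTS column is what you open in the browser. A sketch that pulls every http(s) URL out of the `stackablectl stacklet list` output so you do not have to copy them by hand; `stacklet_urls` is a hypothetical helper that only pattern-matches the text output shown above, so it would need adjusting if the table format changes. Endpoints printed without a scheme (such as `coordinator-metrics`) are skipped.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `stackablectl stacklet list` output on stdin and
# print each distinct http:// or https:// endpoint (host plus optional port).
stacklet_urls() {
  grep -oE 'https?://[A-Za-z0-9.-]+(:[0-9]+)?' | sort -u
}
```

Usage: `stackablectl stacklet list | stacklet_urls`.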

Connecting from your home PC directly to the ENDPOINTS (NodePorts on the worker nodes)

→ Access works once you add the security group rule below.

    # Allow your home public IP on the worker nodes' '#-nodegroup-ng1-remoteAccess' security group
    NGSGID=$(aws ec2 describe-security-groups --filters Name=group-name,Values='*ng1*' --query "SecurityGroups[*].[GroupId]" --output text)
    aws ec2 authorize-security-group-ingress --group-id "$NGSGID" --protocol '-1' --cidr "$(curl -s ipinfo.io/ip)/32"
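If `curl -s ipinfo.io/ip` fails or returns anything other than a bare address, the `--cidr` argument ends up malformed. A sketch of a guard; `to_cidr32` is a hypothetical helper that accepts only a dotted IPv4 address and prints it as a /32 CIDR.

```shell
#!/usr/bin/env bash
# Hypothetical helper: validate an IPv4 address and print it as a /32 CIDR.
# Returns 1 (and prints nothing) for anything that is not a valid dotted quad.
to_cidr32() {
  local ip="$1" octet
  [[ "$ip" =~ ^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})$ ]] || return 1
  for octet in "${BASH_REMATCH[@]:1}"; do
    # 10# forces base 10 so octets like "08" are not parsed as octal.
    (( 10#$octet <= 255 )) || return 1
  done
  printf '%s/32\n' "$ip"
}
```

Usage: `MY_CIDR=$(to_cidr32 "$(curl -s ipinfo.io/ip)") && aws ec2 authorize-security-group-ingress --group-id "$NGSGID" --protocol '-1' --cidr "$MY_CIDR"`, so the rule is only created when the lookup returned a usable address.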

(Optional) Accessing via Ingress

    # Create the file: MinIO example
    cat <<EOT > minio-ingress.yaml
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      annotations:
        alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
        alb.ingress.kubernetes.io/group.name: study
        alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
        alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
        alb.ingress.kubernetes.io/scheme: internet-facing
        alb.ingress.kubernetes.io/ssl-redirect: "443"
        alb.ingress.kubernetes.io/success-codes: 200-399
        alb.ingress.kubernetes.io/target-type: ip
      labels:
        app: minio
      name: minio
    spec:
      ingressClassName: alb
      rules:
      - host: minio.$MyDomain
        http:
          paths:
          - backend:
              service:
                name: minio-console
                port:
                  number: 9001
            path: /*
            pathType: ImplementationSpecific
    EOT

    # Create the Ingress
    kubectl apply -f minio-ingress.yaml

    # Verify
    kubectl get ingress -A
    echo -e "minio URL = https://minio.$MyDomain"
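The same ALB Ingress can front the other web UIs by changing only the name, Service, and port. A sketch of that as a function; `render_ingress` is hypothetical, it assumes `CERT_ARN` and `MyDomain` are already set as in the study environment, and the Service name and port for anything other than `minio-console:9001` should be read from `kubectl get svc` rather than guessed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: render the MinIO ALB Ingress above for any Service.
# Arguments: Ingress/host name, backend Service name, backend Service port.
# Assumes CERT_ARN and MyDomain are set in the environment.
render_ingress() {
  local name="$1" service="$2" port="$3"
  cat <<EOT
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    alb.ingress.kubernetes.io/certificate-arn: ${CERT_ARN}
    alb.ingress.kubernetes.io/group.name: study
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
    alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/ssl-redirect: "443"
    alb.ingress.kubernetes.io/success-codes: 200-399
    alb.ingress.kubernetes.io/target-type: ip
  labels:
    app: ${name}
  name: ${name}
spec:
  ingressClassName: alb
  rules:
  - host: ${name}.${MyDomain}
    http:
      paths:
      - backend:
          service:
            name: ${service}
            port:
              number: ${port}
        path: /*
        pathType: ImplementationSpecific
EOT
}
```

Usage: `render_ingress minio minio-console 9001 | kubectl apply -f -` is equivalent to the file-based steps above. Because every Ingress shares `group.name: study`, the AWS Load Balancer Controller merges them into the single `myeks-ingress-alb` load balancer.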

📕 Inspect data in S3


Check the data stored in S3.

Open the console-http ENDPOINT of the minio product and log in with admin / adminadmin. Click the blue Browse button on the bucket demo and open the folders ny-taxi-data → raw.

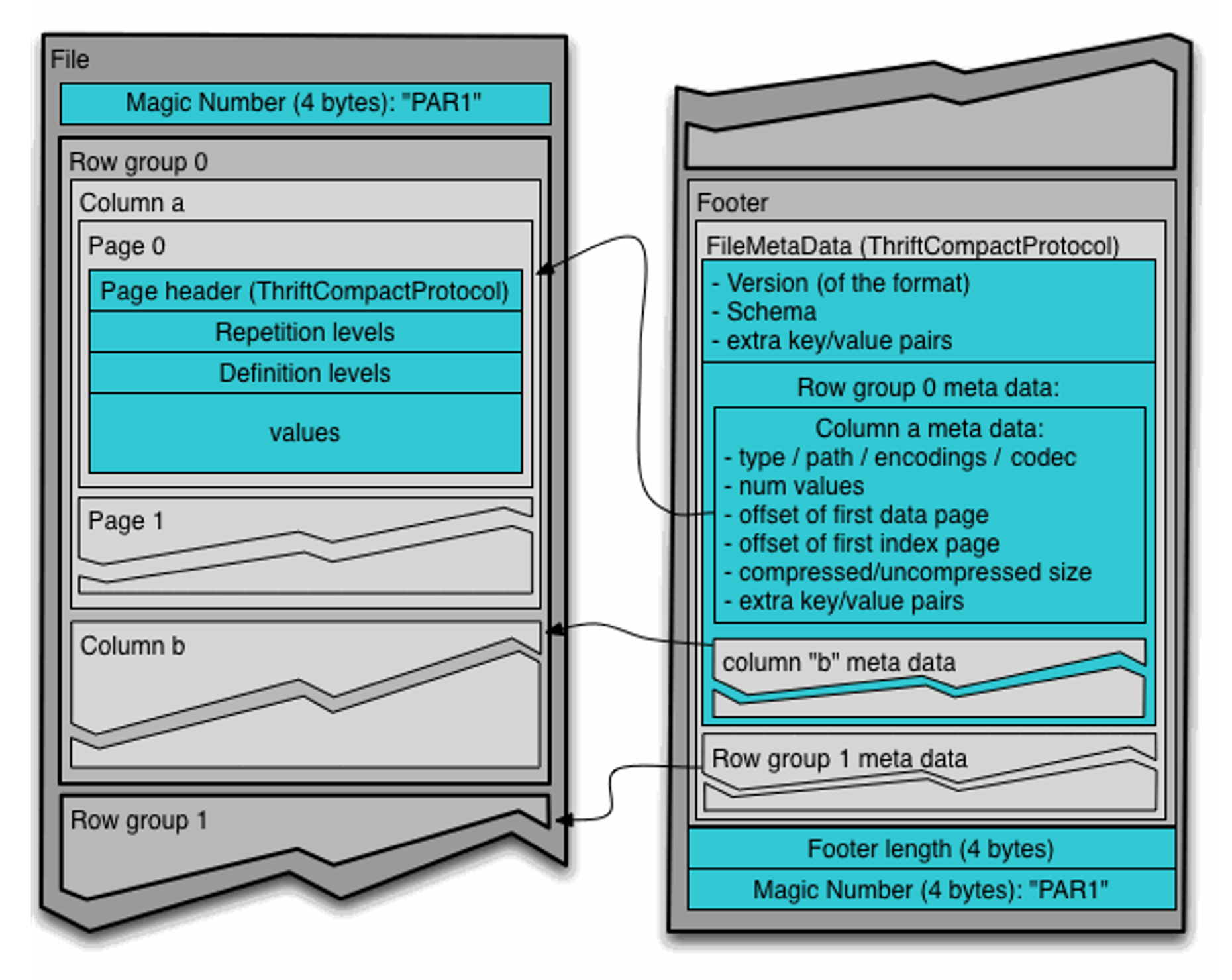

- As you can see, the demo uploaded 1 GB of Parquet files, one file per month. The data contains taxi rides in New York City.

- The demo loaded 2.5 years of taxi trip data from New York City with 68 million records and a total size of 1 GB in Parquet files.

- Fields: pick-up and drop-off dates/times, pick-up and drop-off locations, trip distances, itemized fares, rate types, payment types, and driver-reported passenger counts - Link

- You can see the file size (and therefore the number of rides) decrease drastically starting from 2020-03 because of the Covid-19 pandemic.

- Parquet is an open source, column-oriented data file format designed for efficient data storage and retrieval.

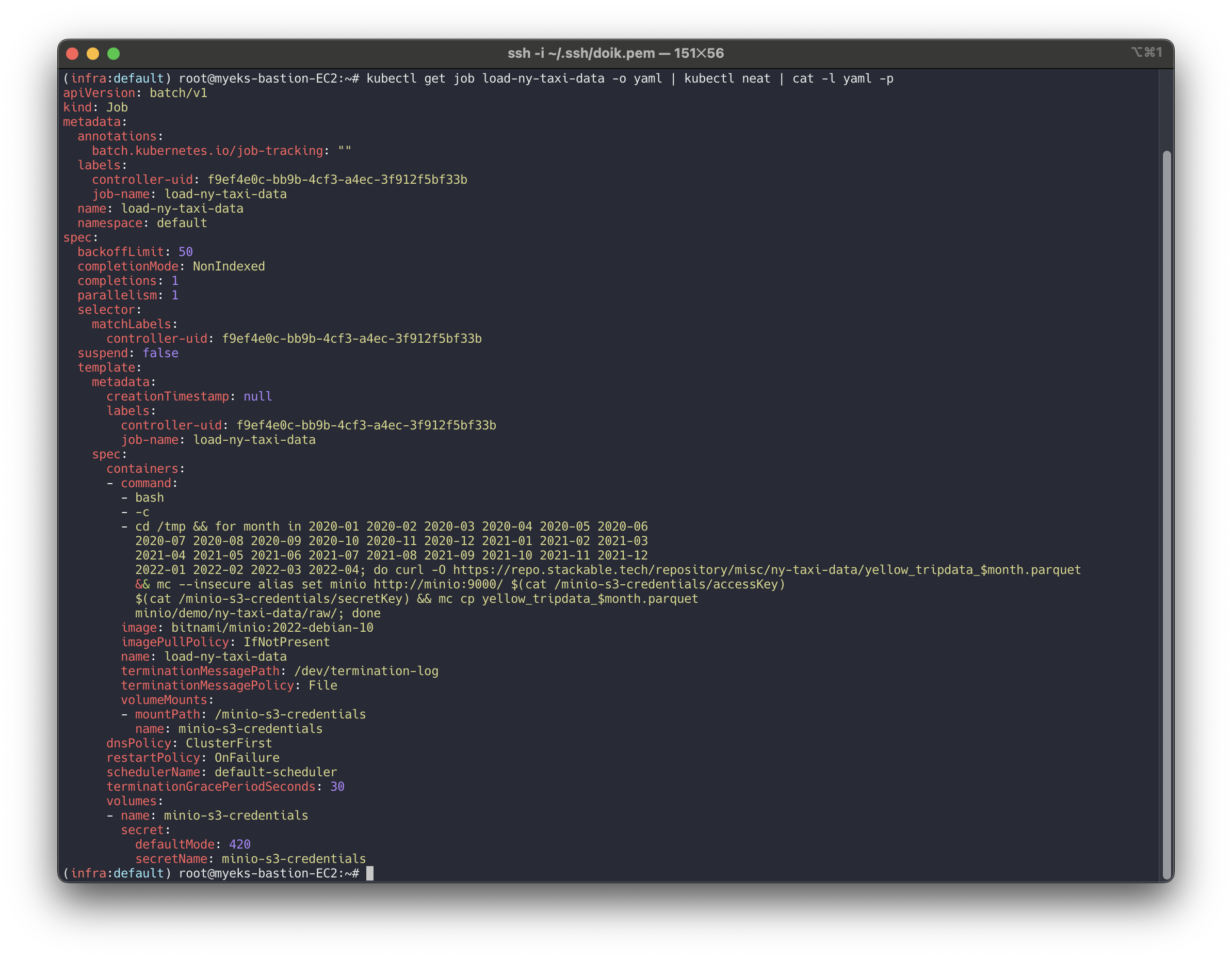

    kubectl get svc,ep minio-console

    # Check how the dataset download works
    kubectl get job load-ny-taxi-data -o yaml | kubectl neat | cat -l yaml -p
    ...
    spec:
      containers:
      - command:
        - bash
        - -c
        - cd /tmp && for month in 2020-01 2020-02 2020-03 2020-04 2020-05 2020-06 2020-07 2020-08 2020-09 2020-10 2020-11 2020-12 2021-01 2021-02 2021-03 2021-04 2021-05 2021-06 2021-07 2021-08 2021-09 2021-10 2021-11 2021-12 2022-01 2022-02 2022-03 2022-04; do curl -O https://repo.stackable.tech/repository/misc/ny-taxi-data/yellow_tripdata_$month.parquet && mc --insecure alias set minio http://minio:9000/ $(cat /minio-s3-credentials/accessKey) $(cat /minio-s3-credentials/secretKey) && mc cp yellow_tripdata_$month.parquet minio/demo/ny-taxi-data/raw/; done
    ...

    ## Download a sample: change only the 'year-month' part to fetch other months
    curl -O https://repo.stackable.tech/repository/misc/ny-taxi-data/yellow_tripdata_2023-01.parquet

    # More information
    kubectl get cm minio -o yaml | kubectl neat | cat -l yaml
    kubectl describe cm minio
    kubectl get secret minio -o yaml | kubectl neat | cat -l yaml
    kubectl get secret minio-s3-credentials -o yaml | kubectl neat | cat -l yaml
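The load job iterates over a hard-coded list of 28 months (2020-01 to 2022-04). A sketch that generates the same list and the matching download URLs, for example to fetch a different range locally; `month_range` and `taxi_url` are hypothetical helpers, the URL pattern is taken from the job and the sample `curl` above, and whether a given month is actually published in that repository is not guaranteed.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: print every YYYY-MM from START to END (inclusive),
# and build the download URL used by the load-ny-taxi-data job.
month_range() {
  local start="$1" end="$2"
  # 10# avoids octal parsing of "08"/"09"; %-* keeps the year, #*- keeps the month.
  local y=$((10#${start%-*})) m=$((10#${start#*-}))
  local ey=$((10#${end%-*})) em=$((10#${end#*-}))
  while (( y * 12 + m <= ey * 12 + em )); do
    printf '%04d-%02d\n' "$y" "$m"
    if (( m == 12 )); then
      m=1
      y=$((y + 1))
    else
      m=$((m + 1))
    fi
  done
}

taxi_url() {
  printf 'https://repo.stackable.tech/repository/misc/ny-taxi-data/yellow_tripdata_%s.parquet\n' "$1"
}
```

Usage: `for month in $(month_range 2020-01 2022-04); do curl -O "$(taxi_url "$month")"; done` downloads the same 28 files the job loads into MinIO.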

📘 Trino Web

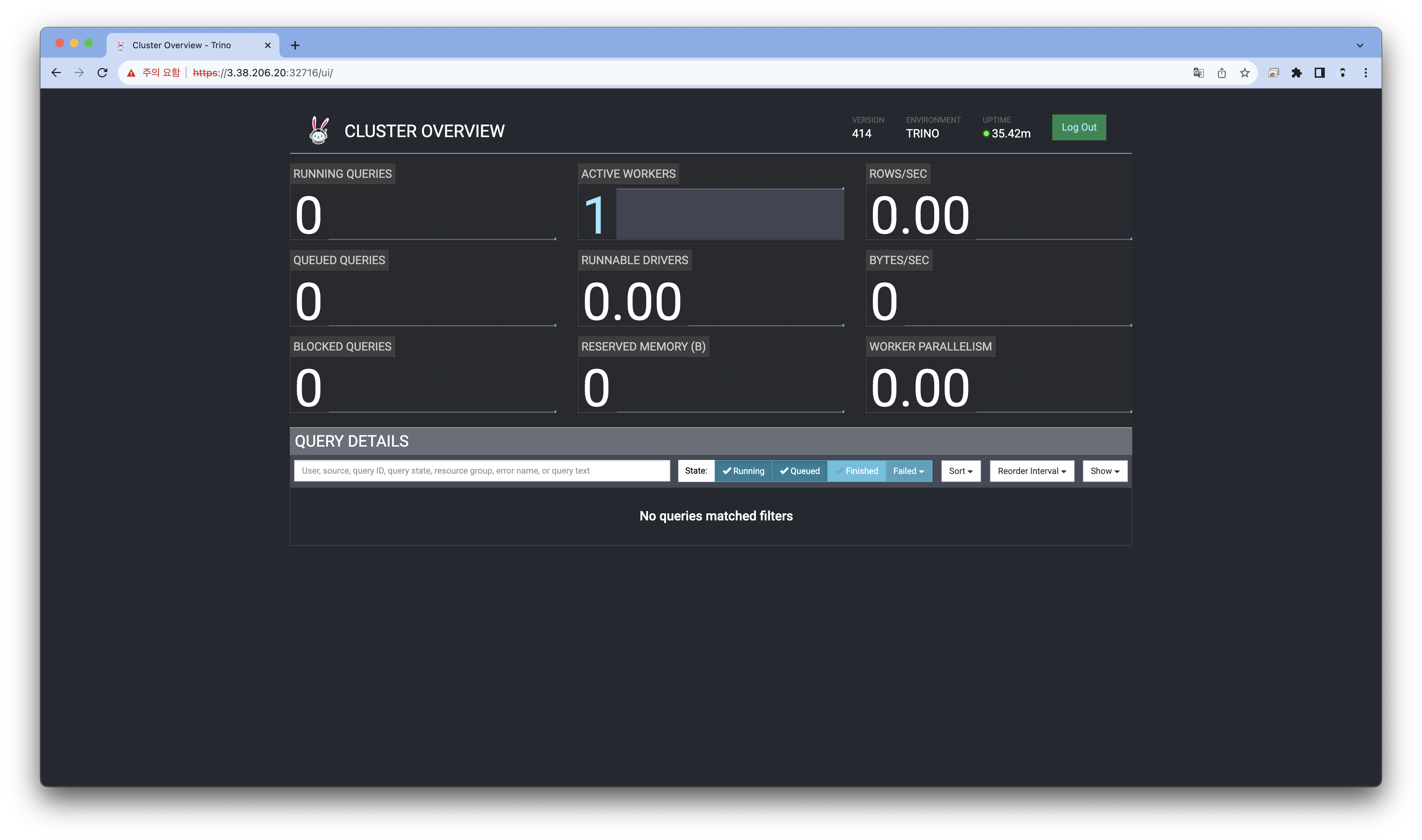

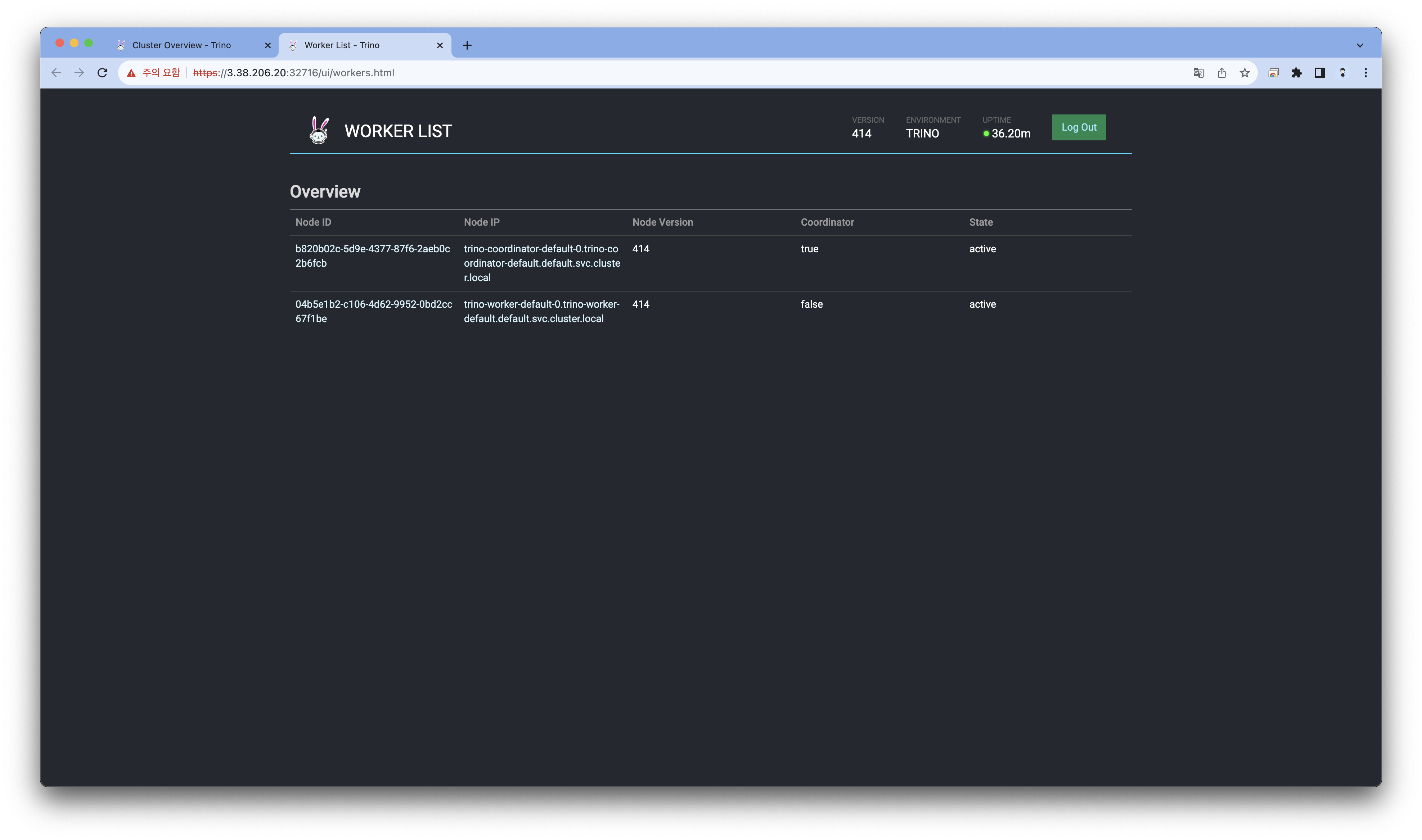

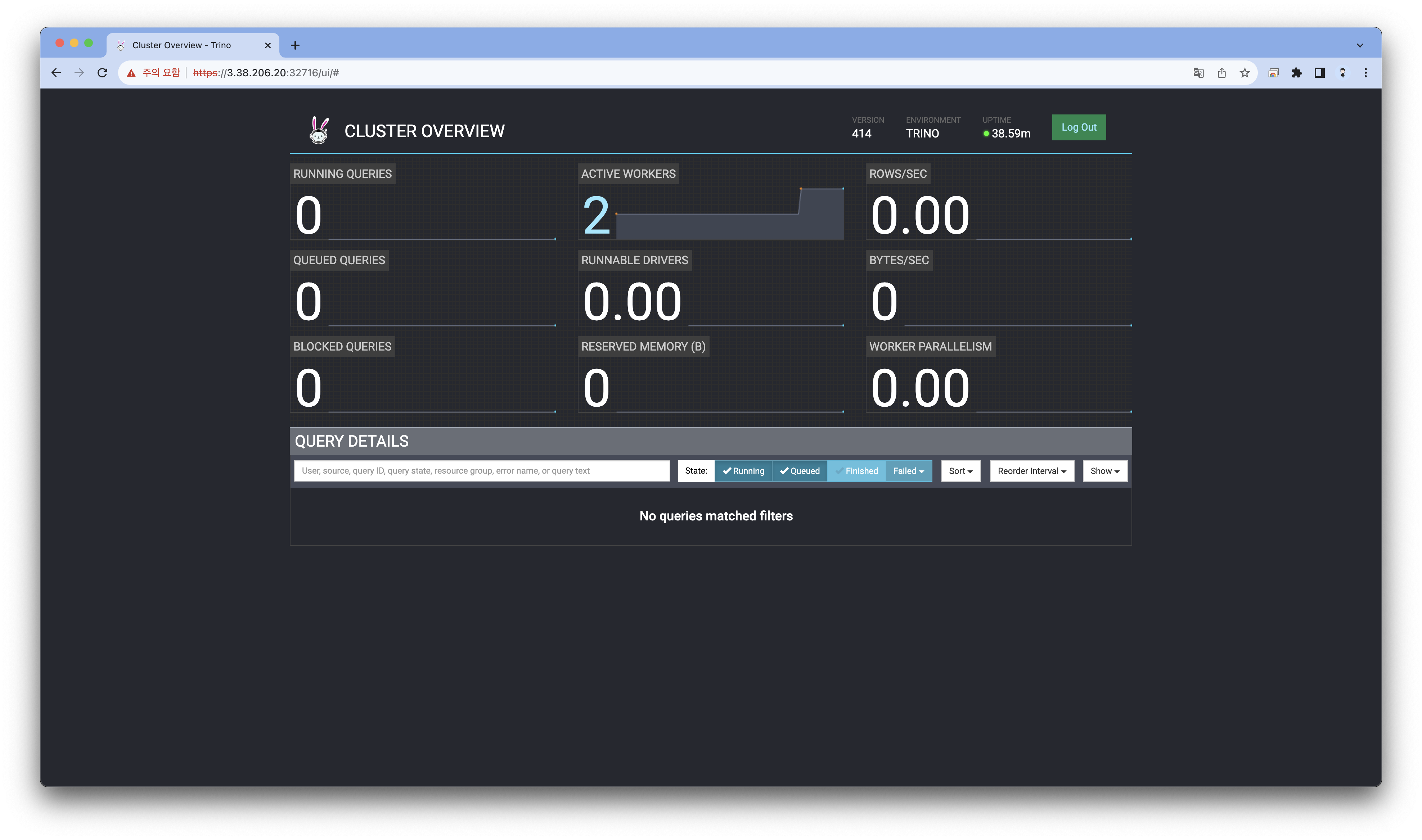

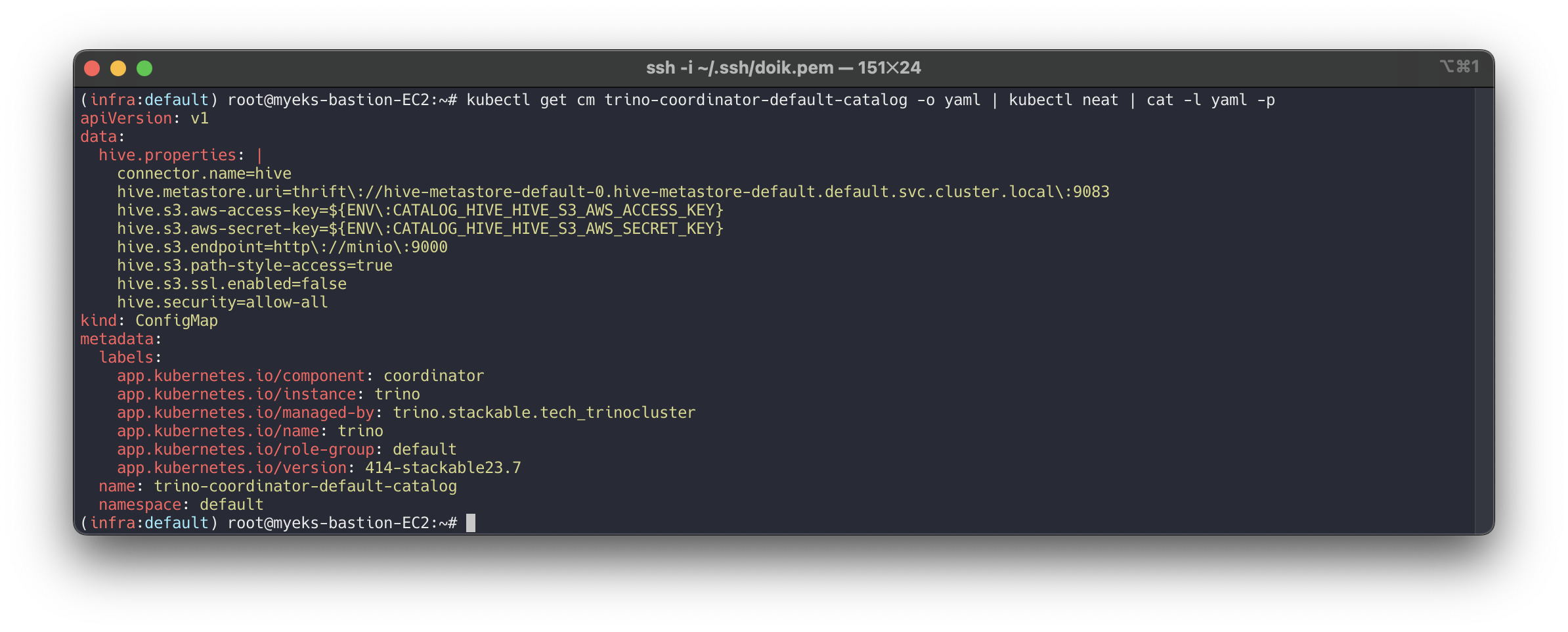

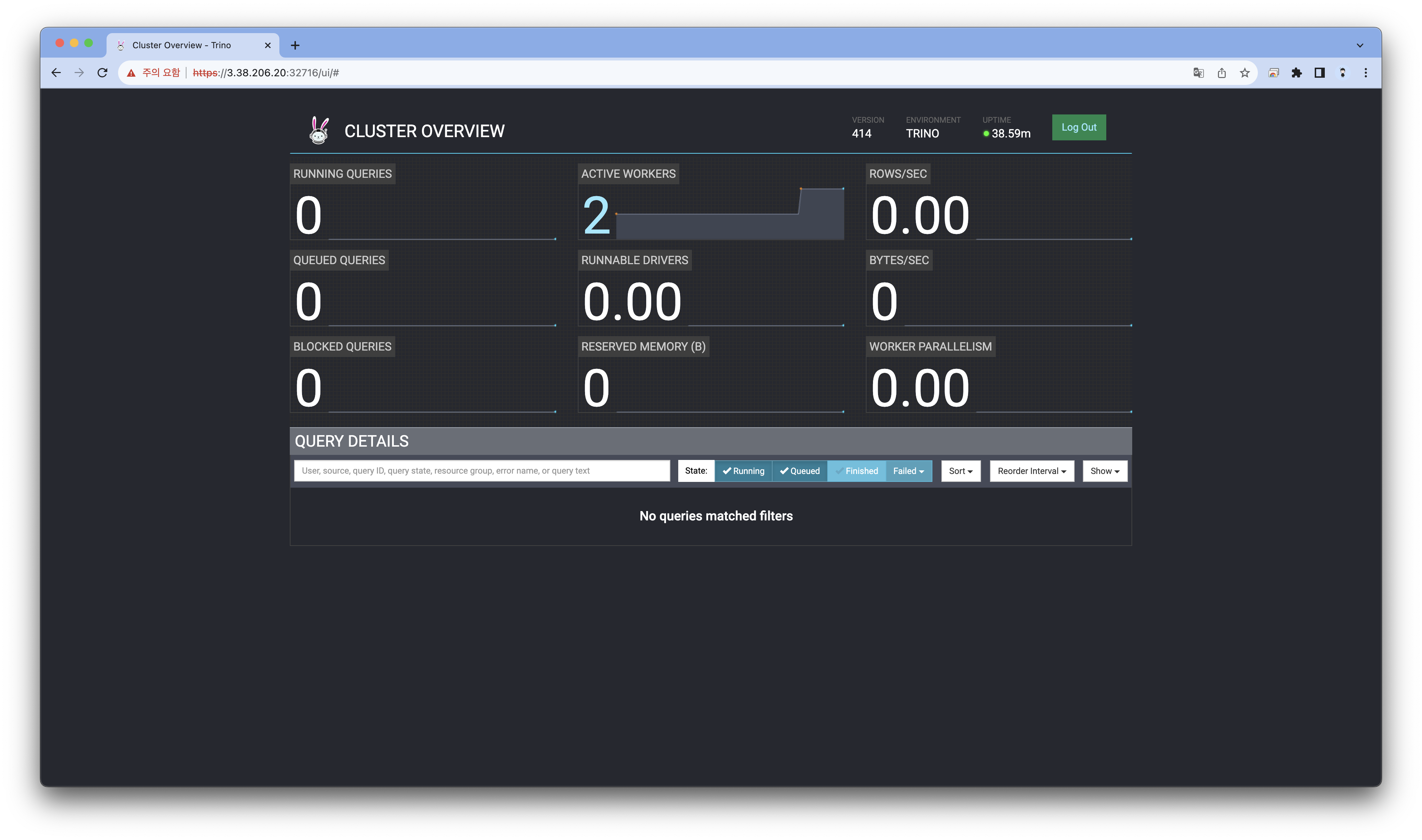

Use Trino Web Interface

The Trino web UI shows the workers that are currently deployed.

Trino, a query engine that runs at ludicrous speed: a fast distributed SQL query engine for big data analytics - Link

Trino offers SQL access to the data within S3.

Open the coordinator-https ENDPOINT of the trino product and log in with admin / adminadmin.

Cluster Overview

Worker List

Worker Status

When you start executing SQL queries you will see the queries getting processed here.

    kubectl get svc,ep trino-coordinator

    kubectl get job create-ny-taxi-data-table-in-trino -o yaml | kubectl neat | cat -l yaml
    kubectl get trinocluster,trinocatalog
    kubectl get trinocluster -o yaml | kubectl neat | cat -l yaml -p
    kubectl get trinocatalog -o yaml | kubectl neat | cat -l yaml -p
    ...
    spec:
      connector:    # hive, s3
        hive:
          metastore:
            configMap: hive
          s3:
            reference: minio
    ...

    kubectl get cm trino-coordinator-default -o yaml | kubectl neat | cat -l yaml
    kubectl get cm trino-coordinator-default-catalog -o yaml | kubectl neat | cat -l yaml -p
    ...
    data:
      hive.properties: |
        connector.name=hive
        hive.metastore.uri=thrift\://hive-metastore-default-0.hive-metastore-default.default.svc.cluster.local\:9083
        hive.s3.aws-access-key=${ENV\:CATALOG_HIVE_HIVE_S3_AWS_ACCESS_KEY}
        hive.s3.aws-secret-key=${ENV\:CATALOG_HIVE_HIVE_S3_AWS_SECRET_KEY}
        hive.s3.endpoint=http\://minio\:9000
        hive.s3.path-style-access=true
        hive.s3.ssl.enabled=false
        hive.security=allow-all
    ...
    kubectl get cm trino-worker-default -o yaml | kubectl neat | cat -l yaml
    kubectl get cm trino-worker-default-catalog -o yaml | kubectl neat | cat -l yaml
    kubectl get cm create-ny-taxi-data-table-in-trino-script -o yaml | kubectl neat | cat -l yaml

    kubectl get secret trino-users -o yaml | kubectl neat | cat -l yaml
    kubectl get secret trino-internal-secret -o yaml | kubectl neat | cat -l yaml
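The backslashes in `thrift\://...\:9083` are Java `.properties` escaping, not part of the value. A sketch that reads one key from properties text and undoes the `\:` and `\=` escapes, handy for checking which metastore URI or S3 endpoint Trino was given; `prop_value` is hypothetical and deliberately simple (it ignores line continuations and other escape sequences).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of KEY from .properties text on stdin,
# undoing the "\:" and "\=" escapes. Returns 1 if the key is not found.
prop_value() {
  local key="$1" line
  # "|| [[ -n $line ]]" also handles a last line without a trailing newline.
  while IFS= read -r line || [[ -n "$line" ]]; do
    if [[ "$line" == "$key="* ]]; then
      line=${line#"$key="}
      line=${line//\\:/:}
      line=${line//\\=/=}
      printf '%s\n' "$line"
      return 0
    fi
  done
  return 1
}
```

Usage: `kubectl get cm trino-coordinator-default-catalog -o jsonpath='{.data.hive\.properties}' | prop_value hive.metastore.uri` (the backslash escapes the dot in the `hive.properties` key for jsonpath).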

Scale the Trino workers out to 2 replicas through trino-operator

    # Follow the trino-operator logs to watch it reconcile the change
    kubectl logs -n stackable-operators -l app.kubernetes.io/instance=trino-operator -f

    # Scale Trino out to 2 workers
    kubectl get trinocluster trino -o json | cat -l json -p
    kubectl patch trinocluster trino --type='json' -p='[{"op": "replace", "path": "/spec/workers/roleGroups/default/replicas", "value":2}]'
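The JSON Patch above replaces a single field, `/spec/workers/roleGroups/default/replicas`, and the operator then reconciles the worker StatefulSet to match. A sketch that builds the same patch for any replica count and role group, rejecting non-numeric input before it reaches the API server; `trino_workers_patch` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the JSON Patch that sets the Trino worker replica
# count for a role group (default: "default").
trino_workers_patch() {
  local replicas="$1" group="${2:-default}"
  if [[ ! "$replicas" =~ ^[0-9]+$ ]]; then
    echo "replicas must be a non-negative integer, got '$replicas'" >&2
    return 1
  fi
  # 10# normalizes values such as "03" without octal surprises.
  printf '[{"op": "replace", "path": "/spec/workers/roleGroups/%s/replicas", "value": %d}]\n' \
    "$group" "$((10#$replicas))"
}
```

Usage: `kubectl patch trinocluster trino --type='json' -p="$(trino_workers_patch 2)"`.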

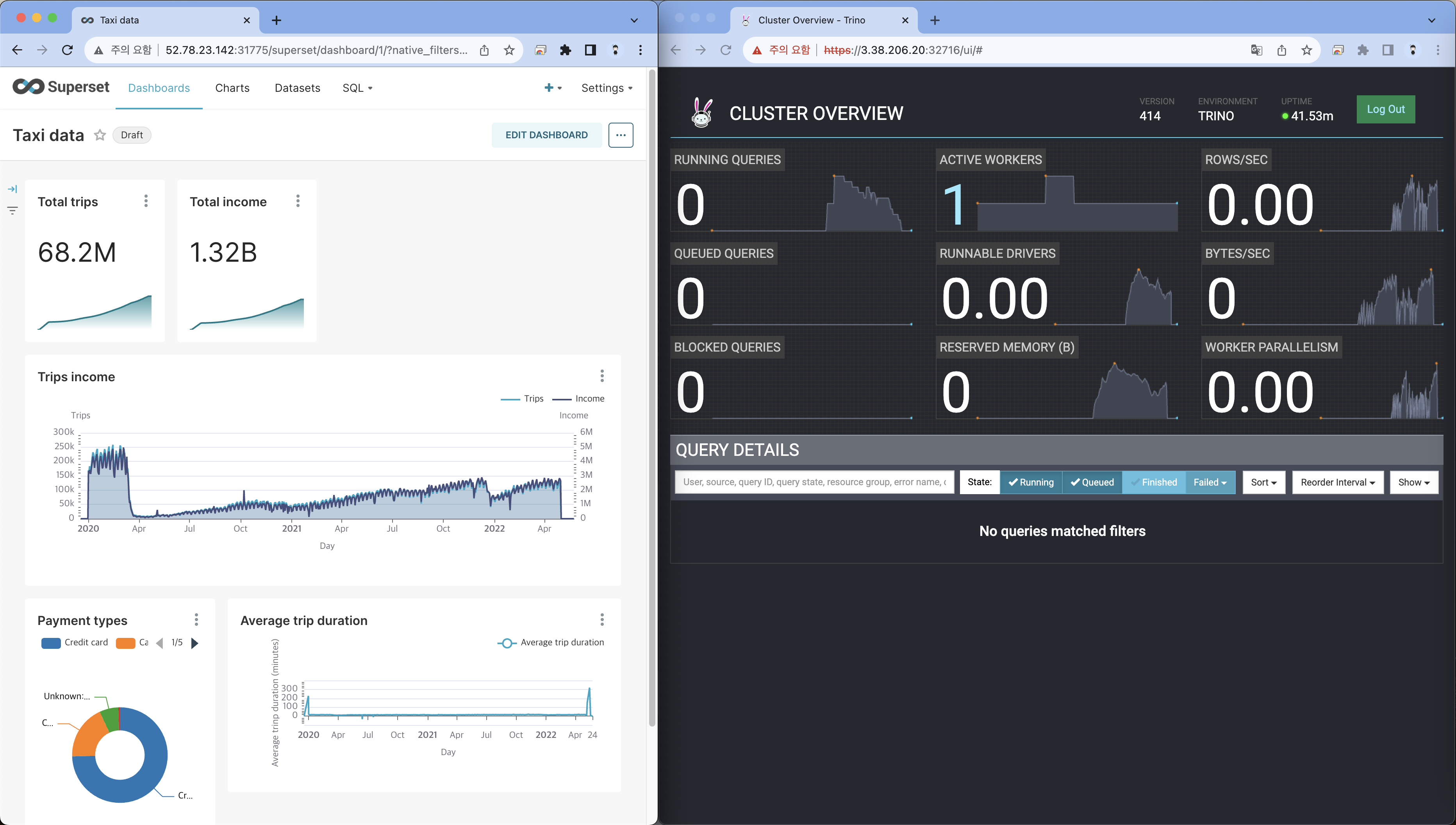

📗 Superset Web

Use Superset Web Interface - Docs Superset Connect_Trino

With the Superset web UI you can explore the data and run queries from the browser.

- Apache Superset™ is an open-source modern data exploration and visualization platform.

- Superset gives you the ability to execute SQL queries and build dashboards.

- Open the external-superset ENDPOINT of the superset product and log in with admin / adminadmin.

- At the top, click the Dashboards tab → click the dashboard called Taxi data.

- It might take some time until the dashboard renders all the included charts. ⇒ This takes a while (refresh the page); check the Trino web UI at the same time to see the queries being processed.

You can clearly see the impact of Covid-19 on the taxi business → the number of rides dropped sharply from March 2020 onward.

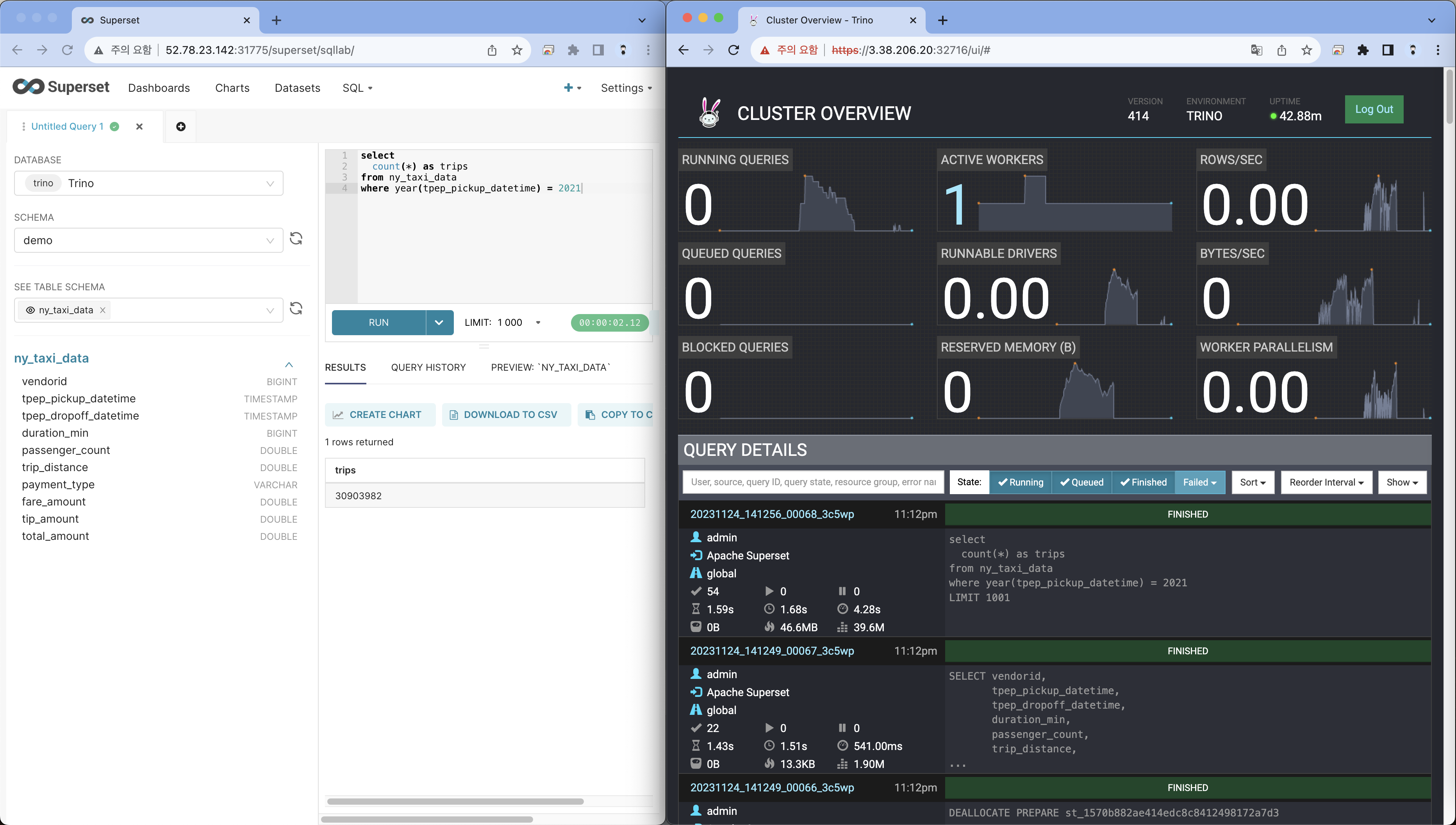

Execute arbitrary SQL statements

- Within Superset you can not only create dashboards but also run arbitrary SQL statements. At the top, click the SQL Lab tab → SQL Editor.

- On the left, select the database Trino and the schema demo, and set See table schema to ny_taxi_data.

How many taxi trips were there in the year 2021?

select count(*) as trips from ny_taxi_data where year(tpep_pickup_datetime) = 2021

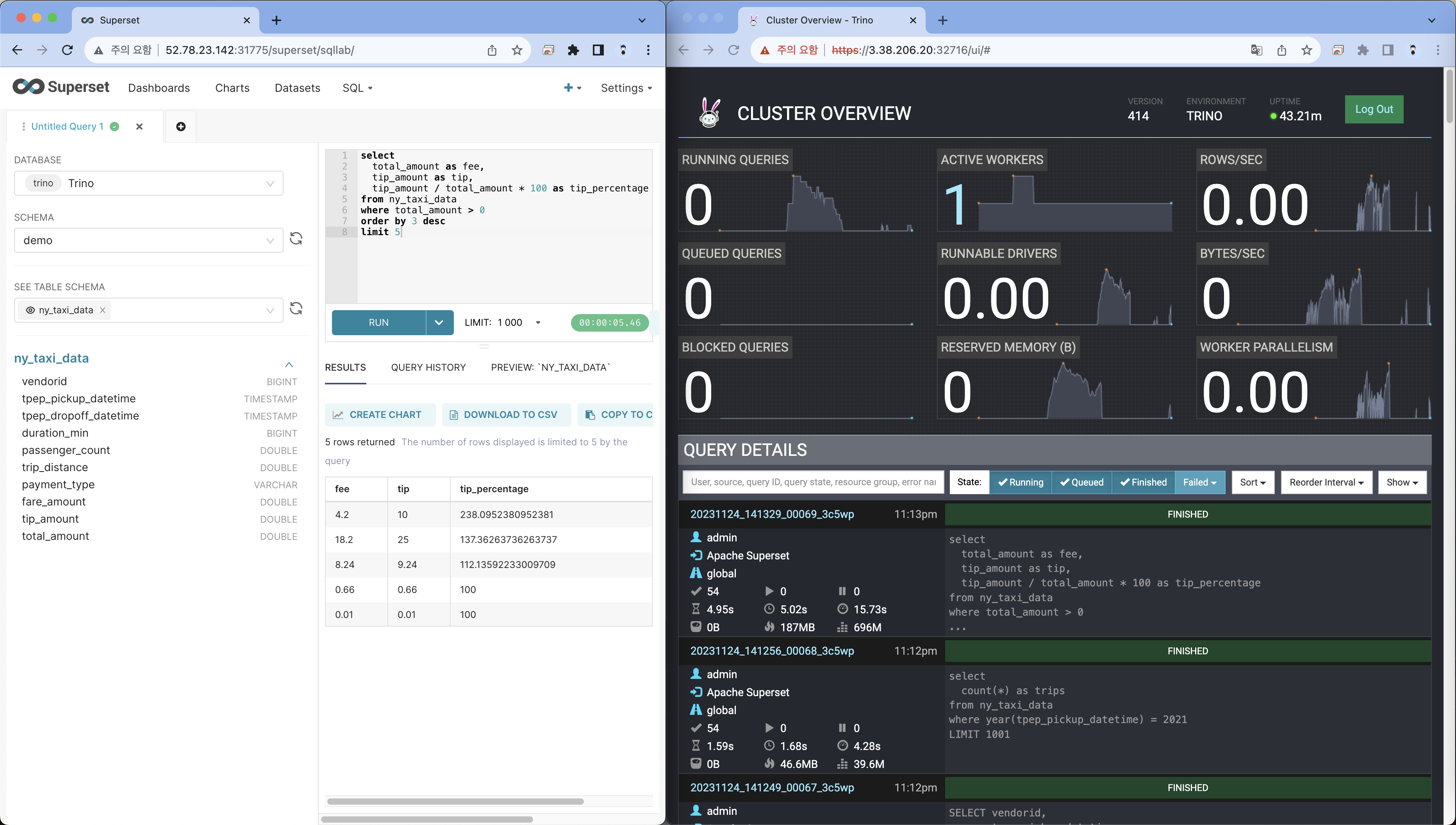

What was the highest tip (measured as a percentage of the original fee) ever given?

select total_amount as fee, tip_amount as tip, tip_amount / total_amount * 100 as tip_percentage from ny_taxi_data where total_amount > 0 order by 3 desc limit 5
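The `tip_percentage` column is plain arithmetic: tip_amount / total_amount × 100, computed only for rows where total_amount > 0 (the `where` clause avoids division by zero). A worked sketch of the same formula in awk for checking individual rows by hand; `tip_pct` is a hypothetical helper. For example, a tip of 5 on a total_amount of 20 gives 5 / 20 × 100 = 25.00.

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring the SQL expression:
#   tip_amount / total_amount * 100, only when total_amount > 0
# Arguments: total_amount tip_amount. Exits 1 for non-positive totals.
tip_pct() {
  awk -v total="$1" -v tip="$2" 'BEGIN {
    if (total <= 0) exit 1          # same guard as WHERE total_amount > 0
    printf "%.2f\n", tip / total * 100
  }'
}
```

Since total_amount already includes the tip, percentages above 100 would point to data-entry anomalies rather than real tips, which is worth keeping in mind when reading the top rows of that query.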

🔥 CleanUp

Clean up the resources deployed for the demo.

    kubectl delete supersetcluster,supersetdb superset
    kubectl delete trinocluster trino && kubectl delete trinocatalog hive
    kubectl delete hivecluster hive
    kubectl delete s3connection minio
    kubectl delete opacluster opa

    helm uninstall postgresql-superset
    helm uninstall postgresql-hive
    helm uninstall minio

    kubectl delete job --all
    kubectl delete pvc --all

    kubectl delete cm create-ny-taxi-data-table-in-trino-script setup-superset-script trino-opa-bundle
    kubectl delete secret minio-s3-credentials secret-provisioner-tls-ca superset-credentials superset-mapbox-api-key trino-users
    kubectl delete sa superset-sa

    # Remove the operators
    stackablectl operator uninstall superset trino hive secret opa commons

    # Check the remaining resources
    kubectl get-all -n stackable-operators
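The cleanup above is a fixed sequence, so it can live in one re-runnable function. A sketch in which `DRY_RUN=1` prints the commands instead of executing them; `run` and `cleanup_trino_taxi_demo` are hypothetical helpers, and adding `--ignore-not-found` to the `kubectl delete` calls is my own assumption (not in the original steps) so that a second run does not fail on resources that are already gone.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: run (or, with DRY_RUN=1, print) the demo cleanup steps.
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

cleanup_trino_taxi_demo() {
  local release
  # Stackable custom resources first, so the operators tear down their pods.
  run kubectl delete supersetcluster,supersetdb superset --ignore-not-found
  run kubectl delete trinocluster trino --ignore-not-found
  run kubectl delete trinocatalog hive --ignore-not-found
  run kubectl delete hivecluster hive --ignore-not-found
  run kubectl delete s3connection minio --ignore-not-found
  run kubectl delete opacluster opa --ignore-not-found
  # Helm releases installed by the demo stack.
  for release in postgresql-superset postgresql-hive minio; do
    run helm uninstall "$release"
  done
  run kubectl delete job --all
  run kubectl delete pvc --all
  run kubectl delete cm create-ny-taxi-data-table-in-trino-script setup-superset-script trino-opa-bundle --ignore-not-found
  run kubectl delete secret minio-s3-credentials secret-provisioner-tls-ca superset-credentials superset-mapbox-api-key trino-users --ignore-not-found
  run kubectl delete sa superset-sa --ignore-not-found
  # Operators last.
  run stackablectl operator uninstall superset trino hive secret opa commons
}
```

Running `DRY_RUN=1 cleanup_trino_taxi_demo` first shows exactly what would be deleted, which matters here because `kubectl delete pvc --all` removes every PVC in the namespace, not only the demo's.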

👉 Step 03. Stackable Operator

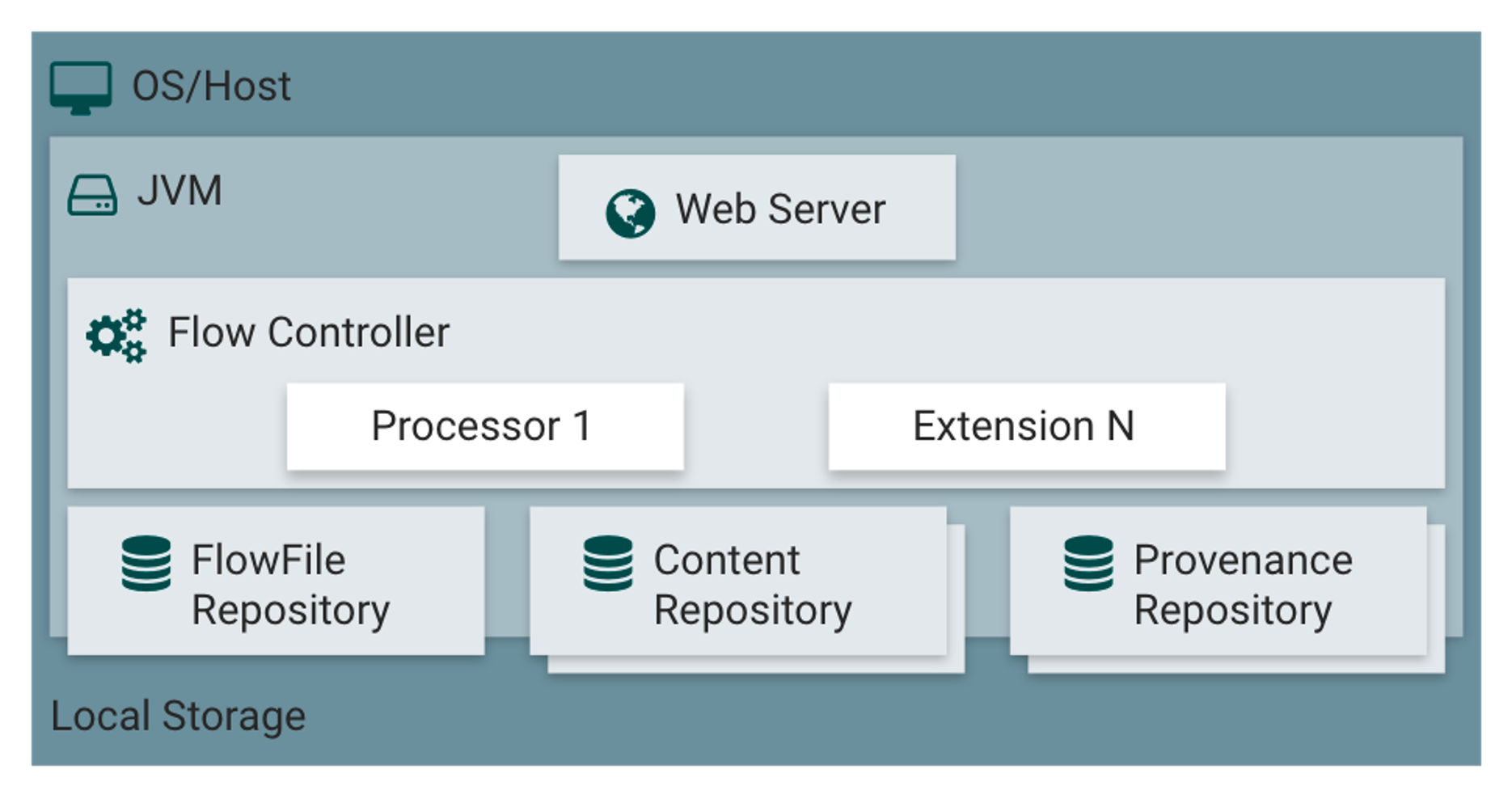

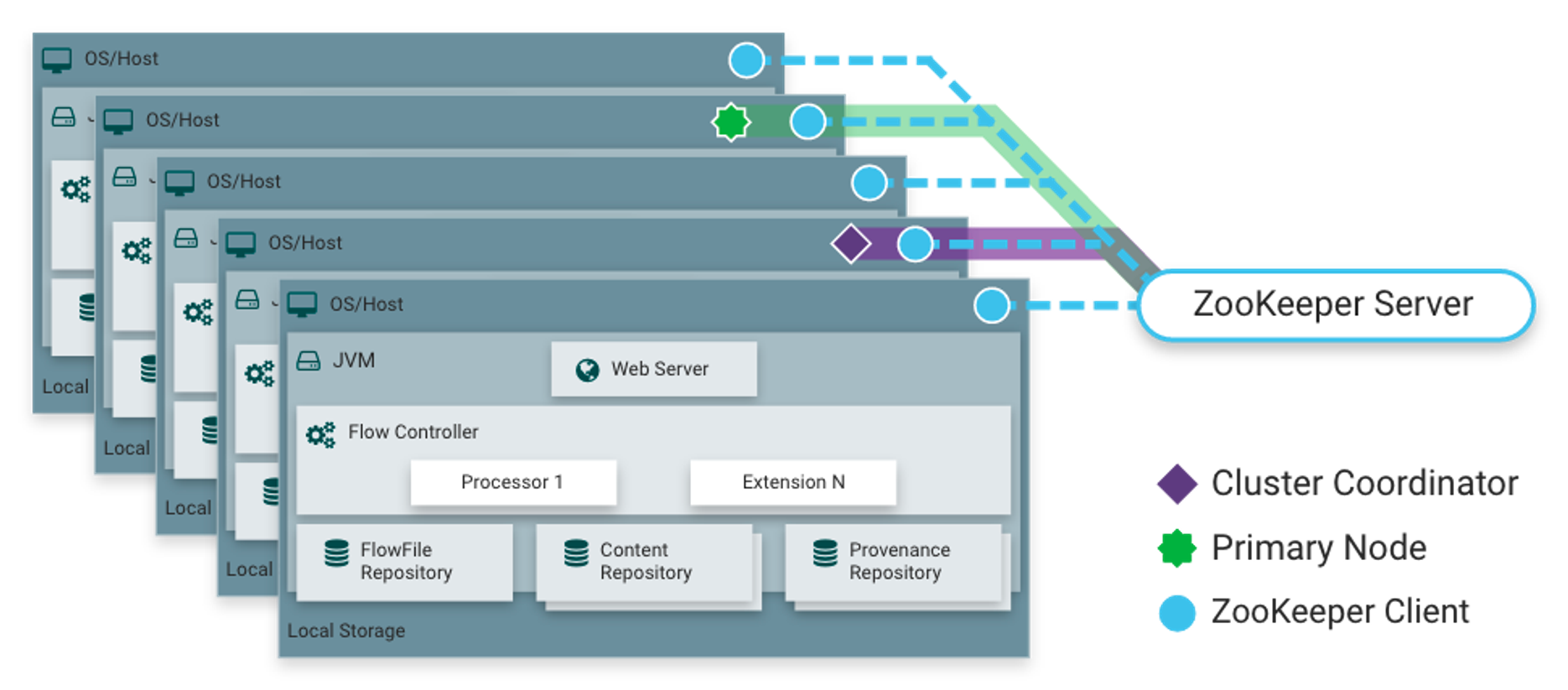

- Overview: Stackable is based on Kubernetes and uses it as the control plane to manage clusters.

- In this guide we will build a simple cluster with 3 services: Apache ZooKeeper, Apache Kafka and Apache NiFi.

- Installing Stackable Operators - Link

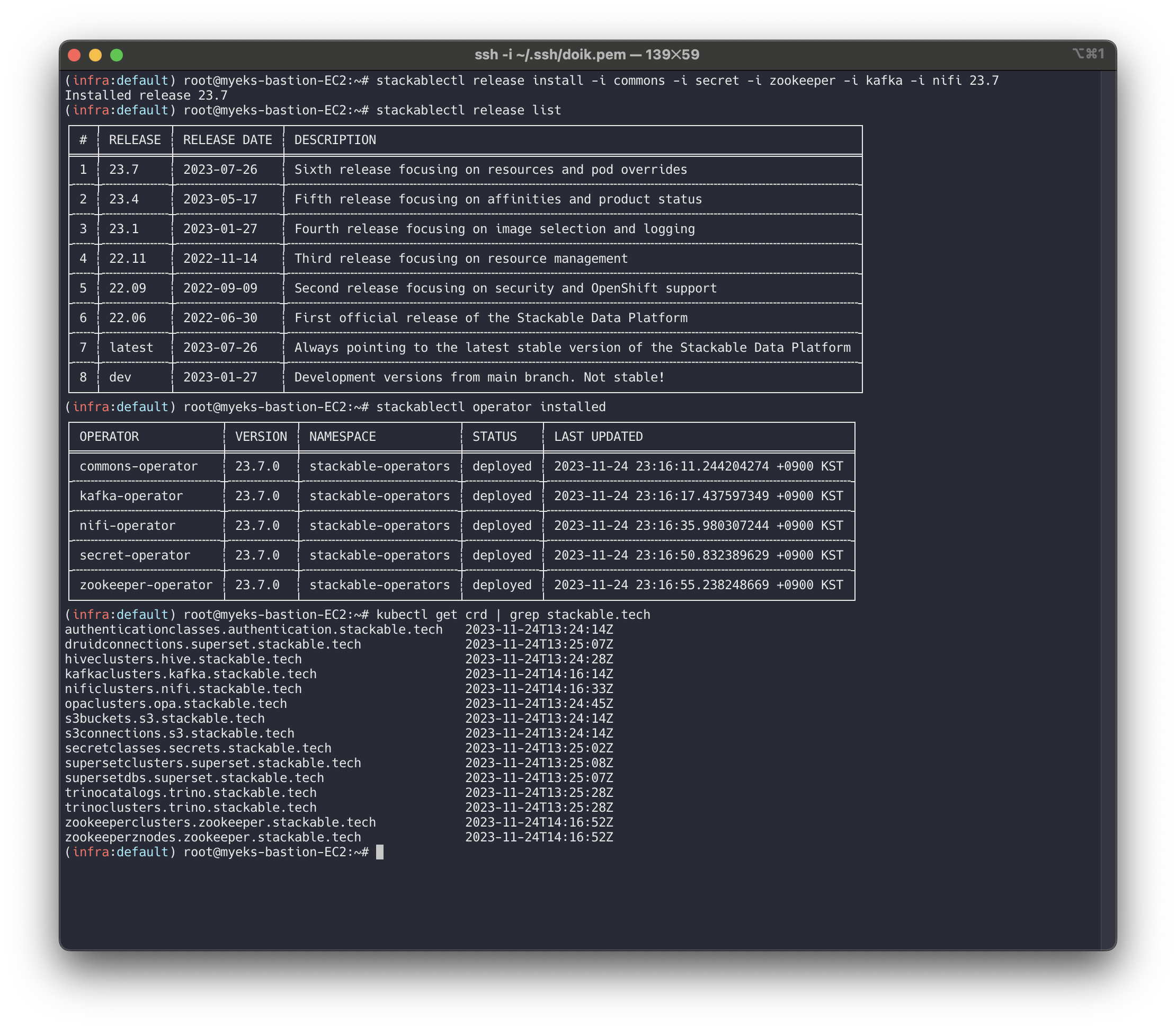

    # [Terminal 1] Monitoring
    watch -d "kubectl get pod -n stackable-operators"

    # [Terminal 2] Install
    stackablectl release list
    stackablectl release install -i commons -i secret -i zookeeper -i kafka -i nifi 23.7
    [INFO ] Installing release 23.7
    [INFO ] Installing commons operator in version 23.7.0
    [INFO ] Installing kafka operator in version 23.7.0
    [INFO ] Installing nifi operator in version 23.7.0
    [INFO ] Installing secret operator in version 23.7.0
    [INFO ] Installing zookeeper operator in version 23.7.0

    # Verify the installation
    helm list -n stackable-operators
    stackablectl operator installed
    kubectl get crd | grep stackable.tech
    kubectl get pod
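Before applying the ZooKeeper, Kafka, and NiFi manifests, it helps to confirm that the operators have registered their CRDs; otherwise `kubectl apply` fails because the kinds are unknown. A sketch; `require_crds` is a hypothetical helper, and the CRD names are the plural.group forms I expect for the kinds used in this post (ZookeeperCluster, ZookeeperZnode, KafkaCluster, NifiCluster), so compare them against `kubectl get crd | grep stackable.tech` on your cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read installed CRD names (one per line) on stdin and
# report every required CRD that is missing. Returns 1 if any is missing.
REQUIRED_CRDS=(
  zookeeperclusters.zookeeper.stackable.tech
  zookeeperznodes.zookeeper.stackable.tech
  kafkaclusters.kafka.stackable.tech
  nificlusters.nifi.stackable.tech
)

require_crds() {
  local installed crd missing=0
  installed="$(cat)"
  for crd in "${REQUIRED_CRDS[@]}"; do
    # -x: whole-line match, -F: treat the dots in the name literally.
    if ! grep -qxF "$crd" <<<"$installed"; then
      echo "missing CRD: $crd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `kubectl get crd -o name | sed 's#.*/##' | require_crds && echo "operators ready"` (the `sed` strips the `customresourcedefinition.apiextensions.k8s.io/` prefix that `-o name` prints).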

✅ Deploying Stackable Services - Link

We practice installing ZooKeeper, Kafka, and NiFi.

Apache ZooKeeper

    kubectl apply -f - <<EOF
    ---
    apiVersion: zookeeper.stackable.tech/v1alpha1
    kind: ZookeeperCluster
    metadata:
      name: simple-zk
    spec:
      image:
        productVersion: "3.8.1"
        stackableVersion: "23.7"
      clusterConfig:
        tls:
          serverSecretClass: null
      servers:
        roleGroups:
          primary:
            replicas: 1
            config:
              myidOffset: 10
    ---
    apiVersion: zookeeper.stackable.tech/v1alpha1
    kind: ZookeeperZnode
    metadata:
      name: simple-zk-znode
    spec:
      clusterRef:
        name: simple-zk
    EOF
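The manifest runs a single ZooKeeper server (`replicas: 1`), which is fine for a lab but cannot survive a server failure: an ensemble of n servers needs a majority of ⌊n/2⌋ + 1 to stay available, so it tolerates ⌊(n − 1)/2⌋ failures. A small arithmetic sketch; `zk_ensemble_info` is a hypothetical helper, and the myid range it prints assumes the operator assigns myid = myidOffset + pod ordinal, which is my reading of `myidOffset` and worth checking against the Stackable ZooKeeper documentation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: quorum arithmetic for a ZooKeeper ensemble of N servers.
# Arguments: N [myidOffset]. The myid range assumes myid = offset + pod ordinal.
zk_ensemble_info() {
  local n="$1" offset="${2:-0}"
  (( n >= 1 )) || return 1
  local quorum=$(( n / 2 + 1 ))          # strict majority
  local tolerated=$(( (n - 1) / 2 ))     # servers that may fail
  printf 'servers=%d quorum=%d tolerated_failures=%d myids=%d..%d\n' \
    "$n" "$quorum" "$tolerated" "$offset" "$(( offset + n - 1 ))"
}
```

`zk_ensemble_info 1 10` describes this lab (no failure tolerance), while 3 replicas would tolerate one failure; 4 replicas still tolerate only one, which is why odd ensemble sizes are the usual choice.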

Apache Kafka

We will deploy an Apache Kafka broker that depends on the ZooKeeper service we just deployed.

- The zookeeperConfigMapName property below points to the discovery ConfigMap of the ZookeeperZnode simple-kafka-znode, which in turn references (by namespace and name) the ZooKeeper service deployed previously.

    kubectl apply -f - <<EOF
    ---
    apiVersion: kafka.stackable.tech/v1alpha1
    kind: KafkaCluster
    metadata:
      name: simple-kafka
    spec:
      image:
        productVersion: "3.4.0"
        stackableVersion: "23.7"
      clusterConfig:
        zookeeperConfigMapName: simple-kafka-znode
        tls:
          serverSecretClass: null
      brokers:
        roleGroups:
          brokers:
            replicas: 3
    ---
    apiVersion: zookeeper.stackable.tech/v1alpha1
    kind: ZookeeperZnode
    metadata:
      name: simple-kafka-znode
    spec:
      clusterRef:
        name: simple-zk
        namespace: default
    EOF

Kafka UI - Link

    helm repo add kafka-ui https://provectus.github.io/kafka-ui-charts

    cat <<EOF > kafkaui-values.yml
    yamlApplicationConfig:
      kafka:
        clusters:
          - name: yaml
            bootstrapServers: simple-kafka-broker-brokers:9092
      auth:
        type: disabled
      management:
        health:
          ldap:
            enabled: false
    EOF

    # Install
    helm install kafka-ui kafka-ui/kafka-ui -f kafkaui-values.yml

    # Access
    kubectl patch svc kafka-ui -p '{"spec":{"type":"LoadBalancer"}}'
    kubectl annotate service kafka-ui "external-dns.alpha.kubernetes.io/hostname=kafka-ui.$MyDomain"
    echo -e "kafka-ui Web URL = http://kafka-ui.$MyDomain"
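The `bootstrapServers` value appears to follow the pattern `<KafkaCluster name>-broker-<role group>:9092` (simple-kafka + brokers), which also matches the broker pod name `simple-kafka-broker-brokers-0` used later with `kafka-topics.sh`. That pattern is inferred from this single example, so treat it as an assumption. A sketch that derives the address and renders the values file from it; `kafka_bootstrap` and `render_kafkaui_values` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical helpers. Assumption: the broker Service is named
# <cluster>-broker-<rolegroup>, as with simple-kafka-broker-brokers above.
kafka_bootstrap() {
  local cluster="$1" rolegroup="$2" port="${3:-9092}"
  printf '%s-broker-%s:%s\n' "$cluster" "$rolegroup" "$port"
}

# Render the kafka-ui Helm values shown above for a given bootstrap address.
render_kafkaui_values() {
  local bootstrap="$1"
  cat <<EOF
yamlApplicationConfig:
  kafka:
    clusters:
      - name: yaml
        bootstrapServers: ${bootstrap}
  auth:
    type: disabled
  management:
    health:
      ldap:
        enabled: false
EOF
}
```

Usage: `render_kafkaui_values "$(kafka_bootstrap simple-kafka brokers)" > kafkaui-values.yml` produces the same file as the heredoc above.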

Apache NiFi

    kubectl apply -f - <<EOF
    ---
    apiVersion: zookeeper.stackable.tech/v1alpha1
    kind: ZookeeperZnode
    metadata:
      name: simple-nifi-znode
    spec:
      clusterRef:
        name: simple-zk
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      name: nifi-admin-credentials-simple
    stringData:
      username: admin
      password: AdminPassword
    ---
    apiVersion: nifi.stackable.tech/v1alpha1
    kind: NifiCluster
    metadata:
      name: simple-nifi
    spec:
      image:
        productVersion: "1.21.0"
        stackableVersion: "23.7"
      clusterConfig:
        listenerClass: external-unstable
        zookeeperConfigMapName: simple-nifi-znode
        authentication:
          method:
            singleUser:
              adminCredentialsSecret: nifi-admin-credentials-simple
        sensitiveProperties:
          keySecret: nifi-sensitive-property-key
          autoGenerate: true
      nodes:
        roleGroups:
          default:
            replicas: 1
    EOF

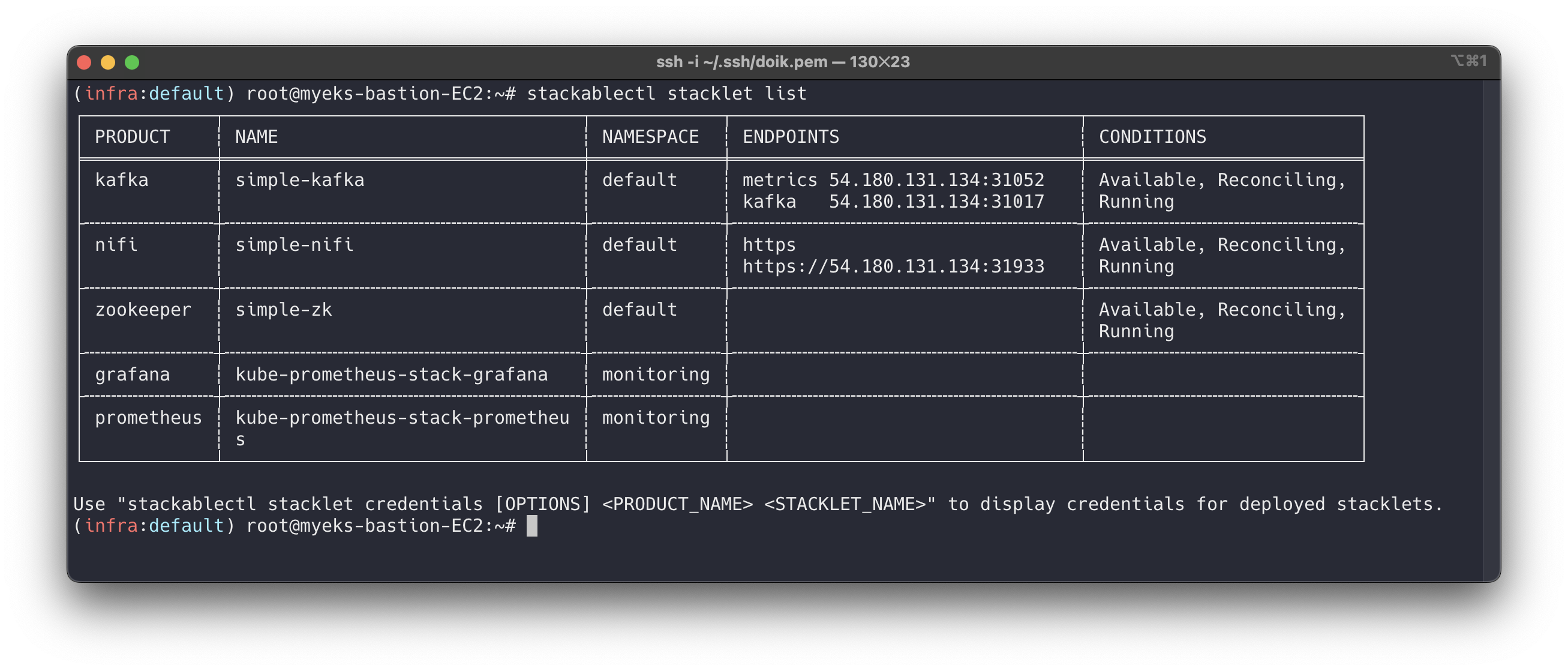

📚 Test Cluster

Test the installed services.

Testing your cluster - Link

Verify the installation

    stackablectl stacklet list
    PRODUCT     NAME                              NAMESPACE   ENDPOINTS                                  CONDITIONS
    kafka       simple-kafka                      default     metrics  54.180.131.134:31052              Available, Reconciling, Running
                                                              kafka    54.180.131.134:31017
    nifi        simple-nifi                       default     https    https://54.180.131.134:31933      Available, Reconciling, Running
    zookeeper   simple-zk                         default                                                Available, Reconciling, Running
    grafana     kube-prometheus-stack-grafana     monitoring
    prometheus  kube-prometheus-stack-prometheus  monitoring

Apache ZooKeeper

    # ZooKeeper CLI shell
    kubectl exec -i -t simple-zk-server-primary-0 -c zookeeper -- bin/zkCli.sh
    ------------------
    # Check the znodes
    # You can run the ls / command to see the list of znodes in the root path,
    # which should include those created by Apache Kafka and Apache NiFi.
    ls /
    [znode-5fef78a9-71e1-4250-bc35-ced60243d60f, znode-b0bf14f8-a1f6-4b31-aaba-4f4bbc68767d, znode-c45d9efd-a071-4723-943a-79d5fe49a162, zookeeper]
    quit
    ------------------
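The `ls /` output above shows three `znode-<uuid>` entries plus ZooKeeper's own `zookeeper` node, presumably one per ZookeeperZnode object created in this post (simple-zk-znode, simple-kafka-znode, simple-nifi-znode). A sketch that counts those entries from captured output; `count_stackable_znodes` is hypothetical, and running zkCli.sh non-interactively with `-server localhost:2181 ls /` assumes the plaintext client port 2181, since TLS is disabled in the ZooKeeper manifest.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count distinct znode-<uuid> entries in zkCli.sh "ls /"
# output read from stdin (UUID format 8-4-4-4-12 hex digits).
count_stackable_znodes() {
  grep -oE 'znode-[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}' \
    | sort -u | wc -l | tr -d ' '
}
```

Usage: `kubectl exec simple-zk-server-primary-0 -c zookeeper -- bin/zkCli.sh -server localhost:2181 ls / | count_stackable_znodes`; after deleting a ZookeeperZnode the count should drop accordingly.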

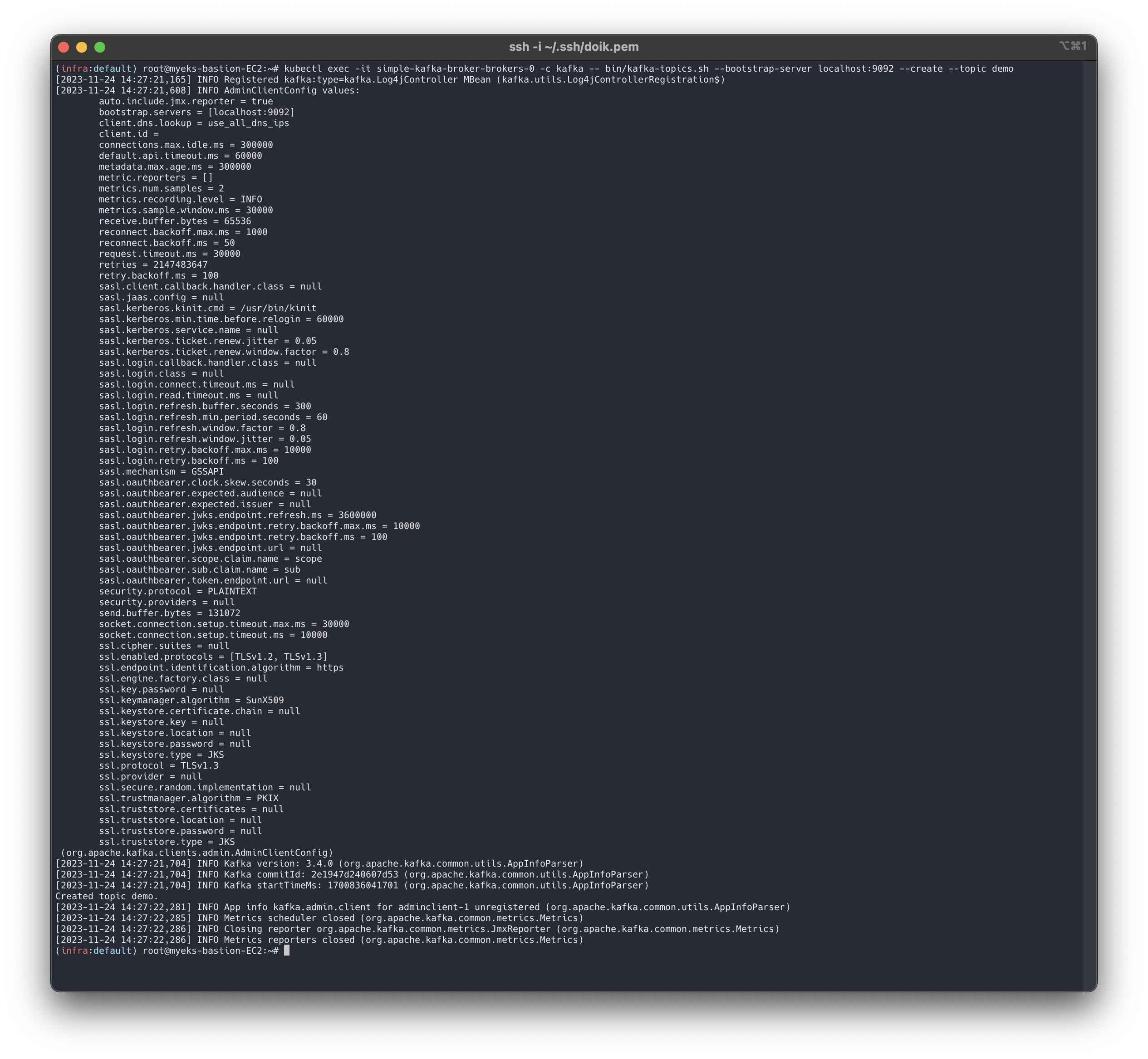

Apache Kafka

    # Create a topic
    kubectl exec -it simple-kafka-broker-brokers-0 -c kafka -- bin/kafka-topics.sh --bootstrap-server localhost:9092 --create --topic demo
    ...
    Created topic demo.
    ...

    # List topics
    kubectl exec -it simple-kafka-broker-brokers-0 -c kafka -- bin/kafka-topics.sh --bootstrap-server localhost:9092 --list
    ...
    demo
    ...

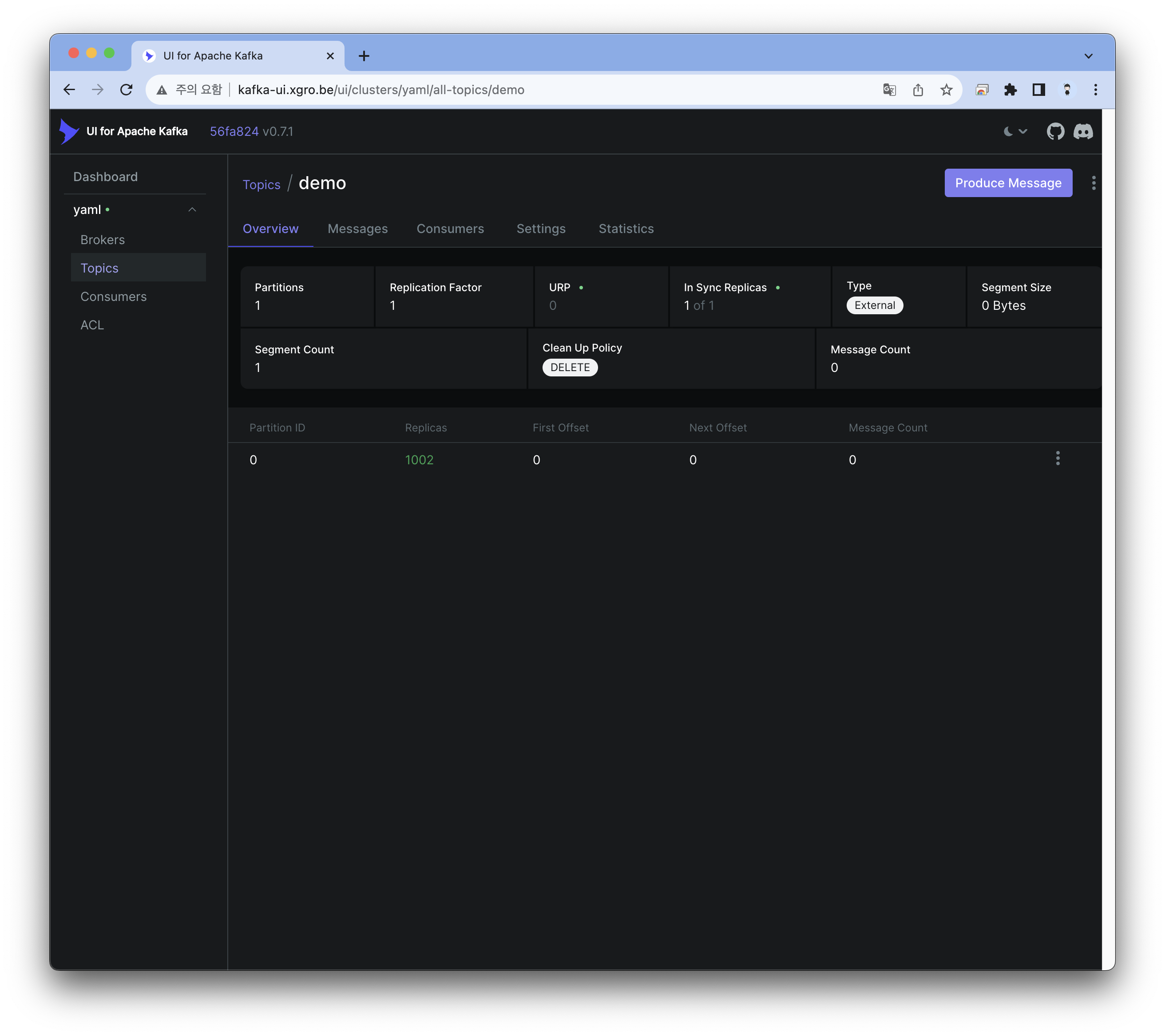

Result

Kafka UI shows that the demo topic has been created.

Apache NiFi: open the https ENDPOINT of the nifi product and log in with admin / AdminPassword.

    # Check the password of the NiFi admin account
    kubectl get secrets nifi-admin-credentials-simple -o jsonpath="{.data.password}" | base64 -d && echo
    AdminPassword
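Secret `.data` values are only base64-encoded, not encrypted, which is why `base64 -d` recovers the password: anyone allowed to read the Secret can read the credentials. A sketch that decodes both fields of the NiFi admin Secret; `b64_decode` and `show_nifi_admin` are hypothetical helpers, `base64 -d` is the GNU coreutils spelling, and `KUBECTL` can point at a stub for testing.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: print the decoded username and password of a Secret.
# KUBECTL can be overridden with a stub command for offline testing.
KUBECTL="${KUBECTL:-kubectl}"

# Decode one base64 value (Secret .data fields are base64, not encrypted).
b64_decode() {
  printf '%s' "$1" | base64 -d
}

show_nifi_admin() {
  local secret="${1:-nifi-admin-credentials-simple}" key val
  for key in username password; do
    val="$($KUBECTL get secret "$secret" -o jsonpath="{.data.$key}")"
    printf '%s=%s\n' "$key" "$(b64_decode "$val")"
  done
}
```

Usage: `show_nifi_admin` prints `username=admin` and `password=AdminPassword` for the Secret created above.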

Log in to NiFi and explore its features.

🔥 CleanUp

Clean up the deployed resources.

    # Delete Apache NiFi
    kubectl delete nificluster simple-nifi && kubectl delete zookeeperznode simple-nifi-znode

    # Delete kafka-ui
    helm uninstall kafka-ui

    # Delete Apache Kafka
    kubectl delete kafkacluster simple-kafka && kubectl delete zookeeperznode simple-kafka-znode

    # Delete Apache ZooKeeper
    kubectl delete zookeepercluster simple-zk && kubectl delete zookeeperznode simple-zk-znode

    # Delete secrets and PVCs
    kubectl delete secret nifi-admin-credentials-simple nifi-sensitive-property-key secret-provisioner-tls-ca
    kubectl delete pvc --all

    # Remove the operators
    stackablectl operator uninstall nifi kafka zookeeper secret commons

    # Check the remaining resources
    kubectl get-all -n stackable-operators