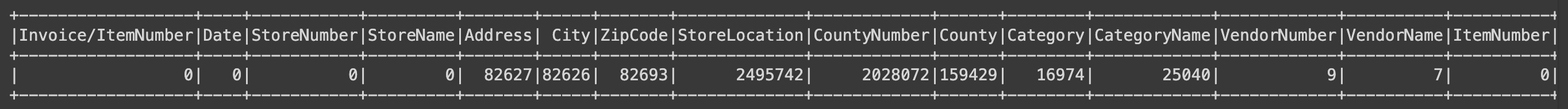

1. Finding missing values

1.1 Using pyspark.sql.functions

pyspark.sql.functions provides built-in standard functions for working with DataFrames and SQL queries.

from pyspark.sql import functions as F

df.select(

[F.count(F.when(F.isnull(c), c)).alias(c) for c in df.columns]

).show()

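1) select

- Selects specific columns (or column expressions)

2) count

- Counts the rows of a DataFrame; as the aggregate F.count(column), it counts only the non-null values

3) when

- Builds an if-style conditional expression on a DataFrame

- F.when(condition, value returned when the condition is True).otherwise(value returned when it is not); without otherwise, rows that do not match yield null

4) isnull

- Returns True when the value is null

5) alias

- Sets the name of the newly computed column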

2. Removing columns with missing values

1) filter

- Keeps only the rows for which the condition evaluates to True

- where is an alias with the same behavior

# filter(condition) / where(condition)

df.filter(F.col("StoreLocation").isNull()).show()

df.filter(F.col("CountyNumber").isNull()).filter(F.col("County").isNotNull()).count()

In the PySpark DataFrame API, code can be written by chaining transformations one after another, as above. It returns the same result as the example below.

df.filter(

(F.col("CountyNumber").isNull())

& (F.col("County").isNotNull())

).count()

2) drop

- Returns a DataFrame without the specified columns

df = df.drop("StoreLocation", "CountyNumber")

df.printSchema()

# Output:

# root

# |-- Invoice/ItemNumber: string (nullable = true)

# |-- Date: string (nullable = true)

# |-- StoreNumber: integer (nullable = true)

# |-- StoreName: string (nullable = true)

# |-- Address: string (nullable = true)

# |-- City: string (nullable = true)

# |-- ZipCode: string (nullable = true)

# |-- County: string (nullable = true)

# |-- CategoryName: string (nullable = true)

3. Removing rows with missing values

region_cols = ["Address", "City", "ZipCode", "County"]

df.select(

[F.count(F.when(F.isnull(c), c)).alias(c) for c in region_cols] # use df.columns to check every column

).show()

# Output:

# +-------+-----+-------+------+

# |Address| City|ZipCode|County|

# +-------+-----+-------+------+

# |  82627|82626|  82693|159429|

# +-------+-----+-------+------+

The margin is small, but City has the fewest missing values, at 82,626.

# Keep only the rows where City is not null

df = df.filter(F.col("City").isNotNull())

# Drop the Address, ZipCode, and County columns

df = df.drop("Address", "ZipCode", "County")

df.printSchema()

# Output:

# root

# |-- Invoice/ItemNumber: string (nullable = true)

# |-- Date: string (nullable = true)

# |-- StoreNumber: integer (nullable = true)

# |-- StoreName: string (nullable = true)

# |-- City: string (nullable = true)

# |-- Category: integer (nullable = true)

# |-- CategoryName: string (nullable = true)