Attention Is All You Need, NeurIPS 2017

Transformer

Goal

Solve sequence modeling and transduction problems well

Challenge (Motivation)

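Constraint of sequential computation: recurrent models compute hidden states one position at a time, which prevents parallelization within a training example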

Solution

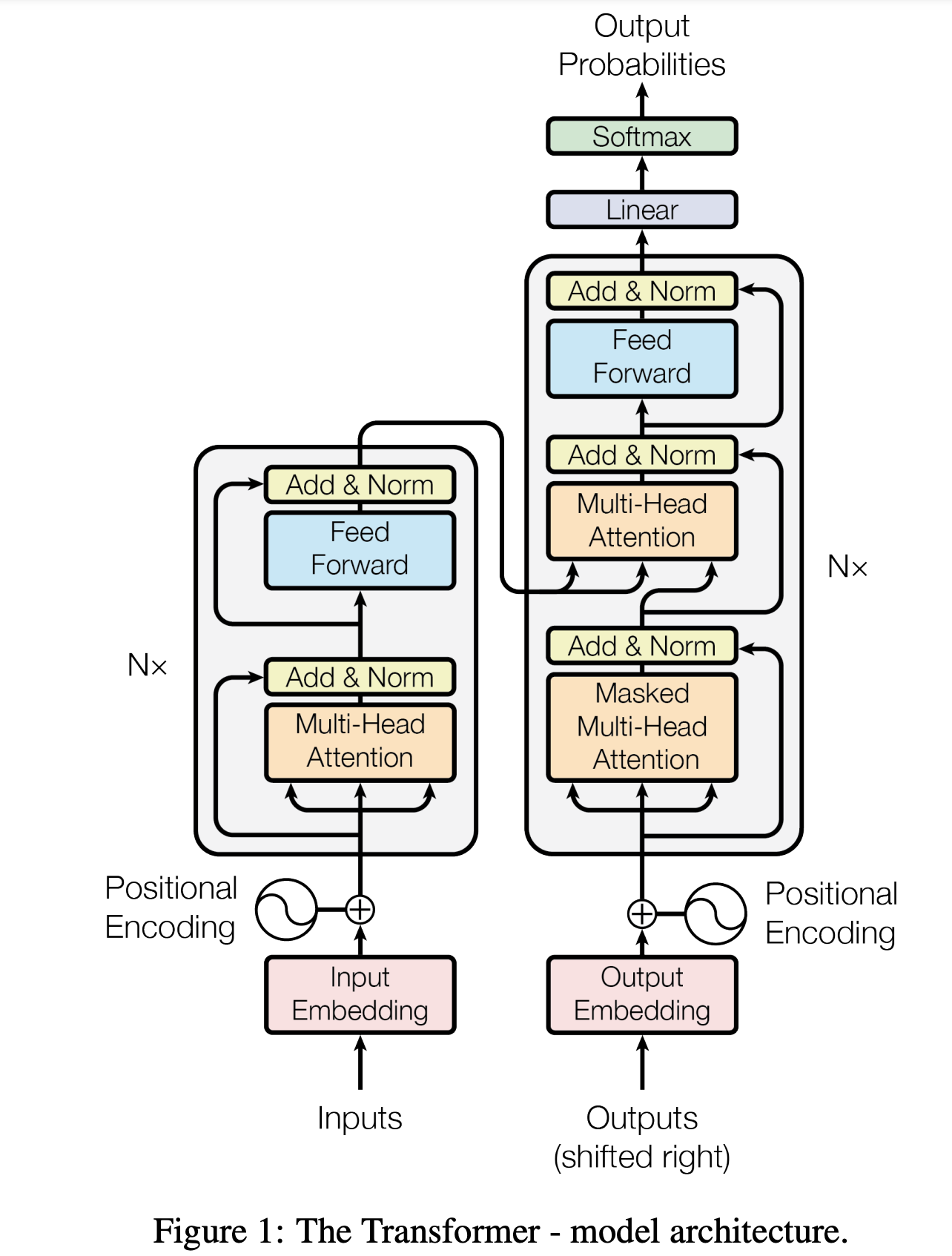

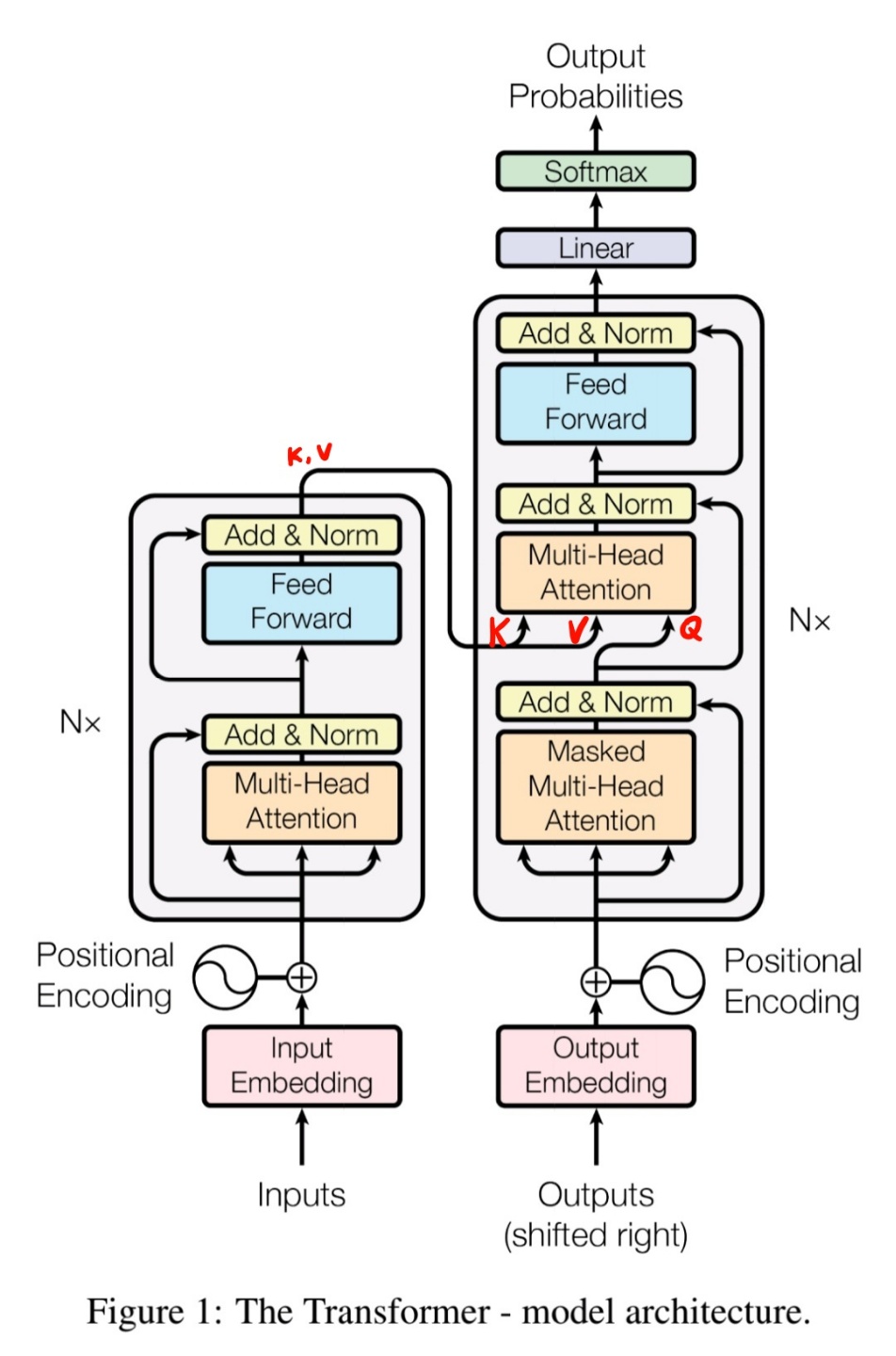

- Based entirely on the self-attention mechanism (discards recurrence and convolutions)

- Adds (relative or absolute) positional information about the sequence to the input embeddings

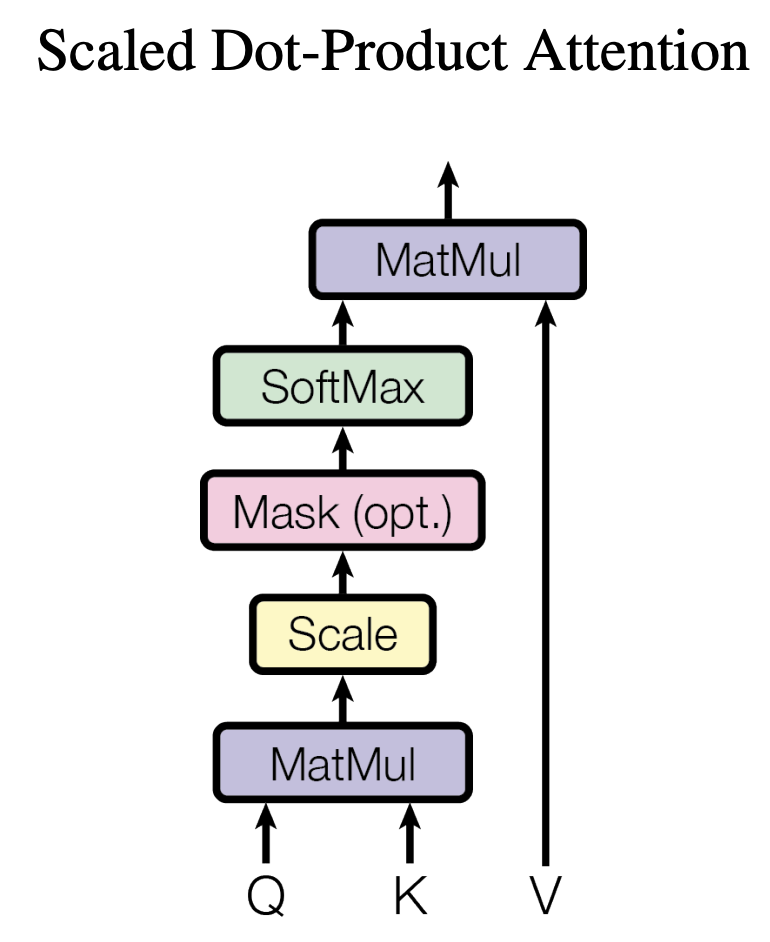

Method

Scaled dot-product QKV multi-head attention
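
The core operation is Attention(Q, K, V) = softmax(QK^T / sqrt(d_k)) V. The following is a minimal sketch of a single attention head, written with plain Python lists so it has no dependencies. The function names `softmax` and `scaled_dot_product_attention` are illustrative choices, not from the paper. Multi-head attention additionally applies h learned linear projections to Q, K, and V, runs this operation on each projection in parallel, then concatenates and projects the results; those learned projections are omitted here.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Single-head attention on lists of row vectors.

    Q: n_q rows of length d_k, K: n_k rows of length d_k,
    V: n_k rows of length d_v.
    Returns (output, weights): output has n_q rows of length d_v,
    weights has n_q rows of length n_k.
    """
    d_k = len(K[0])
    scale = 1.0 / math.sqrt(d_k)  # keeps dot products from growing with d_k
    d_v = len(V[0])
    output, weights = [], []
    for q in Q:
        # Scaled dot product of this query with every key.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in K]
        w = softmax(scores)
        weights.append(w)
        # Weighted sum of the value vectors.
        output.append([sum(wj * v[c] for wj, v in zip(w, V)) for c in range(d_v)])
    return output, weights
```

Each row of `weights` sums to 1, so every output row is a convex combination of the value vectors. When all keys are identical, the weights are uniform and the output is the mean of the values.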

Positional Encoding
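
The paper's fixed sinusoidal encoding is PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). The sketch below builds that table with the standard library only. The function name `positional_encoding` and the parameter name `max_len` are illustrative assumptions; `d_model` follows the paper's notation.

```python
import math


def positional_encoding(max_len, d_model):
    """Return a max_len x d_model table of sinusoidal position encodings."""
    pe = [[0.0] * d_model for _ in range(max_len)]
    for pos in range(max_len):
        # i walks over the even dimension indices, i.e. i = 2k in the formula.
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:  # guard for odd d_model
                pe[pos][i + 1] = math.cos(angle)
    return pe
```

The resulting rows are added element-wise to the input embeddings, so the embedding dimension must equal `d_model`.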

Experiment

Evaluation on translation tasks: BLEU

Comparison of training cost

source:

Vaswani, Ashish, et al. "Attention is all you need." Advances in neural information processing systems. 2017.

https://proceedings.neurips.cc/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf