Cloud Native PostgreSQL

1. PostgreSQL

1.1 PostgreSQL 이란?

PostgreSQL은 오픈 소스 객체-관계형 데이터베이스 시스템(ORDBMS)으로, Enterprise급 DBMS의 기능과 차세대 DBMS에서나 볼 수 있을 법한 기능들을 제공한다.약 20여년의 오랜 역사를 갖는 PostgreSQL은 다른 관계형 데이터베이스 시스템과 달리 연산자, 복합 자료형, 집계 함수, 자료형 변환자, 확장 기능 등 다양한 데이터베이스 객체를 사용자가 임의로 만들 수 있는 기능을 제공함으로써 마치 새로운 하나의 프로그래밍 언어처럼 무한한 기능을 손쉽게 구현할 수 있다.

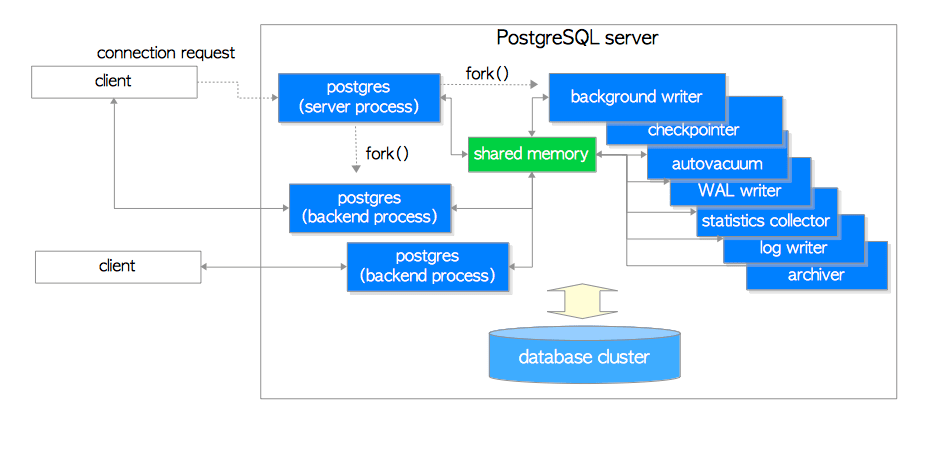

1.2 PostgreSQL의 구조

PostgreSQL은 클라이언트/서버 모델을 사용한다. 서버는 데이터베이스 파일들을 관리하며, 클라이언트 애플리케이션으로부터 들어오는 연결을 수용하고, 클라이언트를 대신하여 데이터베이스 액션을 수행한다. 서버는 다중 클라이언트 연결을 처리할 수 있는데, 서버는 클라이언트의 연결 요청이 오면 각 커넥션에 대해 새로운 프로세스를 fork한다. 그리고 클라이언트는 기존 서버와의 간섭 없이 새로 생성된 서버 프로세스와 통신하게 된다.

2. PostgreSQL 설치

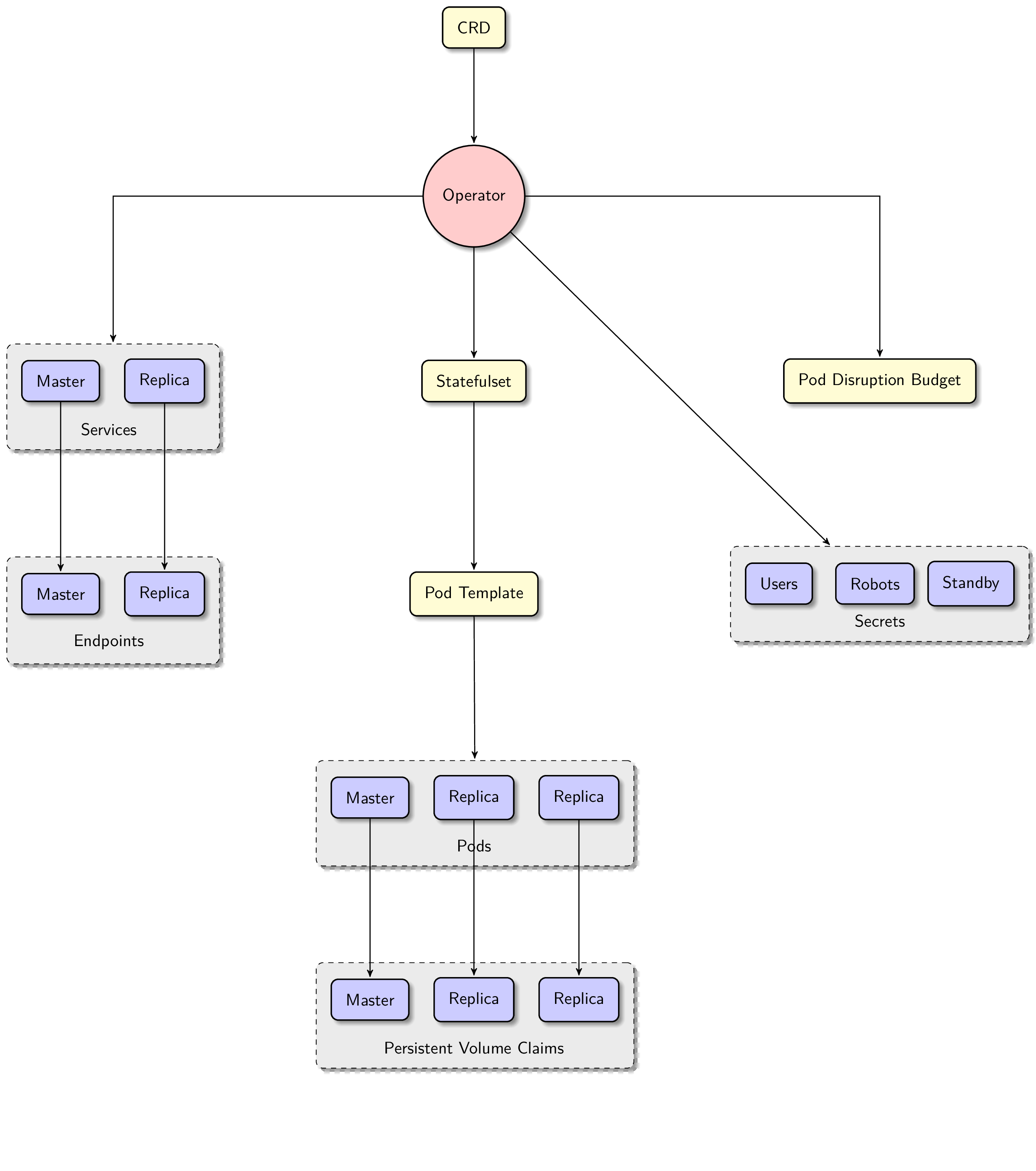

PostgreSQL 클러스터 배포 요약 다이어그램

사전 준비 사항

- K8S 클러스터 (Master Node 1대, Worker Node 2대)

2.1 PostgreSQL operator 설치

Operator 설치 과정

# Install Operator Lifecycle Manager (OLM), a tool to help manage the Operators running on your cluster.

curl -sL https://github.com/operator-framework/operator-lifecycle-manager/releases/download/v0.25.0/install.sh | bash -s v0.25.0

root@rook-01:~/postgresql# k get all -n olm

NAME READY STATUS RESTARTS AGE

pod/catalog-operator-7d66c6f84b-4hljx 1/1 Running 0 69s

pod/olm-operator-5745b6f788-28c55 1/1 Running 0 69s

pod/operatorhubio-catalog-s5z2n 1/1 Running 0 58s

pod/packageserver-8484876764-ttbkc 1/1 Running 0 57s

pod/packageserver-8484876764-xnqj7 1/1 Running 0 57s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/operatorhubio-catalog ClusterIP 10.111.74.135 <none> 50051/TCP 58s

service/packageserver-service ClusterIP 10.96.41.28 <none> 5443/TCP 58s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/catalog-operator 1/1 1 1 69s

deployment.apps/olm-operator 1/1 1 1 69s

deployment.apps/packageserver 2/2 2 2 58s

NAME DESIRED CURRENT READY AGE

replicaset.apps/catalog-operator-7d66c6f84b 1 1 1 69s

replicaset.apps/olm-operator-5745b6f788 1 1 1 69s

replicaset.apps/packageserver-8484876764 2 2 2 58s

Operator CRD 설치

# Install the operator by running the following command

# This Operator will be installed in the "operators" namespace and will be usable from all namespaces in the cluster.

curl -s -O https://operatorhub.io/install/cloudnative-pg.yaml

root@rook-01:~/postgresql# kubectl get all -n operators

NAME READY STATUS RESTARTS AGE

pod/cnpg-controller-manager-7c5cd68975-sjnsk 1/1 Running 0 65s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/cnpg-controller-manager-service ClusterIP 10.99.223.89 <none> 443/TCP 65s

service/cnpg-webhook-service ClusterIP 10.102.108.135 <none> 443/TCP 66s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/cnpg-controller-manager 1/1 1 1 65s

NAME DESIRED CURRENT READY AGE

replicaset.apps/cnpg-controller-manager-7c5cd68975 1 1 1 65s

root@rook-01:~/postgresql# kubectl get crd | grep cnpg

backups.postgresql.cnpg.io 2023-11-03T08:32:37Z

clusters.postgresql.cnpg.io 2023-11-03T08:32:37Z

poolers.postgresql.cnpg.io 2023-11-03T08:32:37Z

scheduledbackups.postgresql.cnpg.io 2023-11-03T08:32:37Z

2.2 PostgreSQL Cluster 배포

이제 연산자가 설치되었으므로 가용성이 높은 PostgreSQL 클러스터를 생성해 보겠습니다. 또한 애플리케이션 데이터베이스를 부트스트랩하고 일부 데이터베이스 사용자를 추가하며 일부 구성 옵션을 추가합니다. 이 모든 작업을 단일 yaml 매니페스트에서 수행할 수 있으므로 클러스터 설정이 간편해집니다.

클러스터 설치 Deploy a PostgreSQL cluster : 버전 15.3 (추후 15.4 롤링 업데이트 예정)

3대 파드 구성(프라이머리 1대, 스탠드바이 2대) , config parameters 설정, pg_hba 설정, bootstrap 설정, 파드 모니터 설정

cat <<EOT> mycluster1.yaml

# Example of PostgreSQL cluster

apiVersion: postgresql.cnpg.io/v1

kind: Cluster

metadata:

name: mycluster

spec:

imageName: ghcr.io/cloudnative-pg/postgresql:15.3

instances: 3

storage:

size: 3Gi

postgresql:

parameters:

max_worker_processes: "40"

timezone: "Asia/Seoul"

pg_hba:

- host all postgres all trust

primaryUpdateStrategy: unsupervised

enableSuperuserAccess: true

bootstrap:

initdb:

database: app

encoding: UTF8

localeCType: C

localeCollate: C

owner: app

monitoring:

enablePodMonitor: true

EOT

kubectl apply -f mycluster1.yaml

root@rook-01:~/postgresql# kubectl get pod,pvc,pv,svc,ep

NAME READY STATUS RESTARTS AGE

pod/mycluster-1 1/1 Running 0 4m31s

pod/mycluster-2 1/1 Running 0 2m13s

pod/mycluster-3 1/1 Running 0 45s

pod/nfs-pod-provisioner-867f957649-b47l2 1/1 Running 0 6d23h

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/mycluster-1 Bound pvc-87262721-2b79-404f-a522-fa0dc86bd3c6 3Gi RWO nfs-storageclass 7m35s

persistentvolumeclaim/mycluster-2 Bound pvc-a9cfc0b2-eaa1-43f2-b277-382fb220235d 3Gi RWO nfs-storageclass 4m20s

persistentvolumeclaim/mycluster-3 Bound pvc-f8d4e5cf-7630-4805-9b07-526fbb532513 3Gi RWO nfs-storageclass 62s

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-1932857f-49bf-41e0-b94c-c7187fb68a66 2Gi RWO Delete Terminating mysql-cluster/datadir-mycluster-2 local-path 11d

persistentvolume/pvc-2fd5b199-4b2f-4ff2-9941-eacd1623f182 2Gi RWO Delete Bound mysql-cluster/datadir-mycluster-0 local-path 11d

persistentvolume/pvc-87262721-2b79-404f-a522-fa0dc86bd3c6 3Gi RWO Delete Bound default/mycluster-1 nfs-storageclass 7m35s

persistentvolume/pvc-a9cfc0b2-eaa1-43f2-b277-382fb220235d 3Gi RWO Delete Bound default/mycluster-2 nfs-storageclass 4m20s

persistentvolume/pvc-c746bca6-2fd1-4d0d-a7a7-00633d5d50f1 2Gi RWO Delete Bound mysql-cluster/datadir-mycluster-1 local-path 11d

persistentvolume/pvc-f8d4e5cf-7630-4805-9b07-526fbb532513 3Gi RWO Delete Bound default/mycluster-3 nfs-storageclass 61s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 16d

service/mycluster-r ClusterIP 10.111.159.145 <none> 5432/TCP 7m35s

service/mycluster-ro ClusterIP 10.98.119.223 <none> 5432/TCP 7m35s

service/mycluster-rw ClusterIP 10.96.37.127 <none> 5432/TCP 7m35s

NAME ENDPOINTS AGE

endpoints/k8s-sigs.io-nfs-subdir-external-provisioner <none> 6d23h

endpoints/kubernetes 172.19.187.140:6443 16d

endpoints/mycluster-r 10.244.125.198:5432,10.244.125.219:5432,10.244.155.112:5432 7m35s

endpoints/mycluster-ro 10.244.125.219:5432,10.244.155.112:5432 7m35s

endpoints/mycluster-rw 10.244.125.198:5432 7m35s

cpng 플러그인 설치

- cpng 플러그인을 통해서 클러스터의 다양한 정보를 확인 가능

- 관리의 편리를 위해서 CNPG 플러그인을 설치하고, kubectl cnpg 명령어를 사용해 배포가 잘되었는지 확인합니다.

- 아래처럼 아주 깔끔하게 현재 상태와 role까지 확인되며 Streaming 상태 체크도 가능합니다.

curl -sSfL https://github.com/cloudnative-pg/cloudnative-pg/raw/main/hack/install-cnpg-plugin.sh | sudo sh -s -- -b /usr/local/bin

kubectl krew install cnpg

kubectl cnpg status mycluster

root@rook-01:~/postgresql# kubectl cnpg status mycluster

Cluster Summary

Name: mycluster

Namespace: default

System ID: 7297152618599587859

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:15.3

Primary instance: mycluster-1

Primary start time: 2023-11-03 08:39:21 +0000 UTC (uptime 4m1s)

Status: Cluster in healthy state

Instances: 3

Ready instances: 3

Current Write LSN: 0/6000060 (Timeline: 1 - WAL File: 000000010000000000000006)

Certificates Status

Certificate Name Expiration Date Days Left Until Expiration

---------------- --------------- --------------------------

mycluster-ca 2024-02-01 08:31:14 +0000 UTC 89.99

mycluster-replication 2024-02-01 08:31:14 +0000 UTC 89.99

mycluster-server 2024-02-01 08:31:14 +0000 UTC 89.99

Continuous Backup status

Not configured

Streaming Replication status

Replication Slots Enabled

Name Sent LSN Write LSN Flush LSN Replay LSN Write Lag Flush Lag Replay Lag State Sync State Sync Priority Replication Slot

---- -------- --------- --------- ---------- --------- --------- ---------- ----- ---------- ------------- ----------------

mycluster-2 0/6000060 0/6000060 0/6000060 0/6000060 00:00:00 00:00:00 00:00:00 streaming async 0 active

mycluster-3 0/6000060 0/6000060 0/6000060 0/6000060 00:00:00 00:00:00 00:00:00 streaming async 0 active

Unmanaged Replication Slot Status

No unmanaged replication slots found

Instances status

Name Database Size Current LSN Replication role Status QoS Manager Version Node

---- ------------- ----------- ---------------- ------ --- --------------- ----

mycluster-1 29 MB 0/6000060 Primary OK BestEffort 1.21.0 rook-03

mycluster-2 29 MB 0/6000060 Standby (async) OK BestEffort 1.21.0 rook-01

mycluster-3 29 MB 0/6000060 Standby (async) OK BestEffort 1.21.0 rook-03

기본 리소스들 확인

root@rook-01:~/postgresql# kubectl get pod,deploy

NAME READY STATUS RESTARTS AGE

pod/mycluster-1 1/1 Running 0 6m34s

pod/mycluster-2 1/1 Running 0 4m16s

pod/mycluster-3 1/1 Running 0 2m48s

root@rook-01:~/postgresql# kubectl get svc,ep,endpointslices -l cnpg.io/cluster=mycluster

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/mycluster-r ClusterIP 10.111.159.145 <none> 5432/TCP 10m

service/mycluster-ro ClusterIP 10.98.119.223 <none> 5432/TCP 10m

service/mycluster-rw ClusterIP 10.96.37.127 <none> 5432/TCP 10m

NAME ENDPOINTS AGE

endpoints/mycluster-r 10.244.125.198:5432,10.244.125.219:5432,10.244.155.112:5432 10m

endpoints/mycluster-ro 10.244.125.219:5432,10.244.155.112:5432 10m

endpoints/mycluster-rw 10.244.125.198:5432 10m

NAME ADDRESSTYPE PORTS ENDPOINTS AGE

endpointslice.discovery.k8s.io/mycluster-r-b99dn IPv4 5432 10.244.125.198,10.244.155.112,10.244.125.219 10m

endpointslice.discovery.k8s.io/mycluster-ro-jzj57 IPv4 5432 10.244.155.112,10.244.125.219 10m

endpointslice.discovery.k8s.io/mycluster-rw-j7t4p IPv4 5432 10.244.125.198 10m

root@rook-01:~/postgresql# kubectl get cm,secret

NAME DATA AGE

configmap/cnpg-default-monitoring 1 11m

configmap/kube-root-ca.crt 1 16d

NAME TYPE DATA AGE

secret/mycluster-app kubernetes.io/basic-auth 9 11m

secret/mycluster-ca Opaque 2 11m

secret/mycluster-replication kubernetes.io/tls 2 11m

secret/mycluster-server kubernetes.io/tls 2 11m

secret/mycluster-superuser kubernetes.io/basic-auth 9 11m

root@rook-01:~/postgresql# kubectl get pdb

NAME MIN AVAILABLE MAX UNAVAILABLE ALLOWED DISRUPTIONS AGE

mycluster 1 N/A 1 11m

mycluster-primary 1 N/A 0 11m

PV/PVC 확인

root@rook-01:~/postgresql# kubectl get pvc,pv

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/mycluster-1 Bound pvc-87262721-2b79-404f-a522-fa0dc86bd3c6 3Gi RWO nfs-storageclass 10m

persistentvolumeclaim/mycluster-2 Bound pvc-a9cfc0b2-eaa1-43f2-b277-382fb220235d 3Gi RWO nfs-storageclass 6m59s

persistentvolumeclaim/mycluster-3 Bound pvc-f8d4e5cf-7630-4805-9b07-526fbb532513 3Gi RWO nfs-storageclass 3m41s

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-1932857f-49bf-41e0-b94c-c7187fb68a66 2Gi RWO Delete Terminating mysql-cluster/datadir-mycluster-2 local-path 11d

persistentvolume/pvc-2fd5b199-4b2f-4ff2-9941-eacd1623f182 2Gi RWO Delete Bound mysql-cluster/datadir-mycluster-0 local-path 11d

persistentvolume/pvc-87262721-2b79-404f-a522-fa0dc86bd3c6 3Gi RWO Delete Bound default/mycluster-1 nfs-storageclass 10m

persistentvolume/pvc-a9cfc0b2-eaa1-43f2-b277-382fb220235d 3Gi RWO Delete Bound default/mycluster-2 nfs-storageclass 6m59s

persistentvolume/pvc-c746bca6-2fd1-4d0d-a7a7-00633d5d50f1 2Gi RWO Delete Bound mysql-cluster/datadir-mycluster-1 local-path 11d

persistentvolume/pvc-f8d4e5cf-7630-4805-9b07-526fbb532513 3Gi RWO Delete Bound default/mycluster-3 nfs-storageclass 3m40s3. CloudNativePG 기본 사용

클러스터 설치가 되었으니 이제 작동이 잘되는지 확인해보자. 여기서는 PostgreSQL Client를 파드로 설치해서 접속하고 테스트한다.

myclient라는 파드를 생성하고, 해당 pod에서 mycluster에 접근을 시도하면 적절하게 분산접속이 가능한지 테스트할 수 있습니다. 저는 아래와 같은 결과 값을 획득하였습니다.

3.1 PostgreSQL 접속

# 2개의 자격 증명이 저장된 secret 확인

root@rook-01:~# kubectl get secret -l cnpg.io/cluster=mycluster

NAME TYPE DATA AGE

mycluster-app kubernetes.io/basic-auth 9 130m

mycluster-superuser kubernetes.io/basic-auth 9 130m

# superuser 계정명

root@rook-01:~# kubectl get secrets mycluster-superuser -o jsonpath={.data.username} | base64 -d ;echo

postgres

# superuser 계정 암호

root@rook-01:~# kubectl get secrets mycluster-superuser -o jsonpath={.data.password} | base64 -d ;echo

f7QN4PhVue6IWGxJuInuPprh2NS9GGoHFfeula6KQmzwxVmKmah1IbGFeUF4y80R

root@rook-01:~# kubectl get secrets mycluster-superuser -o jsonpath={.data.pgpass} | base64 -d

mycluster-rw:5432:*:postgres:f7QN4PhVue6IWGxJuInuPprh2NS9GGoHFfeula6KQmzwxVmKmah1IbGFeUF4y80R

# app 계정명

root@rook-01:~# kubectl get secrets mycluster-app -o jsonpath={.data.username} | base64 -d ;echo

app

# app 계정 암호

root@rook-01:~# kubectl get secrets mycluster-app -o jsonpath={.data.password} | base64 -d ;echo

CFNI85MbYlQwy3ehKfpIq7tVDMibjxA4T8QQwaxfANqc5FjFo2TKrg4LzzwSB3ei

# app 계정 암호 변수 지정

root@rook-01:~# AUSERPW=$(kubectl get secrets mycluster-app -o jsonpath={.data.password} | base64 -d)

# myclient 파드 3대 배포 : envsubst 활용

## PODNAME=myclient1 VERSION=15.3.0 envsubst < myclient.yaml | kubectl apply -f -

curl -s https://raw.githubusercontent.com/gasida/DOIK/main/5/myclient-new.yaml -o myclient.yaml

for ((i=1; i<=3; i++)); do PODNAME=myclient$i VERSION=15.3.0 envsubst < myclient.yaml | kubectl apply -f - ; done

# [myclient1] superuser 계정으로 mycluster-rw 서비스 접속

root@rook-01:~/postgresql# kubectl exec -it myclient1 -- psql -U postgres -h mycluster-rw -p 5432 --variable=HISTFILE=/tmp/.psql_history

psql (15.3, server 15.4 (Debian 15.4-2.pgdg110+1))

SSL connection (protocol: TLSv1.3, cipher: TLS_AES_256_GCM_SHA384, compression: off)

Type "help" for help.

postgres=# \conninfo

You are connected to database "postgres" as user "postgres" on host "mycluster-rw" (address "10.96.37.127") at port "5432".

SSL connection (protocol: TLSv1.3, cipher: TLS_AES_256_GCM_SHA384, compression: off)

postgres=# \l

List of databases

Name | Owner | Encoding | Collate | Ctype | ICU Locale | Locale Provider | Access privileges

-----------+----------+----------+---------+-------+------------+-----------------+-----------------------

app | app | UTF8 | C | C | | libc |

postgres | postgres | UTF8 | C | C | | libc |

template0 | postgres | UTF8 | C | C | | libc | =c/postgres +

| | | | | | | postgres=CTc/postgres

template1 | postgres | UTF8 | C | C | | libc | =c/postgres +

| | | | | | | postgres=CTc/postgres

test | postgres | UTF8 | C | C | | libc |

(5 rows)

postgres=# SELECT * FROM pg_timezone_names WHERE name = current_setting('TIMEZONE');

name | abbrev | utc_offset | is_dst

------------+--------+------------+--------

Asia/Seoul | KST | 09:00:00 | f

(1 row)

postgres=# \q

# [myclient1] superuser 계정으로 mycluster-rw 서비스 접속하여 데이터베이스 리스트 조회

root@rook-01:~/postgresql# kubectl exec -it myclient1 -- psql -U postgres -h mycluster-rw -p 5432 -l

List of databases

Name | Owner | Encoding | Collate | Ctype | ICU Locale | Locale Provider | Access privileges

-----------+----------+----------+---------+-------+------------+-----------------+-----------------------

app | app | UTF8 | C | C | | libc |

postgres | postgres | UTF8 | C | C | | libc |

template0 | postgres | UTF8 | C | C | | libc | =c/postgres +

| | | | | | | postgres=CTc/postgres

template1 | postgres | UTF8 | C | C | | libc | =c/postgres +

| | | | | | | postgres=CTc/postgres

test | postgres | UTF8 | C | C | | libc |

(5 rows)

# [myclient1] app 계정으로 mycluster-rw 서비스 접속하여 app 데이터베이스 이동 >> app 계정 암호 직접 입력

root@rook-01:~/postgresql# kubectl exec -it myclient1 -- psql -U app -h mycluster-rw -p 5432 -d app -W --variable=HISTFILE=/tmp/.psql_history

Password:

psql: error: connection to server at "mycluster-rw" (10.96.37.127), port 5432 failed: FATAL: password authentication failed for user "app"

connection to server at "mycluster-rw" (10.96.37.127), port 5432 failed: FATAL: password authentication failed for user "app"

command terminated with exit code 2

root@rook-01:~/postgresql# kubectl exec -it myclient1 -- psql -U app -h mycluster-rw -p 5432 -d app -W --variable=HISTFILE=/tmp/.psql_history

Password:

psql (15.3, server 15.4 (Debian 15.4-2.pgdg110+1))

SSL connection (protocol: TLSv1.3, cipher: TLS_AES_256_GCM_SHA384, compression: off)

Type "help" for help.

app=> \conninfo

You are connected to database "app" as user "app" on host "mycluster-rw" (address "10.96.37.127") at port "5432".

SSL connection (protocol: TLSv1.3, cipher: TLS_AES_256_GCM_SHA384, compression: off)

app=> \l

List of databases

Name | Owner | Encoding | Collate | Ctype | ICU Locale | Locale Provider | Access privileges

-----------+----------+----------+---------+-------+------------+-----------------+-----------------------

app | app | UTF8 | C | C | | libc |

postgres | postgres | UTF8 | C | C | | libc |

template0 | postgres | UTF8 | C | C | | libc | =c/postgres +

| | | | | | | postgres=CTc/postgres

template1 | postgres | UTF8 | C | C | | libc | =c/postgres +

| | | | | | | postgres=CTc/postgres

test | postgres | UTF8 | C | C | | libc |

(5 rows)

app=> \dt

Did not find any relations.

app=> \q4. CloudNativePG 장애 테스트

4.1 장애 테스트를 위한 사전 준비

# myclient 파드 3대 배포 : envsubst 활용

## PODNAME=myclient1 VERSION=15.3.0 envsubst < myclient.yaml | kubectl apply -f -

curl -s https://raw.githubusercontent.com/gasida/DOIK/main/5/myclient-new.yaml -o myclient.yaml

root@rook-01:~/postgresql# for ((i=1; i<=3; i++)); do PODNAME=myclient$i VERSION=15.3.0 envsubst < myclient.yaml | kubectl apply -f - ; done

pod/myclient1 created

pod/myclient2 created

pod/myclient3 created

# 파드IP 변수 지정

POD1=$(kubectl get pod mycluster-1 -o jsonpath={.status.podIP})

POD2=$(kubectl get pod mycluster-2 -o jsonpath={.status.podIP})

POD3=$(kubectl get pod mycluster-3 -o jsonpath={.status.podIP})

# query.sql

curl -s -O https://raw.githubusercontent.com/gasida/DOIK/main/5/query.sql

cat query.sql ;echo

CREATE DATABASE test;

\c test;

CREATE TABLE t1 (c1 INT PRIMARY KEY, c2 TEXT NOT NULL);

INSERT INTO t1 VALUES (1, 'Luis');

root@rook-01:~/postgresql# kubectl cp query.sql myclient1:/tmp

root@rook-01:~/postgresql# kubectl exec -it myclient1 -- psql -U postgres -h mycluster-rw -p 5432 -f /tmp/query.sql

root@rook-01:~/postgresql# kubectl exec -it myclient1 -- psql -U postgres -h mycluster-ro -p 5432 -d test -c "SELECT COUNT(*) FROM t1"

count

-------

1

(1 row)

root@rook-01:~/postgresql# kubectl exec -it myclient1 -- psql -U postgres -h mycluster-ro -p 5432 -d test -c "SELECT * FROM t1"

c1 | c2

----+------

1 | Luis

(1 row)

root@rook-01:~/postgresql# kubectl exec -it myclient1 -- psql -U postgres -h mycluster-rw -p 5432 -d test -c "INSERT INTO t1 VALUES (2, 'Luis2');"

INSERT 0 1

root@rook-01:~/postgresql# kubectl exec -it myclient1 -- psql -U postgres -h mycluster-ro -p 5432 -d test -c "SELECT * FROM t1"

c1 | c2

----+-------

1 | Luis

2 | Luis2

(2 rows)

for ((i=3; i<=100; i++)); do kubectl exec -it myclient1 -- psql -U postgres -h mycluster-rw -p 5432 -d test -c "INSERT INTO t1 VALUES ($i, 'Luis$i');";echo; done

root@rook-01:~/postgresql# kubectl exec -it myclient1 -- psql -U postgres -h mycluster-ro -p 5432 -d test -c "SELECT COUNT(*) FROM t1"

count

-------

100

(1 row)

4.2 [장애1] 프라이머리 파드(인스턴스) 1대 강제 삭제 및 동작 확인

for ((i=301; i<=10000; i++)); do kubectl exec -it myclient2 -- psql -U postgres -h mycluster-rw -p 5432 -d test -c "INSERT INTO t1 VALUES ($i, 'Luis$i');";echo; done

kubectl delete pvc/mycluster-1 pod/mycluster-1

root@rook-01:~/postgresql# kubectl cnpg status mycluster

Cluster Summary

Name: mycluster

Namespace: default

System ID: 7297152618599587859

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:15.3

Primary instance: mycluster-2

Primary start time: 2023-11-03 09:10:03 +0000 UTC (uptime 2s)

Status: Creating a new replica Creating replica mycluster-4-join

Instances: 3

Ready instances: 2

Current Write LSN: 0/A003E40 (Timeline: 2 - WAL File: 00000002000000000000000A)

Certificates Status

Certificate Name Expiration Date Days Left Until Expiration

---------------- --------------- --------------------------

mycluster-ca 2024-02-01 08:31:14 +0000 UTC 89.97

mycluster-replication 2024-02-01 08:31:14 +0000 UTC 89.97

mycluster-server 2024-02-01 08:31:14 +0000 UTC 89.97

Continuous Backup status

Not configured

Streaming Replication status

Not available yet

Unmanaged Replication Slot Status

No unmanaged replication slots found

Instances status

Name Database Size Current LSN Replication role Status QoS Manager Version Node

---- ------------- ----------- ---------------- ------ --- --------------- ----

mycluster-2 36 MB 0/A003E40 Primary OK BestEffort 1.21.0 rook-01

mycluster-3 36 MB 0/A0000A0 Standby (file based) OK BestEffort 1.21.0 rook-03

root@rook-01:~/postgresql# kubectl get pod -l cnpg.io/cluster=mycluster -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mycluster-2 1/1 Running 0 29m 10.244.155.112 rook-01 <none> <none>

mycluster-3 1/1 Running 0 27m 10.244.125.219 rook-03 <none> <none>

mycluster-4 1/1 Running 0 18s 10.244.140.231 rook-02 <none> <none>

결과

1) Primary가 삭제됨에 따라서, Standby 노드가 Primary로 승격되고 클러스터가 안정화 될 때까지 잠시 자료 입력에 오류가 생깁니다.

2) 그 후 클러스터가 안정화되면 일단 기존에 있는 노드를 통해서 자료 입력이 지속되고, 새로운 노드가 Standby됩니다.

4.3 [장애2] 프라이머리 파드(인스턴스) 가 배포된 노드 1대 drain 설정 및 동작 확인

# 워커노드 drain

# kubectl drain <<노드>> --ignore-daemonsets --delete-emptydir-data

root@rook-01:~/postgresql# kubectl get node

NAME STATUS ROLES AGE VERSION

rook-01 Ready control-plane 16d v1.28.2

rook-02 Ready <none> 16d v1.28.2

rook-03 Ready <none> 16d v1.28.2

root@rook-01:~/postgresql# kubectl drain rook-02 --delete-emptydir-data --force --ignore-daemonsets && kubectl get node -w

node/rook-02 cordoned

Warning: deleting Pods that declare no controller: default/myclient1, default/myclient3; ignoring DaemonSet-managed Pods: kube-system/calico-node-whwv2, kube-system/kube-proxy-zsdlf

evicting pod operators/cnpg-controller-manager-7c5cd68975-sjnsk

evicting pod default/myclient1

evicting pod default/myclient3

evicting pod default/mycluster-4

evicting pod kube-system/calico-kube-controllers-9d57d8f49-662j7

evicting pod kube-system/coredns-5dd5756b68-c8gqh

evicting pod olm/b679bb7b66d805e5fe1b13497531b969e9f6a87668694fa1c6bbaaa84dghwsf

evicting pod olm/catalog-operator-7d66c6f84b-4hljx

evicting pod olm/olm-operator-5745b6f788-28c55

evicting pod olm/operatorhubio-catalog-s5z2n

evicting pod olm/packageserver-8484876764-ttbkc

pod/b679bb7b66d805e5fe1b13497531b969e9f6a87668694fa1c6bbaaa84dghwsf evicted

pod/myclient3 evicted

pod/myclient1 evicted

pod/catalog-operator-7d66c6f84b-4hljx evicted

pod/packageserver-8484876764-ttbkc evicted

pod/olm-operator-5745b6f788-28c55 evicted

pod/operatorhubio-catalog-s5z2n evicted

pod/cnpg-controller-manager-7c5cd68975-sjnsk evicted

I1103 18:14:18.633371 607690 request.go:697] Waited for 1.053924954s due to client-side throttling, not priority and fairness, request: GET:https://172.19.187.140:6443/api/v1/namespaces/default/pods/mycluster-4

pod/mycluster-4 evicted

pod/calico-kube-controllers-9d57d8f49-662j7 evicted

pod/coredns-5dd5756b68-c8gqh evicted

node/rook-02 drained

NAME STATUS ROLES AGE VERSION

rook-01 Ready control-plane 16d v1.28.2

rook-02 Ready,SchedulingDisabled <none> 16d v1.28.2

rook-03 Ready <none> 16d v1.28.2

root@rook-01:~/postgresql# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myclient2 1/1 Running 0 22m 10.244.125.220 rook-03 <none> <none>

mycluster-2 1/1 Running 0 33m 10.244.155.112 rook-01 <none> <none>

mycluster-3 1/1 Running 0 31m 10.244.125.219 rook-03 <none> <none>

mycluster-4 1/1 Running 0 31s 10.244.125.223 rook-03 <none> <none>

nfs-pod-provisioner-867f957649-b47l2 1/1 Running 0 7d 10.244.125.216 rook-03 <none> <none>

root@rook-01:~/postgresql# kubectl cnpg status mycluster

Cluster Summary

Name: mycluster

Namespace: default

System ID: 7297152618599587859

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:15.3

Primary instance: mycluster-2

Primary start time: 2023-11-03 09:10:03 +0000 UTC (uptime 4m57s)

Status: Cluster in healthy state

Instances: 3

Ready instances: 3

Current Write LSN: 0/C061720 (Timeline: 2 - WAL File: 00000002000000000000000C)

Certificates Status

Certificate Name Expiration Date Days Left Until Expiration

---------------- --------------- --------------------------

mycluster-ca 2024-02-01 08:31:14 +0000 UTC 89.97

mycluster-replication 2024-02-01 08:31:14 +0000 UTC 89.97

mycluster-server 2024-02-01 08:31:14 +0000 UTC 89.97

Continuous Backup status

Not configured

Streaming Replication status

Replication Slots Enabled

Name Sent LSN Write LSN Flush LSN Replay LSN Write Lag Flush Lag Replay Lag State Sync State Sync Priority Replication Slot

---- -------- --------- --------- ---------- --------- --------- ---------- ----- ---------- ------------- ----------------

mycluster-3 0/C061720 0/C061720 0/C061720 0/C061720 00:00:00.000859 00:00:00.004233 00:00:00.00426 streaming async 0 active

mycluster-4 0/C061720 0/C061720 0/C061720 0/C061720 00:00:00.000668 00:00:00.00759 00:00:00.007626 streaming async 0 active

Unmanaged Replication Slot Status

No unmanaged replication slots found

Instances status

Name Database Size Current LSN Replication role Status QoS Manager Version Node

---- ------------- ----------- ---------------- ------ --- --------------- ----

mycluster-2 37 MB 0/C061720 Primary OK BestEffort 1.21.0 rook-01

mycluster-4 36 MB 0/C061A00 Standby (async) OK BestEffort 1.21.0 rook-03

mycluster-3 36 MB 0/C0617D8 Standby (async) OK BestEffort 1.21.0 rook-03

root@rook-01:~/postgresql# k get nodes

NAME STATUS ROLES AGE VERSION

rook-01 Ready control-plane 16d v1.28.2

rook-02 Ready,SchedulingDisabled <none> 16d v1.28.2

rook-03 Ready <none> 16d v1.28.2

root@rook-01:~/postgresql# k uncordon rook-02

node/rook-02 uncordoned

root@rook-01:~/postgresql# k get nodes

NAME STATUS ROLES AGE VERSION

rook-01 Ready control-plane 16d v1.28.2

rook-02 Ready <none> 16d v1.28.2

rook-03 Ready <none> 16d v1.28.2

결과

- 기본적인 정책상 1개 이상의 Secondary와 Primary가 필요하기 때문에 node의 drain 상태라도 cnpg가 evict 되지 않는다.

4.4 Primary 파드 변경

root@rook-01:~/postgresql# kubectl cnpg status mycluster

Cluster Summary

Name: mycluster

Namespace: default

System ID: 7297152618599587859

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:15.3

Primary instance: mycluster-2

Primary start time: 2023-11-03 09:10:03 +0000 UTC (uptime 7m8s)

Status: Cluster in healthy state

Instances: 3

Ready instances: 3

Current Write LSN: 0/D029BD8 (Timeline: 2 - WAL File: 00000002000000000000000D)

Certificates Status

Certificate Name Expiration Date Days Left Until Expiration

---------------- --------------- --------------------------

mycluster-ca 2024-02-01 08:31:14 +0000 UTC 89.97

mycluster-replication 2024-02-01 08:31:14 +0000 UTC 89.97

mycluster-server 2024-02-01 08:31:14 +0000 UTC 89.97

Continuous Backup status

Not configured

Streaming Replication status

Replication Slots Enabled

Name Sent LSN Write LSN Flush LSN Replay LSN Write Lag Flush Lag Replay Lag State Sync State Sync Priority Replication Slot

---- -------- --------- --------- ---------- --------- --------- ---------- ----- ---------- ------------- ----------------

mycluster-3 0/D029BD8 0/D029BD8 0/D029BD8 0/D029BD8 00:00:00.000502 00:00:00.006392 00:00:00.006414 streaming async 0 active

mycluster-4 0/D029BD8 0/D029BD8 0/D029BD8 0/D029BD8 00:00:00.000648 00:00:00.005916 00:00:00.005942 streaming async 0 active

Unmanaged Replication Slot Status

No unmanaged replication slots found

Instances status

Name Database Size Current LSN Replication role Status QoS Manager Version Node

---- ------------- ----------- ---------------- ------ --- --------------- ----

mycluster-2 37 MB 0/D029BD8 Primary OK BestEffort 1.21.0 rook-01

mycluster-4 36 MB 0/D029D48 Standby (async) OK BestEffort 1.21.0 rook-03

mycluster-3 36 MB 0/D029C90 Standby (async) OK BestEffort 1.21.0 rook-03

root@rook-01:~/postgresql# k get pods

NAME READY STATUS RESTARTS AGE

myclient2 1/1 Running 0 25m

mycluster-2 1/1 Running 0 35m

mycluster-3 1/1 Running 0 34m

mycluster-4 1/1 Running 0 3m4s

nfs-pod-provisioner-867f957649-b47l2 1/1 Running 0 7d

root@rook-01:~/postgresql# kubectl cnpg promote mycluster mycluster-4

root@rook-01:~/postgresql# kubectl cnpg status mycluster

Cluster Summary

Name: mycluster

Namespace: default

System ID: 7297152618599587859

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:15.3

Primary instance: mycluster-4

Primary start time: 2023-11-03 09:17:30 +0000 UTC (uptime 13s)

Status: Cluster in healthy state

Instances: 3

Ready instances: 3

Current Write LSN: 0/E00F0F8 (Timeline: 3 - WAL File: 00000003000000000000000E)

Certificates Status

Certificate Name Expiration Date Days Left Until Expiration

---------------- --------------- --------------------------

mycluster-ca 2024-02-01 08:31:14 +0000 UTC 89.97

mycluster-replication 2024-02-01 08:31:14 +0000 UTC 89.97

mycluster-server 2024-02-01 08:31:14 +0000 UTC 89.97

Continuous Backup status

Not configured

Streaming Replication status

Replication Slots Enabled

Name Sent LSN Write LSN Flush LSN Replay LSN Write Lag Flush Lag Replay Lag State Sync State Sync Priority Replication Slot

---- -------- --------- --------- ---------- --------- --------- ---------- ----- ---------- ------------- ----------------

mycluster-2 0/E00F0F8 0/E00F0F8 0/E00F0F8 0/E00F0F8 00:00:00.000653 00:00:00.003968 00:00:00.004035 streaming async 0 active

mycluster-3 0/E00F0F8 0/E00F0F8 0/E00F0F8 0/E00F0F8 00:00:00.000786 00:00:00.003863 00:00:00.00409 streaming async 0 active

Unmanaged Replication Slot Status

No unmanaged replication slots found

Instances status

Name Database Size Current LSN Replication role Status QoS Manager Version Node

---- ------------- ----------- ---------------- ------ --- --------------- ----

mycluster-4 37 MB 0/E00F0F8 Primary OK BestEffort 1.21.0 rook-03

mycluster-3 36 MB 0/E00F040 Standby (async) OK BestEffort 1.21.0 rook-03

mycluster-2 36 MB 0/E00EBC0 Standby (async) OK BestEffort 1.21.0 rook-01

root@rook-01:~/postgresql# kubectl exec -it myclient2 -- psql -U postgres -h mycluster-rw -p 5432 -c "select inet_server_addr();"

inet_server_addr

------------------

10.244.125.223

(1 row)

root@rook-01:~/postgresql# k get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myclient2 1/1 Running 0 27m 10.244.125.220 rook-03 <none> <none>

mycluster-2 1/1 Running 1 (119s ago) 37m 10.244.155.112 rook-01 <none> <none>

mycluster-3 1/1 Running 0 36m 10.244.125.219 rook-03 <none> <none>

mycluster-4 1/1 Running 0 5m9s 10.244.125.223 rook-03 <none> <none>

5. CloudNativePG Scale & 롤링 업데이트

5.1 Scale 테스트

PostgreSQL을 운영 중에 Scale out이 필요할 수 있다. 매우 간단하다.

root@rook-01:~# kubectl get cluster mycluster

NAME AGE INSTANCES READY STATUS PRIMARY

mycluster 121m 3 3 Cluster in healthy state mycluster-4

kubectl patch cluster mycluster --type=merge -p '{"spec":{"instances":5}}' && kubectl get pod -l postgresql=mycluster -w

root@rook-01:~# k get pods

NAME READY STATUS RESTARTS AGE

myclient2 1/1 Running 0 107m

mycluster-2 1/1 Running 1 (81m ago) 117m

mycluster-3 1/1 Running 0 116m

mycluster-4 1/1 Running 0 85m

mycluster-5 1/1 Running 0 57s

mycluster-6 1/1 Running 0 24s

root@rook-01:~# for i in {1..30}; do kubectl exec -it myclient2 -- psql -U postgres -h mycluster-r -p 5432 -c "select inet_server_addr();"; done | sort | uniq -c | sort -nr | grep 10

10 10.244.125.219

7 10.244.140.235

6 10.244.125.223

5 10.244.155.112

2 10.244.140.237

kubectl patch cluster mycluster --type=merge -p '{"spec":{"instances":3}}' && kubectl get pod -l postgresql=mycluster -w

root@rook-01:~# k get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myclient2 1/1 Running 0 109m 10.244.125.220 rook-03 <none> <none>

mycluster-2 1/1 Running 1 (83m ago) 119m 10.244.155.112 rook-01 <none> <none>

mycluster-3 1/1 Running 0 117m 10.244.125.219 rook-03 <none> <none>

mycluster-4 1/1 Running 0 86m 10.244.125.223 rook-03 <none> <none>

5.2 Rolling Update

PostgreSQL 버전 업그레이드 시 Rolling Update를 지원한다. Rolling Update는 Minor 버전까지만 지원되고 절차는 2가지 프로세스로 나누어진다.

Upgrading CloudNativePG operator is a two-step process:

1) upgrade the controller and the related Kubernetes resources

2) upgrade the instance manager running in every PostgreSQL pod

업그레이드 설정에 primaryUpdateStrategy가 있는데 2가지 옵션이 있다.

1) unsupervised(default값) : 자동업데이트

2) supervised : 수동업데이트 (관리자가 직접 kubectl cnpg promote/restart 명령으로 업데이트)

root@rook-01:~# kubectl cnpg status mycluster

Cluster Summary

Name: mycluster

Namespace: default

System ID: 7297152618599587859

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:15.3

Primary instance: mycluster-4

Primary start time: 2023-11-03 09:17:30 +0000 UTC (uptime 1h24m31s)

Status: Cluster in healthy state

Instances: 3

Ready instances: 3

Current Write LSN: 0/16000060 (Timeline: 3 - WAL File: 000000030000000000000016)

Certificates Status

Certificate Name Expiration Date Days Left Until Expiration

---------------- --------------- --------------------------

mycluster-ca 2024-02-01 08:31:14 +0000 UTC 89.91

mycluster-replication 2024-02-01 08:31:14 +0000 UTC 89.91

mycluster-server 2024-02-01 08:31:14 +0000 UTC 89.91

Continuous Backup status

Not configured

Streaming Replication status

Replication Slots Enabled

Name Sent LSN Write LSN Flush LSN Replay LSN Write Lag Flush Lag Replay Lag State Sync State Sync Priority Replication Slot

---- -------- --------- --------- ---------- --------- --------- ---------- ----- ---------- ------------- ----------------

mycluster-2 0/16000060 0/16000060 0/16000060 0/16000060 00:00:00 00:00:00 00:00:00 streaming async 0 active

mycluster-3 0/16000060 0/16000060 0/16000060 0/16000060 00:00:00 00:00:00 00:00:00 streaming async 0 active

Unmanaged Replication Slot Status

No unmanaged replication slots found

Instances status

Name Database Size Current LSN Replication role Status QoS Manager Version Node

---- ------------- ----------- ---------------- ------ --- --------------- ----

mycluster-4 37 MB 0/16000060 Primary OK BestEffort 1.21.0 rook-03

mycluster-2 37 MB 0/16000060 Standby (async) OK BestEffort 1.21.0 rook-01

mycluster-3 37 MB 0/16000060 Standby (async) OK BestEffort 1.21.0 rook-03

root@rook-01:~# k get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myclient2 1/1 Running 0 111m 10.244.125.220 rook-03 <none> <none>

mycluster-2 1/1 Running 0 64s 10.244.155.114 rook-01 <none> <none>

mycluster-3 1/1 Running 0 96s 10.244.140.238 rook-02 <none> <none>

mycluster-4 1/1 Running 0 30s 10.244.125.195 rook-03 <none> <none>

root@rook-01:~# kubectl cnpg status mycluster | grep Image

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:15.4Rolling Update 후 이미지 버전이 바뀌고 Primary 파드도 바뀌었다. Primary 파드가 재시작되는 시간이 있기 때문에 write transaction의 순단현상을 최소화 하기 위해 Primary 파드를 이미 업데이트 된 mycluster-4로 바뀌었다.

이것으로 Cloud Native PostgreSQL 포스팅을 마친다.