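After bringing Kafka up with Docker, change a few settings to build an environment in which Kafka can be monitored.

Building a Kafka cluster with Docker

Modifying the yaml file

- A few things in the yml file of the Kafka cluster built earlier have to be changed before JMX metrics can be collected.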

## docker-compose-kafka1.yml (files 2 and 3 need the same changes)

    services:
      broker:
        image: confluentinc/cp-kafka:7.7.0
        hostname: broker
        container_name: broker
        ports:
          - "8080:8080"   # added port -> metrics can be viewed here (<external-ip>:8080/metrics)
          - "9101:9101"   # added port -> used to collect JMX metrics
          - "9092:9092"
          - "29093:29093"
          - "29092:29092"
        environment:
          KAFKA_NODE_ID: 1
          KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: 'CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT'
          KAFKA_ADVERTISED_LISTENERS: 'PLAINTEXT://10.178.0.3:29092,PLAINTEXT_HOST://10.178.0.3:9092'
          KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 3
          KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0
          KAFKA_TRANSACTION_STATE_LOG_MIN_ISR: 3
          KAFKA_TRANSACTION_STATE_LOG_REPLICATION_FACTOR: 3
          KAFKA_PROCESS_ROLES: 'broker,controller'
          KAFKA_JMX_PORT: 9101
          KAFKA_JMX_HOSTNAME: server1
          KAFKA_CONTROLLER_QUORUM_VOTERS: '1@10.178.0.3:29093,2@10.178.0.4:29093,3@10.178.0.5:29093'
          KAFKA_LISTENERS: 'PLAINTEXT://broker:29092,CONTROLLER://broker:29093,PLAINTEXT_HOST://0.0.0.0:9092'
          KAFKA_INTER_BROKER_LISTENER_NAME: 'PLAINTEXT'
          KAFKA_CONTROLLER_LISTENER_NAMES: 'CONTROLLER'
          KAFKA_LOG_DIRS: '/tmp/kraft-combined-logs'
          CLUSTER_ID: 'TeSi-wM2RYORkvQHhvR5NQ'
          # add everything below
          KAFKA_OPTS: -javaagent:/usr/share/jmx_exporter/jmx_prometheus_javaagent-0.19.0.jar=8080:/usr/share/jmx_exporter/kafka-broker.yml
        volumes:
          - ../jmx-exporter:/usr/share/jmx_exporter/
          - ../connect/libs/jmx_prometheus_javaagent-0.19.0.jar:/opt/kafka/libs/jmx_prometheus_javaagent-0.19.0.jar

- Each entry under volumes has the form {path on my machine}:{path inside the container}. The container-side path does not need to change, but the path on your own machine has to be checked.

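- Relative host paths such as ../jmx-exporter are resolved from the directory that holds the yml file, so check where the yml file and the jmx-exporter folder actually are before filling them in.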
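Since a wrong host path is the easiest mistake to make here, a quick existence check before starting the containers can help. The following is only a sketch: `check_mounts` is a hypothetical helper name, and the three relative paths are taken from the `volumes` and `KAFKA_OPTS` entries above, assuming both the jar and kafka-broker.yml live in the jmx-exporter folder.

```shell
#!/bin/sh
# Hypothetical helper: check that the host-side files behind `volumes:` exist.
# Argument: the directory that contains docker-compose-kafka1.yml.
check_mounts() {
  compose_dir="$1"
  missing=0
  for rel in \
    "../jmx-exporter/jmx_prometheus_javaagent-0.19.0.jar" \
    "../jmx-exporter/kafka-broker.yml" \
    "../connect/libs/jmx_prometheus_javaagent-0.19.0.jar"
  do
    # Paths are joined onto the compose directory, mirroring how the
    # relative entries in the yml file are written.
    if [ -e "$compose_dir/$rel" ]; then
      echo "ok      $rel"
    else
      echo "missing $rel"
      missing=1
    fi
  done
  # Exit status 0 when everything exists, 1 when something is missing.
  return "$missing"
}

# Example call (the path is a placeholder):
# check_mounts /path/to/folder-with-compose-file
```

If any line prints `missing`, fix the folder layout or the host side of the `volumes` entry before running the compose file.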

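Installing jmx-exporter

- Version 0.19.0 is used here. To use a different version, the settings above that contain the jar file name (KAFKA_OPTS and volumes) have to be changed as well.

- Put the .jar file into the jmx-exporter folder created earlier.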
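To keep the version consistent, it can help to derive the jar name and the download URL from a single variable. This is a small sketch; the variable names are made up for illustration, and the URL layout is simply the one used by the 0.19.0 download command in this post.

```shell
#!/bin/sh
# Keep the version in one place so the file name and URL stay in sync.
JMX_EXPORTER_VERSION="0.19.0"
JAR_NAME="jmx_prometheus_javaagent-${JMX_EXPORTER_VERSION}.jar"
JAR_URL="https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/${JMX_EXPORTER_VERSION}/${JAR_NAME}"

echo "jar name: ${JAR_NAME}"
echo "download: ${JAR_URL}"

# To download straight into the jmx-exporter folder
# (the relative folder location is an assumption):
# wget -P ./jmx-exporter "${JAR_URL}"
```

Whatever `JAR_NAME` prints is the name that also has to appear in KAFKA_OPTS and in the volumes entry.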

    # command to download the .jar file
    wget https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.19.0/jmx_prometheus_javaagent-0.19.0.jar

jmx-exporter configuration file

- It has to be written in yml format.

- Create a kafka-broker.yml file inside the jmx-exporter folder.

- The yml file can have any name, but if you rename it, the KAFKA_OPTS value has to be updated to match.
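The KAFKA_OPTS value follows the java agent form `-javaagent:<jar>=<port>:<config file>`, so the config file name shows up as the last part. A sketch that assembles the same string from its pieces, with variable names invented for illustration:

```shell
#!/bin/sh
# All three values are container-side; they match the compose file above.
AGENT_JAR="/usr/share/jmx_exporter/jmx_prometheus_javaagent-0.19.0.jar"
AGENT_PORT="8080"                                          # port that serves /metrics
AGENT_CONFIG="/usr/share/jmx_exporter/kafka-broker.yml"    # change this if the yml is renamed

KAFKA_OPTS="-javaagent:${AGENT_JAR}=${AGENT_PORT}:${AGENT_CONFIG}"
echo "KAFKA_OPTS: ${KAFKA_OPTS}"
```

Renaming the config file only touches `AGENT_CONFIG`; changing the metrics port touches `AGENT_PORT` and the matching entry under `ports`.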

# jmx-exporter/kafka-broker.yml

# Provided by Prometheus example configs (github.com/prometheus/jmx_exporter)

lowercaseOutputName: true

rules:

# Special cases and very specific rules

- pattern: kafka.server<type=(.+), name=(.+), clientId=(.+), topic=(.+), partition=(.*)><>Value

name: kafka_server_$1_$2

type: GAUGE

labels:

clientId: "$3"

topic: "$4"

partition: "$5"

- pattern: kafka.server<type=(.+), name=(.+), clientId=(.+), brokerHost=(.+), brokerPort=(.+)><>Value

name: kafka_server_$1_$2

type: GAUGE

labels:

clientId: "$3"

broker: "$4:$5"

- pattern: kafka.coordinator.(\w+)<type=(.+), name=(.+)><>Value

name: kafka_coordinator_$1_$2_$3

type: GAUGE

# Generic per-second counters with 0-2 key/value pairs

- pattern: kafka.(\w+)<type=(.+), name=(.+)PerSec\w*, (.+)=(.+), (.+)=(.+)><>Count

name: kafka_$1_$2_$3_total

type: COUNTER

labels:

"$4": "$5"

"$6": "$7"

- pattern: kafka.(\w+)<type=(.+), name=(.+)PerSec\w*, (.+)=(.+)><>Count

name: kafka_$1_$2_$3_total

type: COUNTER

labels:

"$4": "$5"

- pattern: kafka.(\w+)<type=(.+), name=(.+)PerSec\w*><>Count

name: kafka_$1_$2_$3_total

type: COUNTER

# Quota specific rules

- pattern: kafka.server<type=(.+), user=(.+), client-id=(.+)><>([a-z-]+)

name: kafka_server_quota_$4

type: GAUGE

labels:

resource: "$1"

user: "$2"

clientId: "$3"

- pattern: kafka.server<type=(.+), client-id=(.+)><>([a-z-]+)

name: kafka_server_quota_$3

type: GAUGE

labels:

resource: "$1"

clientId: "$2"

- pattern: kafka.server<type=(.+), user=(.+)><>([a-z-]+)

name: kafka_server_quota_$3

type: GAUGE

labels:

resource: "$1"

user: "$2"

# Generic gauges with 0-2 key/value pairs

- pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.+), (.+)=(.+)><>Value

name: kafka_$1_$2_$3

type: GAUGE

labels:

"$4": "$5"

"$6": "$7"

- pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.+)><>Value

name: kafka_$1_$2_$3

type: GAUGE

labels:

"$4": "$5"

- pattern: kafka.(\w+)<type=(.+), name=(.+)><>Value

name: kafka_$1_$2_$3

type: GAUGE

# Emulate Prometheus 'Summary' metrics for the exported 'Histogram's.

#

# Note that these are missing the '_sum' metric!

- pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.+), (.+)=(.+)><>Count

name: kafka_$1_$2_$3_count

type: COUNTER

labels:

"$4": "$5"

"$6": "$7"

- pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.*), (.+)=(.+)><>(\d+)thPercentile

name: kafka_$1_$2_$3

type: GAUGE

labels:

"$4": "$5"

"$6": "$7"

quantile: "0.$8"

- pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.+)><>Count

name: kafka_$1_$2_$3_count

type: COUNTER

labels:

"$4": "$5"

- pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.*)><>(\d+)thPercentile

name: kafka_$1_$2_$3

type: GAUGE

labels:

"$4": "$5"

quantile: "0.$6"

- pattern: kafka.(\w+)<type=(.+), name=(.+)><>Count

name: kafka_$1_$2_$3_count

type: COUNTER

- pattern: kafka.(\w+)<type=(.+), name=(.+)><>(\d+)thPercentile

name: kafka_$1_$2_$3

type: GAUGE

labels:

quantile: "0.$4"

# Generic gauges for MeanRate Percent

# Ex) kafka.server<type=KafkaRequestHandlerPool, name=RequestHandlerAvgIdlePercent><>MeanRate

- pattern: kafka.(\w+)<type=(.+), name=(.+)Percent\w*><>MeanRate

name: kafka_$1_$2_$3_percent

type: GAUGE

- pattern: kafka.(\w+)<type=(.+), name=(.+)Percent\w*><>Value

name: kafka_$1_$2_$3_percent

type: GAUGE

- pattern: kafka.(\w+)<type=(.+), name=(.+)Percent\w*, (.+)=(.+)><>Value

name: kafka_$1_$2_$3_percent

type: GAUGE

labels:

"$4": "$5"

# Custom rules added to the Prometheus example config

- pattern: kafka.(.+)<type=(.+), name=(.+), (.+)=(.+), (.+)=(.+)><>(Count|Value)

name: kafka_$1_$2_$3

labels:

"$4": "$5"

"$6": "$7"

- pattern: kafka.(.+)<type=(.+), name=(.+), (.+)=(.+)><>(Count|Value)

name: kafka_$1_$2_$3

labels:

"$4": "$5"

- pattern: kafka.(.+)<type=(.+), name=(.+)><>(Count|Value)

name: kafka_$1_$2_$3

- pattern: kafka.(.+)<type=(.+), name=(.+), (.+)=(.*), (.+)=(.+)><>(\d+)thPercentile

name: kafka_$1_$2_$3

type: GAUGE

labels:

"$4": "$5"

"$6": "$7"

quantile: "0.$8"

- pattern: kafka.(.+)<type=(.+), name=(.+), (.+)=(.*)><>(\d+)thPercentile

name: kafka_$1_$2_$3

type: GAUGE

labels:

"$4": "$5"

quantile: "0.$6"

- pattern: kafka.(.+)<type=(.+), name=(.+)><>(\d+)thPercentile

name: kafka_$1_$2_$3

type: GAUGE

labels:

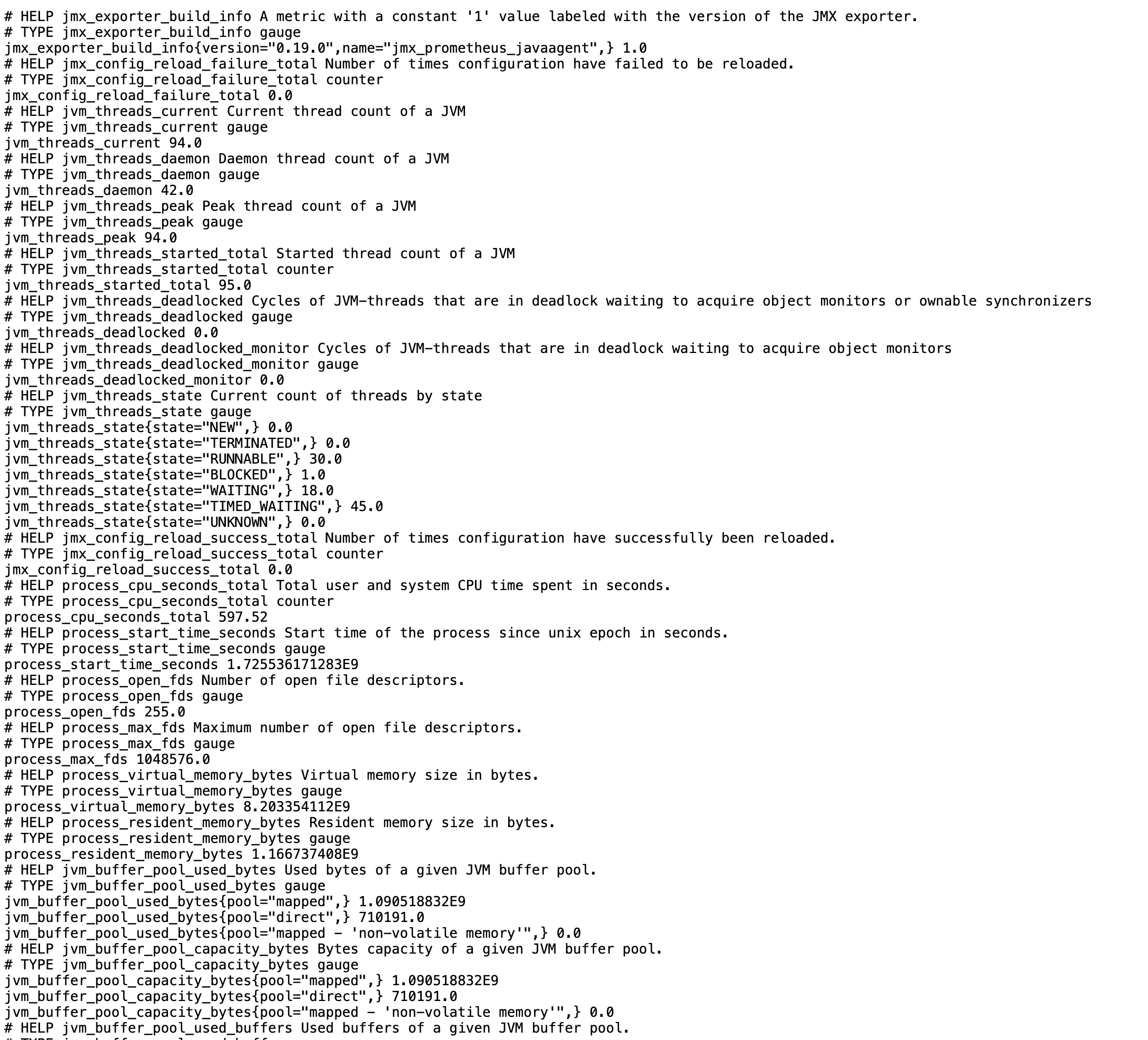

quantile: "0.$4"결과 확인

- Open {external IP}:8080/metrics in a browser.

- The page lists a large number of Kafka metrics.
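With that many metrics on the page, it helps to know what name a given mBean ends up with. The sketch below imitates just one rule from kafka-broker.yml (the per-second counter rule with no extra key/value pairs) using `sed` and `tr`. It is an illustration of the renaming, not the exporter's own matching logic, and `mbean_to_metric` is a hypothetical helper name.

```shell
#!/bin/sh
# Imitates this rule (plus lowercaseOutputName: true):
#   pattern: kafka.(\w+)<type=(.+), name=(.+)PerSec\w*><>Count
#   name:    kafka_$1_$2_$3_total
mbean_to_metric() {
  printf '%s\n' "$1" |
    sed -E 's/^kafka\.([A-Za-z0-9_]+)<type=(.+), name=(.+)PerSec[A-Za-z0-9_]*><>Count$/kafka_\1_\2_\3_total/' |
    tr 'A-Z' 'a-z'   # same effect as lowercaseOutputName for this example
}

mbean_to_metric 'kafka.server<type=BrokerTopicMetrics, name=MessagesInPerSec><>Count'
# prints: kafka_server_brokertopicmetrics_messagesin_total
```

The printed name can then be searched for in the endpoint output, for example with `curl -s http://<external-ip>:8080/metrics | grep messagesin_total`, where `<external-ip>` is a placeholder for your own server address.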