1. Installing Kubernetes

1-1. Installation Environment

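As listed below, there are many environments you can practice in, and installation and configuration can be done in various ways depending on the environment. My own lab environment: on-premises, VMware ESXi 7.0, Ubuntu 20.04, docker, kubeadm, Kubernetes 1.21.

With VirtualBox, Vagrant can automate deploying the VMs and installing k8s. VMware can reportedly be used too by installing a separate plugin, but the plugin does not seem to work properly with ESXi 7.0 at the moment.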

k8s deployment environment

- On-premises - data center, home PC

- Public cloud - AWS, Azure, GCP, etc.

Hypervisor

- Type 1 hypervisor (native) - VMware (ESXi), Xen, KVM (Kernel-based Virtual Machine), etc.

- Type 2 hypervisor (hosted) - VirtualBox, VMware (Workstation, Player)

Guest OS

- CentOS

- Ubuntu (20.04)

- ...

Container orchestration

- Kubernetes (k8s, k3s)

- Docker Swarm

- OpenShift

- ...

Container runtime

- docker

- containerd

- cri-o

- ...

Kubernetes deployment tools

- kubeadm

- kops

- kubespray

- ...

Kubernetes version

- 1.21

1-2. Virtual Machine Specs

Layout / specs

- Master node - 1 VM (vCPU: 2, memory: 4GB)

- Worker nodes - 3 VMs (vCPU: 1, memory: 2GB)

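⇒ An odd node count is often recommended because of Raft quorum. Strictly, this applies to control-plane (etcd) members: etcd uses Raft and needs a majority of its members to agree. Worker nodes do not take part in Raft.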
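The bash sketch below makes the majority rule concrete, with quorum = floor(n/2) + 1. The function names are illustrative only. Going from 3 to 4 members raises the quorum but still tolerates only one failure, which is why odd sizes are preferred for etcd.

```shell
#!/usr/bin/env bash
# Raft (as used by etcd) commits a write only when a majority of members agree.
# quorum(n) = floor(n/2) + 1, tolerated failures = n - quorum(n).
# quorum and fault_tolerance are hypothetical helper names.

quorum() {
  local n=$1
  echo $(( n / 2 + 1 ))
}

fault_tolerance() {
  local n=$1
  echo $(( n - $(quorum "$n") ))
}

# Print the table for 1..5 members
for n in 1 2 3 4 5; do
  printf 'members=%d quorum=%d tolerates=%d\n' "$n" "$(quorum "$n")" "$(fault_tolerance "$n")"
done
```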

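1-3. Installing Kubernetes

Installing the VMs

Other hypervisors make it easy to clone a VM, but on ESXi this is cumbersome, so I installed each of the 4 VMs separately. The installation steps are skipped here.

Pre-install settings & k8s package installation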

# Switch to root

sudo su -

# Update the package index

apt update

# Set the root password

printf "[password to use]\n[password to use]\n" | passwd

# ssh-config

sed -i "s/^PasswordAuthentication no/PasswordAuthentication yes/g" /etc/ssh/sshd_config

sed -i "s/^#PermitRootLogin prohibit-password/PermitRootLogin yes/g" /etc/ssh/sshd_config

systemctl restart sshd

# Disable AppArmor

systemctl stop apparmor && systemctl disable apparmor

# Turn swap off (kubelet fails to start while swap is enabled)

swapoff -a && sed -i '/swap/s/^/#/' /etc/fstab

cat /etc/fstab
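The one-liner above prefixes every line containing "swap" with '#', including lines that are already comments. A slightly stricter variant is sketched below as a hypothetical helper. It only comments out active entries whose filesystem-type field is swap, and it takes the file path as an argument so you can try it on a copy before touching /etc/fstab.

```shell
#!/usr/bin/env bash
# Comment out active swap entries in an fstab-style file.
# Lines that are already commented are left untouched, so re-running is safe.
# disable_swap_in_fstab is a hypothetical helper; pass /etc/fstab on a real node.
disable_swap_in_fstab() {
  local fstab=$1
  # Match uncommented lines whose third field (filesystem type) is exactly "swap"
  sed -i -E '/^[[:space:]]*[^#[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]]/s/^/#/' "$fstab"
}
```

After running "swapoff -a", "swapon --show" should print nothing.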

# Letting iptables see bridged traffic

modprobe br_netfilter

cat <<EOF | tee /etc/modules-load.d/k8s.conf

br_netfilter

EOF

cat <<EOF | tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system
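To confirm the settings are active after "sysctl --system", you can read them back from /proc/sys. The directory /proc/sys/net/bridge only appears once br_netfilter is loaded ("lsmod | grep br_netfilter" shows whether it is). The helper name is hypothetical, and the root directory is a parameter purely so the check can be tried against a scratch directory.

```shell
#!/usr/bin/env bash
# Verify that the bridge netfilter sysctls from /etc/sysctl.d/k8s.conf are set to 1.
# check_bridge_sysctls is a hypothetical helper; it defaults to the live /proc/sys.
check_bridge_sysctls() {
  local root=${1:-/proc/sys} key value
  for key in net/bridge/bridge-nf-call-iptables net/bridge/bridge-nf-call-ip6tables; do
    # A missing file usually means br_netfilter is not loaded
    value=$(cat "$root/$key" 2>/dev/null) || { echo "missing: $key (is br_netfilter loaded?)" >&2; return 1; }
    if [ "$value" != "1" ]; then
      echo "$key = $value, expected 1" >&2
      return 1
    fi
  done
  echo "bridge sysctls OK"
}
```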

# Edit the /etc/hosts file

echo "[master node IP] master" >> /etc/hosts

echo "[worker node 1 IP] worker1" >> /etc/hosts

echo "[worker node 2 IP] worker2" >> /etc/hosts

echo "[worker node 3 IP] worker3" >> /etc/hosts

cat /etc/hosts
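Running the echo lines above a second time appends duplicate entries. The sketch below is a hypothetical idempotent helper that only appends when the hostname is not already mapped. The IPs in the usage comment are the ones this lab's nodes show in "kubectl get nodes -o wide" further below.

```shell
#!/usr/bin/env bash
# Append "IP hostname" to a hosts file only if the hostname is not mapped yet.
# add_host_entry is a hypothetical helper name.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # Look for the hostname as a whole word on any non-comment line
  if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    echo "${ip} ${name}" >> "$file"
  fi
}

# Usage on each node (not run here):
#   add_host_entry /etc/hosts 192.168.1.211 master
#   add_host_entry /etc/hosts 192.168.1.212 worker1
#   add_host_entry /etc/hosts 192.168.1.213 worker2
#   add_host_entry /etc/hosts 192.168.1.214 worker3
```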

# docker install

curl -fsSL https://get.docker.com | sh

# Use systemd as Docker's cgroup driver

cat <<EOF | tee /etc/docker/daemon.json

{"exec-opts": ["native.cgroupdriver=systemd"]}

EOF

systemctl daemon-reload && systemctl restart docker

docker info
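"docker info" prints a lot of output. The kubelet and the container runtime should use the same cgroup driver, so a narrower check that daemon.json took effect can be handy. The sketch below uses docker's standard --format Go template on the CgroupDriver field. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Confirm Docker reports the systemd cgroup driver configured in /etc/docker/daemon.json.
# check_cgroup_driver is a hypothetical helper name.
check_cgroup_driver() {
  local driver
  driver=$(docker info --format '{{.CgroupDriver}}') || return 1
  if [ "$driver" = "systemd" ]; then
    echo "cgroup driver: systemd"
  else
    echo "unexpected cgroup driver: $driver" >&2
    return 1
  fi
}
```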

# package install

apt-get install bridge-utils net-tools jq tree -y

# Installing kubeadm kubelet and kubectl

curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | tee /etc/apt/sources.list.d/kubernetes.list

apt-get update

## When copy-pasting, stop here and paste the rest separately!

apt-get install -y kubelet kubeadm kubectl && apt-mark hold kubelet kubeadm kubectl
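The command above installs whatever version is newest in the repository. To reproduce the v1.21.1 cluster shown below, the packages can be pinned. The sketch assumes the "-00" Debian revision suffix that the legacy apt.kubernetes.io repository used. Note that this legacy repository has since been deprecated and frozen (2023). Its replacement, pkgs.k8s.io, only carries v1.24 and later and uses a different revision suffix, which is why the revision is a parameter here.

```shell
#!/usr/bin/env bash
# Build apt package specs pinned to a single Kubernetes version.
# k8s_pkg_specs is a hypothetical helper; revision defaults to the legacy repo's "00".
k8s_pkg_specs() {
  local version=$1 revision=${2:-00} pkg specs=""
  for pkg in kubelet kubeadm kubectl; do
    specs="${specs:+$specs }${pkg}=${version}-${revision}"
  done
  echo "$specs"
}

# Usage on a node (not run here):
#   apt-get install -y $(k8s_pkg_specs 1.21.1) && apt-mark hold kubelet kubeadm kubectl
```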

systemctl enable kubelet && systemctl start kubelet

kubeadm version

kubectl version --client

# Power off

poweroff

k8s Cluster Creation (kubeadm)

- kubeadm: a management tool that makes installing k8s easy; it works the same whether the infrastructure is on-premises or in the cloud

- Initialize the cluster (Master only)

# Initialize the cluster on the master node (K8s version v1.21.1)

# kubeadm init --apiserver-advertise-address <master node IP> --pod-network-cidr <Pod CIDR> --service-cidr <Service CIDR>

# If --pod-network-cidr and --service-cidr are omitted, defaults are used: the Service CIDR becomes 10.96.0.0/12 and the Pod address range is left to the CNI plugin

root@master:~# kubeadm init --apiserver-advertise-address 192.168.1.211

[init] Using Kubernetes version: v1.21.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

... (omitted) ...

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.211:6443 --token ulyvc5.u7wauz11h3br9uh3 \
    --discovery-token-ca-cert-hash sha256:9e086d7956d31f2b251d81825fdbf1f73af1a4657be3a8a853de20248125cf1b

root@master:~#
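kubeadm prints the join command only once, and the bootstrap token in it expires after 24 hours by default. On the master, "kubeadm token create --print-join-command" prints a fresh one. If the init output was saved to a file (for example by piping kubeadm init through "tee kubeadm-init.log", which is an assumption and not what was done above), the hypothetical helper below pulls the two join lines back out of it.

```shell
#!/usr/bin/env bash
# Extract the two-line "kubeadm join ... \ --discovery-token-ca-cert-hash ..." command
# from a saved kubeadm init log. extract_join_command and the log name are hypothetical.
extract_join_command() {
  local log=$1
  # Start printing at the "kubeadm join" line, skip blank lines,
  # and stop after the line carrying the CA cert hash.
  awk '/^[ \t]*kubeadm join /{p=1} p && NF {print} p && /--discovery-token-ca-cert-hash/{exit}' "$log"
}

# Usage on the master (not run here):
#   extract_join_command kubeadm-init.log > join.sh
```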

# Copy and keep the two kubeadm join lines from the output above (they are needed to set up the worker nodes)

- User setup for managing k8s (Master only)

root@master:~# mkdir -p $HOME/.kube

root@master:~# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

root@master:~# chown $(id -u):$(id -g) $HOME/.kube/config

- CNI - Calico install: the Pod CIDR is detected automatically (Master only)

root@master:~# curl -O https://docs.projectcalico.org/manifests/calico.yaml

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 185k 100 185k 0 0 244k 0 --:--:-- --:--:-- --:--:-- 243k

root@master:~#

root@master:~# sed -i 's/policy\/v1beta1/policy\/v1/g' calico.yaml

root@master:~# kubectl apply -f calico.yaml

configmap/calico-config unchanged

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org configured

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers unchanged

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers unchanged

clusterrole.rbac.authorization.k8s.io/calico-node unchanged

clusterrolebinding.rbac.authorization.k8s.io/calico-node unchanged

daemonset.apps/calico-node configured

serviceaccount/calico-node unchanged

deployment.apps/calico-kube-controllers unchanged

serviceaccount/calico-kube-controllers unchanged

poddisruptionbudget.policy/calico-kube-controllers configured

root@master:~#

- (Optional) calicoctl install (Master only)

root@master:~# curl -o kubectl-calico -L "https://github.com/projectcalico/calicoctl/releases/download/v3.19.1/calicoctl"

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 615 100 615 0 0 1971 0 --:--:-- --:--:-- --:--:-- 1971

100 42.8M 100 42.8M 0 0 13.0M 0 0:00:03 0:00:03 --:--:-- 14.8M

root@master:~# chmod +x kubectl-calico

root@master:~# mv kubectl-calico /usr/bin

root@master:~#

- (Optional) etcdctl install (Master only)

root@master:~# apt install etcd-client -y

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following NEW packages will be installed:

etcd-client

0 upgraded, 1 newly installed, 0 to remove and 5 not upgraded.

Need to get 4,563 kB of archives.

After this operation, 17.2 MB of additional disk space will be used.

Get:1 http://kr.archive.ubuntu.com/ubuntu focal/universe amd64 etcd-client amd64 3.2.26+dfsg-6 [4,563 kB]

Fetched 4,563 kB in 3s (1,817 kB/s)

Selecting previously unselected package etcd-client.

(Reading database ... 71878 files and directories currently installed.)

Preparing to unpack .../etcd-client_3.2.26+dfsg-6_amd64.deb ...

Unpacking etcd-client (3.2.26+dfsg-6) ...

Setting up etcd-client (3.2.26+dfsg-6) ...

Processing triggers for man-db (2.9.1-1) ...
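To use the etcdctl just installed against the kubeadm-managed etcd, it needs the v3 API and client TLS settings. The Ubuntu etcd-client 3.2.x defaults to the v2 API, so ETCDCTL_API=3 is set explicitly. The certificate paths below are kubeadm's defaults under /etc/kubernetes/pki/etcd, and the wrapper name is hypothetical.

```shell
#!/usr/bin/env bash
# Run etcdctl (v3 API) against the local kubeadm etcd member over TLS.
# etcd_cmd is a hypothetical wrapper; extra arguments are passed through to etcdctl.
etcd_cmd() {
  ETCDCTL_API=3 etcdctl \
    --endpoints=https://127.0.0.1:2379 \
    --cacert=/etc/kubernetes/pki/etcd/ca.crt \
    --cert=/etc/kubernetes/pki/etcd/server.crt \
    --key=/etc/kubernetes/pki/etcd/server.key \
    "$@"
}

# Usage on the master (not run here):
#   etcd_cmd member list
#   etcd_cmd endpoint health
```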

- Enable kubectl and kubeadm autocompletion (Master only)

root@master:~# source <(kubectl completion bash)

root@master:~# source <(kubeadm completion bash)

root@master:~#

root@master:~# echo 'source <(kubectl completion bash)' >>~/.bashrc

root@master:~# echo 'source <(kubeadm completion bash)' >>~/.bashrc

root@master:~#

root@master:~# echo 'alias k=kubectl' >> ~/.bashrc

root@master:~# echo 'complete -F __start_kubectl k' >>~/.bashrc

- Join worker nodes 1-3 to the cluster (Worker node only)

root@worker1:~# kubeadm join 192.168.1.211:6443 --token ulyvc5.u7wauz11h3br9uh3 \
    --discovery-token-ca-cert-hash sha256:9e086d7956d31f2b251d81825fdbf1f73af1a4657be3a8a853de20248125cf1b

- Check node status

root@master:~# kubectl get node

NAME      STATUS   ROLES                  AGE   VERSION
master    Ready    control-plane,master   12m   v1.21.1
worker1   Ready    <none>                 11m   v1.21.1
worker2   Ready    <none>                 11m   v1.21.1
worker3   Ready    <none>                 11m   v1.21.1

root@master:~#
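Nodes only switch to Ready once the CNI (Calico here) is running. For scripting, kubectl has a built-in wait: "kubectl wait --for=condition=Ready nodes --all --timeout=120s". As an alternative sketch, the hypothetical helper below checks the STATUS column of the "kubectl get nodes" table shown above. A cordoned node (Ready,SchedulingDisabled) is also reported as not ready.

```shell
#!/usr/bin/env bash
# Succeed only if every row of `kubectl get nodes` output has STATUS exactly "Ready".
# Reads the table from stdin; all_nodes_ready is a hypothetical helper name.
all_nodes_ready() {
  awk 'BEGIN { bad = 0 } NR > 1 && $2 != "Ready" { bad = 1; print "not ready: " $1 } END { exit bad }'
}

# Usage on the master (not run here):
#   kubectl get nodes | all_nodes_ready && echo "all nodes Ready"
```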

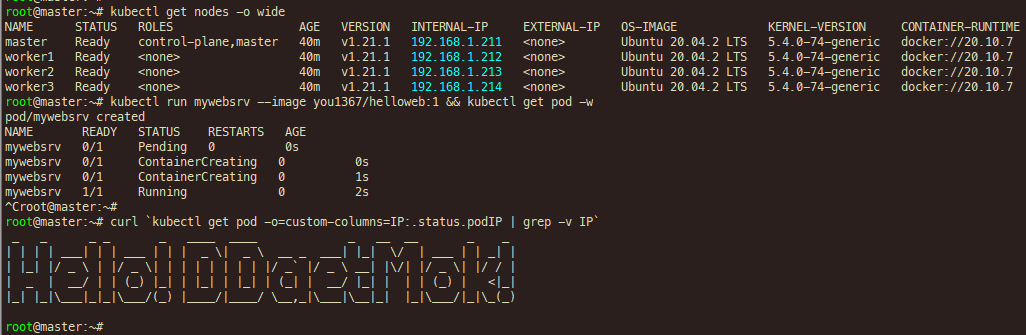

root@master:~# kubectl get nodes -o wide

NAME      STATUS   ROLES                  AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION     CONTAINER-RUNTIME
master    Ready    control-plane,master   12m   v1.21.1   192.168.1.211   <none>        Ubuntu 20.04.2 LTS   5.4.0-74-generic   docker://20.10.7
worker1   Ready    <none>                 11m   v1.21.1   192.168.1.212   <none>        Ubuntu 20.04.2 LTS   5.4.0-74-generic   docker://20.10.7
worker2   Ready    <none>                 11m   v1.21.1   192.168.1.213   <none>        Ubuntu 20.04.2 LTS   5.4.0-74-generic   docker://20.10.7
worker3   Ready    <none>                 11m   v1.21.1   192.168.1.214   <none>        Ubuntu 20.04.2 LTS   5.4.0-74-generic   docker://20.10.7

root@master:~#

2. Deploying a Pod

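Deploy a Pod using the Docker repository image created in the previous Docker lab.

2-1. Deploying a Pod from a Docker Repository Image

# Deploy a Pod from your own Docker repository image

# kubectl run mywebsrv --image <your Docker repository image> && kubectl get pod -w

kubectl run mywebsrv --image you1367/helloweb:1 && kubectl get pod -w

2-2. Checking the Deployed Pod with curl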

root@master:~# curl `kubectl get pod -o=custom-columns=IP:.status.podIP | grep -v IP`

# or

root@master:~# kubectl get pods -o wide

NAME       READY   STATUS    RESTARTS   AGE     IP               NODE      NOMINATED NODE   READINESS GATES
mywebsrv   1/1     Running   0          2m39s   172.16.235.134   worker1   <none>           <none>

root@master:~# curl 172.16.235.134

_ _ _ _ _ ____ ____ _ __ __ _ _

| | | | ___| | | ___ | | | _ \| _ \ __ _ ___| |_| \/ | ___ | | _| |

| |_| |/ _ \ | |/ _ \| | | | | | | | |/ _` |/ _ \ __| |\/| |/ _ \| |/ / |

| _ | __/ | | (_) |_| | |_| | |_| | (_| | __/ |_| | | | (_) | <|_|

|_| |_|\___|_|_|\___/(_) |____/|____/ \__,_|\___|\__|_| |_|\___/|_|\_(_)

root@master:~#

2-3. Deleting the Deployed Pod

root@master:~# kubectl delete pod `kubectl get pod -o=custom-columns=NAME:.metadata.name | grep -v NAME`

# or

root@master:~# kubectl delete pod mywebsrv

pod "mywebsrv" deleted

root@master:~#

root@master:~# kubectl get pods

No resources found in default namespace.

root@master:~#
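The steps in section 2 can be combined into one repeatable smoke test. The sketch below is a hypothetical helper that waits for the Pod's Ready condition with "kubectl wait" instead of watching with -w. It reads the Pod IP with a jsonpath query rather than filtering the custom-columns header with grep, and it relies on "kubectl delete" waiting for the Pod to disappear, which is its default behavior.

```shell
#!/usr/bin/env bash
# Smoke test for section 2: run a Pod, wait until Ready, curl it, then delete it.
# pod_smoke_test is a hypothetical helper; pass the Pod name and your own image.
pod_smoke_test() {
  local name=$1 image=$2 ip
  kubectl run "$name" --image "$image" || return 1
  # Block until the Pod reports the Ready condition (give up after 60s)
  kubectl wait --for=condition=Ready "pod/$name" --timeout=60s || return 1
  # Read the Pod IP directly via jsonpath
  ip=$(kubectl get pod "$name" -o jsonpath='{.status.podIP}') || return 1
  [ -n "$ip" ] || return 1
  curl -s "http://$ip/" || return 1
  # kubectl delete waits for the Pod to be gone by default (--wait=true)
  kubectl delete pod "$name"
}

# Usage on the master (not run here):
#   pod_smoke_test mywebsrv you1367/helloweb:1
```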