PyTorch

- PyTorch is the Python successor to Torch, a library originally written in Lua. Facebook developed it and released it in 2017.

- Initially, Torch was introduced as a scientific computing library similar to NumPy.

- Later, it evolved into a deep learning framework, enabling tensor manipulation with GPUs and dynamic neural network construction.

- Designed to be Pythonic, it offers flexibility and accelerated computation speed.

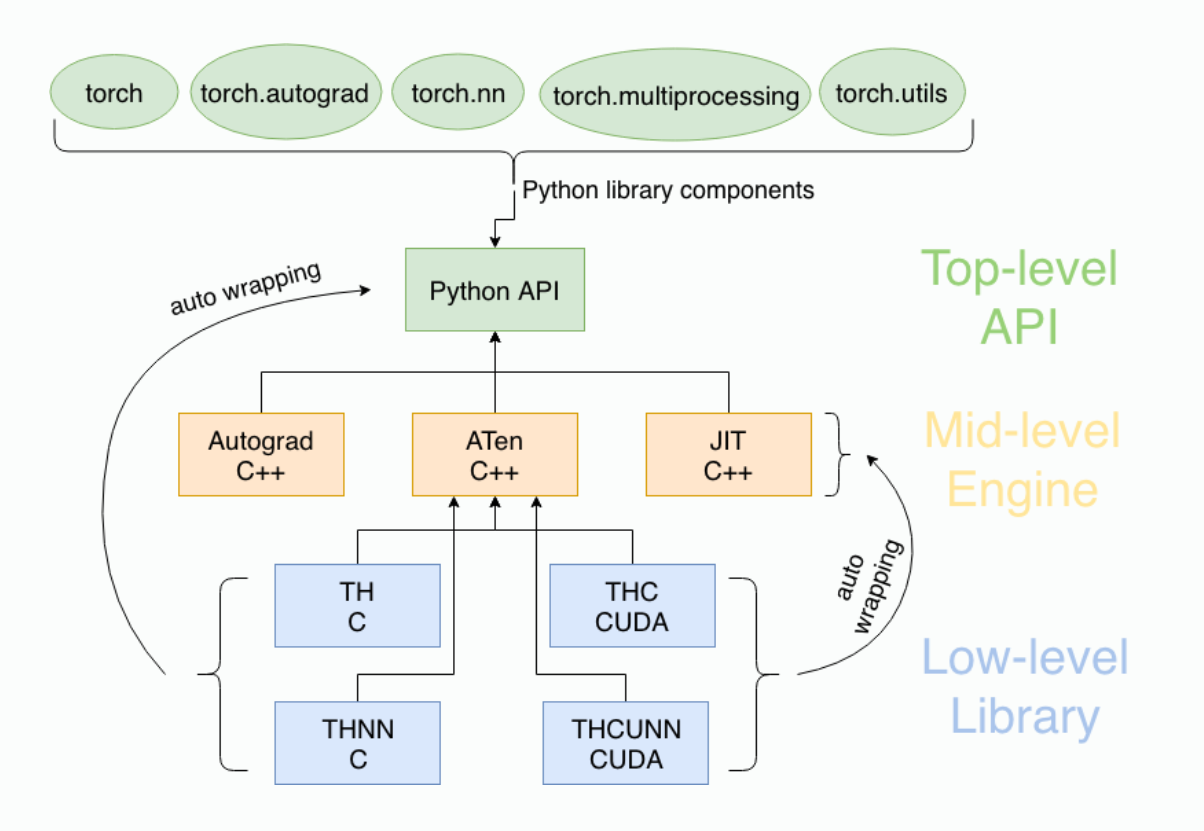

PyTorch Module Structure

ref: Eli Stevens and Luca Antiga, Deep Learning with PyTorch, Manning (MEAP). https://livebook.manning.com/#!/book/deep-learning-with-pytorch/welcome/v-7/

Components of PyTorch

- torch: The main namespace, including tensors and various mathematical functions.

- torch.autograd: A library that provides automatic differentiation.

- torch.nn: A library for building neural networks, including data structures and layers.

- torch.multiprocessing: A library that supports parallel processing.

- torch.optim: Provides parameter optimization algorithms, mainly centered around SGD (Stochastic Gradient Descent).

- torch.utils: Utility functions for data manipulation and other operations.

- torch.onnx: ONNX (Open Neural Network Exchange) module, used for sharing models across different frameworks.
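To see how several of these components fit together, here is a minimal sketch of a single training step. The data is random and the layer sizes and learning rate are arbitrary assumptions chosen only for illustration.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)  # make the sketch reproducible

# torch: tensors and math functions (made-up data: 8 samples, 3 features)
inputs = torch.randn(8, 3)
targets = torch.randn(8, 1)

# torch.nn: layers and loss functions
model = nn.Linear(3, 1)
loss_fn = nn.MSELoss()

# torch.optim: parameter update rules such as SGD
optimizer = optim.SGD(model.parameters(), lr=0.01)

loss_before = loss_fn(model(inputs), targets)

# torch.autograd: backward() fills .grad for every parameter
optimizer.zero_grad()
loss_before.backward()
optimizer.step()

loss_after = loss_fn(model(inputs), targets)
print(loss_before.item(), loss_after.item())
```

After one small gradient step the loss on the same batch should be slightly lower than before.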

Tensors

- Tensors are the fundamental structure for data representation.

- A tensor is a container for storing data, typically numerical data.

- Similar to NumPy’s ndarray.

- Supports accelerated computation using GPUs.

import torch

torch.__version__

'2.5.1+cu121'

Tensor Initialization and data types

A tensor that is not initialized

x = torch.empty(4,2)

print(x)

tensor([[1.0000e+00, 4.0000e+00],

[2.0000e+00, 5.0000e+00],

[3.0000e+00, 6.0000e+00],

[1.4013e-45, 0.0000e+00]])

A tensor initialized with random values

x = torch.rand(4,2)

print(x)

tensor([[0.2958, 0.4827],

[0.5380, 0.9182],

[0.9098, 0.5179],

[0.8977, 0.0085]])

A tensor with long data type, filled with zeros

x = torch.zeros(4,2, dtype = torch.long)

print(x)

tensor([[0, 0],

[0, 0],

[0, 0],

[0, 0]])

Initialize a tensor with user-provided values.

x = torch.tensor([3,2.3])

print(x)

tensor([3.0000, 2.3000])

A 2 × 4 tensor of type double, filled with ones.

x = x.new_ones(2,4, dtype = torch.double)

print(x)

tensor([[1., 1., 1., 1.],

[1., 1., 1., 1.]], dtype=torch.float64)

A tensor with the same size as x, of type float, and filled with random values.

x = torch.randn_like(x, dtype=torch.float)

print(x)

tensor([[-0.7010, 2.2324, -1.1151, 0.8353],

[ 0.0178, 0.7411, 0.8855, 0.3348]])

Compute the size of a tensor

print(x.size())

torch.Size([2, 4])

Data Type

| Data type | dtype | CPU tensor | GPU tensor |
|---|---|---|---|
| 32-bit floating point | torch.float32 or torch.float | torch.FloatTensor | torch.cuda.FloatTensor |
| 64-bit floating point | torch.float64 or torch.double | torch.DoubleTensor | torch.cuda.DoubleTensor |
| 16-bit floating point | torch.float16 or torch.half | torch.HalfTensor | torch.cuda.HalfTensor |
| 8-bit integer (unsigned) | torch.uint8 | torch.ByteTensor | torch.cuda.ByteTensor |
| 8-bit integer (signed) | torch.int8 | torch.CharTensor | torch.cuda.CharTensor |
| 16-bit integer (signed) | torch.int16 or torch.short | torch.ShortTensor | torch.cuda.ShortTensor |
| 32-bit integer (signed) | torch.int32 or torch.int | torch.IntTensor | torch.cuda.IntTensor |
| 64-bit integer (signed) | torch.int64 or torch.long | torch.LongTensor | torch.cuda.LongTensor |
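The typed classes in the table (torch.FloatTensor and so on) are the older constructor style. As far as I know, the PyTorch documentation now recommends passing a dtype to torch.tensor instead. The following sketch shows that style, with arbitrary example values.

```python
import torch

# The dtype can be given explicitly at creation time...
a = torch.tensor([1, 2, 3], dtype=torch.float32)
# ...or inferred from the Python values
b = torch.tensor([1, 2, 3])        # Python ints   -> torch.int64
c = torch.tensor([1.0, 2.0, 3.0])  # Python floats -> the default float dtype

print(a.dtype)  # torch.float32
print(b.dtype)  # torch.int64
print(c.dtype == torch.get_default_dtype())  # True

# .to(dtype) returns a converted tensor; b itself keeps its dtype
d = b.to(torch.float64)
print(d.dtype)                        # torch.float64
print(torch.float64 == torch.double)  # True: the two names are aliases
print(d.element_size())               # 8 (bytes per 64-bit element)
```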

The dtype of a FloatTensor is float32

ft = torch.FloatTensor([1,2,3])

print(ft)

print(ft.dtype)

tensor([1., 2., 3.])

torch.float32

Type casting

print(ft.short())

print(ft.int())

print(ft.long())

tensor([1, 2, 3], dtype=torch.int16)

tensor([1, 2, 3], dtype=torch.int32)

tensor([1, 2, 3])

it = torch.IntTensor([1,2,3])

print(it)

print(it.dtype)

tensor([1, 2, 3], dtype=torch.int32)

torch.int32

print(it.float())

print(it.double())

print(it.half())

tensor([1., 2., 3.])

tensor([1., 2., 3.], dtype=torch.float64)

tensor([1., 2., 3.], dtype=torch.float16)

CUDA Tensors

- You can use the .to method to move a tensor to any device (CPU, GPU).

x = torch.randn(1)

print(x)

print(x.item())

print(x.dtype)

tensor([1.1125])

1.1124675273895264

torch.float32

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

print("device: ", device)

y = torch.ones_like(x, device = device)

print("y: ", y)

x = x.to(device)

print("x: ", x)

z = x + y

print("z: ", z)

print("z to cpu: ", z.to('cpu', torch.double))

device: cuda

y: tensor([1.], device='cuda:0')

x: tensor([1.1125], device='cuda:0')

z: tensor([2.1125], device='cuda:0')

z to cpu: tensor([2.1125], dtype=torch.float64)

Representation of a Multi-Dimensional Tensor

0D Tensor(Scalar)

- A tensor containing a single number

- Has no axes, so its shape is empty (torch.Size([]))

t0 = torch.tensor(0)

print(t0.ndim)

print(t0.shape)

print(t0)

0

torch.Size([])

tensor(0)

1D Tensor(Vector)

- A tensor similar to a list that stores values

- Has a single axis

t1 = torch.tensor([1,2,3])

print(t1.ndim)

print(t1.shape)

print(t1)

1

torch.Size([3])

tensor([1, 2, 3])

2D Tensor(Matrix)

- A tensor with two axes, shaped like a matrix

- Commonly used for numerical and statistical datasets

- Typically structured with samples and features

t2 = torch.tensor([[1,2,3],

[4,5,6],

[7,8,9]])

print(t2.ndim)

print(t2.shape)

print(t2)2

torch.Size([3, 3])

tensor([[1, 2, 3],

[4, 5, 6],

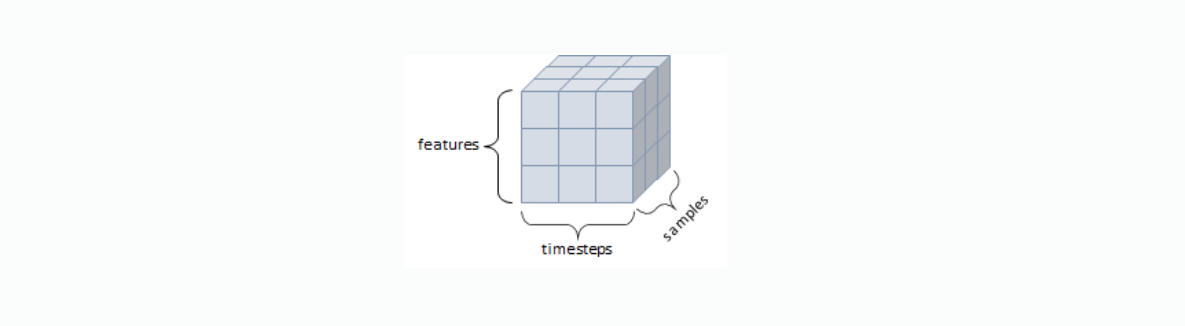

[7, 8, 9]])3D Tensor

- A tensor with three axes, shaped like a cube

- Used for sequential data or time-series data with a time axis

- Examples include stock price datasets and disease occurrence data over time

- Typically structured with samples, timesteps, and features

t3 = torch.tensor([[[1,2,3],

[4,5,6],

[7,8,9]],

[[1,2,3],

[4,5,6],

[7,8,9]],

[[1,2,3],

[4,5,6],

[7,8,9]]])

print(t3.ndim)

print(t3.shape)

print(t3)3

torch.Size([3, 3, 3])

tensor([[[1, 2, 3],

[4, 5, 6],

[7, 8, 9]],

[[1, 2, 3],

[4, 5, 6],

[7, 8, 9]],

[[1, 2, 3],

[4, 5, 6],

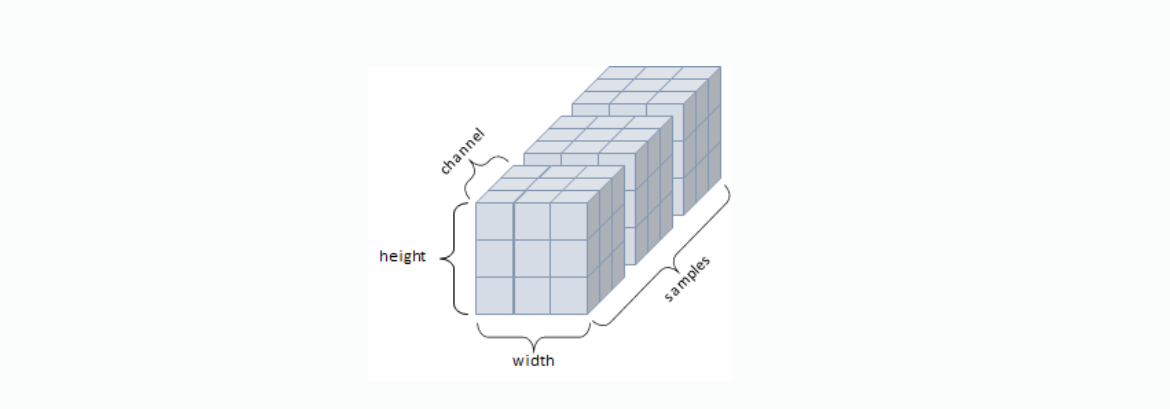

[7, 8, 9]]])4D Tensor

- Has 4 axes

- A typical example is color image data (grayscale image data can be represented as a 3D tensor)

- Commonly structured with samples, height, width, and color channels

5D Tensor

- Has 5 axes

- A typical example is video data

- Commonly structured with samples, frames, height, width, and color channels
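The 4D and 5D cases can be sketched in the same way as the lower-dimensional examples. The sizes below are arbitrary assumptions. Note that the axis order described above (channels last) is not the one PyTorch's convolution layers expect, which is channels first, so a permute is often needed.

```python
import torch

# Sizes are arbitrary, chosen only for illustration.
# 4D: a batch of 16 RGB images of 32 x 32 pixels, channels last
# (samples, height, width, channels), as described in the text
images_nhwc = torch.zeros(16, 32, 32, 3)
print(images_nhwc.ndim, images_nhwc.shape)

# PyTorch convolution layers expect channels first (N, C, H, W),
# so the axes are usually reordered with permute
images_nchw = images_nhwc.permute(0, 3, 1, 2)
print(images_nchw.shape)

# 5D: a batch of 4 video clips with 10 frames each
# (samples, frames, height, width, channels)
videos = torch.zeros(4, 10, 32, 32, 3)
print(videos.ndim, videos.shape)
```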

Tensor Operations

- Provides mathematical operations, trigonometric functions, bitwise operations, comparison operations, and aggregation functions for tensors.
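As a quick tour of those categories, here is a small sketch of trigonometric, comparison, bitwise, and aggregation operations on fixed values, so the results are easy to check by hand.

```python
import math
import torch

t = torch.tensor([0.0, math.pi / 2, math.pi])

# Trigonometric functions are applied element-wise
print(torch.sin(t))  # approximately 0, 1, 0
print(torch.cos(t))  # approximately 1, 0, -1

# Comparison operations return boolean tensors
a = torch.tensor([1, 2, 3])
b = torch.tensor([3, 2, 1])
print(a > b)           # False, False, True
print(torch.eq(a, b))  # False, True, False

# Bitwise operations work on integer (and boolean) tensors
print(a & b)                    # 1, 2, 1
print(torch.bitwise_xor(a, b))  # 2, 0, 2

# Aggregation functions reduce a tensor to a single value
print(a.sum())  # tensor(6)
```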

import math

a = torch.rand(1,2) * 2 - 1

print(a)

print(torch.abs(a))

print(torch.ceil(a))

print(torch.floor(a))

print(torch.clamp(a, -0.5, 0.5))

tensor([[-0.0496, 0.8344]])

tensor([[0.0496, 0.8344]])

tensor([[-0., 1.]])

tensor([[-1., 0.]])

tensor([[-0.0496, 0.5000]])

print(a)

print(torch.min(a))

print(torch.max(a))

print(torch.mean(a))

print(torch.std(a))

print(torch.prod(a))

print(torch.unique(torch.tensor([1,2,3,1,2,2])))

tensor([[-0.0496, 0.8344]])

tensor(-0.0496)

tensor(0.8344)

tensor(0.3924)

tensor(0.6251)

tensor(-0.0414)

tensor([1, 2, 3])

When given the dim argument, max and min return the argmax and argmin along with the values.

- argmax: The index of the maximum value

- argmin: The index of the minimum value
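A small example with fixed values makes the relationship between max, min, and their index counterparts easier to verify than one with random data. This is only an illustrative sketch.

```python
import torch

x = torch.tensor([[1.0, 9.0],
                  [7.0, 3.0]])

# Without dim, max returns a single 0-D tensor
print(x.max())  # tensor(9.)

# With dim, max returns a (values, indices) named tuple
values, indices = x.max(dim=0)  # reduce over rows: one result per column
print(values)   # tensor([7., 9.])
print(indices)  # tensor([1, 0])

# The indices are exactly what argmax returns for the same dim
print(torch.equal(indices, x.argmax(dim=0)))  # True

# min and argmin behave the same way
print(x.min(dim=1).indices)  # tensor([0, 1])
```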

x = torch.rand(2,2)

print(x)

print(x.max(dim=0))

print(x.max(dim=1))

tensor([[0.7560, 0.7411],

[0.8880, 0.9360]])

torch.return_types.max(

values=tensor([0.8880, 0.9360]),

indices=tensor([1, 1]))

torch.return_types.max(

values=tensor([0.7560, 0.9360]),

indices=tensor([0, 1]))

x = torch.rand(2,2)

print(x)

y = torch.rand(2,2)

print(y)

tensor([[0.3859, 0.8108],

[0.1640, 0.5917]])

tensor([[0.4182, 0.5112],

[0.7321, 0.2791]])

torch.add: addition

print(x + y)

print(torch.add(x,y))

tensor([[0.8041, 1.3220],

[0.8961, 0.8708]])

tensor([[0.8041, 1.3220],

[0.8961, 0.8708]])

Provide the result tensor as an argument.

result = torch.empty(2,2)

torch.add(x,y, out=result)

print(result)

tensor([[0.8041, 1.3220],

[0.8961, 0.8708]])

in-place method

- Operations that modify a tensor in place have a trailing underscore (_) in their name, e.g. x.copy_(y), x.t_().
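The difference between an in-place operation and its out-of-place counterpart can be checked directly by comparing memory addresses. The sketch below uses data_ptr() for this, with arbitrary example values.

```python
import torch

x = torch.tensor([1.0, 2.0])
y = torch.tensor([10.0, 20.0])

# Out-of-place: add returns a new tensor and leaves x untouched
z = x.add(y)
print(x)  # tensor([1., 2.])
print(z)  # tensor([11., 22.])

# In-place: add_ writes the result into x's existing storage
ptr_before = x.data_ptr()
x.add_(y)
print(x)                           # tensor([11., 22.])
print(x.data_ptr() == ptr_before)  # True: no new memory was allocated

# copy_ overwrites x with the values of y, again in place
x.copy_(y)
print(x)  # tensor([10., 20.])
```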

print(x)

print(y)

y.add_(x)

print(y)

tensor([[0.3859, 0.8108],

[0.1640, 0.5917]])

tensor([[0.4182, 0.5112],

[0.7321, 0.2791]])

tensor([[0.8041, 1.3220],

[0.8961, 0.8708]])

torch.sub: subtraction

print(x)

print(y)

print(x - y)

print(torch.sub(x,y))

print(x.sub(y))

tensor([[0.3859, 0.8108],

[0.1640, 0.5917]])

tensor([[0.8041, 1.3220],

[0.8961, 0.8708]])

tensor([[-0.4182, -0.5112],

[-0.7321, -0.2791]])

tensor([[-0.4182, -0.5112],

[-0.7321, -0.2791]])

tensor([[-0.4182, -0.5112],

[-0.7321, -0.2791]])

torch.mul: multiplication

print(x)

print(y)

print(x * y)

print(torch.mul(x,y))

print(x.mul(y))

tensor([[0.3859, 0.8108],

[0.1640, 0.5917]])

tensor([[0.8041, 1.3220],

[0.8961, 0.8708]])

tensor([[0.3103, 1.0719],

[0.1470, 0.5152]])

tensor([[0.3103, 1.0719],

[0.1470, 0.5152]])

tensor([[0.3103, 1.0719],

[0.1470, 0.5152]])

torch.div: division

print(x)

print(y)

print(x / y)

print(torch.div(x,y))

print(x.div(y))

tensor([[0.3859, 0.8108],

[0.1640, 0.5917]])

tensor([[0.8041, 1.3220],

[0.8961, 0.8708]])

tensor([[0.4800, 0.6133],

[0.1830, 0.6795]])

tensor([[0.4800, 0.6133],

[0.1830, 0.6795]])

tensor([[0.4800, 0.6133],

[0.1830, 0.6795]])

torch.mm: matrix multiplication

print(x)

print(y)

print(torch.matmul(x,y))

z = torch.mm(x,y)

print(z)

print(torch.svd(z))

tensor([[0.3859, 0.8108],

[0.1640, 0.5917]])

tensor([[0.8041, 1.3220],

[0.8961, 0.8708]])

tensor([[1.0369, 1.2163],

[0.6621, 0.7321]])

tensor([[1.0369, 1.2163],

[0.6621, 0.7321]])

torch.return_types.svd(

U=tensor([[-0.8509, -0.5254],

[-0.5254, 0.8509]]),

S=tensor([1.8784, 0.0246]),

V=tensor([[-0.6549, 0.7557],

[-0.7557, -0.6549]]))

Tensor Manipulations

Indexing: Can be used in the same way as NumPy indexing.

x = torch.Tensor([[1,2],

[3,4]])

print(x)

print(x[0,0])

print(x[0,1])

print(x[1,0])

print(x[1,1])

print(x[:,0])

print(x[:,1])

print(x[0,:])

print(x[1,:])

tensor([[1., 2.],

[3., 4.]])

tensor(1.)

tensor(2.)

tensor(3.)

tensor(4.)

tensor([1., 3.])

tensor([2., 4.])

tensor([1., 2.])

tensor([3., 4.])

view: Changes the size or shape of a tensor

- The number of elements must remain the same before and after the transformation.

- If set to -1, the size is inferred automatically.
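The two rules above can be checked on a small tensor with known values. The sketch below also shows two related points that are not covered in the text and are added here as assumptions about typical usage: a view shares storage with the original tensor, and reshape can be used when view cannot, for example after a transpose.

```python
import torch

x = torch.arange(12)  # 12 elements: 0..11

# -1 is replaced by 12 / 3 = 4
y = x.view(3, -1)
print(y.shape)  # torch.Size([3, 4])

# A view shares storage with the original tensor
y[0, 0] = 100
print(x[0])  # tensor(100)

# A shape with a different number of elements is rejected
try:
    x.view(5, -1)  # 12 is not divisible by 5
except RuntimeError as err:
    print("view failed:", err)

# After a transpose the memory is no longer contiguous, so view(12) would
# fail; reshape works and copies the data only if it has to
t = y.t()
print(t.is_contiguous())    # False
print(t.reshape(12).shape)  # torch.Size([12])
```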

x = torch.randn(4,5)

print(x)

y = x.view(20)

print(y)

z = x.view(5, -1)

print(z)

tensor([[-0.3196, -0.1150, 0.7417, 0.5375, 0.2084],

[-1.7420, -0.7210, 0.1906, 0.1851, -0.3533],

[-1.5118, 0.1725, -0.7839, 1.0098, 0.8780],

[ 0.0451, -1.5603, 1.3050, -0.6944, 0.4988]])

tensor([-0.3196, -0.1150, 0.7417, 0.5375, 0.2084, -1.7420, -0.7210, 0.1906,

0.1851, -0.3533, -1.5118, 0.1725, -0.7839, 1.0098, 0.8780, 0.0451,

-1.5603, 1.3050, -0.6944, 0.4988])

tensor([[-0.3196, -0.1150, 0.7417, 0.5375],

[ 0.2084, -1.7420, -0.7210, 0.1906],

[ 0.1851, -0.3533, -1.5118, 0.1725],

[-0.7839, 1.0098, 0.8780, 0.0451],

[-1.5603, 1.3050, -0.6944, 0.4988]])

item: If a tensor contains exactly one value, that value can be extracted as a Python number.

x = torch.randn(1)

print(x)

print(x.item())

print(x.dtype)

tensor([-0.3285])

-0.3284767270088196

torch.float32

item() can only be used if the tensor contains a single scalar value.

x = torch.randn(2)

print(x)

print(x.item())

print(x.dtype)

tensor([-2.2871, -1.0971])

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

Cell In[55], line 3

1 x = torch.randn(2)

2 print(x)

----> 3 print(x.item())

4 print(x.dtype)

RuntimeError: a Tensor with 2 elements cannot be converted to Scalar

squeeze: Removes dimensions of size 1

tensor = torch.rand(1,3,3)

print(tensor)

print(tensor.shape)

tensor([[[0.5173, 0.6478, 0.6806],

[0.1551, 0.1516, 0.9616],

[0.0146, 0.4398, 0.9480]]])

torch.Size([1, 3, 3])

t = tensor.squeeze()

print(t)

print(t.shape)

tensor([[0.5173, 0.6478, 0.6806],

[0.1551, 0.1516, 0.9616],

[0.0146, 0.4398, 0.9480]])

torch.Size([3, 3])

unsqueeze: Adds a dimension of size 1 at the given position

t = torch.rand(3,3)

print(t)

print(t.shape)

tensor([[0.6241, 0.1078, 0.3111],

[0.2703, 0.7403, 0.6612],

[0.2824, 0.6723, 0.3450]])

torch.Size([3, 3])

t2 = t.unsqueeze(dim=0)

print(t2)

print(t2.shape)

tensor([[[0.6241, 0.1078, 0.3111],

[0.2703, 0.7403, 0.6612],

[0.2824, 0.6723, 0.3450]]])

torch.Size([1, 3, 3])

t2 = t.unsqueeze(dim=2)

print(t2)

print(t2.shape)

tensor([[[0.6241],

[0.1078],

[0.3111]],

[[0.2703],

[0.7403],

[0.6612]],

[[0.2824],

[0.6723],

[0.3450]]])

torch.Size([3, 3, 1])

stack

x = torch.FloatTensor([1,4])

print(x)

y = torch.FloatTensor([2,5])

print(y)

z = torch.FloatTensor([3,6])

print(z)

print(torch.stack([x,y,z]))

tensor([1., 4.])

tensor([2., 5.])

tensor([3., 6.])

tensor([[1., 4.],

[2., 5.],

[3., 6.]])

cat(concatenate)

- Similar to stack, but the dim to join along must already exist in the input tensors

- The specified dimension grows to hold all inputs; no new dimension is created
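The difference between cat and stack is easiest to see from the resulting shapes. A minimal sketch with two small vectors:

```python
import torch

a = torch.tensor([1, 2, 3])
b = torch.tensor([4, 5, 6])

# cat joins along an existing dimension: two length-3 vectors -> length 6
print(torch.cat((a, b), dim=0).shape)    # torch.Size([6])

# stack creates a new dimension: two length-3 vectors -> 2 x 3 or 3 x 2
print(torch.stack((a, b), dim=0).shape)  # torch.Size([2, 3])
print(torch.stack((a, b), dim=1).shape)  # torch.Size([3, 2])

# stack is equivalent to unsqueezing each input and then concatenating
manual = torch.cat((a.unsqueeze(0), b.unsqueeze(0)), dim=0)
print(torch.equal(manual, torch.stack((a, b), dim=0)))  # True
```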

a = torch.randn(1,3,3)

print(a)

b = torch.randn(1,3,3)

print(b)

c = torch.cat((a,b), dim=0)

print(c)

print(c.size())

tensor([[[-2.0529, 0.0930, 1.2509],

[-1.5037, 1.2597, -0.5767],

[ 1.3482, -2.4116, -0.0364]]])

tensor([[[ 1.7181, 2.1459, 0.2636],

[-0.0553, 0.4114, -0.3377],

[ 0.1318, 0.9876, -1.3240]]])

tensor([[[-2.0529, 0.0930, 1.2509],

[-1.5037, 1.2597, -0.5767],

[ 1.3482, -2.4116, -0.0364]],

[[ 1.7181, 2.1459, 0.2636],

[-0.0553, 0.4114, -0.3377],

[ 0.1318, 0.9876, -1.3240]]])

torch.Size([2, 3, 3])

c = torch.cat((a,b), dim=1)

print(c)

print(c.size()) # equivalent to c.shape

tensor([[[-2.0529, 0.0930, 1.2509],

[-1.5037, 1.2597, -0.5767],

[ 1.3482, -2.4116, -0.0364],

[ 1.7181, 2.1459, 0.2636],

[-0.0553, 0.4114, -0.3377],

[ 0.1318, 0.9876, -1.3240]]])

torch.Size([1, 6, 3])

c = torch.cat((a,b), dim=2)

print(c)

print(c.size()) # equivalent to c.shape

tensor([[[-2.0529, 0.0930, 1.2509, 1.7181, 2.1459, 0.2636],

[-1.5037, 1.2597, -0.5767, -0.0553, 0.4114, -0.3377],

[ 1.3482, -2.4116, -0.0364, 0.1318, 0.9876, -1.3240]]])

torch.Size([1, 3, 6])

chunk: Splits a tensor into a given number of parts (you specify how many parts)

t = torch.rand(3,6)

print(t)

t1, t2, t3 = torch.chunk(t, 3, dim = 1)

print(t1)

print(t2)

print(t3)

tensor([[0.2303, 0.8994, 0.5073, 0.0274, 0.0287, 0.1956],

[0.4251, 0.8970, 0.9545, 0.1221, 0.7975, 0.3167],

[0.3438, 0.2994, 0.3463, 0.9225, 0.6442, 0.9835]])

tensor([[0.2303, 0.8994],

[0.4251, 0.8970],

[0.3438, 0.2994]])

tensor([[0.5073, 0.0274],

[0.9545, 0.1221],

[0.3463, 0.9225]])

tensor([[0.0287, 0.1956],

[0.7975, 0.3167],

[0.6442, 0.9835]])

split: Like chunk, but you specify the size of each part rather than the number of parts

t = torch.rand(3,6)

t1, t2 = torch.split(t, 3, dim=1)

print(t1)

print(t2)

tensor([[0.4184, 0.5670, 0.8108],

[0.0885, 0.2221, 0.2999],

[0.4472, 0.5393, 0.7734]])

tensor([[0.0061, 0.1740, 0.7547],

[0.1808, 0.5949, 0.8283],

[0.6394, 0.5492, 0.7291]])

torch ↔ numpy

- A Torch Tensor can be converted to a NumPy array with numpy(), and a NumPy array to a Tensor with from_numpy().

- If the Tensor is on the CPU, the NumPy array shares the same memory, meaning changes in one affect the other.

a = torch.ones(7)

print(a)

tensor([1., 1., 1., 1., 1., 1., 1.])

b = a.numpy()

print(b)

[1. 1. 1. 1. 1. 1. 1.]

a.add_(1)

print(a)

print(b) # same as a because the memory is shared

tensor([2., 2., 2., 2., 2., 2., 2.])

[2. 2. 2. 2. 2. 2. 2.]

import numpy as np

a = np.ones(7)

b = torch.from_numpy(a)

np.add(a, 1, out=a)

print(a)

print(b)

[2. 2. 2. 2. 2. 2. 2.]

tensor([2., 2., 2., 2., 2., 2., 2.], dtype=torch.float64)
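Because the memory is shared, it is sometimes useful to make an independent copy instead. The sketch below shows two common ways to do that, plus the extra step needed for tensors that live on the GPU. The variable names are made up for illustration.

```python
import numpy as np
import torch

a = torch.ones(3)

# numpy() shares memory with the CPU tensor...
shared = a.numpy()
# ...while clone() first makes an independent copy
independent = a.clone().numpy()

a.add_(1)
print(shared)       # [2. 2. 2.]
print(independent)  # [1. 1. 1.]

# torch.tensor(array) copies the data, unlike torch.from_numpy(array)
arr = np.zeros(3)
copied = torch.tensor(arr)
linked = torch.from_numpy(arr)
arr += 5
print(copied)  # still all zeros
print(linked)  # reflects the update: all fives

# A tensor on the GPU has to be moved to the CPU before calling numpy()
if torch.cuda.is_available():
    g = torch.ones(3, device="cuda")
    print(g.cpu().numpy())
```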