🤓 Intro

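Turning text into speech was simpler than I expected. Then came the worry: "But how do I turn speech into text? Do I have to use something like ML Kit?" After some searching, it seemed ML Kit wasn't necessary. I found a SwiftUI sample that implements the feature with SFSpeechRecognizer and AVAudioEngine, so I want to walk through it. I plan to add this feature to my toy project so the sentence to be translated can be entered either by typing or through the microphone.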

https://developer.apple.com/tutorials/app-dev-training/transcribing-speech-to-text

This is the sample the post is based on. The tutorial is incredibly friendly.

🎤 Speech

📰 overview

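This framework lets you recognize spoken words in recorded or live audio. The dictation feature in the iPhone keyboard uses speech recognition to turn audio into text. The framework also makes it possible to give voice commands without relying on the keyboard. It supports many languages, but each SFSpeechRecognizer object covers only one language. (In my case, translating between four languages means I'll need four objects.) Multi-language support basically assumes a network connection. Some languages can be recognized on-device, but the framework itself still relies on Apple's servers.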

📕 Class SFSpeechRecognizer

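class SFSpeechRecognizer : NSObject

- The central object that drives the speech recognition process

- Requests authorization before speech recognition is used for the first time

- Specifies which language to use during recognition

- Initiates speech recognition tasks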

1. ⛹️‍♂️ Getting started

1) authorization setup

project > target > Info

Privacy - Speech Recognition Usage Description : You can view a text transcription of your meeting in the app.

Privacy - Microphone Usage Description : Audio is recorded to transcribe the meeting. Audio recordings are discarded after transcription.

2) create object

import AVFoundation
import Foundation
import Speech
import SwiftUI

// A wrapper class that serves as our interface to the Speech framework
class SpeechRecognizer: ObservableObject {
    private let recognizer: SFSpeechRecognizer?

    init() {
        // SFSpeechRecognizer() is failable: it returns nil if the user's default language isn't supported
        recognizer = SFSpeechRecognizer()

        Task(priority: .background) {
            do {
                guard recognizer != nil else {
                    throw RecognizerError.nilRecognizer
                }
                guard await SFSpeechRecognizer.hasAuthorizationToRecognize() else {
                    throw RecognizerError.notAuthorizedToRecognize
                }
                guard await AVAudioSession.sharedInstance().hasPermissionToRecord() else {
                    throw RecognizerError.notPermittedToRecord
                }
            } catch {
                speakError(error)
            }
        }
    }
}

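- The object is validated during init. You can customize the error enum however you like.

- A Task is created so that the async methods can be awaited.

- The methods used in the guard statements are declared in extensions, so you won't find them in the official documentation. See the code below.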
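The sample excerpt never shows RecognizerError itself. A minimal version could look like the sketch below. The case names come from the guard statements above, plus recognizerIsUnavailable, which transcribe() uses later. The message property and its strings are my own illustrative additions, not part of the sample.

```swift
import Foundation

// Minimal sketch of the error type thrown in the guard statements.
// Case names match the sample; the message strings are illustrative.
enum RecognizerError: Error {
    case nilRecognizer
    case notAuthorizedToRecognize
    case notPermittedToRecord
    case recognizerIsUnavailable

    // Human-readable text, e.g. for showing the error in the UI.
    var message: String {
        switch self {
        case .nilRecognizer:
            return "Can't initialize speech recognizer"
        case .notAuthorizedToRecognize:
            return "Not authorized to recognize speech"
        case .notPermittedToRecord:
            return "Not permitted to record audio"
        case .recognizerIsUnavailable:
            return "Recognizer is unavailable"
        }
    }
}
```

A plain enum conforming to Error is enough here, because `throw` only needs an Error value and the catch block can cast it back with `as? RecognizerError`.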

3) check authorization

extension SFSpeechRecognizer {
    static func hasAuthorizationToRecognize() async -> Bool {
        await withCheckedContinuation { continuation in
            requestAuthorization { status in
                continuation.resume(returning: status == .authorized)
            }
        }
    }
}

🔍 Taking the auth check apart piece by piece

func withCheckedContinuation<T>(function: String = #function, _ body: (CheckedContinuation<T, Never>) -> Void) async -> T

withCheckedContinuation: a generic async function that suspends the current task and runs the closure. The closure receives a CheckedContinuation as its argument, and you must resume it exactly once for the suspended task to continue.

class func requestAuthorization(_ handler: @escaping (SFSpeechRecognizerAuthorizationStatus) -> Void)

requestAuthorization(_:): a type method of SFSpeechRecognizer. The handler receives an SFSpeechRecognizerAuthorizationStatus value and is executed once the authorization status is known. The status parameter holds my app's current authorization status.

func resume(returning value: T)

- An instance method of CheckedContinuation.

- It resumes the task from the point where it was suspended and returns the passed value to the caller (here, guard await SFSpeechRecognizer.hasAuthorizationToRecognize()).

- So if status is not .authorized, the method returns false and the guard statement throws.
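To see the continuation pattern in isolation, here is a small sketch that runs without the Speech framework. FakeAuthStatus and fakeRequestAuthorization are hypothetical stand-ins for SFSpeechRecognizerAuthorizationStatus and requestAuthorization(_:). The AVAudioSession extension at the bottom is my guess at the hasPermissionToRecord() helper used by the third guard, built on requestRecordPermission(_:). It is compiled only on iOS. As far as I know, requestRecordPermission(_:) is deprecated from iOS 17 in favor of AVAudioApplication, but it still works.

```swift
import Foundation
#if os(iOS)
import AVFoundation
#endif

// Hypothetical stand-ins for a callback-based authorization API.
enum FakeAuthStatus {
    case notDetermined, denied, restricted, authorized
}

func fakeRequestAuthorization(granted: Bool, _ handler: @escaping @Sendable (FakeAuthStatus) -> Void) {
    // Call back later on another queue, like many system APIs do.
    DispatchQueue.global().async {
        handler(granted ? .authorized : .denied)
    }
}

// Same shape as hasAuthorizationToRecognize(): suspend, wait for the
// callback, then resume exactly once with a Bool.
func hasFakeAuthorization(granted: Bool) async -> Bool {
    await withCheckedContinuation { continuation in
        fakeRequestAuthorization(granted: granted) { status in
            continuation.resume(returning: status == .authorized)
        }
    }
}

#if os(iOS)
// Assumed implementation of the helper used by the third guard statement.
extension AVAudioSession {
    func hasPermissionToRecord() async -> Bool {
        await withCheckedContinuation { continuation in
            requestRecordPermission { authorized in
                continuation.resume(returning: authorized)
            }
        }
    }
}
#endif
```

Usage: `let ok = await hasFakeAuthorization(granted: true)` suspends until the callback fires and then yields `true`. If the callback never called `resume`, the awaiting task would stay suspended forever, which is why the checked variant warns about it at runtime.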

2. 😎 locale?

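As mentioned earlier, one SFSpeechRecognizer object can handle only one language, so the default initializer creates a recognizer that follows the user's default language settings. To recognize a specific language, you need a different initializer: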

init?(locale: Locale)

// Creates a speech recognizer associated with the specified locale.

- Locale(identifier: "en-US")

- Locale(identifier: "ko_KR")

- Locale(identifier: "ca_ES")

- Locale(identifier: "ja_JP")
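Because one recognizer handles one language, a toy project with four languages needs four recognizers. Here is a minimal sketch, assuming the four locales listed above are the ones you need. makePerLocale is a hypothetical helper. It runs a failable factory for each locale and drops the ones that return nil, which matches how init?(locale:) returns nil for unsupported locales. The SFSpeechRecognizer part is compiled only on iOS, so the generic part can be tried anywhere.

```swift
import Foundation
#if os(iOS)
import Speech
#endif

// The four locales listed above (assumed to be the project's languages).
let supportedIdentifiers = ["en-US", "ko_KR", "ca_ES", "ja_JP"]

// Runs a failable factory per locale identifier and keeps only non-nil results.
func makePerLocale<T>(_ identifiers: [String], factory: (Locale) -> T?) -> [String: T] {
    var result: [String: T] = [:]
    for identifier in identifiers {
        if let value = factory(Locale(identifier: identifier)) {
            result[identifier] = value
        }
    }
    return result
}

#if os(iOS)
// One recognizer per language, keyed by locale identifier.
// Unsupported locales are simply missing from the dictionary.
let recognizers: [String: SFSpeechRecognizer] = makePerLocale(supportedIdentifiers) {
    SFSpeechRecognizer(locale: $0)
}
#endif
```

Even when a recognizer exists, it can be temporarily unavailable (for example, without a network connection), so checking `isAvailable` before starting a task, as transcribe() does, is still worthwhile.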

3. 🗣 transcribe: this is what we've been working toward.

Suppose a view has one point where speech recognition starts and another where it stops. That could be a button tap, viewWillAppear/viewWillDisappear in UIKit, or onAppear/onDisappear in SwiftUI. The timing is up to you. What matters is that at some point we have to start the speechRecognizer we created.

.onAppear {
    speechRecognizer.reset()
    speechRecognizer.transcribe()
}

⚙️ func reset

class SpeechRecognizer: ObservableObject {
    private var audioEngine: AVAudioEngine?
    private var request: SFSpeechAudioBufferRecognitionRequest?
    private var task: SFSpeechRecognitionTask?
    private let recognizer: SFSpeechRecognizer?

    // Cancels any running task and tears down the engine and request
    func reset() {
        task?.cancel()
        audioEngine?.stop()
        audioEngine = nil
        request = nil
        task = nil
    }

    // (other members omitted)
}

- As the code above suggests, speech recognition isn't something you can do with just an SFSpeechRecognizer object.

- The recognizer needs two things to run a recognition task:

- audioEngine: AVAudioEngine

- request: SFSpeechAudioBufferRecognitionRequest

⚙️ func prepareEngine

// Call site (inside transcribe(), shown later)
let (audioEngine, request) = try Self.prepareEngine()

private static func prepareEngine() throws -> (AVAudioEngine, SFSpeechAudioBufferRecognitionRequest) {
    let audioEngine = AVAudioEngine()

    let request = SFSpeechAudioBufferRecognitionRequest()
    request.shouldReportPartialResults = true

    let audioSession = AVAudioSession.sharedInstance()
    try audioSession.setCategory(.record, mode: .measurement, options: .duckOthers)
    try audioSession.setActive(true, options: .notifyOthersOnDeactivation)

    let inputNode = audioEngine.inputNode
    let recordingFormat = inputNode.outputFormat(forBus: 0)
    // Every captured buffer is appended to the recognition request
    inputNode.installTap(onBus: 0, bufferSize: 1024, format: recordingFormat) { (buffer: AVAudioPCMBuffer, when: AVAudioTime) in
        request.append(buffer)
    }

    audioEngine.prepare()
    try audioEngine.start()

    return (audioEngine, request)
}

By the way, returning a tuple and receiving it as a tuple lets you initialize several variables at once. Was I the only one who didn't know? 🤪
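Here is the tuple trick on its own. FakeEngine and FakeRequest are hypothetical stand-ins for AVAudioEngine and SFSpeechAudioBufferRecognitionRequest so the example runs without Apple frameworks, and prepare mirrors the shape of prepareEngine().

```swift
// Hypothetical stand-ins for the real engine and request types.
final class FakeEngine { var isRunning = false }
final class FakeRequest { var shouldReportPartialResults = false }

enum SetupError: Error { case engineFailed }

// Returns two freshly prepared objects at once, like prepareEngine().
func prepare(fail: Bool = false) throws -> (FakeEngine, FakeRequest) {
    if fail { throw SetupError.engineFailed }
    let engine = FakeEngine()
    let request = FakeRequest()
    request.shouldReportPartialResults = true
    engine.isRunning = true
    return (engine, request)
}

// Tuple destructuring: one statement initializes both constants.
let (engine, request) = try prepare()
```

If the function throws, neither constant is bound, so you never end up with a half-initialized pair. That is also why transcribe() assigns both properties only after `try Self.prepareEngine()` succeeds.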

🔍 Taking the audio engine preparation apart

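It would be easy to just copy and paste without thinking, but moving on without understanding makes me uneasy. The audioEngine is obviously needed (100% convinced), but what on earth are this AudioSession and inputNode? 🧐 Let's find out.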

1) 🔊 AudioSession

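class AVAudioSession : NSObject

- Acts as an intermediary between my app and the operating system (that is, the audio hardware).

- Thanks to this session, I don't have to meddle with or directly interact with every hardware behavior. (Thumbs up, audio session, thank you! 👍)

- Every iOS app has a default audio session out of the box.

- (It comes preconfigured, so you can just grab it and use it.)

AVAudioSession.sharedInstance()

- What the default session provides:

- Audio playback is supported, but audio recording is not.

- In iOS, switching to silent mode silences all audio the app plays.

- What about things the default session doesn't provide, such as recording? -> Change the category of the app's audio session.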

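var category: AVAudioSession.Category { get }

func setCategory(_ category: AVAudioSession.Category, options: AVAudioSession.CategoryOptions = []) throws

- The audio session category defines the app's audio behavior.

- The default value is soloAmbient.

- Other categories include ambient, multiRoute, playAndRecord, playback, record...

- Options are valid only for specific audio session categories.

- prepareEngine() uses the overload that also takes a mode: setCategory(.record, mode: .measurement, options: .duckOthers).

duckOthers: an option that lowers the volume of other audio sessions while this session's audio is playing.

- For the other options, see https://developer.apple.com/documentation/avfaudio/avaudiosession/categoryoptions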

func setActive(
    _ active: Bool,
    options: AVAudioSession.SetActiveOptions = []
) throws

- Activates the audio session configured with the category and options set above.

notifyOthersOnDeactivation: when my app deactivates its audio session, other apps are notified (so that they can turn their audio back on).

2) 💬 inputNode

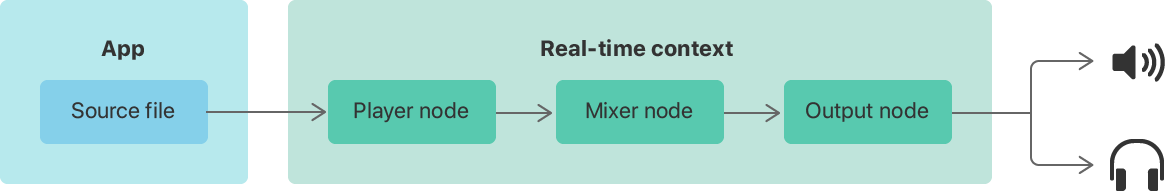

class AVAudioEngine : NSObject

- An object that manages a graph of audio nodes, controls playback, and configures real-time rendering constraints.

The engine holds a group of AVAudioNode objects, and processing audio requires this group of nodes.

let audioFile = /* An AVAudioFile instance that points to file that's open for reading. */

let audioEngine = AVAudioEngine()
let playerNode = AVAudioPlayerNode()

// Attach the player node to the audio engine.
audioEngine.attach(playerNode)

// Connect the player node to the output node.
audioEngine.connect(playerNode,
                    to: audioEngine.outputNode,
                    format: audioFile.processingFormat)

playerNode.scheduleFile(audioFile,
                        at: nil,
                        completionCallbackType: .dataPlayedBack) { _ in
    /* Handle any work that's necessary after playback. */
}

// Nothing is heard until the engine and the player are started.
try audioEngine.start()
playerNode.play()

- To play an audio file, you need:

- an audio engine object

- a node object to attach to the engine

- an audio file object you can read

- Attach the node to the engine -> connect it to the output node -> schedule the audio file on the node -> start the engine and play

- As an analogy, could I say the audio engine is a cassette player 📹, the nodes are several cassette tapes 📼, and the audio file is the data recorded on a tape 🎞? (An old-fashioned analogy 🥲)

- Except that there is both an input tape and an output tape... (Maybe think of it as recording onto the input tape and playing back from the output tape?)

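var inputNode: AVAudioInputNode { get }

- Audio input goes through this inputNode.

- When I access this property, the audio engine returns a singleton (created on demand the first time it is accessed).

- So how do you receive the input? There are two ways:

- Connect another node to this input node's output

- Create a recording tap on it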

func outputFormat(forBus bus: AVAudioNodeBus) -> AVAudioFormat

- Returns the output format of the specified bus.

AVAudioNodeBus: the index of a bus on an audio node, an Int value.

- According to the official docs, an audio node can have multiple input and output buses.

- Picture buses lined up in a row on a node. (The docs also say connections are always one-to-one.)

AVAudioFormat: an audio format object, a wrapper around AudioStreamBasicDescription.

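func installTap(
    onBus bus: AVAudioNodeBus,
    bufferSize: AVAudioFrameCount,
    format: AVAudioFormat?,
    block tapBlock: @escaping AVAudioNodeTapBlock
)

typealias AVAudioNodeTapBlock = (AVAudioPCMBuffer, AVAudioTime) -> Void

- An audio tap gives you access to the audio data flowing through a node.

- The tap is installed on the bus you choose, to record, monitor, and observe that node's output. I'm imagining buses lined up on a node, each with its own tap.

- bus: the output bus to install the tap on

- format: if non-nil, this format is applied to the output bus. Do this only when the bus isn't connected to another node; otherwise an error occurs.

- tapBlock: the work to do with each audio buffer (the audio output the system captured from the node)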

⚙️ func transcribe

Everything up to this point was for transcribe. Using the audio engine prepared above, we capture speech and extract text from it.

func transcribe() {
    DispatchQueue(label: "Speech Recognizer Queue", qos: .background).async { [weak self] in
        guard let self = self, let recognizer = self.recognizer, recognizer.isAvailable else {
            self?.speakError(RecognizerError.recognizerIsUnavailable)
            return
        }

        do {
            let (audioEngine, request) = try Self.prepareEngine()
            self.audioEngine = audioEngine
            self.request = request
            self.task = recognizer.recognitionTask(with: request, resultHandler: self.recognitionHandler(result:error:))
        } catch {
            self.reset()
            self.speakError(error)
        }
    }
}

- First, check that the recognizer that will recognize our speech exists and is available.

- The recognitionTask(with:resultHandler:) method runs the request, and the result is handled inside the handler.

- The @escaping handler receives an SFSpeechRecognitionResult? and an Error?.

- Because those arguments are passed straight through as parameters, the handler can be written as self.recognitionHandler(result:error:).
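Passing a method by its full name works because an instance method referenced as `self.method(label:label:)` is just a function value with the same parameter types. The sketch below uses hypothetical stand-ins (FakeResult, runTask) for SFSpeechRecognitionResult and recognitionTask(with:resultHandler:). runTask simply calls the handler twice, like a partial result followed by a final one.

```swift
// Hypothetical stand-in for SFSpeechRecognitionResult.
struct FakeResult {
    let text: String
    let isFinal: Bool
}

// Hypothetical stand-in for recognitionTask(with:resultHandler:).
func runTask(resultHandler: @escaping (FakeResult?, Error?) -> Void) {
    resultHandler(FakeResult(text: "hel", isFinal: false), nil)
    resultHandler(FakeResult(text: "hello", isFinal: true), nil)
}

final class Transcriber {
    private(set) var transcript = ""

    func start() {
        // The method has the handler's exact shape, so it can be passed directly.
        // Note: this method reference captures self strongly.
        runTask(resultHandler: self.recognitionHandler(result:error:))
    }

    private func recognitionHandler(result: FakeResult?, error: Error?) {
        if let result = result {
            transcript = result.text
        }
    }
}
```

One design point to keep in mind: the method reference holds a strong reference to self. In the real class, self also stores the task, so the two likely keep each other alive until the task finishes or reset() cancels it and sets task to nil. Passing a closure with `[weak self]` avoids that if it matters.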

🔍 Taking recognitionHandler apart piece by piece

Clean code is clean for a reason: the handler logic is extracted into a separate private method. I want to write my own code this way from now on.

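private func recognitionHandler(result: SFSpeechRecognitionResult?, error: Error?) {
    let receivedFinalResult = result?.isFinal ?? false
    let receivedError = error != nil

    // Stop capturing audio once the result is final or an error occurred
    if receivedFinalResult || receivedError {
        audioEngine?.stop()
        audioEngine?.inputNode.removeTap(onBus: 0)
    }

    if let result = result {
        speak(result.bestTranscription.formattedString)
    }
}

private func speak(_ message: String) {
    // transcript: a String property of the class (its declaration isn't shown in this excerpt)
    transcript = message
}

- When a final result arrives or an error occurs, stop the audio engine and remove the tap installed on the inputNode.

- If result is not nil:

- bestTranscription is, as the name says, the speech-to-text result with the highest confidence; its formattedString is stored in the class property.

- What to do with the resulting text is up to us. 😆 Good luck!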
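The stopping rule is easy to misread, so here it is isolated as a pure function. FakeResult is a hypothetical stand-in that keeps only isFinal, and shouldStopEngine is a hypothetical name for the first half of recognitionHandler. Because shouldReportPartialResults is true, the handler is called many times with non-final results, and the engine stops only on the final result or on an error.

```swift
// Hypothetical stand-in for SFSpeechRecognitionResult (only isFinal is needed).
struct FakeResult { let isFinal: Bool }

struct SomeError: Error {}

// Mirrors the stop condition in recognitionHandler:
// a nil result counts as "not final".
func shouldStopEngine(result: FakeResult?, error: Error?) -> Bool {
    let receivedFinalResult = result?.isFinal ?? false
    let receivedError = error != nil
    return receivedFinalResult || receivedError
}
```

Partial results keep the engine running and only update the transcript, which is what makes the text appear live while you speak.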

😇 Wrapping up

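The code itself is only a few lines, but the idea of tapping into audio was unfamiliar, so it took a while to pick apart nodes, inputs, and outputs. Of course I didn't understand all of it, and I don't intend to master every step of the audio pipeline. When I did web development, I handled existing audio files at most and nothing beyond that. This time I could clearly feel that I was using native resources, which made it fun.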