[Instance selection]

G4dn instances are designed to help accelerate machine learning inference and graphics-intensive workloads.

Features:

- 2nd Generation Intel Xeon Scalable processors (Cascade Lake P-8259CL)

- Up to 8 NVIDIA T4 Tensor Core GPUs

- Up to 100 Gbps of networking throughput

- Up to 1.8 TB of local NVMe storage

I chose this as the most cost-effective GPU instance type.

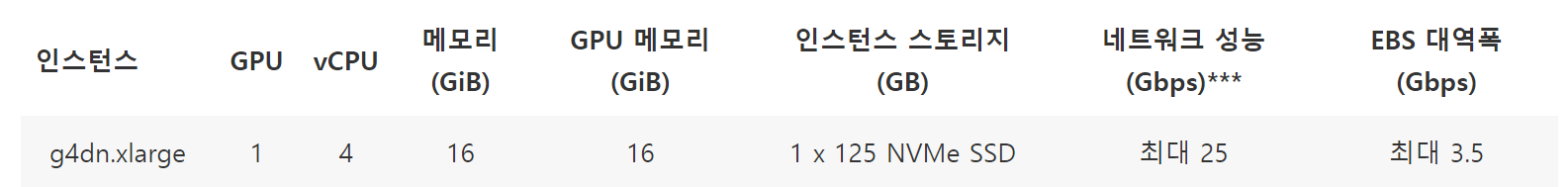

The image above shows the specs.

[Increasing the vCPU quota]

https://calculator.aws/#/createCalculator/ec2-enhancement

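Calculate the required number of vCPUs at the link above.

https://aws.amazon.com/ko/ec2/instance-types/

Check the vCPU count of each instance type at the link above.

For g4dn.xlarge, it is 4.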
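The On-Demand quota for G instances is counted in vCPUs rather than in instances, so the value to request is the total vCPUs of everything you plan to run at the same time. A minimal Python sketch of that arithmetic follows; the `G4DN_VCPUS` table is my own transcription of the G4dn sizes and `required_vcpu_quota` is a hypothetical helper, so check the numbers against the instance-types page before relying on them.

```python
# vCPUs per G4dn size (transcribed from the AWS instance-types page; verify before use)
G4DN_VCPUS = {
    "g4dn.xlarge": 4,
    "g4dn.2xlarge": 8,
    "g4dn.4xlarge": 16,
    "g4dn.8xlarge": 32,
    "g4dn.12xlarge": 48,
    "g4dn.16xlarge": 64,
    "g4dn.metal": 96,
}


def required_vcpu_quota(instances: dict) -> int:
    """Sum the vCPUs needed to run `count` instances of each listed type at once."""
    total = 0
    for instance_type, count in instances.items():
        if instance_type not in G4DN_VCPUS:
            raise KeyError(f"unknown instance type: {instance_type}")
        total += G4DN_VCPUS[instance_type] * count
    return total


print(required_vcpu_quota({"g4dn.xlarge": 1}))  # 4
```

One g4dn.xlarge needs a quota of 4, which is the value requested in the form below.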

http://aws.amazon.com/contact-us/ec2-request

Then submit a limit-increase case at the link above.

Limit increase request 1

Service: EC2 Instances

Primary Instance Type: All G instances

Region: Asia Pacific (Seoul)

Limit name: Instance Limit

New limit value: 4

------------

Use case description: need to inference llm

After submitting the case as above, an AWS representative approved it in about a day.

Once the request is approved, the instance can be launched.
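The same increase can probably also be requested programmatically through the Service Quotas API instead of the support form. The sketch below assumes boto3's `service-quotas` client and its `request_service_quota_increase` call; the quota code `L-DB2E81BA` ("Running On-Demand G and VT instances") is an assumption you should confirm with `aws service-quotas list-service-quotas --service-code ec2`, and the helper names are hypothetical.

```python
# Assumed quota code for "Running On-Demand G and VT instances"; confirm before use.
G_AND_VT_QUOTA_CODE = "L-DB2E81BA"


def build_quota_request(desired_vcpus: int) -> dict:
    """Keyword arguments for ServiceQuotas.Client.request_service_quota_increase."""
    return {
        "ServiceCode": "ec2",
        "QuotaCode": G_AND_VT_QUOTA_CODE,
        "DesiredValue": float(desired_vcpus),  # the API takes a float
    }


def submit_quota_request(client, desired_vcpus: int) -> str:
    """Submit the request with a boto3 'service-quotas' client and return its status."""
    response = client.request_service_quota_increase(**build_quota_request(desired_vcpus))
    return response["RequestedQuota"]["Status"]


# Usage (needs boto3 and AWS credentials; Seoul is ap-northeast-2):
#   import boto3
#   sq = boto3.client("service-quotas", region_name="ap-northeast-2")
#   print(submit_quota_request(sq, 4))
```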

[GPU setup]

https://docs.nvidia.com/cuda/cuda-installation-guide-linux/

Since the instance runs Amazon Linux 2023, I followed section 3.11 of this guide.
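Section 3.11 applies only to Amazon Linux 2023, and the repo added later in this post is the `amzn2023/x86_64` one. The hypothetical helper below checks `/etc/os-release` and the machine architecture (the same facts the `uname -m && cat /etc/*release` step confirms) before returning that repo URL; any other host gets `None`, meaning you should look up the matching section of the NVIDIA guide instead.

```python
from typing import Optional

# Repo URL used later in this post (Amazon Linux 2023, x86_64 only).
AMZN2023_CUDA_REPO = (
    "https://developer.download.nvidia.com/compute/cuda/repos/"
    "amzn2023/x86_64/cuda-amzn2023.repo"
)


def parse_os_release(text: str) -> dict:
    """Parse KEY=value lines of /etc/os-release into a dict, dropping quotes."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        fields[key] = value.strip().strip("\"'")
    return fields


def cuda_repo_for(os_release_text: str, machine: str) -> Optional[str]:
    """Return the AL2023 CUDA repo URL if the host matches, else None."""
    info = parse_os_release(os_release_text)
    if info.get("ID") == "amzn" and info.get("VERSION_ID") == "2023" and machine == "x86_64":
        return AMZN2023_CUDA_REPO
    return None


# Usage on the instance itself:
#   import platform
#   with open("/etc/os-release") as f:
#       print(cuda_repo_for(f.read(), platform.machine()))
```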

https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/install-nvidia-driver.html#nvidia-GRID-driver

Option 3: GRID drivers

https://repost.aws/ko/articles/ARwfQMxiC-QMOgWykD9mco1w/how-do-i-install-nvidia-gpu-driver-cuda-toolkit-and-optionally-nvidia-container-toolkit-in-amazon-linux-2023-al2023?sc_ichannel=ha&sc_ilang=en&sc_isite=repost&sc_iplace=hp&sc_icontent=ARwfQMxiC-QMOgWykD9mco1w&sc_ipos=13

CUDA Toolkit

Check the instance's GPU

$ lspci | grep -i nvidia

00:1e.0 3D controller: NVIDIA Corporation TU104GL [Tesla T4] (rev a1)

Check that the Linux version is supported

$ uname -m && cat /etc/*release

x86_64

Amazon Linux release 2023.5.20240708 (Amazon Linux)

Check that the gcc compiler is installed

$ gcc --version

gcc (GCC) 11.4.1 20230605 (Red Hat 11.4.1-2)

Copyright (C) 2021 Free Software Foundation, Inc.

Check the running kernel version

$ uname -r

Update the kernel

$ sudo dnf update -y

$ sudo dnf install -y dkms kernel-devel kernel-modules-extra

$ sudo reboot

After the reboot, add the NVIDIA repo

$ sudo dnf config-manager --add-repo https://developer.download.nvidia.com/compute/cuda/repos/amzn2023/x86_64/cuda-amzn2023.repo

$ sudo dnf clean expire-cache

NVIDIA driver

$ sudo dnf module install -y nvidia-driver:latest-dkms

CUDA toolkit

$ sudo dnf install -y cuda-toolkit

Installing the CUDA toolkit failed with:

Disk Requirements:

At least 5686MB more space needed on the / filesystem.

To fix this, I expanded the root volume from 30 GiB to 100 GiB during the CUDA toolkit install.
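A pre-flight disk check would have caught this before dnf started: dnf reported being 5686MB short, i.e. the toolkit needed that much more than was free. A minimal sketch using Python's `shutil.disk_usage`; the helper names are hypothetical, sizes here are in MiB while dnf prints MB, and the threshold you compare against is up to you (leave some headroom).

```python
import shutil

MIB = 1024 * 1024


def free_mib(path: str = "/") -> int:
    """Free space (as reported by shutil.disk_usage) on path's filesystem, in MiB."""
    return shutil.disk_usage(path).free // MIB


def has_room_for(required_mib: int, path: str = "/") -> bool:
    """True if at least required_mib MiB is free on path's filesystem."""
    return free_mib(path) >= required_mib


# Compare this against the toolkit's size before running `dnf install cuda-toolkit`.
print(f"free on /: {free_mib('/')} MiB")
```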

Reboot, then verify the NVIDIA driver

$ sudo reboot

$ nvidia-smi

Thu Jul 18 13:13:36 2024

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 555.42.06 Driver Version: 555.42.06 CUDA Version: 12.5 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 Tesla T4 Off | 00000000:00:1E.0 Off | 0 |

| N/A 39C P0 27W / 70W | 1MiB / 15360MiB | 8% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

+-----------------------------------------------------------------------------------------+
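To check the same details from a script instead of reading the table, `nvidia-smi` can print selected fields as CSV. The sketch below assumes the `--query-gpu=name,driver_version,memory.total --format=csv,noheader,nounits` flags, which yield lines such as `Tesla T4, 555.42.06, 15360` for the card, driver, and 15360MiB shown above; `GpuInfo`, `parse_gpu_query`, and `query_gpus` are hypothetical names.

```python
import subprocess
from typing import List, NamedTuple


class GpuInfo(NamedTuple):
    name: str
    driver_version: str
    memory_total_mib: int


def parse_gpu_query(csv_text: str) -> List[GpuInfo]:
    """Parse `nvidia-smi --query-gpu=name,driver_version,memory.total
    --format=csv,noheader,nounits` output, one GPU per line."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, driver, mem = (field.strip() for field in line.split(","))
        gpus.append(GpuInfo(name, driver, int(mem)))
    return gpus


def query_gpus() -> List[GpuInfo]:
    """Run nvidia-smi on this host (requires the NVIDIA driver installed above)."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,driver_version,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_query(out)
```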

Verify CUDA toolkit

$ /usr/local/cuda/bin/nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2024 NVIDIA Corporation

Built on Thu_Jun__6_02:18:23_PDT_2024

Cuda compilation tools, release 12.5, V12.5.82

Build cuda_12.5.r12.5/compiler.34385749_0

[Inference]

https://github.com/ggerganov/llama.cpp/blob/master/examples/server/README.md

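See the llama.cpp server README above for reference.

Pull the llama.cpp server-cuda image with Docker

$ docker pull ghcr.io/ggerganov/llama.cpp:server-cuda

Run the llama.cpp server container, mounting the host's model directory into the container with -v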

$ docker run -p 8080:8080 -v ./model/model/:/models --gpus all ghcr.io/ggerganov/llama.cpp:server-cuda -m models/ggml-model-q8_0.gguf -c 512 --host 0.0.0.0 --port 8080 --n-gpu-layers 99

docker: Error response from daemon: could not select device driver "" with capabilities: [[gpu]].

This error means Docker cannot access the GPU yet; the NVIDIA Container Toolkit has to be installed:

https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html

$ curl -s -L https://nvidia.github.io/libnvidia-container/stable/rpm/nvidia-container-toolkit.repo | \

sudo tee /etc/yum.repos.d/nvidia-container-toolkit.repo

$ sudo yum-config-manager --enable nvidia-container-toolkit-experimental

$ sudo yum install -y nvidia-container-toolkit

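$ sudo systemctl restart docker

Then run the docker run command above again. Because requests are sent to the llama.cpp server inside the Docker (web server) container, port 8080 must be opened, for example with an inbound rule in the instance's security group.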
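On EC2 the port is usually opened with an inbound rule on the instance's security group. The sketch below assumes boto3's EC2 `authorize_security_group_ingress` call; the security group ID, CIDR, and helper names are hypothetical placeholders. Limiting the CIDR to the VPC range is enough when, as in the Python client later in this post, the server is reached via its private IP; the Gradio port (7870) would need a similar rule if you open the UI from elsewhere.

```python
def build_ingress_rule(port: int, cidr: str, description: str) -> dict:
    """One IpPermissions entry that opens a single TCP port to a CIDR range."""
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [{"CidrIp": cidr, "Description": description}],
    }


def open_port(ec2_client, group_id: str, port: int, cidr: str,
              description: str = "llama.cpp server") -> None:
    """Add an inbound rule to the security group using a boto3 EC2 client."""
    ec2_client.authorize_security_group_ingress(
        GroupId=group_id,
        IpPermissions=[build_ingress_rule(port, cidr, description)],
    )


# Usage (hypothetical group ID and CIDR; needs boto3 and AWS credentials):
#   import boto3
#   ec2 = boto3.client("ec2", region_name="ap-northeast-2")
#   open_port(ec2, "sg-0123456789abcdef0", 8080, "10.0.0.0/16")
```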

curl

$ curl --request POST \

--url http://localhost:8080/completion \

--header "Content-Type: application/json" \

--data '{"prompt": "Building a website can be done in 10 simple steps:","n_predict": 128}'

{"content":"\n1. Choose a domain name\n2. Register the domain\n3. Choose a host\n4. Buy web space\n5. Set up email\n6. Write your first page\n7. Test your site\n8. Link to others\n9. Add new pages\n10. Update your site\nThe steps above are not in any particular order. You can do the steps in any order that you like. The order below is based on the order that we do things in our office.\n1. Choose a domain name\nThere are many different ways to choose a domain name, but here are a few things to","id_slot":0,"stop":true,"model":"models/ggml-model-f16.gguf","tokens_predicted":128,"tokens_evaluated":14,"generation_settings":{"n_ctx":512,"n_predict":-1,"model":"models/ggml-model-f16.gguf","seed":4294967295,"temperature":0.800000011920929,"dynatemp_range":0.0,"dynatemp_exponent":1.0,"top_k":40,"top_p":0.949999988079071,"min_p":0.05000000074505806,"tfs_z":1.0,"typical_p":1.0,"repeat_last_n":64,"repeat_penalty":1.0,"presence_penalty":0.0,"frequency_penalty":0.0,"penalty_prompt_tokens":[],"use_penalty_prompt_tokens":false,"mirostat":0,"mirostat_tau":5.0,"mirostat_eta":0.10000000149011612,"penalize_nl":false,"stop":[],"n_keep":0,"n_discard":0,"ignore_eos":false,"stream":false,"logit_bias":[],"n_probs":0,"min_keep":0,"grammar":"","samplers":["top_k","tfs_z","typical_p","top_p","min_p","temperature"]},"prompt":"Building a website can be done in 10 simple steps:","truncated":false,"stopped_eos":false,"stopped_word":false,"stopped_limit":true,"stopping_word":"","tokens_cached":141,"timings":{"prompt_n":14,"prompt_ms":32.572,"prompt_per_token_ms":2.326571428571429,"prompt_per_second":429.8170207540218,"predicted_n":128,"predicted_ms":2887.882,"predicted_per_token_ms":22.561578125,"predicted_per_second":44.323140626936976}}

python

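import requests
import gradio as gr

# llama.cpp server endpoint; replace {Private IP Address} with the instance's private IP
LLAMA_SERVER_URL = "http://{Private IP Address}:8080/completion"


def llama_cpp_server_call(prompt):
    headers = {"Content-Type": "application/json"}
    data = {
        "prompt": prompt,
        "n_predict": 256,  # maximum number of tokens to generate
    }
    print(prompt)
    response = requests.post(LLAMA_SERVER_URL, headers=headers, json=data, timeout=120)
    response.raise_for_status()  # surface HTTP errors instead of failing inside .json()
    result = response.json()
    print(result)
    return result.get("content")


# Simple text-in/text-out web UI served on port 7870
demo = gr.Interface(fn=llama_cpp_server_call, inputs="text", outputs="text")
demo.launch(server_name="0.0.0.0", server_port=7870)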
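The `/completion` response also carries a `timings` object, and its throughput figures follow directly from the token counts and durations: in the sample output above, 128 tokens in 2887.882 ms is about 44.3 tokens/s. A small hypothetical helper that recomputes them, which could be called inside `llama_cpp_server_call` for logging:

```python
def generation_speed(response: dict) -> dict:
    """Tokens/second for prompt processing and generation, from /completion timings."""
    t = response["timings"]
    return {
        "prompt_tps": t["prompt_n"] / (t["prompt_ms"] / 1000),
        "predicted_tps": t["predicted_n"] / (t["predicted_ms"] / 1000),
    }


# Values copied from the sample curl response above
sample = {"timings": {"prompt_n": 14, "prompt_ms": 32.572,
                      "predicted_n": 128, "predicted_ms": 2887.882}}
speeds = generation_speed(sample)
print(f"{speeds['predicted_tps']:.1f} tokens/s")  # 44.3 tokens/s
```

The results match the server's own `prompt_per_second` and `predicted_per_second` fields, so in practice you can read those directly; the helper only makes the relationship explicit.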