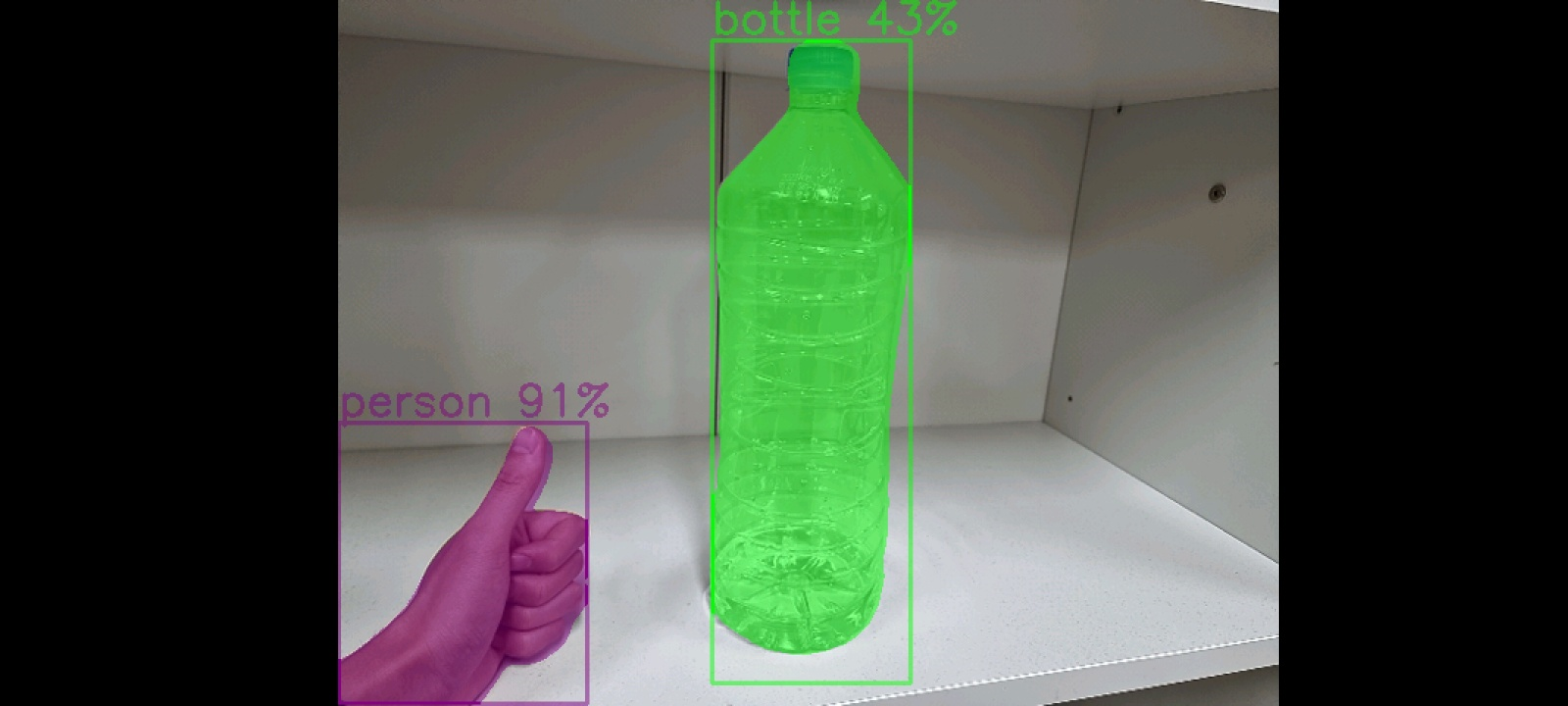

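The image above was not captured in real time. It shows the result of running inference on a JPG image stored in assets and drawing that result on the screen.

The goal is to produce output in the same format as the image above.

In the previous posts we got as far as producing the inference results. All that is left now is to draw them on the screen.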

✔ 1. Implementing the Draw interface

Create a Draw interface as shown below and have the main activity implement it.

```kotlin
interface Draw {
}
```

```kotlin
class MainActivity : ComponentActivity(), CameraBridgeViewBase.CvCameraViewListener2, Inference,
    Draw {
    // Same as before
    // ...
}
```

Next, add the following methods to the Draw interface.

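```kotlin
import org.opencv.core.Core
import org.opencv.core.CvType
import org.opencv.core.Mat
import org.opencv.core.Point
import org.opencv.core.Scalar
import org.opencv.imgproc.Imgproc
import kotlin.math.round

interface Draw {
    companion object {
        // Weight of the painted copy when it is blended with the original frame
        const val ALPHA = 0.5
    }

    fun drawSeg(mat: Mat, lists: MutableList<Result>, labels: Array<String>): Mat {
        // Copy of the frame on which each object's mask is painted in a solid color
        val maskImg = mat.clone()
        if (lists.isEmpty()) return maskImg

        lists.forEach {
            val box = it.box
            val color = getColor(it.index)
            val textPoint = Point(box.x.toDouble(), box.y.toDouble() - 5)
            val text = "${labels[it.index]} ${round(it.confidence * 100).toInt()}%"

            // Bounding box and "label NN%" are drawn on the original frame
            Imgproc.rectangle(mat, box, color, 2)
            Imgproc.putText(mat, text, textPoint, Imgproc.FONT_HERSHEY_SIMPLEX, 1.0, color, 2)

            // 0/1 mask (CV_8UC1) with the same size as the box
            val cropMask = it.maskMat
            // ROI header into maskImg: writing to it modifies maskImg
            val cropMaskImg = Mat(maskImg, box)

            // Stack the mask into 3 channels to match the RGB frame
            val cropMaskRGB = Mat(cropMask.size(), CvType.CV_8UC3)
            val list = List(3) { cropMask.clone() }
            Core.merge(list, cropMaskRGB)

            // temp1 = 1 - mask: keep background pixels, zero out object pixels
            val temp1 = Mat.zeros(cropMaskRGB.size(), cropMaskRGB.type())
            Core.add(temp1, Scalar(1.0, 1.0, 1.0), temp1)
            Core.subtract(temp1, cropMaskRGB, temp1)
            Core.multiply(cropMaskImg, temp1, cropMaskImg)

            // temp2 = mask * color: class color on object pixels, 0 elsewhere
            val temp2 = Mat()
            Core.multiply(cropMaskRGB, color, temp2)
            Core.add(cropMaskImg, temp2, cropMaskImg)

            cropMaskImg.release()
            temp1.release()
            temp2.release()
            cropMaskRGB.release()
            cropMask.release() // same object as it.maskMat
            list.forEach { m -> m.release() }
        }

        // result = ALPHA * maskImg + (1 - ALPHA) * mat
        val resultMat = Mat(mat.size(), mat.type())
        Core.addWeighted(maskImg, ALPHA, mat, 1 - ALPHA, 0.0, resultMat, CvType.CV_8UC3)
        maskImg.release()
        return resultMat
    }

    // Colors are in RGB order because the frame is converted to RGB before drawSeg is called
    private fun getColor(index: Int): Scalar {
        return when (index) {
            // WHITE
            45, 18, 19, 22, 30, 42, 43, 44, 61, 71, 72 -> Scalar(255.0, 255.0, 255.0)
            // BLUE
            1, 3, 14, 25, 37, 38, 79 -> Scalar(0.0, 0.0, 255.0)
            // RED
            2, 9, 10, 11, 32, 47, 49, 51, 52 -> Scalar(255.0, 0.0, 0.0)
            // YELLOW
            5, 23, 46, 48 -> Scalar(255.0, 255.0, 0.0)
            // GRAY
            6, 13, 34, 35, 36, 54, 59, 60, 73, 77, 78 -> Scalar(128.0, 128.0, 128.0)
            // BLACK
            7, 24, 26, 27, 28, 62, 64, 65, 66, 67, 68, 69, 74, 75 -> Scalar(0.0, 0.0, 0.0)
            // GREEN
            12, 29, 33, 39, 41, 58, 50 -> Scalar(0.0, 255.0, 0.0)
            // DARK GRAY
            15, 16, 17, 20, 21, 31, 40, 55, 57, 63 -> Scalar(64.0, 64.0, 64.0)
            // LIGHT GRAY
            70, 76 -> Scalar(192.0, 192.0, 192.0)
            // PURPLE
            else -> Scalar(128.0, 0.0, 128.0)
        }
    }
}
```

The idea is simple. The final output mask determines which pixels get painted: wherever the mask value is 1, the pixel is painted over with a color. The color for each class is picked arbitrarily in the getColor method. The painted copy is then blended with the original frame using Core.addWeighted with weight ALPHA, so objects appear tinted rather than fully covered.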
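To make the per-pixel arithmetic in drawSeg concrete, here is a minimal sketch of the same two steps on plain integer arrays instead of OpenCV Mats. The helper names `paint`, `blend` and `overlayPixel` are hypothetical. A pixel is assumed to be an RGB `IntArray`, and the mask value is assumed to be 0 or 1, as produced by Segment.maskOutput. The rounding in `blend` only approximates OpenCV's saturating conversion.

```kotlin
// Sketch of drawSeg's per-pixel math on plain arrays (hypothetical helpers, no OpenCV).
// A pixel is an RGB IntArray of size 3; a mask value is 0 (background) or 1 (object).

/** Step 1: pixel * (1 - mask) + color * mask, i.e. paint object pixels with the class color. */
fun paint(pixel: IntArray, mask: Int, color: IntArray): IntArray =
    IntArray(3) { c -> pixel[c] * (1 - mask) + color[c] * mask }

/** Step 2: alpha * painted + (1 - alpha) * original, rounded and clamped to 0..255 (approximates Core.addWeighted). */
fun blend(painted: IntArray, original: IntArray, alpha: Double): IntArray =
    IntArray(3) { c ->
        Math.round(alpha * painted[c] + (1 - alpha) * original[c]).toInt().coerceIn(0, 255)
    }

/** Both steps for one pixel, with the same default ALPHA = 0.5 as the Draw interface. */
fun overlayPixel(pixel: IntArray, mask: Int, color: IntArray, alpha: Double = 0.5): IntArray =
    blend(paint(pixel, mask, color), pixel, alpha)
```

For a gray pixel (100, 100, 100) under a red mask (255, 0, 0), `overlayPixel` returns (178, 50, 50). With mask value 0, it returns the pixel unchanged. drawSeg first paints the whole maskImg copy and then blends once at the end, which gives the same per-pixel result.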

✔ 2. Draw

Now all that is needed is the code that draws the result on the screen.

Add the part below to the onCameraFrame method of the main activity from the previous post.

```kotlin
override fun onCameraFrame(inputFrame: CameraBridgeViewBase.CvCameraViewFrame?): Mat {
    val frameMat = inputFrame!!.rgba()

    // Added part
    // Run detection + segmentation on the RGBA frame
    val results = detect(frameMat, net, labels)
    // drawSeg works on a 3-channel RGB frame
    Imgproc.cvtColor(frameMat, frameMat, Imgproc.COLOR_RGBA2RGB)
    val outMat = drawSeg(frameMat, results, labels)
    frameMat.release()

    return outMat
}
```

Below is a photo of the result.

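Segmentation also has to build and paint a mask for every detected object, so the frame rate feels somewhat slower than with detection alone. If real-time output is not required, another option is to modify the code above to take a still photo and run inference on that instead.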
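To check where the time actually goes (inference in `detect` versus mask building and painting in `drawSeg`), it can help to time the stages separately. Below is a minimal sketch of a hypothetical `StageTimer` (not part of the original project). It accumulates wall-clock time per named stage using `System.nanoTime`.

```kotlin
/** Hypothetical helper: accumulates wall-clock time per named stage. */
class StageTimer {
    private val totalsNs = linkedMapOf<String, Long>()
    private val counts = linkedMapOf<String, Int>()

    /** Runs [block], adds its duration to [stage], and returns the block's result. */
    fun <T> time(stage: String, block: () -> T): T {
        val start = System.nanoTime()
        try {
            return block()
        } finally {
            totalsNs[stage] = (totalsNs[stage] ?: 0L) + (System.nanoTime() - start)
            counts[stage] = (counts[stage] ?: 0) + 1
        }
    }

    /** Average milliseconds per call of [stage], or null if it was never timed. */
    fun averageMs(stage: String): Double? {
        val total = totalsNs[stage] ?: return null
        val n = counts.getValue(stage)
        return total / 1_000_000.0 / n
    }

    /** One line per stage in first-seen order, e.g. "detect: 12.3 ms". */
    fun report(): String =
        totalsNs.keys.joinToString("\n") { stage -> "$stage: %.1f ms".format(averageMs(stage)) }
}
```

Inside onCameraFrame you could wrap the two calls, for example `val results = timer.time("detect") { detect(frameMat, net, labels) }` and `val outMat = timer.time("drawSeg") { drawSeg(frameMat, results, labels) }`, and log `timer.report()` every few seconds.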

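As the code shows, inference runs synchronously inside onCameraFrame. Strictly speaking, JavaCameraView calls onCameraFrame on its own camera worker thread rather than on the main (UI) thread. However, a new frame cannot be shown until inference on the current frame has finished, so the screen update rate drops noticeably.

Handling this properly with asynchronous processing will probably be the topic of the next post.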
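The following is only an illustration, and the next post may take a different approach. One common pattern is to hand frames to a single background worker and drop any frame that arrives while the previous one is still being processed. Meanwhile, the camera callback keeps drawing the most recent finished result. `FrameSkippingWorker` below is a hypothetical, OpenCV-free sketch of that idea using `java.util.concurrent`.

```kotlin
import java.util.concurrent.ExecutorService
import java.util.concurrent.Executors
import java.util.concurrent.TimeUnit
import java.util.concurrent.atomic.AtomicBoolean
import java.util.concurrent.atomic.AtomicReference

/**
 * Hypothetical helper: runs [infer] on one background thread and drops frames
 * that arrive while a previous frame is still being processed.
 */
class FrameSkippingWorker<F, R : Any>(private val infer: (F) -> R) {
    private val busy = AtomicBoolean(false)
    private val latest = AtomicReference<R?>(null)
    private val executor: ExecutorService = Executors.newSingleThreadExecutor()

    /** Returns true if the frame was queued, false if it was dropped because the worker is busy. */
    fun submit(frame: F): Boolean {
        if (!busy.compareAndSet(false, true)) return false
        executor.execute {
            try {
                latest.set(infer(frame))
            } finally {
                busy.set(false) // ready for the next frame
            }
        }
        return true
    }

    /** Most recent finished result, or null if nothing has finished yet. */
    fun latestResult(): R? = latest.get()

    /** Stops accepting work and waits for the running task; returns true if it finished in time. */
    fun shutdown(timeoutMs: Long = 1000): Boolean {
        executor.shutdown()
        return executor.awaitTermination(timeoutMs, TimeUnit.MILLISECONDS)
    }
}
```

With OpenCV Mats, the frame would likely have to be copied (for example with `clone()`) before submission, because the camera view reuses its frame buffer. Also, drawSeg as written releases each Result's maskMat. A result that is drawn on several frames would therefore need its own copies.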

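Below is the full code.

- Main activity

```kotlin
import android.Manifest
import android.content.pm.PackageManager
import android.os.Bundle
import android.view.ViewGroup
import android.widget.Toast
import androidx.activity.ComponentActivity
import androidx.activity.compose.setContent
import androidx.activity.result.contract.ActivityResultContracts
import androidx.compose.foundation.layout.fillMaxSize
import androidx.compose.ui.Modifier
import androidx.compose.ui.viewinterop.AndroidView
import androidx.core.content.ContextCompat
import org.opencv.android.CameraBridgeViewBase
import org.opencv.android.JavaCameraView
import org.opencv.android.OpenCVLoader
import org.opencv.core.Mat
import org.opencv.dnn.Net
import org.opencv.imgproc.Imgproc

class MainActivity : ComponentActivity(), CameraBridgeViewBase.CvCameraViewListener2, Inference,
    Draw {
    companion object {
        // front = 1, back = 0
        const val CAMERA_ID = 0
    }

    private lateinit var net: Net
    private lateinit var labels: Array<String>

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setPermissions()

        val openCVCameraView = (JavaCameraView(this, CAMERA_ID) as CameraBridgeViewBase).apply {
            setCameraPermissionGranted()
            enableView()
            setCvCameraViewListener(this@MainActivity)
            setMaxFrameSize(640, 640)
            layoutParams = ViewGroup.LayoutParams(
                ViewGroup.LayoutParams.MATCH_PARENT, ViewGroup.LayoutParams.MATCH_PARENT
            )
        }

        net = loadModel(assets, filesDir.toString())
        labels = loadLabel(assets)

        setContent {
            AndroidView(modifier = Modifier.fillMaxSize(), factory = { openCVCameraView })
        }
    }

    private fun setPermissions() {
        val requestPermissionLauncher =
            registerForActivityResult(ActivityResultContracts.RequestPermission()) {
                if (!it) {
                    Toast.makeText(this, "This app cannot be used unless the permission is granted!", Toast.LENGTH_SHORT).show()
                    finish()
                }
            }

        val permissions = listOf(Manifest.permission.CAMERA)
        permissions.forEach {
            if (ContextCompat.checkSelfPermission(this, it) != PackageManager.PERMISSION_GRANTED) {
                requestPermissionLauncher.launch(it)
            }
        }

        // Load the OpenCV native library before any OpenCV class is used
        OpenCVLoader.initDebug()
    }

    override fun onCameraViewStarted(width: Int, height: Int) {
    }

    override fun onCameraViewStopped() {
    }

    override fun onCameraFrame(inputFrame: CameraBridgeViewBase.CvCameraViewFrame?): Mat {
        val frameMat = inputFrame!!.rgba()
        val results = detect(frameMat, net, labels)
        // drawSeg works on a 3-channel RGB frame
        Imgproc.cvtColor(frameMat, frameMat, Imgproc.COLOR_RGBA2RGB)
        val outMat = drawSeg(frameMat, results, labels)
        frameMat.release()
        return outMat
    }
}
```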

- The Load interface, which loads the model and the label data from assets

```kotlin
import android.content.res.AssetManager
import org.opencv.dnn.Dnn
import org.opencv.dnn.Net
import java.io.File
import java.io.FileOutputStream

interface Load {
    companion object {
        const val FILE_NAME = "yolov8n-seg.onnx"
        const val LABEL_NAME = "yolov8n.txt"
    }

    fun loadModel(assets: AssetManager, fileDir: String): Net {
        // readNetFromONNX is given a file path here, so copy the model out of assets first
        val outputFile = File("$fileDir/$FILE_NAME")
        assets.open(FILE_NAME).use { inputStream ->
            FileOutputStream(outputFile).use { outputStream ->
                inputStream.copyTo(outputStream)
            }
        }
        return Dnn.readNetFromONNX("$fileDir/$FILE_NAME")
    }

    // One class name per line, in class-index order
    fun loadLabel(assets: AssetManager): Array<String> =
        assets.open(LABEL_NAME).bufferedReader().use { reader ->
            reader.readLines().toTypedArray()
        }
}
```

- The Inference interface, which runs inference

```kotlin
import org.opencv.core.Core
import org.opencv.core.CvType
import org.opencv.core.Mat
import org.opencv.core.MatOfFloat
import org.opencv.core.MatOfInt
import org.opencv.core.MatOfRect2d
import org.opencv.core.Rect
import org.opencv.core.Rect2d
import org.opencv.core.Size
import org.opencv.dnn.Dnn
import org.opencv.dnn.Net
import org.opencv.imgproc.Imgproc

interface Inference : Load, Segment {
    companion object {
        const val OUTPUT_NAME_0 = "output0"   // boxes, class scores, mask coefficients
        const val OUTPUT_NAME_1 = "output1"   // 32 prototype masks (160 x 160)
        const val INPUT_SIZE = 640
        const val SCALE_FACTOR = 1 / 255.0
        const val OUTPUT_SIZE = 8400          // number of candidate boxes
        const val OUTPUT_MASK_SIZE = 160
        const val CONFIDENCE_THRESHOLD = 0.3f
        const val NMS_THRESHOLD = 0.5f
    }

    fun detect(mat: Mat, net: Net, labels: Array<String>): MutableList<Result> {
        // Resize to 640 x 640 (no letterboxing), drop alpha, scale pixels to [0, 1]
        val inputMat = Mat()
        Imgproc.resize(mat, inputMat, Size(INPUT_SIZE.toDouble(), INPUT_SIZE.toDouble()))
        Imgproc.cvtColor(inputMat, inputMat, Imgproc.COLOR_RGBA2RGB)
        inputMat.convertTo(inputMat, CvType.CV_32FC3)
        val blob = Dnn.blobFromImage(inputMat, SCALE_FACTOR)

        net.setInput(blob)
        val output0 = Mat()
        val output1 = Mat()
        val outputList = arrayListOf(output0, output1)
        val outputNameList = arrayListOf(OUTPUT_NAME_0, OUTPUT_NAME_1)
        // forward() refills outputList with the output Mats, so read them from the list
        net.forward(outputList, outputNameList)

        val lists = postProcess(outputList[0], outputList[1], labels.size, mat.width(), mat.height())

        blob.release()
        inputMat.release()
        output0.release()
        output1.release()
        return lists
    }

    private fun postProcess(
        output0: Mat, output1: Mat, labelSize: Int, width: Int, height: Int
    ): MutableList<Result> {
        val lists = boxOutput(output0, labelSize)     // boxes in 640 x 640 input space
        resizeBox(lists, width, height)               // boxes in frame pixels
        maskOutput(lists, output1, width, height)     // binary mask per box
        output0.release()
        output1.release()
        return lists
    }

    private fun boxOutput(output: Mat, labelSize: Int): MutableList<Result> {
        // output0: [1, 4 + labelSize + 32, 8400] -> one row per candidate box:
        // [cx, cy, w, h, score_0 .. score_(labelSize - 1), 32 mask coefficients]
        val detections = output.reshape(1, output.total().toInt() / OUTPUT_SIZE).t()

        val boxes = Array(detections.rows()) { Rect2d() }
        val maxScores = Array(detections.rows()) { 0f }
        val indexes = Array(detections.rows()) { 0 }

        for (i in 0 until detections.rows()) {
            val scores = detections.row(i).colRange(4, 4 + labelSize)
            val max = Core.minMaxLoc(scores)
            val xPos = detections.get(i, 0)[0]
            val yPos = detections.get(i, 1)[0]
            val width = detections.get(i, 2)[0]
            val height = detections.get(i, 3)[0]
            // Center format -> top-left corner, clamped at 0
            val left = 0.0.coerceAtLeast(xPos - width / 2.0)
            val top = 0.0.coerceAtLeast(yPos - height / 2.0)

            boxes[i] = Rect2d(left, top, width, height)
            maxScores[i] = max.maxVal.toFloat()   // best class score
            indexes[i] = max.maxLoc.x.toInt()     // best class index
            scores.release()
        }

        // Per-class NMS: boxes of different classes do not suppress each other
        val rects = MatOfRect2d(*boxes)
        val floats = MatOfFloat(*maxScores.toFloatArray())
        val ints = MatOfInt(*indexes.toIntArray())
        val indices = MatOfInt()
        Dnn.NMSBoxesBatched(rects, floats, ints, CONFIDENCE_THRESHOLD, NMS_THRESHOLD, indices)

        val list = mutableListOf<Result>()
        // Only iterate when NMS kept at least one box
        if (!indices.empty()) {
            indices.toList().forEach {
                val scores = detections.row(it).colRange(4, 4 + labelSize)
                val max = Core.minMaxLoc(scores)
                val xPos = detections.get(it, 0)[0]
                val yPos = detections.get(it, 1)[0]
                val width = detections.get(it, 2)[0]
                val height = detections.get(it, 3)[0]
                val x = 0.0.coerceAtLeast(xPos - width / 2.0).toInt()
                val y = 0.0.coerceAtLeast(yPos - height / 2.0).toInt()
                val w = INPUT_SIZE.toDouble().coerceAtMost(width).toInt()
                val h = INPUT_SIZE.toDouble().coerceAtMost(height).toInt()
                val score = max.maxVal.toFloat()
                val index = max.maxLoc.x.toInt()
                // The 32 mask coefficients; Segment.maskOutput turns them into a mask
                val mask = detections.row(it).colRange(4 + labelSize, detections.cols())
                list.add(Result(Rect(x, y, w, h), score, index, mask))
                scores.release()
            }
        }

        rects.release()
        floats.release()
        ints.release()
        indices.release()
        detections.release()
        return list
    }

    private fun resizeBox(list: MutableList<Result>, width: Int, height: Int) {
        // Scale boxes from the 640 x 640 input back to frame pixels and clip them to the frame
        list.forEach {
            val box = it.box
            val x = box.x * width / INPUT_SIZE
            val y = box.y * height / INPUT_SIZE
            var w = box.width * width / INPUT_SIZE
            var h = box.height * height / INPUT_SIZE
            if (w > width) w = width
            if (h > height) h = height
            if (x + w > width) w = width - x
            if (y + h > height) h = height - y
            it.box = Rect(x, y, w, h)
        }
    }
}
```

- The Segment interface, which builds the result masks

```kotlin
import org.opencv.core.Core
import org.opencv.core.CvType
import org.opencv.core.Mat
import org.opencv.core.Rect
import org.opencv.core.Scalar
import org.opencv.core.Size
import org.opencv.imgproc.Imgproc

interface Segment {
    fun maskOutput(
        boxOutputs: MutableList<Result>,
        output1: Mat,
        matWidth: Int,
        matHeight: Int
    ) {
        if (boxOutputs.isEmpty()) return

        // N x 32 matrix of mask coefficients (one row per detected object)
        val maskPredictionList = boxOutputs.map { it.maskMat }
        val maskPredictionMat = Mat()
        Core.vconcat(maskPredictionList, maskPredictionMat)

        // output1: [1, 32, 160, 160] -> 32 x (160 * 160) prototype masks
        val reshapeSize = Inference.OUTPUT_MASK_SIZE * Inference.OUTPUT_MASK_SIZE
        val outputMat = output1.reshape(1, output1.total().toInt() / reshapeSize)

        // (N x 32) * (32 x 25600) = N x 25600, then sigmoid -> per-pixel probabilities
        val matMul = Mat()
        Core.gemm(maskPredictionMat, outputMat, 1.0, Mat(), 0.0, matMul)
        val masks = sigmoid(matMul)

        // Boxes expressed in the 160 x 160 mask grid
        val resizedBoxes = resizeBoxes(boxOutputs, matWidth, matHeight)
        // Blur kernel size = frame pixels per mask cell
        val blurSize = Size(
            (matWidth / Inference.OUTPUT_MASK_SIZE).toDouble(),
            (matHeight / Inference.OUTPUT_MASK_SIZE).toDouble()
        )

        for (i in resizedBoxes.indices) {
            val w = boxOutputs[i].box.width
            val h = boxOutputs[i].box.height

            // Row i -> 160 x 160 mask, cropped to the object's box
            val mask = masks.row(i).reshape(1, Inference.OUTPUT_MASK_SIZE)
            val resizedCropMask = Mat(mask, resizedBoxes[i])

            // Upscale the crop to the box size in frame pixels, smooth it, binarize at 0.5
            val cropMask = Mat()
            val blurMask = Mat()
            val thresholdMask = Mat()
            val resizeSize = Size(w.toDouble(), h.toDouble())
            Imgproc.resize(resizedCropMask, cropMask, resizeSize, 0.0, 0.0, Imgproc.INTER_LINEAR)
            Imgproc.blur(cropMask, blurMask, blurSize)
            Imgproc.threshold(blurMask, thresholdMask, 0.5, 1.0, Imgproc.THRESH_BINARY)
            // 0/1 mask as CV_8UC1, as expected by Draw.drawSeg
            thresholdMask.convertTo(thresholdMask, CvType.CV_8UC1)

            // Replace the 32 coefficients with the final binary mask
            boxOutputs[i].maskMat.release()
            boxOutputs[i].maskMat = thresholdMask

            mask.release()
            resizedCropMask.release()
            cropMask.release()
            blurMask.release()
        }

        maskPredictionMat.release()
        output1.release()
        outputMat.release()
        matMul.release()
        masks.release()
        maskPredictionList.forEach { it.release() }
    }

    // Element-wise sigmoid: 1 / (1 + exp(-x))
    private fun sigmoid(mat: Mat): Mat {
        val oneMat = Mat.ones(mat.size(), mat.type())
        val mulMat = Mat()
        val expMat = Mat()
        val outMat = Mat()
        Core.multiply(mat, Scalar(-1.0), mulMat)
        Core.exp(mulMat, expMat)
        Core.add(oneMat, expMat, outMat)
        Core.divide(oneMat, outMat, outMat)
        oneMat.release()
        mulMat.release()
        expMat.release()
        return outMat
    }

    // Scale boxes from frame pixels to the 160 x 160 mask grid
    private fun resizeBoxes(
        boxOutputs: MutableList<Result>,
        width: Int,
        height: Int
    ): MutableList<Rect> {
        val resizedBoxes = mutableListOf<Rect>()
        boxOutputs.forEach {
            val rect = it.box
            val x = rect.x * Inference.OUTPUT_MASK_SIZE / width
            val w = rect.width * Inference.OUTPUT_MASK_SIZE / width
            val y = rect.y * Inference.OUTPUT_MASK_SIZE / height
            val h = rect.height * Inference.OUTPUT_MASK_SIZE / height
            resizedBoxes.add(Rect(x, y, w, h))
        }
        return resizedBoxes
    }
}
```

- The Draw interface, which draws the results on the screen

```kotlin
import org.opencv.core.Core
import org.opencv.core.CvType
import org.opencv.core.Mat
import org.opencv.core.Point
import org.opencv.core.Scalar
import org.opencv.imgproc.Imgproc
import kotlin.math.round

interface Draw {
    companion object {
        // Weight of the painted copy when it is blended with the original frame
        const val ALPHA = 0.5
    }

    fun drawSeg(mat: Mat, lists: MutableList<Result>, labels: Array<String>): Mat {
        // Copy of the frame on which each object's mask is painted in a solid color
        val maskImg = mat.clone()
        if (lists.isEmpty()) return maskImg

        lists.forEach {
            val box = it.box
            val color = getColor(it.index)
            val textPoint = Point(box.x.toDouble(), box.y.toDouble() - 5)
            val text = "${labels[it.index]} ${round(it.confidence * 100).toInt()}%"

            // Bounding box and "label NN%" are drawn on the original frame
            Imgproc.rectangle(mat, box, color, 2)
            Imgproc.putText(mat, text, textPoint, Imgproc.FONT_HERSHEY_SIMPLEX, 1.0, color, 2)

            // 0/1 mask (CV_8UC1) with the same size as the box
            val cropMask = it.maskMat
            // ROI header into maskImg: writing to it modifies maskImg
            val cropMaskImg = Mat(maskImg, box)

            // Stack the mask into 3 channels to match the RGB frame
            val cropMaskRGB = Mat(cropMask.size(), CvType.CV_8UC3)
            val list = List(3) { cropMask.clone() }
            Core.merge(list, cropMaskRGB)

            // temp1 = 1 - mask: keep background pixels, zero out object pixels
            val temp1 = Mat.zeros(cropMaskRGB.size(), cropMaskRGB.type())
            Core.add(temp1, Scalar(1.0, 1.0, 1.0), temp1)
            Core.subtract(temp1, cropMaskRGB, temp1)
            Core.multiply(cropMaskImg, temp1, cropMaskImg)

            // temp2 = mask * color: class color on object pixels, 0 elsewhere
            val temp2 = Mat()
            Core.multiply(cropMaskRGB, color, temp2)
            Core.add(cropMaskImg, temp2, cropMaskImg)

            cropMaskImg.release()
            temp1.release()
            temp2.release()
            cropMaskRGB.release()
            cropMask.release() // same object as it.maskMat
            list.forEach { m -> m.release() }
        }

        // result = ALPHA * maskImg + (1 - ALPHA) * mat
        val resultMat = Mat(mat.size(), mat.type())
        Core.addWeighted(maskImg, ALPHA, mat, 1 - ALPHA, 0.0, resultMat, CvType.CV_8UC3)
        maskImg.release()
        return resultMat
    }

    // Colors are in RGB order because the frame is converted to RGB before drawSeg is called
    private fun getColor(index: Int): Scalar {
        return when (index) {
            // WHITE
            45, 18, 19, 22, 30, 42, 43, 44, 61, 71, 72 -> Scalar(255.0, 255.0, 255.0)
            // BLUE
            1, 3, 14, 25, 37, 38, 79 -> Scalar(0.0, 0.0, 255.0)
            // RED
            2, 9, 10, 11, 32, 47, 49, 51, 52 -> Scalar(255.0, 0.0, 0.0)
            // YELLOW
            5, 23, 46, 48 -> Scalar(255.0, 255.0, 0.0)
            // GRAY
            6, 13, 34, 35, 36, 54, 59, 60, 73, 77, 78 -> Scalar(128.0, 128.0, 128.0)
            // BLACK
            7, 24, 26, 27, 28, 62, 64, 65, 66, 67, 68, 69, 74, 75 -> Scalar(0.0, 0.0, 0.0)
            // GREEN
            12, 29, 33, 39, 41, 58, 50 -> Scalar(0.0, 255.0, 0.0)
            // DARK GRAY
            15, 16, 17, 20, 21, 31, 40, 55, 57, 63 -> Scalar(64.0, 64.0, 64.0)
            // LIGHT GRAY
            70, 76 -> Scalar(192.0, 192.0, 192.0)
            // PURPLE
            else -> Scalar(128.0, 0.0, 128.0)
        }
    }
}
```

- Finally, the Result data class that holds each output.

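```kotlin
import org.opencv.core.Mat
import org.opencv.core.Rect

// box: bounding box in frame pixels; confidence: best class score; index: class index
// maskMat: first the 32 mask coefficients, then (after Segment.maskOutput) the 0/1 mask of the box
data class Result(var box: Rect, val confidence: Float, val index: Int, var maskMat: Mat)
```

For the model files and more detailed code, refer to the GitHub repository below.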

https://github.com/Yurve/YOLOv8_Seg_android
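Most of the post-processing in Inference and Segment is coordinate bookkeeping between three spaces: the 640 × 640 network input, the camera frame, and the 160 × 160 prototype-mask grid. The sketch below reproduces those conversions from boxOutput, resizeBox and Segment.resizeBoxes in plain Kotlin with the same integer arithmetic, so they can be checked without a device. `IntRect`, `decodeBox`, `toFrame` and `toMaskGrid` are hypothetical stand-ins for OpenCV's `Rect` and the original methods.

```kotlin
// Hypothetical plain-Kotlin versions of the coordinate conversions in
// boxOutput, resizeBox and Segment.resizeBoxes (same integer arithmetic).

val INPUT_SIZE = 640        // network input is INPUT_SIZE x INPUT_SIZE
val OUTPUT_MASK_SIZE = 160  // prototype masks are OUTPUT_MASK_SIZE x OUTPUT_MASK_SIZE

/** Stand-in for org.opencv.core.Rect: top-left corner plus size. */
data class IntRect(val x: Int, val y: Int, val width: Int, val height: Int)

/** (center x, center y, w, h) in input space -> top-left rect, clamped as in boxOutput. */
fun decodeBox(cx: Double, cy: Double, w: Double, h: Double): IntRect = IntRect(
    maxOf(0.0, cx - w / 2.0).toInt(),
    maxOf(0.0, cy - h / 2.0).toInt(),
    minOf(INPUT_SIZE.toDouble(), w).toInt(),
    minOf(INPUT_SIZE.toDouble(), h).toInt()
)

/** Input space -> frame pixels, clipped to the frame (resizeBox). */
fun toFrame(box: IntRect, frameW: Int, frameH: Int): IntRect {
    val x = box.x * frameW / INPUT_SIZE
    val y = box.y * frameH / INPUT_SIZE
    var w = box.width * frameW / INPUT_SIZE
    var h = box.height * frameH / INPUT_SIZE
    if (w > frameW) w = frameW
    if (h > frameH) h = frameH
    if (x + w > frameW) w = frameW - x
    if (y + h > frameH) h = frameH - y
    return IntRect(x, y, w, h)
}

/** Frame pixels -> 160 x 160 mask grid (Segment.resizeBoxes). */
fun toMaskGrid(box: IntRect, frameW: Int, frameH: Int): IntRect = IntRect(
    box.x * OUTPUT_MASK_SIZE / frameW,
    box.y * OUTPUT_MASK_SIZE / frameH,
    box.width * OUTPUT_MASK_SIZE / frameW,
    box.height * OUTPUT_MASK_SIZE / frameH
)
```

Take a 128 × 64 box centered at (320, 320) in the input. On a 1280 × 720 frame it maps to `IntRect(x=512, y=324, width=256, height=72)`, and on the mask grid it maps to `IntRect(x=64, y=72, width=32, height=16)`. The grid result matches dividing the input-space box by 4 (640 / 160). Because the input is stretched to 640 × 640 without letterboxing, x and y are scaled independently.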