📖 Introduction

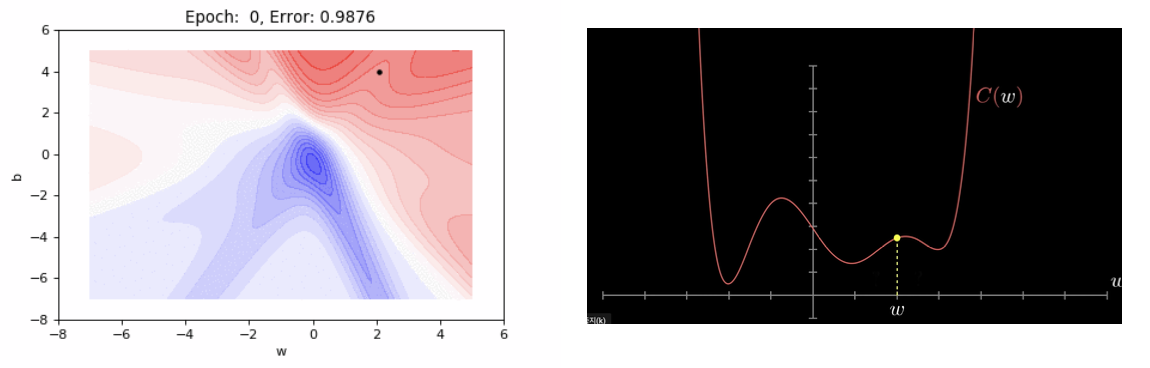

- Gradient Descent

  - First-order iterative optimization algorithm for finding a local minimum of a differentiable function.
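The definition above can be made concrete with a minimal sketch. The function `gradient_descent`, the learning rate `lr`, the step count, and the quadratic objective are all illustrative assumptions, not taken from the lecture.

```python
def gradient_descent(grad, x0, lr=0.1, steps=100):
    """Repeatedly step against the gradient, starting from x0."""
    x = x0
    for _ in range(steps):
        # First-order update: only the gradient (no second derivative) is used.
        x = x - lr * grad(x)
    return x


# Hypothetical objective: f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
x_min = gradient_descent(lambda x: 2 * (x - 3), x0=0.0)
print(x_min)  # converges toward the minimizer x = 3
```

For a non-convex function, the same loop would only reach a local minimum that depends on the starting point `x0`.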

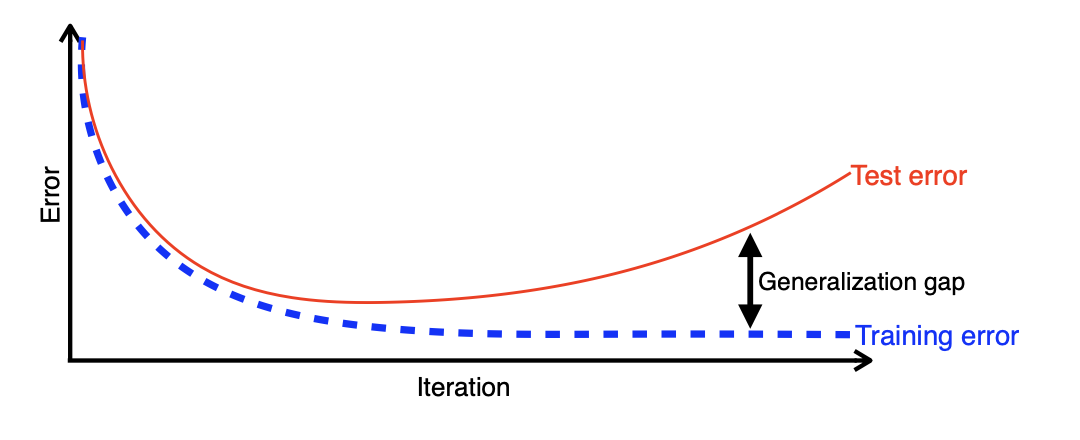

📖 Generalization

- How well the learned model will behave on unseen data.

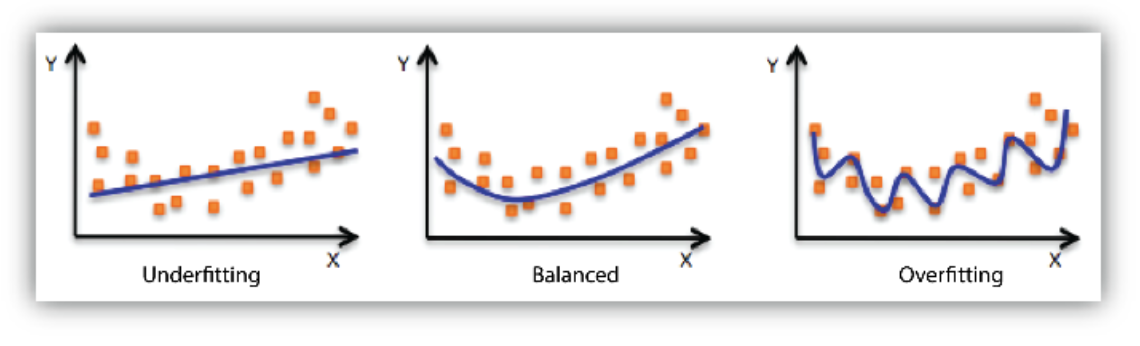

📖 Underfitting vs. Overfitting

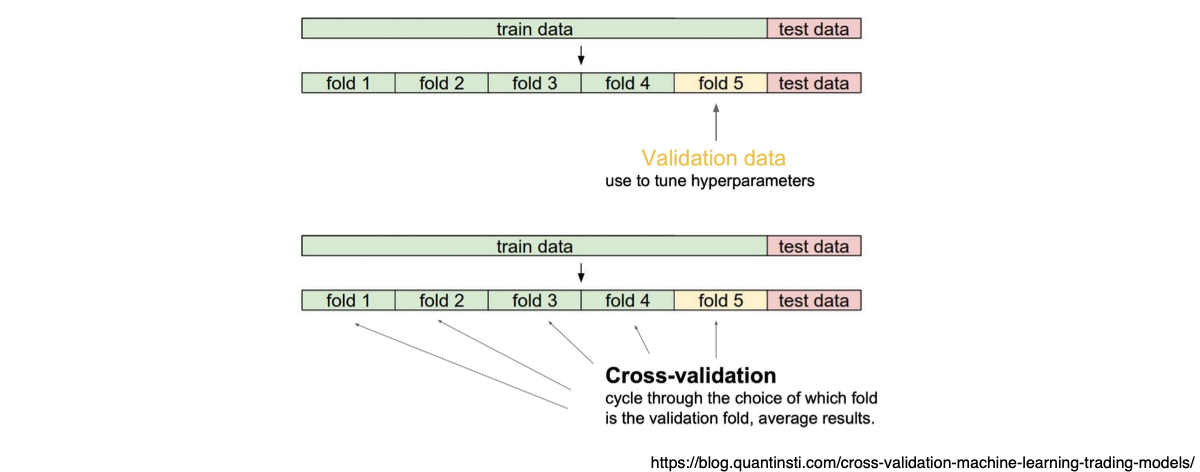

📖 Cross-validation

- Cross-validation is a model validation technique for assessing how the model will generalize to an independent (test) data set.

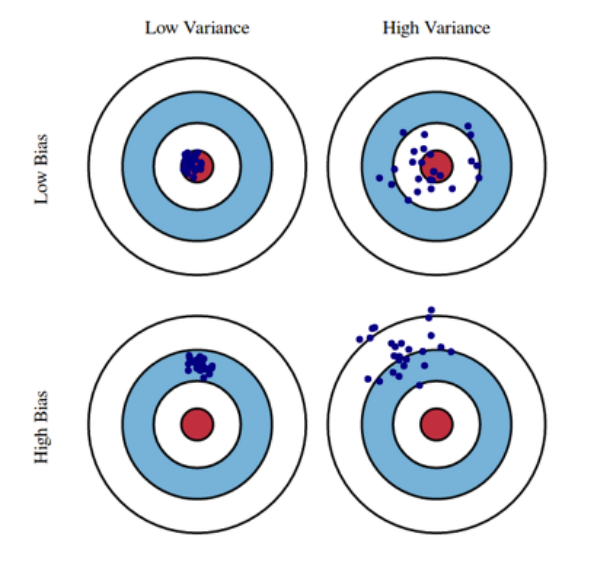

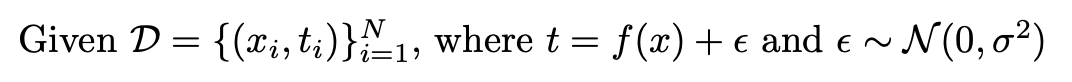
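As a rough sketch of how k-fold cross-validation could be carried out, the example below splits the data into k folds, holds out each fold once for validation, and averages the validation error. The helper names `k_fold_indices` and `cross_validate`, and the toy "model" that simply predicts the training mean, are assumptions made for illustration.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs for k contiguous folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


def cross_validate(ys, k):
    """Average validation MSE of a toy model that predicts the training mean."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(ys), k):
        mean = sum(ys[i] for i in train_idx) / len(train_idx)
        mse = sum((ys[i] - mean) ** 2 for i in val_idx) / len(val_idx)
        scores.append(mse)
    return sum(scores) / len(scores)


folds = list(k_fold_indices(10, 5))
for train_idx, val_idx in folds:
    print("train:", train_idx, "val:", val_idx)
```

In practice the data is usually shuffled before splitting, and a separate test set is kept untouched until the very end.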

📖 Bias and Variance

📖 Bias and Variance Tradeoff

- The cost we are minimizing can be decomposed into three parts: bias², variance, and noise.
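The decomposition can be written out as follows. This is the standard form for squared error, under an assumed setup that the post does not spell out: the target is $t = f(x) + \epsilon$ with zero-mean noise of variance $\sigma^2$, and $\hat{f}$ is the model fitted on a randomly drawn training set, independent of the noise in the test target.

```latex
% Assumed setup: t = f(x) + \epsilon, E[\epsilon] = 0, Var(\epsilon) = \sigma^2.
% The expectation is over training sets and the noise in t.
\mathbb{E}\big[(t - \hat{f})^2\big]
  = \underbrace{\big(f - \mathbb{E}[\hat{f}]\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f} - \mathbb{E}[\hat{f}])^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{noise}}
```

The cross terms vanish because $\epsilon$ has zero mean and is independent of $\hat{f}$, and because $\mathbb{E}\big[\hat{f} - \mathbb{E}[\hat{f}]\big] = 0$. Since the noise term is fixed by the data, reducing bias at the same total cost tends to come at the price of higher variance, and vice versa.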

📖 Bootstrapping

- Bootstrapping is any test or metric that uses random sampling with replacement.

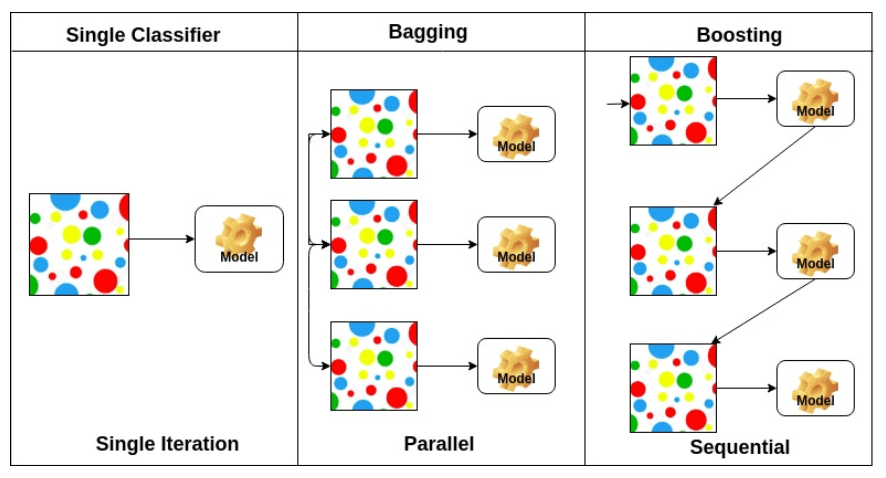
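A small sketch of the idea: resample the data with replacement many times and look at how a statistic (here, the mean) varies across resamples. The function names, the sample values, and the number of resamples are illustrative assumptions.

```python
import random


def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    return [rng.choice(data) for _ in data]


def bootstrap_means(data, n_resamples, seed=0):
    """Compute the mean of each of n_resamples bootstrap samples."""
    rng = random.Random(seed)
    means = []
    for _ in range(n_resamples):
        sample = bootstrap_sample(data, rng)
        means.append(sum(sample) / len(sample))
    return means


data = [2.0, 4.0, 4.0, 5.0, 7.0, 9.0]  # hypothetical measurements
means = bootstrap_means(data, n_resamples=1000)
center = sum(means) / len(means)
spread = (sum((m - center) ** 2 for m in means) / len(means)) ** 0.5
print("bootstrap estimate of the mean:", center)
print("bootstrap standard error:", spread)
```

Because sampling is with replacement, a single bootstrap sample typically repeats some items and omits others, which is what makes the resamples differ from one another.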

📖 Bagging vs. Boosting

- Bagging (Bootstrap aggregating)

  - Multiple models are trained on bootstrapped samples of the training data.

  - e.g., base classifiers are each fitted on a random subset, and their individual predictions are aggregated by voting or averaging.

- Boosting

  - Focuses on the specific training samples that are hard to classify.

  - A strong model is built by combining weak learners in sequence, where each learner learns from the mistakes of the previous one.
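The bagging half of this comparison can be sketched in a few lines: fit each base classifier on a bootstrap sample, then aggregate by majority vote. Everything here is an illustrative assumption rather than the lecture's method: the 1-D threshold "stump" as the base classifier, the helper names `fit_stump` and `fit_bagging`, and the toy data. Boosting is not shown; it would instead train the learners one after another and reweight the samples that earlier learners misclassified.

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Fit a 1-D threshold classifier with the fewest training errors."""
    best = None
    for threshold in sorted(set(xs)):
        for left, right in ((0, 1), (1, 0)):
            errors = sum(
                (right if x > threshold else left) != y for x, y in zip(xs, ys)
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, left, right)
    _, threshold, left, right = best
    return lambda x: right if x > threshold else left


def fit_bagging(xs, ys, n_models=25, seed=0):
    """Train n_models stumps on bootstrap samples; predict by majority vote."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # sample indices with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))

    def predict(x):
        votes = Counter(model(x) for model in models)
        return votes.most_common(1)[0][0]

    return predict


# Hypothetical toy data: label 0 for small x, label 1 for large x.
xs = [0.1, 0.4, 0.5, 0.9, 1.1, 1.5, 1.8, 2.2]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
predict = fit_bagging(xs, ys)
print(predict(0.0), predict(3.0))
```

Each stump sees a slightly different dataset, so individual thresholds vary; voting averages out that variation, which is why bagging is usually described as a variance-reduction technique.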

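<This post was written with reference to the lecture materials for Professor Sungjoon Choi's lecture "Understanding Key Terms in Optimization".>

The material covered in this post is based on the [boostcamp AI Tech 5th cohort] Pre-Course lectures.

The boostcamp AI Tech 5th cohort Pre-Course is offered only for a limited period,

so anyone who wants to study AI-related lectures can take the AI courses on boostcourse with no time limit.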

(https://www.boostcourse.org/)