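Semiconductor Thin-Film Thickness Analysis

Background

As demand for high-performance semiconductors grows, 3D processes that stack semiconductors vertically are being actively studied. In processes that stack tens to hundreds of thin-film layers, film defects degrade both thickness accuracy and uniformity. This deforms the device structure and is a major cause of performance loss. To prevent it, the film thickness must be measured both quickly and accurately.

Optical spectrum analysis is widely used to measure thin-film thickness. However, analyzing an optical spectrum requires an expert with substantial domain knowledge, and the analysis consumes a lot of computing resources. To address this through big-data analysis, a competition on thickness-analysis algorithms for semiconductor devices is being held.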

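Background material

A semiconductor thin film is a thin layer of semiconductor material; the type and thickness of the film are among the key factors that determine a device's characteristics. Reflectance measurement is widely used to measure film thickness, where reflectance is defined as the ratio of reflected light intensity to incident light intensity (reflectance = reflected light / incident light). Reflectance varies with the wavelength of light, and the distribution of reflectance over wavelength is called the reflectance spectrum.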
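As a small illustration of the definition above, the sketch below computes a reflectance spectrum from reflected and incident intensities. The intensity values and the helper name `reflectance_spectrum` are made up for illustration and do not come from the competition data.

```python
# Hypothetical intensities at three wavelengths (arbitrary units, not from the dataset).
incident = [100.0, 100.0, 80.0]
reflected = [40.0, 55.0, 20.0]


def reflectance_spectrum(reflected, incident):
    """Return reflected / incident for each wavelength sample."""
    if len(reflected) != len(incident):
        raise ValueError("both lists must cover the same wavelengths")
    return [r / i for r, i in zip(reflected, incident)]


spectrum = reflectance_spectrum(reflected, incident)
print(spectrum)
```

Each entry of `spectrum` is the reflectance at one wavelength, so the list as a whole plays the role of the reflectance spectrum.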

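Structure

The device analyzed in this competition has a five-layer structure: silicon nitride (layer_1) / silicon dioxide (layer_2) / silicon nitride (layer_3) / silicon dioxide (layer_4) / silicon (substrate). The goal is to predict the thicknesses of layer_1 through layer_4, excluding the silicon substrate. The training data file contains each layer's thickness together with the reflectance spectrum.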

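Data description

The train.csv file contains the thicknesses of the four thin-film layers and the reflectance spectrum over wavelength. Going by the header names, layer_1 through layer_4 hold the thickness of the corresponding film, and the values under columns 0 through 225 are the reflectances at the corresponding wavelengths. The header names 0 to 225 denote wavelengths, but they have been de-identified and differ from the actual values.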
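The column layout described above can be sketched with the standard `csv` module. The excerpt below is hypothetical: it assumes the header strings are exactly `layer_1` to `layer_4` followed by integer wavelength indices, and it keeps only three wavelength columns instead of the full 0 to 225.

```python
import csv
import io

# Hypothetical excerpt: four thickness columns, then only three wavelength columns.
sample = (
    "layer_1,layer_2,layer_3,layer_4,0,1,2\n"
    "70,40,150,110,0.40,0.41,0.42\n"
)

reader = csv.reader(io.StringIO(sample))
header = next(reader)

# Thickness targets are the columns named layer_*; spectrum columns are the numeric names.
target_cols = [i for i, name in enumerate(header) if name.startswith("layer_")]
spectrum_cols = [i for i, name in enumerate(header) if name.isdigit()]

first_row = next(reader)
thickness = [float(first_row[i]) for i in target_cols]
reflectance = [float(first_row[i]) for i in spectrum_cols]

print(thickness)
print(reflectance)
```

With the real file, `thickness` would hold four values per row and `reflectance` would hold 226.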

import tensorflow as tf
from tensorflow.keras import Sequential
from tensorflow.keras import layers
import numpy as np
import matplotlib.pyplot as plt
import os

use_colab = True
assert use_colab in [True, False]

from google.colab import drive
drive.mount('/content/drive')
# Mounted at /content/drive

Loading the data

- In newer versions of Colab, you have to mount Drive directly from the folder tree in the left sidebar.

train_d = np.load("/content/drive/MyDrive/dataset/semi_cond/semicon_train_data.npy")

test_d = np.load("/content/drive/MyDrive/dataset/semi_cond/semicon_test_data.npy")

print(train_d.shape, test_d.shape)
# (729000, 230) (81000, 230)
print(train_d[1].shape)
# (230,)
print(test_d[0].shape)
# (230,)

# Inspect the raw data
print(train_d[1000])
# print(test_d[0])

[7.00000000e+01 4.00000000e+01 1.50000000e+02 1.10000000e+02

4.02068880e-01 4.05173400e-01 4.22107550e-01 4.35197980e-01

4.66261860e-01 4.67785980e-01 4.76300870e-01 5.07203700e-01

5.23296600e-01 5.31541170e-01 5.23319200e-01 5.32340650e-01

5.38513840e-01 5.62478700e-01 5.74338900e-01 5.62083540e-01

5.83467800e-01 5.88462350e-01 5.89606940e-01 5.93186560e-01

5.93241930e-01 5.98241150e-01 5.87171000e-01 5.92055500e-01

6.06527860e-01 5.87087870e-01 5.94492100e-01 5.73233500e-01

5.94926300e-01 5.76777600e-01 5.70286300e-01 5.76913000e-01

5.52754400e-01 5.61735150e-01 5.52500000e-01 5.23800200e-01

5.11056840e-01 5.11732600e-01 4.92525460e-01 4.73373200e-01

4.57444160e-01 4.53428800e-01 4.19270130e-01 3.87709920e-01

3.87221520e-01 3.49848450e-01 3.16229370e-01 3.13858700e-01

2.80085700e-01 2.61263000e-01 2.30250820e-01 1.98457850e-01

1.53500440e-01 1.36026740e-01 1.08726785e-01 8.95344000e-02

6.58975300e-02 5.09539300e-02 3.69604570e-02 2.88708470e-02

1.54857090e-02 1.99594010e-02 2.33170990e-02 3.26487760e-02

5.89796340e-02 6.33707600e-02 9.20634640e-02 1.24182590e-01

1.63985540e-01 1.81501220e-01 2.16830660e-01 2.57668080e-01

2.86428720e-01 3.21613600e-01 3.65077380e-01 3.85984180e-01

4.33254400e-01 4.36655340e-01 4.60312520e-01 4.99771740e-01

5.03849100e-01 5.48682200e-01 5.58029060e-01 5.62947630e-01

5.99234340e-01 5.96399550e-01 6.04513000e-01 6.19093400e-01

6.38402700e-01 6.64816900e-01 6.56996000e-01 6.66098600e-01

6.69130500e-01 6.70661600e-01 6.80202360e-01 6.92821600e-01

6.96852400e-01 7.02015160e-01 7.00507460e-01 7.00250800e-01

7.11669300e-01 6.96838260e-01 7.08974360e-01 7.19114100e-01

7.18034700e-01 7.00589540e-01 7.05873250e-01 6.92893500e-01

7.14911160e-01 6.85250640e-01 6.82136830e-01 6.81311400e-01

6.78019200e-01 6.67259900e-01 6.78185700e-01 6.52875660e-01

6.34932940e-01 6.36031400e-01 6.16502100e-01 5.91461400e-01

5.74673350e-01 5.57246200e-01 5.42328200e-01 5.19422200e-01

5.03134850e-01 4.81939820e-01 4.45872100e-01 4.17206170e-01

4.05306970e-01 3.55970500e-01 3.27808440e-01 2.89738060e-01

2.44837760e-01 2.16494500e-01 1.69062380e-01 1.23260050e-01

1.03297760e-01 6.77379500e-02 3.89807700e-02 2.52380610e-02

2.59922780e-02 1.38827340e-02 1.90851740e-02 6.68724550e-02

7.21453900e-02 1.14929430e-01 1.77696590e-01 2.11153720e-01

2.55038900e-01 2.95599580e-01 3.21243700e-01 3.74151170e-01

3.91486200e-01 4.27665320e-01 4.61769700e-01 4.75576340e-01

4.78055980e-01 5.16503040e-01 5.30934450e-01 5.23902500e-01

5.51259300e-01 5.65235300e-01 5.67701600e-01 5.70858800e-01

5.76418300e-01 5.75021860e-01 6.01967200e-01 5.79546750e-01

5.81157900e-01 5.93576100e-01 5.84070600e-01 6.04394850e-01

5.87139800e-01 5.93731460e-01 5.91678200e-01 5.63344300e-01

5.69886800e-01 5.46404960e-01 5.43537260e-01 5.42394760e-01

5.42762940e-01 5.26218060e-01 4.89471520e-01 4.72608000e-01

4.59845570e-01 4.39476280e-01 4.24489440e-01 4.16756120e-01

4.11724180e-01 3.81418440e-01 3.60050380e-01 3.37973600e-01

3.20442830e-01 2.97488420e-01 2.85940900e-01 2.82688900e-01

2.78886770e-01 2.60735480e-01 2.64526520e-01 2.38374640e-01

2.46523140e-01 2.54422630e-01 2.63443470e-01 2.77559100e-01

3.05792480e-01 3.04755750e-01 3.47745950e-01 3.42683080e-01

3.59969050e-01 3.90264000e-01 4.21746500e-01 4.33448700e-01

4.50429620e-01 4.94950400e-01 4.90516330e-01 5.15429500e-01

5.46570300e-01 5.64438460e-01 5.68110800e-01 5.71491600e-01

5.81447660e-01 5.84012000e-01 6.05881900e-01 5.98874870e-01

5.97313170e-01 5.95610300e-01]

- Analyze the data and arrange it into a form the model can train on.

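train = train_d[:, 4:]    # (729000, 226): reflectance spectrum, columns 4 onward
label = train_d[:, :4]    # (729000, 4): thicknesses of layer_1 to layer_4
t_train = test_d[:, 4:]   # (81000, 226): reflectance spectrum, columns 4 onward
t_label = test_d[:, :4]   # (81000, 4): thicknesses of layer_1 to layer_4

print(train.shape, label.shape)
# (729000, 226) (729000, 4)

batch_size = 32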

# for train
train_dataset = tf.data.Dataset.from_tensor_slices((train, label))
train_dataset = train_dataset.shuffle(10000).repeat().batch(batch_size=batch_size)
print(train_dataset)

# for test
test_dataset = tf.data.Dataset.from_tensor_slices((t_train, t_label))
test_dataset = test_dataset.batch(batch_size=batch_size)
print(test_dataset)
# <_BatchDataset element_spec=(TensorSpec(shape=(None, 226), dtype=tf.float64, name=None), TensorSpec(shape=(None, 4), dtype=tf.float64, name=None))>
# <_BatchDataset element_spec=(TensorSpec(shape=(None, 226), dtype=tf.float64, name=None), TensorSpec(shape=(None, 4), dtype=tf.float64, name=None))>

Model architecture

- The model is currently set up as the simplest possible network.

- Implement a model that learns the dataset as well as possible, then train it.
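To get a feel for how small this baseline is, the sketch below counts the trainable parameters of a stack of fully connected layers with the unit sizes used in this section (226, 128, 64, 32, 16, 8, 4) on a 226-value input. The helper `dense_param_count` is a hypothetical name, and the count assumes every layer has a bias term, which is the Keras `Dense` default.

```python
def dense_param_count(input_dim, units):
    """Count weights and biases of consecutive fully connected layers."""
    total = 0
    fan_in = input_dim
    for n in units:
        total += fan_in * n + n  # weight matrix plus one bias per unit
        fan_in = n
    return total


layer_units = [226, 128, 64, 32, 16, 8, 4]
n_params = dense_param_count(226, layer_units)
print(n_params)  # 91394
```

Roughly 91 thousand parameters against 729,000 training rows leaves plenty of room to try wider or deeper variants.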

model = Sequential([
    tf.keras.layers.Dense(226, activation='relu'),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(64, activation='relu'),
    tf.keras.layers.Dense(32, activation='relu'),
    tf.keras.layers.Dense(16, activation='relu'),
    tf.keras.layers.Dense(8, activation='relu'),
    tf.keras.layers.Dense(4)
])

Model training

- Let's train a model that predicts the thicknesses of the four thin-film layers.
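The training code in this section builds a cosine decay schedule that starts at 1e-4 and spans 100 steps. As a rough sketch of what such a schedule computes, the pure-Python function below applies the usual cosine decay formula, assuming the rate decays all the way to zero. The name `cosine_decay_lr` is hypothetical and this is not the library's implementation.

```python
import math


def cosine_decay_lr(step, initial_lr=1e-4, decay_steps=100):
    """Cosine-decayed learning rate; assumes a final rate of zero."""
    step = min(step, decay_steps)  # hold the final value after decay_steps
    cosine = 0.5 * (1.0 + math.cos(math.pi * step / decay_steps))
    return initial_lr * cosine


for step in (0, 25, 50, 75, 100):
    print(step, cosine_decay_lr(step))
```

The rate starts at the initial value, falls to about half of it at the midpoint, and reaches zero at `decay_steps`.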

# the save point
if use_colab:
    checkpoint_dir = './drive/My Drive/train_ckpt/semiconductor/exp1'
    if not os.path.isdir(checkpoint_dir):
        os.makedirs(checkpoint_dir)
else:
    checkpoint_dir = 'semiconductor/exp1'

cp_callback = tf.keras.callbacks.ModelCheckpoint(checkpoint_dir,
                                                 save_weights_only=True,
                                                 monitor='val_loss',
                                                 mode='auto',
                                                 save_best_only=True,
                                                 verbose=1)

max_epochs = 100

cos_decay = tf.keras.experimental.CosineDecay(1e-4,
                                              max_epochs)
# Note: lr_callback is defined here but is not passed to model.fit below.
lr_callback = tf.keras.callbacks.LearningRateScheduler(cos_decay, verbose=1)

model.compile(loss='mae', optimizer=tf.keras.optimizers.Adam(1e-4), metrics=['mae'])

max_epochs = 10

history = model.fit(train_dataset, epochs=max_epochs,

steps_per_epoch=len(train) // batch_size,

validation_data=test_dataset,

validation_steps=len(t_train) // batch_size,

callbacks=[cp_callback]

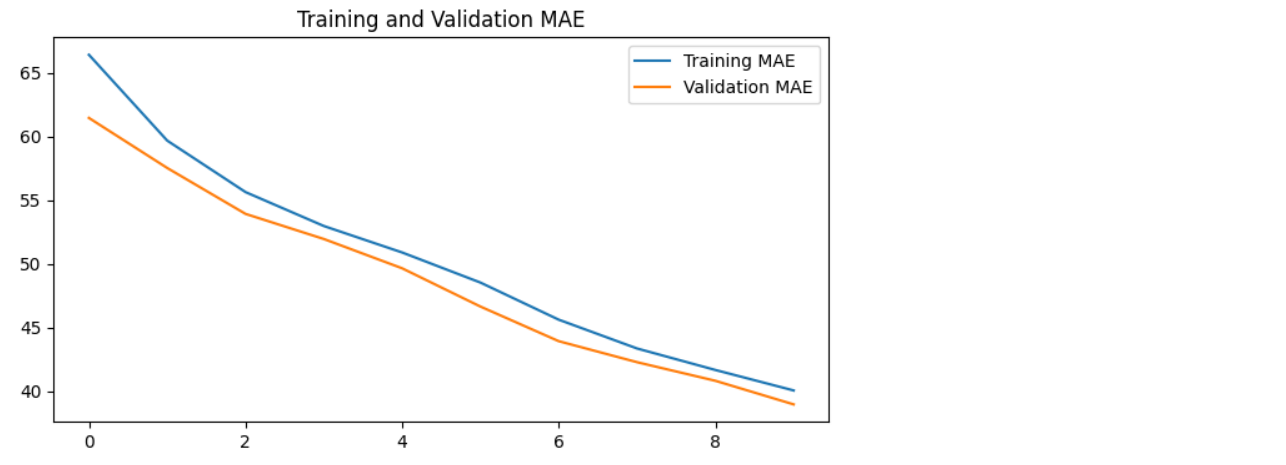

)Epoch 1/10

22775/22781 [============================>.] - ETA: 0s - loss: 66.4007 - mae: 66.4007

Epoch 1: val_loss improved from inf to 61.44498, saving model to ./drive/My Drive/train_ckpt/semiconductor/exp1

22781/22781 [==============================] - 115s 5ms/step - loss: 66.3992 - mae: 66.3992 - val_loss: 61.4450 - val_mae: 61.4450

Epoch 2/10

22773/22781 [============================>.] - ETA: 0s - loss: 59.6668 - mae: 59.6668

Epoch 2: val_loss improved from 61.44498 to 57.51788, saving model to ./drive/My Drive/train_ckpt/semiconductor/exp1

22781/22781 [==============================] - 109s 5ms/step - loss: 59.6652 - mae: 59.6652 - val_loss: 57.5179 - val_mae: 57.5179

Epoch 3/10

22773/22781 [============================>.] - ETA: 0s - loss: 55.6284 - mae: 55.6284

Epoch 3: val_loss improved from 57.51788 to 53.91689, saving model to ./drive/My Drive/train_ckpt/semiconductor/exp1

22781/22781 [==============================] - 105s 5ms/step - loss: 55.6273 - mae: 55.6273 - val_loss: 53.9169 - val_mae: 53.9169

Epoch 4/10

22771/22781 [============================>.] - ETA: 0s - loss: 52.9664 - mae: 52.9664

Epoch 4: val_loss improved from 53.91689 to 51.94687, saving model to ./drive/My Drive/train_ckpt/semiconductor/exp1

22781/22781 [==============================] - 103s 5ms/step - loss: 52.9657 - mae: 52.9657 - val_loss: 51.9469 - val_mae: 51.9469

Epoch 5/10

22775/22781 [============================>.] - ETA: 0s - loss: 50.8904 - mae: 50.8904

Epoch 5: val_loss improved from 51.94687 to 49.64795, saving model to ./drive/My Drive/train_ckpt/semiconductor/exp1

22781/22781 [==============================] - 102s 4ms/step - loss: 50.8902 - mae: 50.8902 - val_loss: 49.6480 - val_mae: 49.6480

Epoch 6/10

22773/22781 [============================>.] - ETA: 0s - loss: 48.5289 - mae: 48.5289

Epoch 6: val_loss improved from 49.64795 to 46.64631, saving model to ./drive/My Drive/train_ckpt/semiconductor/exp1

22781/22781 [==============================] - 104s 5ms/step - loss: 48.5281 - mae: 48.5281 - val_loss: 46.6463 - val_mae: 46.6463

Epoch 7/10

22772/22781 [============================>.] - ETA: 0s - loss: 45.6172 - mae: 45.6172

Epoch 7: val_loss improved from 46.64631 to 43.93079, saving model to ./drive/My Drive/train_ckpt/semiconductor/exp1

22781/22781 [==============================] - 105s 5ms/step - loss: 45.6164 - mae: 45.6164 - val_loss: 43.9308 - val_mae: 43.9308

Epoch 8/10

22777/22781 [============================>.] - ETA: 0s - loss: 43.3583 - mae: 43.3583

Epoch 8: val_loss improved from 43.93079 to 42.28270, saving model to ./drive/My Drive/train_ckpt/semiconductor/exp1

22781/22781 [==============================] - 104s 5ms/step - loss: 43.3579 - mae: 43.3579 - val_loss: 42.2827 - val_mae: 42.2827

Epoch 9/10

22777/22781 [============================>.] - ETA: 0s - loss: 41.6714 - mae: 41.6714

Epoch 9: val_loss improved from 42.28270 to 40.82317, saving model to ./drive/My Drive/train_ckpt/semiconductor/exp1

22781/22781 [==============================] - 103s 5ms/step - loss: 41.6712 - mae: 41.6712 - val_loss: 40.8232 - val_mae: 40.8232

Epoch 10/10

22773/22781 [============================>.] - ETA: 0s - loss: 40.0643 - mae: 40.0643

Epoch 10: val_loss improved from 40.82317 to 38.97026, saving model to ./drive/My Drive/train_ckpt/semiconductor/exp1

22781/22781 [==============================] - 107s 5ms/step - loss: 40.0640 - mae: 40.0640 - val_loss: 38.9703 - val_mae: 38.9703

loss = history.history['mae']
val_loss = history.history['val_mae']

epochs_range = range(len(loss))

plt.figure(figsize=(8, 4))
plt.plot(epochs_range, loss, label='Training MAE')
plt.plot(epochs_range, val_loss, label='Validation MAE')
plt.legend(loc='upper right')
plt.title('Training and Validation MAE')
plt.show()
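The step counts that appear in the logs of this notebook follow from integer arithmetic on the dataset sizes and the batch size. The sketch below reproduces them, using the row counts printed earlier (729000 and 81000) and a batch size of 32.

```python
import math

train_rows, test_rows, batch_size = 729000, 81000, 32

# Floor division drops the final partial batch.
steps_per_epoch = train_rows // batch_size
validation_steps = test_rows // batch_size

# A full pass over the test set also includes the last partial batch.
full_pass_batches = math.ceil(test_rows / batch_size)

print(steps_per_epoch)    # 22781
print(validation_steps)   # 2531
print(full_pass_batches)  # 2532
```

So each epoch runs 22781 training steps, validation during `fit` uses 2531 batches and skips the last 8 rows, and a full pass over the test dataset takes 2532 batches.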

Model evaluation

- Use the mean absolute error to check how accurately the model predicts.
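For reference, mean absolute error averages the absolute difference between the true and predicted thickness over every sample and every layer. The pure-Python sketch below shows the calculation on two made-up rows. The predictions are hypothetical values chosen for easy arithmetic, not model output.

```python
def mean_absolute_error(y_true, y_pred):
    """Average of |true - predicted| over all samples and all layers."""
    total = 0.0
    count = 0
    for true_row, pred_row in zip(y_true, y_pred):
        for t, p in zip(true_row, pred_row):
            total += abs(t - p)
            count += 1
    return total / count


# Two samples with four layer thicknesses each (hypothetical predictions).
y_true = [[70.0, 40.0, 150.0, 110.0], [10.0, 20.0, 30.0, 40.0]]
y_pred = [[60.0, 50.0, 150.0, 100.0], [10.0, 25.0, 30.0, 35.0]]

mae = mean_absolute_error(y_true, y_pred)
print(mae)  # 5.0
```

An MAE of 5.0 here means the predictions are off by 5 thickness units per layer on average, in the same units as the labels.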

model.load_weights(checkpoint_dir)
results = model.evaluate(test_dataset)
print("MAE :", results[0])  # results[0] is the loss, which is MAE here
# 2532/2532 [==============================] - 6s 2ms/step - loss: 38.9691 - mae: 38.9691
# MAE : 38.969112396240234