[📖Paper Review] Chain-of-Thought Prompting Elicits Reasoning in Large Language Models (2022)

PaperReview

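I am currently working on a paper that uses prompting and chain-of-thought for few-shot reasoning. As part of that work I reread this paper carefully, and these are my notes.

The paper was published in the NeurIPS 2022 Main Conference Track, and most people will know it simply as the CoT paper.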

✒️Chain-of-Thought Prompting Elicits Reasoning in Large Language Models(⭐NeurIPS-2022-Main)

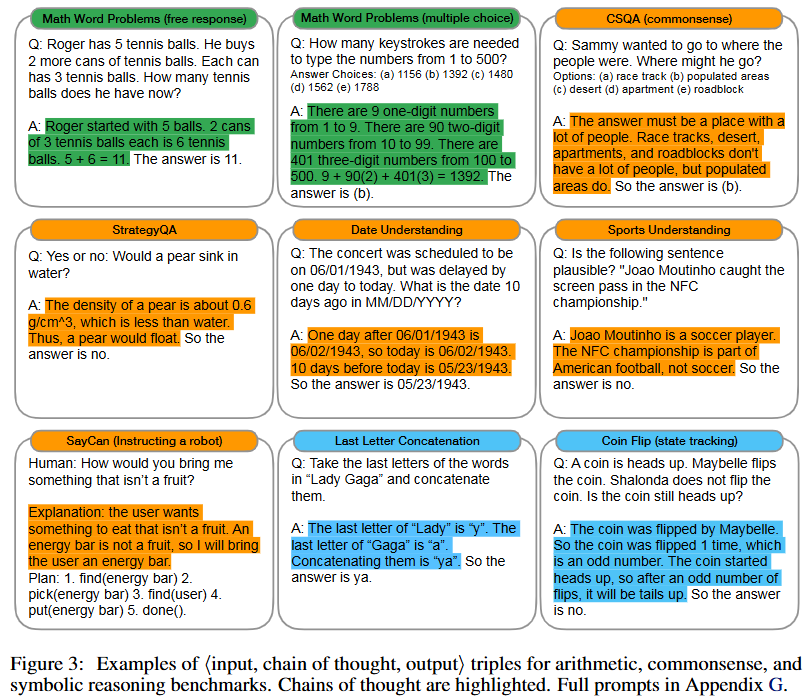

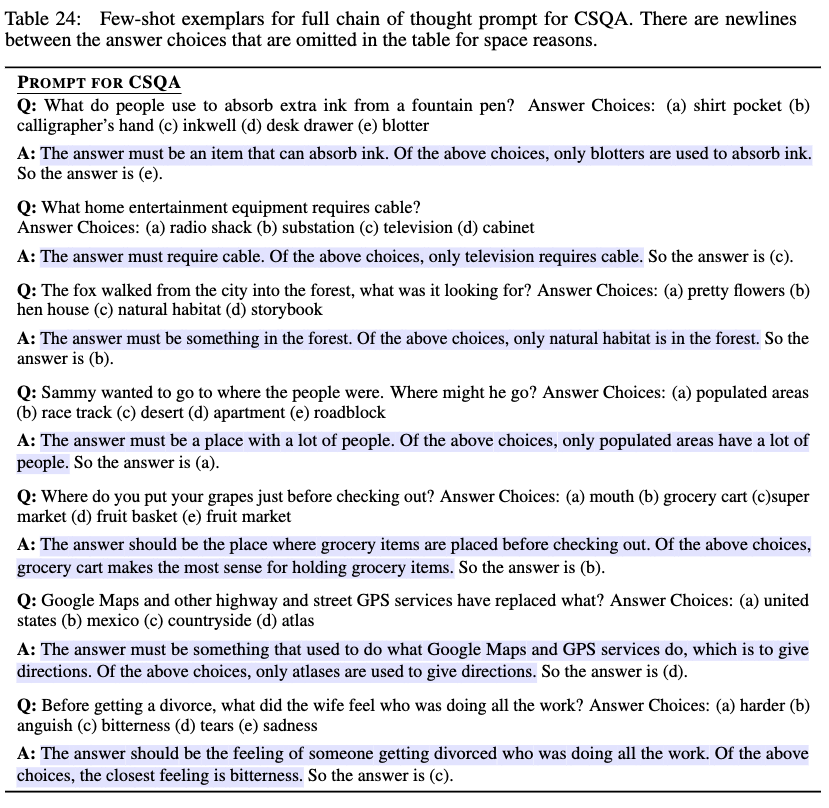

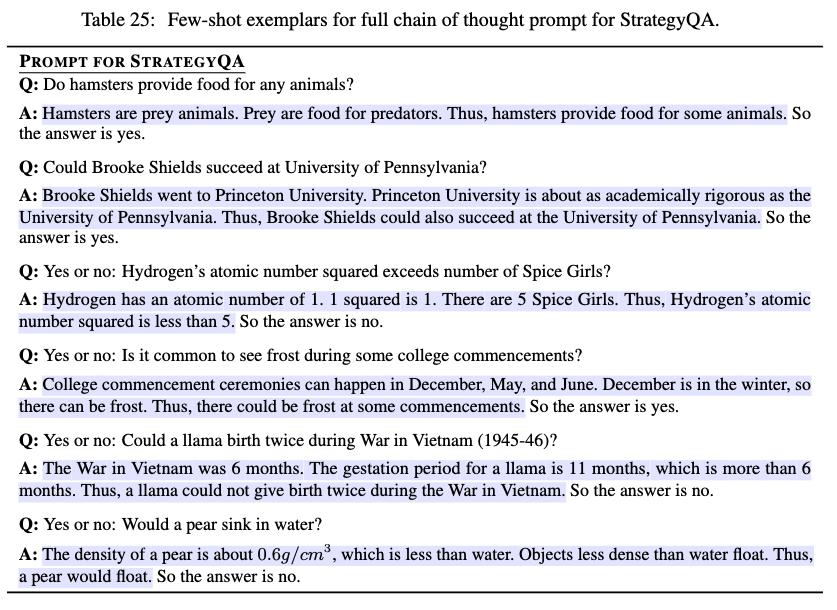

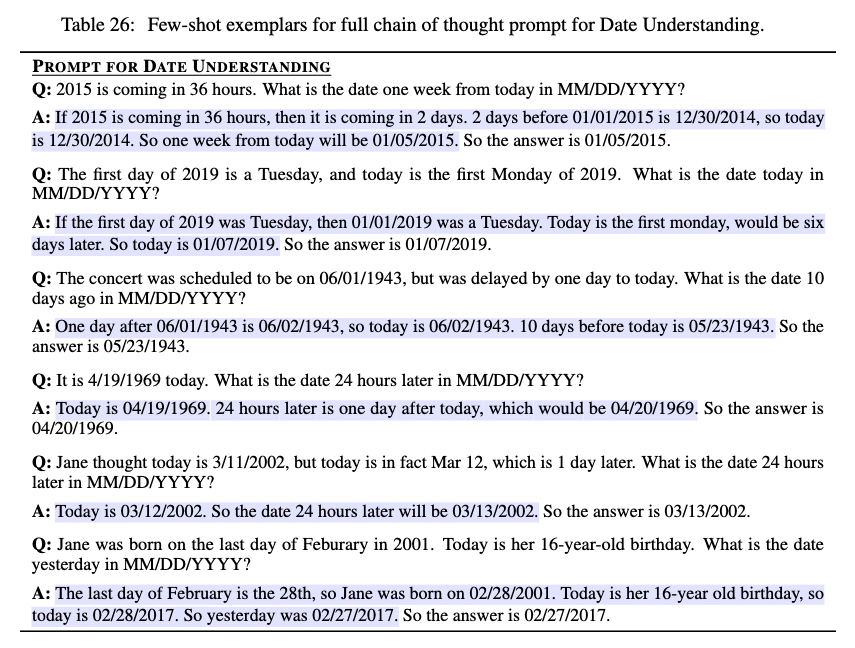

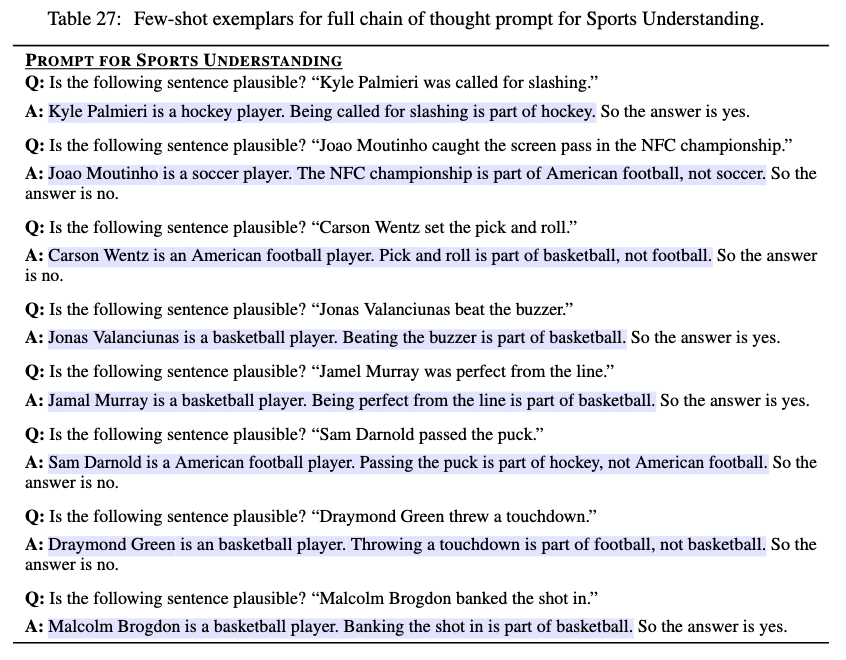

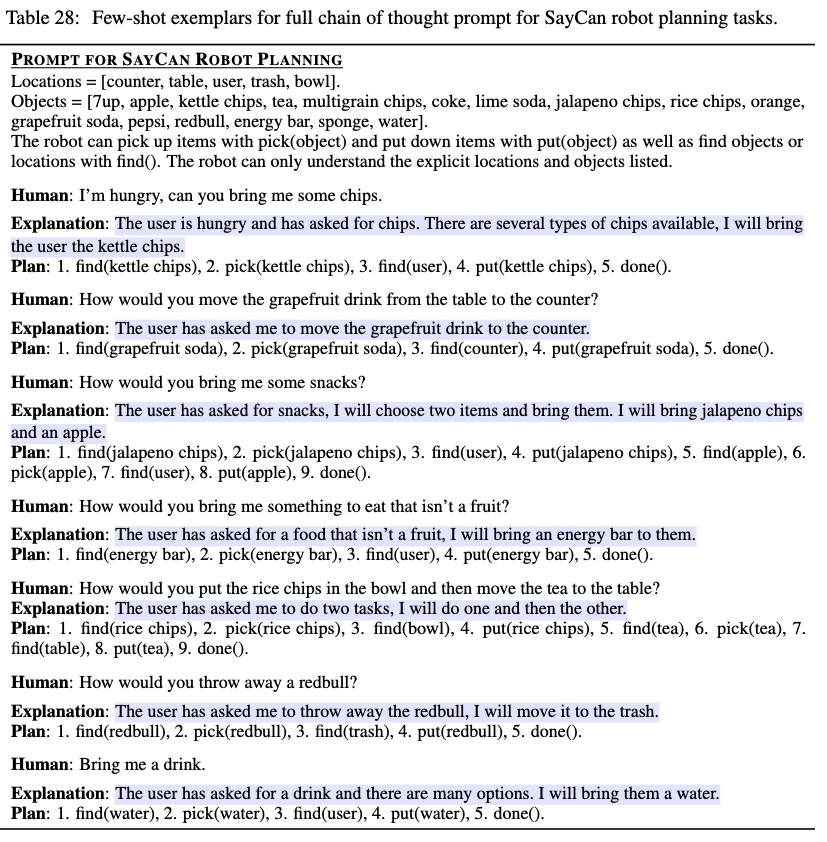

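The paper runs to 43 pages including a very detailed Appendix, so if you are studying this topic I recommend reading it through to the end, line by line. I especially liked that the Appendix discloses exactly how the few-shot exemplar text (the blue part in the figure above) was constructed.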

Abstract

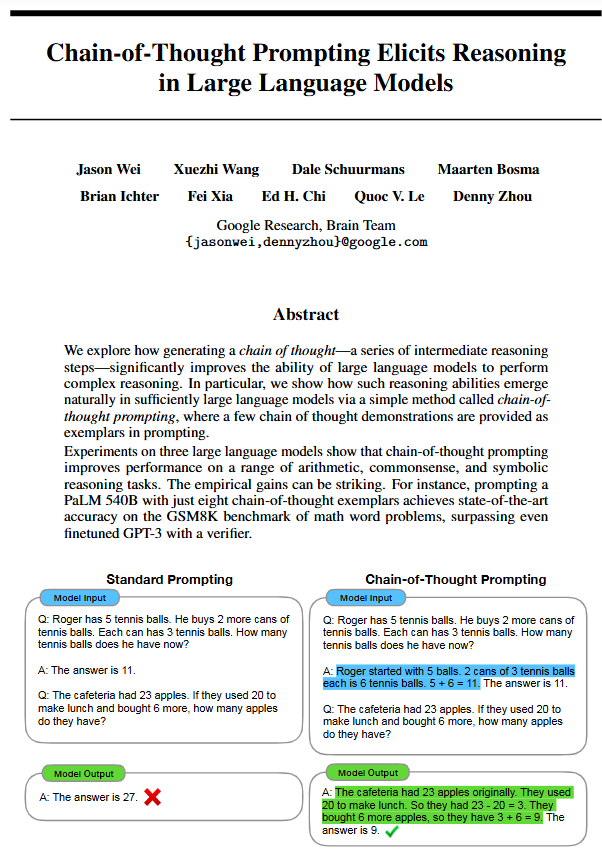

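[Main method] Chain of Thought (CoT)

- Supplies intermediate reasoning steps at few-shot inference time, which indirectly shows the model the direction its reasoning should take and greatly improves the complex reasoning ability of large language models.

- A few chain-of-thought example texts, called "exemplars", are included in the prompt to strengthen the model's reasoning

[Experimental results]

- Experiments run on three large language models, including GPT-3 (InstructGPT) at [350M, 1.3B, 6.7B, 175B]

- Chain-of-thought prompting improves performance on arithmetic reasoning, commonsense reasoning, and symbolic reasoning tasks

- Outperforms standard prompting on several benchmarks

- Prompting the PaLM 540B model with 8 chain-of-thought exemplars

- achieves state-of-the-art (SOTA) performance on the GSM8K benchmark of math word problems

- and surpasses the accuracy of fine-tuned GPT-3 with a verifier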

Chain-of-Thought Prompting : 〈input, chain of thought, output〉

The highlighted part above is the prompt text that corresponds to the chain of thought. Text like this steers the model to work through a reasoning process while it generates its answer.

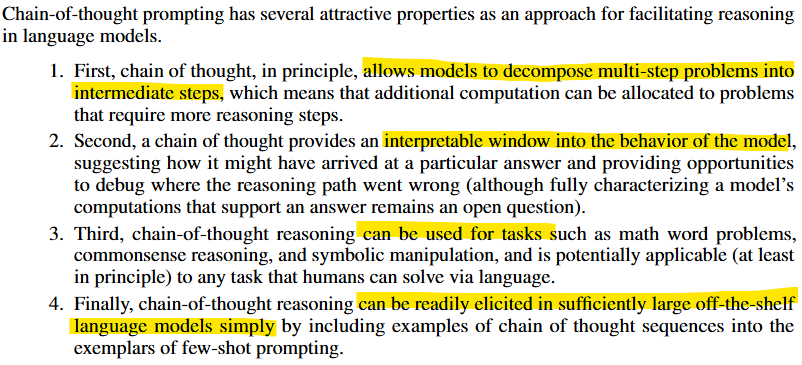

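Key features and advantages of chain-of-thought prompting

1. Complex problems can be decomposed into intermediate steps

Chain of thought leads the model to break a multi-step problem into intermediate steps. This lets additional computation be allocated to problems that require more reasoning steps.

2. Provides a window into the model's behavior

Chain of thought makes it possible to interpret the path by which the model arrived at a particular answer, which gives an opportunity to debug where the reasoning went wrong. (That said, fully characterizing the computation behind a model's answer remains an open problem.)

3. Applicable to a wide range of tasks

Chain-of-thought reasoning can be used for tasks such as math word problems, commonsense reasoning, and symbolic manipulation, and in principle it applies to any task that humans can solve through language.

4. Easy to elicit in large language models

Simply including chain-of-thought examples in the few-shot prompt is enough to elicit this reasoning ability from a sufficiently large off-the-shelf language model.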

Experiment

✅ Model + Setting

- Pre-trained LLMs used (at various sizes)

- InstructGPT (GPT-3) [350M, 1.3B, 6.7B, 175B]

- LaMDA [422M, 2B, 8B, 68B, 137B]

- PaLM [8B, 62B, 540B]

- UL2 [20B]

- Codex

- Greedy decoding was used in every case, i.e. at each step the token with the highest next-token probability is selected
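Greedy decoding is easy to picture with a toy example. The sketch below is not tied to any of the models listed above: `next_token_logits` is a hypothetical callable standing in for a real language model, and `toy_logits` is a made-up 4-token "model" used only so the loop can run.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_decode(next_token_logits, max_new_tokens, eos_id):
    """Pick the most probable next token at every step.

    next_token_logits is a hypothetical callable: it takes the tokens
    generated so far and returns one logit per vocabulary entry.
    """
    tokens = []
    for _ in range(max_new_tokens):
        probs = softmax(next_token_logits(tokens))
        next_id = max(range(len(probs)), key=probs.__getitem__)  # argmax
        if next_id == eos_id:
            break
        tokens.append(next_id)
    return tokens

def toy_logits(tokens):
    """Made-up model: prefers tokens 1, 2, 3 in order, then end-of-sequence (0)."""
    logits = [0.0, 0.0, 0.0, 0.0]
    target = len(tokens) + 1 if len(tokens) < 3 else 0
    logits[target] = 5.0
    return logits

decoded = greedy_decode(toy_logits, max_new_tokens=10, eos_id=0)
print(decoded)
```

Because there is no sampling, the same prompt always gives the same output, which makes comparisons between prompting methods easier to reproduce.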

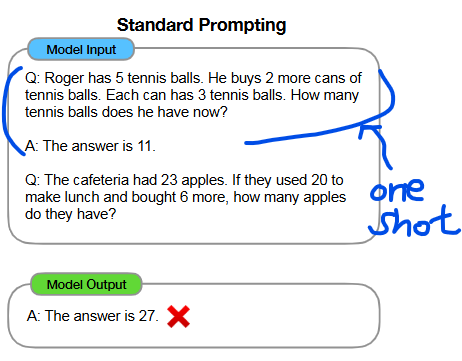

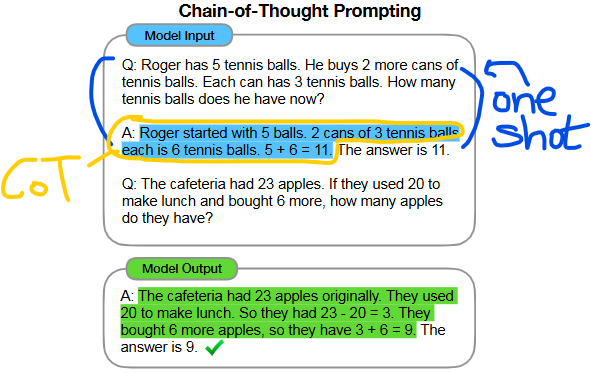

Standard prompting & Chain-of-thought prompting

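CoT was applied to arithmetic reasoning tasks and reached SoTA. The paper calls ordinary few-shot prompting "standard prompting" and uses it as the baseline prompt. The variant that adds chain-of-thought text to each exemplar is called "chain-of-thought prompting".

The case above is a 1-shot example, i.e. a prompt that contains a single input-output example. A prompt built this way is the most basic baseline prompt.

The case above is also 1-shot, but the exemplar includes chain-of-thought text, which makes it chain-of-thought prompting.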
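The difference between the two prompt types can be sketched in a few lines. This is only an illustration: the `Exemplar` class and the `format_exemplar` / `build_prompt` helpers are hypothetical names of my own, and the exemplar text is modeled on the paper's tennis-ball example rather than copied from the Appendix.

```python
from dataclasses import dataclass

@dataclass
class Exemplar:
    question: str
    chain_of_thought: str  # hand-written reasoning text
    answer: str

def format_exemplar(ex: Exemplar, use_cot: bool) -> str:
    """Standard prompting shows only the answer; CoT prompting shows reasoning first."""
    if use_cot:
        return f"Q: {ex.question}\nA: {ex.chain_of_thought} The answer is {ex.answer}."
    return f"Q: {ex.question}\nA: The answer is {ex.answer}."

def build_prompt(exemplars, test_question: str, use_cot: bool) -> str:
    """Concatenate the few-shot exemplars, then leave the final answer open."""
    blocks = [format_exemplar(ex, use_cot) for ex in exemplars]
    blocks.append(f"Q: {test_question}\nA:")
    return "\n\n".join(blocks)

roger = Exemplar(
    question=("Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
              "Each can has 3 tennis balls. How many tennis balls does he have now?"),
    chain_of_thought=("Roger started with 5 balls. 2 cans of 3 tennis balls each "
                      "is 6 tennis balls. 5 + 6 = 11."),
    answer="11",
)
test_question = ("The cafeteria had 23 apples. If they used 20 to make lunch "
                 "and bought 6 more, how many apples do they have?")

standard_prompt = build_prompt([roger], test_question, use_cot=False)
cot_prompt = build_prompt([roger], test_question, use_cot=True)
print(cot_prompt)
```

The only thing that changes between the two prompts is the text between the question and "The answer is ...", which is exactly the part the paper varies.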

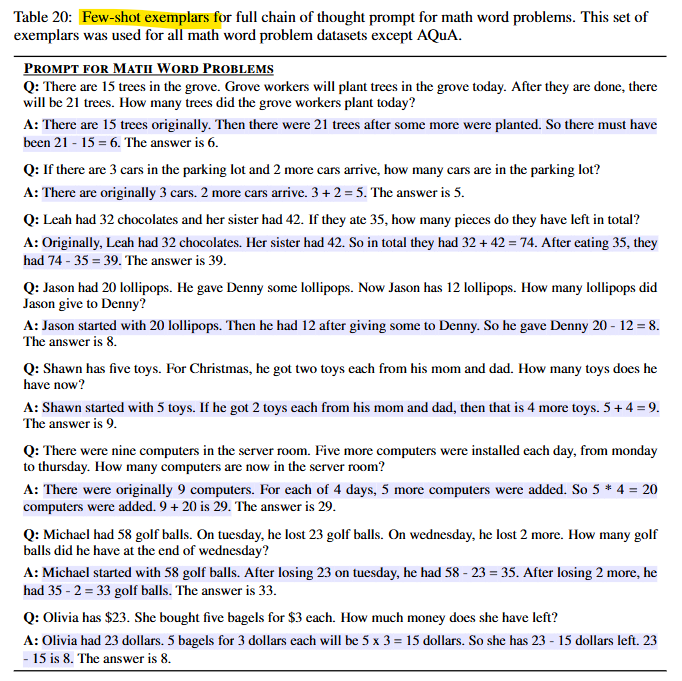

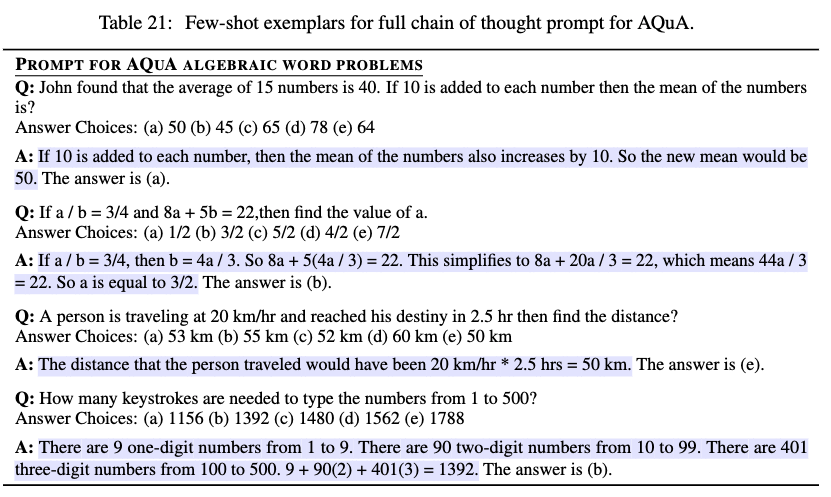

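🤔 How was the example text that contains the reasoning process for the chain-of-thought prompt made? Where did it come from?

It is clear that chain-of-thought text is inserted to elicit reasoning, but the question is: "Where did that CoT text come from?" Anyone who wants to use CoT in practice runs into the same problem of obtaining that text.

The answer is in the paper: the authors simply wrote it by hand ("manually composed"). They wrote CoT text for 8 examples, which are listed in the Appendix as follows.

CoT text was written for the 8 examples above and placed in the prompt as 8 shots. Every arithmetic reasoning benchmark except AQuA uses this same set of 8 few-shot exemplars.

AQuA has its own set of 4 additional exemplars.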

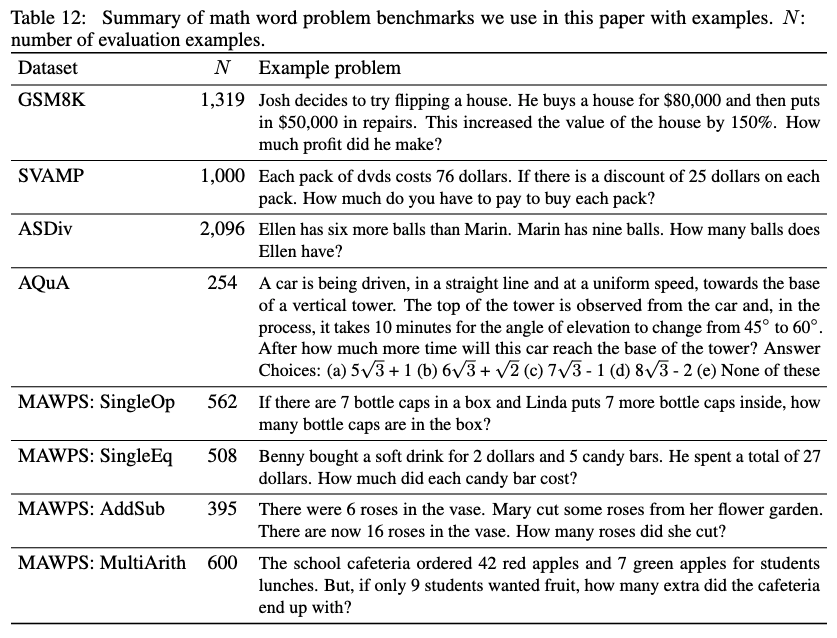

(1) Arithmetic Reasoning

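These are the arithmetic problems we are all familiar with, but rather than bare equations, the experiments mainly use QA-style datasets in which a situation is described in text and the model has to give the computed answer.

Benchmark

The following benchmark datasets were used: GSM8K, SVAMP, ASDiv, AQuA, and MAWPS.

Results

Unsurprisingly, the prompts with CoT give better results overall. But there are a few points in the arithmetic reasoning results that really deserve attention.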

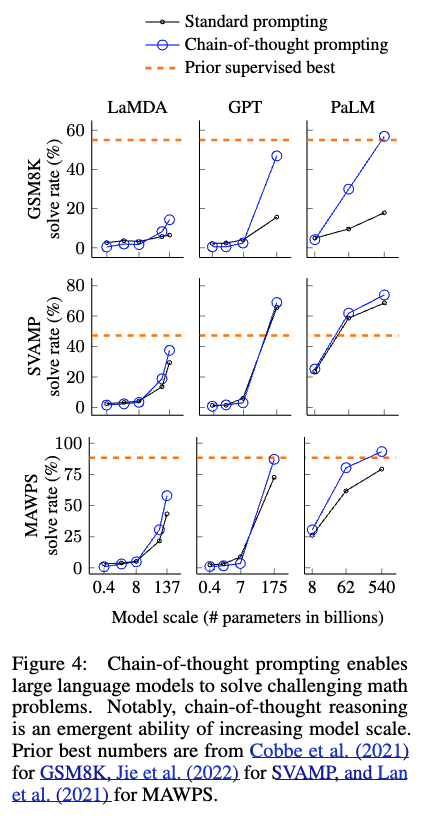

"First, Figure 4 shows that chain-of-thought prompting is an emergent ability of model scale (Wei et al., 2022b). That is, chain-of-thought prompting does not positively impact performance for small models, and only yields performance gains when used with models of ∼100B parameters. We qualitatively found that models of smaller scale produced fluent but illogical chains of thought, leading to lower performance than standard prompting."

➜ CoT prompting beats standard prompting only for models with roughly 100B parameters or more

➜ CoT prompting gives good arithmetic reasoning performance when applied to large LLMs

"Second, chain-of-thought prompting has larger performance gains for more-complicated problems. For instance, for GSM8K (the dataset with the lowest baseline performance), performance more than doubled for the largest GPT and PaLM models. On the other hand, for SingleOp, the easiest subset of MAWPS which only requires a single step to solve, performance improvements were either negative or very small (see Appendix Table 3)."

➜ CoT prompting is strongest on more complex, harder problems

"Third, chain-of-thought prompting via GPT-3 175B and PaLM 540B compares favorably to prior state of the art, which typically finetunes a task-specific model on a labeled training dataset. Figure 4 shows how PaLM 540B uses chain-of-thought prompting to achieve new state of the art on GSM8K, SVAMP, and MAWPS (though note that standard prompting already passed the prior best for SVAMP). On the other two datasets, AQuA and ASDiv, PaLM with chain-of-thought prompting reaches within 2% of the state of the art (Appendix Table 2)."

➜ With CoT prompting, GPT-3 (175B) and PaLM (540B) compare favorably with fine-tuned task-specific models, and PaLM 540B sets a new SoTA on GSM8K, SVAMP, and MAWPS

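To check in which cases the model gives wrong answers, results on the GSM8K dataset were also sampled and inspected:

- Correct-answer tracking: of 50 problems with a correct final answer, the chain of thought was sound in all but 2, which reached the right answer by coincidence

- Wrong-answer tracking: of 50 problems with a wrong final answer, 46% were calculation errors, symbol mapping errors, or a missing reasoning step, and 54% were errors in semantic understanding or coherence, which can be read as cases where the reasoning did not make logical sense.

As the paper also explains, problems that PaLM 62B got wrong were often answered correctly after scaling up to PaLM 540B. In particular, the larger model is reported to fix one-step-missing and semantic understanding errors.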

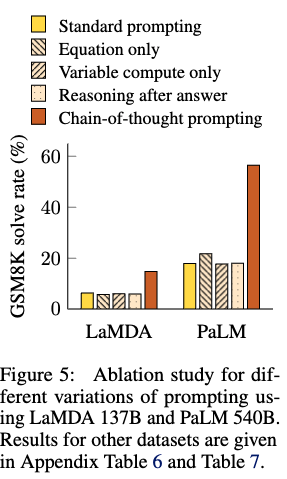

Ablation Study

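The paper also experiments with other kinds of prompting.

1) Equation only

- Only the equation, without natural-language reasoning, is given in the prompt

- Performance is poor, which shows that supplying the reasoning steps in natural language is the key

2) Variable compute only

- Aims to separate whether CoT succeeds simply because it "uses more computation" or because it "expresses the intermediate computation in natural language"

- Instead of a chain of thought, the model is made to output a number of dots (.) equal to the number of characters in the equation needed to solve the problem. For example, if the equation takes 10 characters, the model outputs .......... (10 dots)

- The variant that outputs only dots (.) performs about the same as the baseline, which shows that spending more computation (dots output = more tokens) does not by itself improve performance

3) Chain of thought after answer

- Checks whether the benefit of CoT comes not from the "step-by-step thinking process" but from better activating knowledge the model already acquired during pre-training

- In this experiment the order is swapped, so the model outputs the answer first and writes the chain of thought afterwards

- Original: question → CoT (intermediate computation) → final answer

- Variant: question → final answer → CoT (intermediate computation)

- With the answer first and the CoT afterwards, performance is about the same as the baseline, so CoT is not merely a device for activating pre-trained knowledge; the answer benefits from the reasoning being generated before it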
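The three ablations differ only in what the exemplar's answer field looks like, which a small sketch makes concrete. The function names and the example strings below are my own illustrative choices, not text taken from the paper's prompts.

```python
def standard_cot(chain: str, answer: str) -> str:
    """Regular CoT: natural-language reasoning, then the answer."""
    return f"{chain} The answer is {answer}."

def equation_only(equation: str, answer: str) -> str:
    """Ablation 1: only the equation, with no natural-language steps."""
    return f"{equation} The answer is {answer}."

def variable_compute_only(equation: str, answer: str) -> str:
    """Ablation 2: as many dots as the equation has characters, then the answer."""
    dots = "." * len(equation)
    return f"{dots} The answer is {answer}."

def cot_after_answer(chain: str, answer: str) -> str:
    """Ablation 3: answer first, reasoning afterwards."""
    return f"The answer is {answer}. {chain}"

# Illustrative strings (assumed for this sketch)
chain = "Roger started with 5 balls. 2 cans of 3 balls each is 6 balls. 5 + 6 = 11."
equation = "5 + 2 * 3 = 11"
answer = "11"

variants = {
    "chain of thought": standard_cot(chain, answer),
    "equation only": equation_only(equation, answer),
    "variable compute only": variable_compute_only(equation, answer),
    "reasoning after answer": cot_after_answer(chain, answer),
}
for name, text in variants.items():
    print(f"{name:>24}: {text}")
```

Laid out side by side, it is easier to see what each ablation removes: the natural language, the content of the intermediate tokens, or the ordering of reasoning before answer.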

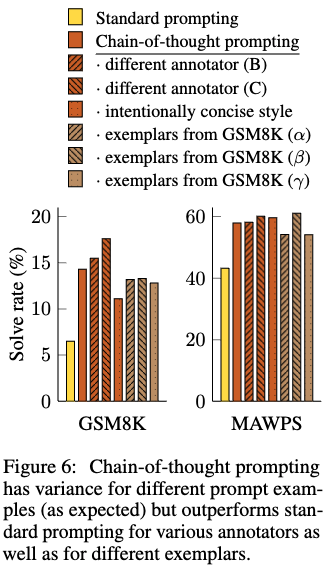

Robustness of Chain of Thought

- Even when the annotator who writes the 8 CoT exemplars is changed, the results are better than standard prompting in every case, which confirms that CoT does not depend heavily on a particular linguistic style

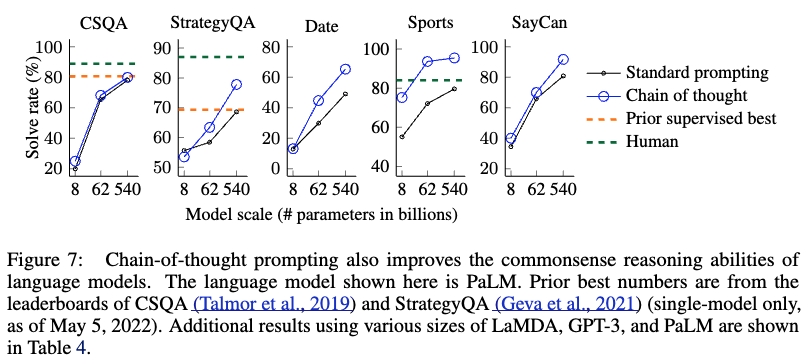

(2) Commonsense Reasoning

This looks at how well the model can answer simple questions about everyday life, based on the knowledge in the pre-trained model.

Benchmark

The following benchmark datasets were used.

- CSQA

- StrategyQA

- Date understanding and sports understanding from BIG-Bench

- SayCan

For math, 8 + 4 exemplars were written and used as few-shot examples; here, 6 to 10 were written per benchmark, as shown below.

Results

In short, CoT prompting is also effective for commonsense reasoning. As before, the gains appear when the model scale is large.

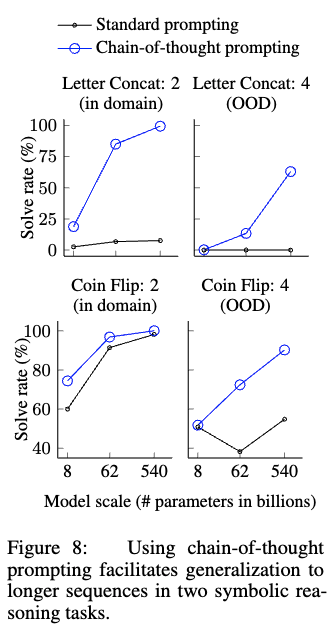

(3) Symbolic Reasoning

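Symbolic reasoning refers to tasks that are relatively easy for humans (e.g. evaluating expressions, logical operations) but can be tricky for language models. With standard prompting, language models often hit their limits on these tasks, because many of them require step-by-step thinking rather than simply producing an answer. CoT prompting addresses this for sufficiently large models.

Tasks

Symbolic reasoning experiments were run on the following two tasks.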

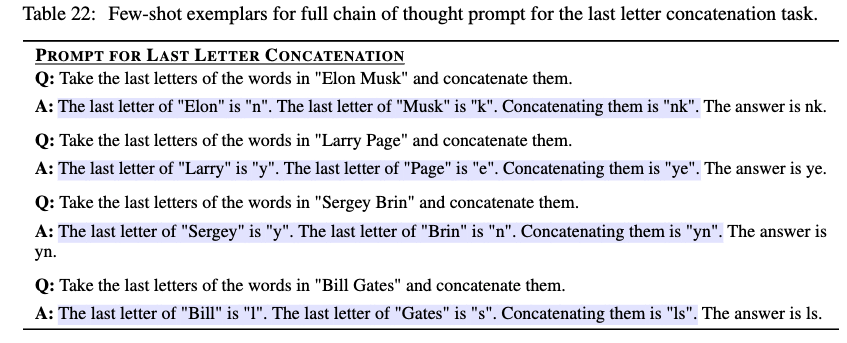

(1) Last Letter Concatenation

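- Task definition: extract the last letter of each word in a given name and concatenate them (e.g. "Amy Brown" → "yn")

- Harder than the "first letter concatenation" task (e.g. "Amy Brown" → "AB", which language models can already do easily without chain of thought)

- Extracting the last letters is considered a harder problem because it takes more reasoning steps and working memory

- Data generation: full names (first name plus last name) are built by randomly combining entries from the top 1,000 names in US name census data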
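The task itself is trivial to write as code, which is part of what makes it a nice probe. In the sketch below the name lists are small hypothetical stand-ins for the census data, and `sample_name` is my own helper, not the authors' generation script.

```python
import random

def last_letter_concat(name: str) -> str:
    """Concatenate the last letter of each word: 'Amy Brown' -> 'yn'."""
    return "".join(word[-1] for word in name.split())

def first_letter_concat(name: str) -> str:
    """The easier variant: 'Amy Brown' -> 'AB'."""
    return "".join(word[0] for word in name.split())

# Hypothetical stand-ins for the top-1,000 first and last names
FIRST_NAMES = ["Amy", "Phoebe", "Osvaldo", "Roger"]
LAST_NAMES = ["Brown", "Smith", "Garcia", "Lee"]

def sample_name(rng: random.Random) -> str:
    """Randomly pair a first name with a last name."""
    return f"{rng.choice(FIRST_NAMES)} {rng.choice(LAST_NAMES)}"

rng = random.Random(0)  # fixed seed so the sample is repeatable
name = sample_name(rng)
print(name, "->", last_letter_concat(name))
print("Amy Brown ->", last_letter_concat("Amy Brown"))
```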

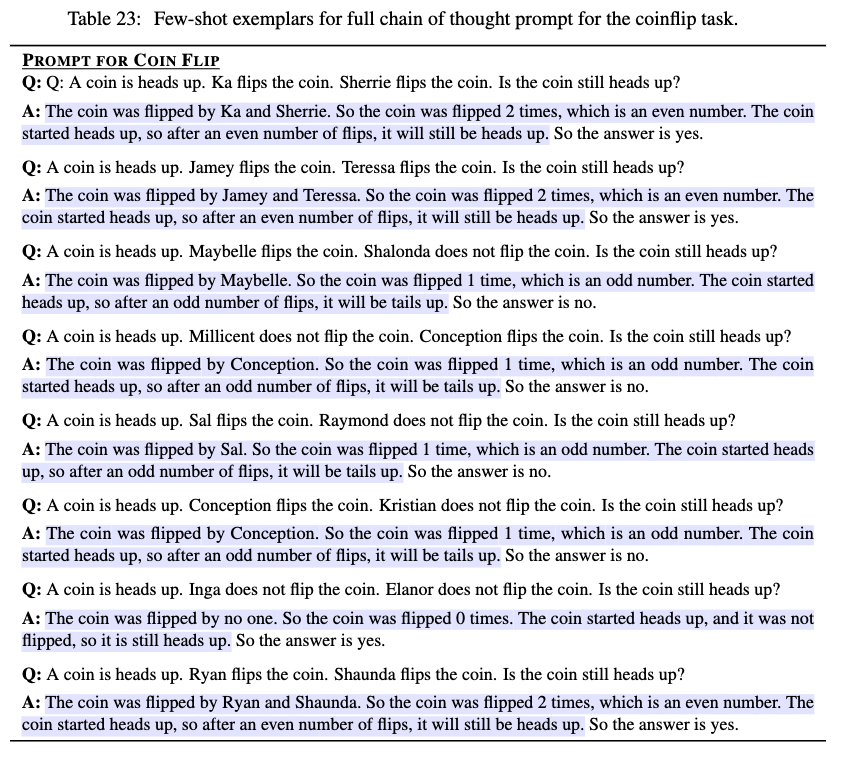

(2) Coin Flip

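- Task definition: after a sequence of people either flip or do not flip a coin, infer the coin's final state (e.g. input: "A coin is heads up. Phoebe flips the coin. Osvaldo does not flip the coin. Is the coin still heads up?" → output: "no")

- Each person's action (flip / no flip) has to be tracked, so a logical reasoning process is required

- It looks like a simple logic puzzle, but the model has to remember and process several steps of conditions, which makes it a tricky task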
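The ground truth for a coin flip question is just a parity check over the actions. Here is a minimal sketch, with `render_question` and `coin_still_heads_up` as hypothetical helper names; the question template follows the example quoted above.

```python
def render_question(actions) -> str:
    """Build the question text from (name, flipped) pairs."""
    parts = ["A coin is heads up."]
    for name, flipped in actions:
        verb = "flips" if flipped else "does not flip"
        parts.append(f"{name} {verb} the coin.")
    parts.append("Is the coin still heads up?")
    return " ".join(parts)

def coin_still_heads_up(actions) -> str:
    """Track the coin state one action at a time and answer yes/no."""
    heads_up = True
    for _name, flipped in actions:
        if flipped:
            heads_up = not heads_up  # each flip toggles the state
    return "yes" if heads_up else "no"

actions = [("Phoebe", True), ("Osvaldo", False)]
question = render_question(actions)
answer = coin_still_heads_up(actions)
print(question)
print(answer)
```

The loop in `coin_still_heads_up` is the same step-by-step state tracking that a chain of thought has to spell out in words.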

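The experiments are split into in-domain and out-of-domain settings.

- In-Domain Test: a test set whose examples require the same number of steps as the few-shot exemplars

- Out-of-Domain (OOD) Test: a test set whose examples require more steps (more complex problems) than the few-shot exemplars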
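One way to picture the split is to generate last-letter problems with a controlled number of words. In this sketch the step counts (2 for the exemplars, 3 and 4 for OOD), the name pool, and the helper names are all assumptions made for illustration.

```python
import random

# Hypothetical name pool; any list of words would do
NAMES = ["Amy", "Brown", "Phoebe", "Osvaldo", "Roger", "Smith"]

def make_example(num_words: int, rng: random.Random):
    """Return (question_words, answer) for a last-letter problem with num_words steps."""
    words = [rng.choice(NAMES) for _ in range(num_words)]
    return " ".join(words), "".join(w[-1] for w in words)

def build_split(step_counts, per_count: int, seed: int = 0):
    """Build (question, answer, steps) triples for the given step counts."""
    rng = random.Random(seed)
    return [make_example(k, rng) + (k,)
            for k in step_counts
            for _ in range(per_count)]

EXEMPLAR_STEPS = 2  # assumed number of steps in the few-shot exemplars

in_domain = build_split([EXEMPLAR_STEPS], per_count=3)
ood = build_split([3, 4], per_count=3, seed=1)

for question, answer, steps in in_domain + ood:
    tag = "in-domain" if steps == EXEMPLAR_STEPS else "OOD"
    print(f"[{tag}, {steps} steps] {question} -> {answer}")
```

The point of the OOD split is that the prompt never changes: only the test inputs get longer than anything the exemplars demonstrate.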

The CoT prompts actually written for each task are as follows.

Results

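In short, CoT prompting is also effective for symbolic reasoning.

- In-domain evaluation:

  - PaLM 540B:

    - Reaches close to 100% accuracy with chain-of-thought (CoT) prompting.

    - On coin flip, PaLM 540B already succeeds without CoT, whereas LaMDA 137B needs CoT.

  - Limits of small models:

    - Small models fail at the symbolic tasks even when given CoT.

    - The ability to do symbolic manipulation appears only in large models of around 100B parameters or more.

- OOD evaluation:

  - Standard prompting: fails on both tasks.

  - CoT prompting:

    - Large models achieve length generalization.

    - Performance is lower than in-domain, but CoT remains effective on longer inputs.

- Conclusion:

  - CoT greatly improves reasoning in large models and lets them solve problems whose length or complexity goes beyond what the few-shot exemplars show.

  - However, this ability only emerges at a sufficiently large model scale (around 100B or more).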

Discussion

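🦄 Contributions

- Performance gains from CoT:

  - CoT prompting gives large improvements over standard prompting in arithmetic reasoning.

  - It is consistently effective across different annotators, exemplars, and language models.

- Applicability:

  - CoT is generally applicable to tasks that require multi-step reasoning.

  - In symbolic reasoning, it enables out-of-domain (OOD) generalization to input sequences longer than those in the few-shot exemplars.

- Simple to apply:

  - CoT needs no separate fine-tuning and can be applied directly, through the prompt, to an existing pre-trained language model.

- Importance of model scale:

  - CoT reasoning tends to emerge as model size increases.

  - It goes beyond the performance limits of standard prompting and expands the range of tasks a model can solve.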

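😅 Limitations

- Whether this is genuine "reasoning":

  - CoT imitates the human thought process, but whether the model is actually "reasoning" remains an open question.

- Annotation cost:

  - In the few-shot setting the cost of hand-writing CoT is small, but it could grow substantially when applying CoT to large datasets or to fine-tuning.

  - Synthetic data generation or zero-shot generalization could be alternatives.

- Incorrect reasoning paths:

  - There is no guarantee that a generated chain of thought is correct, so it can lead to wrong answers as well as right ones.

  - Improving the factual generation ability of models is left as future work.

- Dependence on model size:

  - CoT yields meaningful gains only in large models, which makes it costly to use in real applications.

  - Further research is needed on how to elicit reasoning in smaller models.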

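🔥 Future research directions

- Model scale and reasoning ability:

  - Explore how much further reasoning ability improves as model size keeps growing.

- Other prompting methods:

  - Explore new prompting methods beyond CoT that can expand the range of tasks language models can solve.

- Ensuring sound reasoning:

  - Study ways to improve the accuracy of the reasoning paths that CoT generates.

- Applicability to small models:

  - Develop efficient ways to elicit CoT in small models.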