SR-IOV Test on an Intel 10 Gbps NIC

0. Setting:

Host Setting:

NIC: Intel 10G

VM: VFs from SR-IOV

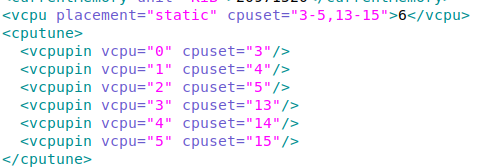

VM Sender side VM 1:

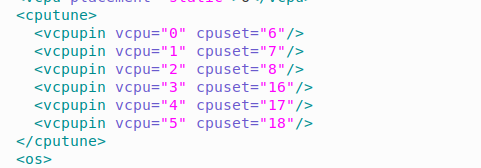

6 cores (3 physical cores, 2 threads each, P#3-5,13-15) were assigned as shown below:

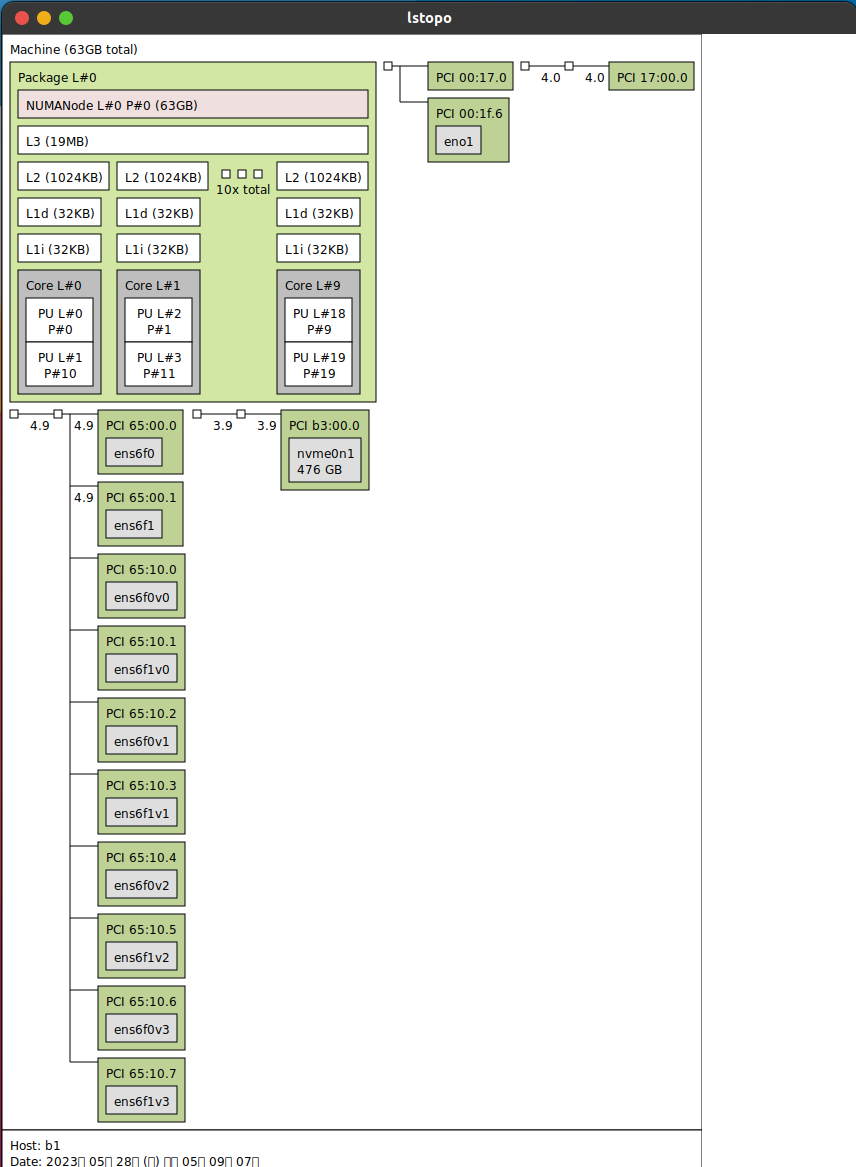

These were chosen based on sibling threads (hardware threads that share the same physical core), as reported by lstopo:

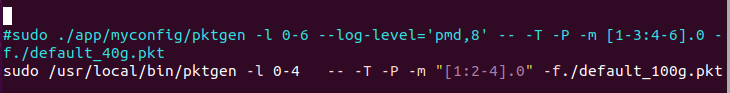

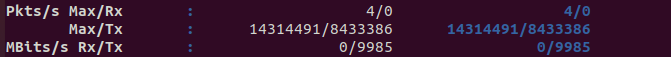
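The sibling relationship that lstopo draws can also be read from sysfs. The following is a minimal sketch, assuming a Linux host that exposes `/sys/devices/system/cpu/cpu*/topology/thread_siblings_list`; the function name `list_sibling_sets` and its optional root-directory argument are hypothetical helpers introduced here for illustration.

```shell
#!/usr/bin/env bash
# Print one line per physical core, listing the logical CPUs (hardware threads) on it.
# The optional first argument overrides the sysfs root (hypothetical, useful for testing).
list_sibling_sets() {
    local root="${1:-/sys/devices/system/cpu}"
    local f
    for f in "$root"/cpu[0-9]*/topology/thread_siblings_list; do
        # Skip the unexpanded glob pattern or unreadable entries.
        [ -r "$f" ] || continue
        cat "$f"
    done | LC_ALL=C sort -t, -k1,1n -u   # every sibling reports the same list, so de-duplicate
}

list_sibling_sets
```

On a host laid out like this one, a line such as `3,13` would mean that P#3 and P#13 share a core. That pairing is inferred from the core list given above, not confirmed by the original output.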

DPDK-Pktgen Baseline Test:

Assigned 1 RX core and 1 TX core:

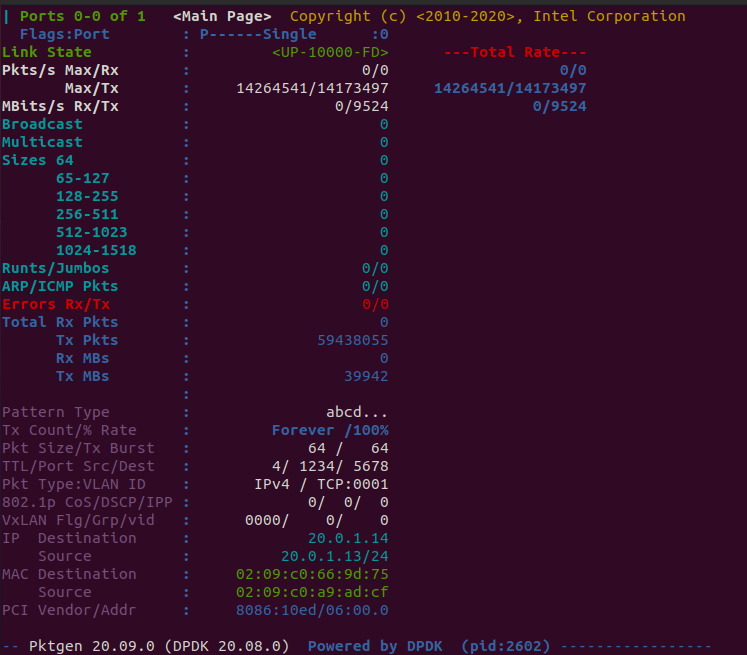

64 byte Packet:

Approximately 9.5 Gbps or higher (allocating more CPU cores did not improve performance)

128 byte Packet:

Approximately 10 Gbps

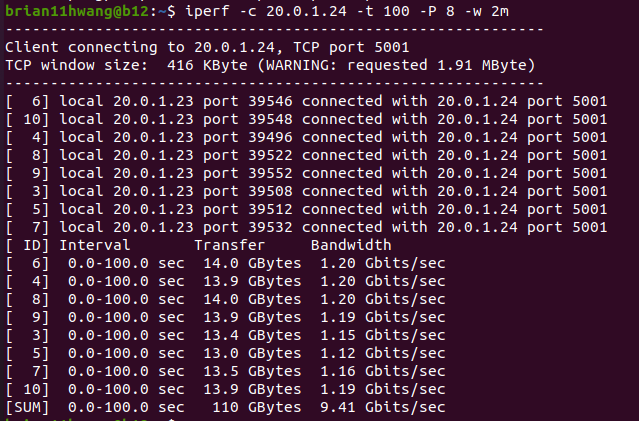
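For context on the two packet sizes, the packet-rate ceiling of a 10 Gbps link can be worked out by hand. The sketch below assumes the stated sizes are full Ethernet frames including the 4-byte FCS, and that each frame carries 20 extra bytes on the wire (7-byte preamble, 1-byte start-of-frame delimiter, 12-byte inter-frame gap). The helper name `line_rate_pps` is hypothetical.

```shell
#!/usr/bin/env bash
# Theoretical maximum packets per second for a given frame size.
# $1 = frame size in bytes (assumed to include the FCS)
# $2 = link speed in bits per second (defaults to 10 Gbps)
line_rate_pps() {
    local frame_bytes="$1"
    local link_bps="${2:-10000000000}"
    # 20 bytes of preamble, SFD and inter-frame gap accompany every frame on the wire.
    echo $(( link_bps / ((frame_bytes + 20) * 8) ))
}

for size in 64 128; do
    printf '%d-byte frames: %d pps\n' "$size" "$(line_rate_pps "$size")"
done
```

Under these assumptions the ceiling is roughly 14.88 Mpps for 64-byte frames and 8.45 Mpps for 128-byte frames, so the smaller size is the harder one to sustain at line rate.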

Iperf Baseline Test:

Server side: iperf -s -w 2m

Client side: iperf -c <recv_ip> -t 100 -w 2m -P 8

Approximately 9.4 Gbps or higher
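A figure of about 9.4 Gbps is close to what TCP can deliver on a 10 Gbps Ethernet link once per-packet overhead is subtracted. The sketch below is a rough estimate under assumptions the test setup does not state: MTU 1500, IPv4, TCP with the 12-byte timestamp option, and 38 bytes of Ethernet framing per packet (14-byte header, 4-byte FCS, 8-byte preamble and SFD, 12-byte inter-frame gap). The function name `tcp_goodput_gbps` is hypothetical.

```shell
#!/usr/bin/env bash
# Estimate the upper bound of TCP goodput on an Ethernet link.
# $1 = link speed in Gbps (default 10), $2 = MTU in bytes (default 1500, an assumption)
tcp_goodput_gbps() {
    local link_gbps="${1:-10}"
    local mtu="${2:-1500}"
    awk -v link="$link_gbps" -v mtu="$mtu" 'BEGIN {
        payload = mtu - 20 - 20 - 12   # minus IPv4 header, TCP header, TCP timestamp option
        wire    = mtu + 38             # plus Ethernet header, FCS, preamble/SFD, inter-frame gap
        printf "%.2f\n", link * payload / wire
    }'
}

tcp_goodput_gbps 10 1500
```

With these inputs the estimate comes to 9.41 Gbps, so the iperf baseline is most likely limited by the link itself and not by the VM.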

VM Sender side VM 2:

6 cores (3 physical cores, 2 threads each, P#6-8,16-18) were assigned as shown below:

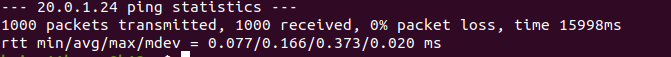

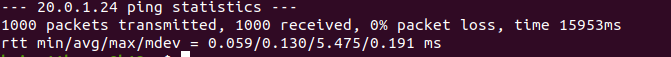

Ping Baseline Test:

Command: sudo ping 20.0.1.24 -c 1000 -i 0.01

Result showed:

1. Test Result

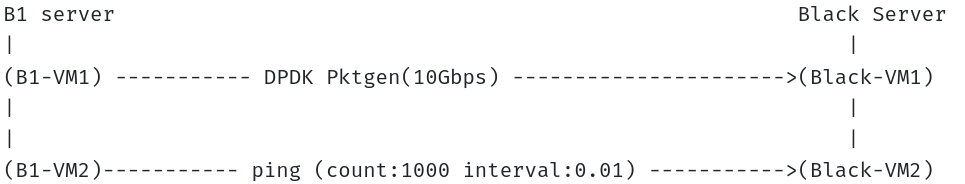

1.1 Message Rate Sensitive (Pktgen) vs Latency Sensitive (Ping)

The test was run with Pktgen active on both DPDK sides.

64 byte Packet:

128 byte Packet:

The results showed little difference in latency and no packet loss.

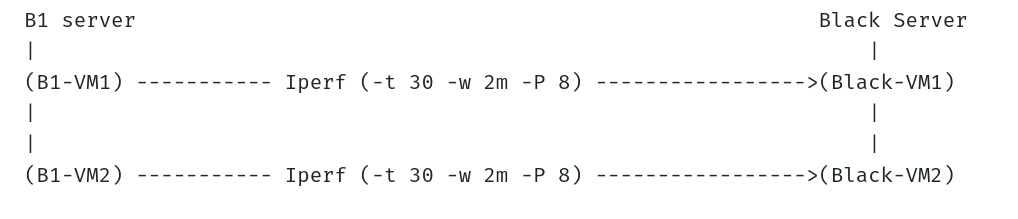

1.2.1. Bandwidth Sensitive (iperf) vs Bandwidth Sensitive (iperf)

Server side: iperf -s -w 2m

Client side: iperf -c <recv_ip> -t 30 -w 2m -P 8

Surprisingly, each VM reached 4.73 Gbps (4.73 * 2 = 9.46 Gbps in total), which is almost exactly half of the maximum bandwidth measured in the baseline.

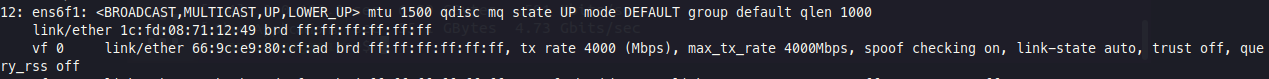

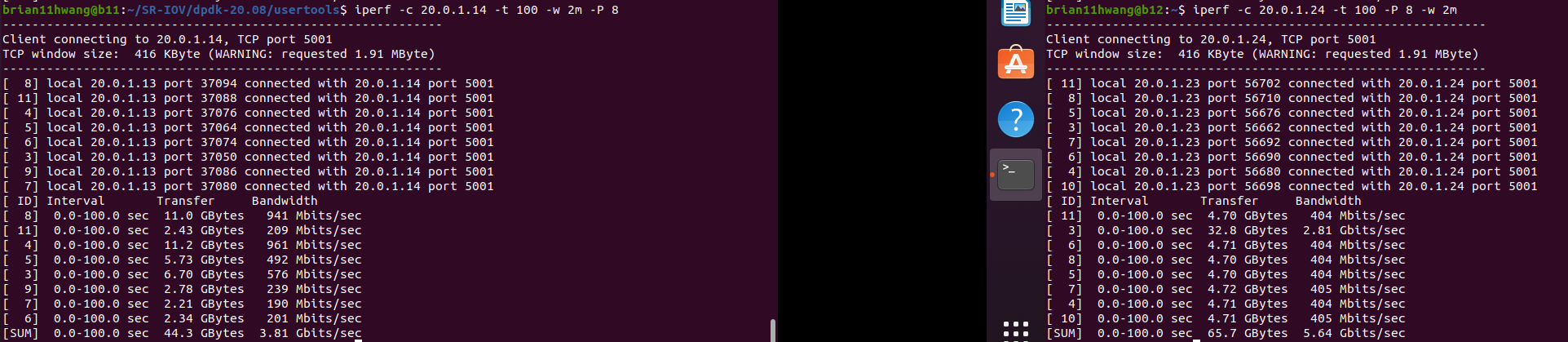

1.2.2. Bandwidth Sensitive (iperf) vs Bandwidth Sensitive (iperf) w/ Rate limit

Because each iperf instance reached 4.73 Gbps, I tried setting a rate limit on VM1 (max_tx_rate is given in Mbps):

sudo ip link set ens6f1 vf 0 max_tx_rate <rate limit>

The results were:

Rate Limit - 3000:

4.71 Gbps (VM1) + 4.73 Gbps (VM2) = 9.44 Gbps

Rate Limit - 2000:

3.81 Gbps (VM1) + 5.64 Gbps (VM2) = 9.45 Gbps

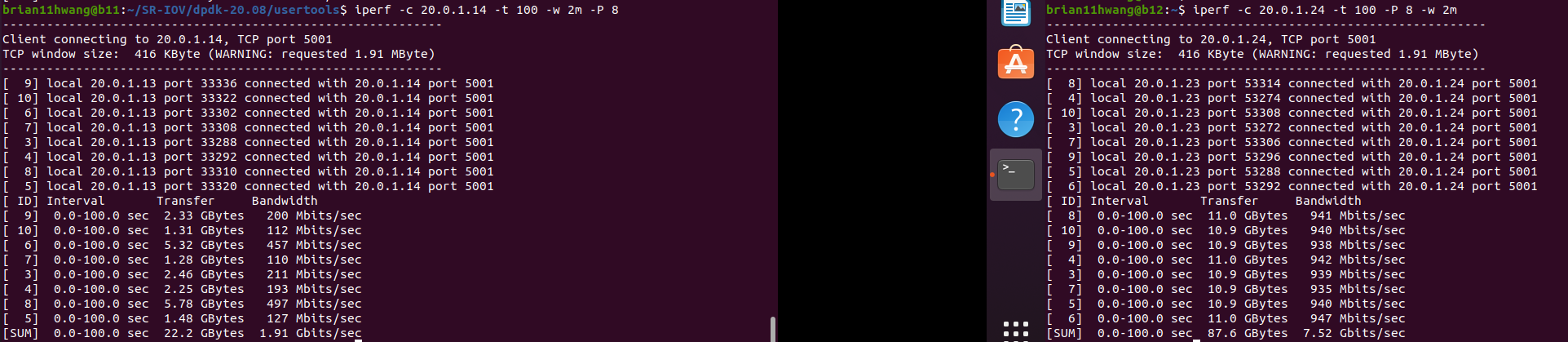

Rate Limit - 1000:

1.91 Gbps (VM1) + 7.52 Gbps (VM2) = 9.43 Gbps

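Although VM1's measured bandwidth was higher than the configured max_tx_rate (about 1.9 times the limit at 1000 and 2000), the rate limit clearly took effect, and VM2 picked up the bandwidth that VM1 gave up, so the total stayed near the overall maximum.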

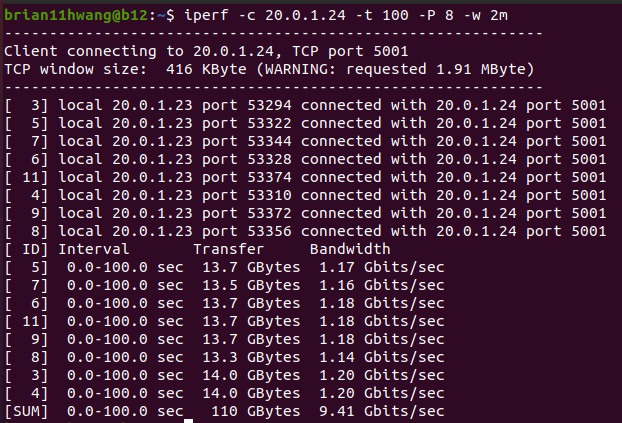
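The three rate-limit runs can be tabulated to check the totals and to compare VM1's measured throughput with the configured limit. This is a small sketch that uses only the numbers reported above. The function name `summarize_rate_limit` and the three-column input layout (limit in Mbps, VM1 Gbps, VM2 Gbps) are choices made here for illustration.

```shell
#!/usr/bin/env bash
# Columns: max_tx_rate (Mbps), VM1 throughput (Gbps), VM2 throughput (Gbps),
# copied from the measurements reported in this section.
results='3000 4.71 4.73
2000 3.81 5.64
1000 1.91 7.52'

# Print the aggregate bandwidth and the ratio of VM1 throughput to its configured limit.
summarize_rate_limit() {
    awk '{
        printf "limit=%d Mbps vm1=%.2f vm2=%.2f total=%.2f Gbps vm1/limit=%.1f\n",
               $1, $2, $3, $2 + $3, ($2 * 1000) / $1
    }'
}

echo "$results" | summarize_rate_limit
```

The ratio column makes the pattern easier to see. At limits of 1000 and 2000, VM1 runs at about 1.9 times the configured value. At 3000 it stays at roughly the same half-share it had without any limit, which suggests the limit is no longer the bottleneck there.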

1.2.3. Bandwidth Sensitive (iperf) vs Bandwidth Sensitive (iperf) w/ CPU difference

VM1: 6 cores (3 physical cores, 2 threads each, P#3-5,13-15)

VM2: 2 cores (1 physical core, 2 threads, P#6,16)

iperf performance of VM2 alone:

VM2 reached 9.41 Gbps.

iperf with VM1 and VM2 running together:

4.72 Gbps (VM1) + 4.71 Gbps (VM2) = 9.43 Gbps