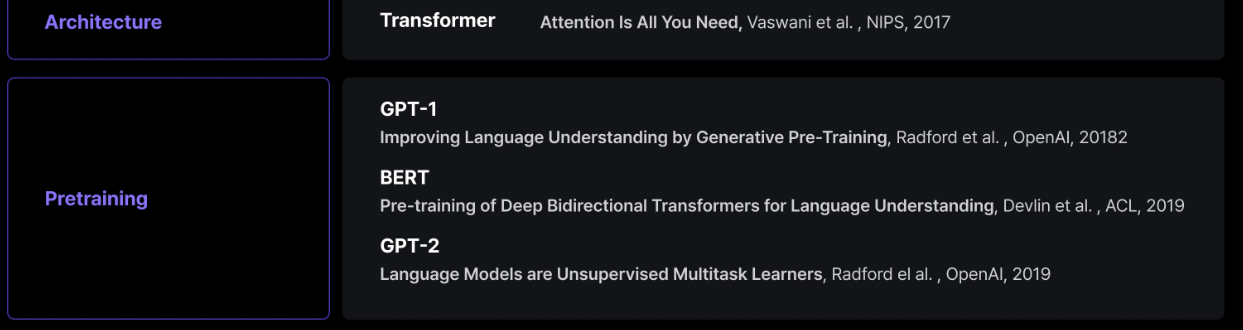

Architectures

- Attention Is All You Need, Vaswani et al., NIPS, 2017

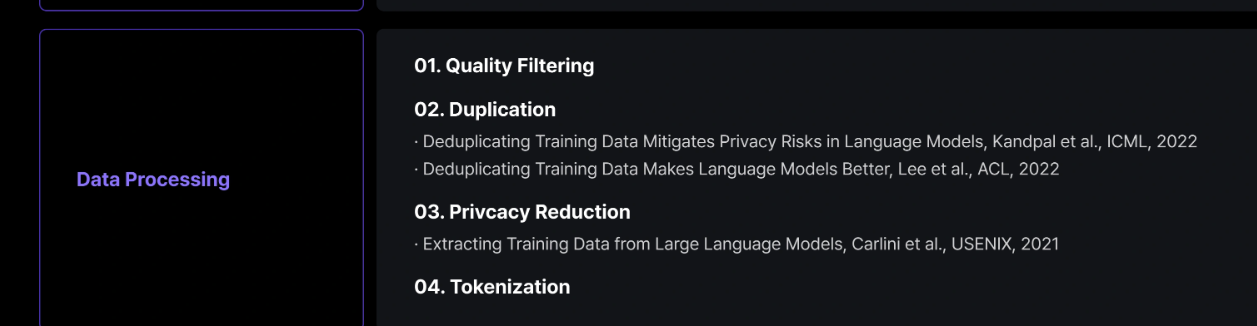

Data Processing

02. Deduplication

- Deduplicating Training Data Mitigates Privacy Risks in Language Models, Kandpal et al., ICML, 2022

- Deduplicating Training Data Makes Language Models Better, Lee et al., ACL, 2022

03. Privacy Reduction

- Extracting Training Data from Large Language Models, Carlini et al., USENIX, 2021

04. Tokenization

Pretraining

- Improving Language Understanding by Generative Pre-Training, Radford et al., OpenAI, 2018

- BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding, Devlin et al., NAACL, 2019

- Language Models are Unsupervised Multitask Learners, Radford et al., OpenAI, 2019

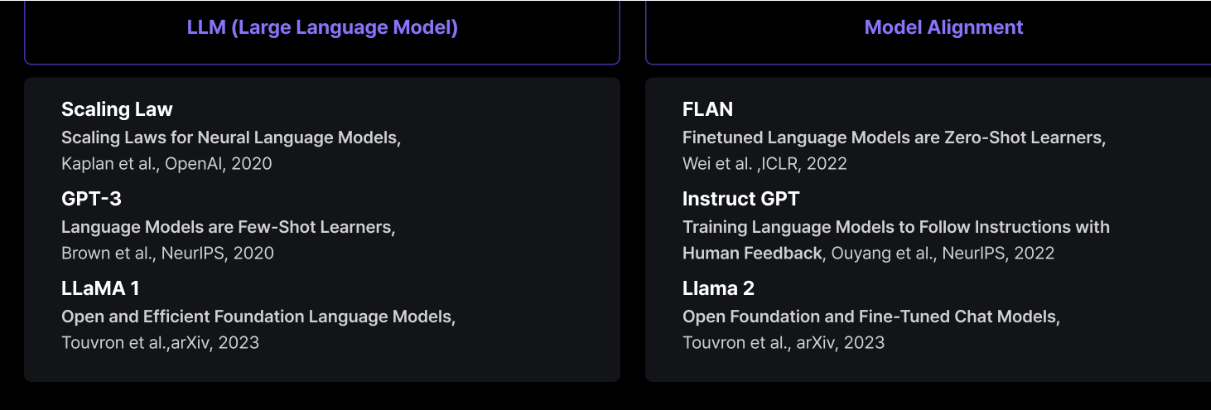

LLM (Large Language Model)

- Scaling Laws for Neural Language Models, Kaplan et al., OpenAI, 2020

- Language Models are Few-Shot Learners, Brown et al., NeurIPS, 2020

- LLaMA: Open and Efficient Foundation Language Models, Touvron et al., arXiv, 2023

Model Alignment

- Finetuned Language Models are Zero-Shot Learners, Wei et al., ICLR, 2022

- Training Language Models to Follow Instructions with Human Feedback, Ouyang et al., NeurIPS, 2022

- Llama 2: Open Foundation and Fine-Tuned Chat Models, Touvron et al., arXiv, 2023

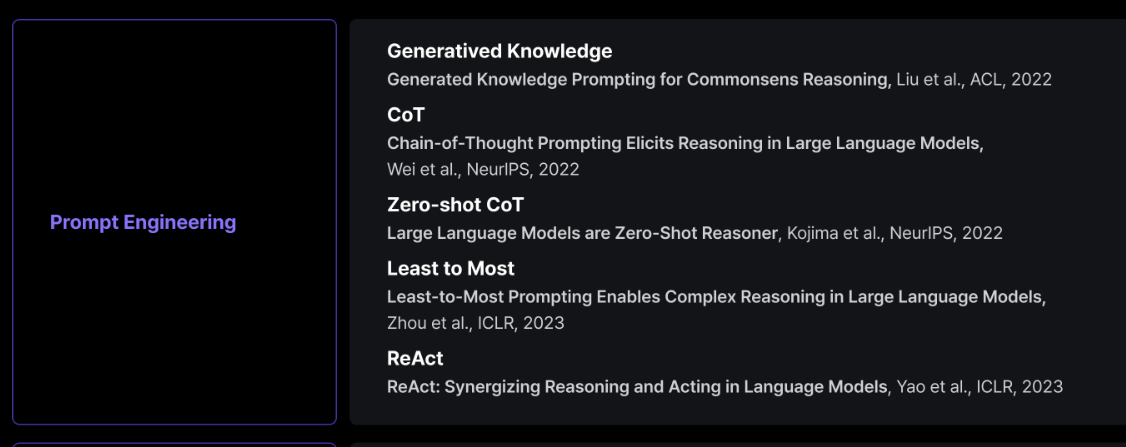

Prompt Engineering

- Generated Knowledge Prompting for Commonsense Reasoning, Liu et al., ACL, 2022

- Chain-of-Thought Prompting Elicits Reasoning in Large Language Models, Wei et al., NeurIPS, 2022

- Large Language Models are Zero-Shot Reasoners, Kojima et al., NeurIPS, 2022

- Least-to-Most Prompting Enables Complex Reasoning in Large Language Models, Zhou et al., ICLR, 2023

- ReAct: Synergizing Reasoning and Acting in Language Models, Yao et al., ICLR, 2023

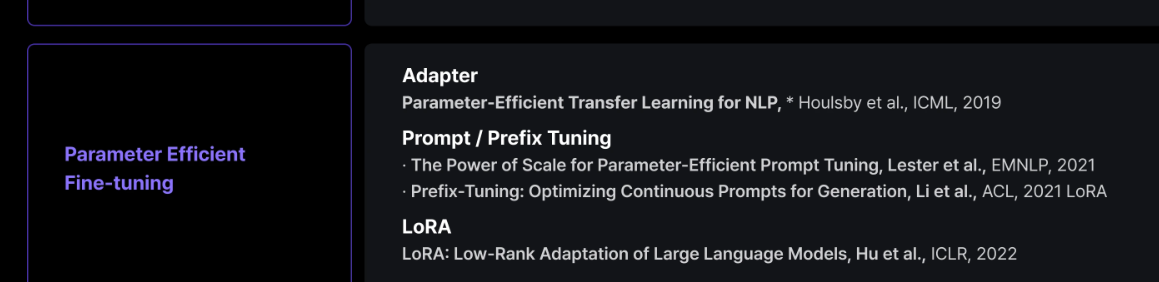

Parameter Efficient Fine-tuning

- Parameter-Efficient Transfer Learning for NLP, Houlsby et al., ICML, 2019

- The Power of Scale for Parameter-Efficient Prompt Tuning, Lester et al., EMNLP, 2021

- Prefix-Tuning: Optimizing Continuous Prompts for Generation, Li et al., ACL, 2021

- LoRA: Low-Rank Adaptation of Large Language Models, Hu et al., ICLR, 2022

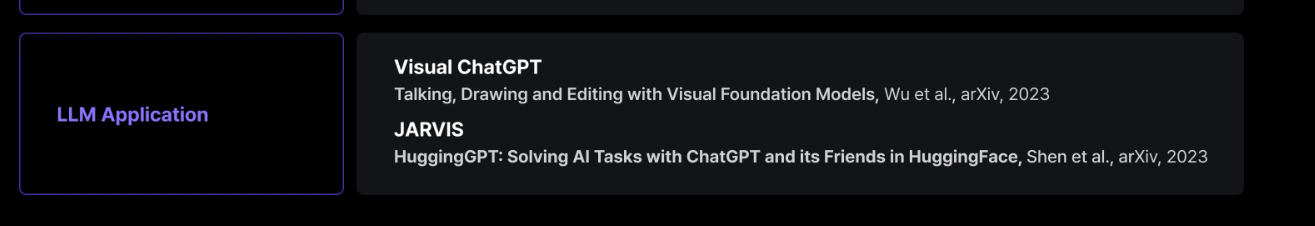

LLM Application

- Visual ChatGPT: Talking, Drawing and Editing with Visual Foundation Models, Wu et al., arXiv, 2023

- HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in HuggingFace, Shen et al., arXiv, 2023