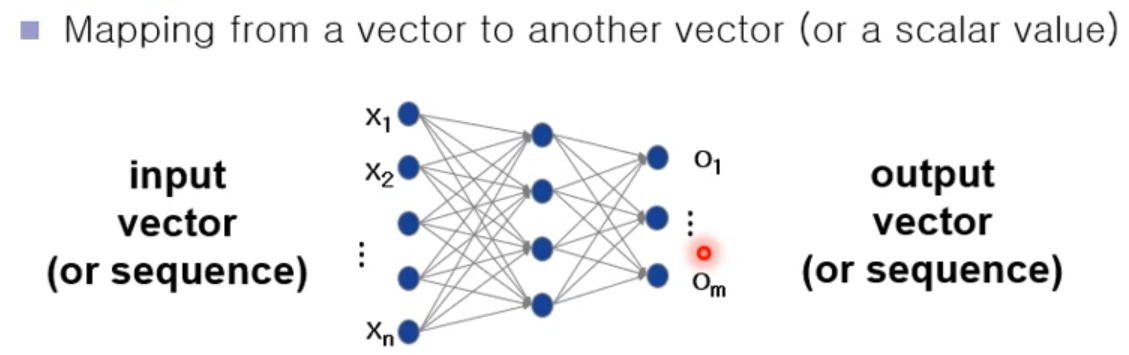

Neural Network

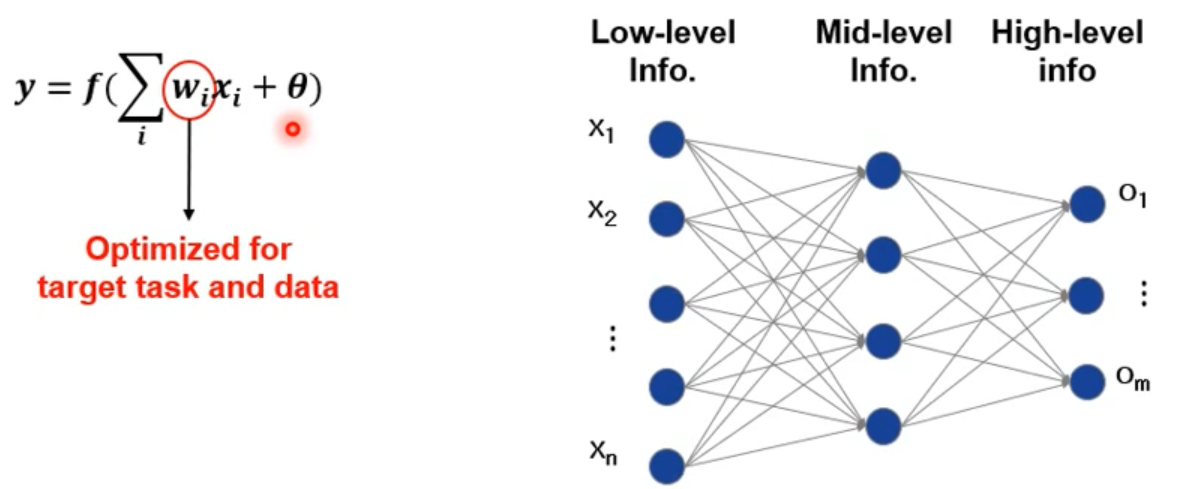

➡️ Merges the input information and turns it into high-level information

- What a NN does

- Learn mappings

- vector: fixed length

- sequence: variable length

- Learn probability distribution

- Learn mappings

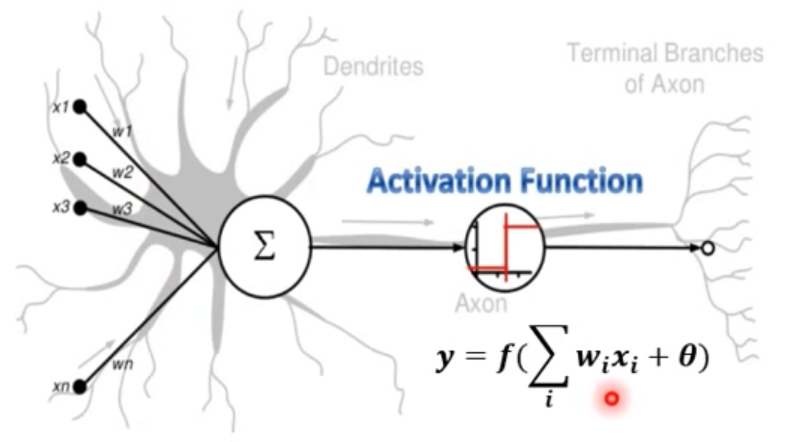

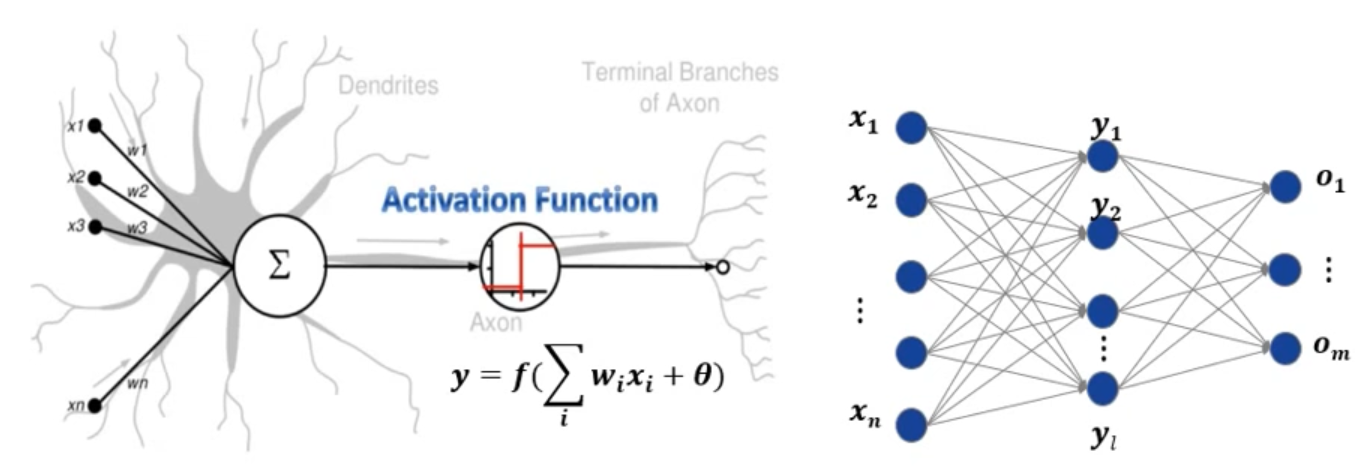

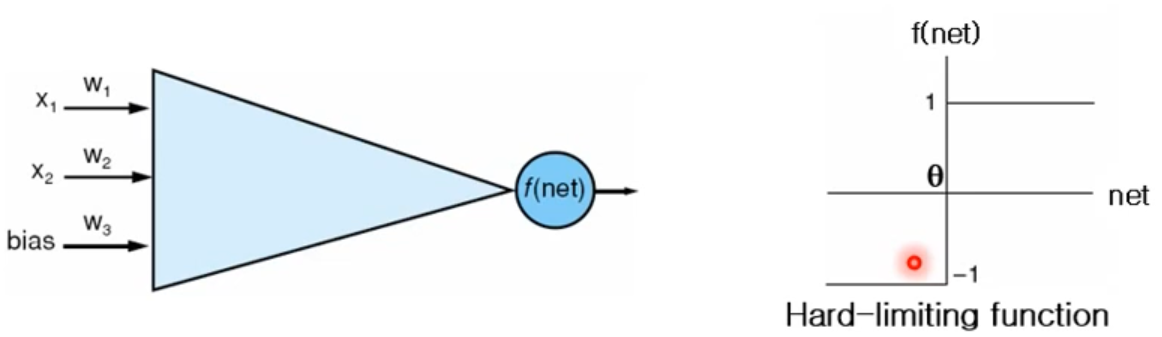

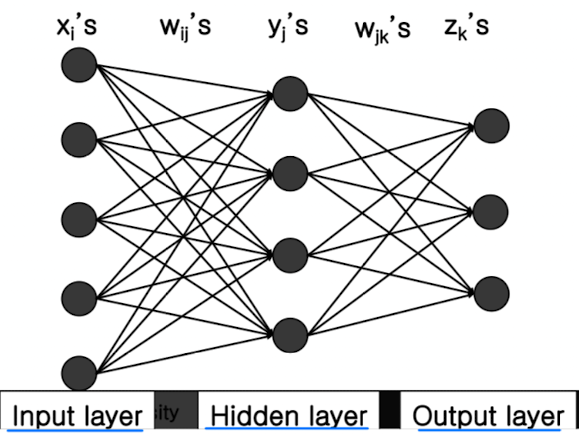

Perceptron Neuron

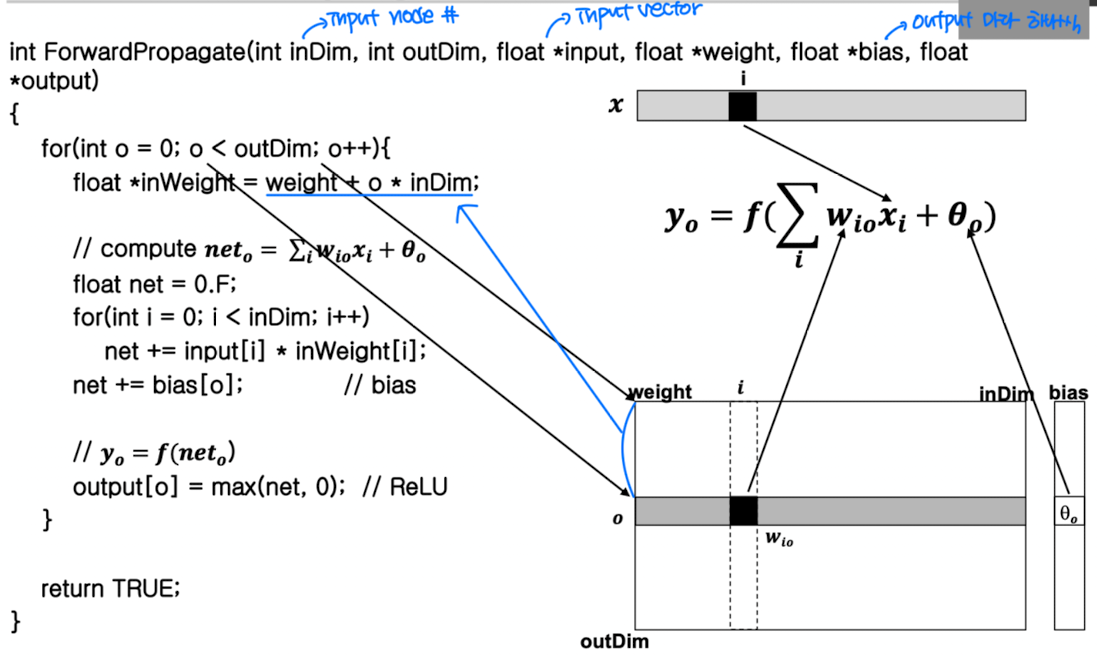

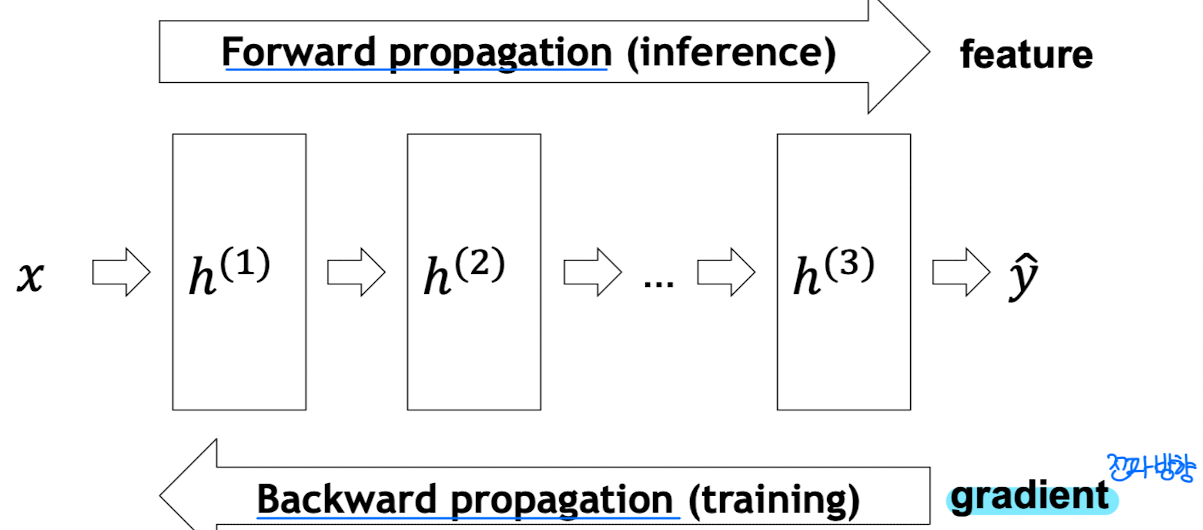

Forward Propagation

➡️ net value: the value before it is passed through the activation function

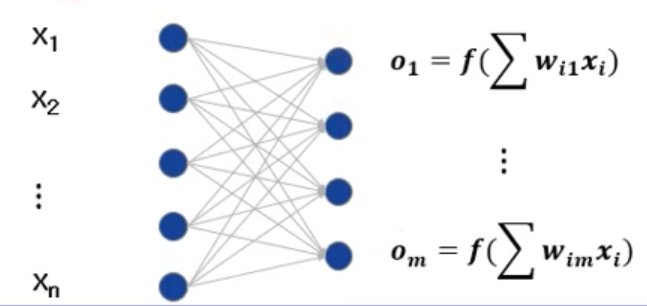
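As a minimal sketch of forward propagation for a single neuron: the net value is the weighted sum of the inputs plus a bias, and the output is the activation applied to that net value. The sigmoid activation, the function names, and the sample numbers are assumptions for illustration and are not taken from the figures.

```python
import math

def net_value(weights, inputs, bias):
    """Weighted sum of inputs plus bias (the value before activation)."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias

def sigmoid(z):
    """Squash a net value into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(weights, inputs, bias):
    """Return both the net value and the activated output."""
    net = net_value(weights, inputs, bias)
    return net, sigmoid(net)

# Hypothetical weights and inputs chosen so the net value is exactly 0.
net, out = forward([0.5, -0.25], [2.0, 4.0], 0.0)
```

With these numbers the net value is 0, so the sigmoid output sits at its midpoint of 0.5.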

Single-layer Perceptron

: one input layer and one output layer

- Given

- Network structure and initial weights (random values)

- A set of training samples

- Labels (desired output) for each training sample

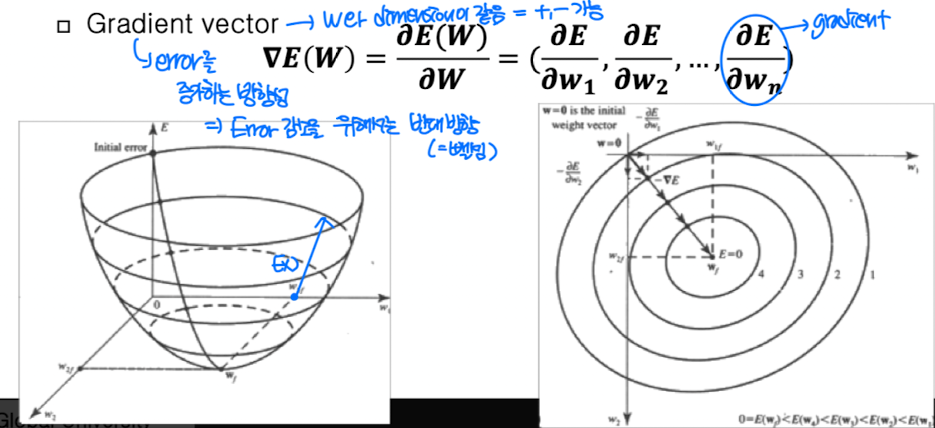

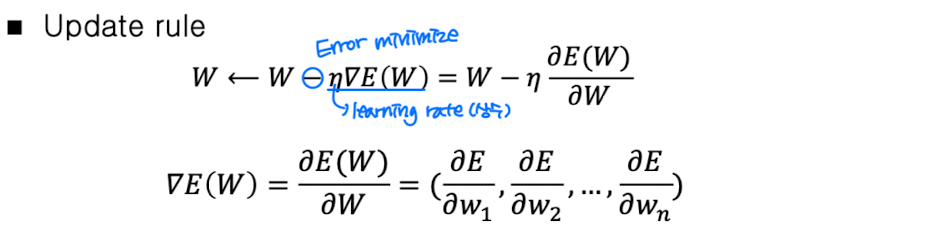

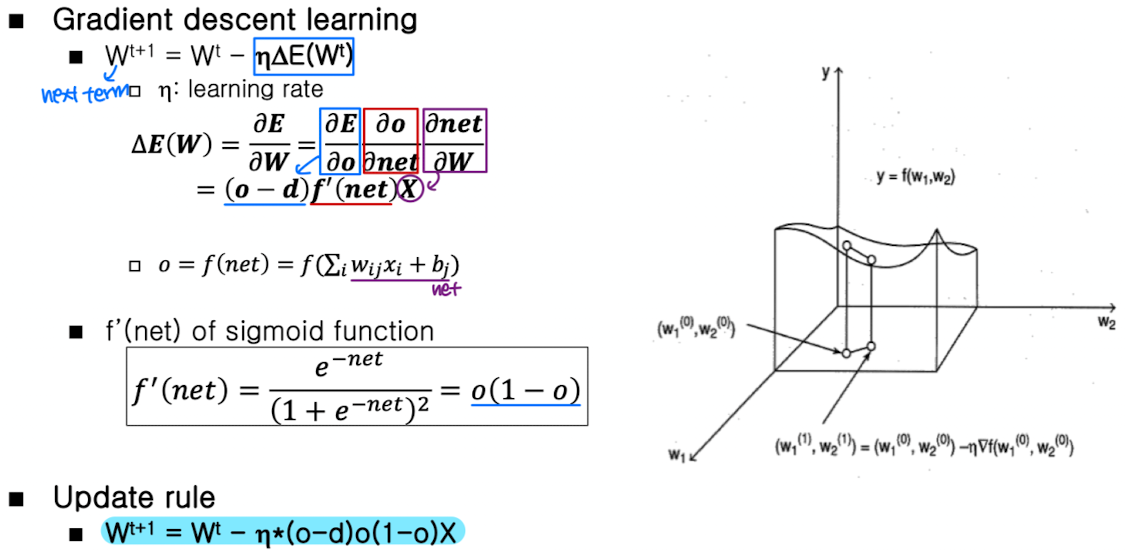

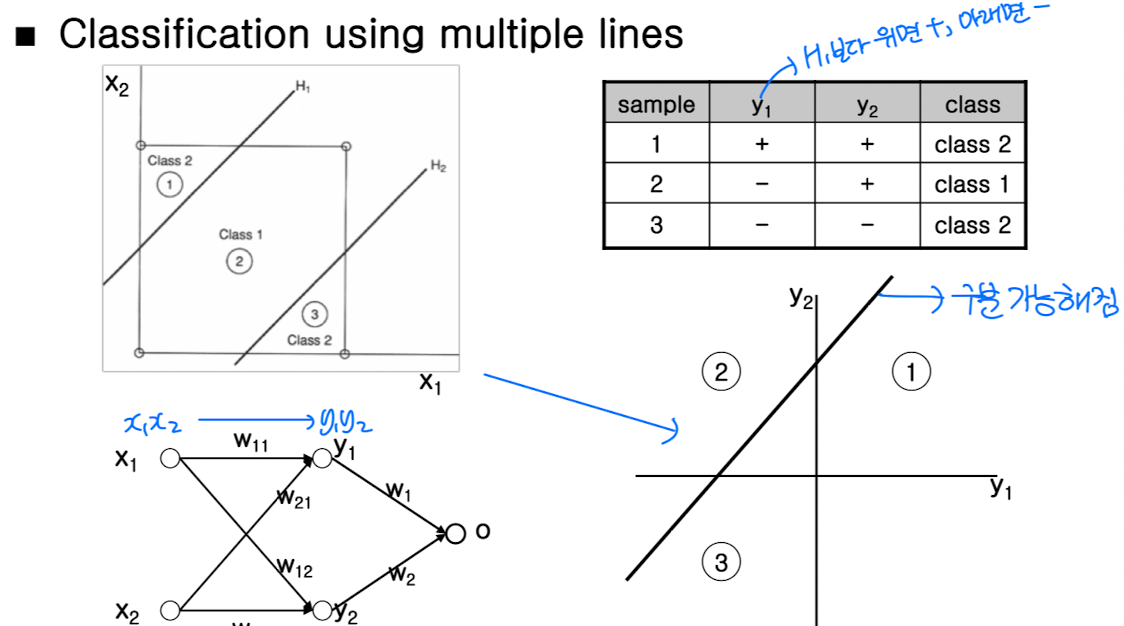

Gradient Descent

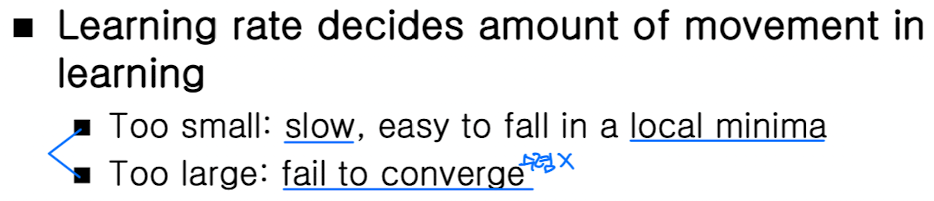

Learning Rate

➡️ Plays an important role in determining whether training converges!

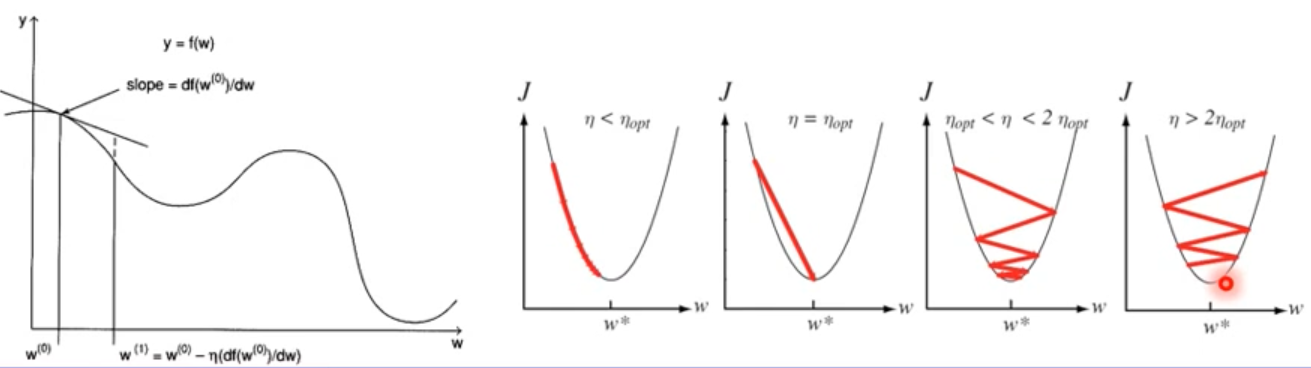
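To make the effect of the learning rate concrete, here is a small sketch of gradient descent on a toy objective. The objective f(w) = w^2, the starting point, and the two learning rates are assumptions picked for illustration, not values from the slides.

```python
def gradient_descent(w0, learning_rate, steps):
    """Minimize f(w) = w^2 by repeatedly stepping against the gradient 2w."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * w
        w = w - learning_rate * grad
    return w

# A small learning rate shrinks w toward the minimum at 0.
converged = gradient_descent(1.0, 0.1, 50)

# A learning rate that is too large overshoots more on every step.
diverged = gradient_descent(1.0, 1.1, 50)
```

For this objective each update multiplies w by (1 - 2 * learning_rate), so the iterate shrinks only while that factor has magnitude below 1.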

Training Algorithm
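The following is a minimal sketch of how a single-layer perceptron could be trained from the givens listed earlier (random initial weights, training samples, and labels). It assumes a sigmoid output, a squared-error loss, and per-sample gradient descent updates; the AND dataset and all hyperparameters are hypothetical choices, and the algorithm on the slides may differ in detail.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Forward propagation for one sample."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train(samples, labels, learning_rate=0.5, epochs=5000, seed=0):
    rng = random.Random(seed)
    # Initial weights are small random values.
    weights = [rng.uniform(-0.5, 0.5) for _ in samples[0]]
    bias = rng.uniform(-0.5, 0.5)
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            out = predict(weights, bias, x)
            # Gradient of 0.5 * (out - target)^2 with respect to the net value.
            delta = (out - target) * out * (1.0 - out)
            # Gradient descent step on each weight and on the bias.
            weights = [w - learning_rate * delta * xi for w, xi in zip(weights, x)]
            bias -= learning_rate * delta
    return weights, bias

# Assumed toy dataset: logical AND, which is linearly separable.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]
weights, bias = train(samples, labels)
predictions = [round(predict(weights, bias, x)) for x in samples]
```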

Multi-layer Perceptron

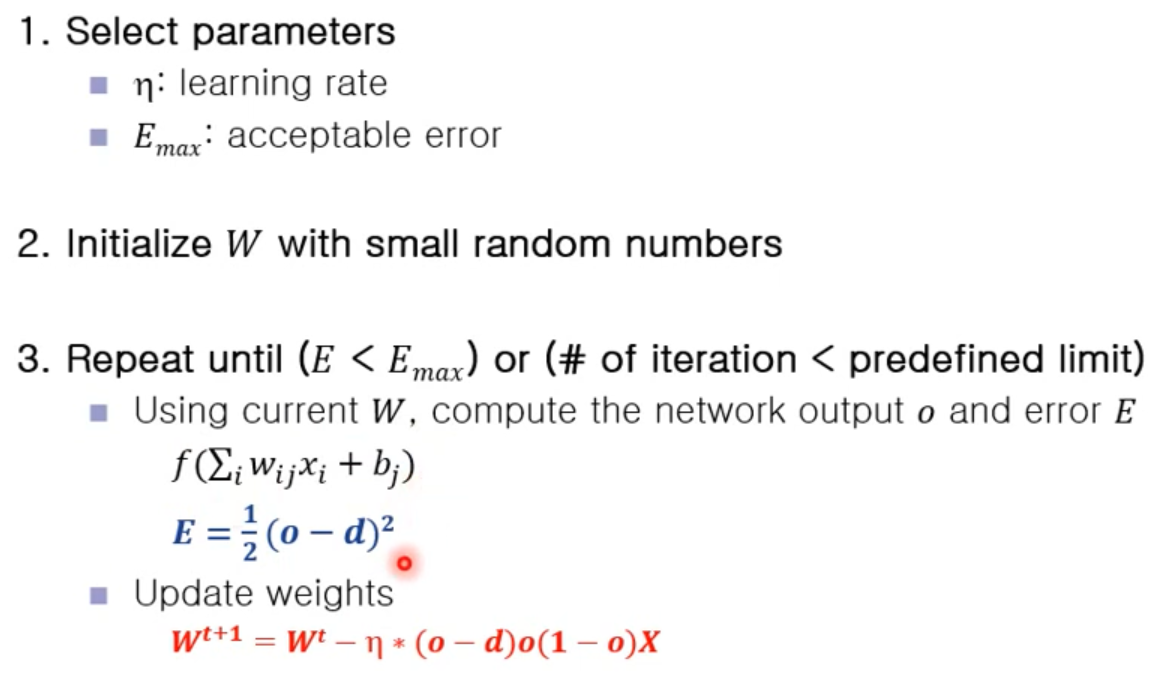

Limitation of Single-layer Perceptron: XOR

➡️ Classification using multiple lines

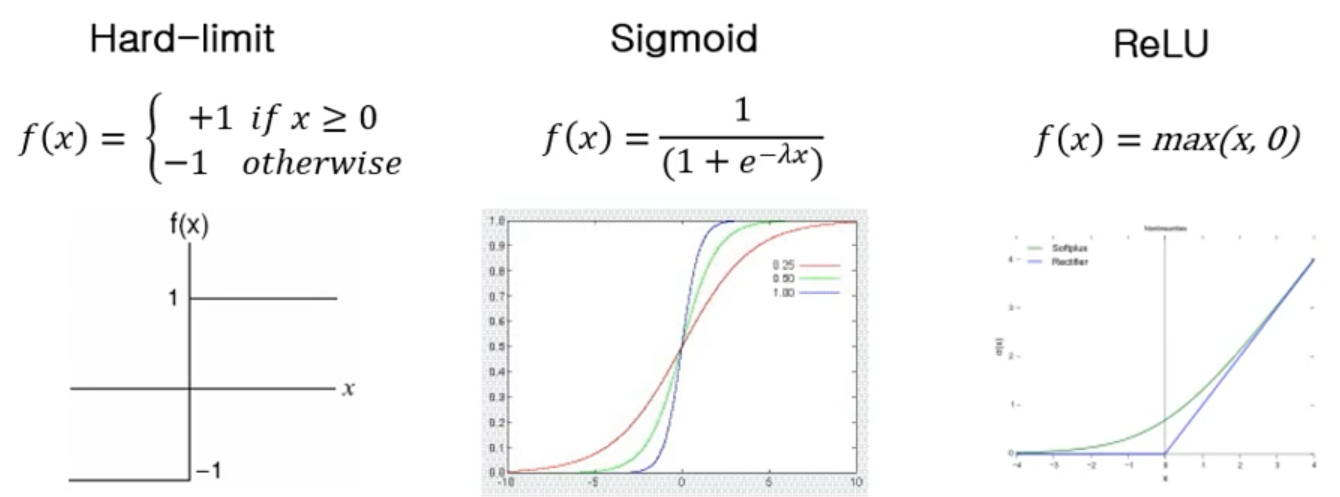

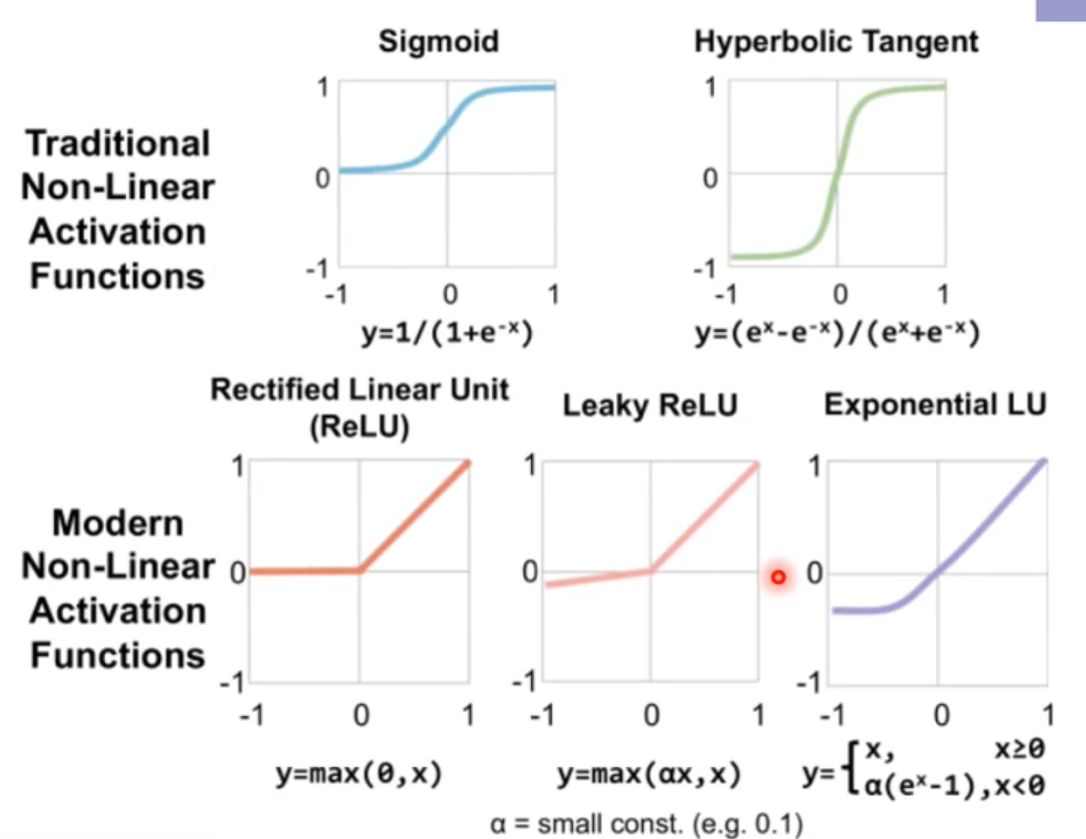

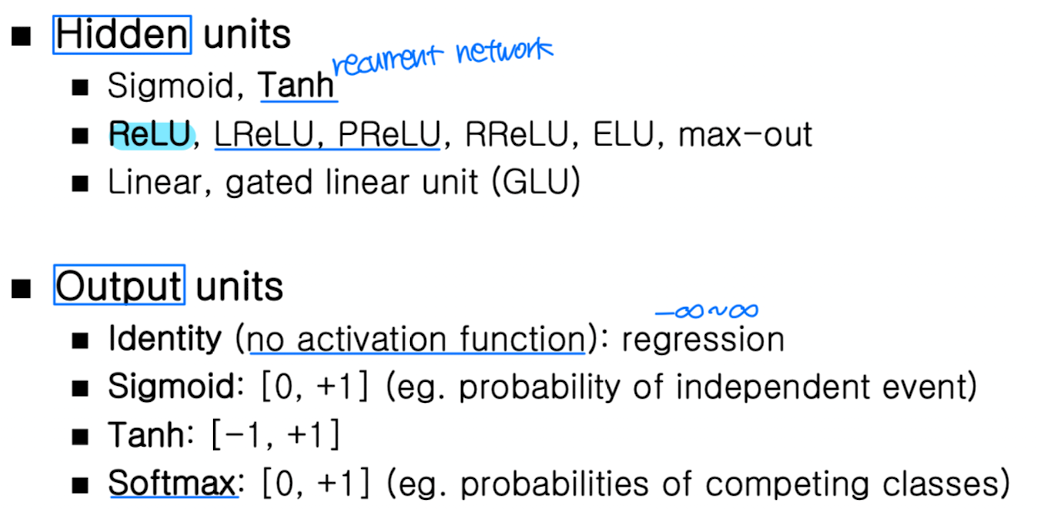
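A sketch of how two lines can classify XOR: two hidden neurons each draw one line, and the output neuron combines the two half-planes. The step activation and the hand-picked weights are assumptions for illustration (one of many valid solutions), not weights from the figures.

```python
def step(z):
    """Threshold activation: fire when the net value is positive."""
    return 1 if z > 0 else 0

def neuron(weights, bias, inputs):
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)

def xor_mlp(x1, x2):
    # Hidden neuron 1 draws the line x1 + x2 = 0.5 (behaves like OR).
    h_or = neuron([1.0, 1.0], -0.5, [x1, x2])
    # Hidden neuron 2 draws the line x1 + x2 = 1.5 (behaves like NAND).
    h_nand = neuron([-1.0, -1.0], 1.5, [x1, x2])
    # Output neuron fires only between the two lines (AND of both).
    return neuron([1.0, 1.0], -1.5, [h_or, h_nand])

table = {(a, b): xor_mlp(a, b) for a in (0, 1) for b in (0, 1)}
```

No single line can separate these four points, which is why the hidden layer is needed.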

Activation Functions

A.k.a. Non-linearity functions

Why use?

- Non-linearity

- Restrict outputs in a specific range

- Measurement ➡️ probability or decision

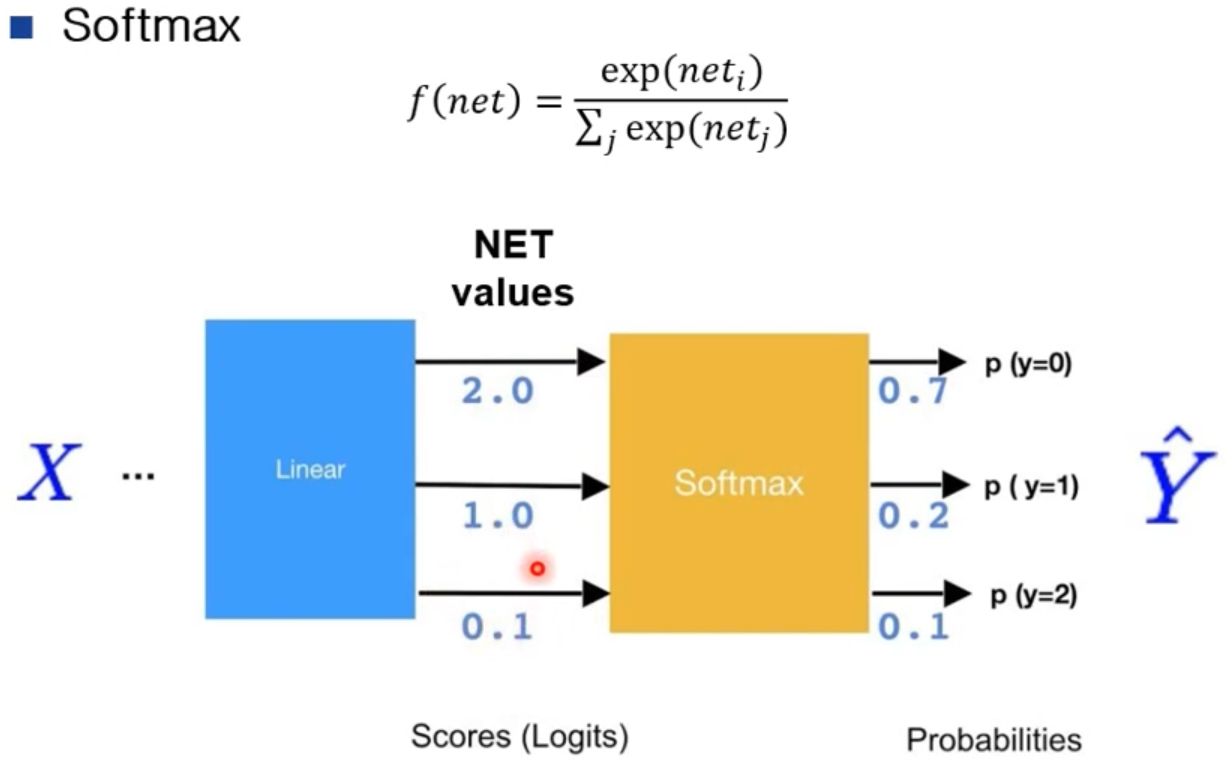

Functions

➡️ Convert outputs into probabilities
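For reference, a short sketch of some commonly used activation functions. Which of these the slides actually cover is an assumption; sigmoid and softmax are the usual choices when an output should be read as a probability.

```python
import math

def sigmoid(z):
    """Maps any real value into (0, 1); usable as a binary probability."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Maps any real value into (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Passes positive values through and clips negatives to 0."""
    return max(0.0, z)

def softmax(zs):
    """Turns a list of scores into probabilities that sum to 1."""
    m = max(zs)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three classes.
probs = softmax([2.0, 1.0, 0.1])
```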

Where?

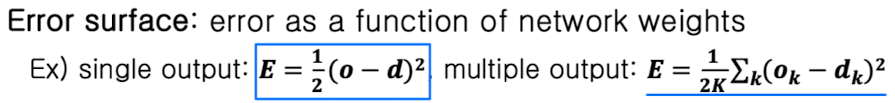

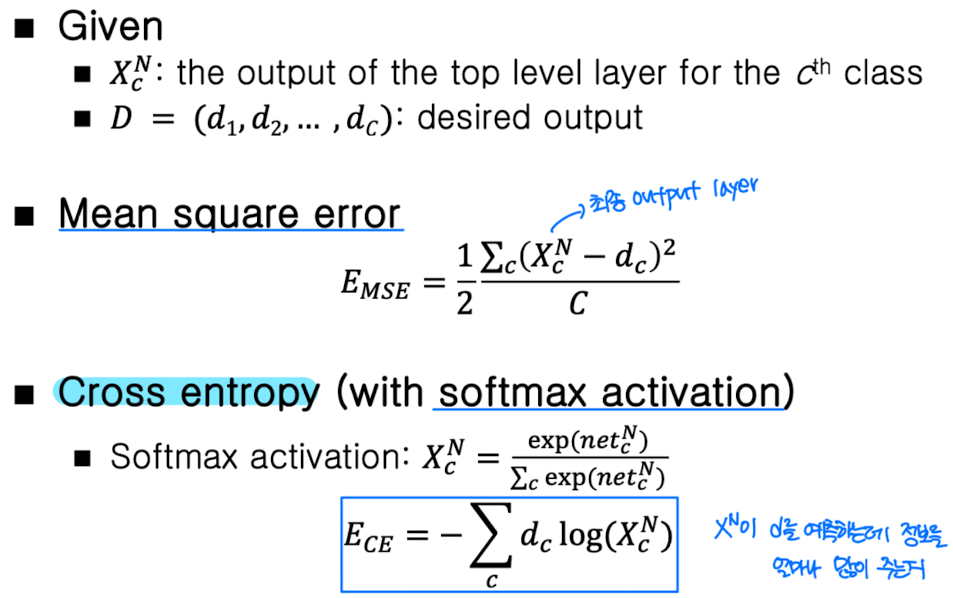

Loss Function (Error Criteria)

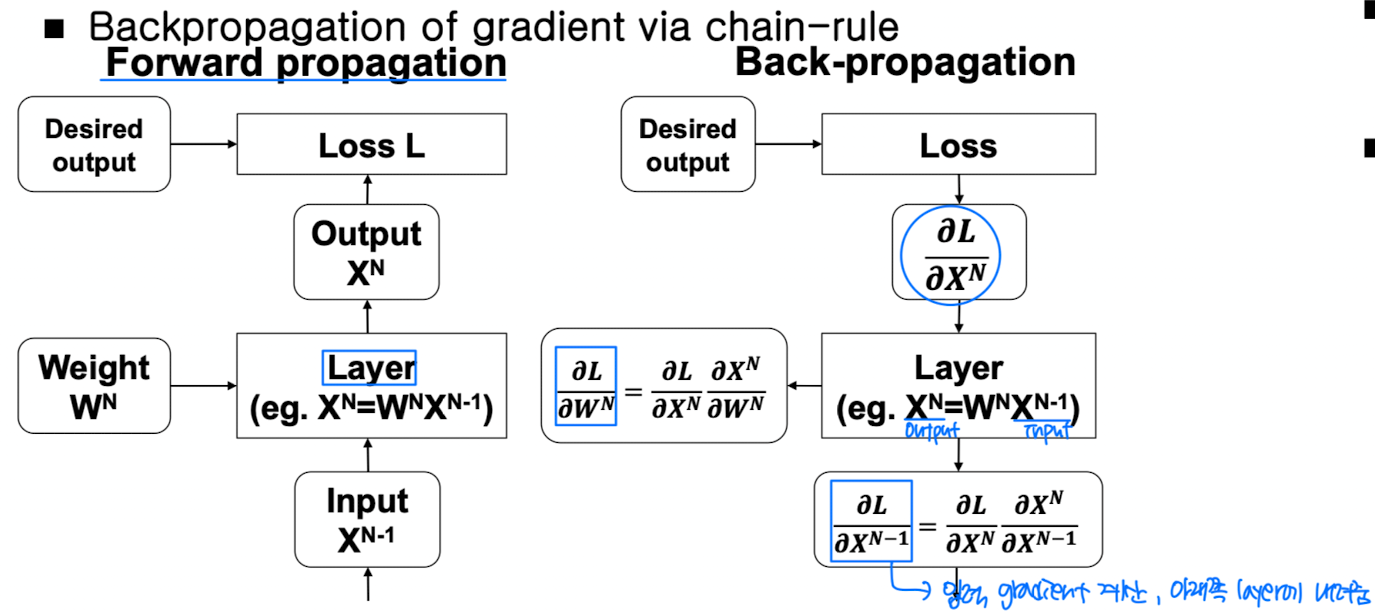
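Two widely used error criteria can be sketched as follows. Whether the slide shows exactly these two is an assumption; the function names and the small `eps` guard against log(0) are illustrative choices.

```python
import math

def mean_squared_error(outputs, targets):
    """Average squared difference between outputs and desired outputs."""
    return sum((o - t) ** 2 for o, t in zip(outputs, targets)) / len(outputs)

def cross_entropy(probs, targets, eps=1e-12):
    """Negative log-likelihood of one-hot targets under predicted probabilities."""
    return -sum(t * math.log(p + eps) for p, t in zip(probs, targets))

# Hypothetical predictions for a two-class problem with target class 0.
confident = cross_entropy([0.9, 0.1], [1, 0])
unsure = cross_entropy([0.6, 0.4], [1, 0])
```

Both losses get smaller as the output moves toward the label, which is what gradient descent exploits.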

Back-propagation

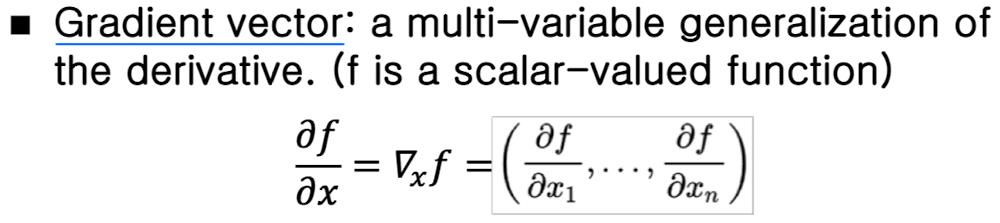

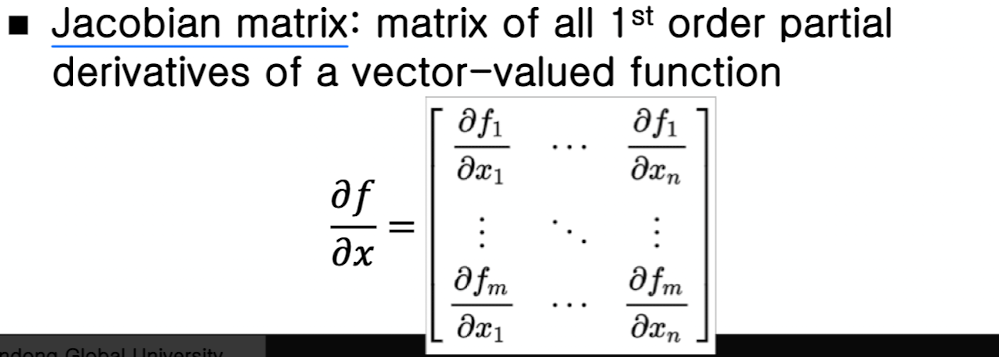

Gradient and Jacobian

➡️ Gradient: derivative of a scalar with respect to a vector

➡️ Jacobian: derivative of a vector with respect to a vector

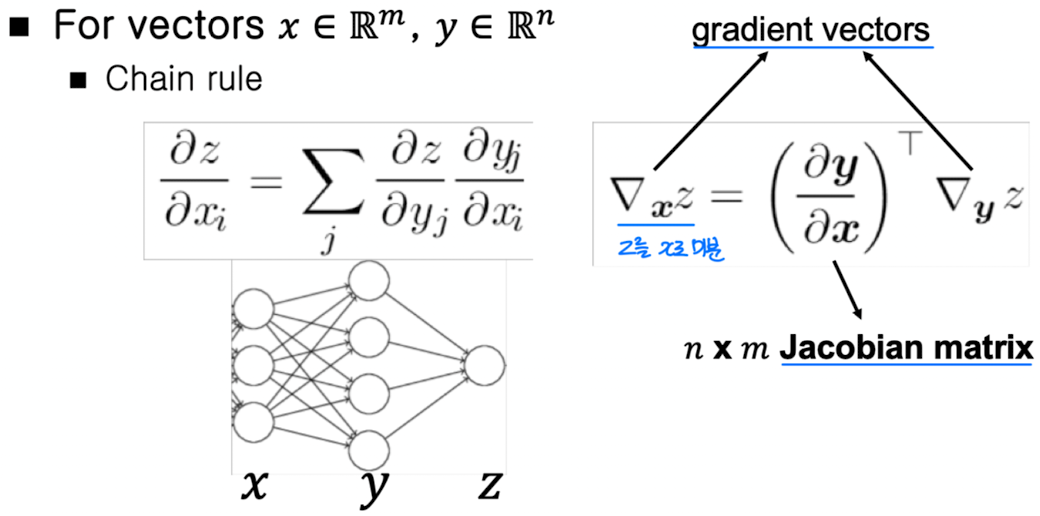

Chain Rule

The chain rule between vectors is expressed as a matrix-vector product

➡️ output gradient * Jacobian matrix = gradient on the earlier (input) side
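A small numeric sketch of this rule, assuming a linear map y = W x followed by the scalar loss L = 0.5 * sum(y_i^2). For this choice the output gradient dL/dy equals y and the Jacobian dy/dx equals W; the matrix, the input, and the helper names are hypothetical.

```python
def mat_vec(W, x):
    """Matrix times column vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def vec_mat(g, J):
    """Row vector g times matrix J."""
    return [sum(g[i] * J[i][j] for i in range(len(g))) for j in range(len(J[0]))]

def loss(x, W):
    return 0.5 * sum(yi ** 2 for yi in mat_vec(W, x))

W = [[1.0, 2.0], [3.0, 4.0]]
x = [1.0, 1.0]

y = mat_vec(W, x)    # forward pass
grad_y = y           # dL/dy for L = 0.5 * sum(y_i^2)
jacobian = W         # dy/dx for y = W x
grad_x = vec_mat(grad_y, jacobian)  # output gradient * Jacobian

# Finite-difference check of the first component of the input gradient.
eps = 1e-6
numeric = (loss([x[0] + eps, x[1]], W) - loss([x[0] - eps, x[1]], W)) / (2 * eps)
```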

Back-propagation on Neural Nets

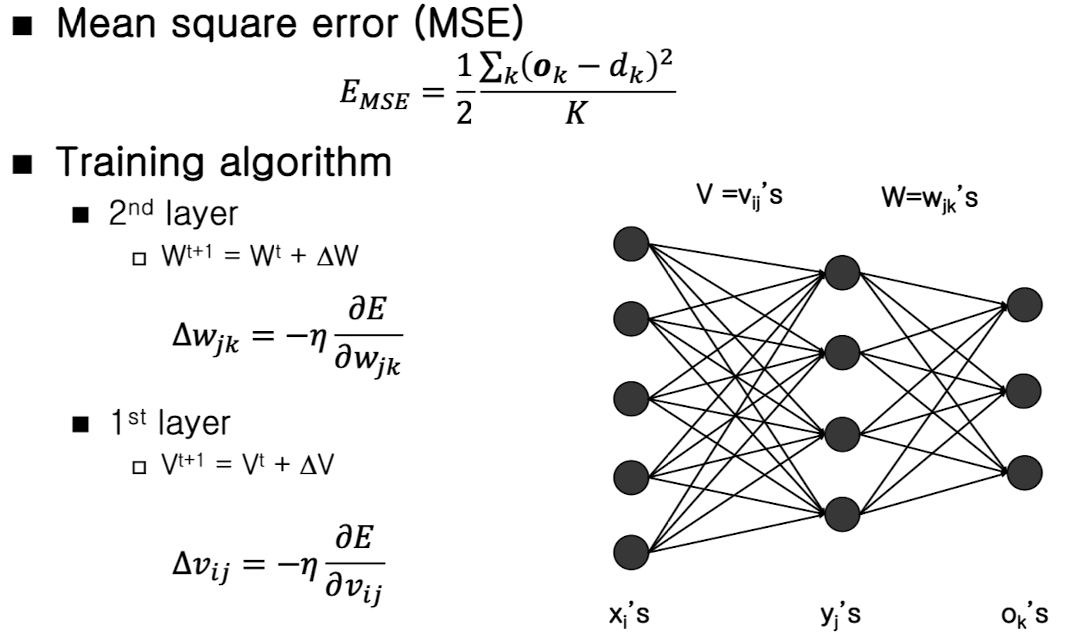

Back-propagation on MLP

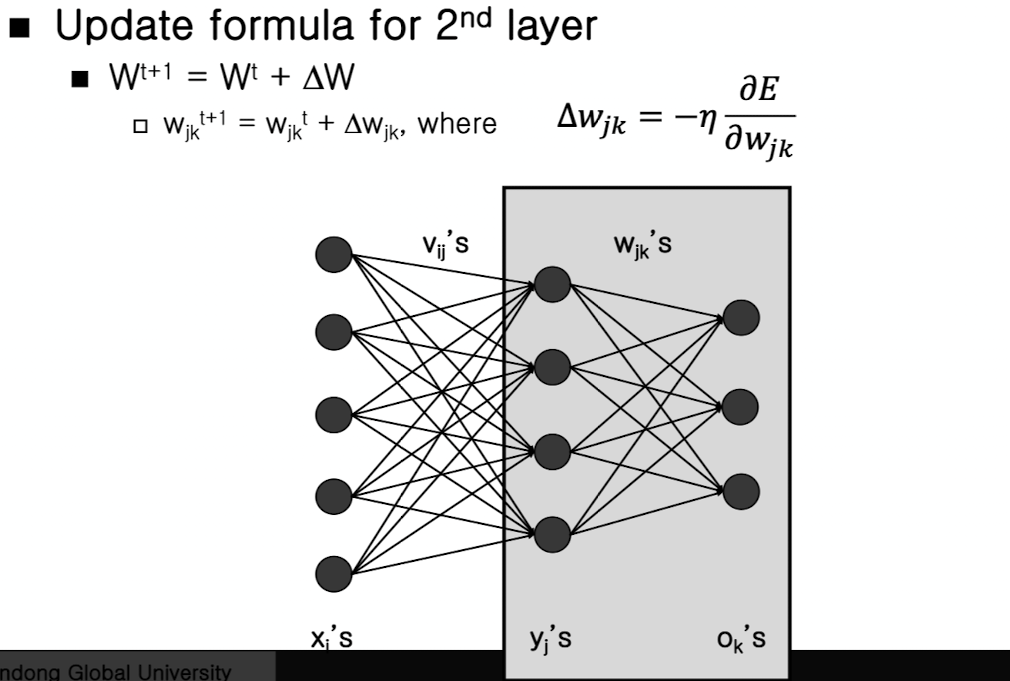

MLP - Training of 2nd Layer

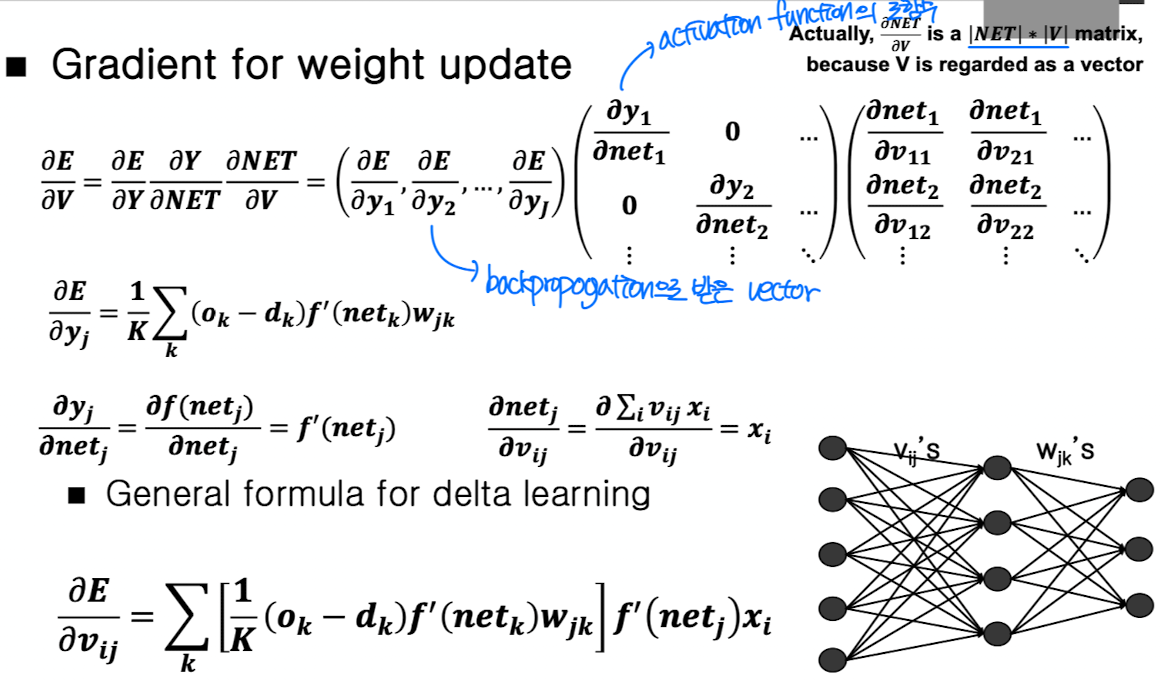

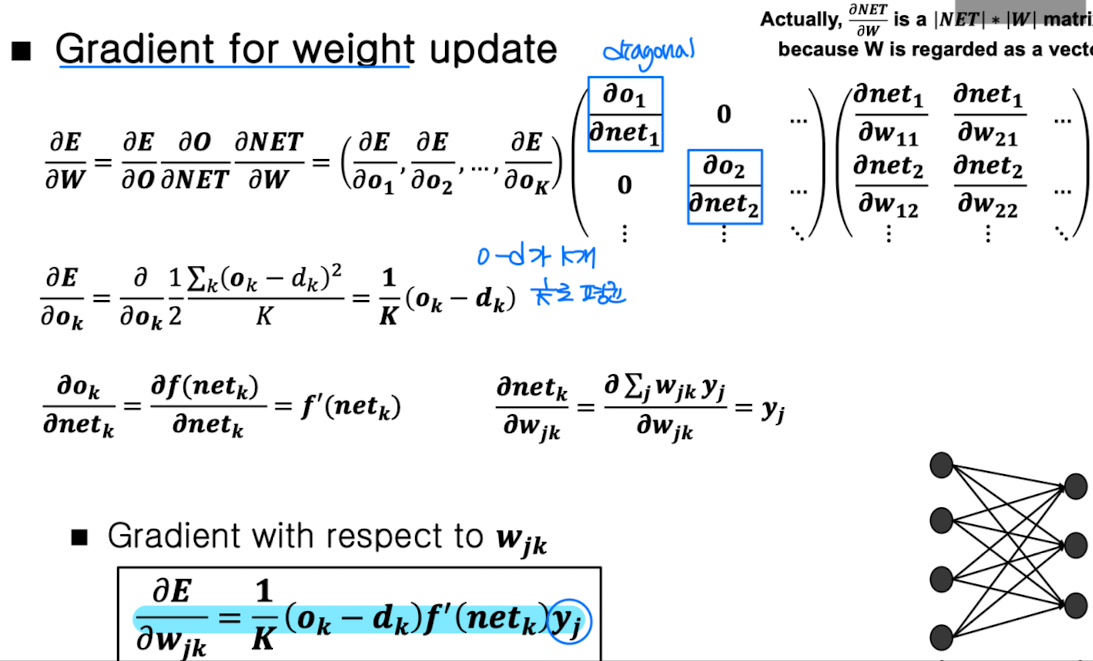

Gradient for weight update

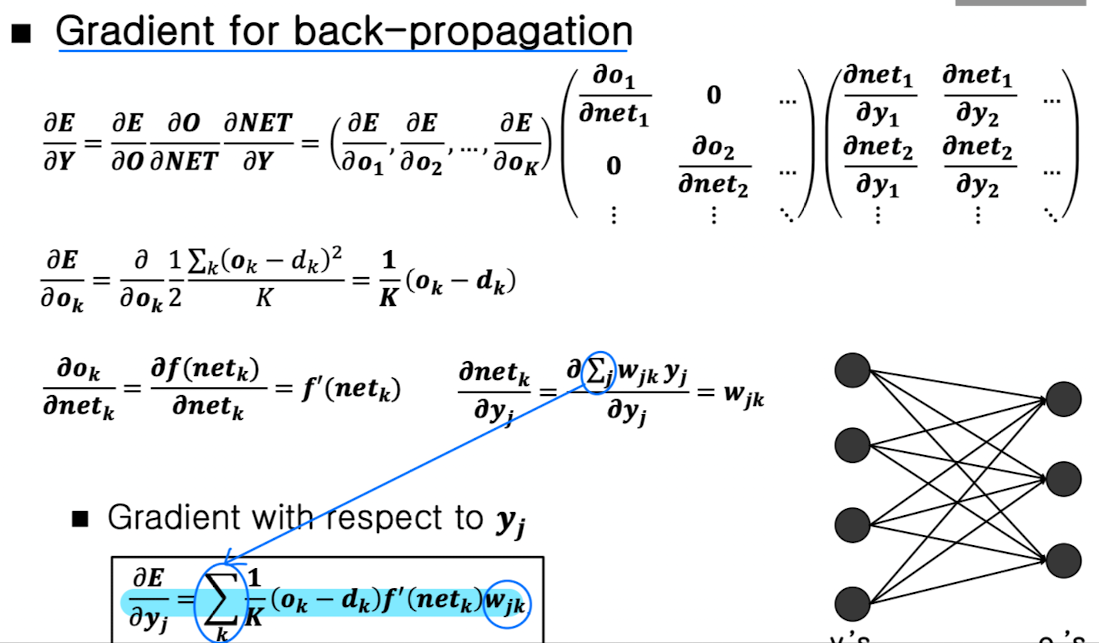

Gradient for back-propagation

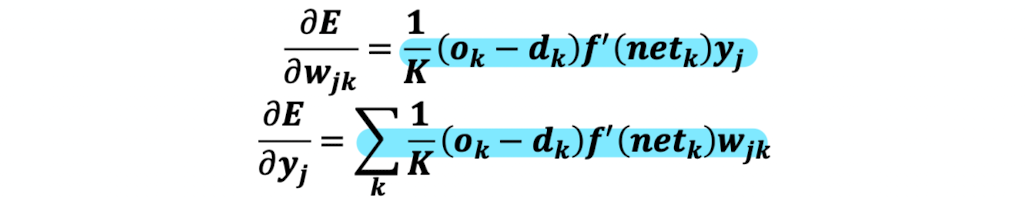

Gradients

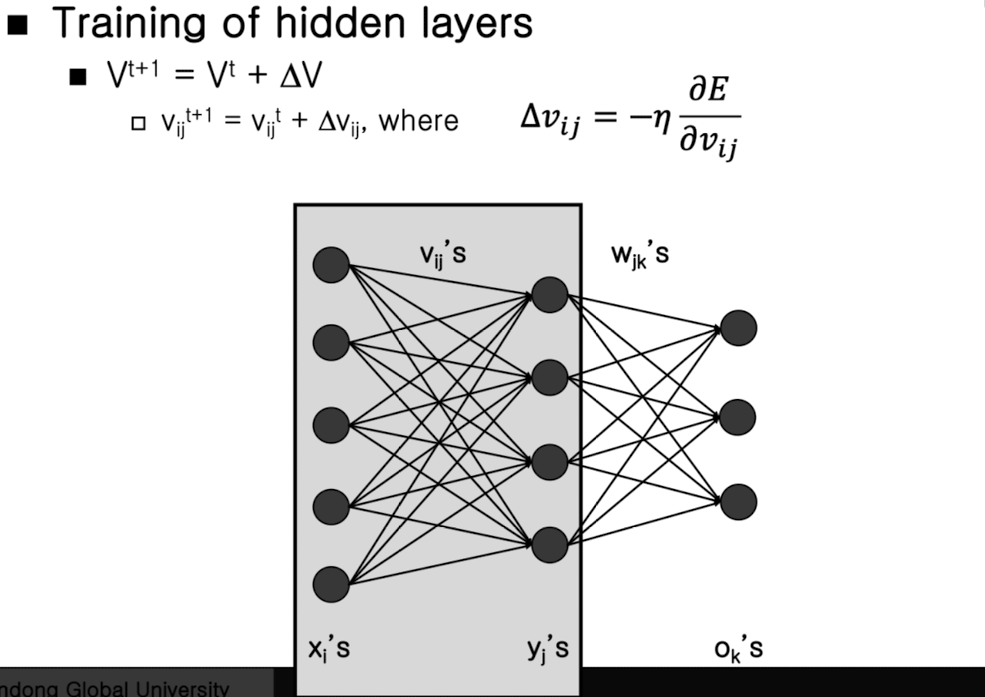

MLP - Training of 1st Layer

➡️ Only the gradient with respect to the weights is computed (there is no earlier trainable layer to back-propagate to)
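The two-layer case can be sketched as below: the 2nd layer computes both a gradient for its weight update and a gradient to pass backward, while the 1st layer computes only its weight gradient. This assumes sigmoid activations in both layers, a squared-error loss, and no biases; all names and numbers are hypothetical and the notation on the slides may differ.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W1, W2):
    """Return hidden activations h and outputs y (biases omitted for brevity)."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in W1]
    y = [sigmoid(sum(w * hi for w, hi in zip(row, h))) for row in W2]
    return h, y

def loss(y, t):
    return 0.5 * sum((yi - ti) ** 2 for yi, ti in zip(y, t))

def backward(x, t, W1, W2):
    h, y = forward(x, W1, W2)
    # 2nd layer: gradient of the loss at the output net values.
    delta2 = [(yi - ti) * yi * (1.0 - yi) for yi, ti in zip(y, t)]
    # Gradient for weight update of the 2nd layer.
    grad_W2 = [[d * hj for hj in h] for d in delta2]
    # Gradient for back-propagation: loss gradient at the hidden outputs.
    grad_h = [sum(delta2[k] * W2[k][j] for k in range(len(W2)))
              for j in range(len(h))]
    # 1st layer: only the weight gradient is needed.
    delta1 = [g * hj * (1.0 - hj) for g, hj in zip(grad_h, h)]
    grad_W1 = [[d * xi for xi in x] for d in delta1]
    return grad_W1, grad_W2

def numeric_grad(x, t, W1, W2, W, i, j, eps=1e-6):
    """Central finite difference for one weight W[i][j] (W is W1 or W2)."""
    old = W[i][j]
    W[i][j] = old + eps
    plus = loss(forward(x, W1, W2)[1], t)
    W[i][j] = old - eps
    minus = loss(forward(x, W1, W2)[1], t)
    W[i][j] = old
    return (plus - minus) / (2 * eps)

# Hypothetical 2-2-1 network and one training sample.
x, t = [1.0, 0.0], [1.0]
W1 = [[0.1, -0.2], [0.4, 0.3]]
W2 = [[0.5, -0.6]]
grad_W1, grad_W2 = backward(x, t, W1, W2)
```

The `numeric_grad` helper is there only so the analytic gradients can be checked against finite differences.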